Slides - Cenic

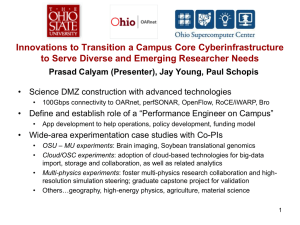

Christopher Paolini
Computational Science Research Center, San Diego State University

100G and Beyond Workshop: Ultra High Performance Networking in California
Calit2 Auditorium • First floor, Atkinson Hall • UC San Diego • La Jolla, CA
Tuesday, February 26, 2013 · Campus and Lab Strategies Panel · 11:00AM – 12:00PM

University network operations centers (NOCs) support multiple, conflicting missions.
Network security or network performance: which is more important?
• NOCs are typically accountable to university business divisions and contend with legal and public relations pressures → security always wins.
• NOCs are not usually accountable to research groups (and often never communicate with faculty).
• University enterprise (e.g. general purpose/financial/personal) computing: security > performance
• Computational and “Big Data” research: performance > security

What can we do to ensure efficient scientific data transfer between universities and national labs?
• A network optimized for business is neither designed for nor capable of supporting data-intensive science.
• Universities will always need to support security features that protect organizational financial and personnel data.

Solution: create a separate data-intensive science network, external to the university enterprise network.
• Design formalized by ESnet, based on the traditional network DMZ paradigm
• Science DMZ: (1) dedicated access to high-performance WAN, (2) high-performance switching infrastructure (large buffer memory), (3) dedicated data transfer nodes

Science DMZ using CENIC California Research and Education Network resources
• NSF Office of CyberInfrastructure CC-NIE Grant 1245312
• Alcatel-Lucent 10 and 40 Gbps switching devices, per CSU policy
• DMZ spans four campus buildings: Administration, Life Sciences (CSRC Data Center), Education & Business Administration (UCO Data Center), and Chemical Sciences (VizCenter)
• Primary users: CSRC affiliated faculty and students
• AL OmniVista 2500 for network management

Computational science network (CSRCnet) connects to the DMZ
• Funded in 2009 through NSF MRI award 0922702
• 8 Cisco 10 Gbps Catalyst 4900M switching devices
• CSRCnet spans five campus buildings: Administration, Life Sciences (CSRC Data Center), Education & Business Administration (UCO Data Center), Physics, and Engineering
• Sole users: CSRC affiliated faculty and students
• 10G access to SDSC

• Facilitate high-performance data transfer for scientific applications using Globus Online GridFTP
• Alcatel-Lucent OmniSwitch 10K (core device)
• Two Alcatel-Lucent OmniSwitch 6900s (satellite devices)
• Dedicated and independent 10GE (maybe 40GE) uplink to Internet2 and ESnet via CENIC
• Optimized network for high-volume bulk transfer of scientific datasets
• Unencumbered, high-speed access to online scientific applications and data generated at SDSU
• External access to science resources not impacted by regular “enterprise” or business class Internet traffic
• Focus on “BigData” Intensive Science: earthquake rupture and wave propagation, parallel 3D unified curvilinear coastal ocean modeling, geologic sequestration simulation of supercritical CO2, large-scale proteomic data, bioinformatics of gene promoter analysis, microbial metagenomics, and high-order PSIC methods for simulation of pulse detonation engines
• Network performance measurement based on the perfSONAR framework
• InCommon Federation global federated system for identity management and authentication to DMZ connected hosts and services

GridFTP: extension of the standard two-channel FTP protocol
• Control channel
  ◦ Command/Response
  ◦ Used to establish data channels
  ◦ Basic file system operations (e.g. mkdir, delete, etc.)
• Data channel: pathway over which the file is transferred
• Scheduled transfers using the command line interface:
  $ scp xsede#lonestar4:~/GO/bigdatafile xsede#trestles:~/GO/bigdatafile
  $ scp xsede#trestles:~/GO/bigdatafile paolini#sdsu:~/GO/bigdatafile

Science DMZ performance monitoring accomplished using the perfSONAR tool suite
• Server side tools run on designated hosts attached to key switches
• End-to-end testing with collaborating perfSONAR sites
• Determine one-way latencies and packet loss between hosts using One-Way Active Measurement Protocol (OWAMP)
  owping -c 10000 -i .01 remotedmz
• Periodic throughput tests to remote Science DMZs using Bandwidth Test Controller (BWCTL)
• Resource allocation and scheduling daemon for regularly scheduled Iperf tests
  bwctl -s remotedmz -P 4 -t 30 -f M -w 4M -S 32

InCommon: U.S. education and research identity federation service
• Provides a common framework for trusted shared management of access to on-line resources
• Provides users single sign-on convenience and privacy protection
• Shibboleth Service Provider federating software
• Site admins can delegate responsibility for administering service provider (SP) metadata to another admin

Primary SDSU faculty/staff for Science DMZ implementation:

| Name | Role | E-Mail | Phone |
| --- | --- | --- | --- |
| Christopher Paolini | CSRC Affiliated Faculty, Network Engineering and Research | paolini@engineering.sdsu.edu | (619) 594-7159 |
| Jose Castillo | Director of Computational Science Research Center | jcastillo@mail.sdsu.edu | (619) 594-3430 |
| Rich Pickett | Campus CIO | rich.pickett@sdsu.edu | (619) 594-8370 |
| Kent McKelvey | Director of Network Services | kent@sdsu.edu | (619) 594-3245 |
| Skip Austin | Network Planning and Design | austin@mail.sdsu.edu | (619) 594-4211 |
| Gene LeDuc | Technology Security Officer (TSO) | gleduc@mail.sdsu.edu | (619) 594-0838 |
| Robert Osborn | Infrastructure Installation, Configuration, and Support | osborn@mail.sdsu.edu | (619) 594-6004 |

Current and planned DMZ related research:
• Development of new transport layer protocols that use compressed sensing techniques to perform sparse sampling on streaming petabyte-sized datasets originating from remote CO2 sequestration, curvilinear coastal ocean modeling, and earthquake rupture and wave propagation simulations
• Development of a new Alcatel-Lucent SDN/Application Fluent Network based protocol for the OS10K that bridges Lustre RDMA traffic between 40GE and FDR InfiniBand
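
A note on the bwctl throughput test parameters (-P 4 parallel streams, -w 4M window) and the emphasis on large switch buffers: both follow from the bandwidth-delay product, the amount of data that must be in flight to keep a long path full. The sketch below is illustrative only and not part of the original slides. The round-trip times are hypothetical, and it assumes the 4M window means 4 MiB per stream; only the 10GE link rate, stream count, and window size are taken from the slides.

```python
# Illustrative sketch: why window size and stream count matter on a 10GE path.
# RTT values are hypothetical; "4M" is assumed to mean 4 MiB per stream.

def bandwidth_delay_product_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of link_bps full at this RTT."""
    return link_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: int, streams: int, rtt_s: float) -> float:
    """Upper bound on aggregate TCP throughput when each stream is capped by its window."""
    return streams * window_bytes * 8 / rtt_s


if __name__ == "__main__":
    link_bps = 10e9           # 10GE uplink (from the slides)
    window = 4 * 1024 ** 2    # bwctl -w 4M, assumed to be 4 MiB
    streams = 4               # bwctl -P 4

    for rtt_ms in (2, 20, 80):  # hypothetical round-trip times
        rtt_s = rtt_ms / 1000
        bdp = bandwidth_delay_product_bytes(link_bps, rtt_s)
        bound = min(link_bps, window_limited_throughput_bps(window, streams, rtt_s))
        print(f"RTT {rtt_ms:>3} ms: BDP {bdp / 1e6:6.1f} MB, "
              f"throughput bound {bound / 1e9:5.2f} Gbps")
```

Under these assumptions, the combined 16 MiB of window is enough to fill the link at short round-trip times but caps throughput below 10 Gbps as the RTT grows, which is one reason long-distance transfers rely on parallel streams and tuned windows.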