Characteristics of Sound Tests - California State University, Fullerton


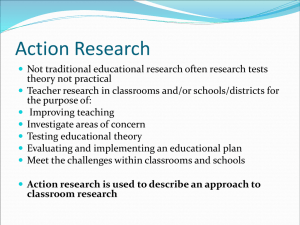

Characteristics of Sound Tests
Instructor: Jessie Jones, Ph.D.
Co-director, Center for Successful Aging
California State University, Fullerton

Criteria for Evaluating Tests
• Reliability
• Validity
• Discrimination
• Performance Standards
• Social Acceptability
• Feasibility

Test Reliability
Refers to the consistency of a score from one trial to the next (especially from one day to another).
• Test-retest reliability
• r = .80

Test Reliability
Test objectivity refers to the degree of accuracy in scoring a test.
• Also referred to as rater reliability

Rater Reliability
Is especially important if measures are going to be collected on multiple occasions and/or by more than one rater.
• Intrarater reliability refers to the same evaluator.
• Interrater reliability refers to different evaluators.

Test Reliability
How to increase scoring precision
• Practice giving the test to a sample of clients
• Follow the exact published protocol
• Provide consistent motivation
• Provide rest to reduce fatigue
• Help to reduce client fear
• Note any adaptations in test protocol

Chair Stand

Reliability - Review
Reliability
• Test-retest reliability
Test Objectivity
• Intrarater reliability
• Interrater reliability

Test Validity
A valid test is one that measures what it is intended to measure.
• Physical fitness
• Functional limitations
• Motor and sensory impairments
• Fear-of-falling
Tests must be validated on intended clients.

Types of Validity
• Content
• Construct
• Criterion

Test Validity
Content validity: the degree to which a test reflects a defined “domain” of interest.
• Also referred to as “face” or “logical” validity.
Example: Berg Balance Scale
• Domain of interest is balance.
• Participant performs a series of 14 functional tasks that require balance.

Test Validity
Construct-related: the degree to which a test measures a particular construct.
• A construct is an attribute that exists in theory but cannot be directly observed.
Example Test: 8 ft Up & Go
• Construct measured is functional mobility

Test Validity
Criterion-related: evidence demonstrates that test scores are statistically related to one or more outcome criteria.
• Concurrent validity
• Predictive validity

Criterion-Related
Concurrent validity: the degree to which a test correlates with a criterion measure.
• Criterion measure is often referred to as the “gold standard” measure.
• r > .70
• Example: Chair Sit & Reach

Criterion-Related
Predictive validity: evidence demonstrates the degree of accuracy with which an assessment predicts how participants will perform in a future situation.

Predictive Validity Example
Test: Berg Balance Scale
Older adults who score above 46/56 have a high probability of not falling when compared to older adults who score below this cutoff.

Validity - Review
• Content-related
• Construct-related
• Criterion-related
  • Concurrent validity
  • Predictive validity

Discrimination Power
Important for measuring different ability levels, and for measuring change over time.
Continuous measure tests
• Result in a spread of scores
• Avoid “ceiling effects” (test too easy)
• Avoid “floor effects” (test too hard)
• Responsiveness

Discrimination Power
Examples:
• Senior Fitness Test (ratio scale): uses time and distance measures
• FAB and BBS (5 pt. ordinal scale): allow for “more change in scores” than Tinetti’s POMA and the FEMBAF, which only have 2-3 point scales

Characteristics of Sound Tests
Performance Standards
• Evaluated relative to a peer group (norm-referenced standards). Example: Senior Fitness Test
• Evaluated in relation to predetermined, desired outcomes (criterion-referenced standards). Example: 8 ft Up & Go

Other Characteristics of Sound Tests
• Social acceptability: meaningful
• Feasibility: suitable for use in a particular setting

Review!
• Reliability
• Validity
• Discrimination
• Performance Standards
• Social Acceptability
• Feasibility
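
The reliability and concurrent validity slides both quote correlation values (r = .80 and > .70). As a minimal sketch, the Python below shows one way such a coefficient could be computed from two sets of scores. It makes three assumptions not stated in the slides: that r is the Pearson correlation, that the quoted values act as minimum acceptable levels, and that the scores look like the made-up numbers shown here. The names `pearson_r` and `meets_threshold` are hypothetical helpers written for this illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of deviations from the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary for r to be defined")
    return cov / sqrt(ss_x * ss_y)


def meets_threshold(r, minimum):
    """True if r reaches the assumed minimum acceptable value."""
    return r >= minimum


# Hypothetical scores for six clients tested on two different days
# (illustrative numbers only, not data from the slides).
day1 = [12, 15, 9, 14, 11, 17]
day2 = [13, 15, 10, 13, 11, 16]

r_retest = pearson_r(day1, day2)
print(f"test-retest r = {r_retest:.2f}")
print("meets assumed .80 level:", meets_threshold(r_retest, 0.80))
```

The same function could be reused for concurrent validity by passing scores from the field test and from the "gold standard" criterion measure, then comparing the result with .70 instead of .80.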