Student peer-marking of critical appraisal essays – Gillian

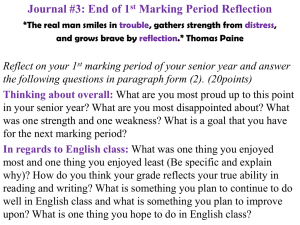

Student Peer-Assessment of critical appraisal essays
Gill Price, 2012
Norwich Medical School, University of East Anglia

Outline
• Why critical appraisal essays
  – history
• Why peer-marking
• Why formative
• The assignment
  – Instructions, paper/s to be appraised
  – Marksheet
• Peer-marking arrangements
• Moderating

Outline (contd)
• Results
  – Marks
  – Student evaluations of the process
  – Student feedback to peers
• Conclusions

Critical appraisal – Why?
• Essential for practice of EBM (?)
• Required by ‘Tomorrow’s Doctors’:
  “Outcomes for graduates: The Doctor as a Scholar and a Scientist
  #12 Applying scientific method to medical research
  Critically appraise the results of relevant diagnostic, prognostic and treatment trials and other qualitative and quantitative studies as reported in the medical and scientific literature.”
  (General Medical Council 2009)

Critical appraisal: Local history (Norwich Medical School, UEA)
• 5 critical appraisal assignments (‘Analytical Reviews’, ARs) in Years 1-2 (~150 students each year)
  – Some short-answer, some essay format (all 2000 words)
  – General medical research article
  – 5 weeks to brew
  – 3 Study designs plus 1 qualitative methods
  – Formative assessment started in 2008-9
    • 1 in Year 1, 1 in Year 2

Critical appraisal examples
• instructions
• paper
• marksheet
• marking guidelines

Teaching support
• Extensive “Research Methods” teaching series in Years 1-2 (10-20 sessions/term)
• ‘Basic’ stats (plus regression)
  – all focussed on interpretation
• Study designs, critical appraisal seminars

Peer-assessment – why?
• To distil student learning and deepen understanding
• To give students experience & practice at giving feedback
• To save staff marking time

Bloom’s Taxonomy of educational objectives (Atherton, 2005)

Peer assessment - how
• Students submit one script to deadline
• Marking guidelines then made available
  – each student instructed to mark their own script in a week
• Next week have a ‘marking lecture’
  – explains the procedure and main points
• Compulsory 2-hr marking session in 5 rooms
  – each has a tutor

Formative peer-assessment exercise
Submitted scripts (ID number only)
• Room 1 (30 students): mark Room 3 scripts
• Room 2 (30 students): mark Room 1 scripts
• Room 3 (30 students): mark Room 2 scripts
• Room 4 (29 students): mark Room 5 scripts
• Room 5 (29 students): mark Room 4 scripts

Peer assessment – what each student does
• Each student receives the script of another plus marking guidelines
• Marking done within the session
• Marks and feedback to be given
• Marker is anonymous
  – script identified only by ID
  – Staff have a record of who marked whose
• Hand in for ‘moderation’ and returned to author with feedback

Principles of Marking
• scope for flexibility in marking scheme
• not exact science, subjective, markers will differ!
• give credit for sound and consistent arguments based on evidence
• credit points made in a different section
• must show understanding of concepts, not merely repeat what authors say
• must be consistent in argument, not contradictory
• ‘grammatical prose’

‘Moderation’
• Module leader entered student marks (from second year of peer-marking only) and checked a sample of scripts from each stratum (around 20% of total)
• Added to student feedback where necessary
• Message sent to all students giving the distribution of marks, a summary of differences with moderator in the sample
• The mean difference between the moderator’s score and the student’s score was -0.2 (SD 1.4) in 2009-10.
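
As a rough illustration of how a moderation summary like the one above (mean difference and SD) could be computed, here is a minimal Python sketch. The mark pairs are hypothetical and are not the study data; the sign convention (moderator mark minus peer mark) and the use of the sample standard deviation are assumptions, since the slides do not state either.

```python
from statistics import mean, stdev


def moderation_summary(pairs):
    """Return (mean, sample SD) of moderator-minus-peer mark differences.

    `pairs` is a list of (moderator_mark, peer_mark) tuples for the
    moderated sample. The sign convention is an assumption.
    """
    diffs = [moderator - peer for moderator, peer in pairs]
    return mean(diffs), stdev(diffs)


# Hypothetical (moderator mark, peer mark) pairs on the 0-15 scale.
# These values are invented for illustration; they are NOT the study data.
sample = [(9, 10), (11, 11), (7, 8), (12, 11), (8, 8), (10, 12)]

mean_diff, sd_diff = moderation_summary(sample)
print(f"mean difference = {mean_diff:.1f}, SD = {sd_diff:.1f}")
```

A negative mean under this convention would indicate that peer markers were, on average, slightly more generous than the moderator.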
Results (1): Student marks (% failed first time)

| | 2007-8 | 2008-9 | 2009-10 | *2010-11 | *2011-12 |
|---|---|---|---|---|---|
| Term 1 Formative | 7% (summative) | No data | 36% | 28% | 13% |
| Term 2 Summative | 5% | 7% | 11% | 9% | 9% |

*From 2010-11 the marking range was expanded from 0-15 (with pass mark 8) to 0-24 (with pass mark 14)

Comparison of Term 1 formative and Term 2 summative marks for the same students 2009-10 (pass mark=8)
[Figure: two density plots. Panel 1: Peer-assessed, formative marks 2009-10, n=160 (x-axis: Rot 1 mark (peer-assessed); y-axis: Density). Panel 2: Staff-marked, summative marks 2009-10, n=160 (x-axis: Rot 2 mark; y-axis: Density).]

Comparison of Term 1 formative and Term 2 summative marks for the same students 2010-11 (pass mark=14)
[Figure: two density plots. Panel 1: Peer-assessed, formative marks 2010-11, n=146 (x-axis: Rot 1 mark (peer-assessed); y-axis: Density). Panel 2: Staff-marked, summative marks 2010-11, n=146 (x-axis: corrected rot2 mark after moderating; y-axis: Density).]

Results (2) Student feedback on the process (N=159) after first year of peer-assessment 2008-9
Most-common responses to open-ended questions

What you learned
• What markers are looking for in AR*, what aspects to focus on (n=79)
• Marking ARs is difficult (24)
• How to organise/structure essay better (15)
• Subjectivity: Unclear what happens if you disagree with marker or include things not in guidelines (9)
• How others appraise a paper (6)

What needs improving
• Was useful as it was (n=17)
• Want scripts marked also by examiners, more authoritative feedback (11)
• Streamline the process (12)
• Improve anonymity (11)

*AR=analytical review (=critical appraisal)

Results (2) Student feedback on the process: The ‘formative’ effect
• In later years some students commented in evaluations about their peers not making an effort in writing the script, which spoiled the marking experience!
• Scrutiny of the scripts with lowest peer-marks revealed that students wrote something vague, but when they came to the parts where most students struggle (e.g. the Results section!) they simply gave up.
• Some scripts could be regarded as ‘token submissions’ (very low word count and apparently little effort applied)
• Negation of the point of formative - ‘having a go’

Results (3) – some favourite examples of peer-to-peer feedback
• Marker: “Avoid using confusing technical terms as sometimes you don’t seem to understand them”
• Excerpt from script: “Also the objectives the researchers were measuring were presented well which helped produce more readily interpretive and generalisable results. This also helped the aims of the researchers to be achieved.”
  Comment by marker: “Because the data was well presented? Really?”
• Marker: “It is evident that you understand what you’re talking about, just make sure and cover what is asked”

[this and take ]
Moderator marks on right edge of structured feedback-sheet

Some students do ‘get it’ !!...
[you know this as ] [ terminology correctly in ]

Conclusions
• Peer assessment can be a great learning experience if taken seriously
• Gives insight for writing the assignment, what is expected, how subjective is critical appraisal, and how hard it is to mark
BUT
• Interpretations in essays which are not covered in marking guidelines are hard for students to assess
• Formative assignments mean many students do not try very hard: they skim over the ‘difficult’ parts
• Students not satisfied with peer-only assessment still want staff to mark their work for ‘authoritative’ feedback

References
• Atherton JS. Learning and Teaching: Bloom's taxonomy [On-line] UK. 2005 [updated 2005; cited January 2009]. Available from: http://www.learningandteaching.info/learning/bloomtax.htm
• General Medical Council (2009) Tomorrow's doctors: Outcomes and standards for undergraduate medical education. London: General Medical Council.