Slides for lecture 12

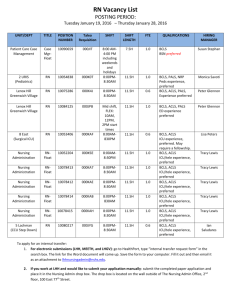

advertisement

CMSC 414

Computer (and Network) Security

Lecture 12

Jonathan Katz

Midterm?

Will be held Oct 21, in class

Will cover everything up to and including the preceding lecture (Oct 16)

Includes all reading posted on the class syllabus!

Homework review?

Questions on HWs 1 or 2?

Integrity policies

(Chapter 6)

Some requirements/assumptions

Users will not write their own programs

– Will use existing programs and databases

Programs will be written/tested on a nonproduction system

A special process must be followed to install a new program on the production system

Requirements, continued…

The special installation process is controlled and audited

Auditors must have access to both system state and system logs

Some corollaries…

“Separation of duty”

– Basically, have multiple people check any critical function (e.g., software installation)

“Separation of function”

– Develop new programs on a separate system

Auditing

– Recovery/accountability

Biba integrity model

Ordered integrity levels

– The higher the level, the more confidence

• More confidence that a program will act correctly

• More confidence that a subject will act appropriately

• More confidence that data is trustworthy

– Note that integrity levels may be independent of security labels

• Confidentiality vs. trustworthiness

• Information flow vs. information modification

Information transfer

An information transfer path is a sequence of objects $o_1, \dots, o_n$ and subjects $s_1, \dots, s_{n-1}$ such that, for all $i$, $s_i$ can read $o_i$ and write to $o_{i+1}$

Information can be transferred from $o_1$ to $o_n$ via a sequence of read-write operations
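The definition can be checked mechanically. The sketch below is a hypothetical helper (`is_transfer_path` and any subject/object names are illustrative, not from the slides) that takes the read and write permissions as predicates and tests the condition for every $i$.

```python
from typing import Callable, Sequence

Perm = Callable[[str, str], bool]  # (subject, object) -> allowed?


def is_transfer_path(objects: Sequence[str], subjects: Sequence[str],
                     can_read: Perm, can_write: Perm) -> bool:
    """True iff for every i, subjects[i] can read objects[i] and write objects[i+1]."""
    # A path over n objects needs exactly n-1 subjects.
    if len(subjects) != len(objects) - 1:
        return False
    return all(
        can_read(s, objects[i]) and can_write(s, objects[i + 1])
        for i, s in enumerate(subjects)
    )
```

For example, if `alice` can read `o1` and write `o2`, and `bob` can read `o2` and write `o3`, then `o1, o2, o3` with subjects `alice, bob` is a transfer path, but the same objects with the subjects swapped are not.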

“Low-water-mark” policy

s can write to o if and only if the integrity level of s is at least that of o, i.e., $i(o) \le i(s)$

– The information obtained from a subject cannot be more trustworthy than the subject itself

If s reads o, then the integrity level of s is changed to $\min(i(o), i(s))$

– The subject may be relying on data less trustworthy than itself

Continued…

$s_1$ can execute $s_2$ iff the integrity level of $s_1$ is at least that of $s_2$, i.e., $i(s_2) \le i(s_1)$

– Note that, e.g., $s_1$ provides inputs to $s_2$, so $s_2$ cannot be more trustworthy than $s_1$
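A minimal simulation of the low-water-mark rules, assuming integrity levels are integers where a larger number means more trustworthy; the class name `LowWaterMark` and the example names are hypothetical. The point it shows is that `read` always succeeds but can lower the subject's level, which in turn can take away write and execute permissions.

```python
from typing import Dict


class LowWaterMark:
    """Low-water-mark policy; integer levels, larger = more trustworthy (assumption)."""

    def __init__(self, subjects: Dict[str, int], objects: Dict[str, int]):
        self.subj = dict(subjects)  # current subject levels (can only go down)
        self.obj = dict(objects)    # object levels (fixed)

    def read(self, s: str, o: str) -> None:
        # Reading is always allowed, but s drops to min(i(o), i(s)).
        self.subj[s] = min(self.obj[o], self.subj[s])

    def can_write(self, s: str, o: str) -> bool:
        # s may write o iff i(o) <= i(s).
        return self.obj[o] <= self.subj[s]

    def can_execute(self, s1: str, s2: str) -> bool:
        # s1 may execute s2 iff i(s2) <= i(s1).
        return self.subj[s2] <= self.subj[s1]
```

For instance, a level-3 subject that can write a level-3 object loses that ability as soon as it reads a level-1 object, since its own level falls to 1.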

Security theorem

If there is an information transfer path from $o_1$ to $o_n$, then $i(o_n) \le i(o_1)$

– Informally: information transfer does not increase the trustworthiness of the data
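For the low-water-mark policy, the theorem follows from chaining the read and write rules along the path. This sketch assumes each $s_k$ reads $o_k$ before writing $o_{k+1}$, and writes $i'(s_k)$ for the level of $s_k$ after that read (object levels are fixed, and subject levels only decrease, so any further reads by $s_k$ only strengthen the bound):

$$
\begin{aligned}
i'(s_k) &= \min\bigl(i(o_k), i(s_k)\bigr) \le i(o_k) && \text{(read rule)}\\
i(o_{k+1}) &\le i'(s_k) && \text{(write rule)}\\
\Rightarrow\quad i(o_{k+1}) &\le i(o_k) && \text{for } k = 1, \dots, n-1\\
\Rightarrow\quad i(o_n) &\le i(o_{n-1}) \le \dots \le i(o_1)
\end{aligned}
$$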

Drawbacks of this approach

The integrity level of a subject is non-increasing

– A subject will soon be unable to write to objects at high integrity levels

Lowering the integrity levels of objects instead does not help

– It downgrades the integrity level of trustworthy information

Ring policy

Only deals with direct modification

– Any subject may read any object

– s can write to o iff $i(o) \le i(s)$

– $s_1$ can execute $s_2$ iff $i(s_2) \le i(s_1)$

The difference from the low-water-mark policy is that integrity levels of subjects do not change

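Security theorem does not hold here

– Indirect modification is not prevented: s may read a low-integrity object and then write to any object o with $i(o) \le i(s)$, including objects of higher integrity than the one it read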
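Because subject levels never change under the ring policy, its rules reduce to fixed comparisons of two levels. A sketch with integer levels (larger means more trustworthy, an assumption); the function names are hypothetical:

```python
def ring_can_read(i_s: int, i_o: int) -> bool:
    """Any subject may read any object, regardless of levels."""
    return True


def ring_can_write(i_s: int, i_o: int) -> bool:
    """s may write o iff i(o) <= i(s)."""
    return i_o <= i_s


def ring_can_execute(i_s1: int, i_s2: int) -> bool:
    """s1 may execute s2 iff i(s2) <= i(s1)."""
    return i_s2 <= i_s1
```

Unlike the low-water-mark sketch, there is no state to update: a read never changes what the subject may later write or execute.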

Strict integrity policy

“Biba’s model”

– s can read o iff $i(s) \le i(o)$

– s can write o iff $i(o) \le i(s)$

– $s_1$ can execute $s_2$ iff $i(s_2) \le i(s_1)$

Note that read and write are both allowed only if $i(s) = i(o)$

Security theorem holds here as well
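The strict policy's read and write rules mirror each other, which is where the equal-level condition comes from. A sketch under the same integer-level assumption (function names hypothetical); the execute rule is a single comparison of the two subjects' levels and is omitted:

```python
def strict_can_read(i_s: int, i_o: int) -> bool:
    """'No read down': s may read o iff i(s) <= i(o)."""
    return i_s <= i_o


def strict_can_write(i_s: int, i_o: int) -> bool:
    """'No write up': s may write o iff i(o) <= i(s)."""
    return i_o <= i_s


def strict_read_and_write(i_s: int, i_o: int) -> bool:
    """True iff s may both read and write o; the two rules together force i(s) == i(o)."""
    return strict_can_read(i_s, i_o) and strict_can_write(i_s, i_o)
```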

Lipner’s basic model

Based loosely on Bell-LaPadula

– Two security levels

• Audit manager (AM)

• System low (SL)

– Five categories

• Development (D): production programs under development

• Production code (PC): production processes/programs

• Production data (PD)

• System development (SD): system programs under development

• Software tools (T): programs unrelated to protected data

Lipner’s model, continued

Assign users to levels/categories; e.g.:

– Regular users: (SL, {PC, PD})

– Developers: (SL, {D, T})

– System auditors: (AM, {D, PC, PD, SD, T})

– Etc.

Lipner’s model, continued

Objects are assigned levels/categories based on who should access them; e.g.:

– Ordinary users should be able to read production code, so this is labeled (SL, {PC})

– Ordinary users should be able to write production data, so this is labeled (SL, {PC, PD})

– Follows Bell-LaPadula methodology…
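Lipner's labels can be checked with the ordinary Bell-LaPadula rules. The sketch below encodes the subject labels and the two object labels given above, assuming SL < AM and using the simple security property for reads and the *-property for writes; the helper names (`label`, `dominates`, `can_read`, `can_write`) are hypothetical.

```python
from typing import FrozenSet, NamedTuple

# Security levels, ordered: system low (SL) below audit manager (AM).
LEVELS = {"SL": 0, "AM": 1}


class Label(NamedTuple):
    level: str
    cats: FrozenSet[str]


def label(level: str, *cats: str) -> Label:
    return Label(level, frozenset(cats))


def dominates(a: Label, b: Label) -> bool:
    """a dom b iff a's level is at least b's and a's categories include b's."""
    return LEVELS[a.level] >= LEVELS[b.level] and a.cats >= b.cats


def can_read(subj: Label, obj: Label) -> bool:
    # Simple security property: the subject must dominate the object.
    return dominates(subj, obj)


def can_write(subj: Label, obj: Label) -> bool:
    # *-property: the object must dominate the subject.
    return dominates(obj, subj)


# Subject labels from the slides.
regular_user = label("SL", "PC", "PD")
developer = label("SL", "D", "T")
auditor = label("AM", "D", "PC", "PD", "SD", "T")

# Object labels from the slides.
production_code = label("SL", "PC")
production_data = label("SL", "PC", "PD")
```

With these labels, a regular user can read production code but not write it, can write production data, and a developer can neither read nor write production data.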

Properties

This satisfies the initial requirements:

– Users cannot execute category T, so they cannot write their own programs

– Developers do not have read/write access to PD, so they cannot access production data

• If they need production data, the data must first be downgraded to D (this requires system administrators)

– Etc.

Lipner’s full model

Augment security classifications with integrity classifications

Now, a subject’s access rights to an object depend on both its security classification and its integrity classification

– E.g., a subject can read an object only if the subject’s security class dominates the object’s and the object’s integrity class dominates the subject’s
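A sketch of a combined check, assuming each subject and object carries a Bell-LaPadula label and a separate Biba-style integrity label, both of the (level, categories) form. The integrity level numbers and the category name `IP` are hypothetical, not Lipner's actual assignments.

```python
from typing import FrozenSet, NamedTuple


class Label(NamedTuple):
    level: int              # larger number = higher level (assumption)
    cats: FrozenSet[str]


class Entity(NamedTuple):
    sec: Label    # security (confidentiality) classification
    integ: Label  # integrity classification


def dom(a: Label, b: Label) -> bool:
    return a.level >= b.level and a.cats >= b.cats


def can_read(s: Entity, o: Entity) -> bool:
    # Security: subject dominates object (no read up).
    # Integrity: object dominates subject (no read down).
    return dom(s.sec, o.sec) and dom(o.integ, s.integ)


def can_write(s: Entity, o: Entity) -> bool:
    # Security: object dominates subject (no write down).
    # Integrity: subject dominates object (no write up).
    return dom(o.sec, s.sec) and dom(s.integ, o.integ)
```

A subject at the same security class as an object but with higher integrity may still write to it, yet cannot read it, because the object's data is less trustworthy than the subject.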

Clark-Wilson model (highlights)

Transactions are the basic operation

– Not subjects/objects

The system should always remain in a “consistent state”

– A well-formed transaction leaves the system in a consistent state

Must also verify the integrity of the transactions themselves
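A toy illustration of a well-formed transaction, assuming a hypothetical banking system whose consistency condition is that balances are non-negative and sum to a recorded total. `transfer` computes the new state, checks the condition, and commits only if it holds, so the system moves from one consistent state to another. This sketches the idea only; it does not model Clark-Wilson's certification and enforcement rules.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Bank:
    accounts: Dict[str, int] = field(default_factory=dict)
    total: int = 0  # recorded total that all balances must sum to

    @staticmethod
    def _ok(accounts: Dict[str, int], total: int) -> bool:
        # Hypothetical consistency condition for this toy system.
        return sum(accounts.values()) == total and all(v >= 0 for v in accounts.values())

    def consistent(self) -> bool:
        return self._ok(self.accounts, self.total)

    def transfer(self, src: str, dst: str, amount: int) -> None:
        """Well-formed transaction: commits only if the new state is consistent."""
        if amount <= 0 or src not in self.accounts or dst not in self.accounts:
            raise ValueError("malformed transaction")
        new = dict(self.accounts)
        new[src] -= amount
        new[dst] += amount
        if not self._ok(new, self.total):
            raise ValueError("transaction would leave an inconsistent state")
        self.accounts = new  # all-or-nothing commit
```

A rejected transfer (e.g., one that would overdraw an account) leaves the stored state exactly as it was.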

Access control mechanisms

(Chapter 15)

The problem

Drawbacks of access control matrices…

– In practice, number of subjects/objects is large

– Most entries blank/default

– Matrix is modified every time subjects/objects are created/deleted

Access control lists (ACLs)

Instead of storing a central matrix, store each column with the object it represents

– Stored as pairs (s, r), where r is the set of rights subject s has over the object

Subjects not in list have no rights

– Can use wildcards to give default rights
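A sketch of per-object ACLs: each object maps subjects to their rights, and a hypothetical `"*"` key supplies default rights. Here an explicit entry takes precedence over the wildcard; that precedence is a design assumption, not something the slide specifies. The object and subject names are illustrative.

```python
from typing import Dict, Set

# Each object stores its own column of the access control matrix:
# subject -> set of rights. The "*" key is a hypothetical wildcard for defaults.
acls: Dict[str, Dict[str, Set[str]]] = {
    "grades.txt": {"instructor": {"r", "w"}, "*": {"r"}},
    "exam.pdf": {"instructor": {"r", "w"}},
}


def has_right(subject: str, obj: str, right: str) -> bool:
    acl = acls.get(obj, {})
    # An explicit entry wins; otherwise fall back to the wildcard, if any.
    # Subjects matching neither get no rights.
    rights = acl[subject] if subject in acl else acl.get("*", set())
    return right in rights
```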

Example: Unix

Unix divides users into three classes:

– Owner of the file

– Group owner of the file

– All other users

Note that this leaves little flexibility…

Some systems have been extended to allow for more flexibility

– Abbreviated ACLs (the owner/group/other scheme) are overridden by explicit ACLs
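A sketch of the classic Unix check using the permission-bit constants from Python's `stat` module. Only the first matching class (owner, then group, then other) is consulted, which is part of why the scheme is inflexible; root and any extended ACLs are deliberately not modeled, and the function name is hypothetical.

```python
import stat
from typing import Set

# Permission bits for each of the three Unix user classes.
_BITS = {
    "owner": {"r": stat.S_IRUSR, "w": stat.S_IWUSR, "x": stat.S_IXUSR},
    "group": {"r": stat.S_IRGRP, "w": stat.S_IWGRP, "x": stat.S_IXGRP},
    "other": {"r": stat.S_IROTH, "w": stat.S_IWOTH, "x": stat.S_IXOTH},
}


def unix_allows(mode: int, file_uid: int, file_gid: int,
                uid: int, gids: Set[int], want: str) -> bool:
    """Return True if a process with (uid, gids) gets right `want` ('r', 'w' or 'x')."""
    if uid == file_uid:
        cls = "owner"   # owner bits apply, even if group/other bits are more generous
    elif file_gid in gids:
        cls = "group"
    else:
        cls = "other"
    return bool(mode & _BITS[cls][want])
```

With mode `0o070`, for instance, the owner is denied read access even though every member of the file's group is allowed it.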

Modifying ACLs

Only processes that “own” the object can modify the ACL of the object

– Sometimes, there is a special “grant” right (possibly per right)
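A sketch of the ownership rule plus a single grant right. The class name and the right name `"g"` are hypothetical, and a per-right grant would need one grant flag per right rather than the single overall flag used here.

```python
from typing import Dict, Set


class ProtectedObject:
    """An object whose ACL maps subject names to sets of rights."""

    def __init__(self, owner: str):
        self.owner = owner
        self.acl: Dict[str, Set[str]] = {owner: {"r", "w"}}

    def set_rights(self, caller: str, subject: str, rights: Set[str]) -> None:
        # Only the owner, or a subject holding the hypothetical grant right "g",
        # may change this object's ACL.
        if caller != self.owner and "g" not in self.acl.get(caller, set()):
            raise PermissionError(f"{caller} may not modify this ACL")
        self.acl[subject] = set(rights)
```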

Privileged user?

How do ACLs apply to privileged user?

– E.g., in Solaris both abbreviations of ACLs and “full” ACLs are used

• Abbreviated ACLs are ignored for root, but full ACLs apply even to root
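A hypothetical model of the rule as stated on the slide, not of Solaris's actual access-check algorithm: if an object has a full ACL, it is consulted for every subject including root; otherwise only the abbreviated rights apply, and root bypasses them. Here `abbreviated` stands for the rights the abbreviated ACL grants this user, and all names are illustrative.

```python
from typing import Dict, Optional, Set

ROOT_UID = 0


def may_access(uid: int, right: str, abbreviated: Set[str],
               full: Optional[Dict[int, Set[str]]]) -> bool:
    if full is not None:
        # A full ACL is consulted for every subject, root included.
        return right in full.get(uid, set())
    if uid == ROOT_UID:
        return True  # abbreviated ACLs are ignored for root
    return right in abbreviated
```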

Groups/wildcards?

Groups and wildcards reduce the size and complexity of ACLs

– E.g., user : group : r

• * : group : r

• user : * : r
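A sketch of matching `user : group : rights` entries with `*` as a wildcard, assuming a subject receives the union of rights from every matching entry (an assumption; real systems differ in how they combine entries). The entry format and function name are hypothetical.

```python
from typing import List, Set, Tuple

# One ACL entry: (user, group, rights); "*" in either position matches anything.
Entry = Tuple[str, str, Set[str]]


def rights_for(acl: List[Entry], user: str, groups: Set[str]) -> Set[str]:
    """Union of the rights in every entry that matches the user and one of their groups."""
    granted: Set[str] = set()
    for entry_user, entry_group, rights in acl:
        if entry_user in ("*", user) and (entry_group == "*" or entry_group in groups):
            granted |= rights
    return granted
```

Three entries of this form can stand in for what would otherwise be one explicit entry per user.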