Presentation - SNOW Workshop

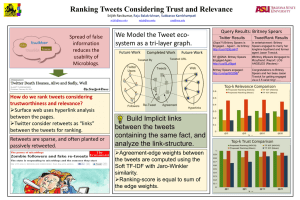

advertisement

Real-time topic detection with bursty ngrams: RGU participation in SNOW 2014 challenge Carlos Martin and Ayse Goker (Robert Gordon University) SNOW Workshop, 8th April 2014 Outline • Architecture diagram • Results • Future work #2 Architecture diagram Entities Extractor Crawler Tweets (English) Tweets Tweets (with Entities) Solr #3 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets Keyword Extractor Merged topics Topics Combiner BNgram Tweets (with Entities) Topic Aggregator Topics (+ keywords, entities, hashtags and urls) Solr #4 Topics (+ tweets) Query Builder Topic Labeller Topics (+ label) Entities Extractor • Extract entities per tweet using Stanford NER (http://nlp.stanford.edu/software/CRF-NER.shtml). • 3 class model Identifies Person, Location and Organization. • Efficient enough for a real-time system. #5 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets BNgram Tweets (with Entities) Solr #6 BNgram approach • Detection of bursty ngrams based on df-idf score Bursty entities, hashtags and urls are also included in the approach. Re ngrams, 2- and 3-grams are considered (no unigrams anymore). • Variant of tf-idf Penalization of frequent terms in previous timeslots. • Terms containing hashtags, entities, urls are boosted. • Two previous timeslots (s=2) were considered in our experiments. #7 BNgram approach • “Partial” membership clustering approach is an interesting alternative as one term could belong to different clusters (For example, entity “Obama” for the stories “Obama wins in Ohio” and “Obama wins in Illinois”). • Apriori clustering algorithm has been used in the experiments of SNOW challenge • Explore maximal associations between terms based on the number of shared tweets. #8 BNgram approach • Output: Clusters of trending terms with tweets from the last timeslot associated to them. • A tweet should contain a minimum number of cluster terms to be included. • Clusters are ranked by their bursty scores (maximum df-idf value of topic terms) #9 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets Keyword Extractor BNgram Tweets (with Entities) Topic Aggregator Topics (+ keywords, entities, hashtags and urls) Solr #10 Keyword Extractor and Topic Aggregator modules • Topic Aggregator module: – Aggregate entities, hashtags and urls per topic (coming from topic tweets of the corresponding timeslot) keeping their frequencies. – Keep those ones whose frequency is higher than a threshold. • Keyword Extractor module: – Extract main keywords (including ngrams) per topic (not extracted from Topic Aggregator) using bursty terms from the clusters. – Removal of urls, hashtags, user mentions, entities and acronyms. – Overlaps are also removed. – Keep df-idf scores as their weights. #11 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets Keyword Extractor Merged topics Topics Combiner BNgram Tweets (with Entities) Topic Aggregator Topics (+ keywords, entities, hashtags and urls) Solr #12 Topic Combiner module • Topic Combiner module: – Merge similar topics from the same timeslot. – Based on the co-occurrence of keywords (unigrams), entities, hashtags and urls from the compared topics. – According to preliminary results, Apriori algorithm makes this module more accurate as one term could belong to different topics. #13 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets Keyword Extractor Merged topics Topics Combiner BNgram Tweets (with Entities) Topic Aggregator Topics (+ keywords, entities, hashtags and urls) Solr #14 Topics (+ tweets) Query Builder Query Builder module • Creation of final queries to retrieve all the related tweets to the topic (Solr queries) and also filtering by time (simulating real-time scenario). • 3 types of queries: – Keywords – Entities and Hashtags – Urls • If keywords and entities in topic, keywords closer to the entities are the selected ones. • Image population: If tweets contains links to images (metadata), they are added to the topic. #15 Query Builder module • Replies are also considered. Be careful with spam replies • Replies are not text-query dependent. More diversity?. • Sentiment analysis, extraction of relevant keywords. #16 Query Builder module • Diverse tweets are computed based on cosine similarity. • This approach could be more or less strict depending on the selected threshold. #17 Architecture diagram Ranked topics Entities Extractor Crawler Tweets (English) Tweets Keyword Extractor Merged topics Topics Combiner BNgram Tweets (with Entities) Topic Aggregator Topics (+ keywords, entities, hashtags and urls) Solr #18 Topics (+ tweets) Query Builder Topic Labeller Topics (+ label) Topic Labeller module • BuzzFeed editor-in-chief Ben Smith: “Headlines sure look a lot like tweets these days.” (http://perryhewitt.com/5-lessons-buzzfeedharvard/) • For each topic tweet, a score is computed based on the following formula. where α = 0.8. The tweet with the highest score is selected as the Topic label after cleaning it. #19 Topic Labeller module • Example of tweets after cleaning them • Granularity is still an issue Some topic labels are too general or specific. #20 Results - Examples of topics #21 Future work • Improve Topic Combiner module – use of similarity measures. • Further research on the use of replies and diverse tweets per Topic. • Improve Topic Labeller module – granularity issue. • Modifications in QueryBuilder module – use of term weights (Solr). #22 Thank you! E-mail address: c.j.martin-dancausa@rgu.ac.uk Twitter account: @martincarloscit