A Process From Start to Finish

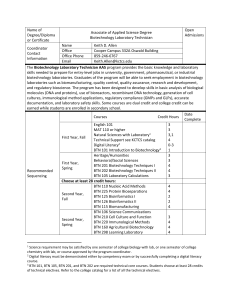

Program Assessment: A Process From Start to Finish
RJ Ohgren – Office of Judicial Affairs
Mandalyn Swanson, M.S. – Center for Assessment and Research Studies
James Madison University

Session Outcomes
By the end of this session, attendees will be able to:
• Explain how assessment design informs program design
• Describe the “Learning Assessment Cycle”
• Express the difference between a goal, a learning objective, and a program objective
• Identify effective frameworks for designing learning outcomes
• Define fidelity assessment and recognize its role in the Learning Assessment Cycle

By The Numbers: Where We Were v. Where We Wanted to Be

Why Assess?
It’s simple:
• The assessment cycle keeps us accountable and intentional
• We want to determine whether the benefits we anticipated actually occur
• Are changes in student performance due to our program?
If we don’t assess:
• Programming could be ineffective – we won’t know
• Our effective program could be terminated – we have no proof it’s working

Typical Assessment
• Well, we’ve got to do a program. Let’s put some activities together.
• Let’s ask them questions afterwards about what we hope they got out of it.
• Um…let’s ask if they liked the program too. And let’s track attendance.
• Survey says…well, they didn’t really learn what we’d hoped. But they liked it? And a good number of people came? Success!

Proper Assessment
What do we want students to know, think, or do as a result of this program?
• Let’s define goals and objectives that get at what we want students to know, think, or do.
• What specific, measurable things could show that we’re making progress toward these goals and objectives?
• What activities can we incorporate to get at those goals and objectives?
We have a program!

How are these approaches different?
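One difference is that proper assessment defines success before the program runs. As a minimal sketch, assuming each student's result on an objective is recorded simply as met or not met (the `Objective` class, its fields, and the sample data are hypothetical and not part of the BTN materials), checking a target such as "80% of students will be able to identify 2 JMU policies relating to alcohol" reduces to comparing the observed pass rate with the threshold:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Objective:
    """A measurable objective: what students should do, plus a success threshold (hypothetical)."""
    description: str
    target_rate: float  # e.g. 0.80 for "80% of students will..."

    def pass_rate(self, results: List[bool]) -> float:
        """Share of students who met the objective (True = met)."""
        if not results:
            raise ValueError("no student results recorded")
        return sum(results) / len(results)

    def is_met(self, results: List[bool]) -> bool:
        """The objective counts as met when the pass rate reaches the target."""
        return self.pass_rate(results) >= self.target_rate


# Hypothetical data: 10 students, 8 of whom identified two alcohol policies.
policies = Objective("Identify 2 JMU policies relating to alcohol", target_rate=0.80)
results = [True] * 8 + [False] * 2
print(f"{policies.pass_rate(results):.0%} met; objective met: {policies.is_met(results)}")
# → 80% met; objective met: True
```

Using `>=` treats exactly reaching the target as success; whether "80%" means "at least 80%" is the kind of decision the objective's definition of success should make explicit.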
Learning Assessment Cycle
[Diagram: the Learning Assessment Cycle, with stages Establish Program Objectives; Create & Map Programming to Objectives; Select and Design Instrument; Implementation Fidelity; Collect Objective Information; Analyze & Maintain Information; Use Information]

Program Goals vs. Learning Goals

Goals, Objectives, & Items
[Diagram: each goal branches into several objectives, and each objective is measured by several items]

Goals v. Objectives
• Goals can be seen as the broad, general expectations for the program
• Objectives can be seen as the means by which those goals are met
• Items measure our progress toward those objectives and goals

Goals vs. Objectives
Goal
• General expectation of student (or program) outcome
• Can be broad and vague
• Example: Students will understand and/or recognize JMU alcohol and drug policies.
Objective
• Statement of what students should be able to do or how they should change developmentally as a result of the program
• More specific; measurable
• Example: Upon completion of the BTN program, 80% of students will be able to identify 2 JMU policies relating to alcohol.

Putting it All Together
[Diagram: each goal branches into one or more objectives, and each objective is paired with an assessment]

By The Numbers Program Goal 1 of 3
• Goal: To provide a positive classroom experience for students sanctioned to By the Numbers
• Objective: 80% of students will report that the class met or exceeded their expectations.
• Item: Class Evaluation #15 – Overall, I feel like this class…
• Objective: 80% of students will agree (or better) with the statement “the facilitators presented the material in a non-judgemental way.”
• Item: Class Evaluation #5.5 – The facilitators presented the material in a non-judgemental way.
• Objective: 60% of students will report an engaging classroom experience.
• Item: Class Evaluation #5.1 – The facilitators encouraged participation.
• Item: Class Evaluation #5.4 – The facilitators encouraged discussion between participants.

By The Numbers Learning Goal 1 of 5
• Goal: To ensure student understanding and/or recognition of JMU alcohol and drug policies.
• Objective: After completing BTN, 80% of students will be able to identify 2 JMU policies relating to alcohol.
• Objective: …identify the circumstances for parental notification.
• Objective: …identify the parties able to apply for amnesty in a given situation.
• Objective: …identify the geographic locations in which JMU will address an alcohol/drug violation.
• Objective: …articulate the three-strike policy.

By The Numbers Learning Goal 2 of 5
• Goal: To ensure student understanding and/or recognition of concepts surrounding alcohol.
• Objective: After completing BTN, 60% of students will be able to provide the definition of a standard drink for beer, wine, and liquor.
• Objective: …identify the definition of BAC.
• Objective: …describe the relationship between tolerance and BAC.
• Objective: …identify at least 2 factors that influence BAC.
• Objective: …identify the definition of the point of diminishing returns.
• Objective: …identify how the body processes alcohol and its effects on the body.

By The Numbers Learning Goal
• Goal: To ensure student understanding and/or recognition of concepts surrounding alcohol consumption.
• Objective: After completing BTN, 80% of students will be able to correctly identify the definition of the point of diminishing returns.
• Item: Assessment Questions #12 and #29
• Activity: Tolerance Activity, Point of Diminishing Returns discussion
• Objective: After completing BTN, 80% of students will be able to identify how the body processes alcohol and its effects on the body.
• Item: Assessment Questions #8, #9, and #10
• Activity: Alcohol in the Body Activity

Developing Learning Outcomes
• Should be Student-Focused – Worded to express what the student will learn, know, or do (Knowledge, Attitude, or Behavior)
• Should be Reasonable – Should reflect what it is possible to accomplish with the program
• Should be Measurable – “Know” and “understand” are not measurable; the action one can take from knowing or understanding is.
• Should have Success Defined – What will be considered passing?

Bloom’s Taxonomy
Levels, from less complex (1) to more complex (6):
1. Knowledge – Recognize facts, terms, and principles
2. Comprehension – Explain or summarize in one’s own words
3. Application – Relate previously learned material to new situations
4. Analysis – Understand the organizational structure of material; draw comparisons and relationships between elements
5. Synthesis – Combine elements to form a new, original entity
6. Evaluation – Make judgments about the extent to which material satisfies criteria

Bloom’s Taxonomy: Verbs by Level
1. Knowledge – match, recognize, select, compute, define, label, name, describe
2. Comprehension – restate, elaborate, identify, explain, paraphrase, summarize
3. Application – give examples, apply, solve problems using, predict, demonstrate
4. Analysis – outline, draw a diagram, illustrate, discriminate, subdivide
5. Synthesis – compare, contrast, organize, generate, design, formulate
6. Evaluation – support, interpret, criticize, judge, critique, appraise

The ABCD Method
• A = Audience: What population are you assessing?
• B = Behavior: What is expected of the participant?
• C = Conditions: Under what circumstances is the behavior to be performed?
• D = Degree: How well must the behavior be performed? To what level?
From “How to Write Clear Objectives”

The ABCD Method: Example
• Audience: By the Numbers participants
• Behavior: Describe the relationship between tolerance and BAC
• Condition: After taking the class
• Degree: 80%
• Objective: After completing BTN, 80% of students will be able to describe the relationship between tolerance and BAC.

Common Mistakes
• Vague behavior – Example: Have a thorough understanding of the university honor code.
• Gibberish – Example: Have a deep awareness and thorough humanizing grasp on…
• Not student-focused – Example: Train students on how and where to find information.

Program Implementation
Give the program you say you will. How?
• Pre-test (low item score) → Program → Post-test (high item score)
• Pre-test (low item score) → Program → Post-test (low item score)

Fidelity Assessment
• Are you doing what you say you’re doing?
• Helps ensure your program is implemented as you intended
• Links learning outcomes to programming
• Helps answer “why” we aren’t observing the outcomes we think we should be observing

Fidelity Components
• Program Differentiation – How are the many components of your program different from one another?
• Adherence – Was your program delivered as intended?
• Quality – How well were the components administered?
• Exposure – How long did each component last? How many students attended?
• Responsiveness – Were participants engaged during the program?

Fidelity Checklist – Generic
Columns (one row per objective):
• Student Learning Outcomes: Objective X
• Program Component: Component(s) aligned with Objective X
• Duration: Length of component
• Features: List of component-specific features
• Adherence to Features (Y/N): Recorded for each feature
• Quality: Quality rating for each feature

What is rated?
• The live/videotaped program
Who does the rating?
• Independent auditors
• Facilitators
• Participants

Please walk away with this: You Must Assess.
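The generic fidelity checklist above pairs each objective with its program component, duration, features, per-feature adherence (Y/N), and a per-feature quality rating. A minimal sketch of how one rater's checklist row could be summarized, assuming a 1-5 quality scale, planned versus observed minutes for exposure, and hypothetical class names and feature names (none of these come from the session materials):

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FeatureRating:
    """One feature of a program component, as a rater might record it (hypothetical)."""
    feature: str
    adhered: bool                  # Adherence: was the feature delivered? (Y/N)
    quality: Optional[int] = None  # Quality rating; 1-5 scale assumed, None if not delivered


@dataclass
class ComponentChecklist:
    """One checklist row: an objective and the program component aligned with it."""
    objective: str
    component: str
    planned_minutes: int   # Exposure: how long the component was meant to last
    observed_minutes: int  # Exposure: how long it actually lasted
    ratings: List[FeatureRating] = field(default_factory=list)

    def adherence_rate(self) -> float:
        """Share of listed features that were delivered as intended."""
        if not self.ratings:
            raise ValueError("no features listed for this component")
        return sum(r.adhered for r in self.ratings) / len(self.ratings)

    def mean_quality(self) -> Optional[float]:
        """Average quality over delivered, rated features; None if there are none."""
        scores = [r.quality for r in self.ratings if r.adhered and r.quality is not None]
        return sum(scores) / len(scores) if scores else None


# Hypothetical ratings for one BTN component; feature names are illustrative only.
tolerance = ComponentChecklist(
    objective="Identify the definition of the point of diminishing returns",
    component="Tolerance Activity",
    planned_minutes=15,
    observed_minutes=10,
    ratings=[
        FeatureRating("Define tolerance", adhered=True, quality=4),
        FeatureRating("Relate tolerance to BAC", adhered=True, quality=3),
        FeatureRating("Discuss the point of diminishing returns", adhered=False),
    ],
)
print(f"adherence {tolerance.adherence_rate():.0%}, mean quality {tolerance.mean_quality()}")
# → adherence 67%, mean quality 3.5
```

Read alongside the pre-test/post-test picture: if post-test scores for this objective stay low while adherence is also low, the program as delivered (rather than the program as designed) is a likely explanation, which is the “why” question fidelity assessment is meant to answer. Averaging quality only over delivered features is a design choice of this sketch.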