Pub.policy221.winter.11.wk9

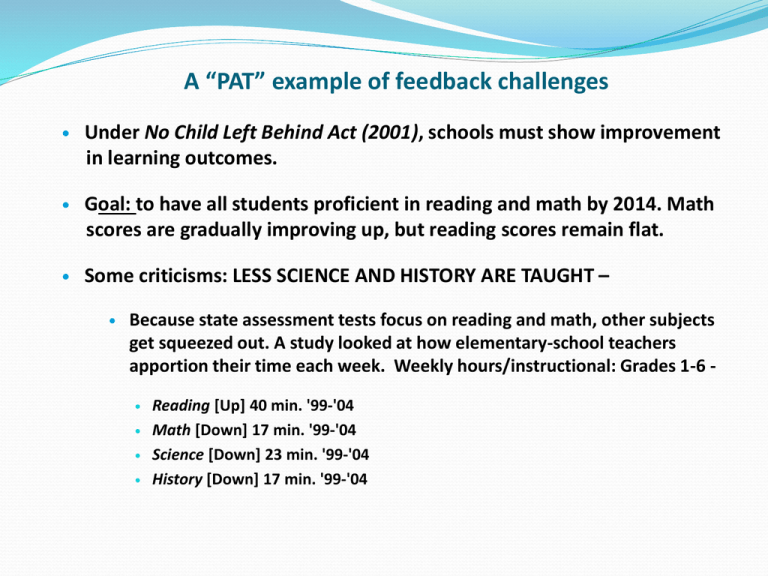

A “PAT” example of feedback challenges

Under the No Child Left Behind Act (2001), schools must show improvement in learning outcomes. Goal: to have all students proficient in reading and math by 2014. Math scores are gradually improving, but reading scores remain flat. Some criticisms:

LESS SCIENCE AND HISTORY ARE TAUGHT – Because state assessment tests focus on reading and math, other subjects get squeezed out. A study looked at how elementary-school teachers apportion their time each week.

Change in weekly instructional time, Grades 1-6, '99-'04:

| Subject | Direction | Change |
|---|---|---|
| Reading | Up | 40 min. |
| Math | Down | 17 min. |
| Science | Down | 23 min. |
| History | Down | 17 min. |

A “PAT” example (cont.)

LOWER STATE STANDARDS: Federal law requires that students be tested annually to determine reading and math skills, but it is up to the states to devise the exam. The result, critics say, is that some states make their tests easier so that it appears their students are doing well. The evidence: huge gaps between state results and scores on national standardized tests. Anecdotal evidence suggests that when time-to-teach increases, so do scores. By its own count, Mississippi is tied for the best score in the country. But on a U.S. test called the National Assessment of Educational Progress, the state drops to 50th place, a whopping 71 points lower.

Sources: National Center for Education Statistics; the Education Trust; Testing, Learning and Teaching by Martin West, Brown University

Conclusion – information and implementation

Governments often fail to acknowledge the need to synthesize what experts and policy analysts know with local, indigenous, or “street-level” knowledge. Over-reliance on experts can lead to efforts to intimidate or indoctrinate the public (e.g., efforts to compel immigrants to abandon their folkways; efforts to get the public to accept the ‘safety’ of nuclear power – Stone, 2001).
We need to translate expert knowledge into information usable by local populations through sensitivity to local cultural practices, norms, and traditions. Experts must incorporate community perspectives into professional judgments to gather all the information needed for making good decisions (Blood, 2003; Frank Fischer, 2001), e.g., in citizen participation and environmental risk, community expectations about schools, and local public health and nutrition.

Evaluating policy – outcomes and evaluators

Evaluation is often termed the “final” stage of the policy process. It is viewed as scientific and “detached” but is usually neither: it is agenda-driven, valuational, and political. Two issues: 1) the range of organizations doing evaluation, and the approaches they employ; and 2) the divergent ways problems are defined and evaluated by policy makers and analysts.

Who are the “evaluators”?

Numerous organizations: some issue-based, others philosophy-based, with different sources of funding, clients, and products. Evaluation has become a “boutique” industry: you may choose your analysis and evaluation based on your goals, policy orientation, analytic approach, major products, and the stage of the process you want evaluated.

Evaluation as “final” stage of policy

Diagram labels:

• Agenda setting
• Demands – for policy change, reform, re-direction
• Supports – through continued funding, revenue streams, favorable opinions
• Policy making phase: policy formulation/law-making by legislatures
• Policy implementation phase: policy execution – application/enforcement by bureaucracy
• Outputs – laws, rules, regulations
• Feedback – evaluation and policy impact assessment: are policies meeting goals & expectations?

• Every stage of policy features interaction among competing interests.
• Diversity of evaluators and their approaches/philosophies/expectations reflects these interests.
• This explains why, at some level, ALL policies are seen as “failures”; no outcomes will ever be viewed as favorable by all interests.
Failure is in the eyes of the beholder/evaluator.
• Assume, moreover, that IF a policy outcome is viewed as widely successful, supporters will want the policy extended to other groups or issues – thus, it must be shown that outcomes “fall short” in some way (e.g., national parklands, public works programs, cancer research).

An overview of policy evaluation

If evaluation is an interest-laden component of the policy process, then how it is conducted (and whether something is viewed as a failure or success) is subject to divergent perspectives:

Time bias and disposition:

• Temporal data for evaluation – short vs. long; accuracy of projections and interpretations.
• Changes in public attitudes – if a policy “regime” persists for a long period of time, public attitudes toward the policy may change – sometimes due to success!
• Time paradox – if a policy is successful it will be continued beyond its original anticipated duration (e.g., block grants for urban re-development, energy price supports) – increasing the likelihood of some failure!

Spatial and perceptual failures

• Facility-siting issues: e.g., “NIMBY” (not in my back yard), “NIABY” (not in anyone's back yard), and “LULUs” (locally unwanted land uses): energy issues, waste management problems, homes and facilities for special populations (e.g., mentally challenged, ex-convicts).
• Jurisdictional extension-ism issues: federalizing a state policy; internationalizing a national program.
• Social expectation issues: when a program and cultural values are “out of sync” (e.g., civil rights, public housing, public transit).
• Public perception or “unanticipated consequences” bind – e.g., land use, housing, transportation, many environmental decisions.

All these “failures” are inter-connected and systemic – i.e., failures of both outcome and process, or political, not just program, failures!
Success, failure, “successful failure” – Project Apollo (1961 – 1972)

• Apollo 11 – first landing of humans on the moon (July 1969)
• Apollo 13 – oxygen tank explosion in mid-flight forced the mission to be aborted; astronauts safely returned to earth (April 1970)

Manned lunar program and policy evaluation

• Goal – to land an American on the moon and bring him/her back safely by “end of the decade” (May 1961, President Kennedy – succeeded in 1969).
• Cost = $10 billion; divided into three sub-programs to devise different spacecraft, missions, and technical feats – Mercury, Gemini, Apollo.
• Run by NASA – one of the most popular Cold War agencies; aim was partly scientific, partly political (restore national prestige and land on another world).
• Initially successful and popular (e.g., “the Right Stuff” – astronauts were folk heroes).

Other benefits:

• Huge economic multiplier effects: California, Florida, other sun-belt states; for every $1 of public expenditure, $5-7 in other investment/spending/jobs.
• Huge technological spinoffs: telecommunications, personal computers and digital technology, materials science, aerospace, bio-metrics, education (space grant program).

Failure as success

Changes in public mood: Americans became more pessimistic and cynical by the end of the 1960s; Vietnam War, racial divide, persistent poverty and urban problems.

New questions were posed in the framework of Congressional oversight:

• Should we continue to spend money on this?
• If we achieved the goal, then is it appropriate to continue to do this?
• If the goal is “science,” can’t we meet it more economically with un-manned probes?
• If we can “land a man on the moon,” why can’t we fix our cities?

Program succeeded beyond expectations – while there was tragedy (e.g., Apollo 1 in 1967), the goal was achieved and scientific/political gains were significant.

This played out in the context of program evaluation:

• Success bred contempt – NASA made it look too easy – perhaps new goals are needed!
• In 1970, when near-tragedy was averted (Apollo 13), the reaction was: is the risk worth it?
• Given the need for new goals, and the desire to avoid risk, maybe we could better spend a space program budget on other things?

Feedback as a new policy agenda

By 1972, Congress forced NASA into a “choice”: continue moon landings, or have a space shuttle program and space station.

• A re-usable spacecraft that could perform various missions & build “near earth” capacity (i.e., the shuttle would help build the space station).
• Such a program would serve many constituencies: scientific, educational, and even commercial (space policy as distributive policy).
• This option would also permit other monies to be spent on un-manned planetary probes.

Changes in temporal, perceptual & cultural assessments led to policy transformation! (John Logsdon, space policy expert, and others.)

MEDIA REACTION TO APOLLO PROGRAM TERMINATION – 1972:

• William Hines, syndicated Chicago Sun-Times columnist, who had opposed the project from the start: “And now, thank God, the whole crazy business is over.”
• The Christian Science Monitor cautioned that “Such technological feats as going to the moon do not absolve people of responsibilities on earth.”
• Time magazine – “after the magnificent effort to develop the machines and the techniques to go to the moon, Americans lost the will and the vision to press on. Apollo's detractors … were prisoners of limited vision who cannot comprehend, or do not care, that Neil Armstrong's step in the lunar dust will be well remembered when most of today's burning issues have become mere footnotes to history.”

Pruitt-Igoe federal housing project

• Modernist housing project designed in 1951; high-rise “designed for interaction”; seen as a multi-benefit solution to problems of urban renewal:
  • combine housing & services
  • park-like environmental amenities
  • facilitate sense of community
• Completed 1956; thirty-three eleven-story buildings on a 35-acre site just north of downtown St. Louis, MO.
• City officials wanted to build a spatially dense “Manhattan-style” development as a model for middle- as well as low-income families; cheaper to build and maintain.

Pruitt-Igoe as multi-modal policy failure

• Social expectations/public perceptions – it was anticipated as an integrated development. Whites were unwilling to live in close proximity to blacks (de facto segregation). “(Thus) the entire … project soon had only black residents.” (Alexander von Hoffman, Joint Center for Housing Studies, Harvard University).
• Once it was populated by impoverished residents, the project’s outcomes became out of sync with original decision-maker goals of becoming a high-density model of residential development.
• Initially viewed as a social experiment, over time the project came to be seen by the public as a LULU. It was poorly maintained and neglected, largely due to inadequate continued funding (perceptual/temporal change).

Multi-modal policy failure (cont.)

Unanticipated consequences: from failure to failure – “The problems were endless: Elevators stopped on only the fourth, seventh and 10th floors. Tenants complained of mice and roaches. Children were exposed to crime and drug use, despite the attempts of their parents to provide a positive environment. No one felt ownership of the green spaces that were designed as recreational areas, so no one took care of them. A mini-city of 10,000 people was stacked into an environment of despair.”

“A federally built and supported slum.” (Lee Rainwater, 1970)

Problems effectively “cascaded” from one failure to another: neither blame nor responsibility could be ascribed clearly. Once it was perceived as a failure, policy-makers literally (as well as figuratively) abandoned it.

Goal-based failure – planners and policy-makers assumed that a rationally constructed project based on objectivist models of human behavior (i.e., poverty as understood by social scientists) would provide a tenable solution. A classic “principal-agent” problem.
See - http://www.defensiblespace.com/book/illustrations.htm

Razed: 1972-1976

So … why do policies fail?

• Bad timing, poor information – not drawing on adequate information about future conditions, especially in making investment decisions and predicting societal attitudes.
• Poor administrative capacity for reversing flawed decisions – centralized bureaucracies are immune to countervailing pressures; accountability-resistant cultures (Ingram and Fraser, 2005). Seen in many public works-type decisions, as well as social welfare programs.
• Vague rights and responsibilities of policy actors (i.e., who’s responsible for what aspects of a project? Who is held “accountable” if something goes wrong?) (Ascher, 1999).
• Bias and agenda-driven behavior – decisions driven by an agency’s or organization’s ideology or political objectives, rather than in response to a clearly defined problem.
• Conflicts of interest and poor distinction between implementation and evaluation – leading to ‘boondoggles’ or failures in systems that are supposed to be fool-proof and resistant to accident (e.g., Gulf oil “blow-out”; space shuttle accidents).
• Failure to seek and acquire public acceptance of decisions – this includes input into alternative models of choice and incorporation of public values in program design.

Can we ensure that policies succeed?

No … However, policies can be technically credible, scientifically defensible, and politically effective if they are adaptively designed.

• Assume that public policies will fail to fully account for temporal, spatial, perceptual, and goal-based failures.
• Incorporate an “error-provocative design” within the policy – i.e., assume that flaws are inevitable and politically unavoidable given the interest group pressures in their design (Ackerman, 1980; Robison, 1994).
• Prepare for these flaws by providing checks and balances in their implementation – at the first sign of a problem, even a “street-level bureaucrat” responsible for some policy “mode” can veto a decision or demand that some problem be inspected, audited, examined, evaluated, or revisited.
• Permit input from a wide range of stakeholders & provide capacity to modify the policy or program when new information is acquired.