Average - Ottawa Area Intermediate School District

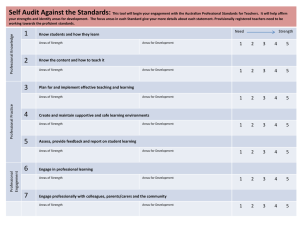

August 13, 2012, 8:00 a.m. – Noon
by Doug Greer & Laurie Smith

1. RELATIONSHIPS
2. RELEVANCE
3. RIGOR
4. RESULTS

During the review sessions and at designated times, please take a moment to briefly answer the following questions:
◦ What is working to help struggling math students?
◦ What is working to help readers who struggle?
◦ What is working to help writers who struggle?
◦ What is working to help struggling science students?
◦ What is working to help students who struggle with social studies (content and skills)?
◦ What are others doing to help struggling learners?

Take 10-20 minutes …

What does the new accountability data mean for our schools and our students?
How do we use the tools provided by MDE to improve teaching and learning?
What other data should we consider when closing the achievement gaps?

Tuesday, July 31: “Embargoed” notice to district superintendents of Priority and Focus schools
Thursday, August 2: Public release likely of the following:
◦ Ed YES! Report Card (old letter grade)
◦ AYP Status (old pass or fail system)
◦ Top to Bottom Ranking and possibly:
   Reward schools (Top 5%, Top improvement, BtO)
   Focus schools (largest achievement gap top vs. bottom)
   Priority schools (Bottom 5%)

Doug Greer 877-702-8600 x4109 DGreer@oaisd.org

Principle 2 of 4 – Accountability & Support
1. Top to Bottom Ranking given to all schools with 30 or more full academic year students tested (0 – 99th percentile, where 50th is average)
2. NEW designation for some schools
   Reward schools (Top 5%, Significant Improvement or Beating the Odds)
   Focus schools (10% of schools with the largest achievement gap between the top and bottom)
   Priority schools (Bottom 5%, replaces PLA list)
3. NEW in 2013, AYP Scorecard based on a point system, replacing the “all or nothing” of NCLB.
Understanding the TWO Labels

| Priority/Focus/Reward (Top to Bottom List) | AYP Scorecard (Need > 50%) Green-Yellow-Red |
| --- | --- |
| Normative: ranks schools against each other | Criterion-referenced: are schools achieving a certain PROFICIENCY level? |
| Focuses attention on a smaller subset of schools; targets resources | Given to all schools; acts as an “early warning” system; easy indicators |
| The primary mechanism for sanctions and supports | Used primarily to identify areas of intervention and differentiate supports |
| Fewer schools | All schools |

Z-scores are centered around zero or the “state average”
Positive is ABOVE the state average
Negative is BELOW the state average

| Z-score | -3 | -2 | -1 | -0.5 | 0 (State Average) | 0.5 | 1 | 2 | 3 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Percentile | | 2% | 16% | 31% | 50% | 69% | 84% | 98% | |

In terms of achievement gaps, how well do you think your school (or schools in your district) compare to all schools in the state? Specifically, which content areas do you feel will have the smallest gaps versus the largest gaps relative to the state average?

2011-12 Top-to-Bottom Individual School Lookup …

Some schools may be exempt from Focus school designation in year 2 IF they are deemed Good-Getting-Great (G-G-G):
◦ Overall achievement is above 75th percentile
◦ Bottom 30% meets Safe Harbor improvement (or possibly AYP differentiated improvement)
G-G-G schools will be exempt for 2 years, then will need to reconvene a similar deep diagnostic study in year 4.
Note: See ESEA Approved Waiver pp. 151-152

Unlike Priority label, Focus label may only be one year. (Title I set-aside lasts 4 years)

NOTE: AYP Scorecard, Top to Bottom Ranking and Reward/Focus/Priority designation for August 2013 determined by Fall MEAP, 2012 and Spring MME, 2013.
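The percentile labels on the Z-score slide in this section (2%, 16%, 31%, 50%, 69%, 84%, 98%) match the standard normal curve. The sketch below reproduces them under that assumption; the function name `z_to_percentile` is ours, not an MDE tool, and a school's published percentile rank comes from its actual position among all ranked schools, which may differ slightly from this idealized curve.

```python
import math


def z_to_percentile(z: float) -> float:
    """Percent of a normal distribution that falls below z-score z.

    Assumption: scores follow an idealized bell curve.
    """
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Z-scores that carry a percentile label on the slide.
SLIDE_Z_SCORES = [-2, -1, -0.5, 0, 0.5, 1, 2]

# Rounded to whole percentiles, as on the slide.
PERCENTILES = {z: round(z_to_percentile(z)) for z in SLIDE_Z_SCORES}

for z, p in PERCENTILES.items():
    print(f"z = {z:+.1f} -> about {p}% of schools score lower")
```

Read this way, a school with a Z-score of 1 sits above roughly 84% of schools, and a school at -1 sits above roughly 16%.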
Requirements for all Focus schools:
◦ Notification of Focus status by August 21, 2012 via the Annual Ed Report
◦ Quarterly reports to the district board of education
◦ Deep diagnosis of data prior to SIP revision (if Title I by Oct 1)
◦ Professional Dialogue, toolkit available to all (if Title I requires DIF with time range of Oct – Jan.)
◦ Revision of School Improvement Plan with activities focused on the Bottom 30% included (if Title I additional revisions to Cons App, both by Jan 30)
◦ NOTE: Additional requirements of Title I schools regarding set-asides and specific uses of Title I funds.

Supports Available:
◦ OAISD work session on August 13
◦ OAISD follow up session ??? TBD
◦ OAISD work session on “Data Utilization driving Instruction and School Improvement” October 25
◦ “Defining the Problem (Data Planning)” work session at OAISD on January 22, 2013
◦ “SIP Planning Session” at OAISD on March 22, 2013
◦ Individualized support by OAISD per request
◦ MDE Toolkit available in September, 2012
◦ Sept. MDE assigns DIF for Title I schools only
◦ MDE Regional meeting on September 11 in GR

Top to Bottom Ranking: 95th
2 points possible:
2 = Achievement > linear trajectory towards 85% by 2022 (10 years from 11/12 baseline)
1 = Achievement target NOT met; Met Safe Harbor
0 = Achievement target NOT met; Safe Harbor NOT met
STATUS: Lime Green
Top to Bottom Ranking: 75th
STATUS: Orange
Top to Bottom Ranking: 50th

[Chart: School Proficiency Targets (AMOs). Y-axis: Proficiency Target (AMO), 0% to 100%. X-axis: Year, 2012 to 2022. The plotted target lines rise in equal yearly steps to 85.0% in 2022.]

[Figure: Normal “Bell-Shaped” Curve. Regions: Below Avg. (Bottom 10% target), Average, Above Avg. (Top 10% target). Measures: Average Scale Score or Average % Correct; % at Level 4 or Lv 3 & 4 or below set %; % at Level 1 or Lv 1 & 2 or above set %.]

Goal: All students will be proficient in math.
SMART Measurable Objective: All students will increase skills in the area of math on MEAP and Local assessments:
• The average scale score for all students in math on the MEAP will increase from 622 (10/11) to 628 by 2013/14 school year (2 points per year)
• The percentage of all students reaching Level 1 on the math portion of the MEAP will increase from 28% (2010-11) to 40% by 2013/14 school year (4% per year)
• The percentage of all students at Level 4 on the math portion of the MEAP will decrease from 18% (10/11) to 6% by 2013/14 school year (4% per year)
• The average proficiency across the grade levels on the Winter Benchmark in Delta Math will increase from 74% (2010-11) to 85% by January 2013.
• The number of students identified as “At Risk” on Delta Math on the Fall screener will reduce from 58 (2010-11) to 40 by the Fall of 2012.

Goal: All students will be proficient in math.
SMART Measurable Objective: All students will increase skills in the area of math on MEAP and Local assessments:
• The average percentage correct for all students in math on the MEAP will increase from 52% (10/11) to 61% by 2013/14 school year (3% per year)
• The percentage of all students reaching 80% accuracy on the math portion of the MEAP will increase from 28% (2010-11) to 40% by 2013/14 school year (4% per year)
• The percentage of all students reaching 40% accuracy on the math portion of the MEAP will increase from 82% (10/11) to 94% by 2013/14 school year (4% per year)
Percent Correct example from 2010/11
New Cut Score Proficiency and Scale Score on previous slide from 2011/12

Take a break then discuss (or vice versa) the following two questions:
Why should MDE use Full Academic Year (FAY) students (those who have 3 counts in the year tested) to hold schools accountable?
Why should local school districts NOT use FAY student data to set goals to improve instruction?

TOP TO BOTTOM RANKING
Ranks all schools in the state with at least 30 full academic year students in at least two tested content areas (Reading, Writing, Math, Science and Social Studies weighted equally, plus graduation).
• Each content area is “normed” in three categories:
   ◦ 2 years of Achievement (50 – 67%)
   ◦ 3 – 4 years of Improvement (0 – 25%)
   ◦ Achievement gaps between top and bottom (25 – 33%)
• Graduation rate (10% if applicable)
   ◦ 2 year Rate (67%)
   ◦ 4 year slope of improvement (33%)

HOW IS THE TOP TO BOTTOM RANKING CALCULATED
For science, social studies, writing, and grade 11 all tested subjects

| Input | Standardized as | Weight |
| --- | --- | --- |
| Two-Year Average Standardized Student Scale (Z) Score | School Achievement Z-Score | 1/2 |
| Four-Year Achievement Trend Slope | School Performance Achievement Trend Z-Score | 1/4 |
| Two-Year Average Bottom 30% - Top 30% Z-Score Gap | School Achievement Gap Z-Score | 1/4 |

The weighted Z-scores combine into the School Content Area Index, which is standardized into the Content Index Z-score.

2011-12 Top-to-Bottom Individual School Lookup …

When finished with the worksheet please add to the Google “Chalk Talk” about what works.
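The weighting above can be sketched in a few lines. This is a minimal illustration, not MDE's implementation: the function names, the rounding used to pick the top and bottom 30%, and the sample inputs are all assumptions, and the step that standardizes each component against every school in the state is left out because it needs statewide data.

```python
from statistics import mean


def achievement_gap(student_z_scores):
    """Mean z-score of the bottom 30% of students minus that of the top 30%.

    Assumption: 30% of the student count is rounded to the nearest
    whole student (6 of 20).
    """
    ranked = sorted(student_z_scores)
    n = max(1, round(0.3 * len(ranked)))
    return mean(ranked[:n]) - mean(ranked[-n:])


def content_area_index(achievement_z, trend_z, gap_z):
    """Weighted sum using the 1/2, 1/4, 1/4 weights from the slide.

    Each input is assumed to be already standardized across schools.
    """
    return 0.5 * achievement_z + 0.25 * trend_z + 0.25 * gap_z


# Hypothetical class of 20 built from the group averages in this section's
# example: top 6 average 1.62, middle 8 average -0.34, bottom 6 average -1.12.
students = [1.62] * 6 + [-0.34] * 8 + [-1.12] * 6
raw_gap = achievement_gap(students)
print(f"raw gap = {raw_gap:.2f}")

# Hypothetical school-level z-scores, for illustration only.
example_index = content_area_index(achievement_z=1.0, trend_z=0.0, gap_z=-1.0)
print(f"content area index = {example_index:.2f}")
```

Because the gap is the bottom group minus the top group, it is zero or negative, and a value closer to zero means a smaller gap. Achievement carries half the weight, so a school with strong overall achievement can still have its index pulled down by a wide gap.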
Z-score ranges: -2.0 to -.5 | -.4 to .4 | .5 to 2.0 … … …

Suppose there are 20 students (most of whom are shown) and the … average Z-score … of all 20 is 0.28; this represents the Achievement Score before it is standardized again into the Z-score …
Top 30% of students (n=6) has average score of 1.62 …
Mid 40% (n=8) … has average score of -0.34
Bottom 30% (n=6) has average score of -1.12
Gap = -1.12 – 1.62 or -2.74, then standardized …

Rows: Year X Grade Y MEAP Performance Level. Columns: Year X+1 Grade Y+1 MEAP Performance Level.

| Year X \ Year X+1 | Not Proficient Low | Not Proficient Mid | Not Proficient High | Partially Proficient Low | Partially Proficient High | Proficient Low | Proficient Mid | Proficient High | Advanced Mid |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Not Proficient Low | M | I | I | SI | SI | SI | SI | SI | SI |
| Not Proficient Mid | D | M | I | I | SI | SI | SI | SI | SI |
| Not Proficient High | D | D | M | I | I | SI | SI | SI | SI |
| Partially Proficient Low | SD | D | D | M | I | I | SI | SI | SI |
| Partially Proficient High | SD | SD | D | D | M | I | I | SI | SI |
| Proficient Low | SD | SD | SD | D | D | M | I | I | SI |
| Proficient Mid | SD | SD | SD | SD | D | D | M | I | I |
| Proficient High | SD | SD | SD | SD | SD | D | D | M | I |
| Advanced Mid | SD | SD | SD | SD | SD | SD | D | D | M |

GLOBAL data
◦ District level, School level, Grade level
◦ Best used to study trends of student performance over time (3-5 years) & across different subgroups.
◦ Informs school-wide focus, must drill deeper

STUDENT level data
◦ Use only when timely reports (less than 2 weeks) are available at a more specific diagnostic level.

DIAGNOSTIC levels
◦ Cluster (formerly Strands in HS/GLCEs)
◦ Standards (formerly Expectations in HS/GLCEs)
◦ Learning Targets

Have you seen this new IRIS report?
◦ What are your predictions around what the historic cut scores will look like?
◦ Do you have assumptions about strengths and weaknesses at certain grade levels and content areas?

You may have noticed many of the green lines are stagnant. Did you notice any bright spots with a steady increase and separation from state & county average?
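As read from the slide, the Year X to Year X+1 performance level table earlier in this section follows a regular pattern: the code depends only on how many sub-levels a student moves. The sketch below encodes that reading. The function name and the step thresholds are inferred from the table rather than taken from an MDE specification, and the codes SD, D, M, I and SI are used exactly as they appear on the slide.

```python
# The nine sub-levels in the order the slide lists them, lowest to highest.
LEVELS = [
    "Not Proficient Low", "Not Proficient Mid", "Not Proficient High",
    "Partially Proficient Low", "Partially Proficient High",
    "Proficient Low", "Proficient Mid", "Proficient High",
    "Advanced Mid",
]


def performance_level_change(year_x_level, year_x1_level):
    """Return the slide's code for a move between two sub-levels.

    Thresholds inferred from the table: same sub-level is M, one or two
    steps is I (up) or D (down), three or more steps is SI or SD.
    """
    steps = LEVELS.index(year_x1_level) - LEVELS.index(year_x_level)
    if steps >= 3:
        return "SI"
    if steps >= 1:
        return "I"
    if steps == 0:
        return "M"
    if steps >= -2:
        return "D"
    return "SD"


# Rebuild one row of the table: a student who was Proficient Mid in Year X.
row = [performance_level_change("Proficient Mid", level) for level in LEVELS]
print(row)
```

One design note: keeping the sub-levels in a single ordered list means the whole 9-by-9 table reduces to one subtraction, which makes it easy to check the rule against every cell on the slide.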
Surfacing experiences and expectations
Make predictions, recognize assumptions and possible learning
Analyzing the data in terms of observable facts
Search for patterns, “ah-ha”, be specific (avoid judging & inferring)

Within the Google Doc Collection:
◦ Dialogue in small groups and record what is observable in the district data at ALL grade levels.
◦ Do NOT judge, conjecture, explain or infer.
◦ Make statements about quantities (e.g. 3rd grade math fluctuated between 57-72%; however, the past three years have been stagnant around 64%)
◦ Look for connections across grade levels (e.g. a sharp increase was seen in 5th grade math in 2009 (53% to 80%), then the same group of students increased in 7th grade math in 2011 (54% to 76%))

OAISD

| School Year | % Adv + Prof | % Adv | % Prof | % Partial | % Not Prof | Number Assessed | Mean Scale Score |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 2007-08 | 64.50% | 22.00% | 42.50% | 19.70% | 15.80% | 355 | 841.1 |
| 2008-09 | 60.40% | 20.80% | 39.60% | 24.80% | 14.90% | 404 | 834 |
| 2009-10 | 59.80% | 19.80% | 40.00% | 23.10% | 17.10% | 420 | 839 |
| 2010-11 | 59.20% | 12.50% | 46.80% | 24.70% | 16.10% | 417 | 835.3 |
| 2011-12 | 46.00% | 9.00% | 37.00% | 35.00% | 18.00% | 398 | 831 |

Activate
• Surfacing experiences and expectations
• Make predictions, recognize assumptions and possible learning
Explore
• Analyzing the data in terms of observable facts
• Search for patterns, “ah-ha”, be specific (avoid judging & inferring)
REPEAT Activate & Explore until data drilled down to diagnostic level
Infer
• Generating theory, making meaning, finding causality, inference
• What else should we explore? Are there lurking variables?
Act
• What are some solutions we might explore and discuss?
• What data will we need to collect to guide possible implementation?

Diagnostic … NOT Timely
Dig DEEPER than just proficiency by looking at trends at both the strand and GLCE level.
Triangulate, i.e. What are some of the advantages of the ACT Explore Item Analysis and released items?
Once you have dug deeper and looked at multiple types of data, then ask:
What conclusions can be drawn?
Is our current focus addressing the issues?
What theories do you have that are supported by data about why deficiencies exist?

Develop an action plan:
◦ WHO should explore this data? WHO are the experts able to make instructional changes? WHO needs to be empowered?
◦ WHEN will time be given to dialogue about data that will impact instruction and ultimately make a difference for students?
◦ WHAT data have you filtered that will be useful in a data dialogue? WHAT four steps will you use to facilitate a data dialogue?
◦ To truly have a balanced assessment system, WHAT data is missing or underutilized?

“There exists a vast sea of information … As leaders, you must filter this information and select small, critical components for the practitioners to draw solid conclusions that will result in improved teaching and learning.”
Doug Greer