HSR Intro

advertisement

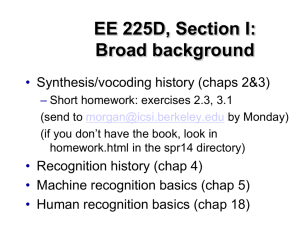

From last time … ASR System Architecture Grammar Cepstrum Speech Signal Signal Processing Recognized Words “zero” “three” “two” Probabilities “z” -0.81 “th” = 0.15 “t” = 0.03 Probability Estimator Decoder Pronunciation Lexicon A Few Points about Human Speech Recognition (See Chapter 18 for much more on this) Human Speech Recognition • Experiments dating from 1918 dealing with noise, reduced BW (Fletcher) • Statistics of CVC perception • Comparisons between human and machine speech recognition • A few thoughts The Ear The Cochlea Assessing Recognition Accuracy • Intelligibility • Articulation - Fletcher experiments – CVC, VC, CV, syllables in carrier sentences – Tests over different SNR, bands – Example: “The first group is `mav’ (forced choice between mav and nav) – Used sharp lowpass and/or highpass filtered. For equal energy, crossover is 450 Hz; for equal articulation, 1550 Hz. Results • S = vc2 • Articulation Index (the original “AI”) • Error independence between bands – – – – – Articulatory band ~ 1 mm along basilar membrane 20 filters between 300 and 8000 Hz A single zero error band -> no error! Robustness to a range of problems AI = ∑k 1/K (SNRk / 30) where SNR saturates at 0 and 30 AI additivity • s(a,b) = phone accuracy for band from a to b, a<b<c • (1-s(a,c)) = (1-s(a,b))(1-s(b,c)) • log10(1-s(a,c)) = log10(1-s(a,b)) + log10(1-s(b,c)) • AI(s) = log10(1-s) / log10(1-smax) • AI(s(a,c)) = AI(s(a,b)) + AI(s(b,c)) Jont Allen interpretation: The Big Idea • • • • Humans don’t use frame-like spectral templates Instead, partial recognition in bands Combined for phonetic (syllabic?) recognition Important for 3 reasons: – Based on decades of listening experiments – Based on a theoretical structure that matched the results – Different from what ASR systems do Questions about AI • Based on phones - the right unit for fluent speech? • Lost correlation between distant bands? • Lippmann experiments, disjoint bands – Signal above 8 kHz helps a lot in combination with signal below 800 Hz Human SR vs ASR: Quantitative Comparisons • Lippmann compilation (see book): typically ~factor of 10 in WER • Hasn’t changed too much since his study • Keep in mind this caveat: “human” scores are ideal - under sustained real conditions people don’t pay perfect attention (especially after lunch) Human SR vs ASR: Quantitative Comparisons (2) System 10 dB SNR 16 dB SNR “Quiet” Baseline HMM ASR 77.4% 42.2% 7.2% ASR w/ noise compensation 12.8% 10.0% - Human Listener 1.1% 1.0% 0.9% Word error rates for 5000 word Wall Street Journal read speech task using additive automotive noise (old numbers – ASR would be a bit better now) Human SR vs ASR: Qualitative Comparisons • • • • Signal processing Subword recognition Temporal integration Higher level information Human SR vs ASR: Signal Processing • Many maps vs one • Sampled across time-frequency vs sampled in time • Some hearing-based signal processing already in ASR Human SR vs ASR: Subword Recognition • Knowing what is important (from the maps) • Combining it optimally Human SR vs ASR: Temporal Integration • Using or ignoring duration (e.g., VOT) • Compensating for rapid speech • Incorporating multiple time scales Human SR vs ASR: Higher levels • • • • • Syntax Semantics Pragmatics Getting the gist Dialog to learn more Human SR vs ASR: Conclusions • When we pay attention, human SR much better than ASR • Some aspects of human models going into ASR • Probably much more to do, when we learn how to do it right