Talk - Muthukumaran Chandrasekaran


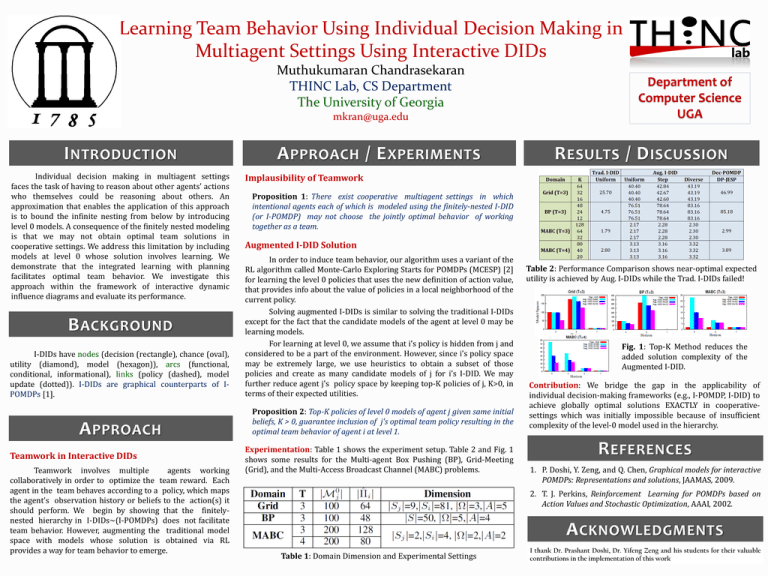

Learning Team Behavior Using Individual Decision Making in Multiagent Settings Using Interactive DIDs
Muthukumaran Chandrasekaran
THINC Lab, CS Department, The University of Georgia
mkran@uga.edu

INTRODUCTION
Individual decision making in multiagent settings must reason about the actions of other agents, who may themselves be reasoning about others. An approximation that makes this approach applicable is to bound the otherwise infinite nesting from below by introducing level 0 models. A consequence of this finitely nested modeling is that we may not obtain optimal team solutions in cooperative settings. We address this limitation by including level 0 models whose solution involves learning. We demonstrate that integrating learning with planning facilitates optimal team behavior. We investigate this approach within the framework of interactive dynamic influence diagrams (I-DIDs) and evaluate its performance.

BACKGROUND
I-DIDs have nodes (decision (rectangle), chance (oval), utility (diamond), model (hexagon)), arcs (functional, conditional, informational), and links (policy (dashed), model update (dotted)). I-DIDs are the graphical counterparts of I-POMDPs [1].

APPROACH
Teamwork in Interactive DIDs
Teamwork involves multiple agents working collaboratively to optimize the team reward. Each agent in the team behaves according to a policy, which maps the agent's observation history or beliefs to the action(s) it should perform. We begin by showing that the finitely-nested hierarchy in I-DIDs (I-POMDPs) does not, in general, facilitate team behavior. However, augmenting the traditional model space with models whose solution is obtained via reinforcement learning (RL) provides a way for team behavior to emerge.
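To make the failure of finitely nested modeling concrete, here is a minimal Python sketch on a one-shot, two-agent cooperative game with a shared team reward. The payoff values, the action names `a`/`b`/`c`, and all function names are hypothetical and chosen only for illustration. The level 0 model of j is assumed to treat i's action as uniform noise, a simplification of a full level 0 POMDP model rather than the I-DID solution procedure itself.

```python
"""Toy illustration (hypothetical payoffs) of a finitely nested hierarchy
missing the team optimum in a one-shot cooperative game."""

ACTIONS = ["a", "b", "c"]

# Hypothetical shared team reward R[(action_i, action_j)].
R = {
    ("a", "a"): 11, ("a", "b"): -30, ("a", "c"): 0,
    ("b", "a"): -30, ("b", "b"): 7, ("b", "c"): 6,
    ("c", "a"): 0, ("c", "b"): 0, ("c", "c"): 5,
}


def level0_policy_j() -> str:
    """Level 0 model of j: i's action is treated as uniform noise (assumption)."""
    def expected_reward(aj: str) -> float:
        return sum(R[(ai, aj)] for ai in ACTIONS) / len(ACTIONS)
    return max(ACTIONS, key=expected_reward)


def level1_policy_i(predicted_j: str) -> str:
    """Level 1 agent i best-responds to its level 0 model of j."""
    return max(ACTIONS, key=lambda ai: R[(ai, predicted_j)])


def team_optimum() -> tuple[tuple[str, str], int]:
    """Jointly optimal action pair and its team reward."""
    joint = max(R, key=lambda pair: R[pair])
    return joint, R[joint]


if __name__ == "__main__":
    aj = level0_policy_j()
    ai = level1_policy_i(aj)
    print("nested outcome:", (ai, aj), R[(ai, aj)])
    print("team optimum:", *team_optimum())
```

In this toy instance the level 0 model of j picks `c` (expected reward 11/3 against a uniformly random i), agent i at level 1 best-responds with `b`, and the joint action (b, c) earns a team reward of 6, whereas the team optimum (a, a) earns 11.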
Implausibility of Teamwork
Proposition 1: There exist cooperative multiagent settings in which intentional agents, each modeled using the finitely-nested I-DID (or I-POMDP), may not choose the jointly optimal behavior of working together as a team.

Augmented I-DID Solution
To induce team behavior, our algorithm learns the level 0 policies using a variant of the RL algorithm Monte-Carlo Exploring Starts for POMDPs (MCESP) [2], which is based on a definition of action value that provides information about the value of policies in a local neighborhood of the current policy. Solving augmented I-DIDs is similar to solving traditional I-DIDs, except that the candidate models of the agent at level 0 may be learning models. For learning at level 0, we assume that i's policy is hidden from j and is considered part of the environment. However, since i's policy space may be extremely large, we use heuristics to obtain a subset of those policies and create as many candidate models of j (one per selected policy) for i's I-DID. We may further reduce agent j's policy space by keeping the top-K policies of j, K > 0, in terms of their expected utilities.
Proposition 2: Given the same initial beliefs, keeping the top-K policies (K > 0) of agent j's level 0 models guarantees the inclusion of j's optimal team policy, which results in the optimal team behavior of agent i at level 1.

EXPERIMENTS / RESULTS
Experimentation: Table 1 shows the experimental setup. Table 2 and Fig. 1 show some results for the Multi-agent Box Pushing (BP), Grid-Meeting (Grid), and Multi-Access Broadcast Channel (MABC) problems.
Table 2: Performance comparison. Augmented I-DIDs achieve near-optimal expected utility, while traditional I-DIDs fail to do so.
Fig. 1: The top-K method reduces the added solution complexity of the augmented I-DID.
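The augmentation can be sketched on the same kind of toy game. In the minimal Python sketch below, each candidate level 0 model of j learns a response to one fixed, hidden policy of i, the candidates are pruned to the top-K by expected team utility, and i best-responds to the top-ranked candidate. Everything here is an assumption for illustration: the payoffs, the function names, and especially the learner, which is a plain Monte Carlo action-value estimate with an exploring-starts style sweep standing in for MCESP [2]; it does not implement MCESP's local-neighborhood action values. Having i best-respond to a single retained model is also a simplification of maintaining a belief over j's candidate models in the I-DID.

```python
"""Toy sketch of augmenting level 0 models with learning (hypothetical values)."""
import random

ACTIONS = ["a", "b", "c"]

# Hypothetical shared team reward R[(action_i, action_j)] for a one-shot game.
R = {
    ("a", "a"): 11, ("a", "b"): -30, ("a", "c"): 0,
    ("b", "a"): -30, ("b", "b"): 7, ("b", "c"): 6,
    ("c", "a"): 0, ("c", "b"): 0, ("c", "c"): 5,
}


def learn_j_policy(policy_i: str, rng: random.Random,
                   episodes: int = 300, noise: float = 1.0) -> str:
    """Learning level 0 model of j (a simple stand-in, NOT MCESP).

    i's policy is hidden from j and folded into the environment, so j only
    observes noisy team rewards. Every action is sampled in every round
    (an exploring-starts style sweep) and j keeps the greedy action.
    """
    totals = {aj: 0.0 for aj in ACTIONS}
    for _ in range(episodes):
        for aj in ACTIONS:
            totals[aj] += R[(policy_i, aj)] + rng.gauss(0.0, noise)
    q_values = {aj: totals[aj] / episodes for aj in ACTIONS}
    return max(ACTIONS, key=lambda aj: q_values[aj])


def candidate_models(policies_i: list[str],
                     rng: random.Random) -> list[tuple[str, str]]:
    """One learned candidate model of j per selected policy of i."""
    return [(pi, learn_j_policy(pi, rng)) for pi in policies_i]


def top_k(models: list[tuple[str, str]], k: int) -> list[tuple[str, str]]:
    """Keep the K candidates with the highest expected team utility."""
    return sorted(models, key=lambda m: R[m], reverse=True)[:k]


def level1_best_response(policy_j: str) -> str:
    """Agent i best-responds to a single predicted policy of j."""
    return max(ACTIONS, key=lambda ai: R[(ai, policy_j)])


if __name__ == "__main__":
    rng = random.Random(0)
    # Every policy of i can be enumerated in this tiny game; realistic
    # domains would need a heuristic subset instead.
    models = candidate_models(ACTIONS, rng)
    kept = top_k(models, k=1)
    policy_j = kept[0][1]
    policy_i = level1_best_response(policy_j)
    print("kept:", kept, "joint:", (policy_i, policy_j), R[(policy_i, policy_j)])
```

With K = 1 the retained candidate is (a, a), and i's best response recovers the team-optimal joint action in this toy instance. In realistic domains the policy spaces are far too large to enumerate, which is where the heuristic subset of i's policies and the top-K pruning come in.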
Contribution: We bridge a gap in the applicability of individual decision-making frameworks (e.g., I-POMDP, I-DID), enabling them to achieve globally optimal solutions exactly in cooperative settings, which was previously impossible because the level 0 models used in the hierarchy were insufficiently complex.

Table 1: Domain dimensions and experimental settings.

REFERENCES
1. P. Doshi, Y. Zeng, and Q. Chen. Graphical models for interactive POMDPs: Representations and solutions. JAAMAS, 2009.
2. T. J. Perkins. Reinforcement learning for POMDPs based on action values and stochastic optimization. AAAI, 2002.

ACKNOWLEDGMENTS
I thank Dr. Prashant Doshi, Dr. Yifeng Zeng, and his students for their valuable contributions to the implementation of this work.