Liam Murray - Higher Education Academy

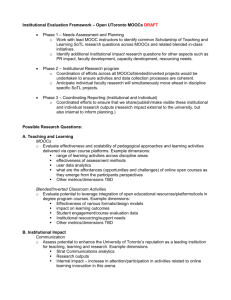

Developing and implementing collaborative evaluation approaches with MOOCs and SLA

Liam Murray, Elaine Riordan, Amanda Whyler, Laura Swilley, Aylar Vazirabad, Mansour Alammar, Norah Banafi, Vera Carvalho, Yanuar DwiPrastyo, Geraldine Exton, Ingrid Deloughery, Lisa Cadogan, Sinead Nash, Qing Miao, Mazin Allyhani and Osama Al-Moughera
University of Limerick, Ireland

Presentation at “Massive Open Online Courses in the Arts and Humanities: Opportunities, Challenges and Implications Across UK Higher Education”, at University of Central Lancashire in Preston, April 24th, 2014.

Overview
• The Evaluation Team and types of MOOCs followed
• Evaluation Methods
• Common and discrete features of the MOOC experiences
• Pedagogic Implications of MOOCs
• Analysis and Assessment Issues
• Results and recommendations on potential for repurposing and designing for SLA
• Adding to the current debate

The Evaluation Team and types of MOOCs followed
• Team
– 8 PhD students
– 6 MA students
– 2 lecturers
• Evaluation was part of their own module on ICT and SLA, but participation in MOOC activity was purely voluntary and not assessed.
• Types of MOOCs
– Games programming (x2)
– Academic writing (x3)
– Anatomy
– Gamification (x2) (Coursera)
– Corpus Linguistics (x5)
– Web science: how the web is changing the world (x2)
– How the brain functions

Our Evaluation Methods
• Set tasks: Is it possible to repurpose an existing MOOC for SLA? Is it possible to design a dedicated MOOC for SLA based on their MOOC experiences?
• Iterative and summative
• Qualitative and experiential rather than quantitative
• Courses ranged from 3-10 weeks

Common and discrete features of the MOOC experiences
• Common features
– Standard and personalised emails at the beginning and end of each week
– An introduction and summary each week
– Short lecture-type video recordings (with PPT and transcripts)
– Resources, links and references on weekly topics
– Discussion forums (moderated but not mandatory)
– Weekly quizzes (MCQs)
• Discrete features
– Use of and access to software specific to areas (e.g. Corpus Linguistics; length of access unknown)
– Anonymous peer feedback on assignments (e.g. Writing)
– Revision and feedback on writing (e.g. Writing)
– Essay writing and analytical activities

Pedagogic Implications of MOOCs
• Autonomous learning (playing ‘catch-up’)
• Control theory: two control systems (inner controls and outer controls) work against our tendencies to deviate. Social bonds and personal investments.
• Motivation theory (both intrinsic and extrinsic motivation)
• Connectivism
• Low affective filter
• Anonymous (pros and cons)
• Lurking
• Information seekers; curiosity learning

Analysis and Assessment Issues
• Peer evaluation: students were not happy with this (cultural issues? Anxiety about the unknown?).
• However: “Coursera’s foray into crowd-sourced peer-assessment seems to be a useful response to the problem of the M in Massive”.
• Note: on Coursera, we would be docked 20% of our marks if we refused to self-evaluate, and we were locked out until we had completed evaluations of 5 other students’ work.
• Self-evaluation: perceived as worthwhile
• MCQs: could be completed without reading the material
• Assessment:
– Was complex
– Was dependent on aims: professional development or learning about a topic
– Needs to be (1) credible and (2) scalable

Assessing writing in MOOCs (Balfour, 2013)
• Automated Essay Scoring (AES) vs Calibrated Peer Review (CPR)
• AES: good on grammar, syntax, spelling and “irrelevant segments”.
• AES: bad on complex novel metaphors, humour and slang, and offered “vague feedback”.
• CPR: students are given a multiple-choice rubric; peer review and self-evaluation; they receive all feedback from peers.
• CPR: some peer resistance, but it improves particular writing skills; limitations in a MOOC environment.

Balfour’s Conclusions
• For both AES and CPR, the more unique or creative a piece of work is, the lower the likelihood of producing a good evaluation for the student.
• The same holds for original research pieces.
• Final conclusion: not every UG course that uses writing as a form of assessment will translate to the MOOC format.

Results and recommendations on potential for repurposing and designing for SLA
• Pros
– No fees
– Clear guidelines given
– Ability to work at own pace
– Well-known academics delivering material
– Some courses followed PPP model (implications for SLA)
– Suitable as tasters
• Cons
– Technical issues
– Not a lot of variety in the delivery
– Discussion forums too large
– No chat function
– Personalised support needed depending on the field/area
– Not ab initio
• Recommendations
– Have more variety in interaction patterns (synchronous and asynchronous)
– Have more variety in modes of delivery and presenters/lecturers
– Organise smaller discussion groups
– Offer clear guidelines and instructions
– Stage input and activities
– Offer credits

Adding to the current debate
• MOOCs to SPOOCs for SLA
• Maintaining motivation
• Financing and monetising the courses
• Weighting (accreditation)

MOOCs to SPOOCs and back to gap-filling