Principles of Neocortical Function

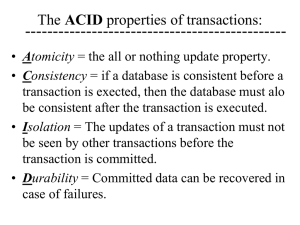


On-line Learning From Streaming Data. ACM CIKM, Industrial Research Track, October 31, 2013. Jeff Hawkins, jhawkins@GrokSolutions.com

1) Discover the operating principles of the neocortex: anatomy and physiology, theoretical principles, software
2) Build systems based on these principles: cortical algorithms, anomaly detection in high-velocity data

The neocortex is a memory system. It learns a model from sensory data (retina, cochlea, somatic senses), or from any data stream, and the model yields:
- predictions
- anomalies
- actions

The neocortex learns a sensory-motor model of the world.

Principles of Neocortical Function
1) On-line learning from streaming data
2) Hierarchy of memory regions
3) Sequence memory (for inference and motor)
4) Sparse Distributed Representations
5) All regions are sensory and motor
6) Attention

[Figure: a hierarchy of memory regions receiving input from the retina, cochlea, somatic senses, or a data stream, and producing motor output]

These six principles are necessary and sufficient for biological and
machine intelligence. All mammals, from mouse to human, have them.

Dense Representations
- Few bits (8 to 128)
- All combinations of 1s and 0s
- Example: 8-bit ASCII, 01101101 = m
- Individual bits have no inherent meaning
- Representation is assigned by the programmer

Sparse Distributed Representations (SDRs)
- Many bits (thousands)
- Few 1s, mostly 0s
- Example: 2,000 bits, 2% active (40 active bits)
- Each bit has semantic meaning
- Meaning of each bit is learned, not assigned

01000000000000000001000000000000000000000000000000000010000…………01000

A Few SDR Properties
1) Similarity: shared bits = semantic similarity
2) Store and compare: store only the indices of the active bits (40 indices in the 2,000-bit example); subsampling is OK (e.g., storing just 10 of the 40 indices)

Sequence Memory (for inference and motor)

How does a layer of neurons learn sequences?
- Neurons act as coincidence detectors.
- Each cell is one bit in our Sparse Distributed Representation.
- SDRs are formed via a local competition between cells.
- A cell forms connections to a subsample of the previously active cells (the SDR at time 1), so it predicts its own future activity (the SDR at time 2).
- Multiple predictions can occur at once.
- With one cell per column, the result is a 1st-order memory; we need a high-order memory.

High-Order Sequence Memory Enabled by Columns of Cells

Cortical Learning Algorithm (CLA)
- Distributed sequence memory
- High order
- High capacity
- Multiple simultaneous predictions
- Semantic generalization

Three Current Directions
1) NuPIC Open Source Project (www.Numenta.org)
   - Single source tree (used by GROK)
   - GPLv3
   - Steady community growth: 67 contributors (+26 since July), 245 mailing list subscribers, 1,621 total messages
   - eBook from a community member
   - Open source community joining Kaggle competitions
   - Fall Hackathon: 70 attendees
2) Custom CLA Hardware
   - Needed for scaling research and commercial applications
   - DARPA "Cortical Processor"
   - IBM, Seagate, Sandia Labs
3) Commercialization

Data: Past and Future

Past: 1. Store data, 2.
Look at data, 3. Build models.

Problem: this doesn't scale with data velocity or with the number of models.

Future (the solution):
- Automated model creation
- Continuous learning
- Temporal inference

Stream data → automated model creation → continuous learning → temporal inference → predictions, anomalies, actions

Anomaly Detection Using Predictive Cortical Models

For each metric (Metric 1 … Metric N):
- An encoder turns the metric value into an SDR.
- Cortical memory learns the sequence of SDRs and makes a prediction.
- The prediction yields a point anomaly score.
- Point anomaly scores are time-averaged, and the distribution of those averages gives the metric anomaly score.

The metric anomaly scores are combined into a system anomaly score.

[Figure: metric value and anomaly score over time, for a largely predictable metric and for a largely unpredictable metric]

Grok for IT Monitoring

Breakthrough science for anomaly detection:
- Detects problems thresholds miss
- Continuous learning
- Automated model building
- State-of-the-art neocortical model

Reinventing UX for IT monitoring:
- Smartphone-centric
- Ranks anomalous instances
- Rapid drill-down
- Continuously updated
- User-controlled notifications

In private beta for Amazon AWS cloud users: grokbeta@GrokSolutions.com

Extensible Architecture
- Custom metrics for any application/server
- Web interface and mobile client source code available under a no-cost license
- Engine API to be published
- NuPIC open source community
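
The SDR properties listed earlier can be sketched in a few lines of Python:
- similarity as shared bits;
- storing only the indices of active bits;
- matching from a subsample.

This is a minimal illustration under our own assumptions, not code from NuPIC. SDRs here are random sets of 40 active indices out of 2,000 bits. The helper names (`random_sdr`, `overlap`, `matches`) and the match threshold are hypothetical.

```python
import random

N_BITS = 2000   # total bits, as in the slide's example
N_ACTIVE = 40   # 2% of 2,000

def random_sdr(rng: random.Random) -> frozenset[int]:
    """Hypothetical helper: an SDR stored as the indices of its active bits (zeros are implicit)."""
    return frozenset(rng.sample(range(N_BITS), N_ACTIVE))

def overlap(a: frozenset[int], b: frozenset[int]) -> int:
    """Similarity: the number of active bits two SDRs share."""
    return len(a & b)

def matches(stored: frozenset[int], candidate: frozenset[int], threshold: int) -> bool:
    """Compare: a stored (possibly subsampled) pattern matches if enough of its bits are active."""
    return overlap(stored, candidate) >= threshold

rng = random.Random(0)

# Dense representation for contrast: every bit pattern is a valid code, assigned by the programmer.
print(format(ord("m"), "08b"))  # 01101101

a = random_sdr(rng)
# b shares 30 of a's 40 active bits; its other 10 bits lie outside a.
b = frozenset(rng.sample(sorted(a), 30) + rng.sample(sorted(set(range(N_BITS)) - a), 10))
c = random_sdr(rng)  # unrelated SDR: expected overlap with a is only 40 * 40 / 2000 = 0.8 bits

print(len(a), overlap(a, b))  # 40 30
print(overlap(a, c))          # small; the exact value depends on the random draw

# Subsampling: keep only 10 of a's 40 indices and still recognize a.
stored = frozenset(rng.sample(sorted(a), 10))
print(matches(stored, a, threshold=10))  # True
```

Because random 40-of-2,000 SDRs rarely share more than a bit or two, even a 10-index subsample is very unlikely to match an unrelated pattern by chance.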
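
The slides contrast two cases: one cell per column gives only a 1st-order memory, while columns of cells enable a high-order memory. A deliberately simplified toy model illustrates the difference. It is not the Cortical Learning Algorithm itself. The following are all assumptions made for illustration:
- the symbol-to-column mapping;
- the `SequenceMemory` class;
- the threshold of 2;
- the rule "when a column's input was not predicted, pick its least-used cell".

The subsampling of connections is also omitted. The model is trained on the sequences ABCD and XBCY, and we ask what it expects after C.

```python
from collections import defaultdict

Cell = tuple[int, int]  # (column, index of the cell within its column)

ALPHABET = "ABCDXY"
COLS_PER_SYMBOL = 4     # hypothetical: each symbol activates its own 4 columns

def columns_for(symbol: str) -> frozenset[int]:
    i = ALPHABET.index(symbol)
    return frozenset(range(i * COLS_PER_SYMBOL, (i + 1) * COLS_PER_SYMBOL))

class SequenceMemory:
    """Toy sequence memory (hypothetical): columns of cells, one active cell per active column."""

    def __init__(self, cells_per_column: int, threshold: int = 2) -> None:
        self.k = cells_per_column
        self.threshold = threshold
        # Each cell owns segments; a segment is a set of previously active cells.
        self.segments: defaultdict[Cell, list[set[Cell]]] = defaultdict(list)

    def predict(self, prev: set[Cell]) -> set[Cell]:
        """Cells with a segment that has at least `threshold` cells in `prev`."""
        return {cell for cell, segs in self.segments.items()
                if any(len(seg & prev) >= self.threshold for seg in segs)}

    def step(self, cols: frozenset[int], prev: set[Cell], learn: bool) -> set[Cell]:
        predicted = self.predict(prev)
        active: set[Cell] = set()
        for col in sorted(cols):
            hits = {cell for cell in predicted if cell[0] == col}
            if hits:  # expected input: the predicted cell(s) become active
                active |= hits
                continue
            # Unexpected input: pick the least-used cell in the column to stand for this context.
            cell = min(((col, i) for i in range(self.k)),
                       key=lambda c: (len(self.segments[c]), c[1]))
            if learn and prev:  # connect it to the previously active cells
                self.segments[cell].append(set(prev))
            active.add(cell)
        return active

    def run(self, seq: str, learn: bool = True) -> set[Cell]:
        prev: set[Cell] = set()
        for symbol in seq:
            prev = self.step(columns_for(symbol), prev, learn)
        return prev

    def predicted_symbols(self, seq: str) -> set[str]:
        """Symbols whose columns are all predicted after presenting `seq` (no learning)."""
        cols = {col for col, _ in self.predict(self.run(seq, learn=False))}
        return {s for s in ALPHABET if columns_for(s) <= cols}

for k in (1, 4):
    memory = SequenceMemory(cells_per_column=k)
    for _ in range(3):
        for seq in ("ABCD", "XBCY"):
            memory.run(seq)
    print(k, sorted(memory.predicted_symbols("ABC")), sorted(memory.predicted_symbols("XBC")))
# 1 ['D', 'Y'] ['D', 'Y']
# 4 ['D'] ['Y']
```

With one cell per column, C is represented the same way in both contexts, so D and Y are predicted at once. With four cells per column, "C after A" and "C after X" use different cells, so the prediction depends on earlier context.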
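
The "Encoder → SDR" step of the anomaly-detection pipeline turns a raw metric value into an SDR. The slides do not say how Grok's encoders work. The sketch below assumes a simple scalar encoder in which the value selects a run of contiguous active bits, so nearby values share bits. The sizes (400 bits, 20 active) and the name `encode_scalar` are hypothetical.

```python
N_BITS = 400  # hypothetical encoder width
W = 20        # hypothetical number of active bits

def encode_scalar(value: float, min_val: float, max_val: float) -> frozenset[int]:
    """Map a value to W contiguous active bits out of N_BITS; nearby values overlap."""
    clipped = min(max(value, min_val), max_val)  # clamp out-of-range values
    fraction = (clipped - min_val) / (max_val - min_val)
    start = round(fraction * (N_BITS - W))       # first active bit: 0 .. N_BITS - W
    return frozenset(range(start, start + W))

def overlap(a: frozenset[int], b: frozenset[int]) -> int:
    return len(a & b)

cpu_50 = encode_scalar(50.0, 0.0, 100.0)
cpu_51 = encode_scalar(51.0, 0.0, 100.0)
cpu_90 = encode_scalar(90.0, 0.0, 100.0)
print(len(cpu_50), overlap(cpu_50, cpu_51), overlap(cpu_50, cpu_90))  # 20 16 0
```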
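
The per-metric scoring chain runs point anomaly score → time average → distribution of averages → metric anomaly score → system anomaly score. The slides name these stages but not the math, so the formulas below are assumptions:
- the point score is the fraction of active bits that were not predicted;
- the metric score is the normal CDF of the current moving average relative to past averages;
- the system score is the maximum over metrics.

The window sizes and the names `MetricAnomalyScorer` and `system_anomaly_score` are hypothetical.

```python
import math
from collections import deque
from statistics import mean, stdev

def point_anomaly_score(active: set[int], predicted: set[int]) -> float:
    """Assumed definition: fraction of active bits not predicted (0.0 = expected, 1.0 = total surprise)."""
    if not active:
        return 0.0
    return 1.0 - len(active & predicted) / len(active)

class MetricAnomalyScorer:
    """Hypothetical: time-average point scores, then rate that average against past averages."""

    def __init__(self, window: int = 10, min_history: int = 30) -> None:
        self.recent: deque[float] = deque(maxlen=window)  # recent point scores
        self.averages: list[float] = []                     # history of time averages
        self.min_history = min_history

    def update(self, point_score: float) -> float:
        self.recent.append(point_score)
        avg = mean(self.recent)
        self.averages.append(avg)
        if len(self.averages) < self.min_history:
            return 0.5  # too little history to judge: report a neutral score
        mu = mean(self.averages)
        sigma = max(stdev(self.averages), 1e-6)  # avoid dividing by zero for a flat history
        z = (avg - mu) / sigma
        # Normal CDF: close to 1.0 when the current average is unusually high.
        return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def system_anomaly_score(metric_scores: list[float]) -> float:
    """Hypothetical combination rule: the system is as anomalous as its most anomalous metric."""
    return max(metric_scores)

print(point_anomaly_score({1, 2, 3, 4}, {1, 2}))  # 0.5

scorer = MetricAnomalyScorer()
for _ in range(100):
    steady = scorer.update(0.1)  # a largely predictable metric
spike = scorer.update(1.0)       # a sudden, completely unpredicted input
print(round(steady, 3), spike > 0.99)  # 0.5 True
print(system_anomaly_score([steady, spike]) == spike)  # True
```

Comparing each average against that metric's own history of averages is what keeps a largely unpredictable metric from alarming constantly. Its point scores are always high, so a high average is not unusual for it.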