
advertisement

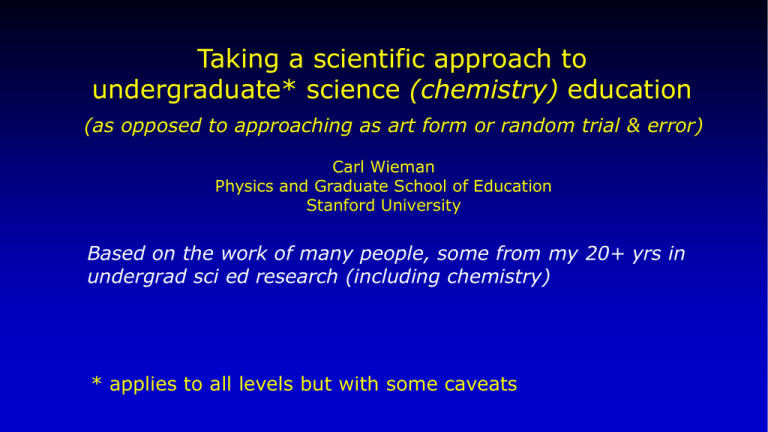

Taking a scientific approach to undergraduate* science (chemistry) education
(as opposed to approaching it as an art form or by random trial and error)

Carl Wieman
Physics and Graduate School of Education, Stanford University

Based on the work of many people, some of it from my 20+ years of research in undergraduate science education (including chemistry).

* Applies to all levels, but with some caveats.

How can chemistry education be optimized in light of increasing knowledge and needs? There is new material and there are new skills to learn, with more specialization: we have to fit more in! What should the curriculum look like?

Before any discussion of curriculum:
• What fraction of the material you learned in classes do you use?
• What fraction of the material you use did you learn in classes?
So what is important for students to learn?

What should students learn? The basic elements of chemistry expertise (how to think more like a chemist).

I. Nature of expertise and how it is learned
II. Implementation in the science classroom and data on effectiveness
III. Some particular challenges in chemistry for improving teaching

Major advances in the past few decades have produced guiding principles for achieving learning, drawn from college science classroom studies, brain research, and cognitive psychology.

I. Expertise research*
(historians, scientists, chess players, doctors, ...)

Expert competence =
• factual knowledge
• a mental organizational framework (patterns, relationships, scientific concepts) for retrieval and application
• the ability to monitor one's own thinking and learning

These are new ways of thinking; everyone requires MANY hours of intense practice to develop them. The brain is changed.

* Cambridge Handbook of Expertise and Expert Performance

Learning expertise* (any level):
• Challenging but doable tasks/questions.
• Practice all the elements of expertise with feedback and reflection:
  • conceptual and mental models + selection criteria
  • recognizing relevant & irrelevant information
  • does the result make sense? ways to test
  • ...
• Motivation is critical!

This exercises the brain; it is not listening passively to someone talk about the subject. Subject expertise of the instructor is essential!

* "Deliberate Practice", research by A. Ericsson; an accurate, readable summary is in "Talent Is Overrated" by Colvin.

II. Application in the classroom
(best opportunity for useful feedback & student-student learning)

Students practice thinking like scientists, with feedback. Example from teaching about current & voltage:

1. Preclass assignment: read pages on electric current to learn basic facts and terminology; a short online quiz checks and rewards this. (Simple information transfer, accomplished without using valuable expert and class time.)
2. Class starts with a question. [Figure: circuit with bulbs 1, 2, and 3 and a switch.] When the switch is closed, bulb 2 will:
   a. stay the same brightness
   b. get brighter
   c. get dimmer
   d. go out
3. Individual answer with clicker (accountability = intense thought, primed for feedback). [Clicker record: "Jane Smith chose a."]
4. Discuss with a "consensus group", then revote. Students practice physicist thinking: using a conceptual model, examining conclusions, finding ways to test, and further testing and refining the model. The instructor listens in: what aspects of student thinking are right, and what are not?
5. Demonstrate/show the result (PhET Circuit Construction Kit).
6. Instructor follow-up summary: feedback on which models and which reasoning were correct, which were incorrect, and why. A large number of student questions and discussion.

Students practice thinking like scientists (with feedback). The instructor talks ~50% of the time, but responsively.

Chemistry clicker/peer-discussion/practicing-expert-thinking question examples (analytic). [Figure: example questions]

Research in the classroom*: comparing learning in two ~identical sections of 1st-year college physics, 270 students each.
• Control: standard lecture class, taught by a highly experienced professor with good student ratings (a "good teacher").
• Experiment: a physics postdoc trained in the principles and methods of effective teaching.
They agreed on:
• the same learning objectives
• the same class time (3 hours, 1 week)
• the same exam (jointly prepared), given at the start of the next class

* Deslauriers, Schelew, Wieman, Science, May 13, 2011

Class design, as described above:
1. Targeted pre-class readings.
2. Questions to solve; students respond with clickers or on worksheets and discuss with neighbors ("Peer Instruction").
3. Discussion by the instructor follows, rather than precedes.
4. Activities address motivation (relevance) and prior knowledge.

[Figure: histogram of test scores, number of students vs. test score (0 to 12), with the random-guess level marked. Average: standard lecture 41 ± 1%, experiment 74 ± 1%.]

Clear improvement for the entire student population. Engagement: 85% (experiment) vs. 45% (control).

Most research: learning in a course (class, homework, exam studying). ~1000 studies across all fields of STEM (~20 by me), comparing active practice and feedback with conventional lecture. Typical results:
• 50-100% more learning on instructor-independent measures
• 1/3 to 2/3 lower failure and drop rates

Meta-analysis of several hundred studies (Freeman et al., PNAS 2014): gains are similar at all levels and in all science and engineering disciplines.

NRC, "Discipline-based Educ. Research in Sci. & Eng." (Nat. Acad. Press, 2012)

Examples:
• Cal Poly: improved learning; teaching methods were the dominant factor in teacher effectiveness (= amount learned).
• UCSD: failure rates.

[Figure: Cal Poly instruction, 1st-year mechanics (Hoellwarth and Moelter, Am. J. Physics, May 2011). 9 instructors, 8 terms, 40 students/section, with the same prescribed set of in-class learning tasks.]

[Figure: U. Cal. San Diego, Computer Science, failure & drop rates (Beth Simon et al., 2012). 4 different instructors; fail rate under standard instruction vs. Peer Instruction for CS1*, CS1.5, Theory*, Arch*, and Average*.]

III. Cultural challenges to improving chemistry education (relative to other sciences)
• Instructors feel compelled to cover too much, too fast.
• Excessive reliance on poor exams; high failure rates are considered OK.
• Data has less impact, e.g., data on how well expertise is being learned and on the conditions for long-term retention.

Examples:
1. What is in the bubbles in boiling water? After completing a 1st-year chemistry class, fewer than half get it correct; ~40% say H and O atoms. Only a 6% change is due to the course. Even a few graduate TAs miss it! Results are similar on other very basic questions, such as conservation of mass and the number of each type of atom in chemical reactions.
2. Not just in intro courses: we observed profound conceptual deficiencies in 3rd-year P-chem.
3. The belief that "equilibrium means everything has stopped" is still present in many upper-level students.

These concern the most fundamental aspect of chemistry expertise: basic mental models and when to apply them. Results like #1-3 are known, but they draw much less concern and response in chemistry than similar results do in physics.

My group's work studying the learning of intro quantum mechanics: students leave the intro chemistry class with some memorized QM facts and small, mangled pieces of the concepts.

Instruction-induced perceptions of the subject (expert-novice): chemistry vs. physics
Measured for biology majors taking both intro chemistry and intro physics. Both courses generally produced bad results, but two were particularly surprising*:
1. Students were significantly more likely to agree with "It is impossible to discuss ideas in chemistry without using equations." than with the physics equivalent.
2. Students were significantly less likely to perceive chemistry as having real-world connections than physics.
The real-world-connections response correlates strongly with interest and choice of major.

* CLASS.colorado.edu: survey and some research papers

Summary: Taking a scientific approach to chemistry teaching
Tremendous opportunity for improvement:
• What is the desired chemistry expertise?
• How can we provide sufficient practice and feedback to learn it?
• Measure results rigorously, and use data to guide instruction.

Good references:
• S. Ambrose et al., "How Learning Works"
• Colvin, "Talent Is Overrated"
• "Discipline-based Educ. Research in Sci. & Eng.", Nat. Acad. Press (2012)
• cwsei.ubc.ca: resources, references, and videos
• Wieman & Gilbert, "The Teaching Practices Inventory", CBE-Life Sciences Education Vol. 13, 552-569, Fall 2014
Copies of slides + 20 available.

[Figure: effective teaching practices (ETP) scores for various math and science departments at UBC, before and after, for a department that made a serious effort to improve teaching.]

Extra slides below, in case they are needed to answer questions.

Some ideas for moving ahead
1. Use new educational tools that enhance learning (e.g., simulations; show the gas and salts sims).
2. Chemistry curriculum and teaching of the future:
   a. Delineate the desired cognitive capabilities (what can students do that indicates success?). Look for common cognitive processes across areas of chemistry and chemical engineering practice.
   b. Design the curriculum by figuring out how to embed these mental processes into the range of desired contexts.
   c. Always focus on "What thinking can they do?", not "What material has been presented to them?", and be scientific: measure results and iterate.

II. What does it look like in the classroom? (Can go into more detail later if desired.)
• Designed around problems and questions, not transmission of information and solutions.
• Students are actively engaged, in class and out, in thinking and solving, while receiving extensive feedback.
• The teacher is a "cognitive coach": designing practice tasks, motivating, and providing feedback.

My most recent paper... Cognitive psychology: "cognitive load" (processing demands on the brain) impacts learning.
My hypothesis: jargon in biology increases processing demands, so presenting concepts before jargon would achieve better learning. Biologists Lisa McDonnell and Megan Barker tested this:
• Reading before class: textbook vs. textbook without jargon.
• Then the same active-learning class, with jargon, for both groups.
• Free-response test of learning at the end of class.
[Figure: total score, Control vs. Concepts-First, for DNA Structure and Genomes. Lisa McDonnell, Megan Barker & CW]

II. Role of faculty
• Practice tasks: challenging but achievable (and motivating). Explicitly practice expert-like thinking.
• Specific and timely feedback to guide thinking.
These demand expertise in the teacher: the teacher as "expertise coach".
Unique at Stanford: the extraordinary expertise of the faculty.
• "Talking textbook": little expertise needed or transferred.
• Effective teaching (exercising the learner's brain): demands and transfers expertise.

IV. What does "transformed teaching" feel like to faculty?
I led a large-scale experiment: changing how entire large science departments teach.
It is possible, but it is a new expertise. It takes ~100 hours to master the basics. Normally there is no incentive to do so (teaching practices and student learning never count). It requires changing beliefs about learning: what is best, and what is possible.
New faculty perspectives:
• teaching is more rewarding and intellectually challenging
• different limitations on learning

Role of technology
Useful (= evidence of improved learning) at the college level so far only when it enhances the capability of teachers:
• task accountability and feedback for more students (online homework, in-class clickers)
• online interactive simulations that better convey expert conceptual models (e.g., PhET.colorado.edu: ~130 million delivered, 75 languages)

A large part of my time (outside Stanford): crusading for improved undergraduate math and science teaching.
• Talking and writing about it, and my large-scale experiments in change: UBC and CU science departments.
• Better ways to evaluate university teaching.

What about K-12? These effective methods work at all levels, but they require much more subject mastery than lecture does. K-12 science teachers need much better college science education, and a better model for teaching science, before they can use these methods effectively.
Compare with typical homework and exam problems and in-class examples. They:
• provide all the information needed, and only that information, to solve the problem
• say what to neglect
• do not ask for an argument for why the answer is reasonable
• call for the use of only one representation
• can be solved quickly and easily by plugging into an equation/procedure

Corresponding components of expertise that go unpracticed:
• concepts and mental models + selection criteria
• recognizing relevant & irrelevant information
• what information is needed to solve
• how I know this conclusion is correct (or not)
• model development, testing, and use
• moving between specialized representations (graphs, equations, physical motions, etc.)

The conventional alternative: "Here is a circuit with resistors and voltage sources. Here is how to calculate the currents at A and B and the voltage difference using the proper equations...."
[Figure: circuit with 8 V and 12 V sources, resistors labeled 1, 2, and 1, and points A and B]
What expert thinking will students not practice? It has NONE of the expertise in the light bulb question design:
• Recognize the expert conceptual model of current.
• Recognize how physicists would use it to make predictions in a real-world situation.
• Find motivational aspects in the physics ("Lets you understand how electricity in your house works!")

Compare the light bulb question, "When the switch is closed, bulb 2 will a. stay the same brightness, b. get brighter, c. get dimmer, d. go out" (students give answer and reasoning), whose design draws on exactly this physics expertise.

Some components of science & engineering expertise:
• concepts and mental models + selection criteria
• recognizing relevant & irrelevant information
• what information is needed to solve
• does the answer/conclusion make sense? ways to test
• model development, testing, and use
• moving between specialized representations (graphs, equations, physical motions, etc.)
• ...
These only make sense in the context of topics. Knowledge is important, but only as an integrated part: how to use, and make decisions with, that knowledge.