NetFPGA Project 1 4-Port Layer 2/3 Switch

NetFPGA Project:

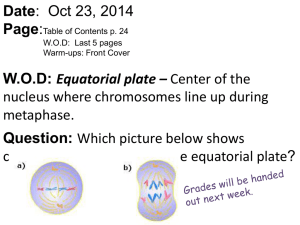

4-Port Layer 2/3 Switch

Ankur Singla (asingla@stanford.edu)

Gene Juknevicius (genej@stanford.edu)

Agenda

NetFPGA Development Board

Project Introduction

Design Analysis

Bandwidth Analysis

Top Level Architecture

Data Path Design Overview

Control Path Design Overview

Verification and Synthesis Update

Conclusion

NetFPGA Development Board

Figure: NetFPGA development board. Labels: Ethernet MAC/PHY, CFPGA SRAM, CFPGA Interface Logic, UFPGA SRAM.

Project Introduction

4 Port Layer-2/3 Output Queued Switch Design

Ethernet (Layer-2), IPv4, ICMP, and ARP

Programmable Routing Tables – Longest Prefix Match, Exact Match

Register support for Switch Fwd On/Off, Statistics, Queue Status, etc.

Layer-2 Broadcast, and limited Layer-3 Multicast support

Limited support for Access Control

Highly Modular Design for future expandability

Bandwidth Analysis

Available Data Bandwidth

Memory bandwidth: 32 bits * 25 MHz = 800 Mbits/sec

CFPGA to Ingress FIFO/Control Block bandwidth:

32 bits * 25 MHz / 4 = 200 Mbits/sec

Packet Queue to Egress bandwidth:

32 bits * 25 MHz / 4 = 200 Mbits/sec

Packet Processing Requirements

4 ports operating at 10 Mbits/sec => 40 Mbits/sec

Minimum size packet 64 Byte => 512 bits

512 bits / 40 Mbits/sec = 12.8 us

Internal clock is 25 MHz

12.8 us * 25 MHz = 320 clocks to process one packet
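The arithmetic above can be checked with a short script. This is a minimal sketch that only restates the figures quoted on this slide; the variable names are illustrative and are not taken from the design.

```python
# Cycle budget per minimum-size packet, using only the figures quoted above.
CLOCK_HZ = 25_000_000         # internal clock: 25 MHz
BUS_WIDTH_BITS = 32           # memory data path width
NUM_PORTS = 4
PORT_RATE_BPS = 10_000_000    # 10 Mbits/sec per port
MIN_PACKET_BITS = 64 * 8      # 64-byte minimum packet = 512 bits

memory_bw_bps = BUS_WIDTH_BITS * CLOCK_HZ           # raw memory bandwidth
shared_path_bw_bps = memory_bw_bps // 4             # CFPGA and egress paths
aggregate_bps = NUM_PORTS * PORT_RATE_BPS           # offered load, all ports
packets_per_sec = aggregate_bps // MIN_PACKET_BITS  # worst-case packet rate
cycles_per_packet = CLOCK_HZ * MIN_PACKET_BITS // aggregate_bps

print(memory_bw_bps, shared_path_bw_bps, aggregate_bps)
print(packets_per_sec, cycles_per_packet)
```

The worst-case packet rate computed here (about 78 thousand packets per second) is the same figure that appears later as the performance requirement for the packet processing engine.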

Top Level Architecture

Figure: top-level block diagram. Blocks: CFPGA Interface Logic (to CFPGA chip), Main Arbiter, Control Block, Ingress FIFO Controller, Switching and Routing Engine, Memory Controller (to SRAM).

Data Flow Diagram

Output Queued Shared Memory Switch

Round Robin Scheduling

Packet Processing Engine provides L2/L3 functionality

Coarse Pipelined Architecture at the Block Level

Figure: data flow diagram. Blocks: Ingress FIFO Controller, Forwarding Engine, Control Block, Memory Controller. Flows: Data Ingress from CFPGA, Data Egress to CFPGA.

Master Arbiter

Round Robin Scheduling of service to Each Input and Output

Interfaces Rest of the Design with Control FPGA

Co-ordinates activities of all high level blocks

Maintains Queue Status for each Output

Figure: Master Arbiter state diagram. States: Reset, Idle State, and five Packet Move states:

Packet Move from Ingress to Ingress FIFO

Packet Move from Ingress to Control Block

Packet Move from Control Block to Egress

Packet Move from Ingress FIFO to Queue Memory

Packet Move from Queue Memory to Egress

The diagram shows two Round Robin Algorithm wheels, each cycling over Port 0, Port 1, Port 2, and Port 3.
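The round-robin service described for the Master Arbiter can be sketched as follows. This is an illustrative software model under stated assumptions, not the arbiter's actual logic: the class name, the `grant` method, and the choice to start with Port 0 after reset are all hypothetical.

```python
class RoundRobinArbiter:
    """Grant one requesting port per call, searching from the port after the last winner."""

    def __init__(self, num_ports=4):
        self.num_ports = num_ports
        # Assumption: after reset, Port 0 is the first port to be checked.
        self.last_granted = num_ports - 1

    def grant(self, requests):
        """requests: one bool per port. Returns the granted port number, or None if idle."""
        for offset in range(1, self.num_ports + 1):
            port = (self.last_granted + offset) % self.num_ports
            if requests[port]:
                self.last_granted = port
                return port
        return None


arbiter = RoundRobinArbiter()
pending = [True, True, False, True]   # Ports 0, 1, and 3 have packets waiting
order = [arbiter.grant(pending) for _ in range(4)]
print(order)
```

Because the search always resumes after the last winner, no requesting port can be skipped twice in a row, which is the fairness property round-robin scheduling is chosen for.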

Ingress FIFO Control Block

Interfaces three blocks

Control FPGA

Forwarding Engine

Packet Buffer Controller

Figure: Ingress FIFO state diagram. States: IDLE, Move Packet from CFPGA, Forwarding, Move Packet to SRAM. Transition signals: packetMoveDone, eop_ci_ufpga_o, packetProcessingDone & grant_sw_sram, gnt_ci_sw.

Dual Packet Memories for coarse pipelining

Responsible for Packet Replication for Broadcast

Figure: dual packet memories. Labels: Master Arbiter, Forwarding Engine, Bank 0, Bank 1. Each bank stores Length, Src Port, and Packet Memory (Pkt 0 in Bank 0, Pkt 1 in Bank 1).
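The dual packet memories can be pictured as a two-entry ping-pong buffer: one bank is filled while the other is being processed. The sketch below is a software model under stated assumptions; the class and method names are hypothetical, and the per-bank fields mirror the Length and Src Port labels in the figure.

```python
from dataclasses import dataclass


@dataclass
class PacketBank:
    length: int = 0
    src_port: int = 0
    data: bytes = b""
    full: bool = False


class DualPacketMemory:
    """Two banks: one can be written while the other is read (coarse pipelining)."""

    def __init__(self):
        self.banks = [PacketBank(), PacketBank()]
        self.write_bank = 0   # next bank to fill from the ingress side
        self.read_bank = 0    # next bank handed to the forwarding engine

    def store(self, src_port, data):
        """Fill the next bank. Returns False when both banks are still occupied."""
        bank = self.banks[self.write_bank]
        if bank.full:
            return False
        bank.length, bank.src_port, bank.data, bank.full = len(data), src_port, data, True
        self.write_bank ^= 1
        return True

    def fetch(self):
        """Return the oldest stored packet without freeing its bank, or None."""
        bank = self.banks[self.read_bank]
        return bank if bank.full else None

    def release(self):
        """Free the bank that was just processed and move on to the other one."""
        if self.banks[self.read_bank].full:
            self.banks[self.read_bank].full = False
            self.read_bank ^= 1
```

In this model a third packet has to wait until the forwarding side releases a bank, which is the back-pressure a two-stage pipeline of this kind implies.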

Packet Processing Engine Overview

Goals

Features – L3/L2/ICMP/ARP Processing

Performance Requirements – 78Kpps

Fit within 60% of Single User FPGA Block

Modularity / Scalability

Verification / Design Ease

Actual

Support for all required features + L2 broadcast, L3 multicast, LPM, Statistics and Policing (coarse access control)

Performance Achieved – 234 Kpps (worst case 69 Kpps for 1500-byte ICMP echo requests)

Requires only 12% of Single UFPGA resources

Highly Modular Design for design/verification/scalability ease

Pkt Processing Engine Block Diagram

Figure: packet processing engine block diagram. Blocks: First Level Parsing, L3 Processing, ICMP Processing, ARP Processing, L2 Processing, Statistics and Policing, Forwarding Master State Machine, Packet Memory0, Packet Memory1. Other labels: From CFPGA, Native Packet, To Packet Buffer.

Forwarding Master State Machine

Responsible for controlling individual processing blocks

Request/Grant Scheme for future expandability

Initiates a Request for Packet to Ingress FIFO and then assigns to responsible agents based on packet contents

Replication of MSM to provide more throughput

Figure: Forwarding Master State Machine diagram. States: IDLE, Initiate First Level Parsing, Processing Selection, L3 Processing, ICMP Processing, ARP Processing, L2 Forwarding, Statistics Update, Policing/Drop. Transition labels: gntForProcessing, !gntForProcessing || softReset, parsingDone, !Completion, Type == ipv4, Type == ARP, Protocol Type == ICMP, defaultL2Fwd.
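The selection step of the master state machine can be sketched as a small dispatch function. This is a simplified model under stated assumptions: the function names and the handler dictionary are hypothetical, and the ICMP check is flattened into the selection step, whereas the state diagram reaches ICMP Processing from L3 Processing. The EtherType and protocol numbers are the standard values for IPv4, ARP, and ICMP.

```python
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806
IP_PROTO_ICMP = 1


def select_processing(ethertype, ip_protocol=None, dest_ip=None, router_ips=()):
    """Return the name of the block that would handle the packet."""
    if ethertype == ETHERTYPE_ARP:
        return "ARP"
    if ethertype == ETHERTYPE_IPV4:
        # ICMP engine is requested only for ICMP packets addressed to the router.
        if ip_protocol == IP_PROTO_ICMP and dest_ip in router_ips:
            return "ICMP"
        return "L3"
    return "L2"


def forward(ethertype, handlers, **fields):
    """Run the selected handler; fall back to L2 forwarding if it does not complete.

    handlers maps block names to callables that return True on completion.
    """
    block = select_processing(ethertype, **fields)
    if block != "L2" and handlers[block]():
        return block
    handlers["L2"]()
    return "L2"
```

The fallback in `forward` models the defaultL2Fwd transitions: any block that cannot complete hands the packet to L2 forwarding.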

L3 Processing Engine

Parsing of the L3 Information:

Src/Dest Addr, Protocol Type, Checksum, Length, TTL

Longest Prefix Match Engine

Mask Bits to represent the prefix. Lookup Key is Dest Addr

Associated Info Table (AIT) Indexed using the entry hit

AIT provides Destination Port Map, Destination L2 Addr, Statistics Bucket Index

Request/Done scheme to allow for expandability (e.g. future m-way Trie implementation project)

ICMP Support Engine Request (if Dest Addr is Router's IP Address and Protocol Type is ICMP)

Total 85 cycles for Packet Processing with 80% of the cycles spent on Table Lookup

If using 4-way trie, total processing time can be reduced to less than 30 cycles.
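The mask-based longest prefix match and the Associated Info Table lookup can be sketched as below. This is a minimal software model, assuming a linear scan over (value, mask) entries; the table contents, MAC addresses, and function names are invented for illustration and do not describe the hardware lookup.

```python
import ipaddress


def ip(addr):
    """Convert a dotted-quad string to a 32-bit integer."""
    return int(ipaddress.IPv4Address(addr))


def prefix_mask(prefix_len):
    """Mask with the top prefix_len bits set, e.g. 8 -> 0xFF000000."""
    return (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF


# Hypothetical table contents: (value, mask) per entry.
lpm_table = [
    (ip("10.0.0.0"), prefix_mask(8)),
    (ip("10.1.0.0"), prefix_mask(16)),
    (ip("0.0.0.0"), prefix_mask(0)),    # default route
]

# Associated Info Table, indexed by the entry that hit.
ait = [
    {"port_map": 0b0001, "dest_l2_addr": "00:00:00:00:00:01", "stats_bucket": 0},
    {"port_map": 0b0010, "dest_l2_addr": "00:00:00:00:00:02", "stats_bucket": 1},
    {"port_map": 0b1000, "dest_l2_addr": "00:00:00:00:00:03", "stats_bucket": 2},
]


def lpm_lookup(dest_addr):
    """Return the index of the matching entry with the longest mask, or None."""
    best_index, best_bits = None, -1
    for index, (value, mask) in enumerate(lpm_table):
        if (dest_addr & mask) == (value & mask):
            bits = bin(mask).count("1")
            if bits > best_bits:
                best_index, best_bits = index, bits
    return best_index


hit = lpm_lookup(ip("10.1.2.3"))
print(hit, ait[hit])
```

A scan like this costs time proportional to the table size, which is consistent with most of the cycle budget going to table lookup; a trie would bound the lookup by the key length instead.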

L2 Processing Engine

If there are any processing problems with ARP, ICMP, and/or L3, then L2 switching is done

Exact Match Engine

Re-use of the LPM match engine but with Mask Bits set to all 1’s.

Associated Info Table (AIT) Indexed using the entry hit

AIT provides Destination Port Map, and Statistics Bucket Index

Request/Done scheme to allow for expandability (e.g. future Hash implementation project)

Learning Engine removed because of Switch/Router Hardware Verification problems (HP Switch bug)

Total 76 cycles for Packet Processing with over 80% of the cycles spent on Table Lookup

If using Hashing Function, total processing time can be reduced to less than 20 cycles.
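Reusing a masked match engine for exact match amounts to setting every mask bit to 1, so an entry hits only when all 48 bits of the destination MAC address agree. The sketch below illustrates that idea under stated assumptions; the table contents and function names are hypothetical, and the behavior on a miss is left as `None` because the slides do not specify it.

```python
MAC_MASK_ALL_ONES = (1 << 48) - 1


def mac(text):
    """Convert 'aa:bb:cc:dd:ee:ff' to a 48-bit integer."""
    return int(text.replace(":", ""), 16)


def masked_lookup(table, key):
    """Generic masked match: return the index of the first matching entry, or None."""
    for index, (value, mask) in enumerate(table):
        if (key & mask) == (value & mask):
            return index
    return None


# Hypothetical L2 table: every entry uses the all-ones mask, so matches are exact.
l2_table = [
    (mac("00:11:22:33:44:55"), MAC_MASK_ALL_ONES),
    (mac("00:11:22:33:44:66"), MAC_MASK_ALL_ONES),
]
l2_ait = [
    {"port_map": 0b0010, "stats_bucket": 0},
    {"port_map": 0b0100, "stats_bucket": 1},
]


def l2_forward(dest_mac):
    """Return the AIT entry for dest_mac, or None on a table miss."""
    hit = masked_lookup(l2_table, dest_mac)
    return None if hit is None else l2_ait[hit]
```

With exact-match keys, a hash table could replace the scan entirely, which is the direction the slide suggests for a future project.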

Packet Buffer Interface

Interfaces with Master Arbiter and Forwarding Engine

Output Queued Switch

Statically Assigned Single Queue per port

Off-chip ZBT SRAM on NetFPGA board

Figure: Packet Buffer Interface state diagram. States: Reset, Idle State, Packet Write Into Queue Memory, Packet Read from Queue Memory.

Packet Queue Memory Organization

256K x 36 bits SRAM Device

4 Static Queues

128 packets per queue

2 KBytes per packet
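The static partitioning above fills the SRAM exactly if 32 of the 36 bits in each word carry packet data. The sketch below checks that and derives a base address for each packet slot. The 32-bit data width and the linear queue-then-slot layout are assumptions made for illustration, and the function name is hypothetical.

```python
SRAM_WORDS = 256 * 1024        # 256K words
NUM_QUEUES = 4                 # one static queue per output port
PACKETS_PER_QUEUE = 128
BYTES_PER_PACKET = 2 * 1024    # 2 KBytes reserved per packet
BYTES_PER_WORD = 4             # assumption: 32 of the 36 bits hold data
WORDS_PER_PACKET = BYTES_PER_PACKET // BYTES_PER_WORD


def slot_base_address(queue, slot):
    """Word address of the first word of a packet slot (assumed linear layout)."""
    if not (0 <= queue < NUM_QUEUES and 0 <= slot < PACKETS_PER_QUEUE):
        raise ValueError("queue or slot out of range")
    return (queue * PACKETS_PER_QUEUE + slot) * WORDS_PER_PACKET


total_words = NUM_QUEUES * PACKETS_PER_QUEUE * WORDS_PER_PACKET
print(total_words == SRAM_WORDS, slot_base_address(1, 0))
```

Fixed 2 KByte slots waste space on small packets but keep address generation to a multiply-and-add, with no free-list management.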

Control Block

Typical Register Rd/Wr Functionality

Status Register

Control Register (forwarding disable, reset)

Router’s IP Addresses (port 1-4)

Queue Size Registers

Statistics Registers

Layer-2 Table Programming Registers

Layer-3 Table Programming Registers

Figure: Control Block state diagram. States: Reset, Idle State, Packet Reception, Packet Parsing, Packet Processing, Read / Write, Packet Transmission.

Verification

Three Levels of Verification Performed

Simulations:

Module Level – to verify the module design intent and bus functional model

System Level – using the NetFPGA verification environment for packet level simulations

Hardware Verification

Ported System Level tests to create tcpdump files for NetFPGA traffic server

Very good success on Hardware with all System Level tests passing.

Only one modification required (reset generation) after Hardware Porting

Demo - Greg can provide lab access to anyone interested

Synthesis Overview

Design was ported to Altera EP20K400 Device

Logic Elements Utilized – 5833 (35% of Total LEs)

RAM ESBs Used – 46848 (21% of Total ESBs)

Max Design Clock Frequency ~ 31 MHz

No Timing Violations

| Design Block Name | Flip-flops (Actual) | Ram bits (Actual) | Gates (Actual) |
| --- | --- | --- | --- |
| Main Arbiter | 71 | 0 | 1500 |
| Memory Controller | 109 | 0 | 2000 |
| Control Block | 608 | 0 | 5000 |
| Ingress FIFO Controller | 60 | 64000 | 1200 |
| Switching and Routing Engine | 925 | 14000 | 14000 |
| Total | 1773 | 78000 | 23700 |

Conclusion

Easy to achieve “required” performance in an OQ Shared Memory Switch in NetFPGA

Modularity of the design allows more interesting and challenging future projects

Design/Verification Environment was essential to meet schedule

NetFPGA is an excellent design exploration platform