4a. obs4MIPs


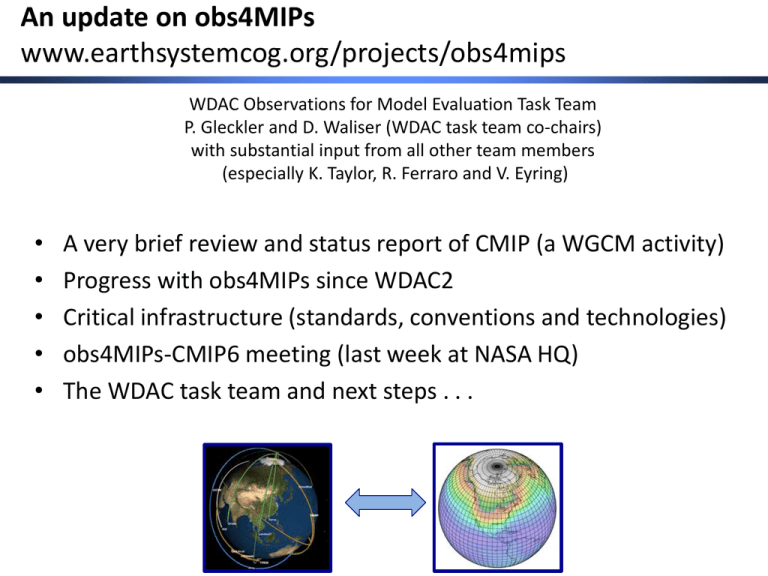

An update on obs4MIPs
www.earthsystemcog.org/projects/obs4mips
WDAC Observations for Model Evaluation Task Team
P. Gleckler and D. Waliser (WDAC task team co-chairs), with substantial input from all other team members (especially K. Taylor, R. Ferraro and V. Eyring)

• A very brief review and status report of CMIP (a WGCM activity)
• Progress with obs4MIPs since WDAC2
• Critical infrastructure (standards, conventions and technologies)
• obs4MIPs-CMIP6 meeting (last week at NASA HQ)
• The WDAC task team and next steps

CMIP6 Timeline
[Figure: CMIP6 timeline, 2014 to 2020. Labels: finalize experiment design (WGCM); community input on CMIP6 design; forcing data (harmonization, emissions to concentrations); CMIP DECK (Diagnostic, Evaluation and Characterization of Klima) with standardized metrics & assessment, for model versions 1 to 4; CMIP6-endorsed MIPs (MIP1 to MIP4); ScenarioMIP studies (MIP matrix, pattern scaling, scenario pairs); formulate scenarios to be run by AOGCMs and ESMs; preliminary ESM/AOGCM runs with new scenarios; run and analyze scenario simulations from matrix; future projection runs; nominal simulation period of CMIP6; possible IPCC AR6.]

Many are contributing to obs4MIPs
• obs4MIPs was initiated by JPL (R. Ferraro, J. Teixeira and D. Waliser) and PCMDI (P. Gleckler, K. Taylor), with oversight and support provided by NASA (T. Lee) and U.S. DOE (R. Joseph)
• Several dozen scientists (thus far especially in NASA and CFMIP-OBS) are doing substantial work to contribute datasets
• WDAC2 encouraged obs4MIPs to “internationalize” with a task team (now in place)
• Participation is broadening, and the hope is that sponsorship will too
Jet Propulsion Laboratory, California Institute of Technology

obs4MIPs: The 4 Commandments
1. Use the CMIP5 simulation protocol (Taylor et al. 2009) as a guideline for selecting observations. A matching variable is required.
2. Observations are to be formatted/structured the same as CMIP model output (e.g. netCDF files, CF Conventions).
3. Include a Technical Note for each variable describing the observation and its use for model evaluation (at graduate student level).
4. Host the data side by side on the ESGF with CMIP model output.
[Figure labels: Target Quantities; Model Output Variables; Satellite Retrieval Variables; Modelers; Observation Experts; Analysis Community; Initial Target Community]

obs4MIPs “Technical Note” content (5-8 pages)
• Intent of the Document
• Data Field Description
• Data Origin
• Validation and Uncertainty Estimate
• Considerations for use in Model Evaluation
• Instrument Overview
• References
• Revision History
• Point of contact

obs4MIPs: Current Set of Observations
• AIRS (≥ 300 hPa): temperature profile, specific humidity profile
• MLS (< 300 hPa): temperature profile, specific humidity profile
• QuikSCAT: ocean surface winds
• TES: ozone profile
• AMSR-E: SST
• ATSR (ARC/CMUG): SST
• TOPEX/JASON: SSH
• CERES: TOA & surface radiation fluxes
• TRMM: precipitation
• GPCP: precipitation
• MISR: aerosol optical depth
• MODIS: cloud fraction, aerosol optical depth
• NSIDC: sea ice (in progress)
• CFMIP-OBS provided: CloudSat (clouds), CALIPSO (clouds & aerosols), ISCCP (clouds), PARASOL (clouds & aerosols)
• ana4MIPs provided (reanalysis): ECMWF zonal winds and meridional winds; expected to expand to other fields and sources of reanalysis
• Initial in-situ example: ARMBE/DOE (clouds, radiation, meteorology, land surface, etc.); discussions on protocols for in-situ holdings are underway

Critical Infrastructure for CMIP, obs4MIPs and related activities

Why data standards?
Standards facilitate discovery and use of data
• MIP standardization has increased steadily over more than 2 decades
• The user community has expanded from 100’s to 10,000

Standardization requires
• Conventions and controlled vocabularies
• Tools enforcing or facilitating conformance

Standardization enables
• The ESG federated data archive
• Uniform methods of reading and interpreting data
• Automated methods and “smart” software to analyze data efficiently

obs4MIPs-CMIP6 Planning Meeting Apr 29 – May 1, 2014

What standards is obs4MIPs building on?
• netCDF: an API for reading and writing certain types of HDF-formatted data (www.unidata.ucar.edu/software/netcdf/)
• CF Conventions: providing a standardized description of the data contained in a file (cf-convention.github.io)
• Data Reference Syntax (DRS): defining the vocabulary used to uniquely identify MIP datasets and to specify file and directory names (cmip-pcmdi.llnl.gov/cmip5/output_req.html)
• CMIP output requirements: specifying the data structure and metadata requirements for CMIP (cmip-pcmdi.llnl.gov/cmip5/output_req.html)
• CMIP “standard output” list (cmip-pcmdi.llnl.gov/cmip5/output_req.html)
• Climate Model Output Rewriter (CMOR): used to produce CF-compliant netCDF files that fulfill the requirements of standard model experiments. Much of the metadata written to the output files is defined in MIP-specific tables, and CMOR relies on these tables to provide much of the needed metadata.

Earth System Grid Federation (ESGF), esgf.org
The ESGF Peer-to-Peer (P2P) enterprise system is a collaboration that develops, deploys and maintains software infrastructure for the management, dissemination, and analysis of model output and observational data.
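To make the role of the DRS and of conformance tools more concrete, here is a minimal Python sketch (an illustration, not part of the obs4MIPs or CMIP tooling) that composes, parses and checks a file name against a CMIP5-style DRS pattern, `<variable>_<MIP table>_<model>_<experiment>_<ensemble member>_<temporal subset>.nc`. The class `DrsName`, the function `parse` and the `MIP_TABLES` set are hypothetical names invented for this sketch; the vocabulary is a tiny illustrative subset, the temporal subset is assumed always present, and the authoritative rules remain those in the CMIP5 DRS document.

```python
"""Minimal sketch: composing, parsing and checking a CMIP5-style DRS file name.

Assumptions (not taken from the slides):
  * file names follow the pattern
      <variable>_<MIP table>_<model>_<experiment>_<ensemble member>_<temporal subset>.nc
    and this sketch always requires the temporal subset;
  * MIP_TABLES is a tiny, hypothetical subset of the real controlled vocabulary.
"""
import re
from dataclasses import dataclass, fields

# Hypothetical subset of the MIP-table vocabulary, for illustration only.
MIP_TABLES = {"Amon", "Omon", "Lmon", "day", "3hr"}
ENSEMBLE_RE = re.compile(r"^r\d+i\d+p\d+$")       # e.g. r1i1p1
TIME_RANGE_RE = re.compile(r"^\d{4,8}-\d{4,8}$")  # e.g. 185001-200512


@dataclass(frozen=True)
class DrsName:
    variable: str
    table: str
    model: str
    experiment: str
    ensemble: str
    time_range: str

    def validate(self) -> list:
        """Return a list of conformance problems (empty if none were found)."""
        errors = []
        # "_" separates DRS components, so no component may contain it.
        for f in fields(self):
            if "_" in getattr(self, f.name):
                errors.append(f"'_' not allowed in {f.name}")
        if self.table not in MIP_TABLES:
            errors.append(f"unknown MIP table: {self.table!r}")
        if not ENSEMBLE_RE.match(self.ensemble):
            errors.append(f"bad ensemble member: {self.ensemble!r}")
        if not TIME_RANGE_RE.match(self.time_range):
            errors.append(f"bad temporal subset: {self.time_range!r}")
        return errors

    def filename(self) -> str:
        """Join the components with '_' and append '.nc', refusing bad names."""
        errors = self.validate()
        if errors:
            raise ValueError("; ".join(errors))
        parts = [getattr(self, f.name) for f in fields(self)]
        return "_".join(parts) + ".nc"


def parse(filename: str) -> DrsName:
    """Split a DRS-style file name back into its six components."""
    if not filename.endswith(".nc"):
        raise ValueError("expected a .nc file name")
    parts = filename[: -len(".nc")].split("_")
    if len(parts) != len(fields(DrsName)):
        raise ValueError(f"expected 6 components, found {len(parts)}")
    return DrsName(*parts)


if __name__ == "__main__":
    name = DrsName("tas", "Amon", "HadCM3", "historical", "r1i1p1", "185001-200512")
    print(name.filename())  # tas_Amon_HadCM3_historical_r1i1p1_185001-200512.nc
    assert parse(name.filename()) == name
```

For observational data the `model` and `experiment` slots have no natural meaning, which is the same mismatch behind the call for DRS modifications and a more general CMOR; this sketch deliberately does not guess what obs4MIPs-specific components should replace them.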
The US DOE has funded development of ESGF for over a decade.

The WCRP supports adoption and extension of the CMIP standards for all its projects/activities
The Working Group on Coupled Modelling (WGCM) mandates use of established infrastructure for all MIPs:
• Standards and conventions
• Earth System Grid Federation (ESGF)
The WGCM has established the WGCM Infrastructure Panel (WIP) to govern the evolution of standards, including:
• CF metadata standards
• Specifications beyond CF guaranteeing fully self-describing and easy-to-use datasets (e.g., CMIP requirements for output)
• Catalog and software interface standards ensuring remote access to data, independent of local format (e.g., OPeNDAP, THREDDS)
• Node management and data publication protocols
• Defined dataset description schemes and controlled vocabularies (e.g., the DRS)
• Standards governing model and experiment documentation (e.g., CIM)

Earth System Commodity Governance (CoG), earthsystemcog.org (new!)
• CoG enables users to create project workspaces, connect projects, share information, and seamlessly link to tools for data archival.
• CoG is integrated with ESGF data.
• It is easy to tailor to project-specific needs.
• The primary interface to CMIP and related MIPs is likely to migrate to CoG in the next few months.
• All obs4MIPs information, including access to data, is now hosted on CoG.

Immediate infrastructural needs of obs4MIPs
• CMOR needs to be generalized to better handle observational data.
  ◦ CMOR was developed to meet modeling needs
  ◦ Some of the attributes written by CMOR don’t apply to observations (e.g., model name, experiment name)
• Modifications and extensions are needed for the DRS (to be proposed by the WDAC obs4MIPs Task Team to the WIP)
• A streamlined “recipe” for preparing and hosting obs4MIPs datasets
• CoG further refined to meet obs4MIPs requirements

obs4MIPs / CMIP6 Planning meeting
• Invite only, held at NASA HQ (Washington DC), April 29 – May 1
• ~55 attendees: a very diverse mix of data experts (mostly satellite), modelers, and agency representatives from NASA, DOE, NOAA, ESA, EUMETSAT, and JAXA
• Focused on identifying opportunities to improve the use of existing satellite datasets in CMIP (model evaluation and research)

obs4MIPs / CMIP6 Planning meeting agenda
Day 1
• Broadening involvement: agency views
• Background presentations
• Atmospheric Composition & Radiation
• Atmospheric physics
• Moderated discussion topics:
  - CMIP6 forcing data sets
  - High frequency observations for CMIP6
  - High spatial resolution for CMIP6
  - Geostationary data?
  - Going beyond satellite data?
Day 2
• Perspectives, Reanalysis
• Terrestrial Water & Energy, Land Cover/use
• Carbon cycle
• Oceanography & Cryosphere
• Moderated discussion topics:
  - Satellite simulators
  - Reanalysis (relationship to ana4MIPs)
Day 3 (morning)
• Rapporteur summaries
• General discussion of future directions
• A brief post-meeting gathering of the WDAC task team

Great discussion, lots of thoughtful feedback & recommendations

Example topics
Some specific recommendations (what we were after!)
• More data sets – which ones, what priority?
• Higher frequency (strong interest in this)
• Process & model development focus – how to?
• Satellite simulator/observation proxy priorities?
• Relaxing the “model-equivalent” criteria – how far?
• Better characterization of obs uncertainty needed
• Optimizing connections to ana4MIPs/reanalysis
• Use of averaging kernels – how far?
• Geostationary priorities/guidance
• Gridded in-situ data sets
• In-situ – where to start, how far to go?
[Figure: obs4MIPs, the WDAC and the task team]

Meeting open forum: mixed views on several key issues
• Should obs4MIPs continue its focus on “enabling” CMIP research, or should it strive to lead it?
• Should obs4MIPs filter datasets based on quality? What about “obsolete” datasets?
• How many different datasets of the same observable should be included? Do we want 10 different SST products?
• Should obs4MIPs expand beyond its focus on “near globally gridded data sets”, and if so, how quickly and how much?
The WDAC task team & WDAC need to take a position on these issues.

A range of possibilities for obs4MIPs guidelines/requirements
• Alignment with CMIP model output (& use for evaluation): from no requirement / no obvious near-term use for evaluation, through indirect alignment or alignment via complex processing steps, alignment via simple steps (e.g., ratio, column average) and alignment via simulator or operator, to strict alignment
• Process/value demonstrated via peer-reviewed literature
• Influence from models: significant <-> none
• Demonstrated via robust cal/val activity
• Evidence for data quality? None <-> subjective <-> objective error estimates
• Error/uncertainties: large <-> small
• Demonstrated via N(?) uses in peer-reviewed literature
Should we construct a “Maturity Matrix” or Model Evaluation Readiness Level (MERL)?

WDAC task team discussions (90 min, post obs4MIPs meeting)
Team members: P. Gleckler (co-chair; PCMDI), D. Waliser (co-chair; JPL), S. Bony (IPSL), M. Bosilovich (GSFC), H. Chepfer (IPSL), V. Eyring (DLR), R. Ferraro (JPL), R. Saunders (MOHC), J. Schulz (EUMETSAT), K. Taylor (PCMDI), J-N Thépaut (ECMWF)
• The task team should oversee the connection between obs4MIPs and ana4MIPs, but not ana4MIPs itself
• Connecting with GEWEX assessments
• Review existing “Maturity Matrix” applications and consider building on them to create a Model Evaluation Readiness Level (MERL)?
• Considering ex-officio members from agencies contributing or planning to contribute (ESA, NASA, etc.); liaisons with other MIPs may also be necessary
• Augmenting CMOR to better handle observations is urgently needed
• For now, WebEx sessions are being considered on a ~bimonthly basis

Some closing thoughts on how all this works
Standards and infrastructure fundamentally rely on community consensus. They:
• Must serve the needs of multiple projects
• Must meet the diverse needs of a broad spectrum of users
• Require substantial coordination
• Demand community input and oversight relying on the expertise of a diverse group of scientists and data specialists
The current system is fragile: many of its components are funded by individual projects that could disappear, impairing the viability of the entire infrastructure.
Every funder is essential to supporting this collaborative effort, where:
• Leadership and recognition are shared across multiple projects
• Integration of the “whole” imposes external requirements on the individual components