
Computational Linguistics

Yoad Winter

* General overview

* Examples: Transducers; Stanford Parser; Google Translate; Word-Sense Disambiguation

* Finite State Automata and Formal Grammars

Linguistics - from Theory to Technology

[Figure: layers Language Technology, Natural Language Processing, Computational Linguistics and Theoretical Linguistics, with the labels Industry, INFO and TLW]

Computational Linguistics

Goals of CL:

* Foundations for Linguistics in Computer Science (e.g. Formal Language theory)

* Computable linguistic theories (HPSG, LFG, Categorial Grammar)

* Implementation of demos for linguistic theories

* (Mathematical Linguistics)

Natural Language Processing

Goals of NLP – practical applications of CL:

* Speech recognition/synthesis

* Machine translation

* Summarization

* Question answering

* Text categorization

* Grammar checking

Statistical NLP:

* Unsupervised

* Supervised (corpus-based)

Language Technology

Goals of LT:

* Useful linguistic resources (lexicons, grammar rules, semantic webs)

* Implementation of the most useful tools involving language processing (Google Translate, the Microsoft Word spell checker, MS Speech Recognizer, etc.)


Language Processing - Tasks

Level | Input | Output
I: Words | Words in text | Part of speech (Noun/Verb); morphological information
II: Speech | Speech sound wave | Text
II: Speech | Text | Speech sound wave
III: Syntax | Sentence in text | Phrases in sentences (noun phrase, verb phrase)
IV: Semantics & Pragmatics | Sentence/text | Action/reasoning
V: Applications | Sentence/text | Translation

Source: J&M (2009)

Processing - General Idea

Start with null information state I=0

Repeat while there is language to read:

- Read a language token T

- Recognize T: extract information I(T)

- Update information state I using I(T)

- Do some action using I(T)
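The loop above can be sketched in Python. This is a minimal sketch under assumptions that are not in the slides: tokens are whitespace-separated words, and the information state is modelled as a dictionary of counts. The names `process`, `recognize`, `act` and `Info` are hypothetical.

```python
from typing import Callable, Dict, Iterable, List

Info = Dict[str, int]  # hypothetical representation of the information I(T)


def process(tokens: Iterable[str],
            recognize: Callable[[str], Info],
            act: Callable[[Info], None]) -> Info:
    """Read-recognize-update-act loop over a stream of tokens."""
    state: Info = {}                      # null information state, I = 0
    for token in tokens:                  # repeat while there is language to read
        info = recognize(token)           # recognize T: extract I(T)
        for key, value in info.items():   # update I using I(T)
            state[key] = state.get(key, 0) + value
        act(info)                         # do some action using I(T)
    return state


log: List[Info] = []
final = process("I want to cash a check".split(),
                recognize=lambda t: {"tokens": 1, "capitalized": int(t[:1].isupper())},
                act=log.append)
print(final)  # {'tokens': 6, 'capitalized': 1}
```

Here the "action" is just logging each I(T); a real system would, for example, emit a tag or a translation at that step.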

Example 1 – Finite State Transducers

Arcs (input | output; ε = empty output):

0 → 1: I want to | ε

1 → 3: cash | V

3 → 4: a check | ε

0 → 2: I want to have some | ε

2 → 4: cash | N

I want to cash a check:

- Start from state 0

- Read “I want to”, move to state 1, and output nothing

- Read “cash”, move to state 3, and output V

- Read “a check”, move to state 4, and output nothing


I want to have some cash:

- Start from state 0

- Read “I want to have some”, move to state 2, and output nothing

- Read “cash”, move to state 4, and output N
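A minimal Python sketch of this transducer, assuming the arcs read off the two traces (multi-word chunks such as “I want to” count as single input tokens, ε is written as no output, and state 4 is taken to be final because both traces end there). Two arcs leave state 0 with overlapping inputs, so the sketch searches with backtracking. `ARCS`, `FINAL`, `_search` and `transduce` are hypothetical names.

```python
from typing import Dict, List, Optional, Tuple

# Arcs: (state, input chunk) -> (next state, output). "" stands for ε.
# This dict allows at most one arc per (state, input) pair, enough here.
ARCS: Dict[Tuple[int, str], Tuple[int, str]] = {
    (0, "I want to"): (1, ""),
    (1, "cash"): (3, "V"),
    (3, "a check"): (4, ""),
    (0, "I want to have some"): (2, ""),
    (2, "cash"): (4, "N"),
}
FINAL = {4}  # assumed final state


def _search(words: List[str], state: int, pos: int) -> Optional[List[str]]:
    """Backtracking search for a path that consumes words[pos:] and ends in FINAL."""
    if pos == len(words):
        return [] if state in FINAL else None
    for end in range(pos + 1, len(words) + 1):
        chunk = " ".join(words[pos:end])
        if (state, chunk) in ARCS:
            nxt, out = ARCS[(state, chunk)]
            rest = _search(words, nxt, end)
            if rest is not None:               # this arc leads to success
                return ([out] if out else []) + rest
    return None                                # no arc works: reject


def transduce(sentence: str) -> Optional[List[str]]:
    """Return the outputs emitted for the sentence, or None if it is rejected."""
    return _search(sentence.split(), 0, 0)


print(transduce("I want to cash a check"))    # ['V']
print(transduce("I want to have some cash"))  # ['N']
print(transduce("I want to cash"))            # None (stops in state 3)
```

For the second sentence the search first tries “I want to” into state 1, finds no arc for “have”, and backtracks to the longer arc into state 2.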

Example 2 – Stanford Parser

http://nlp.stanford.edu/software/lex-parser.shtml

Your query: I want to cash a check

Tagging: I/PRP want/VBP to/TO cash/VB a/DT check/NN

Parse: [figure: parse tree]


Your query: I want to have some cash

Tagging: I/PRP want/VBP to/TO have/VB some/DT cash/NN

Parse: [figure: parse tree]
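The tagger output uses the word/TAG format with Penn Treebank tags (VB: verb, base form; NN: noun, singular or mass). The hypothetical helper `parse_tagged` below is not part of the Stanford Parser; it only splits that format so that the two readings of cash can be compared.

```python
from typing import List, Tuple


def parse_tagged(tagged: str) -> List[Tuple[str, str]]:
    """Split 'word/TAG' tokens into (word, tag) pairs."""
    pairs: List[Tuple[str, str]] = []
    for tok in tagged.split():
        word, tag = tok.rsplit("/", 1)   # split on the last '/' only
        pairs.append((word, tag))
    return pairs


verb_use = dict(parse_tagged("I/PRP want/VBP to/TO cash/VB a/DT check/NN"))
noun_use = dict(parse_tagged("I/PRP want/VBP to/TO have/VB some/DT cash/NN"))
print(verb_use["cash"], noun_use["cash"])  # VB NN
```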

Example 3 – Google Translate


Summary

We have seen ways to process:

- words, word by word: transducers

- sentences, with a tree structure: the Stanford Parser

A word like CASH must be disambiguated as a Noun or a Verb in order to be translated correctly.

Other kinds of disambiguation?

Example 4 - Word-Sense Disambiguation

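the light blue car:

1. de lichtblauwe auto (the pale-blue car: "light" describes the colour)

2. de lichte blauwe auto (the blue car that is light in weight)

John likes the light blue car but not the deep blue car

John was able to lift the light blue car but not the heavy blue car

Google Translate: “lichtblauwe auto” in both cases, although the second sentence calls for reading 2

Word-sense disambiguation: finding the right sense of a word in its context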
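A toy sketch of word-sense disambiguation for this example, assuming a hand-made list of cue words per sense (the cue lists and the name `disambiguate` are invented for illustration). It picks the sense whose cues overlap most with the sentence, loosely in the spirit of overlap-based methods such as Lesk's algorithm, and makes no claim about how Google Translate works.

```python
from typing import Dict, Set

# Hypothetical cue words for each sense of "light", keyed by the Dutch
# rendering of "the light blue car" that the sense calls for.
SENSES: Dict[str, Set[str]] = {
    "de lichtblauwe auto": {"deep", "dark", "pale", "colour"},     # light = pale
    "de lichte blauwe auto": {"heavy", "lift", "carry", "weigh"},  # light = not heavy
}


def disambiguate(sentence: str) -> str:
    """Choose the sense whose cue words overlap most with the sentence.

    On a tie, max() keeps the first sense listed, i.e. the colour reading.
    """
    context = set(sentence.lower().split())
    return max(SENSES, key=lambda sense: len(SENSES[sense] & context))


print(disambiguate("John likes the light blue car but not the deep blue car"))
# de lichtblauwe auto
print(disambiguate("John was able to lift the light blue car but not the heavy blue car"))
# de lichte blauwe auto
```

Real systems learn such cues from corpora instead of listing them by hand, but the idea of using the surrounding words as evidence is the same.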

Basic Model 1: Finite State Automata (FSA)

q0 – start state

q4 – accepting state

arrows – transitions, which can also be given by a transition table

FSA - formally

Tracing the execution of an FSA

“baaa!” is accepted because, reading the input symbols one by one from the start state q0, the automaton is in the accepting state q4 when the input ends.
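The figure is not reproduced here; the sketch below assumes the usual J&M “sheep talk” automaton (q0 -b-> q1 -a-> q2 -a-> q3, a loop on a at q3, and q3 -!-> q4), which accepts the same language as baa(a*)!. It stores the transitions in a transition table and can print the trace step by step; `DELTA` and `accepts` are illustrative names.

```python
from typing import Dict, Tuple

# Transition table (assumed J&M sheep-talk layout):
# q0 -b-> q1 -a-> q2 -a-> q3, q3 -a-> q3 (loop), q3 -!-> q4
DELTA: Dict[Tuple[str, str], str] = {
    ("q0", "b"): "q1",
    ("q1", "a"): "q2",
    ("q2", "a"): "q3",
    ("q3", "a"): "q3",
    ("q3", "!"): "q4",
}
START = "q0"
ACCEPTING = {"q4"}


def accepts(tape: str, trace: bool = False) -> bool:
    """Run the FSA on the input, one symbol at a time."""
    state = START
    for symbol in tape:
        nxt = DELTA.get((state, symbol))
        if trace:
            print(f"{state} --{symbol}--> {nxt or 'reject'}")
        if nxt is None:
            return False               # no transition for this symbol: reject
        state = nxt
    return state in ACCEPTING          # accept only if we end in an accepting state


print(accepts("baaa!", trace=True))    # trace q0 --b--> q1 ... q3 --!--> q4, then True
print(accepts("ba!"), accepts("baa"))  # False False
```

“baa” is rejected even though every symbol has a transition, because the input ends in q3, which is not accepting.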

FSA’s as Grammars

An FSA may describe an infinite set of strings over a finite input alphabet Σ.

We therefore say that an FSA describes a grammar over Σ, which derives a formal language over Σ.

More formally:

Σ – a finite set (the alphabet)

Σ* – the set of all strings over Σ (infinite whenever Σ is non-empty)

L(FSA), the language of the FSA, is the set of strings S in Σ* that are derived (accepted) by the FSA.

Any language described by an FSA is called regular.
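To make "L(FSA) is a subset of Σ*" concrete, the sketch below lists every string of Σ* up to a length bound and keeps those the FSA accepts. It assumes the J&M sheep-talk automaton (states q0 to q4, accepting state q4) with Σ = {b, a, !}; the bound is needed because L(FSA) itself is infinite. `language_up_to` is a hypothetical name.

```python
from itertools import product
from typing import Dict, List, Tuple

SIGMA = ["b", "a", "!"]  # the finite alphabet Σ
# Assumed sheep-talk automaton (J&M layout), accepting state q4.
DELTA: Dict[Tuple[str, str], str] = {
    ("q0", "b"): "q1", ("q1", "a"): "q2", ("q2", "a"): "q3",
    ("q3", "a"): "q3", ("q3", "!"): "q4",
}


def accepts(s: str) -> bool:
    """True if the automaton ends in q4 after reading s."""
    state = "q0"
    for symbol in s:
        if (state, symbol) not in DELTA:
            return False
        state = DELTA[(state, symbol)]
    return state == "q4"


def language_up_to(n: int) -> List[str]:
    """Members of L(FSA) among all strings of Σ* with length <= n."""
    members = []
    for length in range(n + 1):                       # Σ^0, Σ^1, ..., Σ^n
        for symbols in product(SIGMA, repeat=length):
            s = "".join(symbols)
            if accepts(s):
                members.append(s)
    return members


print(language_up_to(6))  # ['baa!', 'baaa!', 'baaaa!']
```

Raising the bound always adds one more string, which reflects that this regular language is infinite.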

Non-regular languages and complexity

L = { ab, aabb, aaabbb, aaaabbbb, … }, i.e. L = { a^n b^n : n ≥ 1 },

can be shown to be non-regular (for instance with the pumping lemma for regular languages).

No FSA can derive this language L!

But other kinds of grammars can generate non-regular languages!
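For contrast, here is a recognizer for L = { a^n b^n : n ≥ 1 } that is not an FSA: it keeps an unbounded counter of the a's seen so far, which is exactly the kind of memory a machine with finitely many states lacks. The function name `in_anbn` is hypothetical, and this is an illustration rather than a grammar.

```python
def in_anbn(s: str) -> bool:
    """Recognize L = {a^n b^n : n >= 1} using an unbounded counter."""
    count = 0
    i = 0
    # Phase 1: count the leading a's.
    while i < len(s) and s[i] == "a":
        count += 1
        i += 1
    # Phase 2: cancel one a for each following b.
    while i < len(s) and s[i] == "b":
        count -= 1
        i += 1
    # Accept if the counts balance, the whole input was read, and it is non-empty.
    return count == 0 and i == len(s) and i > 0


print([s for s in ["ab", "aabb", "aab", "abab", ""] if in_anbn(s)])  # ['ab', 'aabb']
```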

Are natural languages regular or non-regular?

How hard is it for a computer to recognize regular and non-regular languages?

Are there different classes of formal languages in terms of their complexity?

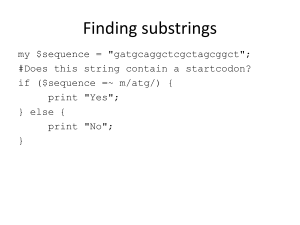

Another way to define regular languages – regular expressions

A regular expression is a compact way of describing a regular language.

Example:

baa(a*)!

describes the same language as the FSA we saw.

We say that this regular expression matches every string in this language and no other strings.
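Python's standard `re` module can check this on a few strings. `re.fullmatch` is used because the whole string has to match; `re.search` would also succeed on strings that merely contain a match, such as “xbaa!y”.

```python
import re

SHEEP = re.compile(r"baa(a*)!")

for s in ["baa!", "baaaa!", "ba!", "baa", "baa!!"]:
    # fullmatch succeeds only if the entire string matches the pattern
    print(s, SHEEP.fullmatch(s) is not None)
# baa! True / baaaa! True / ba! False / baa False / baa!! False
```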

Regular expressions - formally

Σ - a finite alphabet

1- Any symbol of Σ is a regular expression that matches itself

“a” matches “a”; “b” matches “b”; etc.

2- If A and B are regular expressions then AB is a regular expression that matches any concatenation of a string that A matches with a string that B matches.

“ab” matches “ab”

3- If A and B are regular expressions then A|B is a regular expression that matches any string that A or B matches.

“a|b” matches both “a” and “b”

4- If A is a regular expression then A* is a regular expression that matches any concatenation of zero or more strings that A matches.

“a*” matches the empty string, “a”, “aa”, “aaa” etc.
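The four clauses translate directly into a small data type plus a function that computes which strings an expression matches. Since A* matches infinitely many strings, this sketch (with hypothetical names `Sym`, `Cat`, `Alt`, `Star` and `lang`) only collects the matched strings up to a length bound n.

```python
from dataclasses import dataclass
from typing import FrozenSet, Union


@dataclass(frozen=True)
class Sym:          # clause 1: a symbol matches itself
    c: str


@dataclass(frozen=True)
class Cat:          # clause 2: AB
    left: "Regex"
    right: "Regex"


@dataclass(frozen=True)
class Alt:          # clause 3: A|B
    left: "Regex"
    right: "Regex"


@dataclass(frozen=True)
class Star:         # clause 4: A*
    inner: "Regex"


Regex = Union[Sym, Cat, Alt, Star]


def lang(r: Regex, n: int) -> FrozenSet[str]:
    """The strings of length <= n that r matches, following clauses 1-4."""
    if isinstance(r, Sym):
        return frozenset({r.c}) if len(r.c) <= n else frozenset()
    if isinstance(r, Cat):
        left, right = lang(r.left, n), lang(r.right, n)
        return frozenset(x + y for x in left for y in right if len(x) + len(y) <= n)
    if isinstance(r, Alt):
        return lang(r.left, n) | lang(r.right, n)
    # Star: zero copies give "", then keep appending one more match of A.
    base = lang(r.inner, n)
    result, frontier = {""}, {""}
    while frontier:
        new = {x + y for x in frontier for y in base if len(x) + len(y) <= n} - result
        result |= new
        frontier = new
    return frozenset(result)


print(sorted(lang(Star(Alt(Sym("a"), Sym("b"))), 2), key=lambda s: (len(s), s)))
# ['', 'a', 'b', 'aa', 'ab', 'ba', 'bb']
```

The loop for A* terminates because only strings of length at most n are ever added, and there are finitely many of those.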

Examples

Convention: * takes precedence over concatenation and |, e.g. AB* = A(B*) and a|b* = a|(b*).

Convenience: we let ε match the empty string.

a|b* matches {ε, "a", "b", "bb", "bbb", ...}

(a|b)* matches the set of all strings with no symbols other than "a" and "b", including the empty string: {ε, "a", "b", "aa", "ab", "ba", "bb", "aaa", ...}

ab*(c|ε) matches the set of strings starting with "a", followed by zero or more "b"s and, finally, an optional "c": {"a", "ac", "ab", "abc", "abb", "abbc", ...}
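These examples can be checked with Python's `re` module, which gives * the same precedence. Python has no ε symbol, so the sketch writes (c|ε) as the empty alternative (c|); `c?` would also work. The lists of non-matching strings are additions for illustration.

```python
import re

# Strings each pattern should match (taken from the examples above).
EXAMPLES = {
    "a|b*": ["", "a", "b", "bb", "bbb"],
    "(a|b)*": ["", "a", "b", "aa", "ab", "ba", "bb", "aaa"],
    "ab*(c|)": ["a", "ac", "ab", "abc", "abb", "abbc"],  # (c|) plays the role of (c|ε)
}
# Strings each pattern should reject (illustrative additions).
NON_EXAMPLES = {
    "a|b*": ["ab", "aa", "ba"],
    "(a|b)*": ["c", "abc"],
    "ab*(c|)": ["", "b", "acc", "abcb"],
}

for pattern, strings in EXAMPLES.items():
    assert all(re.fullmatch(pattern, s) for s in strings), pattern
for pattern, strings in NON_EXAMPLES.items():
    assert not any(re.fullmatch(pattern, s) for s in strings), pattern
print("all examples behave as described")
```

Note that "ab" is not matched by a|b*: by the precedence convention the expression means a|(b*), not (a|b)*.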

At home

Read 3.4-3.6 on Transducers as preparation for Eva’s class.