ConceptNet


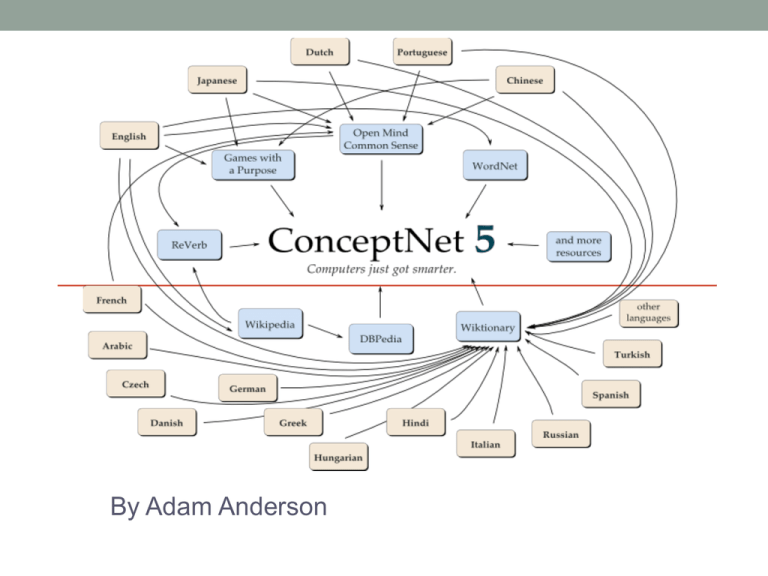

By Adam Anderson

What is it?
• A crowdsourced knowledge base of common sense

Common Sense
• We have it and rely on it a lot
• Computers do not
• How am I feeling?
  • I had an awful day.
  • I got fired today.
• What else can be inferred from these sentences?

Common Sense Challenge
Marvin Minsky made an estimate: ‘…commonsense is knowing maybe 30 or 60 million things about the world and having them represented so that when something happens, you can make analogies with others’

WordNet and Cyc
• Two semantic knowledge bases that inspired ConceptNet
• Comparable in size and effort

WordNet
• Began in 1985 at Princeton University
• Words organized into discrete ‘senses’
• Words are linked by semantic relationships
  • Synonym
  • Is-a
  • Part-of
• Great for lexical categorization and word-similarity determination

ConceptNet vs WordNet
• ConceptNet focuses on semantic relationships between compound concepts
  • e.g. "drive car"
• 20+ semantic relationships
• Contains knowledge that is defeasible (generally true, but open to exceptions)

Cyc
• Started in 1984 by Doug Lenat
• A formalization of a commonsense knowledge base into a logical framework
• Uses its own unambiguous logical formulation language, CycL
• All assertions and facts are entered by Cycorp's knowledge engineers

ConceptNet vs Cyc
• ConceptNet doesn’t use a formalized logical framework
• ConceptNet is openly available to the public
• The largest difference is how ConceptNet gets its data
  • It uses crowdsourcing to collect data from the general public, not just from knowledge engineers
Open Mind Common Sense (OMCS)
• Developed at MIT in 1999
• Inspired by the success of other collaborative projects on the Web
• A website containing 30 activities
  • Each collecting simple commonsense assertions
• Quickly gathered over 700,000 sentences from over 14,000 contributors

CRIS
• Commonsense Robust Inference System
• Earliest precursor to ConceptNet
• Tried to extract taxonomic, spatial, functional, causal, and emotional knowledge from OMCS to populate a semantic network
• Introduced the idea of letting users provide information in semi-structured natural language instead of the directly engineered structures Cyc used

OMCSNet
• A semantic network built on top of CRIS
• Had a small three-function API
• Used in many MIT Media Lab projects

ConceptNet
• ConceptNet was the next iteration
• Added a system for weighting knowledge
  • How many times it appears in the OMCS corpus
  • How well it can be inferred indirectly from other facts
• Multiple assertions can be inferred from a single OMCS sentence
• Addition of natural-language tools such as MontyLingua

ConceptNet 5
• A lot has changed since 2004
• Many structural changes have occurred in each version
• We will discuss the current structure in a bit
• Many new data sources
• Development is currently led by Rob Speer and Catherine Havasi

Current Sources
• Still uses OMCS
• WordNet
• English Wikipedia
• DBpedia
• ReVerb (removed in v5.2)
• Even games
  • The GWAP project’s game Verbosity
  • The Japanese game nadya.jp
• English Wiktionary
  • Synonyms
  • Antonyms
  • Translations
  • Labeled word senses

Assertions
• Two concepts connected by a relation and justified by sources

Types of Relations
• IsA
• Desires
• UsedFor
• MadeOf
• RelatedTo
• CapableOf
• AtLocation
• TranslationOf
• HasA
• InheritsFrom
• DefinedAs
• LocatedNear
• CreatedBy
• Synonym
• HasProperty
• Antonym
• DerivedFrom
• ConceptuallyRelatedTo
• MotivationOf
• EffectOf

Structure
• ConceptNet is represented as a hypergraph
• Edges in a hypergraph (hyperedges) are sets that can contain an arbitrary number of nodes
• A hypergraph is k-uniform if every hyperedge contains exactly k nodes

Structure: Concepts & Predicates
Concepts
• Words and phrases
• Represented as nodes
Predicates
• The set of relations, such as IsA or HasA
• Also represented as nodes

Structure: Assertions
• Represented by edges that connect multiple nodes in the graph (concepts and relations)
• Justified by other assertions, knowledge sources, and processes
• An edge is an instance of an assertion. Multiple edges can represent the same assertion.
• An assertion is a bundle of edges
• Think of each edge as one way that the assertion was learned

Structure: Sources
• Sources justify each assertion
• Each edge contains a conjunction of sources that justify that edge
  • $S_1 \land S_2 \land S_3$
• The sources that justify an assertion are represented by a disjunction of conjunctions
  • $(S_1 \land S_2 \land S_3) \lor (S_4 \land S_5) \lor (S_6 \land S_7)$

Structure: Source Score
• Each conjunction has a score
  • A more positive score gives more confidence that the assertion is true
  • A more negative score gives more confidence that the assertion is false
• A negative score doesn’t mean that the negation of the assertion is true
  • Consider “Pigs cannot fly”
  • It should be represented with a negated relation such as NotCapableOf
• Scoring each conjunction lets credit be assigned across the multi-part process that created the assertion

Web Frontend
• http://conceptnet5.media.mit.edu/

Using ConceptNet
• JSON REST API
• Running it locally
  • Can choose which data to use and add more data
• All the data is available as downloads for convenience
  • Flat JSON file
  • Solr JSON file
  • CSV

REST
• Representational State Transfer
• Defined by 6 constraints
  • Client-server
  • Stateless
  • Cacheable
  • Layered system
  • Code on demand (optional)
  • Uniform interface

REST Web API
• Has a base URL, e.g. http://mysite.com/api/
• Uses an Internet media type such as JSON, XML, or YAML
• Supports HTTP methods (GET, PUT, POST, and DELETE)
• Hypertext driven

REST Web API
• ConceptNet only allows GET requests
• The API uses JSON

JSON
• JavaScript Object Notation
• Text-based open standard designed for human-readable data interchange

JSON Edge Data
• id: the unique ID for this edge, which contains a SHA-1 hash of the information that makes it unique.
• uri: the URI of the assertion being expressed. The uri is not necessarily unique, because many edges can bundle together to express the same assertion.
• rel: the URI of the predicate (relation) of this assertion.
• start: the URI of the first argument of the assertion.
• end: the URI of the second argument of the assertion.
• weight: the strength with which this edge expresses this assertion. A typical weight is 1, but weights can be higher, lower, or even negative.
• sources: the sources that, when combined, say that this assertion should be true (or not true, if the weight is negative).

JSON Edge Data
• license: a URI representing the Creative Commons license that governs this data. See Copying and sharing ConceptNet.
• dataset: a URI representing the dataset, or the batch of data from a particular source that created this edge.
• context: the URI of the context in which this statement is said to be true.
• features: a list of three identifiers for features, which are essentially assertions with one of their three components missing. These can be useful in machine learning for inferring missing data.
• surfaceText: the original natural language text that expressed this statement. May be null, because not every statement was derived from natural language input. The locations of the start and end concepts are marked by surrounding them with double brackets. An example of a surfaceText is "[[a cat]] is [[an animal]]".

JSON Edge Data Example

ConceptNet Web API
• Lookups
  • http://conceptnet5.media.mit.edu/data/5.1
• Search
  • http://conceptnet5.media.mit.edu/data/5.1/search
• Association
  • http://conceptnet5.media.mit.edu/data/5.1/assoc

Lookup Base URIs
• /a/: assertions
• /c/: concepts (words, disambiguated words, and phrases, in a particular language)
• /ctx/: contexts in which assertions can be true, if they're language independent
• /d/: datasets
• /e/: unique, arbitrary IDs for edges. Edges that assert the same thing combine to form assertions.
• /l/: license terms for redistributing the information in an edge
• /r/: language-independent relations, such as /r/IsA
• /s/: knowledge sources, which can be human contributors, Web sites, or automated processes

Let’s Try Some Lookups
• http://conceptnet5.media.mit.edu/data/5.1/c/en/dragon

Example Search
• Searching for 10 things that are part of a car
• http://conceptnet5.media.mit.edu/data/5.1/search?rel=/r/PartOf&end=/c/en/car&limit=10

Association
• Finds concepts similar to a given concept or term list
• Can specify the GET parameter filter to only return results that start with the given URI

Examples
• Measure how similar dogs and cats are
  • http://conceptnet5.media.mit.edu/data/5.1/assoc/c/en/cat?filter=/c/en/dog&limit=1
• Find terms associated with these breakfast foods
  • http://conceptnet5.media.mit.edu/data/5.1/assoc/list/en/toast,cereal,juice@0.5,egg

Conclusion
• It is just common sense
• Easy, right?

Questions?

Sources
• http://csc.media.mit.edu/conceptnet
• http://conceptnet5.media.mit.edu/
• http://en.wikipedia.org/wiki/Open_Mind_Common_Sense
• http://web.media.mit.edu/~hugo/publications/papers/BTTJ-ConceptNet.pdf
• http://en.wikipedia.org/wiki/Representational_state_transfer
• http://en.wikipedia.org/wiki/Hypergraph
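The Structure slides describe each edge as carrying a conjunction of sources, and an assertion as a bundle of edges whose justification is a disjunction of those conjunctions. The Python sketch below shows that bookkeeping under stated assumptions: it groups edge records by their uri field (the JSON field naming the assertion an edge expresses) and renders the justification in disjunctive normal form. The edge records, URIs, weights and source names S1 to S8 are hypothetical, and the per-edge scores are only listed, not combined, because the slides do not say how ConceptNet aggregates them.

```python
from collections import defaultdict

# Hypothetical edge records: field names follow the JSON edge data slides,
# but every value (URIs, source names, weights) is invented for illustration.
EDGES = [
    {"uri": "/a/example-cat-isa-animal", "weight": 1.0, "sources": ["S1", "S2", "S3"]},
    {"uri": "/a/example-cat-isa-animal", "weight": 2.0, "sources": ["S4", "S5"]},
    {"uri": "/a/example-cat-isa-animal", "weight": 0.5, "sources": ["S6", "S7"]},
    {"uri": "/a/example-pig-capableof-fly", "weight": -1.0, "sources": ["S8"]},
]


def bundle_edges(edges):
    """Group edges by assertion URI: each assertion is a bundle of edges."""
    bundles = defaultdict(list)
    for edge in edges:
        bundles[edge["uri"]].append(edge)
    return dict(bundles)


def justification(bundle):
    """One parenthesized conjunction of sources per edge, joined by OR,
    i.e. the assertion's sources in disjunctive normal form."""
    return " ∨ ".join("(" + " ∧ ".join(edge["sources"]) + ")" for edge in bundle)


def scored_conjunctions(bundle):
    """Pair each conjunction with its edge's score: a positive score supports
    the assertion, a negative score counts against it."""
    return [(tuple(edge["sources"]), edge["weight"]) for edge in bundle]


if __name__ == "__main__":
    for uri, bundle in bundle_edges(EDGES).items():
        print(uri, justification(bundle), scored_conjunctions(bundle))
```

For the three cat edges, justification returns "(S1 ∧ S2 ∧ S3) ∨ (S4 ∧ S5) ∨ (S6 ∧ S7)", the same shape as the disjunction of conjunctions on the Structure: Sources slide.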
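On the JSON Edge Data slides, surfaceText marks the start and end concepts with double brackets, and features holds the assertion with each of its three components missing in turn. The Python sketch below parses one edge and derives both. The edge JSON is a hypothetical example built around the slides’ "[[a cat]] is [[an animal]]" surface text, and the (start, rel, end) tuples with None for the missing component are an illustrative representation, not ConceptNet’s own feature identifier format.

```python
import json
import re

# Hypothetical edge in the JSON shape described on the slides.
EDGE_JSON = """
{
  "rel": "/r/IsA",
  "start": "/c/en/cat",
  "end": "/c/en/animal",
  "weight": 1.0,
  "surfaceText": "[[a cat]] is [[an animal]]"
}
"""

# Non-greedy match of each [[...]] span in the surface text.
BRACKETED = re.compile(r"\[\[(.+?)\]\]")


def surface_arguments(edge):
    """Return the bracketed phrases in order, or None if surfaceText is null."""
    text = edge.get("surfaceText")
    if text is None:
        return None
    return tuple(BRACKETED.findall(text))


def plain_surface_text(edge):
    """Surface text with the double-bracket markers stripped."""
    text = edge.get("surfaceText")
    return None if text is None else BRACKETED.sub(r"\1", text)


def features(edge):
    """The assertion as (start, rel, end) with one component blanked out
    (None) in turn; an illustrative stand-in for the features field."""
    start, rel, end = edge["start"], edge["rel"], edge["end"]
    return [(None, rel, end), (start, None, end), (start, rel, None)]


if __name__ == "__main__":
    edge = json.loads(EDGE_JSON)
    print(surface_arguments(edge))   # ('a cat', 'an animal')
    print(plain_surface_text(edge))  # a cat is an animal
    print(features(edge))
```

Handling a null surfaceText explicitly matters because, as the slide notes, not every statement was derived from natural language input.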
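Because ConceptNet only allows GET requests, the lookup, search, and association examples on the slides are just URLs, and they can be assembled programmatically. The Python sketch below rebuilds those example URLs with the standard library. The helper names (lookup_url, search_url, assoc_url, fetch_json) are hypothetical, and the 5.1 host shown on the slides may no longer be online, so treat this as an illustration of the URL scheme rather than a working client.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Base URL as given on the slides (ConceptNet 5.1); it may no longer respond.
BASE = "http://conceptnet5.media.mit.edu/data/5.1"


def _query(params: dict) -> str:
    # Keep "/" unescaped so URI-valued parameters such as /r/PartOf stay readable.
    return urlencode(params, safe="/")


def lookup_url(uri: str) -> str:
    """Lookup: append a base URI such as /c/en/dragon to the API root."""
    return BASE + uri


def search_url(**params) -> str:
    """Search: filters such as rel, end and limit become GET parameters."""
    return BASE + "/search?" + _query(params)


def assoc_url(target: str, **params) -> str:
    """Association: target is a concept URI (/c/en/cat) or a term list
    (/list/en/toast,cereal); optional params such as filter and limit."""
    url = BASE + "/assoc" + target
    return url + ("?" + _query(params) if params else "")


def fetch_json(url: str, timeout: float = 10.0):
    """Issue the GET request and decode the JSON response body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(lookup_url("/c/en/dragon"))
    print(search_url(rel="/r/PartOf", end="/c/en/car", limit=10))
    print(assoc_url("/c/en/cat", filter="/c/en/dog", limit=1))
    print(assoc_url("/list/en/toast,cereal,juice@0.5,egg"))
```

Each call in the main block reproduces one of the slide URLs exactly; passing the result to fetch_json would perform the request, assuming the endpoint is still reachable.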