Approaches to POS Tagging

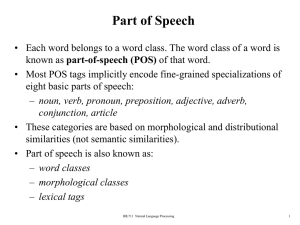

Part of Speech Tagging

Importance
• Resolving ambiguities by assigning lower probabilities to words that don’t fit
• Applying grammatical rules to language to parse the meanings of sentences and phrases

Part of speech tagging determines a word’s lexical class based on context.

Approaches to POS Tagging
• Initialize and maintain tagging criteria
  – Supervised: uses pre-tagged corpora
  – Unsupervised: automatically induces classes using probability and learning algorithms
  – Partially supervised: combines the above approaches
• Algorithms
  – Rule based: use pre-defined grammatical rules
  – Stochastic: use HMMs and other probabilistic algorithms
  – Neural: use neural nets to learn the probabilities

Example

The man ate the fish on the boat in the morning

| Word | Tag |
|---|---|
| The | Determiner |
| Man | Noun |
| Ate | Verb |
| The | Determiner |
| Fish | Noun |
| On | Preposition |
| The | Determiner |
| Boat | Noun |
| In | Preposition |
| The | Determiner |
| Morning | Noun |

Word Class Categories

Note: a personal pronoun is often tagged PRP, a possessive pronoun often PRP$.

Word Classes
• Open (classes that frequently spawn new words)
  – Common nouns, verbs, adjectives, adverbs
• Closed (classes that don’t often spawn new words)
  – prepositions: on, under, over, …
  – particles: up, down, on, off, …
  – determiners: a, an, the, …
  – pronouns: she, he, I, who, …
  – conjunctions: and, but, or, …
  – auxiliary verbs: can, may, should, …
  – numerals: one, two, three, third, …

Particle: an uninflected item with a grammatical function but without clearly belonging to a major part of speech. Example: He looked up the word.

The Linguistics Problem
• Words are often in multiple classes.
• Example: this
  – This is a nice day = pronoun
  – This day is nice = determiner
  – You can go this far = adverb
• Accuracy
  – 96-97% is a baseline for new algorithms
  – 100% is impossible even for human annotators

| Number of tags | Words |
|---|---|
| Unambiguous | 35,340 |
| 2 tags | 3,760 |
| 3 tags | 264 |
| 4 tags | 61 |
| 5 tags | 12 |
| 6 tags | 2 |
| 7 tags | 1 |

(DeRose, 1988)

Rule-Based Tagging
• Basic Idea:
  – Assign all possible tags to words
  – Remove tags according to a set of rules
    o Example rule:
      IF word+1 is an adjective, adverb, or quantifier ending a sentence
      IF word-1 is not a verb like “consider”
      THEN eliminate non-adverb
      ELSE eliminate adverb
  – There are more than 1000 hand-written rules

Stage 1: Rule-Based Tagging
• First stage: FOR each word, get all possible parts of speech using a morphological analysis algorithm
• Example

| Word | Possible tags |
|---|---|
| She | PRP |
| promised | VBN, VBD |
| to | TO |
| back | NN, RB, JJ, VB |
| the | DT |
| bill | VB, NN |

Stage 2: Rule-Based Tagging
• Apply rules to remove possibilities
• Example rule: IF VBD is an option and VBN|VBD follows “<start>PRP” THEN eliminate VBN

| Word | Remaining tags |
|---|---|
| She | PRP |
| promised | VBD (VBN eliminated) |
| to | TO |
| back | NN, RB, JJ, VB |
| the | DT |
| bill | VB, NN |

Stochastic Tagging
• Use the probability of a certain tag occurring given the various possibilities
• Requires a training corpus
• Problems to overcome
  – Need an algorithm to assign a type to words that are not in the corpus
  – Naive Method
    • Choose the most frequent tag in the training text for each word
    • Result: 90% accuracy

HMM Stochastic Tagging
• Intuition: pick the most likely tag based on context
• Maximize the formula using an HMM
  – P(word|tag) × P(tag|previous n tags)
• Observed: W = w1, w2, …, wn
• Hidden: T = t1, t2, …, tn
• Goal: find the tag sequence that most likely generated the sequence of words

Transformation-Based Tagging (TBL) (Brill Tagging)
• Combines the rule-based and stochastic tagging approaches
  – Uses rules to guess at tags
  – Uses machine learning with a tagged corpus as input
• Basic Idea: later rules correct errors made by earlier rules
  – Set the most probable tag for each word as a start value
  – Change tags according to rules of the type: IF word-1 is a determiner and word is a verb THEN change the tag to noun
• Training uses a tagged corpus
  – Step 1: Write a set of rule templates
  – Step 2: Order the rules based on corpus accuracy

TBL: The Algorithm
• Step 1: Use a dictionary to label every word with its most likely tag
• Step 2: Select the transformation rule that most improves the tagging
• Step 3: Re-tag the corpus by applying the rules
• Repeat steps 2-3 until accuracy reaches a threshold
• RESULT: a sequence of transformation rules

TBL: Problems
• Problems
  – Infinite loops are possible, and rules may interact
  – Training and execution are slower than with an HMM
• Advantages
  – It is possible to constrain the set of transformations with “templates”:
    IF tag Z or word W is in position *-k THEN replace tag X with tag
  – Learns a small number of simple, non-stochastic rules
  – Speed optimizations are possible using finite state transducers
  – TBL is the best performing algorithm on unknown words
  – The rules are compact and can be inspected by humans
• Accuracy
  – First 100 rules achieve 96.8% accuracy
  – First 200 rules achieve 97.0% accuracy

Neural Network

A digital approximation of biological neurons.

Digital Neuron

[Figure: a digital neuron. Each input is multiplied by a weight (W), the weighted inputs are summed (Σ), and the sum is passed through an activation function f(n) to produce the outputs.]

Transfer Functions
• Sigmoid: $f(n) = \frac{1}{1 + e^{-n}}$
• Linear: $f(n) = n$

Networks without feedback

[Figures: multiple inputs and a single layer; multiple inputs and multiple layers.]

Feedback (Recurrent Networks)

[Figure: a network with feedback connections.]

Supervised Learning

[Figure: inputs from the environment go to both the actual system, which gives the expected output, and the neural network, which gives the actual output. The difference between the two (+/− into Σ) is the training error.]

Run a set of training data through the network and compare the outputs to the expected results. Backpropagate the errors to update the neural weights until the outputs match what is expected.

Multilayer Perceptron

Definition: a network of neurons in which the output(s) of some neurons are connected through weighted connections to the input(s) of other neurons.

[Figure: inputs, first hidden layer, second hidden layer, output layer.]

Backpropagation of Errors

[Figure: function signals and error signals flowing through the network.]
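
To make the supervised learning loop above concrete, here is a minimal sketch in Python. It trains a single sigmoid neuron, not a full multilayer perceptron, so the error is used directly at the output instead of being propagated back through hidden layers. The task (logical OR), the learning rate, the epoch count, and all function names are assumptions chosen for illustration; none of them come from the slides.

```python
import math


def sigmoid(n):
    """Sigmoid transfer function: f(n) = 1 / (1 + e^-n)."""
    return 1.0 / (1.0 + math.exp(-n))


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the sigmoid."""
    n = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(n)


def train(samples, epochs=5000, rate=0.5):
    """Compare actual and expected outputs and nudge the weights to shrink the error.

    epochs and rate are illustrative values, not tuned settings.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, expected in samples:
            actual = predict(weights, bias, inputs)
            error = expected - actual
            # Error scaled by the sigmoid's slope at the current output.
            delta = error * actual * (1.0 - actual)
            weights = [w + rate * delta * x for w, x in zip(weights, inputs)]
            bias += rate * delta
    return weights, bias


# Training data: logical OR (an assumed toy task).
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(OR_SAMPLES)
predictions = [round(predict(weights, bias, x)) for x, _ in OR_SAMPLES]
print(predictions)
```

After training, rounding each output at 0.5 should reproduce the OR targets. A multilayer perceptron would apply the same kind of update layer by layer, passing the error signal backward from the output layer to the hidden layers.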