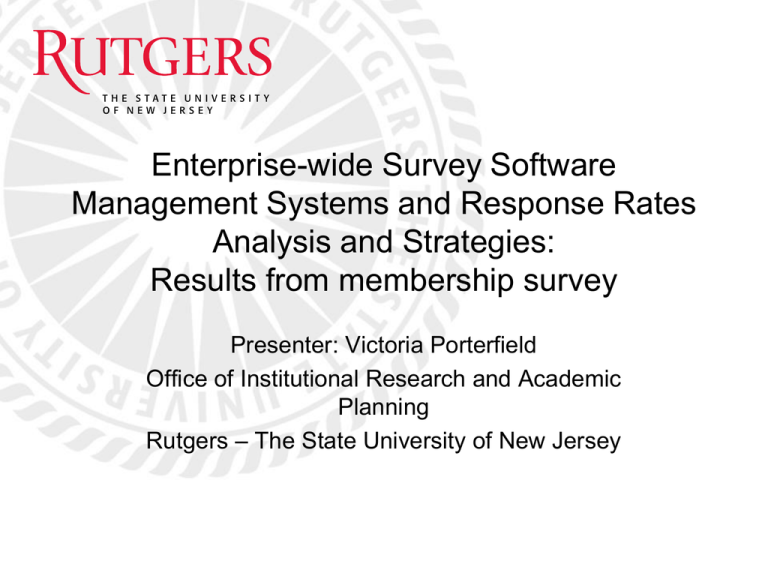

Response Rates for schools based on access restrictions/coordination


Enterprise-wide Survey Software Management Systems and Response Rates
Analysis and Strategies: Results from membership survey

Presenter: Victoria Porterfield
Office of Institutional Research and Academic Planning
Rutgers – The State University of New Jersey

Enterprise-wide Survey Software and Response Rates

Overview of Declining Response Rates
• Much of the research on survey response rates, including research on higher education surveys, has documented declining response rates.
  – Fosnacht, Sarraf, Howe, & Peck (2013)
  – National Research Council (2013)
  – National Survey of Student Engagement (2014)
  – Sax, Gilmartin, & Bryant (2003)
• Web surveys have particularly suffered in recent years.
  – Dillman, Phelps, Tortora, Swift, Kohrell, Berck, & Messer (2009)
  – Manfreda, Bosnjak, Berzelak, Haas, & Vehovar (2008)
  – Petchenik & Watermolen (2011)

Why was this study done?
• Overall, response rates for SERU have declined over time.
• Two possible contributors:
  – General survey fatigue
  – Enterprise-wide survey software, which has become increasingly available at higher education institutions in recent years

General survey fatigue
[Figure: Response Rates of Institutions from 2010-2014. Two line charts, one by institution (Rutgers, Texas A&M, Pittsburgh, Washington, Iowa, Minnesota) and one for all participating schools. Y-axis: response rate, 0.00 to 0.50. X-axis: year, 2010 to 2014.]

Enterprise-wide survey software
• Enterprise-wide survey software has advantages:
  – It makes social science research easier to develop
  – It makes social science research cheaper to develop
  – Many institutions use the same products (e.g., Qualtrics), which makes it easier to collaborate on surveys with other institutions
• But…
  – With increased access to survey software, more surveys are administered, which can contribute to survey fatigue

Enterprise-wide survey software
• How do response rates differ before and after the survey software became more widely available?
[Figure: Response Rates of Institutions before and after University License. Two charts, one showing averages and one by year, each by institution (Rutgers, Texas A&M, Pittsburgh, Washington, Iowa, Minnesota). Y-axis: response rate, 0.00 to 0.50. X-axis: Before, After.]

Enterprise-wide survey software
• But can't these differences be attributed to general survey fatigue? Certainly!
• Therefore, access to each institution's enterprise-wide survey software was evaluated.
• Three schools have "high coordination" efforts:
  – Clear and prominent terms of use policy
  – Formal survey coordination committee
  – Limits on access
• Three schools have "low coordination" efforts:
  – May require IRB approval, but access is otherwise available

Enterprise-wide survey software
• 2-sample t-tests revealed significant differences between the groups.
[Figure: Response Rates for schools based on access restrictions/coordination. Bar chart, y-axis 0.00 to 0.50. Before: 35.37%. After - High coordination: 28.63%. After - Low coordination: 25.43%.]

Conclusions
• For the most part, SERU response rates are declining.
  – General survey fatigue is hurting response rates
  – Enterprise-wide survey software may be hurting response rates if coordination/restrictions are not employed across campus
• Further research with more institutions is recommended to learn more about the intertwined relationship among survey fatigue, enterprise-wide software, and response rates.

Questions? Comments?
• Special thanks to Iowa, Minnesota, Pittsburgh, Texas A&M, and Washington for participating in this study
• Contact information: Victoria Porterfield
  Email: porterfield@instlres.rutgers.edu

References
Dillman, D. A., Phelps, G., Tortora, R., Swift, K., Kohrell, J., Berck, J., & Messer, B. L. (2009). Response rate and measurement differences in mixed-mode surveys using mail, telephone, interactive voice response (IVR) and the Internet. Social Science Research, 38, 1–18.
Fosnacht, K., Sarraf, S., Howe, E., & Peck, L. (2013). How important are high response rates for college surveys? Paper presented at the annual forum of the Association for Institutional Research, Long Beach, CA.
Manfreda, K. L., Bosnjak, M., Berzelak, J., Haas, I., & Vehovar, V. (2008). Web surveys versus other survey modes: A meta-analysis comparing response rates. International Journal of Market Research, 50(1), 79–104.
National Research Council. (2013). Nonresponse in social science surveys: A research agenda. Washington, DC: The National Academies Press.
National Survey of Student Engagement. (2014). NSSE 2014 U.S. response rates by institutional characteristics.
Petchenik, J., & Watermolen, D. J. (2011). A cautionary note on using the Internet to survey recent hunter education graduates. Human Dimensions of Wildlife, 16(3), 216–218.
Sax, L. J., Gilmartin, S. K., & Bryant, A. N. (2003). Assessing response rates and nonresponse bias in web and paper surveys. Research in Higher Education, 44(4), 409–432.
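
Illustrative note on the group comparison: the slides report that 2-sample t-tests found significant differences between the groups, but they do not give the per-institution rates or say which variant of the test was used. The sketch below shows how such a comparison could be computed, assuming Welch's unequal-variance version. The function name `welch_t` and the response rates passed to it are hypothetical placeholders, not data from the study.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each group mean (sample variance uses n - 1).
    se2_a = statistics.variance(sample_a) / n_a
    se2_b = statistics.variance(sample_b) / n_b
    # Difference in means divided by the combined standard error.
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical placeholder response rates, NOT the study's data:
# three "high coordination" schools and three "low coordination" schools.
group_high = [0.30, 0.32, 0.34]
group_low = [0.20, 0.22, 0.24]

t_stat, dof = welch_t(group_high, group_low)
print(f"t = {t_stat:.3f}, df = {dof:.1f}")
```

Turning the statistic into a p-value requires the t distribution, which the Python standard library does not provide. If SciPy is available, `scipy.stats.ttest_ind(group_high, group_low, equal_var=False)` performs the same test and returns the p-value directly. With only three schools per group, any such test has little power, which is consistent with the presenter's call for more institutions.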