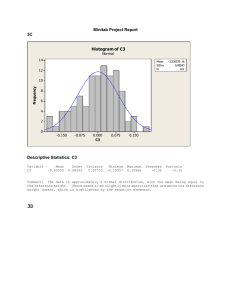

Module 8
Regression Analysis Model Building

Regression Analysis Model Building
• General Linear Model
• Determining When to Add or Delete Variables
• Analysis of a Larger Problem
• Variable-Selection Procedures
• Residual Analysis
• Multiple Regression Approach to Analysis of Variance and Experimental Design

General Linear Model
• Models in which the parameters ($\beta_0, \beta_1, \ldots, \beta_p$) all have exponents of one are called linear models.
• A general linear model involving p independent variables is
$$y = \beta_0 + \beta_1 z_1 + \beta_2 z_2 + \cdots + \beta_p z_p + \varepsilon$$
• Each of the independent variables $z_j$ is a function of $x_1, x_2, \ldots, x_k$ (the variables for which data have been collected).

General Linear Model
• The simplest case is when we have collected data for just one variable $x_1$ and want to estimate y by using a straight-line relationship. In this case $z_1 = x_1$.
• This model is called a simple first-order model with one predictor variable.
$$y = \beta_0 + \beta_1 x_1 + \varepsilon$$

Modeling Curvilinear Relationships
• To account for a curvilinear relationship, we might set $z_1 = x_1$ and $z_2 = x_1^2$.
• This model is called a second-order model with one predictor variable (quadratic).
$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_1^2 + \varepsilon$$

Second Order or Quadratic
• Quadratic functional forms take on a U shape or an inverted-U shape, depending on the values of the coefficients.
$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_1^2 + \varepsilon$$
• The curve is U-shaped when $\beta_2 > 0$ and an inverted U when $\beta_2 < 0$.

Second Order or Quadratic
• For example, consider the relationship between earnings and age: earnings would rise, level out, and then fall as age increased.
$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_1^2 + \varepsilon, \qquad \beta_1 > 0 \text{ and } \beta_2 < 0$$

Interaction
• If the original data set consists of observations for y and two independent variables $x_1$ and $x_2$, we might develop a second-order model with two predictor variables.
$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1^2 + \beta_4 x_2^2 + \beta_5 x_1 x_2 + \varepsilon$$
• In this model, the variable $z_5 = x_1 x_2$ is added to account for the potential effects of the two variables acting together.
• This type of effect is called interaction.

Model Assumptions
• Assumptions About the Error Term $\varepsilon$
– The error $\varepsilon$ is a random variable with mean of zero.
– The variance of $\varepsilon$, denoted by $\sigma^2$, is the same for all values of the independent variables.
– The values of $\varepsilon$ are independent.
– The error $\varepsilon$ is a normally distributed random variable reflecting the deviation between the y value and the expected value of y given by $\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p$.

Autocorrelation or Serial Correlation
• Serial correlation, or autocorrelation, is the violation of the assumption that different observations of the error term are uncorrelated with each other. It occurs most frequently in time series data sets. In practice, serial correlation implies that the error term from one time period depends in some systematic way on error terms from other time periods.

Residual Analysis: Autocorrelation
• With positive autocorrelation, we expect a positive residual in one period to be followed by a positive residual in the next period.
• With positive autocorrelation, we expect a negative residual in one period to be followed by a negative residual in the next period.
• With negative autocorrelation, we expect a positive residual in one period to be followed by a negative residual in the next period, then a positive residual, and so on.

Residual Analysis: Autocorrelation
• When autocorrelation is present, one of the regression assumptions is violated: the error terms are not independent.
• When autocorrelation is present, serious errors can be made in performing tests of significance based upon the assumed regression model.
• The Durbin-Watson statistic can be used to detect first-order autocorrelation.

Residual Analysis: Autocorrelation
Durbin-Watson Test for Autocorrelation
• Statistic
$$d = \frac{\sum_{t=2}^{n} (e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2}$$
• The statistic ranges in value from zero to four.
• If successive values of the residuals are close together (positive autocorrelation), the statistic will be small.
• If successive values are far apart (negative autocorrelation), the statistic will be large.
• A value of two indicates no autocorrelation.
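The Durbin-Watson statistic described above can be computed directly from a series of residuals. The following is a minimal sketch in Python, assuming the residuals are already in time order. The function name `durbin_watson` and both sample series are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def durbin_watson(residuals: Sequence[float]) -> float:
    """Sum of squared successive differences divided by the sum of squared residuals."""
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    sse = sum(e * e for e in residuals)
    if sse == 0:
        raise ValueError("all residuals are zero; d is undefined")
    # Numerator runs over t = 2..n: (e_t - e_{t-1})^2
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    return numerator / sse


# Hypothetical residual series (not from the slides).
trending = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]     # signs persist: positive autocorrelation
alternating = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]  # signs flip: negative autocorrelation

print(round(durbin_watson(trending), 3))     # 0.667, well below 2
print(round(durbin_watson(alternating), 3))  # 3.333, well above 2
```

The persistent series gives a value near zero and the alternating series a value near four, matching the interpretation of small and large values of d.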
Durbin Watson

| Observation | Residual $e_t$ | $e_t - e_{t-1}$ | $(e_t - e_{t-1})^2$ |
|---|---|---|---|
| 1 | -3 | | |
| 2 | 1 | 4 | 16 |
| 3 | -2 | -3 | 9 |
| 4 | 2 | 4 | 16 |
| 5 | 2 | 0 | 0 |
| Sum | | | 41 |

SSE = 22, so d = 41 / 22 = 1.86

General Linear Model
Often the problem of nonconstant variance can be corrected by transforming the dependent variable to a different scale.
• Logarithmic Transformations
Most statistical packages provide the ability to apply logarithmic transformations using either base 10 (common log) or base e = 2.71828... (natural log).
• Reciprocal Transformation
Use 1/y as the dependent variable instead of y.

Transforming y
• If the plot of residuals versus $\hat{y}$ is convex up, lower the power on y.
• If the plot of residuals versus $\hat{y}$ is convex down, increase the power on y.
• Examples, in order of increasing power: $-1/y^2$; $-1/y$; $-1/y^{0.5}$; $\log y$; $y$; $y^2$; $y^3$
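The power transformations listed above can be sketched as a small helper that applies one chosen power to the dependent variable. This is an illustrative sketch, not a prescribed procedure. The function name `ladder_transform` is hypothetical, y is assumed to be strictly positive, and the natural log is used for power 0 (an assumption; a base-10 log differs only by a constant factor).

```python
import math
from typing import List, Sequence


def ladder_transform(y: Sequence[float], power: float) -> List[float]:
    """Apply one power transformation to strictly positive y values.

    power < 0 -> -(y ** power), e.g. -1/y for power = -1 (the minus sign keeps the ordering)
    power = 0 -> log(y)  (natural log, assumed here)
    power > 0 -> y ** power
    """
    if any(v <= 0 for v in y):
        raise ValueError("y must be strictly positive")
    if power == 0:
        return [math.log(v) for v in y]
    if power < 0:
        return [-(v ** power) for v in y]
    return [v ** power for v in y]


# Hypothetical y values for illustration.
y = [1.0, 4.0, 9.0, 16.0]
for p in (-2, -1, -0.5, 0, 1, 2, 3):
    print(p, [round(v, 4) for v in ladder_transform(y, p)])
```

After choosing a power, the regression would be refit with the transformed values as the dependent variable and the residual plot checked again.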