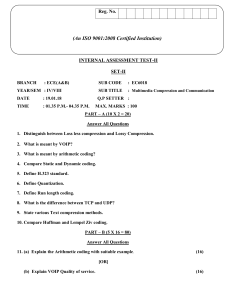

Module V

Image Compression Fundamentals in Digital Image Processing

Introduction

What is Image Compression?

• Image compression is the art and science of reducing the amount of data required to represent an image.

• A two-hour standard-definition (SD) television movie stored as 720×480 pixel arrays with 24 bits per pixel needs about 224 GB of data, assuming 30 frames per second (30 × 720 × 480 × 3 bytes ≈ 31 MB per second, for 7200 seconds).

• Images are compressed to save storage space and reduce transmission time.

Image compression fundamentals

What is Data Compression?

• Data compression refers to the process of reducing the amount of data

required to represent a given quantity of information.

• Data contains irrelevant or repeated information called redundant data.

Types of Compression

Lossless compression

• This does not lose any information.

• E.g. run-length encoding, Huffman coding, Lempel-Ziv-Welch (LZW) coding, etc.

Lossy compression

• This loses part of the information in a controlled way.

• E.g. JPEG, MPEG, MP3, etc.

Image compression fundamentals

• Let b and b′ denote the number of bits in two representations of the same information. The relative data redundancy R of the representation with b bits is

R = 1 - 1/CR

where CR, commonly called the compression ratio, is defined as

CR = b/b′

• If b = b′, then CR = 1 and R = 0: there is no data redundancy.

• If b >> b′, then CR >> 1 and R ≅ 1: the data is highly redundant.

• CR = 10 (or 10:1), for instance, means the larger representation has 10 bits of data for every 1 bit of data in the smaller representation.

• The corresponding relative data redundancy of the larger representation is 0.9, indicating that 90% of its data is redundant.
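The two definitions can be checked numerically. The following is a minimal sketch; the function names and the bit counts are illustrative assumptions, not part of the slides.

```python
def compression_ratio(b: int, b_prime: int) -> float:
    """CR = b / b', where b is the larger and b' the smaller bit count."""
    return b / b_prime


def relative_redundancy(cr: float) -> float:
    """R = 1 - 1/CR, the fraction of the larger representation that is redundant."""
    return 1.0 - 1.0 / cr


# Hypothetical bit counts chosen so that CR = 10, as in the 10:1 example.
cr = compression_ratio(10_000, 1_000)
r = relative_redundancy(cr)
print(cr, r)
```

With CR = 10 the redundancy comes out as 0.9, and with equal bit counts (CR = 1) it is 0.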

Principal types of data redundancies

Coding redundancy

◦ Each piece of information or event is assigned a sequence of code symbols, called a code word.

◦ The number of symbols in each code word is its length.

Spatial and temporal redundancy

◦ Pixels of most 2-D intensity arrays are correlated spatially (i.e. each pixel is similar to or dependent on neighboring pixels).

◦ Information is unnecessarily replicated in the representations of the correlated pixels.

Irrelevant information

◦ Most 2-D intensity arrays contain information that is ignored by the human visual system and/or extraneous to the intended use of the image.

◦ It is redundant in the sense that it is not used.

Image compression Model (Encoder)

• Encoder: is the device that is used for compression.

• Mapper: The function of the mapper is to reduce the spatial or

temporal redundancy. The operation is generally reversible. e.g. DCT.

• Quantizer: It reduces the accuracy of the mapper’s output in accordance with some pre-established fidelity criterion. It reduces the psychovisual redundancies and is an irreversible process.

• Symbol coder: creates a fixed- or variable-length code to represent

the quantizer output and maps the output in accordance with the code.

The term symbol coder distinguishes this coding operation from the

overall source encoding process. In most cases, a variable-length code is

used to represent the mapped and quantized data set. e.g. VLE (Variable

Length Encoder).

• Channel: May be wired or wireless.

Image compression Model (Decoder)

• Decoder: is the device that is used for decompression.

• Symbol decoder: Performs the reverse operation of the symbol coder. It

decodes the code back. e.g. VLD (Variable Length Decoder).

• Inverse mapper: Performs the reverse operation of the mapper. It restores the spatial or temporal redundancy in the image, e.g. the inverse DCT (DCT⁻¹).

• The Channel Encoder and Decoder: The channel encoder and decoder play

an important role in the overall encoding-decoding process when the channel is

noisy or prone to error. They are designed to reduce the impact of channel

noise by inserting a controlled form of redundancy into the source encoded

data. As the output of the source encoder contains little redundancy, it would

be highly sensitive to transmission noise without the addition of this "controlled

redundancy."

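Entropy worked example (nine symbols, 16 samples in total):

| # | Count | Probability p | log2 p | p · log2 p |
|---|---|---|---|---|
| 1 | 2 | 2/16 = 0.125 | -3 | -0.375 |
| 2 | 1 | 1/16 = 0.0625 | -4 | -0.25 |
| 3 | 3 | 3/16 = 0.1875 | -2.4150374992788 | -0.452819531 |
| 4 | 2 | 2/16 = 0.125 | -3 | -0.375 |
| 5 | 2 | 2/16 = 0.125 | -3 | -0.375 |
| 6 | 2 | 2/16 = 0.125 | -3 | -0.375 |
| 7 | 1 | 1/16 = 0.0625 | -4 | -0.25 |
| 8 | 1 | 1/16 = 0.0625 | -4 | -0.25 |
| 9 | 2 | 2/16 = 0.125 | -3 | -0.375 |

Entropy = -Σ p · log2 p = 3.0778 bits/symbol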
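The entropy value 3.0778 follows from the symbol counts 2, 1, 3, 2, 2, 2, 1, 1, 2 (16 samples in total). A minimal sketch of that calculation, with an illustrative function name that is not from the slides:

```python
import math


def entropy_bits(counts):
    """First-order entropy in bits/symbol: -sum(p * log2(p)) over the symbols."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


counts = [2, 1, 3, 2, 2, 2, 1, 1, 2]  # nine symbols, 16 samples
h = entropy_bits(counts)
print(round(h, 4))  # 3.0778
```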

Huffman coding in Digital Image Processing

Huffman coding

• The most popular technique for removing coding redundancy is due to Huffman (Huffman [1952]). When coding the symbols of an information source individually, Huffman coding yields the smallest possible number of code symbols per source symbol.

• Huffman coding is a variable-length coding and achieves error-free (lossless) compression, as it is reversible. It exploits only coding redundancy to achieve compression.

• In terms of the noiseless coding theorem, the resulting code is optimal for a fixed value of n, subject to the constraint that the source symbols be coded one at a time.

• The length of a code word should decrease as the probability of its symbol increases, so that the most probable symbols get the shortest code words.

Steps of Huffman coding:

1. Sort the symbols according to decreasing order of their probabilities.

2. Combine the two least probable symbols to form a composite symbol with probability equal to the sum of their probabilities.

3. Re-sort and repeat step 2 until you are left with only 2 symbols.

4. Extract the codewords from the resulting binary tree representation of the code.

Image size 10×10 (5-bit image)

Given frequencies: a1 = 10, a2 = 40, a3 = 6, a4 = 10, a5 = 4, a6 = 30

Encoded string: 010100111100

• The first step in Huffman's approach is to create a series of source reductions by

ordering the probabilities of the symbols under consideration and combining

the lowest probability symbols into a single symbol that replaces them in the

next source reduction.

• At the far left, a hypothetical set of source symbols and their probabilities are

ordered from top to bottom in terms of decreasing probability values. To form

the first source reduction, the bottom two probabilities, 0.06 and 0.04, are

combined to form a "compound symbol" with probability 0.1.

• This compound symbol and its associated probability are placed in the first

source reduction column so that the probabilities of the reduced source are also

ordered from the most to the least probable. This process is then repeated until

a reduced source with two symbols (at the far right) is reached.

• The second step in Huffman's procedure is to code each reduced source,

starting with the smallest source and working back to the original source. The

minimal length binary code for a two-symbol source, of course, is the symbols 0

and 1.

• These symbols are assigned to the two symbols on the right (the assignment is

arbitrary; reversing the order of the 0 and 1 would work just as well). As the

reduced source symbol with probability 0.6 was generated by combining two

symbols in the reduced source to its left, the 0 used to code it is now assigned to

both of these symbols, and a 0 and 1 are arbitrarily appended to each to

distinguish them from each other. This operation is then repeated for each

reduced source until the original source is reached. The final code appears at

the far left.

• The entropy of the source is 2.1435 bits/symbol. The resulting Huffman code

efficiency is 97.43 %.
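The entropy and efficiency figures can be reproduced from the given frequencies. The sketch below builds Huffman code lengths with a priority queue; the function name is an illustrative assumption, and ties between equal weights may be broken differently than in the slide's source reductions, so individual code lengths can differ while the average length stays the same. Efficiency is taken here as entropy divided by average code length.

```python
import heapq
import math


def huffman_code_lengths(freqs):
    """Return {symbol: code length} for a dict of symbol frequencies."""
    # Heap items: (weight, tie_breaker, {symbol: depth so far}).
    heap = [(w, i, {s: 0}) for i, (s, w) in enumerate(freqs.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        w1, _, d1 = heapq.heappop(heap)   # least probable
        w2, _, d2 = heapq.heappop(heap)   # second least probable
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]


freqs = {"a1": 10, "a2": 40, "a3": 6, "a4": 10, "a5": 4, "a6": 30}
total = sum(freqs.values())
lengths = huffman_code_lengths(freqs)
avg_len = sum(freqs[s] * lengths[s] for s in freqs) / total
entropy = -sum((w / total) * math.log2(w / total) for w in freqs.values())
efficiency = entropy / avg_len
print(avg_len, round(entropy, 4), round(100 * efficiency, 2))
```

The average length comes out as 2.2 bits/symbol, which together with the entropy of 2.1435 bits/symbol gives the 97.43 % efficiency quoted above.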

• Huffman's procedure creates the optimal code for a set of symbols and probabilities

subject to the constraint that the symbols be coded one at a time. After the code has

been created, coding and/or decoding is accomplished in a simple lookup table

manner.

• The code itself is an instantaneous uniquely decodable block code. It is called a block

code because each source symbol is mapped into a fixed sequence of code symbols. It

is instantaneous, because each code word in a string of code symbols can be decoded

without referencing succeeding symbols.

• It is uniquely decodable, because any string of code symbols can be decoded in only

one way. Thus, any string of Huffman encoded symbols can be decoded by examining

the individual symbols of the string in a left to right manner. For the binary code, a

left-to-right scan of the encoded string 010100111100 reveals that the first valid code

word is 01010, which is the code for symbol a3. The next valid code is 011, which

corresponds to symbol a1. Continuing in this manner reveals the completely decoded

message to be a3a1a2a2a6.
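The left-to-right decoding can be sketched as a table lookup. The code words for a3 (01010) and a1 (011) are stated in the text; the remaining entries of the table below are an assumption, taken from the usual textbook code for these probabilities and chosen to be consistent with the decoded message.

```python
def huffman_decode(bits, code_table):
    """Decode a bit string by scanning left to right for valid code words."""
    inverse = {code: sym for sym, code in code_table.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:          # instantaneous: no look-ahead needed
            decoded.append(inverse[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a code word")
    return decoded


# Assumed code table (only a3 and a1 are given explicitly in the text).
code_table = {
    "a2": "1", "a6": "00", "a1": "011",
    "a4": "0100", "a3": "01010", "a5": "01011",
}
message = huffman_decode("010100111100", code_table)
print(message)  # ['a3', 'a1', 'a2', 'a2', 'a6']
```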

RUN-LENGTH CODING

Run-length encoding (RLE)

• RLE is one of the simplest lossless data compression techniques. It reduces

interpixel redundancy. It consists of replacing a sequence (Run) of identical

symbols by a pair containing the symbol and the run length.

• It is used as the primary compression technique in the 1-D CCITT Group 3

Fax standard, bitmap images such as computer icons and in conjunction with

other techniques in the JPEG image compression standard.

• Scan the image horizontally or vertically and, while scanning, assign each group of pixels with the same intensity to a pair (G, L), where G is the intensity and L is the length of the “run”. This method can also be used for detecting edges and boundaries of an object. It is mostly used for images with a small number of gray levels and is not effective for highly textured images.

STR=[ 5 5 5 5 5 5 4 4 4 3 3 2 ];

str=[1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1];

• Run-length coding (RLC) works by counting adjacent pixels with the same gray level value; this count, called the run length, is then encoded and stored.

• RLC works best for binary (two-valued) images.

• RLC can also work with complex images that have been preprocessed by thresholding to reduce the number of gray levels to two.

• RLC can be implemented in various ways, but the first step is to define the required parameters.

• Horizontal RLC (counting along the rows) or vertical RLC (counting along the columns) can be used.

• This technique is especially successful in compressing bi-level images, since long runs of a single value are rare in ordinary gray-scale images. A solution to this is to decompose the gray-scale image into bit planes and compress every bit plane separately.
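Run-length encoding into (G, L) pairs can be sketched as follows, using the sequence STR = [5 5 5 5 5 5 4 4 4 3 3 2] from above as input. The function names are illustrative assumptions.

```python
def rle_encode(values):
    """Replace each run of identical values by a (value, run_length) pair."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((v, 1))               # start a new run
    return runs


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out


STR = [5, 5, 5, 5, 5, 5, 4, 4, 4, 3, 3, 2]
pairs = rle_encode(STR)
print(pairs)  # [(5, 6), (4, 3), (3, 2), (2, 1)]
```

Decoding the pairs returns the original sequence, which is what makes the scheme lossless. Note that the last run of length 1 costs two numbers instead of one, which is why RLE does poorly on highly textured data.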

LOSSLESS COMPRESSION AND LOSSY COMPRESSION

Example file sizes: JPG 52 KB, PNG 545 KB

| Sr. No. | Parameter | Lossless compression | Lossy compression |
|---|---|---|---|
| 1 | Definition | The technique in which no data loss occurs and the output image is the same as the input image is called lossless image compression. | The technique in which there is data loss and the output image is not the same (in size, visually) as the input image is called lossy image compression. |
| 2 | Removal | It removes only the redundant information (coding and inter-pixel redundancy). | It removes visually irrelevant information (psychovisual redundancy). |
| 3 | Information loss | There is no information loss. | There is always a loss of information. |
| 4 | Other name | It is also called reversible compression. | It is also called irreversible compression. |
| 5 | Compression ratio | CR is low. | CR can be very high. |
| 6 | Algorithms/techniques used | Bit-plane coding, run-length coding, Huffman coding, LZW coding, arithmetic coding, etc. | Transform coding, DCT, DWT, etc. |
| 7 | RMS error | Erms between original and reconstructed image is always zero. | Erms between original and reconstructed image is never equal to zero. |
| 8 | Advantages | It retains the file quality in a smaller size. | It gives significantly reduced file size. It is supported by many tools and software. The preferred degree of compression can be chosen. |
| 9 | Drawbacks | The file size is much larger than with lossy compression, so fewer files fit in the same storage. | The quality of the file is low; the file is degraded. The original file cannot be recovered by decompression. |
| 10 | Applications | Text files, medical images, space images, etc. | Images, speech and videos. |
| 11 | Examples | PNG, TIFF, BMP, etc. | JPG, MPEG, MP3, etc. |

Predictive coding

Lossless Predictive Coding

Consider the pixel sequence {23, 34, 39, 47, 55, 63}. Demonstrate predictive coding.

Consider the pixel sequence {23, 64, 39, 47, 55, 63}. Demonstrate predictive coding.
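A minimal sketch of lossless predictive coding for these two sequences, assuming the simplest predictor (each pixel is predicted by the previous pixel, and the first pixel is sent unchanged). The predictor choice and function names are assumptions for illustration; other predictors are possible.

```python
def predictive_encode(pixels):
    """Send the first pixel as is, then the prediction errors e(n) = f(n) - f(n-1)."""
    errors = [pixels[0]]
    for prev, cur in zip(pixels, pixels[1:]):
        errors.append(cur - prev)
    return errors


def predictive_decode(errors):
    """Rebuild the pixels by adding each error to the previously decoded pixel."""
    pixels = [errors[0]]
    for e in errors[1:]:
        pixels.append(pixels[-1] + e)
    return pixels


e1 = predictive_encode([23, 34, 39, 47, 55, 63])
e2 = predictive_encode([23, 64, 39, 47, 55, 63])
print(e1)  # [23, 11, 5, 8, 8, 8]
print(e2)  # [23, 41, -25, 8, 8, 8]
```

The errors are mostly small numbers, which a variable-length code can represent with fewer bits than the original pixels. The outlier 64 in the second sequence produces two large errors (41 and -25), showing that the gain depends on how well neighboring pixels are correlated.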

Lossy Predictive Coding

• This process can be extended further by using only one bit

to encode the difference between the adjacent pixels.

• This scheme is known as Delta Modulation
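Delta modulation can be sketched as below. The step size of 8 is a hypothetical choice, the first pixel is assumed to be sent unchanged, and a zero or negative error is mapped to the bit 0; none of these details are fixed by the slides.

```python
def delta_modulate(pixels, step):
    """One bit per pixel: 1 means 'add step', 0 means 'subtract step'."""
    recon = [pixels[0]]          # first pixel assumed to be sent as is
    bits = []
    for p in pixels[1:]:
        bit = 1 if p - recon[-1] > 0 else 0
        bits.append(bit)
        recon.append(recon[-1] + (step if bit else -step))
    return bits, recon


bits_a, recon_a = delta_modulate([23, 34, 39, 47, 55, 63], step=8)
bits_b, recon_b = delta_modulate([23, 64, 39, 47, 55, 63], step=8)
print(bits_a, recon_a)
print(bits_b, recon_b)
```

With this step size both inputs decode to 23, 31, 39, 47, 55, 63. The first sequence is tracked closely, while the jump to 64 in the second sequence is missed entirely, which illustrates why this scheme is lossy.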

BLOCK TRANSFORM CODING

Transform Coding

• The lossless compression techniques discussed till now operate

directly on the pixels of an image and so are spatial domain

techniques.

• For transform coding, we modify the transform of an image. A

reversible linear transform (such as DCT/DFT ) is used to map the

image into a set of transform coefficients.

• These coefficients are then quantized and coded.

• The goal of transform coding is to decorrelate pixels and pack as

much information into small number of transform coefficients.

• Compression is achieved during quantization not during the

transform step.

Block diagram of Transform Coding

A transform coding system: (a) encoder; (b) decoder

Procedure for Transform Coding

• Divide the image into n × n sub-images.

• Transform each sub-image using a reversible transform (e.g., the Hartley

transform, the discrete Fourier transform (DFT) or the discrete cosine

transform (DCT)).

• Quantize, i.e., truncate the transformed image (e.g., with the DFT and DCT, frequencies with small amplitude can be removed without much information loss). The quantization can be either image dependent (IDP) or image independent (IIP).

• Code the resulting data, normally using some kind of “variable length coding”,

e.g., Huffman code.

• The coding is not reversible (unless step 3 is skipped).

• Transform coding is a form of block coding done in the transform domain. The image is divided into blocks, or sub-images, and the transform is calculated for each block.

• Any of the previously defined transforms can be used, frequency (e.g. Fourier) or sequency (e.g. Walsh-Hadamard), but the discrete cosine transform (DCT) has been found to work best in practice for most images.

• The newer JPEG2000 algorithm uses the wavelet transform, which has been found to provide even better compression. After the transform has been calculated, the transform coefficients are quantized and coded.

• This method is effective because the frequency/sequency transform of images is

very efficient at putting most of the information into relatively few coefficients,

so many of the high frequency coefficients can be quantized to 0 (eliminated

completely).

• This type of transform is a special type of mapping that uses spatial frequency

concepts as a basis for the mapping.

• The main reason for mapping the original data into another mathematical space

is to pack the information (or energy) into as few coefficients as possible.

• The simplest form of transform coding is achieved by filtering or eliminating

some of the high frequency coefficients.

• However, this will not provide much compression, since the transform data is typically floating point and thus 4 or 8 bytes per pixel (compared to the original pixel data at 1 byte per pixel), so quantization and coding are applied to the reduced data.

• Quantization includes a process called bit allocation, which determines the number of bits to be used to code each coefficient based on its importance.

• Typically, more bits are used for lower frequency components, where the energy is concentrated for most images, resulting in a variable bit rate or nonuniform quantization and better resolution.

• Two particular types of transform coding have been widely explored:

1. Zonal coding

2. Threshold coding

• These two vary in the method they use for selecting the transform coefficients to

retain (using ideal filters for transform coding selects the coefficients based on

their location in the transform domain).

Image taken from the book Digital Image Processing(Third Edition) by R. C. Gonzalez & R. E. Woods

ZONAL CODING

• It involves selecting specific coefficients based on maximal variance.

• A zonal mask is determined for the entire image by finding the variance for

each frequency component.

• This variance is calculated by using each sub-image within the image as a separate sample and then finding the variance within this group of sub-images.

• The zonal mask is a bitmap of 1's and 0's, where the 1's correspond to the coefficients to retain, and the 0's to the ones to eliminate.

• As the zonal mask applies to the entire image, only one mask is required.
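A minimal sketch of building a zonal mask from coefficient variances. It assumes the transform coefficients of every sub-image are already available as equally sized square blocks; the function name, the `keep` parameter and the tiny 2×2 blocks are illustrative assumptions.

```python
def zonal_mask(coeff_blocks, keep):
    """Mask with 1's at the `keep` coefficient positions of largest variance."""
    n = len(coeff_blocks[0])
    count = len(coeff_blocks)
    variances = []
    for u in range(n):
        for v in range(n):
            # Each sub-image contributes one sample for position (u, v).
            samples = [blk[u][v] for blk in coeff_blocks]
            mean = sum(samples) / count
            var = sum((s - mean) ** 2 for s in samples) / count
            variances.append((var, u, v))
    variances.sort(key=lambda item: -item[0])   # largest variance first
    mask = [[0] * n for _ in range(n)]
    for _, u, v in variances[:keep]:
        mask[u][v] = 1
    return mask


# Hypothetical coefficient blocks from three sub-images.
blocks = [
    [[10, 5], [0, 0]],
    [[-10, -5], [1, 0]],
    [[4, 0], [0, 0]],
]
mask = zonal_mask(blocks, keep=2)
print(mask)  # [[1, 1], [0, 0]]
```

The same mask is then applied to every block, so only one mask has to be stored for the whole image.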

THRESHOLD CODING

• It selects the transform coefficients whose magnitude exceeds a specific threshold value.

• A different threshold mask is required for each block, which increases file

size as well as algorithmic complexity.

• In practice, the zonal mask is often predetermined because the low frequency

terms tend to contain the most information, and hence exhibit the most

variance.

• In this case we select a fixed mask of a given shape and desired compression

ratio, which streamlines the compression process.

• It also saves the overhead involved in calculating the variance of each group

of sub-images for compression and also eases the decompression process.

• Typical masks may be square, triangular or circular and the cutoff frequency

is determined by the compression ratio.
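For contrast with a fixed zonal mask, threshold coding derives a separate mask for each block from the coefficient magnitudes. A minimal sketch, where the function names, the sample 2×2 block and the threshold of 40 are illustrative assumptions:

```python
def threshold_mask(block, threshold):
    """Per-block mask: 1 where the coefficient magnitude reaches the threshold."""
    return [[1 if abs(c) >= threshold else 0 for c in row] for row in block]


def apply_mask(block, mask):
    """Zero out the coefficients whose mask entry is 0."""
    return [[c * m for c, m in zip(brow, mrow)]
            for brow, mrow in zip(block, mask)]


block = [[312, 56], [-38, 3]]          # hypothetical coefficient block
mask = threshold_mask(block, threshold=40)
kept = apply_mask(block, mask)
print(mask)  # [[1, 1], [0, 0]]
print(kept)  # [[312, 56], [0, 0]]
```

Because the retained positions change from block to block, the mask (or an equivalent description of which coefficients survive) has to be coded along with each block.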

JPEG COMPRESSION

Transform Selection

• T(u,v) can be computed using various transformations, for example:

• DFT (Discrete Fourier Transform), DCT (Discrete Cosine Transform), KLT

(Karhunen-Loeve Transformation) etc.

• DCT is a real transform, while DFT is a complex transform.

• Blocking artifacts are less pronounced in the DCT than the DFT.

• The DCT is a good approximation of the Karhunen-Loeve Transform (KLT), which is optimal in terms of energy compaction.

• However, unlike the KLT, the DCT has image-independent basis functions.

• The DCT is used in JPEG compression standard.

JPEG Encoding

• JPEG is used to compress pictures and graphics.

• In JPEG, a picture is divided into 8×8 pixel blocks to decrease the number of calculations.

• The basic idea is to change the picture into a linear (vector) set of numbers that reveals the redundancies. The redundancies are then removed by one of the lossless compression methods.

DCT (Discrete Cosine Transform)

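Forward:

$$T(u,v) = \alpha(u)\,\alpha(v) \sum_{x=0}^{n-1} \sum_{y=0}^{n-1} f(x,y) \cos\left[\frac{(2x+1)u\pi}{2n}\right] \cos\left[\frac{(2y+1)v\pi}{2n}\right]$$

Inverse:

$$f(x,y) = \sum_{u=0}^{n-1} \sum_{v=0}^{n-1} \alpha(u)\,\alpha(v)\, T(u,v) \cos\left[\frac{(2x+1)u\pi}{2n}\right] \cos\left[\frac{(2y+1)v\pi}{2n}\right]$$

where, for an n × n block,

$$\alpha(u) = \begin{cases} \sqrt{1/n} & \text{if } u = 0 \\ \sqrt{2/n} & \text{if } u > 0 \end{cases} \qquad \alpha(v) = \begin{cases} \sqrt{1/n} & \text{if } v = 0 \\ \sqrt{2/n} & \text{if } v > 0 \end{cases}$$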
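The standard orthonormal 2-D DCT pair, with the scale factor depending on whether u (or v) is 0 or greater than 0, can be sketched directly in plain Python. This is a slow reference implementation for small blocks under that assumed normalization, not an optimized one; the function names are chosen to mirror the `dct2` and `idct2` calls used later in the worked example.

```python
import math


def alpha(k, n):
    """Normalization factor: sqrt(1/n) for k = 0, sqrt(2/n) for k > 0."""
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def basis(x, u, n):
    """Cosine basis term cos[(2x + 1) u pi / (2n)]."""
    return math.cos((2 * x + 1) * u * math.pi / (2 * n))


def dct2(block):
    """Forward 2-D DCT of an n x n block (list of lists)."""
    n = len(block)
    return [[alpha(u, n) * alpha(v, n) *
             sum(block[x][y] * basis(x, u, n) * basis(y, v, n)
                 for x in range(n) for y in range(n))
             for v in range(n)] for u in range(n)]


def idct2(coeffs):
    """Inverse 2-D DCT of an n x n coefficient block."""
    n = len(coeffs)
    return [[sum(alpha(u, n) * alpha(v, n) * coeffs[u][v] *
                 basis(x, u, n) * basis(y, v, n)
                 for u in range(n) for v in range(n))
             for y in range(n)] for x in range(n)]


flat = [[10] * 8 for _ in range(8)]     # constant 8 x 8 block
T = dct2(flat)
back = idct2(T)
print(round(T[0][0], 6))  # 80.0: all energy lands in the DC coefficient
```

For a constant block only the DC coefficient T(0,0) is non-zero, which is the energy-compaction property that transform coding relies on.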

DCT (cont’d)

Basis functions for a 4x4 image (i.e., cosines of different frequencies).

DCT (cont’d)

Using 8 × 8 sub-images yields 64 coefficients per sub-image.

Reconstructed images obtained by truncating 50% of the coefficients, with RMS error: DFT 2.32, WHT 1.78, DCT 1.13. The DCT is the more compact transformation.

Quantization:

• In JPEG, each F[u,v] is divided by a constant q(u,v). The table of q(u,v) values is called the quantization table T.

• After table T is created, the values are quantized to reduce the number of bits needed for encoding.

• Quantization divides each coefficient by its constant and rounds the result to the nearest integer. The table is chosen to balance the number of bits against the number of 0s for each particular application.

Block diagram of (a) JPEG encoder, (b) JPEG decoder

Compression:

• Quantized values are read from the table and redundant 0s are removed.

• To cluster the 0s together, the table is read diagonally in a zigzag fashion. The reason is that if the block has no fine detail, the high-frequency coefficients in the bottom-right corner of the table are all 0s.

• JPEG usually uses lossless run-length encoding and Huffman coding at the compression phase.

JPEG compression for color images

Block diagram of (a) JPEG encoder and (b) decoder for color images

STEPS IN JPEG COMPRESSION

1. Pre-process the image: 8×8 block subdivision and level shifting for grayscale images; color transformation, down-sampling and 8×8 block subdivision for color images.

2. Apply the 2-D forward DCT. Each block is represented by 64 frequency components, one of which (the DC component) is proportional to the average value of the 64 pixels in the block.

3. Quantize the DCT coefficients.

4. Apply RLE and Huffman encoding (entropy encoding).

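8×8 block of an image

$$
f = \begin{bmatrix}
183 & 160 & 94 & 153 & 194 & 163 & 132 & 165 \\
183 & 153 & 116 & 176 & 187 & 166 & 130 & 169 \\
179 & 168 & 171 & 182 & 179 & 170 & 131 & 167 \\
177 & 177 & 179 & 177 & 179 & 165 & 131 & 167 \\
178 & 178 & 179 & 176 & 182 & 164 & 130 & 171 \\
179 & 180 & 180 & 179 & 183 & 164 & 130 & 171 \\
179 & 179 & 180 & 182 & 183 & 170 & 129 & 173 \\
180 & 179 & 181 & 179 & 181 & 170 & 130 & 169
\end{bmatrix}
$$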

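Level Shifting

fs = f - 128

$$
fs = \begin{bmatrix}
55 & 32 & -34 & 25 & 66 & 35 & 4 & 37 \\
55 & 25 & -12 & 48 & 59 & 38 & 2 & 41 \\
51 & 40 & 43 & 54 & 51 & 42 & 3 & 39 \\
49 & 49 & 51 & 49 & 51 & 37 & 3 & 39 \\
50 & 50 & 51 & 48 & 54 & 36 & 2 & 43 \\
51 & 52 & 52 & 51 & 55 & 36 & 2 & 43 \\
51 & 51 & 52 & 54 & 55 & 42 & 1 & 45 \\
52 & 51 & 53 & 51 & 53 & 42 & 2 & 41
\end{bmatrix}
$$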

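Computing the DCT

dcts = round(dct2(fs)) =

$$
\begin{bmatrix}
312 & 56 & -27 & 17 & 79 & -60 & 26 & -26 \\
-38 & -28 & 13 & 45 & 31 & -1 & -24 & -10 \\
-20 & -18 & 10 & 33 & 21 & -6 & -16 & -9 \\
-11 & -7 & 9 & 15 & 10 & -11 & -13 & 1 \\
-6 & 1 & 6 & 5 & -4 & -7 & -5 & 5 \\
3 & 3 & 0 & -2 & -7 & -4 & 1 & 2 \\
3 & 5 & 0 & -4 & -8 & -1 & 2 & 4 \\
3 & 1 & -1 & -2 & -3 & -1 & 4 & 1
\end{bmatrix}
$$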

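The Quantization Matrix

$$
Qmat = \begin{bmatrix}
16 & 11 & 10 & 16 & 24 & 40 & 51 & 61 \\
12 & 12 & 14 & 19 & 26 & 58 & 60 & 55 \\
14 & 13 & 16 & 24 & 40 & 57 & 69 & 56 \\
14 & 17 & 22 & 29 & 51 & 87 & 80 & 62 \\
18 & 22 & 37 & 56 & 68 & 109 & 103 & 77 \\
24 & 35 & 55 & 64 & 81 & 104 & 113 & 92 \\
49 & 64 & 78 & 87 & 103 & 121 & 120 & 101 \\
72 & 92 & 95 & 98 & 112 & 100 & 103 & 99
\end{bmatrix}
$$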

Quantization Table

Each entry of the DCT matrix is divided by the corresponding entry of the quantization table and rounded to give the quantized value, e.g. 312/16 = 19.5, which rounds to 20.

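Thresholding/Threshold coding:

t = round(dcts./Qmat) =

$$
\begin{bmatrix}
20 & 5 & -3 & 1 & 3 & -2 & 1 & 0 \\
-3 & -2 & 1 & 2 & 1 & 0 & 0 & 0 \\
-1 & -1 & 1 & 1 & 1 & 0 & 0 & 0 \\
-1 & 0 & 0 & 1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}
$$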
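The quantization step of this worked example, and the dequantization used later by the decoder, can be sketched in Python as follows. The coefficient and table values are copied from the example; the helper names are illustrative assumptions. Rounding is done half away from zero to mimic MATLAB's `round`, since Python's built-in `round` rounds halves to even.

```python
import math

DCTS = [  # rounded DCT coefficients of the example block
    [312, 56, -27, 17, 79, -60, 26, -26],
    [-38, -28, 13, 45, 31, -1, -24, -10],
    [-20, -18, 10, 33, 21, -6, -16, -9],
    [-11, -7, 9, 15, 10, -11, -13, 1],
    [-6, 1, 6, 5, -4, -7, -5, 5],
    [3, 3, 0, -2, -7, -4, 1, 2],
    [3, 5, 0, -4, -8, -1, 2, 4],
    [3, 1, -1, -2, -3, -1, 4, 1],
]
QMAT = [  # quantization matrix of the example
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def round_half_away(x):
    """Round to the nearest integer, halves away from zero."""
    return int(math.copysign(math.floor(abs(x) + 0.5), x))


def quantize(coeffs, qmat):
    """Element-wise divide by the table entry and round."""
    return [[round_half_away(c / q) for c, q in zip(crow, qrow)]
            for crow, qrow in zip(coeffs, qmat)]


def dequantize(quantized, qmat):
    """Element-wise multiply by the table entry (decoder side)."""
    return [[t * q for t, q in zip(trow, qrow)]
            for trow, qrow in zip(quantized, qmat)]


t = quantize(DCTS, QMAT)
ds_hat = dequantize(t, QMAT)
print(t[0])       # [20, 5, -3, 1, 3, -2, 1, 0]
print(ds_hat[0])  # [320, 55, -30, 16, 72, -80, 51, 0]
```

The dequantized DC term is 320 rather than the original 312: the rounding in `quantize` cannot be undone, which is where JPEG loses information.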

Zig-Zag Scanning of the Coefficients

Reading the quantized matrix t in zig-zag order gives:

[20,5,-3,-1,-2,-3,1,1,-1,-1,0,0,1,2,3,-2,1,1,0,0,0,0,0,0,1,1,0,1,0,EOB]

• Here the EOB symbol denotes the end-of-block condition. A special EOB

Huffman code word is provided to indicate that the remainder of the coefficients

in a reordered sequence are zeros.
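The zig-zag reordering can be sketched by sorting the (row, column) positions along anti-diagonals and alternating the direction on each diagonal. The matrix below is the quantized block t of the example; the function names are illustrative assumptions.

```python
T_QUANT = [  # quantized coefficients t from the worked example
    [20, 5, -3, 1, 3, -2, 1, 0],
    [-3, -2, 1, 2, 1, 0, 0, 0],
    [-1, -1, 1, 1, 1, 0, 0, 0],
    [-1, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def zigzag(block):
    """Read an n x n block in zig-zag order, starting at the top-left corner."""
    n = len(block)
    order = sorted(
        ((i, j) for i in range(n) for j in range(n)),
        # Same anti-diagonal (i + j) stays together; odd diagonals run
        # downwards (row increasing), even diagonals run upwards.
        key=lambda ij: (ij[0] + ij[1],
                        ij[0] if (ij[0] + ij[1]) % 2 else -ij[0]),
    )
    return [block[i][j] for i, j in order]


def strip_trailing_zeros(seq):
    """Drop the final run of zeros, which the EOB symbol stands for."""
    end = len(seq)
    while end > 0 and seq[end - 1] == 0:
        end -= 1
    return seq[:end]


scan = zigzag(T_QUANT)
payload = strip_trailing_zeros(scan)
print(payload)
```

Of the 64 scanned values only the first 28 need to be coded before EOB; the rest of the scan is a single run of zeros.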

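Decoding the Coefficients

ds_hat = t .* Qmat =

$$
\begin{bmatrix}
320 & 55 & -30 & 16 & 72 & -80 & 51 & 0 \\
-36 & -24 & 14 & 38 & 26 & 0 & 0 & 0 \\
-14 & -13 & 16 & 24 & 40 & 0 & 0 & 0 \\
-14 & 0 & 0 & 29 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}
$$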

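Computing the IDCT

fs_hat = round(idct2(ds_hat)) =

$$
\begin{bmatrix}
67 & 12 & -9 & 20 & 69 & 43 & -8 & 42 \\
58 & 25 & 15 & 30 & 65 & 40 & -4 & 47 \\
46 & 41 & 44 & 40 & 59 & 38 & 0 & 49 \\
41 & 52 & 59 & 43 & 57 & 42 & 3 & 42 \\
44 & 54 & 58 & 40 & 58 & 47 & 3 & 33 \\
49 & 52 & 53 & 40 & 61 & 47 & 1 & 33 \\
53 & 50 & 53 & 46 & 63 & 41 & 0 & 45 \\
55 & 50 & 56 & 53 & 64 & 34 & -1 & 57
\end{bmatrix}
$$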

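Shifting Back the Coefficients

f_hat = fs_hat + 128 =

$$
\begin{bmatrix}
195 & 140 & 119 & 148 & 197 & 171 & 120 & 170 \\
186 & 153 & 143 & 158 & 193 & 168 & 124 & 175 \\
174 & 169 & 172 & 168 & 187 & 166 & 128 & 177 \\
169 & 180 & 187 & 171 & 185 & 170 & 131 & 170 \\
172 & 182 & 186 & 168 & 186 & 175 & 131 & 161 \\
177 & 180 & 181 & 168 & 189 & 175 & 129 & 161 \\
181 & 178 & 181 & 174 & 191 & 169 & 128 & 173 \\
183 & 178 & 184 & 181 & 192 & 162 & 127 & 185
\end{bmatrix}
$$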

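Original image, for comparison with the reconstructed image f_hat:

$$
f = \begin{bmatrix}
183 & 160 & 94 & 153 & 194 & 163 & 132 & 165 \\
183 & 153 & 116 & 176 & 187 & 166 & 130 & 169 \\
179 & 168 & 171 & 182 & 179 & 170 & 131 & 167 \\
177 & 177 & 179 & 177 & 179 & 165 & 131 & 167 \\
178 & 178 & 179 & 176 & 182 & 164 & 130 & 171 \\
179 & 180 & 180 & 179 & 183 & 164 & 130 & 171 \\
179 & 179 & 180 & 182 & 183 & 170 & 129 & 173 \\
180 & 179 & 181 & 179 & 181 & 170 & 130 & 169
\end{bmatrix}
$$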

A 4×4 grayscale image is given with the following pixel intensity values:

Perform the following steps for JPEG compression:

1. Subtract 128 from each pixel value (center the values around zero).

2. Compute the Discrete Cosine Transform (DCT) using the standard formula.

3. Quantize the transformed values using the following quantization matrix:

4. Round the values to obtain the compressed representation.

5. Reconstruct the approximate image by dequantization (multiplying by Q) and applying the inverse DCT (IDCT).

Wavelet Based Compression

Limitations of JPEG Standard

• Low bit-rate compression: JPEG offers an excellent quality at high and mid

bit-rates. However, the quality is unacceptable at low bit-rates (e.g. below

0.25 bpp)

• Lossless and lossy compression: JPEG cannot provide a superior

performance at lossless and lossy compression in a single code-stream.

• Transmission in noisy environments: the current JPEG standard provides some resynchronization markers, but the quality still degrades when bit errors are encountered.

• Different types of still images: JPEG was optimized for natural images. Its

performance on computer generated images and bi-level (text) images is

poor.

JPEG 2000

• JPEG 2000 is based on the DWT(Discrete Wavelet Transform). DWT

decomposes the image using functions called wavelets.

• The main advantage of wavelets is that they offer both location (time) and frequency information.

• The idea here is to have a more localized analysis of the information, which is not possible using cosine functions, whose spatial support extends over the whole data block.

• JPEG 2000 distinguishes itself from JPEG compression standards not only

by virtue of its higher compression ratios, but also by its many new

functionalities.

• The most noticeable among them is its scalability. From a compressed

JPEG 2000 bitstream, it is possible to extract a subset of the bitstream that

decodes to an image of variable quality and resolution.

What is Wavelet Transform ?

• Wavelets are functions that are defined in time as well as in frequency around a

certain point.

• The wavelet transform is a localized image transform. Because of this localized processing, the low-frequency (LF) and high-frequency (HF) components are scanned row-wise as well as column-wise.

• Wavelet-based transforms are mathematical tools used to extract information from images. A significant benefit over Fourier transforms is temporal (time) resolution: a wavelet transform captures both frequency and location information of the image.

• Wavelet transform can be viewed as the projection of a signal into a set of basis

functions named wavelets. Such basis functions offer localization in the frequency

domain.

• The wavelet transform is designed in such a way that we get good frequency

resolution for low frequency components(average intensity values of image) and

high temporal resolution for high frequency components(edges of image).

Steps of Wavelet Transform

Step 1: Start with a mother wavelet such as Haar, Morlet, Daubechies, etc. The image is then expressed in terms of shifted and scaled versions of this mother wavelet. First, the original image is passed through a high-pass filter (HPF) and a low-pass filter (LPF), applying the filters to each row. Applying the LPF gives the approximation and applying the HPF gives the details.

Step 2: To the horizontal approximation, we again apply the LPF and HPF, this time to the columns. This gives the approximate image (LL) and the vertical detail of the horizontal approximation (LH).

Step 3: Next we apply the LPF and HPF to the columns of the horizontal detail. This gives the horizontal detail of the image (HL) and the diagonal detail of the image (HH).

If the 3 detail sub-signals i.e. LH, HL and HH are small, they can be assumed to be

zero, without any significant change in the image. Hence large compression can be

achieved using wavelet transform.
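The three steps can be sketched with the Haar wavelet, the simplest choice of mother wavelet. The sketch assumes an unnormalized Haar filter pair (pairwise average as the LPF, pairwise half-difference as the HPF) and an image with even width and height; the function names are illustrative assumptions.

```python
def haar_1d(signal):
    """One Haar level: pairwise averages (LPF) and half-differences (HPF)."""
    low = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    high = [(signal[i] - signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    return low, high


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def filter_columns(matrix):
    """Apply the LPF/HPF pair down each column of a matrix."""
    cols = [haar_1d(col) for col in transpose(matrix)]
    low = transpose([lo for lo, _ in cols])
    high = transpose([hi for _, hi in cols])
    return low, high


def haar_2d(image):
    """One-level 2-D decomposition into LL, LH, HL and HH sub-bands."""
    rows = [haar_1d(row) for row in image]          # Step 1: filter the rows
    approx = [lo for lo, _ in rows]                 # horizontal approximation
    detail = [hi for _, hi in rows]                 # horizontal detail
    ll, lh = filter_columns(approx)                 # Step 2
    hl, hh = filter_columns(detail)                 # Step 3
    return ll, lh, hl, hh


ll, lh, hl, hh = haar_2d([[1, 2], [3, 4]])
print(ll, lh, hl, hh)  # [[2.5]] [[-1.0]] [[-0.5]] [[0.0]]
```

For a smooth image the three detail sub-bands are close to zero, so discarding or coarsely quantizing them changes the image very little while leaving only the LL band to store at full precision.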

Features of JPEG 2000

• Lossless and lossy compression: the standard provides lossy compression with a

superior performance at low bit-rates. It also provides lossless compression with

progressive decoding. Applications such as digital libraries/databases and

medical imagery can benefit from this feature.

• Protective image security: the open architecture of the JPEG2000 standard makes it easy to use protection techniques for digital images, such as watermarking, labeling, stamping or encryption.

• Region-of-interest coding: in this mode, regions of interest (ROIs) can be defined. These ROIs can be encoded and transmitted with better quality than the rest of the image.

• Robustness to bit errors: the standard incorporates a set of error-resilient tools to make the bit-stream more robust to transmission errors.

Block diagram of JPEG 2000

| Sr. No. | Parameter | JPEG | JPEG 2000 |
|---|---|---|---|
| 1 | Definition | It was created by the Joint Photographic Experts Group, which was formed in 1986 (the standard itself was published in 1992). | It was created by JPEG in the year 2000 and is a newer standard. |
| 2 | Lossy/Lossless | JPEG uses lossy compression. | JPEG 2000 offers both lossless and lossy compression. |
| 3 | Complexity | JPEG codec has low complexity. | JPEG 2000 codec is highly complex. |
| 4 | Resolution and quality | JPEG gives single resolution and single quality. | JPEG 2000 gives multiple resolution and full quality scalability. |
| 5 | Compression ratio | JPEG gives CR around 10 to 20. | JPEG 2000 can give CR around 30 to 200. |
| 6 | Algorithms/techniques used | JPEG uses DCT. | JPEG 2000 uses DWT. |
| 7 | Computation | It requires less computational complexity and computation time is less. | It requires more computational complexity and computation time is also more. |
| 8 | Image quality | Blocking artifacts are present in the image and regions of interest cannot be selected. | Blocking artifacts are not present in the image, image quality is very good and regions of interest can also be selected for quality. |
| 9 | Applications | JPEG is used widely on the World Wide Web and in digital cameras. | JPEG 2000 is widely in use in the medical and wireless multimedia arenas. Most diagnostic imagery, such as MRI, CT scans, and X-rays, is encoded as JPEG 2000. |
| 10 | File extension | JPEG/JPG. | J2K/JP2/JPX. |

Comparison: JPEG (DCT based) vs JPEG-2000 (wavelet based)

Comparison of file sizes: Original (979 KB), JPEG (6.21 KB), JPEG-2000 (1.83 KB)