Chapter 6

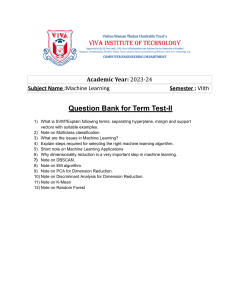

Similarity-Based Methods

`It's a manohorse', exclaimed the confident little 5 year old boy. We call it the Centaur out of habit, but who can fault the kid's intuition? The 5 year old has never seen this thing before now, yet he came up with a reasonable classification for the beast. He is using the simplest method of learning that we know of, similarity, and yet it's effective: the child searches through his history for similar objects (in this case a man and a horse) and builds a classification based on these similar objects.

The method is simple and intuitive, yet when we get into the details, several issues need to be addressed in order to arrive at a technique that is quantitative and fit for a computer. The goal of this chapter is to build exactly such a quantitative framework for similarity based learning.

6.1 Similarity

The `manohorse' is interesting because it requires a deep understanding of similarity: first, to say that the Centaur is similar to both man and horse; and, second, to decide that there is enough similarity to both objects so that neither can be excluded, warranting a new class. A good measure of similarity allows us to not only classify objects using similar objects, but also detect the arrival of a new class of objects (novelty detection).

A simple classification rule is to give a new input the class of the most similar input in your data. This is the `nearest neighbor' rule. To implement the nearest neighbor rule, we need to first quantify the similarity between two objects. There are different ways to measure similarity, or equivalently dissimilarity. Consider the following example with 3 digits.

© AML Yaser Abu-Mostafa, Malik Magdon-Ismail, Hsuan-Tien Lin: Oct-2022. All rights reserved. No commercial use or re-distribution in any format.


The two 9s should be regarded as very similar. Yet, if we naively measure similarity by the number of black pixels in common, the two 9s have only two in common. On the other hand, the 6 has many more black pixels in common with either 9, even though the 6 should be regarded as dissimilar to both 9s. Before measuring the similarity, one should preprocess the inputs, for example by centering, axis aligning and normalizing the size in the case of an image. One can go further and extract the relevant features of the data, for example size (number of black pixels) and symmetry as was done in Chapter 3. These practical considerations regarding the nature of the learning task, though important, are not our primary focus here. We will assume that through domain expertise or otherwise, features have been constructed to identify the important dimensions, and if two inputs differ in these dimensions, then the inputs are likely to be dissimilar. Given this assumption, there are well established ways to measure similarity (or dissimilarity) in different contexts.

6.1.1 Similarity Measures

For inputs $x, x'$ which are vectors in $\mathbb{R}^d$, we can measure dissimilarity using the standard Euclidean distance,

$$d(x, x') = \|x - x'\|.$$

The smaller the distance, the more similar are the objects corresponding to inputs $x$ and $x'$.¹ For Boolean features the Euclidean distance is the square root of the well known Hamming distance. The Euclidean distance is a special case of a more general distance measure which can be defined for an arbitrary positive semi-definite matrix $Q$:

$$d(x, x') = (x - x')^t Q (x - x').$$

A useful special case, known as the Mahalanobis distance, is to set $Q = \Sigma^{-1}$, where $\Sigma$ is the covariance matrix² of the data. The Mahalanobis distance metric depends on the data set. The main advantage of the Mahalanobis distance over the standard Euclidean distance is that it takes into account correlations among the data dimensions and scale. A similar effect can be accomplished by first using input preprocessing to standardize the data (see Chapter ??) and then using the standard Euclidean distance. Another useful measure, especially for Boolean vectors, is the cosine similarity,

$$\mathrm{CosSim}(x, x') = \frac{x \cdot x'}{\|x\|\,\|x'\|}.$$

The cosine similarity is the cosine of the angle between the two vectors, $\mathrm{CosSim} \in [-1, 1]$, and larger values indicate greater similarity. When the objects represent sets, then the set similarity or Jaccard coefficient is often used. For example, consider two movies which have been watched by two different sets of users $S_1, S_2$. We may measure how similar these movies are by how similar the two sets $S_1$ and $S_2$ are:

$$J(S_1, S_2) = \frac{|S_1 \cap S_2|}{|S_1 \cup S_2|};$$

$1 - J(S_1, S_2)$ can be used as a measure of distance which conveniently has the properties that a metric formally satisfies, such as the triangle inequality. We focus on the Euclidean distance which is also a metric; many of the algorithms, however, apply to arbitrary similarity measures.

¹ An axiomatic treatment of similarity that goes beyond metric-based similarity appeared in Features of Similarity, A. Tversky, Psychological Review, 84(4), pp 327-352, 1977.

² $\Sigma = \frac{1}{N}\sum_{i=1}^{N} x_i x_i^t - \bar{x}\bar{x}^t$, where $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$.
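The measures above are short enough to write out directly. The following is a minimal sketch in plain Python, not a reference implementation: the function names, the example vectors and the user names are made up for illustration, and the general measure takes the matrix $Q$ as a list of rows (for the Mahalanobis case one would pass the inverse covariance matrix, which is not computed here).

```python
import math

def euclidean(x, y):
    """Standard Euclidean distance ||x - y||."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def quadratic_form(x, y, Q):
    """General dissimilarity (x - y)^t Q (x - y) for a PSD matrix Q (list of rows)."""
    diff = [a - b for a, b in zip(x, y)]
    d = len(diff)
    return sum(diff[i] * Q[i][j] * diff[j] for i in range(d) for j in range(d))

def cosine_similarity(x, y):
    """Cosine of the angle between x and y; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    return dot / (math.hypot(*x) * math.hypot(*y))

def jaccard(s1, s2):
    """Jaccard coefficient |S1 & S2| / |S1 | S2|; assumes the union is non-empty."""
    s1, s2 = set(s1), set(s2)
    return len(s1 & s2) / len(s1 | s2)

# Hypothetical Boolean feature vectors that differ in two positions (Hamming distance 2).
x = [1, 0, 1, 1]
x_prime = [1, 1, 0, 1]
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]

print(euclidean(x, x_prime))                 # square root of the Hamming distance
print(quadratic_form(x, x_prime, identity))  # Q = I gives the squared Euclidean distance
print(cosine_similarity(x, x_prime))
print(jaccard({"ann", "bob", "cy"}, {"bob", "cy", "dee"}))  # 2 shared users out of 4
```

Note that with $Q = I$ the quadratic form returns the squared Euclidean distance, which orders neighbors in the same way as the Euclidean distance itself.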

Exercise 6.1

(a) Give two vectors with very high cosine similarity but very low Euclidean distance similarity. Similarly, give two vectors with very low cosine similarity but very high Euclidean distance similarity.

(b) If the origin of the coordinate system changes, which measure of similarity changes? How will this affect your choice of features?

6.2 Nearest Neighbor

Simple rules survive; and, the nearest neighbor technique is perhaps the simplest of all. We will summarize the entire algorithm in a short paragraph. But, before we forge ahead, let's not forget the two basic competing principles laid out in the first five chapters: any learning technique should be expressive enough that we can fit the data and obtain low $E_{\text{in}}$; however, it should be reliable enough that a low $E_{\text{in}}$ implies a low $E_{\text{out}}$.

The nearest neighbor rule is embarrassingly simple. There is no training phase (or no `learning' so to speak). The entire algorithm is specified by how one computes the final hypothesis $g(x)$ on a test input $x$. Recall that the data set is $D = (x_1, y_1) \ldots (x_N, y_N)$, where $y_n = \pm 1$. To classify the test point $x$, find the nearest point to $x$ in the data set (the nearest neighbor), and use the classification of this nearest neighbor.

Formally speaking, reorder the data according to distance from $x$ (breaking ties using the data point's index for simplicity). We write $(x_{[n]}(x), y_{[n]}(x))$ for the $n$th such reordered data point with respect to $x$. We will drop the dependence on $x$ and simply write $(x_{[n]}, y_{[n]})$ when the context is clear. So,

$$d(x, x_{[1]}) \le d(x, x_{[2]}) \le \cdots \le d(x, x_{[N]}).$$

The final hypothesis is

$$g(x) = y_{[1]}(x)$$

($x$ is classified by just looking at the label of the nearest data point to $x$). This simple nearest neighbor rule admits a nice geometric interpretation, shown for two dimensions in Figure 6.1.

Figure 6.1: Nearest neighbor Voronoi tessellation.

The shading illustrates the final hypothesis $g(x)$. Each data point $x_n$ `owns' a region defined by the points closer to $x_n$ than to any other data point. These regions are convex polytopes (convex regions whose faces are hyperplanes), some of which are unbounded; in two dimensions, we get convex polygons. The resulting set of regions defined by such a set of points is called a Voronoi (or Dirichlet) tessellation of the space. The final hypothesis $g$ is a Voronoi tessellation with each region inheriting the class of the data point that owns the region. Figure 6.2(a) further illustrates the nearest neighbor classifier for a sample of 500 data points from the digits data described in Chapter 3.

The clear advantage of the nearest neighbor rule is that it is simple and intuitive, easy to implement and there is no training. It is expressive, as it achieves zero in-sample error (as can immediately be deduced from Figure 6.1), and as we will soon see, it is reliable. A practically important cosmetic upside, when presenting to a client for example, is that the classification of a test object is easy to `explain': just present the similar object on which the classification is based. The main disadvantage is the computational overhead.
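The whole rule fits in a few lines. The sketch below assumes Euclidean distance and data stored as a list of `(x_n, y_n)` pairs; the function name and the three example points are made up for illustration. Ties are broken by index, as in the text.

```python
import math

def nearest_neighbor_classify(data, x):
    """Return y_[1](x): the label of the data point closest to x (ties broken by index)."""
    nearest = min(range(len(data)), key=lambda n: (math.dist(x, data[n][0]), n))
    return data[nearest][1]

# Hypothetical data set with labels +1 / -1.
data = [((0.0, 0.0), +1), ((1.0, 1.0), -1), ((0.2, 0.9), -1)]

print(nearest_neighbor_classify(data, (0.1, 0.1)))  # nearest point is (0.0, 0.0)
print(nearest_neighbor_classify(data, (0.9, 0.8)))  # nearest point is (1.0, 1.0)

# With distinct inputs, every data point is its own nearest neighbor,
# so the in-sample error is zero.
in_sample_errors = sum(nearest_neighbor_classify(data, xn) != yn for xn, yn in data)
print(in_sample_errors)
```

Each query scans all $N$ points, which is the computational overhead mentioned above.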


VC dimension. The nearest neighbor rule fits within our standard supervised learning framework with a very large hypothesis set. Any set of $n$ labeled points induces a Voronoi tessellation with each Voronoi region assigned to a class; thus any set of $n$ labeled points defines a hypothesis. Let $H_n$ be the hypothesis set containing all hypotheses which result from some labeled Voronoi tessellation on $n$ points. Let $H = \cup_{n=1}^{\infty} H_n$ be the union of all these hypothesis sets; this is the hypothesis set for the nearest neighbor rule. The learning algorithm picks the particular hypothesis from $H$ which corresponds to the realized labeled data set; this hypothesis is in $H_N \subset H$. Since the training error is zero no matter what the size of the data set, the nearest neighbor rule is non-falsifiable³ and the VC-dimension of this model is infinite. So, from the VC-worst-case analysis, this spells doom. A finite VC-dimension would have been great, and it would have given us one form of reliability, namely that $E_{\text{out}}$ is close to $E_{\text{in}}$ and so minimizing $E_{\text{in}}$ works. The nearest neighbor method is reliable in another sense, and we are going to need some new tools if we are to argue the case.

6.2.1 Nearest Neighbor is 2-Optimal

Using a probabilistic argument, we will show that, under reasonable assumptions, the nearest neighbor rule has an out-of-sample error that is at most twice the minimum possible out-of-sample error. The success of the nearest neighbor algorithm relies on the nearby point $x_{[1]}(x)$ having the same classification as the test point $x$. This means two things: there is a `nearby' point in the data set; and, the target function is reasonably smooth so that the classification of this nearest neighbor is indicative of the classification of the test point.

We model the target value, which is $\pm 1$, as noisy and define

$$\pi(x) = \mathbb{P}[y = +1 \mid x].$$

A data pair $(x, y)$ is obtained by first generating $x$ from the input probability distribution $P(x)$, and then $y$ from the conditional distribution $\pi(x)$. We can relate $\pi(x)$ to a deterministic target function $f(x)$ by observing that if $\pi(x) \ge \frac{1}{2}$, then the optimal prediction is $f(x) = +1$; and, if $\pi(x) < \frac{1}{2}$ then the optimal prediction is $f(x) = -1$.⁴

³ Recall that a hypothesis set is non-falsifiable if it can fit any data set.

⁴ When $\pi(x) = \frac{1}{2}$, we break the tie in favor of $+1$.

Exercise 6.2

Let

$$f(x) = \begin{cases} +1 & \text{if } \pi(x) \ge \frac{1}{2}, \\ -1 & \text{otherwise.} \end{cases}$$

Show that the probability of error on a test point $x$ is

$$e(f(x)) = \mathbb{P}[f(x) \ne y] = \min\{\pi(x), 1 - \pi(x)\}$$

and $e(f(x)) \le e(h(x))$ for any other hypothesis $h$ (deterministic or not).

The error $e(f(x))$ is the probability that $y \ne f(x)$ on the test point $x$. The previous exercise shows that $e(f(x)) = \min\{\pi(x), 1 - \pi(x)\}$, which is the minimum probability of error possible on test point $x$. Thus, the best possible out-of-sample misclassification error is the expected value of $e(f(x))$,

$$E^*_{\text{out}} = \mathbb{E}_x[e(f(x))] = \int dx\, P(x) \min\{\pi(x), 1 - \pi(x)\}.$$

If we are to infer $f(x)$ from a nearest neighbor using $f(x_{[1]})$, then $\pi(x)$ should not be varying too rapidly and $x_{[1]}$ should be close to $x$. We require that $\pi(x)$ should be continuous.⁵ To ensure that there is a near enough neighbor $x_{[1]}$ for any test point $x$, it will suffice that $N$ be large enough. We now show that, if $\pi(x)$ is continuous and $N$ is large enough, then the simple nearest neighbor rule is reliable; specifically, it achieves an out-of-sample error which is at most twice the minimum achievable error (hence the title of this section).

Let $\{D_N\}$ be a sequence of data sets with sizes $N = 1, 2, \ldots$ generated according to $P(x, y)$ and let $\{g_N\}$ be the associated nearest neighbor decision rules for each data set. For $N$ large enough, we will show that

$$E_{\text{out}}(g_N) \le 2E^*_{\text{out}}.$$

So, even though the nearest neighbor rule is non-falsifiable, the test error is at most a factor of 2 worse than optimal (in the asymptotic limit, i.e., for large $N$). The nearest neighbor rule is reliable; however, $E_{\text{in}}$, which is always zero, will never match $E_{\text{out}}$. We will never have a good theoretical estimate of $E_{\text{out}}$ (so we will not know our final performance), but we know that you cannot do much better. The formal statement we can make is:

Theorem 6.1. For any $\delta > 0$, and any continuous noisy target $\pi(x)$, there is a large enough $N$ for which, with probability at least $1 - \delta$, $E_{\text{out}}(g_N) \le 2E^*_{\text{out}}$.

The intuition behind the factor of 2 is that $f(x)$ makes a mistake only if there is an error on the test point $x$, whereas the nearest neighbor classifier makes a mistake if there is an error on the test point or on the nearest neighbor (which adds up to the factor of 2).

⁵ Formally, it is only required that the assumptions hold on a set of probability 1.

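More formally, consider a test point $x$. The nearest neighbor classifier makes an error on $x$ if $y = 1$ and $y_{[1]} = -1$ or if $y = -1$ and $y_{[1]} = 1$.

$$\begin{aligned}
\mathbb{P}[g_N(x) \ne y] &= \pi(x) \cdot (1 - \pi(x_{[1]})) + (1 - \pi(x)) \cdot \pi(x_{[1]}), \\
&= 2\pi(x) \cdot (1 - \pi(x)) + \epsilon_N(x), \\
&= 2\eta(x) \cdot (1 - \eta(x)) + \epsilon_N(x),
\end{aligned}$$

where we defined $\eta(x) = \min\{\pi(x), 1 - \pi(x)\}$ (the minimum probability of error on $x$), and $\epsilon_N(x) = (2\pi(x) - 1) \cdot (\pi(x) - \pi(x_{[1]}))$. Observe that

$$|\epsilon_N(x)| \le |\pi(x) - \pi(x_{[1]})|,$$

because $0 \le \pi(x) \le 1$. To get $E_{\text{out}}(g_N)$, we take the expectation with respect to $x$ and use $E^*_{\text{out}} = \mathbb{E}[\eta(x)]$ and $\mathbb{E}[\eta(x)^2] \ge \mathbb{E}[\eta(x)]^2 = (E^*_{\text{out}})^2$:

$$\begin{aligned}
E_{\text{out}}(g_N) &= \mathbb{E}_x\big[\mathbb{P}[g_N(x) \ne y]\big] \\
&= 2\,\mathbb{E}[\eta(x)] - 2\,\mathbb{E}[\eta^2(x)] + \mathbb{E}_x[\epsilon_N(x)] \\
&\le 2E^*_{\text{out}}(1 - E^*_{\text{out}}) + \mathbb{E}_x[\epsilon_N(x)].
\end{aligned}$$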
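For readers who want the intermediate algebra, the steps behind the two displays above can be written out as follows. This is only an expansion of the identities already stated, using the same symbols; nothing new is assumed beyond $0 \le \pi(x) \le 1$.

```latex
\begin{align*}
\pi(x)\,(1-\pi(x_{[1]})) + (1-\pi(x))\,\pi(x_{[1]})
  &= \pi(x) + \pi(x_{[1]}) - 2\,\pi(x)\,\pi(x_{[1]}) \\
  &= 2\pi(x)\,(1-\pi(x)) + \bigl(\pi(x_{[1]})-\pi(x)\bigr)\bigl(1-2\pi(x)\bigr) \\
  &= 2\pi(x)\,(1-\pi(x))
     + \underbrace{(2\pi(x)-1)\,\bigl(\pi(x)-\pi(x_{[1]})\bigr)}_{\epsilon_N(x)} .
\end{align*}
% eta(x) equals either pi(x) or 1 - pi(x), so pi(1 - pi) = eta(1 - eta).
% Since 0 <= pi(x) <= 1 we have |2 pi(x) - 1| <= 1, which gives
% |epsilon_N(x)| <= |pi(x) - pi(x_{[1]})|.
\begin{align*}
2\,\mathbb{E}[\eta(x)] - 2\,\mathbb{E}[\eta^2(x)]
  \;\le\; 2\,\mathbb{E}[\eta(x)] - 2\,\mathbb{E}[\eta(x)]^2
  \;=\; 2E^*_{\text{out}}\,(1 - E^*_{\text{out}})
  \;\le\; 2E^*_{\text{out}} .
\end{align*}
```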

Under very general conditions, $\mathbb{E}_x[\epsilon_N(x)] \to 0$ in probability, which implies Theorem 6.1. We don't give a formal proof, but sketch the intuition for why $\epsilon_N(x) \to 0$. When the data set gets very large ($N \to \infty$), every point $x$ has a nearest neighbor that is close by. That is, $x_{[1]}(x) \to x$ for all $x$. This is the case if, for example, $P(x)$ has bounded support⁶ as shown in Problem 6.9 (more general settings are covered in the literature). By the continuity of $\pi(x)$, if $x_{[1]} \to x$ then $\pi(x_{[1]}) \to \pi(x)$, and since $|\epsilon_N(x)| \le |\pi(x_{[1]}) - \pi(x)|$, it follows that $\epsilon_N(x) \to 0$.⁷ The proof also highlights when the nearest neighbor works: a test point must have a nearby neighbor, and the noisy target $\pi(x)$ should be slowly varying so that the $y$-value at the nearby neighbor is indicative of the $y$-value at $x$.

When $E^*_{\text{out}}$ is small, $E_{\text{out}}(g)$ is at most twice as bad. When $E^*_{\text{out}}$ is large, you are doomed anyway (but on the positive side, you will get a better factor than 2). It is quite startling that as simple a rule as nearest neighbor is 2-optimal. At least half the classification power of the data is in the nearest neighbor.

The rate of convergence to 2-optimal depends on how smooth $\pi(x)$ is, but the convergence itself is never in doubt, relying only on the weak requirement that $\pi(x)$ is continuous. The fact that you get a small $E_{\text{out}}$ from a model that can fit any data set has led to the notion that the nearest neighbor rule is somehow `self-regularizing'. Recall that regularization guides the learning toward simpler hypotheses, and helps to combat noise, especially when there are fewer data points. This self regularizing in the nearest neighbor algorithm occurs naturally because the final hypothesis is indeed simpler when there are fewer data points. The following examples of the nearest neighbor classifier with increasing number of data points illustrate how the boundary gets more complicated only as you increase $N$.

⁶ $x \in \mathrm{supp}(P)$ if for any $\epsilon > 0$, $\mathbb{P}[B_\epsilon(x)] > 0$ ($B_\epsilon(x)$ is the ball of radius $\epsilon$ centered on $x$).

⁷ One technicality remains because we need to interchange the order of limit and expectation: $\lim_{N \to \infty} \mathbb{E}_x[\epsilon_N(x)] = \mathbb{E}_x[\lim_{N \to \infty} \epsilon_N(x)] = 0$. For the technically inclined, this can be justified under mild measurability assumptions using dominated convergence.

[Figure: nearest neighbor classifier for N = 2, 3, 4, 5 and 6 data points.]

6.2.2 k-Nearest Neighbors (k-NN)

By considering more than just a single neighbor, one obtains the generalization of the nearest neighbor rule to the $k$-nearest neighbor rule ($k$-NN). For simplicity, assume that $k$ is odd. The $k$-NN rule classifies the test point $x$ according to the majority class among the $k$ nearest data points to $x$ (the $k$ nearest neighbors). The nearest neighbor rule is the 1-NN rule. For $k > 1$, the final hypothesis is:

$$g(x) = \mathrm{sign}\left(\sum_{i=1}^{k} y_{[i]}(x)\right)$$

($k$ is odd and $y_n = \pm 1$). Figure 6.2 gives a comparison of the nearest neighbor rule with the 21-NN rule on the digits data (blue circles are the digit 1 and all other digits are the red x's). When $k$ is large, the hypothesis is `simpler'; in particular, when $k = N$ the final hypothesis is a constant equal to the majority class in the data. The parameter $k$ controls the complexity of the resulting hypothesis. Smaller values of $k$ result in a more complex hypothesis, with lower in-sample-error. The nearest neighbor rule always achieves zero in-sample error. When $k > 1$ (see Figure 6.2(b)), the in-sample error is not necessarily zero and we observe that the complexity of the resulting classification boundary drops considerably with higher $k$.
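The majority vote can be sketched as below, again assuming Euclidean distance, labels $\pm 1$, odd $k$ and ties in distance broken by index. The function name and the five data points are made up for illustration. The example shows the two effects described above: with $k = 3$ a training point can be misclassified in-sample, and with $k = N$ the rule returns the majority class of the data.

```python
import math

def knn_classify(data, x, k):
    """sign(sum of the labels of the k nearest points to x); k is assumed odd."""
    order = sorted(range(len(data)), key=lambda n: (math.dist(x, data[n][0]), n))
    vote = sum(data[n][1] for n in order[:k])
    return 1 if vote > 0 else -1

# Hypothetical data: a lone -1 point next to a +1 point, plus a mixed cluster.
data = [((0.0, 0.0), +1), ((0.1, 0.0), -1),
        ((1.0, 1.0), +1), ((1.0, 0.9), +1), ((0.9, 1.0), -1)]

x = (0.1, 0.0)                   # a training point whose own label is -1
print(knn_classify(data, x, 1))  # 1-NN reproduces the training label
print(knn_classify(data, x, 3))  # 3-NN is outvoted by two +1 neighbors
print(knn_classify(data, x, 5))  # k = N: the majority class of the data
```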

Three Neighbors is Enough. As with the simple nearest neighbor rule, one can analyze the performance of the $k$-NN rule (for large $N$). The next exercise guides the reader through this analysis.

[Figure 6.2 panels: (a) 1-NN rule; (b) 21-NN rule.]

Figure 6.2: The 1-NN and 21-NN rules for classifying a random sample of 500 digits (1 (blue circle) vs all other digits). Note, $21 \approx \sqrt{500}$. For the 1-NN rule, the in-sample error is zero, resulting in a complicated decision boundary with islands of red and blue regions. For the 21-NN rule, the in-sample error is not zero and the decision boundary is `simpler'.

Exercise 6.3

Fix an odd $k \ge 1$. For $N = 1, 2, \ldots$ and data sets $\{D_N\}$ of size $N$, let $g_N$ be the $k$-NN rule derived from $D_N$, with out-of-sample error $E_{\text{out}}(g_N)$.

(a) Argue that $E_{\text{out}}(g_N) = \mathbb{E}_x[Q_k(\eta(x))] + \mathbb{E}_x[\epsilon_N(x)]$ for some error term $\epsilon_N(x)$ which converges to zero, and where

$$Q_k(\eta) = \sum_{i=0}^{(k-1)/2} \binom{k}{i} \left( \eta^{i+1}(1 - \eta)^{k-i} + (1 - \eta)^{i+1}\eta^{k-i} \right),$$

and $\eta(x) = \min\{\pi(x), 1 - \pi(x)\}$.

(b) Plot $Q_k(\eta)$ for $\eta \in [0, \frac{1}{2}]$ and $k = 1, 3, 5$.

(c) Show that for large enough $N$, with probability at least $1 - \delta$,

$$\begin{aligned}
k = 3: &\quad E_{\text{out}}(g_N) \le E^*_{\text{out}} + 3\,\mathbb{E}[\eta^2(x)]; \\
k = 5: &\quad E_{\text{out}}(g_N) \le E^*_{\text{out}} + 10\,\mathbb{E}[\eta^3(x)].
\end{aligned}$$

(d) [Hard] Show that $E_{\text{out}}(g_N)$ is asymptotically $E^*_{\text{out}}(1 + O(k^{-1/2}))$. [Hint: Use your plot of $Q_k$ to argue that there is some $a(k)$ such that $Q_k \le \eta(1 + a(k))$, and show that the best such $a(k)$ is $O(1/\sqrt{k})$.]

For $k = 3$, as $N$ grows, one finds that the out-of-sample error is nearly optimal:

$$E_{\text{out}}(g_N) \le E^*_{\text{out}} + 3\,\mathbb{E}[\eta^2(x)].$$

To interpret this result, suppose that $\eta(x)$ is approximately constant. Then, for $k = 3$, $E_{\text{out}}(g_N) \le E^*_{\text{out}} + 3(E^*_{\text{out}})^2$ (when $N$ is large). The intuition here is that the nearest neighbor classifier makes a mistake if there is an error on the test $x$ or if there are at least 2 mistakes on the three nearest neighbors. The probability of two errors is $O(\eta^2)$ which is dominated by the probability of error on the test point. The 3-NN rule is approximately optimal, converging to $E^*_{\text{out}}$ as $E^*_{\text{out}} \to 0$.

To illustrate further, suppose the optimal classifier gives $E^*_{\text{out}} = 1\%$. Then, for large enough $N$, 3-NN delivers $E_{\text{out}}$ of at most 1.03%, which is essentially optimal. The exercise also shows that going to 5-NN improves the asymptotic performance to 1.001%, which is certainly closer to optimal than 3-NN, but hardly worth the effort. Thus, a useful practical guideline is: $k = 3$ nearest neighbors is often enough.
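The numbers quoted above can be checked numerically from the formula for $Q_k(\eta)$ in Exercise 6.3. The sketch below is only a sanity check under the simplifying assumption that $\eta(x)$ is constant; it is not a solution to the exercise, and the function name `Q` is chosen here for illustration.

```python
from math import comb

def Q(k, eta):
    """Asymptotic k-NN error at a point with minimum error probability eta (k odd)."""
    return sum(
        comb(k, i) * (eta ** (i + 1) * (1 - eta) ** (k - i)
                      + (1 - eta) ** (i + 1) * eta ** (k - i))
        for i in range((k - 1) // 2 + 1)
    )

eta = 0.01  # the optimal classifier errs 1% of the time
print(Q(1, eta))  # 2 * eta * (1 - eta), the 1-NN expression
print(Q(3, eta))  # stays below eta + 3 * eta**2  (1.03%)
print(Q(5, eta))  # stays below eta + 10 * eta**3 (1.001%)

# Check the two bounds of Exercise 6.3(c) on a grid of eta values in [0, 1/2].
grid = [i / 100 for i in range(51)]
bound3_holds = all(Q(3, e) <= e + 3 * e ** 2 + 1e-12 for e in grid)
bound5_holds = all(Q(5, e) <= e + 10 * e ** 3 + 1e-12 for e in grid)
print(bound3_holds, bound5_holds)
```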

Approximation Versus Generalization. The parameter $k$ controls the approximation-generalization trade off. Choosing $k$ too small leads to too complex a decision rule, which overfits the data; on the other hand, $k$ too large leads to underfitting. Though $k = 3$ works well when $E^*_{\text{out}}$ is small, for general situations we need to choose between the different $k$: each value of $k$ is a different model. We need to pick the best value of $k$ (the best model). That is, we need model selection.

Exercise 6.4

Consider the task of selecting a nearest neighbor rule. What's wrong with the following logic applied to selecting $k$? (Limits are as $N \to \infty$.)

Consider the hypothesis set $H_{\text{nn}}$ with $N$ hypotheses, the $k$-NN rules using $k = 1, \ldots, N$. Use the in-sample error to choose a value of $k$ which minimizes $E_{\text{in}}$. Using the generalization error bound in Equation (2.1), conclude that $E_{\text{in}} \to E_{\text{out}}$ because $\log N / N \to 0$. Hence conclude that asymptotically, we will be picking the best value of $k$, based on $E_{\text{in}}$ alone.

[Hints: What value of $k$ will be picked? What will $E_{\text{in}}$ be? Does your `hypothesis set' depend on the data?]

Fortunately, there is powerful theory which gives a guideline for selecting $k$ as a function of $N$; let $k(N)$ be the choice of $k$, which is chosen to grow as $N$ grows. Note that $1 \le k(N) \le N$.

Theorem 6.2. For $N \to \infty$, if $k(N) \to \infty$ and $k(N)/N \to 0$ then,

$$E_{\text{in}}(g) \to E_{\text{out}}(g) \quad \text{and} \quad E_{\text{out}}(g) \to E^*_{\text{out}}.$$

Theorem 6.2 holds under smoothness assumptions on $\pi(x)$ and regularity conditions on $P(x)$ which are very general. The theorem sets $k(N)$ as a function of $N$ and states that as $N \to \infty$, we can recover the optimal classifier. One good choice for $k(N)$ is $\lfloor \sqrt{N} \rfloor$.

We sketch the intuition for Theorem 6.2. For a test point $x$, consider the distance $r$ to its $k$-th nearest neighbor. The fraction of points which fall within distance $r$ of $x$ is $k/N$ which, by assumption, is approaching zero. The only way that the fraction of points within $r$ of $x$ goes to zero is if $r$ itself tends to zero. That is, all the $k$ nearest neighbors to $x$ are approaching $x$. Thus, the fraction of these $k$ neighbors with $y = +1$ is approximating $\pi(x)$ and as $k \to \infty$ this fraction will approach $\pi(x)$ by the law of large numbers. The majority vote among these $k$ neighbors will therefore be implementing the optimal classifier $f$ from Exercise 6.2, and hence we will obtain the optimal error $E^*_{\text{out}}$. We can also directly identify the roles played by the two assumptions in the statement of the theorem. The $k/N \to 0$ condition is to ensure that all the $k$ nearest neighbors are close to $x$; the $k \to \infty$ is to ensure that there are enough close neighbors so that the majority vote is almost always the optimal decision.

Few practitioners would be willing to blindly set $k = \lfloor \sqrt{N} \rfloor$. They would much rather the data spoke for itself. Validation, or cross validation (Chapter 4) are good ways to select $k$. Validation to select a nearest neighbor classifier would run as follows. Randomly divide the data $D$ into a training set $D_{\text{train}}$ and a validation set $D_{\text{val}}$ of sizes $N - K$ and $K$ respectively (the size of the validation set $K$ is not to be confused with $k$, the number of nearest neighbors to use). Let $H_{\text{train}} = \{g^-_1, \ldots, g^-_{N-K}\}$ be $N - K$ hypotheses⁸ defined by the $k$-NN rules obtained from $D_{\text{train}}$, for $k = 1, \ldots, N - K$. Now use $D_{\text{val}}$ to select one hypothesis $g^-$ from $H_{\text{train}}$ (the one with minimum validation error). Similarly, one could use cross validation instead of validation.
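The validation procedure just described might be sketched as follows. This is an illustration under stated assumptions rather than the procedure used for the experiments in this chapter: the data is a made-up one-dimensional example, the split uses a fixed seed, and only odd values of $k$ are tried (a simplification that avoids tied votes; the text allows every $k = 1, \ldots, N - K$). The names `select_k` and `validation_error` are hypothetical.

```python
import math
import random

def knn_classify(train, x, k):
    """Majority vote (labels +1/-1) among the k nearest training points; k odd."""
    order = sorted(range(len(train)), key=lambda n: (math.dist(x, train[n][0]), n))
    return 1 if sum(train[n][1] for n in order[:k]) > 0 else -1

def validation_error(train, val, k):
    """Fraction of validation points misclassified by the k-NN rule built on train."""
    return sum(knn_classify(train, x, k) != y for x, y in val) / len(val)

def select_k(data, K, seed=0):
    """Hold out K random points, then pick the odd k with the smallest validation error."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    val, train = shuffled[:K], shuffled[K:]
    candidates = range(1, len(train) + 1, 2)  # odd k only (assumption, see lead-in)
    best_k = min(candidates, key=lambda k: (validation_error(train, val, k), k))
    return best_k, validation_error(train, val, best_k)

# Hypothetical toy data: points on a line, labeled by the sign of the coordinate.
data = [((i / 10,), 1 if i > 0 else -1) for i in range(-20, 21) if i != 0]
best_k, err = select_k(data, K=10)
print(best_k, err)
```

The selected rule uses only the $N - K$ training points; whether to re-use the chosen $k$ on the full data set is the question raised at the end of Exercise 6.5.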

Exercise 6.5

Consider using validation to select a nearest neighbor rule (hypothesis $g^-$ from $H_{\text{train}}$). Let $g^-_*$ be the hypothesis in $H_{\text{train}}$ with minimum $E_{\text{out}}$.

(a) Show that if $K/\log(N - K) \to \infty$ then validation chooses a good hypothesis, $E_{\text{out}}(g^-) \approx E_{\text{out}}(g^-_*)$. Formally state such a result and show it to be true. [Hint: The statement has to be probabilistic; use the Hoeffding bound and the fact that choosing $g^-$ amounts to selecting a hypothesis from among $N - K$ using a data set of size $K$.]

(b) If also $N - K \to \infty$, then show that $E_{\text{out}}(g^-) \to E^*_{\text{out}}$ (validation results in near optimal performance). [Hint: Use (a) together with Theorem 6.2 which shows that some value of $k$ is good.]

Note that the selected $g^-$ is not a nearest neighbor rule on the full data $D$; it is a nearest neighbor rule using data $D_{\text{train}}$, and $k$ neighbors. Would the performance improve if we used the $k$-NN rule on the full data set $D$?

The previous exercise shows that validation works if the validation set size is appropriately chosen, for example, proportional to $N$. Figure 6.3 illustrates our main conclusions on the digits data using $k = \lfloor \sqrt{N} \rfloor$, $k$ chosen using 10-fold cross validation and $k$ fixed at 1, 3.

[Figure 6.3 panels: (a) In-sample error of k-NN rule; (b) Out-of-sample error of k-NN rule. Axes: $E_{\text{in}}$ (%) and $E_{\text{out}}$ (%) versus # Data Points, N; curves for k = 1, k = 3, k = √N and CV.]

Figure 6.3: In and out-of-sample errors for various choices of $k$ for the 1 vs all other digits classification task. (a) shows the in-sample error; (b) the out-of-sample error. $N$ points are randomly selected for training, and the remaining for computing the test error. The performance is averaged over 10,000 such training-testing splits. Observe that the 3-NN is almost as good as anything; the in and out-of sample errors for $k = N^{1/2}$ are a close match, as expected from Theorem 6.2. The cross validation in-sample error is approaching the out-of-sample error. For $k = 1$ the in-sample error is zero, and is not shown.

⁸ The $g^-$ notation was introduced in Chapter 4 to denote a hypothesis built from the data set that is missing some data points.

6.2.3 Improving the Efficiency of Nearest Neighbor

The simplicity of the nearest neighbor rule is both a virtue and a vice. There's no training cost, but we pay for this when predicting on a test input during deployment. For a test point $x$, we need to compute the $k$ nearest neighbors. This means we need access to all the data, a memory requirement of $Nd$ and a computational complexity of $O(Nd + N \log k)$ (using appropriate data structures to compute $N$ distances and pick the smallest $k$). This can be excessive as the next exercise illustrates.
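One way to realize the brute-force query is to compute all $N$ distances and keep only the $k$ smallest with a bounded heap. The sketch below uses Python's standard `heapq.nsmallest` for that selection step; the function name `k_nearest` and the toy data are made up for illustration, and the complexity remark is an informal reading of the bound above, not a measured result.

```python
import heapq
import math

def k_nearest(data, x, k):
    """Indices of the k data points closest to x, nearest first (ties broken by index)."""
    return heapq.nsmallest(k, range(len(data)),
                           key=lambda n: (math.dist(x, data[n][0]), n))

# Hypothetical one-dimensional data at the integers 0..9 with alternating labels.
data = [((float(i),), +1 if i % 2 == 0 else -1) for i in range(10)]

neighbors = k_nearest(data, (3.2,), 3)
print(neighbors)  # [3, 4, 2]

# Majority vote over the labels of the selected neighbors.
label = 1 if sum(data[n][1] for n in neighbors) > 0 else -1
print(label)
```

Each distance costs $O(d)$ and each of the $N$ candidates costs at most $O(\log k)$ to offer to a heap of size $k$, which matches the $O(Nd + N \log k)$ count in the text. All the data must still be held in memory.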

Exercise 6.6

We want to select the value of $k = 1, 3, 5, \ldots, 2\left\lfloor \frac{N+1}{2} \right\rfloor - 1$ using 10-fold cross validation. Show that the running time is $O(N^3 d + N^3 \log N)$.

The basic approach to addressing these issues is to preprocess the data in some way. This can help by either storing the data in a specialized data structure so that the nearest neighbor queries are more efficient, or by reducing the amount of data that needs to be kept (which helps with the memory and computational requirements when deploying). These are active research areas, and we discuss the basic approaches here.

Data Condensing. The goal of data condensing is to reduce the data set size while making sure the retained data set somehow matches the original full data set. We denote the condensed data set by $S$. We will only consider the case when $S$ is a subset of $D$.⁹

Fix $k$, and for the data set $S$, let $g_S$ be the $k$-NN rule derived from $S$ and, similarly, $g_D$ is the $k$-NN rule derived from the full data set $D$. The condensed data set $S$ is consistent with $D$ if

$$g_S(x) = g_D(x) \quad \forall x \in \mathbb{R}^d.$$

This is a strong requirement, and there are computationally expensive algorithms which can achieve this (see Problem 6.11). A weaker requirement is that the condensed data only be training set consistent, which means that the condensed data and the full training data give the same classifications on the training data points,

$$g_S(x_n) = g_D(x_n) \quad \forall x_n \in D.$$

This means that, as far as the training points are concerned, the rule derived from $S$ is the same as the rule derived from $D$. Note that training set consistent does not mean $E_{\text{in}}(g_S) = 0$. For starters, when $k > 1$, even the full data $D$ may not achieve $E_{\text{in}}(g_D) = 0$.

The condensed nearest neighbor algorithm (CNN) is a very simple heuristic that results in a training set consistent subset of the data. We illustrate with a data set of 8 points on the right, setting $k$ to 3. Initialize the working set $S$ randomly with $k$ data points from $D$ (the boxed points illustrated on the right). As long as $S$ is not training set consistent, augment $S$ with a data point not in $S$ as follows. Let $x_*$ be any data point for which $g_S(x_*) \ne g_D(x_*)$, such as the solid blue circle in the example. This solid blue point is classified blue by $S$ (in fact, every point is classified blue by $S$ because $S$ only contains three points, two of which are blue); but, it is classified red by $D$ (because two of its three nearest points in $D$ are red). So $g_S(x_*) \ne g_D(x_*)$. Let $x'$ be the nearest data point to $x_*$ which is not in $S$ and has class $g_D(x_*)$. Note that $x_*$ could be in $S$. The reader can easily verify that such an $x'$ must exist because $x_*$ is inconsistently classified by $S$. Add $x'$ to $S$. Continue adding to $S$ in this way until $S$ is training set consistent. (Note that $D$ is training set consistent, by definition.)
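The steps of the CNN heuristic can be sketched as below. This is a minimal illustration, not an optimized implementation: it assumes Euclidean distance, labels $\pm 1$ and odd $k$, it always picks the first inconsistent point as $x_*$ (the text allows any), and it re-classifies every training point in each round. The names `condense` and `knn_classify` and the example data are made up for illustration.

```python
import math
import random

def knn_classify(points, x, k):
    """k-NN majority vote over (x_n, y_n) pairs with y_n = +1/-1; k odd, ties by index."""
    order = sorted(range(len(points)), key=lambda n: (math.dist(x, points[n][0]), n))
    return 1 if sum(points[n][1] for n in order[:k]) > 0 else -1

def condense(data, k, seed=0):
    """Return the sorted indices of a training set consistent subset S of data."""
    target = [knn_classify(data, x, k) for x, _ in data]            # g_D(x_n)
    chosen = set(random.Random(seed).sample(range(len(data)), k))   # random initial S
    while True:
        S = [data[n] for n in sorted(chosen)]
        inconsistent = [n for n, (x, _) in enumerate(data)
                        if knn_classify(S, x, k) != target[n]]
        if not inconsistent:
            return sorted(chosen)
        star = inconsistent[0]  # any inconsistently classified point will do
        # Nearest point to x_* that is outside S and whose label equals g_D(x_*).
        candidates = [m for m in range(len(data))
                      if m not in chosen and data[m][1] == target[star]]
        chosen.add(min(candidates,
                       key=lambda m: (math.dist(data[star][0], data[m][0]), m)))

# Hypothetical data: two well separated clusters of four points each.
data = [((0.0, 0.0), +1), ((0.1, 0.2), +1), ((0.2, 0.1), +1), ((0.3, 0.3), +1),
        ((1.0, 1.0), -1), ((1.1, 0.9), -1), ((0.9, 1.2), -1), ((1.2, 1.1), -1)]
kept = condense(data, k=1)
print(len(kept), "of", len(data), "points kept")
```

Each round adds one point, so the loop runs at most $N - k$ times; the condensed set it returns can depend on the random initialization and on which inconsistent point is picked.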

[Figure: the 8-point example data set with the initial points of S boxed; a label marks the point to add (`add this point').]

⁹ More generally, if $S$ contains arbitrary points, $S$ is called a condensed set of prototypes.

[Figure 6.4 panels: (a) Condensed data for 1-NN; (b) Condensed data for 21-NN.]

Figure 6.4: The condensed data and resulting classifiers from running the CNN heuristic to condense the 500 digits data points used in Figure 6.2. (a) Condensation to 27 data points for 1-NN training set consistent. (b) Condensation to 40 data points for 21-NN training set consistent.

The CNN algorithm must terminate after at most $N$ steps, and when it terminates, the resulting set $S$ must be training set consistent. The CNN algorithm delivers good condensation in practice as illustrated by the condensed data in Figure 6.4 as compared with the full data in Figure 6.2.

Exercise 6.7

Show the following properties of the CNN heuristic. Let $S$ be the current set of points selected by the heuristic.

(a) If $S$ is not training set consistent, and if $x_*$ is a point which is not training set consistent, show that the CNN heuristic will always find a point to add to $S$.

(b) Show that the point added will `help' with the classification of $x_*$ by $S$; it suffices to show that the new $k$ nearest neighbors to $x_*$ in $S$ will contain the point added.

(c) Show that after at most $N - k$ iterations the CNN heuristic must terminate with a training set consistent $S$.

The CNN algorithm will generally not produce a training consistent set of minimum cardinality. Further, the condensed set can vary depending on the order in which data points are considered, and the process is quite computationally expensive; but, at least it is done only once, and it saves every time a new point is to be classified.

We should distinguish data condensing from data editing. The former has a purely computational motive, to reduce the size of the data set while keeping the decision boundary nearly unchanged. The latter edits the data set to improve prediction performance. This typically means removing the points that you think are noisy (see Chapter ??). One way to determine if a point is noisy is if it disagrees with the majority class in its $k$-neighborhood. Such data points are removed from the data and the remaining data are used with the 1-NN rule. A very similar effect can be accomplished by just using the $k$-NN rule with the original data.

Efficient Search for the Nearest Neighbors. The other main approach to alleviating the computational burden is to preprocess the data in some way so that nearest neighbors can be found quickly. There is a large and growing volume of literature in this arena. Our goal here is to describe the basic idea with a simple technique.

Suppose we want to search for the nearest neighbor to the point $\mathbf{x}$ (red). Assume that the data is partitioned into clusters (or branches). In our illustration, we have two clusters $S_1$ and $S_2$. Each cluster has a `center' $\boldsymbol{\mu}_j$ and a radius $r_j$. First search the cluster whose center is closest to the query point $\mathbf{x}$. In the example, we search cluster $S_1$ because $\|\mathbf{x} - \boldsymbol{\mu}_1\| \le \|\mathbf{x} - \boldsymbol{\mu}_2\|$. Suppose that the nearest neighbor to $\mathbf{x}$ in $S_1$ is $\hat{\mathbf{x}}_{[1]}$, which is potentially $\mathbf{x}_{[1]}$, the nearest point to $\mathbf{x}$ in all the data. For any $\mathbf{x}_n \in S_2$, by the triangle inequality, $\|\mathbf{x} - \mathbf{x}_n\| \ge \|\mathbf{x} - \boldsymbol{\mu}_2\| - r_2$. Thus, if the bound condition

$$\|\mathbf{x} - \hat{\mathbf{x}}_{[1]}\| \le \|\mathbf{x} - \boldsymbol{\mu}_2\| - r_2$$

holds, then we are done (we don't need to search $S_2$), and can claim that $\hat{\mathbf{x}}_{[1]}$ is a nearest neighbor ($\mathbf{x}_{[1]} = \hat{\mathbf{x}}_{[1]}$). We gain a computational saving if the bound condition holds. This approach is called a branch and bound technique for finding the nearest neighbor. Let's informally consider when we are likely to satisfy the bound condition, and hence obtain computational saving. By the triangle inequality, $\|\mathbf{x} - \hat{\mathbf{x}}_{[1]}\| \le \|\mathbf{x} - \boldsymbol{\mu}_1\| + r_1$, so, if $\|\mathbf{x} - \boldsymbol{\mu}_1\| + r_1 \le \|\mathbf{x} - \boldsymbol{\mu}_2\| - r_2$, then the bound condition is certainly satisfied. To interpret this sufficient condition, consider the case when $\mathbf{x}$ is much closer to $\boldsymbol{\mu}_1$, in which case $\|\mathbf{x} - \boldsymbol{\mu}_1\| \approx 0$ and $\|\mathbf{x} - \boldsymbol{\mu}_2\| \approx \|\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2\|$. Then we want

$$r_1 + r_2 \ll \|\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2\|$$

to ensure the bound holds. In words, the radii of the clusters (the within cluster spread) should be much smaller than the distance between clusters (the between cluster spread). A good clustering algorithm should produce exactly such clusters, with small within cluster spread and large between cluster spread.
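The bound condition translates directly into a pruning test. The sketch below handles a single level of clusters (no recursion) and generalizes the two-cluster illustration to any number of clusters visited in order of center distance; the function names are hypothetical, and in practice the centers and radii would be precomputed once rather than on every query.

```python
import math

def summarize(cluster):
    """Center (mean point) and radius (max distance from center) of a cluster."""
    d = len(cluster[0])
    center = tuple(sum(p[i] for p in cluster) / len(cluster) for i in range(d))
    radius = max(math.dist(center, p) for p in cluster)
    return center, radius

def branch_and_bound_nn(x, clusters):
    """Nearest neighbor of x; returns (point, distance, clusters_searched)."""
    summaries = [summarize(c) for c in clusters]
    # Visit clusters in order of increasing distance from x to the center.
    order = sorted(range(len(clusters)),
                   key=lambda j: math.dist(x, summaries[j][0]))
    best, best_dist, searched = None, math.inf, 0
    for j in order:
        center, radius = summaries[j]
        # Bound condition: no point in cluster j can beat the current best.
        if best_dist <= math.dist(x, center) - radius:
            continue
        searched += 1
        for p in clusters[j]:
            dist = math.dist(x, p)
            if dist < best_dist:
                best, best_dist = p, dist
    return best, best_dist, searched
```

When the clusters are tight and far apart, a query near one center searches only that cluster; when the spheres overlap, the test fails more often and the search falls back toward brute force.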

There is nothing to prevent us from applying the branch and bound technique recursively within each cluster. So, for example, to search for the nearest neighbor inside $S_1$, we can assume that $S_1$ has been partitioned into (say) two clusters $S_{11}$ and $S_{12}$, and so on.

Exercise 6.8

(a) Give an algorithmic pseudocode for the recursive branch and bound search for the nearest neighbor, assuming that every cluster with more than 2 points is split into 2 sub-clusters.

(b) Assume the sub-clusters of a cluster are balanced, i.e. contain exactly half the points in the cluster. What is the maximum depth of the recursive search for a nearest neighbor? (Assume the number of data points is a power of 2.)

(c) Assume balanced sub-clusters and that the bound condition always holds. Show that the time to find the nearest neighbor is $O(d \log N)$.

(d) How would you apply the branch and bound technique to finding the $k$-nearest neighbors as opposed to the single nearest neighbor?

The computational savings of the branch and bound algorithm depend on how likely it is that the bound condition is met, which depends on how good the partitioning is. We observe that the clusters should be balanced (so that the recursion depth is not large), well separated and have small radii (so the bound condition will often hold). A very simple heuristic for getting such a good partition into $M$ clusters is based on a clustering algorithm we will discuss later. Here is the main idea.

First create a set of $M$ well separated centers for the clusters; we recommend a simple greedy approach. Start by taking an arbitrary data point as a center. Now, as a second center, add the point furthest from the first center; to get each new center, keep adding the point furthest from the current set of centers until you have $M$ centers (the distance of a point from a set is the distance to its nearest neighbor in the set). In the example, the red point is the first (random) center added; the largest blue point is added next and so on. This greedy algorithm can be implemented in $O(MNd)$ time using appropriate data structures. The partition is inferred from the Voronoi regions of each center: all points in a center's Voronoi region belong to that center's cluster. The center of each cluster is redefined as the average data point in the cluster, and its radius is the maximum distance from the center to a point in the cluster,

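$$\boldsymbol{\mu}_j = \frac{1}{|S_j|} \sum_{\mathbf{x}_n \in S_j} \mathbf{x}_n; \qquad r_j = \max_{\mathbf{x}_n \in S_j} \|\mathbf{x}_n - \boldsymbol{\mu}_j\|.$$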

We can improve the clusters by obtaining new Voronoi regions defined by these new centers $\boldsymbol{\mu}_j$, and then updating the centers and radii appropriately for these new clusters. Naturally, we can continue this process iteratively (we introduce this algorithm later in the chapter as Lloyd's algorithm for clustering). The main goal here is computational efficiency, and the perfect partition is not necessary. The partition, centers and radii after one refinement are illustrated on the right. Note that though the clusters are disjoint, the spheres enclosing the clusters need not be.

The clusters, together with $\boldsymbol{\mu}_j$ and $r_j$, can be computed in $O(MNd)$ time. If the depth of the partitioning is $O(\log N)$, which is the case if the clusters are nearly balanced, then the total run time to compute all the clusters at every level is $O(MNd \log N)$. In Problem 6.16 you can experiment with the performance gains from such simple branch and bound techniques.
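The greedy center selection, the Voronoi partition and the center and radius update can be sketched as follows. The `dist_to_set` list is one possible reading of "appropriate data structures": it caches each point's distance to the current set of centers, so each new center costs $O(Nd)$ and the whole selection costs $O(MNd)$. The function names and the `first` argument (the index of the arbitrary starting point) are assumptions made for the illustration.

```python
import math

def greedy_centers(points, M, first=0):
    """Pick M well separated centers: repeatedly add the point furthest from the chosen set."""
    chosen = [first]
    # dist_to_set[n] = distance from point n to its nearest chosen center.
    dist_to_set = [math.dist(p, points[first]) for p in points]
    while len(chosen) < M:
        far = max(range(len(points)), key=lambda n: dist_to_set[n])
        chosen.append(far)
        dist_to_set = [min(dist_to_set[n], math.dist(points[n], points[far]))
                       for n in range(len(points))]
    return [points[i] for i in chosen]

def voronoi_partition(points, centers):
    """Assign every point to the cluster of its nearest center."""
    clusters = [[] for _ in centers]
    for p in points:
        j = min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
        clusters[j].append(p)
    return clusters

def centers_and_radii(clusters):
    """Redefine each center as the cluster mean; radius is the max distance to a member."""
    out = []
    for cluster in clusters:
        d = len(cluster[0])
        mu = tuple(sum(p[i] for p in cluster) / len(cluster) for i in range(d))
        out.append((mu, max(math.dist(mu, p) for p in cluster)))
    return out
```

Calling `voronoi_partition` again with the updated means, followed by `centers_and_radii`, gives one refinement step of the kind described above.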

6.2.4

Nearest Neighbor is Nonparametric

There is some debate in the literature on parametric versus nonparametric learning models. Having covered linear models, and nearest neighbor, we are in a position to articulate some of this discussion. We begin by identifying some of the defining characteristics of parametric versus nonparametric models.

Nonparametric methods have no explicit parameters to be learned from data; they are, in some sense, general and expressive or flexible; and, they typically store the training data, which are then used during deployment to predict on a test point. Parametric methods, on the other hand, explicitly learn certain parameters from data. These parameters specify the final hypothesis from a typically `restrictive' parameterized functional form; the learned parameters are sufficient for prediction, i.e. the parameters summarize the data, which is then not needed later during deployment.

To further highlight the distinction between these two types of techniques, consider the nearest neighbor rule versus the linear model. The nearest neighbor rule is the prototypical nonparametric method. There are no parameters to be learned. Yet, the nonparametric nearest neighbor method is flexible and general: the final decision rule can be very complex, or not, molding its shape to the data. The linear model, which we saw in Chapter 3, is the prototypical parametric method. In $d$ dimensions, there are $d + 1$ parameters (the weights and bias) which need to be learned from the data. These parameters summarize the data, and after learning, the data can be discarded: prediction only needs the learned parameters. The linear model is quite rigid, in that no matter what the data, you will always get a linear separator as $g$. Figure 6.5 illustrates this difference in flexibility on a toy example.

[Figure 6.5 panels: (a) Nonparametric NN; (b) Parametric linear.]

Figure 6.5: The decision boundary of the `flexible' nonparametric nearest neighbor rule molds itself to the data, whereas the `rigid' parametric linear model will always give a linear separator.

The $k$-nearest neighbor method would also be considered nonparametric (once the `parameter' $k$ has been specified). Theorem 6.2 is an example of a general convergence result.¹⁰ Under mild regularity conditions, no matter what the target $f$ is, we can recover it as $N \to \infty$, provided that $k$ is chosen appropriately. That's quite a powerful statement about such a simple learning model applied to learning a general $f$. Such convergence results under mild assumptions on $f$ are a trademark of nonparametric methods. This has led to the folklore that nonparametric methods are, in some sense, more powerful than their cousins the parametric methods: for the parametric linear model, only if the target $f$ happens to be in the linearly parameterized hypothesis set can one get such convergence to $f$ with larger $N$.

To complicate the distinction between the two methods, let's look at the non-linear feature transform (e.g. the polynomial transform). As the polynomial order increases, the number of parameters to be estimated increases and $\mathcal{H}$, the hypothesis set, gets more and more expressive. It is possible to choose the polynomial order to increase with $N$, but not too quickly, so that $\mathcal{H}$ gets more and more expressive, eventually capturing any fixed target, and yet the number of parameters grows slowly enough that it is possible to effectively learn them from the data. Such a model has properties of both the nonparametric and parametric settings: you still need to learn parameters (like parametric models) but the hypothesis set is getting more and more expressive so that you do get convergence to $f$. As a result, such techniques have sometimes been called parametric, and sometimes nonparametric, neither being a satisfactory adjective; we prefer the term semi-parametric. Many techniques are semi-parametric, for example Neural Networks (see Chapter ??).

10 For the technically oriented, it establishes the universal consistency of the $k$-NN rule.

In applications, the purely parametric model (like the linear model) has become synonymous with `specialized' in that you have thought very carefully about the features and the form of the model and you are confident that the specialized linear model will work. To take another example from interest rate prediction, a well known specialized parametric model is the multi-factor Vasicek model. If we had no idea what the appropriate model is for interest rate prediction, then rather than commit to some specialized model, one might use a `generic' nonparametric or semi-parametric model such as $k$-NN or neural networks. In this setting, the use of the more generic model, by being more flexible, accommodates our inability to pin down the specific form of the target function. We stress that the availability of `generic' nonparametric and semi-parametric models is not a license to stop thinking about the right features and form of the model; it is just a backstop that is available when the target is not easy to explicitly model parametrically.

6.2.5

Multiclass Data

The nearest neighbor method is an elegant platform to study an issue which, up to now, we have ignored. In our digits data, there are not 2 classes ($\pm 1$), but 10 classes ($0, 1, \ldots, 9$). This is a multiclass problem. Several approaches to the multiclass problem decompose it into a sequence of 2-class problems. For example, first classify between `1' and `not 1'; if you classify as `1' then you are done. Otherwise determine `2' versus `not 2'; this process continues until you have narrowed down the digit. Several complications arise in determining what is the best sequence of 2-class problems; and, they need not be one versus all (they can be any subset versus its complement).

The nearest neighbor method offers a simple solution. The class of the test point $\mathbf{x}$ is simply the class of its nearest neighbor $\mathbf{x}_{[1]}$. One can also generalize to the $k$-nearest neighbor rule: the class of $\mathbf{x}$ is the majority class among the $k$-nearest neighbors $\mathbf{x}_{[1]}, \ldots, \mathbf{x}_{[k]}$, breaking ties randomly.

[Figure 6.6 panels: (a) Multiclass digits data; (b) 21-NN decision regions. Axes: Average Intensity and Symmetry.]

Figure 6.6: 21-NN decision classifier for the multiclass digits data.

Exercise 6.9

With $C$ classes labeled $1, \ldots, C$, define $\pi_c(\mathbf{x}) = \mathbb{P}[c \mid \mathbf{x}]$ (the probability to observe class $c$ given $\mathbf{x}$, analogous to $\pi(\mathbf{x})$). Let $\eta(\mathbf{x}) = 1 - \max_c \pi_c(\mathbf{x})$.

(a) Define a target $f(\mathbf{x}) = \mathrm{argmax}_c\, \pi_c(\mathbf{x})$. Show that, on a test point $\mathbf{x}$, $f$ attains the minimum possible error probability of

$$e(f(\mathbf{x})) = \mathbb{P}[f(\mathbf{x}) \ne y] = \eta(\mathbf{x}).$$

(b) Show that for the nearest neighbor rule ($k = 1$), with high probability, the final hypothesis $g_N$ achieves an error on the test point $\mathbf{x}$ that is

$$e(g_N(\mathbf{x})) \xrightarrow{N \to \infty} \sum_{c=1}^{C} \pi_c(\mathbf{x})\,(1 - \pi_c(\mathbf{x})).$$

(c) Hence, show that for large enough $N$, with high probability,

$$E_{\text{out}}(g_N) \le 2E_{\text{out}}^* - \frac{C}{C-1}\,(E_{\text{out}}^*)^2.$$

[Hint: Show that $\sum_i a_i^2 \ge a_1^2 + \frac{(1-a_1)^2}{C-1}$ for any $a_1 \ge \cdots \ge a_C \ge 0$ and $\sum_i a_i = 1$.]

The previous exercise shows that even for the multiclass problem, the simple nearest neighbor is at most a factor of 2 from optimal. One can also obtain a universal consistency result as in Theorem 6.2. The result of running a 21-nearest neighbor rule on the digits data is illustrated in Figure 6.6.

The Confusion Matrix. The probability of misclassification which we discussed in the 2-class problem can be generalized to a confusion matrix, which is a better representation of the performance, especially when there are multiple classes. The confusion matrix tells us not only the probability of error, but also the nature of the error, namely which classes are confused with each other. Analogous to the misclassification error, one has the in-sample and out-of-sample confusion matrices. The in-sample (resp. out-of-sample) confusion matrix is a $C \times C$ matrix which measures how often one class is classified as another. So, the out-of-sample confusion matrix is

$$E_{\text{out}}(c_1, c_2) = \mathbb{P}[\text{class } c_1 \text{ is classified as } c_2 \text{ by } g] = \mathbb{E}_{\mathbf{x}}\big[\pi_{c_1}(\mathbf{x})\, [\![ g(\mathbf{x}) = c_2 ]\!]\big] = \int d\mathbf{x}\; P(\mathbf{x})\, \pi_{c_1}(\mathbf{x})\, [\![ g(\mathbf{x}) = c_2 ]\!],$$

where $[\![ \cdot ]\!]$ is the indicator function which equals 1 when its argument is true. Similarly, we can define the in-sample confusion matrix,

$$E_{\text{in}}(c_1, c_2) = \frac{1}{N} \sum_{n:\, y_n = c_1} [\![ g(\mathbf{x}_n) = c_2 ]\!],$$

where the sum is over all data points with classification $c_1$, and the summand counts the number of those cases that $g$ classifies as $c_2$. The out-of-sample confusion matrix obtained by running the 21-NN rule on the 10-class digits data is shown in Table 6.1. The sum of the diagonal elements gives the probability of correct classification, which is about 42%. From Table 6.1, we can easily identify some of the digits which are commonly confused, for example 8 is often classified as 0. In comparison, for the two class problem of classifying digit 1 versus all others, the success was upwards of 98%. For the multiclass problem, random performance would achieve a success rate of 10%, so the performance is significantly above random; however, it is significantly below the 2-class version; multiclass problems are typically much harder than the two class problem. Though symmetry and intensity are two features that have enough information to distinguish between 1 versus the other digits, they are not powerful enough to solve the multiclass problem. Better features would certainly help. One can also gain by breaking up the problem into a sequence of 2-class tasks and tailoring the features to each 2-class problem using domain knowledge.
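As a small illustration of the in-sample definition, the sketch below tallies the confusion matrix from true and predicted labels and also forms the row-normalized (class conditional) version. It assumes classes are labeled $0, \ldots, C-1$ as in the digits data; the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, C):
    """E_in(c1, c2): fraction of all N points with true class c1 predicted as c2."""
    N = len(y_true)
    E = [[0.0] * C for _ in range(C)]
    for t, p in zip(y_true, y_pred):
        E[t][p] += 1.0 / N
    return E

def accuracy(E):
    """Probability of correct classification: the sum of the diagonal entries."""
    return sum(E[c][c] for c in range(len(E)))

def class_conditional(E):
    """Divide each row by its sum to get P[c2 | c1]; rows with no data stay zero."""
    out = []
    for row in E:
        total = sum(row)
        out.append([v / total if total > 0 else 0.0 for v in row])
    return out
```

Because every entry is divided by $N$, the entries of `confusion_matrix` sum to 1, whereas each non-empty row of `class_conditional` sums to 1.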

                                   Predicted
True      0     1     2     3     4     5     6     7     8     9   Total
  0    13.5   0.5   0.5   1     0     0.5   0     0     0.5   0     16.5
  1     0.5  13.5   0     0     0     0     0     0     0     0     14
  2     0.5   0     3.5   1     1     1.5   1     1     0     0.5   10
  3     2.5   0     1.5   2     0.5   0.5   0.5   0.5   0.5   1      9.5
  4     0.5   0     1     0.5   1.5   0.5   1     2     0     1.5    8.5
  5     0.5   0     2.5   1     0.5   1.5   1     1     0     0.5    7.5
  6     0.5   0     2     1     1     1     1     1     0     1      8.5
  7     0     0     1.5   0.5   1.5   0.5   1     3     0     1      9
  8     3.5   0     0.5   1     0.5   0.5   0.5   0     0.5   1      8
  9     0.5   0     1     1     1     0.5   1     1     0.5   2      8.5
Total  22.5  14    14     9     7.5   7     7     9.5   2     8.5  100

Table 6.1: Out-of-sample confusion matrix for the 21-NN rule on the 10-class digits problem (all numbers are in %). The entries along the diagonal are the correct classifications. Practitioners sometimes work with the class conditional confusion matrix, which is $\mathbb{P}[c_2 \mid c_1]$, the probability that $g$ classifies $c_2$ given that the true class is $c_1$. The class conditional confusion matrix is obtained from the confusion matrix by dividing each row by the sum of the elements in the row. Sometimes the class conditional confusion matrix is easier to interpret because the ideal case is a diagonal matrix with all diagonals equal to 100%.

6.2.6

Nearest Neighbor for Regression

With multiclass data, the natural way to combine the classes of the nearest neighbors to obtain the class of a test input is by using some form of majority voting procedure. When the output is a real number ($y \in \mathbb{R}$), the natural way to combine the outputs of the nearest neighbors is using some form of averaging. The simplest way to extend the $k$-nearest neighbor algorithm to regression is to take the average of the target values from the $k$-nearest neighbors:

$$g(\mathbf{x}) = \frac{1}{k} \sum_{i=1}^{k} y_{[i]}(\mathbf{x}).$$

There are no explicit parameters being learned, and so this is a nonparametric regression technique. Figure 6.7 illustrates the $k$-NN technique for regression using a toy data set generated by the target function in light gray.

[Figure 6.7 panels: $k = 1$; $k = 3$; $k = 11$.]

Figure 6.7: $k$-NN for regression on a noisy toy data set with $N = 20$.

Exercise 6.10

You are using $k$-NN for regression (Figure 6.7).

(a) Show that $E_{\text{in}}$ is zero when $k = 1$.

(b) Why is the final hypothesis not smooth, making step-like jumps?

(c) How does the choice of $k$ control how well $g$ approximates $f$? (Consider the cases $k$ too small and $k$ too large.)

(d) How does the final hypothesis $g$ behave when $x \to \pm\infty$?

Outputting a Probability with $k$-NN. Recall that for binary classification, we took $\mathrm{sign}(g(\mathbf{x}))$ as the final hypothesis. We can also get a natural extension of the nearest neighbor technique outputting a probability. The final hypothesis is

$$g(\mathbf{x}) = \frac{1}{k} \sum_{i=1}^{k} [\![ y_{[i]} = +1 ]\!],$$

which is just the fraction of the $k$ nearest neighbors that are classified $+1$.

For both regression and logistic regression, as with classification, if $N \to \infty$ and $k \to \infty$ with $k/N \to 0$, then, under mild assumptions, you will recover a close approximation to the target function: $g(\mathbf{x}) \to f(\mathbf{x})$ (as $N$ grows). This convergence is true even if the data ($y$ values) are noisy, with bounded noise variance, because the averaging can overcome that noise when $k$ gets large.
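Both the regression average and the probability output reduce to a sort by distance followed by an average over the first $k$ entries. The following is a brute-force sketch with hypothetical function names; ties in distance are broken by index here rather than randomly, purely to keep the example deterministic.

```python
import math

def k_nearest(x, X, k):
    """Indices of the k training points closest to x (ties broken by index)."""
    return sorted(range(len(X)), key=lambda n: (math.dist(x, X[n]), n))[:k]

def knn_regress(x, X, y, k):
    """Average of the target values of the k nearest neighbors."""
    return sum(y[n] for n in k_nearest(x, X, k)) / k

def knn_probability(x, X, y, k):
    """Fraction of the k nearest neighbors with label +1."""
    return sum(1 for n in k_nearest(x, X, k) if y[n] == +1) / k
```

With $k = 1$ the regression output at a training point is that point's own target value, and as $k$ approaches $N$ the output approaches the global average of the targets.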

6.3

Radial Basis Functions

In the $k$-nearest neighbor rule, only $k$ neighbors influence the prediction at a test point $\mathbf{x}$. Rather than fix the value of $k$, the radial basis function (RBF) technique allows all data points to contribute to $g(\mathbf{x})$, but not equally. Data points further from $\mathbf{x}$ contribute less; a radial basis function (or kernel) $\phi$ quantifies how the contribution decays as the distance increases. The contribution of $\mathbf{x}_n$ to the classification at $\mathbf{x}$ will be proportional to $\phi(\|\mathbf{x} - \mathbf{x}_n\|)$, and so the properties of a typical kernel are that it is positive and non-increasing. The most popular kernel is the Gaussian kernel,

$$\phi(z) = e^{-\frac{1}{2} z^2}.$$

Another common kernel is the window kernel,

$$\phi(z) = \begin{cases} 1 & z \le 1, \\ 0 & z > 1. \end{cases}$$

It is common to normalize the kernel so that it integrates to 1 over $\mathbb{R}^d$. For the Gaussian kernel, the normalization constant is $(2\pi)^{-d/2}$; for the window kernel, the normalization constant is $\pi^{-d/2}\,\Gamma(\frac{1}{2}d + 1)$, where $\Gamma(\cdot)$ is the Gamma function. The normalization constant is not important for RBFs, but for density estimation (see later), the normalization will be needed.

One final ingredient is the scale $r$ which specifies the width of the kernel. The scale determines the `unit' of length against which distances are measured. If $\|\mathbf{x} - \mathbf{x}_n\|$ is small relative to $r$, then $\mathbf{x}_n$ will have a significant influence on the value of $g(\mathbf{x})$. We denote the influence of $\mathbf{x}_n$ at $\mathbf{x}$ by $\alpha_n(\mathbf{x})$,

$$\alpha_n(\mathbf{x}) = \phi\!\left(\frac{\|\mathbf{x} - \mathbf{x}_n\|}{r}\right).$$

The `radial' in RBF is because the influence only depends on the radial distance $\|\mathbf{x} - \mathbf{x}_n\|$. We compute $g(\mathbf{x})$ as the weighted sum of the $y$-values:

$$g(\mathbf{x}) = \frac{\sum_{n=1}^{N} \alpha_n(\mathbf{x})\, y_n}{\sum_{m=1}^{N} \alpha_m(\mathbf{x})}, \tag{6.1}$$

where the sum in the denominator ensures that the sum of the weights is 1. The hypothesis (6.1) is the direct analog of the nearest neighbor rule for regression. For classification, the final output is $\mathrm{sign}(g(\mathbf{x}))$; and, for logistic regression the output is $\theta(g(\mathbf{x}))$, where $\theta(s) = e^s/(1 + e^s)$.

The scale parameter $r$ determines the relative emphasis on nearby points. The smaller the scale parameter, the more emphasis is placed on the nearest points. Figure 6.8 illustrates how the final hypothesis depends on $r$ and the next exercise shows that when $r \to 0$, the RBF is the nearest neighbor rule.
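Equation (6.1) with the Gaussian kernel can be written as a short function. One implementation detail is an assumption of this sketch and not part of the text: every $\alpha_n$ is divided by the largest one before summing, which leaves the ratio in (6.1) unchanged but avoids a 0/0 when $r$ is so small that all the kernel values underflow. The name `rbf_predict` is hypothetical.

```python
import math

def rbf_predict(x, X, y, r):
    """Nonparametric RBF with the Gaussian kernel: weighted average of y-values (eq. 6.1)."""
    z2 = [(math.dist(x, xn) / r) ** 2 for xn in X]
    z2_min = min(z2)
    # Dividing every alpha_n by the largest alpha leaves the ratio unchanged
    # and avoids 0/0 underflow when r is very small.
    alpha = [math.exp(-0.5 * (v - z2_min)) for v in z2]
    return sum(a * yn for a, yn in zip(alpha, y)) / sum(alpha)
```

For a very small scale the output is numerically the target of the nearest data point, and for a very large scale it is close to the plain average of all the targets, matching the roles of small and large $r$ described above.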

Exercise 6.11

When $r \to 0$, show that for the Gaussian kernel, the RBF final hypothesis is $g(\mathbf{x}) = y_{[1]}$, the same as the nearest neighbor rule.

[Hint: $g(\mathbf{x}) = \dfrac{\sum_{n=1}^{N} y_{[n]}\, \alpha_{[n]}/\alpha_{[1]}}{\sum_{m=1}^{N} \alpha_{[m]}/\alpha_{[1]}}$ and show that $\alpha_{[n]}/\alpha_{[1]} \to 0$ for $n \ne 1$.]

As $r$ gets large, the kernel width gets larger and more of the data points contribute to the value of $g(\mathbf{x})$. As a result, the final hypothesis gets smoother. The scale parameter $r$ plays a similar role to the number of neighbors $k$ in the $k$-NN rule; in fact, the RBF technique with the window kernel is sometimes called the $r$-nearest neighbor rule, because it uniformly weights all neighbors within distance $r$ of $\mathbf{x}$. One way to choose $r$ is using cross validation. Another is to use Theorem 6.2 as a guide. Roughly speaking, the neighbors within distance $r$ of $\mathbf{x}$ contribute to $g(\mathbf{x})$. The volume of this region is order of $r^d$, and so the number of points in this region is order of $N r^d$; thus, using scale parameter $r$ effectively corresponds to using a number of neighbors $k$ that is order of $N r^d$. Since we want $k/N \to 0$ and $k \to \infty$ as $N$ grows, one effective choice for $r$ is $(\sqrt[2d]{N})^{-1}$, which corresponds to $k \approx \sqrt{N}$.

[Figure 6.8 panels: $r = 0.01$; $r = 0.05$; $r = 0.5$.]

Figure 6.8: The nonparametric RBF using the same noisy data from Figure 6.7. Too small an $r$ results in a complex hypothesis that overfits. Too large an $r$ results in an excessively smooth hypothesis that underfits.
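The arithmetic behind this choice of scale can be spelled out in one line. The derivation below treats the constants hidden in "order of" as 1, which is an assumption made only to keep the calculation short.

```latex
r = N^{-\frac{1}{2d}}
\quad\Longrightarrow\quad
k \approx N r^{d} = N \cdot N^{-\frac{1}{2}} = \sqrt{N},
\qquad
\frac{k}{N} \approx \frac{1}{\sqrt{N}} \to 0
\quad\text{and}\quad
k \approx \sqrt{N} \to \infty
\quad\text{as } N \to \infty .
```

So the effective number of neighbors grows without bound while remaining a vanishing fraction of the data, which are the two requirements taken from Theorem 6.2.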

6.3.1

Radial Basis Function Networks

There are two ways to interpret the RBF in equation (6.1). The first is as a weighted sum of $y$-values as presented in equation (6.1), where the weights are $\alpha_n$. This corresponds to centering a single kernel, or a bump, at $\mathbf{x}$. This bump decays as you move away from $\mathbf{x}$ and the value the bump attains at $\mathbf{x}_n$ determines the weight $\alpha_n$ (see Figure 6.9(a)). The alternative view is to rewrite Equation (6.1) as

$$g(\mathbf{x}) = \sum_{n=1}^{N} w_n(\mathbf{x})\, \phi\!\left(\frac{\|\mathbf{x} - \mathbf{x}_n\|}{r}\right), \tag{6.2}$$

where $w_n(\mathbf{x}) = y_n \big/ \sum_{i=1}^{N} \phi(\|\mathbf{x} - \mathbf{x}_i\|/r)$. This version corresponds to centering a bump at every $\mathbf{x}_n$. The bump at $\mathbf{x}_n$ has a height $w_n(\mathbf{x})$. As you move away from $\mathbf{x}_n$, the bump decays, and the value the bump attains at $\mathbf{x}$ is the contribution of $\mathbf{x}_n$ to $g(\mathbf{x})$ (see Figure 6.9(b)).

[Figure 6.9 panels: (a) Bump centered on $\mathbf{x}$; (b) Bumps centered on $\mathbf{x}_n$.]

Figure 6.9: Different views of the RBF. (a) $g(\mathbf{x})$ is a weighted sum of $y_n$ with weights $\alpha_n$ determined by a bump centered on $\mathbf{x}$. (b) $g(\mathbf{x})$ is a sum of bumps, one on each $\mathbf{x}_n$ having height $w_n$.

With the second interpretation, $g(\mathbf{x})$ is the sum of $N$ bumps of different heights, where each bump is centered on a data point. The width of each bump is determined by $r$. The height of the bump centered on $\mathbf{x}_n$ is $w_n(\mathbf{x})$, which varies depending on the test point. If we fix the bump heights to $w_n$, independent of the test point, then the same bumps centered on the data points apply to every test point $\mathbf{x}$ and the functional form simplifies. Define $\Phi_n(\mathbf{x}) = \phi(\|\mathbf{x} - \mathbf{x}_n\|/r)$. Then our hypotheses have the form

$$h(\mathbf{x}) = \sum_{n=1}^{N} w_n \Phi_n(\mathbf{x}), \tag{6.3}$$

where now $w_n$ are constants (parameters) that we get to choose. We switched over to $h(\mathbf{x})$ because as of yet, we do not know what the final hypothesis $g$ is until we specify the weights $w_1, \ldots, w_N$, whereas in (6.2), the weights $w_n(\mathbf{x})$ are specified. Observe that (6.3) is just a linear model $h(\mathbf{x}) = \mathbf{w}^{\mathrm{t}} \mathbf{z}$ with the nonlinear transform to an $N$-dimensional space given by

$$\mathbf{z} = \Phi(\mathbf{x}) = \begin{bmatrix} \Phi_1(\mathbf{x}) \\ \vdots \\ \Phi_N(\mathbf{x}) \end{bmatrix}.$$

The nonlinear transform is determined by the kernel $\phi$ and the data points $\mathbf{x}_1, \ldots, \mathbf{x}_N$. Since the nonlinear transform is obtained by placing a kernel bump on each data point, these nonlinear transforms are often called local basis functions. Notice that the nonlinear transform is not known ahead of time. It is only known once the data points are seen.

In the nonparametric RBF, the weights $w_n(\mathbf{x})$ were specified by the data and there was nothing to learn. Now that $w_n$ are parameters, we need to learn their values (the new technique is parametric). As we did with linear models, the natural way to set the parameters $w_n$ is to fit the data by minimizing the in-sample error. Since we have $N$ parameters, we should be able to fit the data exactly. Figure 6.10 illustrates the parametric RBF as compared to the nonparametric RBF, highlighting some of the differences between taking $g(\mathbf{x})$ as the weighted sum of $y$-values scaled by a bump at $\mathbf{x}$ versus the sum of fixed bumps at each data point $\mathbf{x}_n$.

[Figure 6.10 panels: (a) Nonparametric RBF, small $r$ ($r = 0.1$); (b) Nonparametric RBF, large $r$ ($r = 0.3$); (c) Parametric RBF, small $r$ ($r = 0.1$); (d) Parametric RBF, large $r$ ($r = 0.3$). Axes: $x$ and $y$.]

Figure 6.10: (a),(b) The nonparametric RBF; (c),(d) The parametric RBF. The toy data set has 3 points. The width $r$ controls the smoothness of the hypothesis. The nonparametric RBF is step-like with large $|x|$ behavior determined by the target values at the peripheral data; the parametric RBF is bump-like with large $|x|$ behavior that converges to zero.

Exercise 6.12

(a) For the Gaussian kernel, what is $g(\mathbf{x})$ as $\|\mathbf{x}\| \to \infty$ for the nonparametric RBF versus for the parametric RBF with fixed $w_n$?

(b) Let $Z$ be the square feature matrix defined by $Z_{nj} = \Phi_j(\mathbf{x}_n)$. Assume $Z$ is invertible. Show that $g(\mathbf{x}) = \mathbf{w}^{\mathrm{t}} \Phi(\mathbf{x})$, with $\mathbf{w} = Z^{-1}\mathbf{y}$, exactly interpolates the data points. That is, $g(\mathbf{x}_n) = y_n$, giving $E_{\text{in}}(g) = 0$.

(c) Does the nonparametric RBF always have $E_{\text{in}} = 0$?

The parametric RBF has $N$ parameters $w_1, \ldots, w_N$, ensuring we can always fit the data. When the data has stochastic or deterministic noise, this means we will overfit. The root of the problem is that we have too many parameters, one for each bump. Solution: restrict the number of bumps to $k \ll N$. If we restrict the number of bumps to $k$, then only $k$ weights $w_1, \ldots, w_k$ need to be learned. Naturally, we will choose the weights that best fit the data.

Where should the bumps be placed? Previously, with $N$ bumps, this was not an issue since we placed one bump on each data point. Though we cannot place a bump on each data point, we can still try to choose the bump centers so that they represent the data points as closely as possible. Denote the centers of the bumps by $\boldsymbol{\mu}_1, \ldots, \boldsymbol{\mu}_k$. Then, a hypothesis has the parameterized form

$$h(\mathbf{x}) = w_0 + \sum_{j=1}^{k} w_j\, \phi\!\left(\frac{\|\mathbf{x} - \boldsymbol{\mu}_j\|}{r}\right) = \mathbf{w}^{\mathrm{t}} \Phi(\mathbf{x}), \tag{6.4}$$

where $\Phi(\mathbf{x})^{\mathrm{t}} = [1, \Phi_1(\mathbf{x}), \ldots, \Phi_k(\mathbf{x})]$ and $\Phi_j(\mathbf{x}) = \phi(\|\mathbf{x} - \boldsymbol{\mu}_j\|/r)$. Observe that we have added back the bias term $w_0$.¹¹ For the nonparametric RBF or the parametric RBF with $N$ bumps, we did not need the bias term. However, when you only have a small number of bumps and no bias term, the learning gets distorted if the $y$-values have non-zero mean (as is also the case if you do linear regression without the constant term).

The unknowns that one has to learn by fitting the data are the weights $w_0, w_1, \ldots, w_k$ and the bump centers $\boldsymbol{\mu}_1, \ldots, \boldsymbol{\mu}_k$ (which are vectors in $\mathbb{R}^d$). The model we have specified is called the radial basis function network (RBF-network). We can get the RBF-network model for classification by taking the sign of the output signal, and for logistic regression we pass the output signal through the sigmoid $\theta(s) = e^s/(1 + e^s)$.

A useful graphical representation of the model as a `feed forward' network is illustrated. There are several important features that are worth discussing. First, the hypothesis set is very similar to a linear model except that the transformed features $\Phi_j(\mathbf{x})$ can depend on the data set (through the choice of the $\boldsymbol{\mu}_j$ which are chosen to fit the data). In the linear model of Chapter 3, the transformed features were fixed ahead of time. Because the $\boldsymbol{\mu}_j$ appear inside a nonlinear function, this is our first model that is not linear in its parameters. It is linear in the $w_j$, but nonlinear in the $\boldsymbol{\mu}_j$. It turns out that allowing the basis functions to depend on the data adds significant power to this model over the linear model. We discuss this issue in more detail later, in Chapter ??.

[Figure: feed-forward network. The input $\mathbf{x}$ feeds $k$ nodes computing $\phi(\|\mathbf{x} - \boldsymbol{\mu}_j\|/r)$ for $j = 1, \ldots, k$, alongside a constant node 1; the node outputs are weighted by $w_1, \ldots, w_k$ and $w_0$ and summed (+) to give $h(\mathbf{x})$.]

Other than the parameters wj and µj whi h are hosen to t the data,

there are two high-level parameters k and r whi h spe ify the nature of the

hypothesis set. These parameters ontrol two aspe ts of the hypothesis set

that were dis ussed in Chapter 5, namely the size of a hypothesis set (quantied by k, the number of bumps) and the omplexity of a single hypothesis

(quantied by r, whi h determines how `wiggly' an individual hypothesis is).

11 An alternative notation for the s ale parameter 1/r is γ , and for the bias term w is b

0

(often used in the ontext of SVM, see Chapter ??).

28

6. Similarity-Based Methods

6.3. Radial Basis Fun tions

It is important to choose a good value of $k$ to avoid overfitting (too high a $k$) or underfitting (too low a $k$). A good strategy for choosing $k$ is using cross validation. For a given $k$, a good simple heuristic for setting $r$ is

$$r = \frac{R}{k^{1/d}},$$

where $R = \max_{i,j} \|x_i - x_j\|$ is the 'diameter' of the data. The rationale for this choice of $r$ is that $k$ spheres of radius $r$ have a volume equal to $c_d\, k\, r^d = c_d R^d$, which is about the volume of the data ($c_d$ is a constant depending only on $d$). So, the $k$ bumps can 'cover' the data with this choice of $r$.
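The heuristic can be sketched in a few lines of NumPy. The function name `heuristic_radius` and the toy data are hypothetical. The diameter is computed by brute force over all pairs, which is fine for small $N$ but uses $O(N^2 d)$ memory.

```python
import numpy as np

def heuristic_radius(X, k):
    """Return r = R / k**(1/d), where R is the largest pairwise distance in X."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # treat a flat array as N one-dimensional inputs
    d = X.shape[1]
    diffs = X[:, None, :] - X[None, :, :]        # shape (N, N, d)
    R = np.sqrt((diffs ** 2).sum(axis=2)).max()  # the 'diameter' of the data
    return R / k ** (1.0 / d)

# Toy data: the farthest pair is (0,0) and (3,4), so R = 5; with d = 2 and k = 4, r = 5/2.
X = np.array([[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]])
r = heuristic_radius(X, k=4)
```

For one-dimensional inputs whose diameter is 1, the heuristic reduces to $r = 1/k$.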

6.3.2 Fitting the Data

To complete our specification of the RBF-network model, we need to discuss how to fit the data. For a given $k$ and $r$, to obtain our final hypothesis $g$, we need to determine the centers $\mu_j$ and the weights $w_j$. In addition to the weights, we appear to have an abundance of parameters in the centers $\mu_j$. Fortunately, we will determine the centers by choosing them to represent the inputs $x_1, \ldots, x_N$ without reference to the $y_1, \ldots, y_N$. The heavy lifting to fit the $y_n$ will be done by the weights, so if there is overfitting, it is mostly due to the flexibility to choose the weights, not the centers.

For a given set of centers $\mu_j$, the hypothesis is linear in the $w_j$; we can therefore use our favorite algorithm from Chapter 3 to fit the weights if the centers are fixed. The algorithm is summarized as follows.

Fitting the RBF-network to the data (given $k$, $r$):

1: Using the inputs $X$, determine $k$ centers $\mu_1, \ldots, \mu_k$.

2: Compute the $N \times (k + 1)$ feature matrix $Z$:

$$Z_{n,0} = 1, \qquad Z_{n,j} = \Phi_j(x_n) = \phi\!\left(\frac{\|x_n - \mu_j\|}{r}\right).$$

Each row of $Z$ is the RBF-feature corresponding to $x_n$ (where we have the dummy bias coordinate $Z_{n,0} = 1$).

3: Fit the linear model $Zw$ to $y$ to determine the weights $w^*$.
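Step 2 of the algorithm might look as follows in NumPy. This is a sketch under two assumptions: the centers have already been chosen by some method, and the kernel is the Gaussian $\phi(z) = e^{-z^2/2}$. The function name `rbf_feature_matrix` is illustrative only.

```python
import numpy as np

def rbf_feature_matrix(X, centers, r, phi=lambda z: np.exp(-0.5 * z ** 2)):
    """Build the N x (k+1) matrix Z with Z[n,0] = 1 and Z[n,j] = phi(||x_n - mu_j|| / r)."""
    X = np.asarray(X, dtype=float)              # shape (N, d)
    centers = np.asarray(centers, dtype=float)  # shape (k, d)
    # Pairwise distances ||x_n - mu_j||, shape (N, k).
    dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
    ones = np.ones((X.shape[0], 1))             # dummy bias coordinate
    return np.hstack([ones, phi(dists / r)])

# Toy example: N = 3 one-dimensional inputs, k = 2 centers (made-up values).
X = np.array([[0.0], [0.5], [1.0]])
centers = np.array([[0.0], [1.0]])
Z = rbf_feature_matrix(X, centers, r=0.5)
```

The resulting `Z` can be handed unchanged to any linear-model routine for step 3.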

We briefly elaborate on step 3 in the above algorithm.

For classification, you can use one of the algorithms for linear models from Chapter 3 to find a set of weights $w$ that separate the positive points in $Z$ from the negative points; the linear programming algorithm in Problem 3.6 would also work well.

For regression, you can use the analytic pseudo-inverse algorithm

$$w^* = Z^\dagger y = (Z^{\mathrm{t}} Z)^{-1} Z^{\mathrm{t}} y,$$


where the last expression is valid when $Z$ has full column rank. Recall also the discussion about overfitting from Chapter 4. It is prudent to use regularization when there is stochastic or deterministic noise. With regularization parameter $\lambda$, the solution becomes $w^* = (Z^{\mathrm{t}} Z + \lambda I)^{-1} Z^{\mathrm{t}} y$; the regularization parameter can be selected with cross validation.

For logistic regression, you can obtain $w^*$ by minimizing the logistic regression cross entropy error, using, for example, gradient descent. More details can be found in Chapter 3.

In all cases, the meat of the RBF-network is in determining the centers $\mu_j$ and computing the radial basis feature matrix $Z$. From that point on, it is a straightforward linear model.
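For the regression case, the weight fit can be sketched as below, taking a feature matrix $Z$ as given. The function name `fit_weights` and the toy numbers are assumptions for illustration. Following the formula in the text, the penalty $\lambda I$ is applied to every weight, including the bias.

```python
import numpy as np

def fit_weights(Z, y, lam=0.0):
    """Return w* solving (Z^t Z + lam * I) w = Z^t y; pseudo-inverse when lam == 0."""
    Z = np.asarray(Z, dtype=float)
    y = np.asarray(y, dtype=float)
    if lam == 0.0:
        # Unregularized: w* = pinv(Z) y, which also copes with rank-deficient Z.
        return np.linalg.pinv(Z) @ y
    A = Z.T @ Z + lam * np.eye(Z.shape[1])
    # Solving the linear system avoids forming an explicit inverse.
    return np.linalg.solve(A, Z.T @ y)

# Toy feature matrix (bias column plus one feature) with targets y = 1 + 1 * feature.
Z = np.array([[1.0, 1.0], [1.0, 0.5], [1.0, 0.0]])
y = np.array([2.0, 1.5, 1.0])
w_plain = fit_weights(Z, y)            # recovers the weights (1, 1) exactly
w_ridge = fit_weights(Z, y, lam=0.05)  # shrunk toward zero by the penalty
```

In practice `lam` would be chosen by cross validation, as the text suggests.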

[Figure 6.11 panels: (a) $k = 4$; (b) $k = 10$; (c) $k = 10$, regularized. Horizontal axis $x$, vertical axis $y$.]

Figure 6.11: The parametric RBF-network using the same noisy data from Figure 6.7. (a) Small $k$. (b) Large $k$ results in overfitting. (c) Large $k$ with regularized linear fitting of weights ($\lambda = 0.05$). The centers $\mu_j$ are the gray shaded circles and $r = 1/k$. The target function is the light gray curve.

Figure 6.11 illustrates the RBF-network on the noisy data from Figure 6.7. We (arbitrarily) selected the centers $\mu_j$ by partitioning the data points into $k$ equally sized groups and taking the average data point in each group as a center. When $k = 4$, one recovers a decent fit to $f$ even though our centers were chosen somewhat arbitrarily. When $k = 10$, there is clearly overfitting, and regularization is called for.
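One plausible reading of this center-selection rule for one-dimensional inputs is sketched below. The text does not say how the groups were formed, so sorting the inputs and cutting them into consecutive chunks is an assumption, as are the function name `centers_by_equal_groups` and the toy data.

```python
import numpy as np

def centers_by_equal_groups(x, k):
    """Sort 1-D inputs, split them into k nearly equal groups, and return each group's mean.

    Assumes k <= len(x) so that no group is empty.
    """
    x_sorted = np.sort(np.asarray(x, dtype=float))
    groups = np.array_split(x_sorted, k)  # group sizes differ by at most one
    return np.array([g.mean() for g in groups])

# Toy inputs (made-up values): 8 points split into 4 groups of 2.
x = np.array([0.9, 0.1, 0.4, 0.0, 0.6, 0.5, 1.0, 0.3])
centers = centers_by_equal_groups(x, k=4)
```

Only the inputs are used here; the targets $y_n$ play no role in placing the centers.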

Given the centers, fitting an RBF-network reduces to a linear problem, for which we have good algorithms. We now address this first step, namely how to determine these centers. Determining the optimal bump centers is the hard part, because this is where the model becomes essentially nonlinear. Luckily, the physical interpretation of this hypothesis set as a set of bumps centered on the $\mu_j$ helps us. When there were $N$ bumps (the nonparametric case), the natural choice was to place one bump on top of each data point. Now that there are only $k \ll N$ bumps, we should still choose the bump centers to closely represent the inputs in the data (we only need to match the $x_n$, so we do not need to know the $y_n$). This is an unsupervised learning problem; in fact, a very important one.


One way to formulate the task is to require that no $x_n$ be far away from a bump center. The $x_n$ should cluster around the centers, with each center $\mu_j$ representing one cluster. This is known as the $k$-center problem, known to be NP-hard. Nevertheless, we need a computationally efficient way to partition