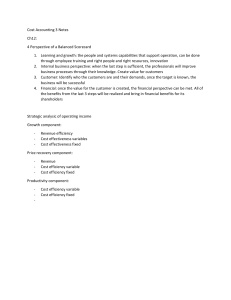

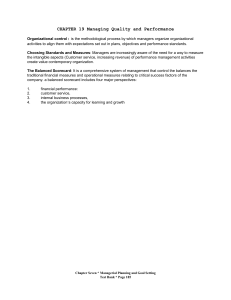

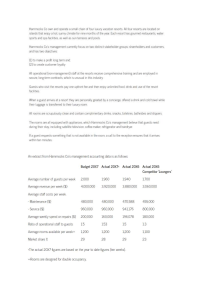

Subjectivity & Balanced Scorecard: Performance Measure Weighting
