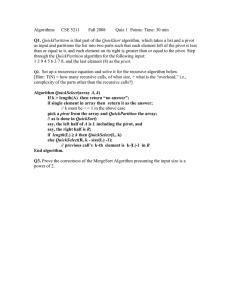

University of Management and Technology Data Structures and Algorithms Name: _____________ Id: ________________ Question no.1: Explain how a hash table works and discuss how collisions are handled using methods like chaining and open addressing. What are the trade-offs of each approach? Answer: A hash table is a data structure that maps keys to values using a hash function to compute an index into an array, where the value is stored. Collision Handling Methods 1-) Chaining: Stores all elements that hash to the same index in a linked list (or another secondary structure). Trade-offs Easy to implement and handles dynamic resizing well. Performance degrades if the hash function produces too many collisions (leading to long chains). 2-) Open Addressing: Finds an open slot within the hash table array itself to resolve collisions (e.g., linear probing, quadratic probing, or double hashing). Trade-offs Avoids the overhead of extra data structures. Can lead to clustering and higher probing costs if the table becomes full. Trade off comparison Approach Space Efficiency Time Complexity Notes Chaining O(n+m) Open Addressing O(m) O(1) on average, O(n) in the worst case O(1) on average, O(m) on worst case Handles high load factors better. Requires careful resizing. Question no.2: What is the difference between a min-heap and a max-heap? How would you use a heap to implement a priority queue? Answer: Min-Heap: The root node has the smallest value. Every parent node is smaller than or equal to its child nodes. Max-Heap: The root node has the largest value. Every parent node is larger than or equal to its child nodes. Using Heaps for a Priority Queue: Min-Heap: Implements a priority queue where the element with the lowest priority value is dequeued first. Max-Heap: Implements a priority queue where the element with the highest priority value is dequeued first. Operations: Insert: Insert an element and adjust the heap using heapify-up. Extract-Min/Max: Remove the root and reheapify the structure. Question no.3: Describe how QuickSort works. What are its average and worst-case time complexities, and how can the worst-case scenario be avoided? Answer: QuickSort is a divide-and-conquer sorting algorithm: 1. Select a pivot element from the array. 2. Partition the array so that elements smaller than the pivot are placed on the left, and elements larger are on the right. 3. Recursively apply QuickSort to the left and right subarrays. Time Complexities: Average Case: O(n log n) Worst Case: O(n²) (occurs when the pivot divides the array poorly, e.g., already sorted array with the first/last element as pivot). Avoiding the Worst Case: Use a random pivot or the median-of-three pivot selection strategy to ensure balanced partitions. Question no.4: What is the difference between a singly linked list, a doubly linked list, and a circular linked list? In which scenarios would each be most suitable? Answer: 1-) Singly Linked List: Each node points to the next node. Suitable for stack implementations or scenarios requiring sequential access. 2-) Doubly Linked List: Each node points to both its previous and next nodes. Suitable for bidirectional traversal, e.g., implementing a LRU cache. 3-) Circular Linked List: The last node points back to the first node, forming a loop. Suitable for cyclic tasks, e.g., round-robin scheduling.