Digital Logic Design Course Material: Number Systems & Codes

APSC 262 Digital Logic Design

Dr. Ayman Elnaggar, Ph.D., P.Eng.

School of Engineering, Faculty of Applied Science

The University of British Columbia

Office: EME 4261

Tel: 250-807-8198

Email:ayman.elnaggar@ubc.ca

TEXTBOOK

Chapter 1 Digital Systems and Binary Numbers

Chapter 2 Boolean Algebra and Logic Circuits

Chapter 3 Gate-Level Minimization

Chapter 4 Combinational Logic

Chapter 5 Synchronous Sequential Logic

Chapter 6 Register, Counters, and FSM

Chapter 7 Memory & Programmable Logic

Digital Design with an Introduction

to Verilog HDL, 5th edition.

MODULE 1.0

1.1 Number Representation

1.1.1 Binary numbers

1.1.2 Octal numbers

1.1.3 Hexadecimal numbers

1.1.4 Number conversion

1.1.5 Addition of unsigned integers

1.2 Signed Integers

1.2.1 Number representation of signed integers

1.2.2 Addition and subtraction of signed numbers (2’s Complement)

1.3 Binary Codes

1.3.1 Binary Coded Decimal (BCD) numbers

1.3.2 ASCII code

1.3.3 Parity bit

1.0 Number Systems.

In this module, we will study arithmetic operations and develop digital logic circuits to implement the operations.

1.1 Unsigned Numbers.

We often take numbers for granted. We use decimal numbers and routinely carry out arithmetic operations using the 10 digits (0, 1, 2, …, 9). With digital logic design, it becomes necessary to generalize the steps used for arithmetic operations and apply them in other number systems.

Weighted Number Systems

A decimal number D consists of n digits and each digit has a position. Every digit position is associated with a fixed weight. If the weight associated with the i-th position is w_i, then the value of D is given by:

D = d_{n-1} w_{n-1} + d_{n-2} w_{n-2} + … + d_1 w_1 + d_0 w_0

This is also called a positional number system. For example, 9375 = 9×10^3 + 3×10^2 + 7×10^1 + 5×10^0.

Decimal (Radix) Point

A number D has n integral digits and m fractional digits. Digits to the left of the radix point (integral digits) have non-negative position indices (0 to n-1), while digits to the right of the radix point (fractional digits) have negative position indices (-1 to -m).

The weight for a digit position i is given by w_i = r^i

The Radix (Base) of a number

• A digit d_i has a weight which is a power of some constant value called the radix (r) or base, such that w_i = r^i.

• A number D with base r can be denoted as (D)r.

o Decimal number 128 can be written as (128)10

• A number system of radix r has r allowed digits {0, 1, …, (r-1)}

• The leftmost digit has the highest weight and is called the Most Significant Digit (MSD)

• The rightmost digit has the lowest weight and is called the Least Significant Digit (LSD)

• The largest value that can be expressed in n digits is (r^n - 1)

o For example, the largest decimal number (r=10) that can be written in 3 digits (n=3) is 10^3 - 1 = 999.

Are these valid numbers?

• (9478)10 → Yes, digits between 0 and 9

• (1289)2 → No, for base 2 only 0’s and 1’s

• (111000)2 → Yes

• (55)5 → No, for base 5 only digits 0 to 4

We are going to consider the following number systems:

Base-2 (binary), Base-8 (octal), Base-10 (decimal), and Base-16 (hexadecimal).

To avoid ambiguity in representing numbers, we can explicitly express the base of an integer with a subscript on

the number (written in parentheses). For example, (123)10 , (1111011)2 , (753)8.

We will begin with a look at number representation for the simplest system of unsigned numbers, with no

positive or negative sign.

Binary Numbers (Converting Binary to Decimal)

Consider the base-2 (binary) system. An unsigned binary integer with n binary digits (or bits) will have each bit

be 0 or 1. We can also talk about groups of bits: a group of four bits is a nibble; a group of eight bits is a byte.

We can express all the bits in a positional number representation:

B = b_{n-1} b_{n-2} … b_1 b_0 . b_{-1} b_{-2} …

V(B) = b_{n-1}×2^{n-1} + b_{n-2}×2^{n-2} + … + b_1×2^1 + b_0×2^0 + b_{-1}×2^{-1} + b_{-2}×2^{-2} + …

For example, to convert the binary number (1111011)2 to decimal, we can write it as

1×2^6 + 1×2^5 + 1×2^4 + 1×2^3 + 0×2^2 + 1×2^1 + 1×2^0 = (123)10

For an unsigned integer with n bits, we will be able to express integers in the range from 0 to 2^n - 1.

Convert the binary number (101.01)2 to decimal:

1×2^2 + 0×2^1 + 1×2^0 + 0×2^-1 + 1×2^-2 = (5.25)10
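The weighted-sum evaluation above can be sketched in a few lines of Python. This is only an illustration; the function name positional_value and its parameters are assumptions made for this sketch and are not part of the course material.

```python
def positional_value(digits: str, radix: int = 2) -> float:
    """Evaluate a digit string such as '101.01' in the given radix (2 to 16)."""
    integral, _, fractional = digits.partition(".")
    value = 0.0
    # Integral digits carry the weights radix**0, radix**1, ... from right to left.
    for i, ch in enumerate(reversed(integral)):
        value += int(ch, radix) * radix ** i
    # Fractional digits carry the weights radix**-1, radix**-2, ... from left to right.
    for j, ch in enumerate(fractional, start=1):
        value += int(ch, radix) * radix ** -j
    return value


print(positional_value("1111011"))  # binary integer example from the text
print(positional_value("101.01"))   # binary fraction example from the text
```

Because int(ch, radix) rejects digits that are not allowed in the radix, a string such as "1289" in base 2 raises a ValueError, which mirrors the validity check discussed earlier.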

The Power of 2

Kilo (K) = 2^10, Mega (M) = 2^20, Giga (G) = 2^30, Tera (T) = 2^40

Conversion from decimal to binary number. Integer conversion to the binary number system from other number

systems involves division, while monitoring remainders. Fortunately, this process of division and monitoring

remainders can be carried out in a well-structured algorithm.

We take the original decimal integer and divide by 2 repeatedly, while listing our successive integer answers below the current integer, in a column. The remainders are recorded in an adjacent column. Digits (bits) in the remainder column form the final answer: the first remainder (top) is the LSB and the last remainder (bottom) is the MSB, so the answer is read from bottom to top. Consider these examples:

(184)10

Division    Quotient   Remainder
184 / 2     92         0   (LSB)
 92 / 2     46         0
 46 / 2     23         0
 23 / 2     11         1
 11 / 2      5         1
  5 / 2      2         1
  2 / 2      1         0
  1 / 2      0         1   (MSB)

(184)10 = (10111000)2

Convert (789.625)10 to binary.

1. Integer part: (789)10

Division    Quotient   Remainder
789 / 2     394        1   (LSB)
394 / 2     197        0
197 / 2      98        1
 98 / 2      49        0
 49 / 2      24        1
 24 / 2      12        0
 12 / 2       6        0
  6 / 2       3        0
  3 / 2       1        1
  1 / 2       0        1   (MSB)

(789)10 = (1100010101)2

2. Fraction part:

o Multiply the fraction part (0.625) by the ‘Base’ (=2)

o Take the integer (either 0 or 1) as a coefficient

o Take the resultant fraction and repeat the multiplication

Multiplication   Integer   Fraction   Coefficient
0.625 × 2 =      1         .25        a_{-1} = 1   (MSB)
0.25 × 2 =       0         .5         a_{-2} = 0
0.5 × 2 =        1         .0         a_{-3} = 1   (LSB)

(0.625)10 = (0.a_{-1} a_{-2} a_{-3})2 = (0.101)2

Grouping both parts → (789.625)10 = (1100010101.101)2

The method can be generalized to convert any decimal number (base 10) to any other base system such as

base 8 (divide by 8 instead) or hexadecimal (divide by 16 instead).
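The repeated-division and repeated-multiplication procedures can be sketched as follows. This is a minimal sketch, not a definitive implementation: the names int_to_base, frac_to_base, DIGITS, and the max_digits cut-off are assumptions introduced for illustration. The cut-off is there because many decimal fractions do not terminate in binary.

```python
DIGITS = "0123456789ABCDEF"  # digit symbols for bases up to 16


def int_to_base(n: int, base: int = 2) -> str:
    """Convert a non-negative integer by repeated division, collecting remainders."""
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        n, r = divmod(n, base)
        remainders.append(DIGITS[r])       # the first remainder is the LSB
    return "".join(reversed(remainders))   # read from the last remainder (MSB) back


def frac_to_base(frac: float, base: int = 2, max_digits: int = 12) -> str:
    """Convert a fraction in [0, 1) by repeated multiplication, collecting integer parts."""
    coefficients = []
    while frac > 0 and len(coefficients) < max_digits:
        frac *= base
        integer = int(frac)                # coefficient a_{-1}, a_{-2}, ...
        coefficients.append(DIGITS[integer])
        frac -= integer                    # keep only the resultant fraction
    return "".join(coefficients)


print(int_to_base(184))
print(int_to_base(789) + "." + frac_to_base(0.625))
print(int_to_base(789, 8), int_to_base(789, 16))
```

Passing base=8 or base=16 applies the same steps with a different divisor, as described in the generalization above.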

Binary Coding

Digital systems use signals that have two distinct values and circuit elements that have two stable states. A binary

number of n digits, for example, may be represented by n binary circuit elements, each having an output signal

equivalent to 0 or 1.

Digital systems represent and manipulate not only binary numbers, but also many other discrete elements of

information (image, video, audio, music, etc.). Any discrete element of information that is distinct among a group

of quantities can be represented with a binary code (i.e., a pattern of 0’s and 1’s). For example, suppose we want to code the 256 possible colors of the pixels in an image. In this case we need an 8-bit code. We can say 00000000 will code white, 00000001 will code blue, 00000010 will code yellow, and so on up to 11111111 for black.

The codes must be in binary because, in today’s technology, only circuits that represent and manipulate patterns

of 0’s and 1’s can be manufactured economically for use in computers. However, it must be realized that binary

codes merely change the symbols, not the meaning of the elements of information that they represent. If we

inspect the bits of a computer at random, we will find that most of the time they represent some type of coded

information rather than binary numbers.

An n‐bit binary code is a group of n bits that assumes up to 2^n distinct combinations of 1’s and 0’s, with each combination representing one element of the set that is being coded (color, amplitude, brightness, intensity, etc.). A set of four elements can be coded with two bits, with each element assigned one of the following bit combinations: 00, 01, 10, 11. A set of eight elements requires a three‐bit code and a set of 16 elements requires a four‐bit code (in general, a set of m elements requires at least ⌈log2 m⌉ bits).

1.3.1 Binary Coded Decimal (BCD)

BCD is used when decimal input is applied to a digital circuit. In this case we have 10 different digits (n = 10) that we would like to code in binary. Since log2 10 ≈ 3.32, we round up and use 4 bits to represent each of the 10 decimal digits from 0 to 9. However, 4 bits can also represent the values 10, 11, …, 15; these combinations are not used in BCD, and we normally mark them as X (don’t care) in our truth table or K-map. More on this later on.

Decimal   BCD
0         0000
1         0001
2         0010
3         0011
4         0100
5         0101
6         0110
7         0111
8         1000
9         1001

(0 – 9) Valid combinations: 0000 to 1001

(10 – 15) Invalid combinations: 1010 to 1111

It is important to realize that BCD numbers are decimal numbers and not binary numbers, although they use bits

in their representation. The only difference between a decimal number and BCD is that decimals are written with

the symbols 0, 1, 2, …, 9 and BCD numbers use the binary code 0000, 0001, 0010, …, 1001. The decimal value is

exactly the same. Decimal 10 is represented in BCD with eight bits as 0001 0000 and decimal 15 as 0001 0101.

The corresponding binary values are 1010 and 1111 and have only four bits.
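The difference between BCD and plain binary can be illustrated with a short sketch. The helper names to_bcd and from_bcd are hypothetical and chosen only for this example; the sketch assumes non-negative integers and space-separated 4-bit groups.

```python
def to_bcd(n: int) -> str:
    """Encode each decimal digit of a non-negative integer as its own 4-bit group."""
    return " ".join(format(int(d), "04b") for d in str(n))


def from_bcd(code: str) -> int:
    """Decode space-separated 4-bit groups, rejecting the invalid combinations 1010 to 1111."""
    digits = []
    for group in code.split():
        value = int(group, 2)
        if value > 9:
            raise ValueError(f"{group} is not a valid BCD digit")
        digits.append(str(value))
    return int("".join(digits))


print(to_bcd(10), "vs binary", format(10, "b"))
print(to_bcd(15), "vs binary", format(15, "b"))
```

The BCD form always uses four bits per decimal digit, so decimal 10 and 15 need eight bits in BCD but only four in plain binary.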

ASCII Code

Many applications of digital computers require the handling not only of numbers, but also of other characters or

symbols, such as the letters of the alphabet. To text a message on your cell phone, or to type in a Word file, it is necessary to formulate a binary code for the letters of the alphabet. In addition, the same binary code must represent numerals and special characters (such as $). An alphanumeric character set is a set of elements that includes the 10 decimal digits, the 26 letters of the alphabet, and a number of special characters. Such a set contains between 36 and 64 elements if only capital letters are included, or between 64 and 128 elements if both uppercase and lowercase letters are included. In the first case, we need a binary code of six bits, and in the second, a binary code of seven bits.

The standard binary code for the alphanumeric characters is the American Standard Code for Information

Interchange (ASCII), which uses seven bits to code 128 characters, as shown in the Table below. Each seven-bit combination corresponds to one character. The letter A, for example, is represented in ASCII as 100 0001 (written as 0100 0001 when stored in an eight-bit byte with a leading 0). The ASCII code also contains 34 nonprinting characters used for various control functions such as line feed and backspace.

Normally, your keypad or touchpad will do this conversion. So when you text a message or type in your file, every

character is replaced and saved by its ASCII code. Remember, you may need additional binary coding for

formatting such as color, font, size, etc. associated with each character.

Parity Bit – Error Detection

To detect errors in data communication and processing, an eighth bit is sometimes added to the ASCII character

to indicate its parity. A parity bit is an extra bit included with a message to make the total number of 1’s either

even or odd. Consider the following two characters and their even and odd parity:

Character            With even parity   With odd parity
ASCII A = 1000001    01000001           11000001
ASCII T = 1010100    11010100           01010100

In each case, we insert an extra bit in the leftmost position of the code to produce an even number of 1’s in the

character for even parity or an odd number of 1’s in the character for odd parity. In general, one or the other

parity is adopted, with even parity being more common.

The parity bit is helpful in detecting errors during the transmission of information from one location to another.

This function is handled by generating an even parity bit at the sending end for each character. The eight‐bit

characters that include parity bits are transmitted to their destination. The parity of each character is then

checked at the receiving end. If the parity of the received character is not even, then at least one bit has changed

value during the transmission. This method detects one, three, or any odd number of bit errors in each character that is transmitted. An even number of bit errors, however, goes undetected, and additional error detection codes may be needed to take care of that possibility.

What is done after an error is detected depends on the particular application. One possibility is to request

retransmission of the message on the assumption that the error was random and will not occur again.
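Parity generation at the sender and parity checking at the receiver can be sketched as below. The function names add_parity and parity_ok are assumptions for this illustration; characters are handled as strings of '0' and '1' with the parity bit in the leftmost position, as in the examples above.

```python
def add_parity(code7: str, even: bool = True) -> str:
    """Prepend a parity bit so the total number of 1's is even (or odd)."""
    ones = code7.count("1")
    parity = ones % 2 if even else 1 - ones % 2
    return str(parity) + code7


def parity_ok(code8: str, even: bool = True) -> bool:
    """Check a received character against the agreed parity."""
    return code8.count("1") % 2 == (0 if even else 1)


sent = add_parity("1000001")              # ASCII A with even parity
print(sent, parity_ok(sent))
one_error = "01000011"                    # one bit of the sent character flipped
two_errors = "01000111"                   # two bits of the sent character flipped
print(parity_ok(one_error), parity_ok(two_errors))
```

The single-bit error fails the check, while the double-bit error passes it, which is the limitation noted in the text.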

[Figure: 7-bit and 4-bit examples of even parity and odd parity bits]

Example: Decode the following ASCII string (with MSB = parity). Is it an even or odd parity?

11010101 11000010 01000011 01001111

Representation of Binary Numbers by Electrical Signals

So how are 1’s and 0’s really stored, processed, or transmitted?

• Binary ‘0’ is represented by a “low” voltage (range of voltages)

• Binary ‘1’ is represented by a “high” voltage (range of voltages)

• The “voltage ranges” guard against noise

MODULE 2.0

2.0 Introduction to logic circuits

2.1 Logic variables and functions

2.2 Logic gates

2.3 Logic network analysis

2.4 Boolean algebra

2.5 Logic design

2.6 Standard logic chips

2.7 Implementation technologies (transistor-level)

2.0 Introduction to logic circuits.

In this module, we will look at logic circuits. We will first explore the input variables and output functions that

facilitate the operation of a logic circuit. We will then identify logic gates—being the fundamental elements that

carry out logic processes.

2.1 Logic variables and functions.

In this section we will identify fundamental elements of a digital system. The first is an input variable, being a user-controlled input to the system, and the second is an output function, being the overall output from the system.

Consider the input variable and output function. Simple representations for an input variable and output

function are a switch and an LED light, respectively. Imagine that the switch, defined by input variable x, controls

the state of the LED light, defined by output function L(x). The switch input variable x can be 0 (open) or 1

(closed), and the LED light output function L(x), that depends on x, can be 0 (off) or 1 (on). The dependency

between the input variable and output function can be tabulated in a truth table.

Logic function: L(x) = x.

Truth table:

x | L(x) = x
0 | 0
1 | 1

Consider the AND logic function. The AND logic function has two (or more) input variables, x1 and x2. The output logic function is L(x1,x2) = 1 if and only if both x1 and x2 are 1. All other input variable combinations yield L(x1,x2) = 0. The AND logic function can be visualized as a series connection of two switches. The AND operator is "·", or it is implied when two variables are written side by side.

Logic function: L(x1, x2) = x1 · x2 = x1x2

Truth table:

x1 x2 | L = x1 · x2
0  0  | 0
0  1  | 0
1  0  | 0
1  1  | 1

Consider the OR logic function. The OR logic function has two (or more) input variables, x1 and x2. The OR logic function is L(x1,x2) = 1 if one or both of x1 and x2 are 1. Thus, the OR logic function is L(x1,x2) = 0 if and only if x1 = 0 and x2 = 0. The OR logic function can be visualized as a parallel connection of switches. The OR operator is "+".

Logic function: L(x1, x2) = x1 + x2

Truth table:

x1 x2 | L = x1 + x2
0  0  | 0
0  1  | 1
1  0  | 1
1  1  | 1

Consider the NOT logic function. The NOT logic function has one input variable, x. The output logic function is L(x) = 0 if the input variable is x = 1, and L(x) = 1 if the input variable is x = 0. Thus, the NOT function is an inversion function. The NOT logic function is realized by a circuit with a closed (x = 1) switch shorting the light to ground. The NOT operator is " ' " or "!", or it is denoted with an overhead bar on the input variable. The output of a NOT function on an input variable is called the "complement" of that variable.

Logic function: L(x) = x'

Truth table:

x | L = x'
0 | 1
1 | 0

Example. Create a circuit with switches x1, x2, x3, and x4 that can light up an LED according to the logic function

L(x1,x2,x3,x4) = x1 · (x2 + x3) · x4. The operation of the circuit is demonstrated by way of a truth table.

Truth table:

x1 x2 x3 x4 | (x2 + x3) | x1 · (x2 + x3) · x4
0  0  0  0  |     0     |   0
0  0  0  1  |     0     |   0
0  0  1  0  |     1     |   0
0  0  1  1  |     1     |   0
0  1  0  0  |     1     |   0
0  1  0  1  |     1     |   0
0  1  1  0  |     1     |   0
0  1  1  1  |     1     |   0
1  0  0  0  |     0     |   0
1  0  0  1  |     0     |   0
1  0  1  0  |     1     |   0
1  0  1  1  |     1     |   1
1  1  0  0  |     1     |   0
1  1  0  1  |     1     |   1
1  1  1  0  |     1     |   0
1  1  1  1  |     1     |   1

For n inputs, how do we list all combinations without missing any?

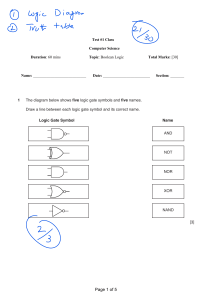
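One systematic way to list all 2^n input combinations is to count in binary from 0 to 2^n - 1. The sketch below does this with itertools.product from the Python standard library; the helper name truth_table and the function L are illustrative assumptions.

```python
from itertools import product


def truth_table(func, n):
    """Return (inputs, output) pairs for all 2**n combinations in binary counting order."""
    return [(bits, func(*bits)) for bits in product((0, 1), repeat=n)]


def L(x1, x2, x3, x4):
    """The example function x1 · (x2 + x3) · x4, using & for AND and | for OR."""
    return x1 & (x2 | x3) & x4


for bits, out in truth_table(L, 4):
    print(*bits, "|", out)
```

The leftmost variable changes slowest and the rightmost changes fastest, which matches the row order used in the truth tables of this module.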

2.2 Logic gates.

The AND, OR, and NOT logic functions in the prior section (and a few other logic functions) are implemented in

circuits with digital logic gates. In this section, we define a distinct logic gate for each of our logic functions.

Consider the AND gate. The AND gate has two (typically) input variables, x1 and x2. The output is 1 if both x1 and

x2 are 1, and the output is 0 for all other cases of x1 and x2 values. The AND gate is denoted as x1 · x2.

AND gate

Truth table:

x1 x2 | f = x1 · x2
0  0  | 0
0  1  | 0
1  0  | 0
1  1  | 1

Consider the OR gate. The OR gate has two (typically) input variables, x1 and x2. The output is 1 if either or both

of x1 and x2 are 1, and the output is 0 only if both x1 and x2 are 0. The OR gate is denoted as x1 + x2.

OR gate

Truth table:

x1 x2 | f = x1 + x2
0  0  | 0
0  1  | 1
1  0  | 1
1  1  | 1

Consider the NOT gate. The NOT gate has one input variable, x. The output is 1 if x is 0 and is 0 if x is 1. The output of the NOT gate is the complement of x. The NOT gate is denoted as x' or !x or x̄. The NOT gate uses a bubble to signify the complement operation (and may include a triangle to signify gain from an amplifier/buffer).

NOT gate

Truth table:

x | f = x'
0 | 1
1 | 0

Consider the NAND gate. The NAND gate is a NOT-AND gate with two (typically) input variables, x1 and x2. The output is the complement of the ANDed input variables. The NAND gate is denoted as (x1·x2)'. The NAND gate uses a bubble to signify the complement operation after the AND gate.

NAND gate

Truth table:

x1 x2 | AND: x1 · x2 | NAND: f = (x1 · x2)'
0  0  |      0       |   1
0  1  |      0       |   1
1  0  |      0       |   1
1  1  |      1       |   0

Consider the NOR gate. The NOR gate is a NOT-OR gate with two (typically) input variables, x1 and x2. The output is the complement of the ORed input variables. The NOR gate is denoted as (x1+x2)'. The NOR gate uses a bubble to signify the complement operation after the OR gate.

NOR gate

Truth table:

x1 x2 | OR: x1 + x2 | NOR: f = (x1 + x2)'
0  0  |      0      |   1
0  1  |      1      |   0
1  0  |      1      |   0
1  1  |      1      |   0

Consider the XOR gate. The XOR gate is an exclusive-OR gate with two (typically) input variables, x1 and x2. The

output is 1 only when the input variables differ in value. For any number of input variables, in general, the output

is 1 if and only if there is an odd number of input variables equal to 1. The XOR gate is denoted as x1⊕x2. The XOR

gate looks similar to the OR gate, but there is a double band on the input side.

XOR gate

Truth table:

x1 x2 | XOR: f = x1 ⊕ x2
0  0  | 0
0  1  | 1
1  0  | 1
1  1  | 0
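The gates in this section can be modelled as small functions on the values 0 and 1. This is a behavioural sketch only; the function names are chosen for illustration and say nothing about how the gates are built electrically.

```python
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def NOT(a):
    return 1 - a           # complement of a single bit


def NAND(a, b):
    return NOT(AND(a, b))  # bubble after the AND gate


def NOR(a, b):
    return NOT(OR(a, b))   # bubble after the OR gate


def XOR(a, b):
    return a ^ b           # 1 only when the two inputs differ


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "|", AND(a, b), OR(a, b), NAND(a, b), NOR(a, b), XOR(a, b))
```

Each printed row lists the inputs followed by the AND, OR, NAND, NOR, and XOR outputs, so the columns can be compared against the truth tables above.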

Example. Draw the logic gate circuit for a digital logic system with input variables x1 and x2 and an output

function L(x1,x2) = x1' + (x1 · x2).

Example. Draw the logic gate circuit for a digital logic system with input variables x1, x2, x3, and x4 and an output

function L(x1,x2,x3,x4) = x1 · (x2 + x3) · x4.

2.3 Logic network analysis.

This section looks at the analysis of logic networks. Logic networks are given to us and their operation is analyzed.

2.3.1 Logic network analysis with truth tables.

When logic networks become increasingly complicated it becomes necessary to track input variables, logic states

of intermediate nodes, and the corresponding output functions. This can be accomplished with truth tables.

A truth table organizes all possible combinations of input variables and lists the corresponding logic values for the

intermediate nodes (when necessary) and output functions.

Example. Derive the Boolean function and create the truth table for the logic gate circuit below

x1 x2 | x1'  (x1·x2) | y = x1' + (x1·x2)
0  0  | 1    0       | 1
0  1  | 1    0       | 1
1  0  | 0    0       | 0
1  1  | 0    1       | 1

Example. Derive the Boolean function and create the truth table for the logic gate circuit below

x1 x2 x3 x4 | (x2+x3)  x1·(x2+x3) | y = x1·(x2+x3)·x4
0  0  0  0  |    0         0      |   0
0  0  0  1  |    0         0      |   0
0  0  1  0  |    1         0      |   0
0  0  1  1  |    1         0      |   0
0  1  0  0  |    1         0      |   0
0  1  0  1  |    1         0      |   0
0  1  1  0  |    1         0      |   0
0  1  1  1  |    1         0      |   0
1  0  0  0  |    0         0      |   0
1  0  0  1  |    0         0      |   0
1  0  1  0  |    1         1      |   0
1  0  1  1  |    1         1      |   1
1  1  0  0  |    1         1      |   0
1  1  0  1  |    1         1      |   1
1  1  1  0  |    1         1      |   0
1  1  1  1  |    1         1      |   1

2.3.2 Logic network analysis with timing diagrams.

The tracking of logic values in a circuit becomes more complex when one considers that digital logic networks are

typically used with data streams—having input variables and nodes taking on 0 and 1 values as a function of time.

We can keep track of the input variables and their effects on nodes in our system with timing diagrams.

With timing diagrams, we track the sequential series of 0 and 1 bits for the input variables. We also track the 0

and 1 values of the intermediate nodes and output function for the overall system in the same sequential order.

[Figure: timing diagrams, each plotted against time]

Propagation delay (tpd) is the time for a change in the input of a gate to propagate to the output.

• High-to-low (tphl) and low-to-high (tplh) output signal changes may have different propagation delays

• tpd = max{tphl, tplh}

• The smaller its propagation delay, the faster a circuit is considered to be (ideally as close to 0 as possible)

• Delay is usually measured between the 50% levels of the signal

[Figure: timing diagram for an AND gate with inputs X and Y and output Z, plotted against time; the propagation delay of the circuit is τ]

For the sake of our course, we are ignoring the propagation delay in timing analysis of logic gates. However, it

is a very important parameter in evaluating the performance of digital circuits.

Delay in multi-level logic diagrams

How do we decompose 3-input AND gates of 2-input ones?

What about 4-input AND gates?

2.4 Logic network analysis with Boolean algebra.

Logic operations can be separated with parentheses to distinguish order of operations, but this would introduce

unnecessary parentheses. Instead, it is possible to define an implicit order of operations and save parentheses for

situations requiring specific ordering. The implicit order of operations is as follows:

i. parentheses,

ii. NOT,

iii. AND,

iv. OR.

For example, x · y + y' · z + x' · z can be written as (x · y) + ((y') · z) + ((x') · z), but the parentheses are unnecessary.

Let's now use the operations to define Boolean algebra axioms and derive Boolean algebra theorems.

Boolean algebra axioms and theorems. We can carry out Boolean algebra operations for complex logic networks

by considering fundamental axioms, single-variable theorems, and two/three-variable theorems.

Axioms.

1a   0·0=0                    1b   1+1=1
2a   1·1=1                    2b   0+0=0
3a   0·1=1·0=0                3b   1+0=0+1=1
4a   If x = 0, then x' = 1    4b   If x = 1, then x' = 0

Single-variable theorems.

5a   x·0=0                    5b   x+1=1
6a   x·1=x                    6b   x+0=x
7a   x·x=x                    7b   x+x=x
8a   x · x' = 0               8b   x + x' = 1
9    x'' = x

Two/three-variable theorems.

10a  x·y=y·x   (Commutative)
10b  x + y = y + x   (Commutative)
11a  x · (y · z) = (x · y) · z   (Associative)
11b  x + (y + z) = (x + y) + z   (Associative)
12a  x · (y + z) = x · y + x · z   (Distributive)
12b  x + y · z = (x + y) · (x + z)   (Distributive)
13a  x+x·y=x   (Absorption) proof: x + xy = x(y+1) = x
13b  x · (x + y) = x   (Absorption) proof: x(x+y) = xx+xy = x+xy = x(y+1) = x
14a  x · y + x · y' = x   (Combining) proof: xy+xy' = x(y+y') = x
14b  (x + y) · (x + y') = x   (Combining) proof: (x+y)(x+y') = xx+xy+xy'+yy' = x+x(y+y') = x
15a  (x · y)' = x' + y'   (DeMorgan's) proof: truth table
15b  (x + y)' = x' · y'   (DeMorgan's) proof: truth table
16a  x + x' · y = x + y   (Elimination) proof: x+x'y = x(y+1)+x'y = x+xy+x'y = x+y(x+x') = x+y
16b  x · (x' + y) = x · y   (Elimination) proof: x(x'+y) = xx'+xy = xy
17a  x · y + y · z + x' · z = x · y + x' · z   (Consensus) proof: xy+yz+x'z = xy+yz(x+x')+x'z = xy+xyz+x'yz+x'z = xy(1+z)+x'z(1+y) = xy+x'z
17b  (x + y) · (y + z) · (x' + z) = (x + y) · (x' + z)   (Consensus) proof: (x+y)(y+z)(x'+z) = (xy+yz+xz+y)(x'+z) = x'y+x'yz+xyz+yz+xz = x'y+yz+xz = (x+y)(x'+z)

Example. Prove the logic identity x1' · x2' + x1 · x2 + x1 · x2' = x1 + x2'.

LHS = x1' · x2' + x1 · x2 + x1 · x2'
LHS = x1' · x2' + x1 · (x2 + x2')     (using 12a)
LHS = x1' · x2' + x1 · 1              (using 8b)
LHS = x1' · x2' + x1                  (using 6a)
LHS = x1 + x1' · x2'                  (using 10b)
LHS = x1 + x2' = RHS                  (using 16a, or x1 + x1' · x2' = x1 (x2' + 1) + x1' · x2' = x1 + x2')

Example. Prove the logic identity x1 · x3' + x2'· x3' + x1 · x3 + x2'· x3 = x1 + x2'.

LHS = x1 · x3' + x2'· x3' + x1 · x3 + x2'· x3
LHS = x1 · x3' + x1 · x3 + x2'· x3' + x2'· x3     (using 10b)
LHS = x1 · (x3' + x3) + x2'· (x3' + x3)           (using 12a)
LHS = x1 · (1) + x2'· (1)                         (using 8b)
LHS = x1 + x2' = RHS                              (using 6a)

Example. Prove the logic identity (x1 + x3) · (x1' + x3') = (x1· x3' + x1'· x3).

LHS = (x1 + x3) · x1' + (x1 + x3) · x3'           (using 12a)
LHS = x1 · x1' + x3 · x1' + x1 · x3' + x3 · x3'   (using 12a)
LHS = 0 + x3 · x1' + x1 · x3' + 0                 (using 8a)
LHS = x3 · x1' + x1 · x3'                         (using 6b)
LHS = x1 · x3' + x1' · x3 = RHS                   (using 10a and 10b)

Another way to prove any identity is to form a truth table for the RHS and another table for the LHS and prove

they are of equal values.
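The truth-table method of proof can be automated by evaluating both sides for every input combination. The sketch below is a minimal illustration; the helper name equivalent is an assumption. It relies on Python's not/and/or having the same precedence as the implicit NOT, AND, OR order stated earlier.

```python
from itertools import product


def equivalent(lhs, rhs, n):
    """Return True if lhs and rhs agree on all 2**n combinations of n Boolean inputs."""
    return all(lhs(*v) == rhs(*v) for v in product((False, True), repeat=n))


# First example identity: x1'·x2' + x1·x2 + x1·x2' = x1 + x2'
print(equivalent(
    lambda x1, x2: (not x1 and not x2) or (x1 and x2) or (x1 and not x2),
    lambda x1, x2: x1 or not x2,
    2,
))

# Third example identity: (x1 + x3)·(x1' + x3') = x1·x3' + x1'·x3
print(equivalent(
    lambda x1, x3: (x1 or x3) and (not x1 or not x3),
    lambda x1, x3: (x1 and not x3) or (not x1 and x3),
    2,
))

# DeMorgan's theorem 15a: (x·y)' = x' + y'
print(equivalent(lambda x, y: not (x and y), lambda x, y: not x or not y, 2))
```

An exhaustive check like this grows as 2^n, so it is practical only for a small number of variables; the algebraic proofs remain the general tool.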

Example. Express the following logic circuit in terms of Boolean algebra. Then apply Boolean algebra to show that

it can be simplified. Compare the original and simplified circuits (gate count).

Original circuit:

Simplified circuit:

Original circuit 3 gates.

Simplified circuit 2 gates.

Algebra:

(x1 + x2) · x1' = (x1· x1' + x2· x1') = (0 + x2· x1') = x2· x1'

Why is simplifying logic circuits important?

• Boolean algebra identities and properties help reduce the size of expressions

• In effect, smaller expressions require fewer logic gates to build the circuit

• As a result, simpler circuits gain the following features:

  o lower cost,

  o smaller size,

  o lower power consumption,

  o less delay

• With less delay, simpler circuits are also faster

2.5 Logic network design.

This section introduces design, i.e., synthesis, of digital logic systems. We will do this by way of an example.

Design a warning system at a train intersection with three tracks. Each track has an input variable x1, x2, and x3. If

a train is present on a track, its input variable has a value of 1, otherwise it has a value of 0. The system must give

a warning, designated by the output function f, if two or more trains are present on the tracks.

We start digital design work with a truth table having all combinations of inputs and their function values.

Row | x1 x2 x3 | f | Minterm label
0   | 0  0  0  | 0 | m0 = x1' · x2'· x3'
1   | 0  0  1  | 0 | m1 = x1' · x2'· x3
2   | 0  1  0  | 0 | m2 = x1' · x2 · x3'
3   | 0  1  1  | 1 | m3 = x1' · x2 · x3
4   | 1  0  0  | 0 | m4 = x1 · x2'· x3'
5   | 1  0  1  | 1 | m5 = x1 · x2'· x3
6   | 1  1  0  | 1 | m6 = x1 · x2 · x3'
7   | 1  1  1  | 1 | m7 = x1 · x2 · x3

First, we identify minterms. Minterms are identified for each truth table row, as shown above. (With experience,

it is not necessary to label these terms on your truth tables.) Consider the labels:

Minterms are products of all (uncomplemented or complemented) variables. Minterm mi is labelled as a product in row i of the truth table by including xj in the product if xj = 1 in that row, or xj' if xj = 0. You can quickly identify the input values for a minterm, mi, by looking at the binary representation of the integer i.

Second, we assemble sum-of-products.

Sum-of-products (SOP) expressions are a sum of all product terms in the rows having f = 1. This fully defines the

design, as (uncomplemented or complemented) variables in each of these product terms must be 1 to yield f = 1.

f(x1, x2, x3) = Σ_i m_i = m3 + m5 + m6 + m7 = x1'x2x3 + x1x2'x3 + x1x2x3' + x1x2x3.

SOP/sum-of-minterms expressions are easily implemented by allocating each product term/minterm to an AND gate, plus one OR gate that combines all the AND gate outputs. This topology is called 2-level AND-OR gates.
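The two steps above (identify the minterms, then assemble the sum-of-products) can be sketched for the train warning system. The names minterms, product_term, and warning are hypothetical helpers introduced for this sketch; warning encodes the specification "two or more trains present".

```python
from itertools import product


def minterms(func, n):
    """Return the row indices i for which the n-input function equals 1."""
    return [i for i, bits in enumerate(product((0, 1), repeat=n)) if func(*bits)]


def product_term(i, n):
    """Write minterm m_i as a product: xk if bit k of i is 1, otherwise xk'."""
    bits = format(i, f"0{n}b")
    return "·".join(f"x{k}" if b == "1" else f"x{k}'" for k, b in enumerate(bits, start=1))


def warning(x1, x2, x3):
    """Specification of the warning system: 1 when two or more tracks are occupied."""
    return int(x1 + x2 + x3 >= 2)


rows = minterms(warning, 3)
print(rows)
print(" + ".join(product_term(i, 3) for i in rows))
```

The printed expression is the canonical SOP form; it has not been simplified.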

Example. State the canonical (i.e., complete) SOP expression for the following function and simplify it:

f(x1, x2, x3) = Σ (m2, m3, m4, m6, m7).

f = m2 + m3 + m4 + m6 + m7,
f = x1'x2x3' + x1'x2x3 + x1x2'x3' + x1x2x3' + x1x2x3,
f = x1'x2x3' + x1'x2x3 + x1x2'x3' + x1x2x3' + x1x2x3 + x1x2x3'   (x1x2x3' duplicated using 7b),
f = x1'x2(x3' + x3) + x1x3'(x2' + x2) + x1x2(x3' + x3),
f = x1'x2 + x1x2 + x1x3',
f = x2(x1' + x1) + x1x3',
f = x2 + x1x3'.

Compare both logic diagrams and gate count.

Example. State the canonical (i.e., complete) SOP expression for the following function and simplify it:

f(x1, x2, x3, x4) = Σ (m3, m7, m9, m12, m13, m14, m15).

f = m3 + m7 + m9 + m12 + m13 + m14 + m15,
f = x1'x2'x3x4 + x1'x2x3x4 + x1x2'x3'x4 + x1x2x3'x4' + x1x2x3'x4 + x1x2x3x4' + x1x2x3x4,
f = x1'x2'x3x4 + x1'x2x3x4 + x1x2'x3'x4 + x1x2x3'x4' + x1x2x3'x4 + x1x2x3x4' + x1x2x3x4 + x1x2x3'x4   (x1x2x3'x4 duplicated using 7b),
f = x1'x3x4(x2' + x2) + x1x3'x4(x2' + x2) + x1x2x3'(x4 + x4') + x1x2x3(x4' + x4),
f = x1'x3x4 + x1x3'x4 + x1x2x3' + x1x2x3,
f = x1'x3x4 + x1x3'x4 + x1x2(x3' + x3),
f = x1'x3x4 + x1x3'x4 + x1x2.

Compare both logic diagrams and gate count.
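The two simplifications in these examples can be cross-checked by building each function from its minterm list and comparing it with the simplified expression on every input row. This is a sketch under stated assumptions: from_minterms and same_function are hypothetical helper names, and x1 is taken as the most significant bit of the row index, as in the truth tables of this section.

```python
from itertools import product


def from_minterms(indices):
    """Build a function that is 1 exactly on the listed truth-table rows (x1 is the MSB)."""
    wanted = set(indices)

    def f(*bits):
        row = int("".join(str(b) for b in bits), 2)
        return int(row in wanted)

    return f


def same_function(f, g, n):
    """Compare two n-input functions on all 2**n rows."""
    return all(f(*bits) == g(*bits) for bits in product((0, 1), repeat=n))


f1 = from_minterms([2, 3, 4, 6, 7])
g1 = lambda x1, x2, x3: x2 | (x1 & (1 - x3))                      # x2 + x1·x3'

f2 = from_minterms([3, 7, 9, 12, 13, 14, 15])
g2 = lambda x1, x2, x3, x4: (
    ((1 - x1) & x3 & x4) | (x1 & (1 - x3) & x4) | (x1 & x2)       # x1'x3x4 + x1x3'x4 + x1x2
)

print(same_function(f1, g1, 3), same_function(f2, g2, 4))
```

A check like this confirms that the simplified expression is equivalent to the canonical one; it does not show that the result is the smallest possible expression.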

It is often advantageous to implement digital circuits with NAND and NOR gates, rather than AND and OR gates,

as fewer transistors are needed in NAND and NOR gates. (NOT gates are easily implemented and are denoted in

digital circuits with a small bubble.) With this practical motivation, we can use our knowledge of digital design

with AND and OR and make use of deMorgan's theorem to implement digital designs with NAND and NOR.

Consider the NAND gate. A NAND gate is a NOT-AND gate with two (typically) input variables, x1 and x2. The

output is the complement of the ANDed input variables. The NAND gate operation is denoted by (x1·x2)'. The

NAND gate uses a bubble on the output to signify the complement and an AND gate to signify the AND operation.

Let's apply DeMorgan's theorem to this NAND gate. By theorem 15a, (x1 · x2)' = x1' + x2', so complementing the inputs and changing the AND gate to an OR gate yields the same output function as the NAND gate. Thus, a NAND gate is equivalent to an OR gate with complemented inputs.

NAND gate                    Analogous OR gate (with complemented inputs)

x1 x2 | (x1 · x2)'           x1 x2 | x1' + x2'
0  0  | 1                    0  0  | 1
0  1  | 1                    0  1  | 1
1  0  | 1                    1  0  | 1
1  1  | 0                    1  1  | 0

Consider the NOR gate. A NOR gate is an OR-NOT gate with (typically) two input variables, x1 and x2. The output is the complement of the ORed input variables. The NOR gate operation is denoted by (x1+x2)'. The NOR gate symbol is an OR gate, signifying the OR operation, with a bubble on the output to signify the complement.

Let's now apply De Morgan's theorem to this NOR gate. The theorem complements the inputs and changes the OR operation to an AND operation: (x1+x2)' = x1'·x2'. Thus, a NOR gate is equivalent to an AND gate with complemented inputs.

NOR gate

x1 x2 | (x1 + x2)'
0  0  | 1
0  1  | 0
1  0  | 0
1  1  | 0

Analogous AND gate (with complemented inputs)

x1 x2 | x1' · x2'
0  0  | 1
0  1  | 0
1  0  | 0
1  1  | 0
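Both equivalences can be confirmed by enumerating the four input combinations. This is a small sketch; the helper names `nand`, `nor`, `inv`, and `de_morgan_holds` are chosen here for illustration.

```python
from itertools import product

def nand(x1, x2):
    return 1 - (x1 & x2)

def nor(x1, x2):
    return 1 - (x1 | x2)

def inv(x):
    return 1 - x

def de_morgan_holds():
    # (x1·x2)' = x1' + x2'   and   (x1+x2)' = x1'·x2'
    return all(
        nand(a, b) == (inv(a) | inv(b)) and nor(a, b) == (inv(a) & inv(b))
        for a, b in product((0, 1), repeat=2)
    )
```

The output columns of `nand` and `nor` reproduce the two truth tables above row by row.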

Example. Sketch a digital logic circuit with AND and OR gates and a digital logic circuit with only NAND gates for

the SOP function f = x1 · x2 + x3 · x4 · x5.

AND/OR gates:

AND/OR gates (complemented):


NAND gates:
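Since the circuit sketches are not reproduced here, the NAND-only form can at least be checked numerically. Applying De Morgan's theorem to the OR stage gives f = ((x1·x2)'·(x3·x4·x5)')', which uses three NAND gates. The sketch below assumes multi-input NAND gates are available; the function names are illustrative.

```python
from itertools import product

def nand(*inputs):
    # Output is 0 only when every input is 1.
    return 0 if all(inputs) else 1

def f_and_or(x1, x2, x3, x4, x5):
    return (x1 & x2) | (x3 & x4 & x5)

def f_nand_only(x1, x2, x3, x4, x5):
    # First-level NANDs replace the ANDs; the output NAND replaces the OR.
    return nand(nand(x1, x2), nand(x3, x4, x5))

def same_function():
    return all(f_and_or(*v) == f_nand_only(*v) for v in product((0, 1), repeat=5))
```

The two bubbles between the gate levels cancel, which is why an AND-OR network maps directly onto a NAND-NAND network.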

2.6 Standard logic chips.

The logic gates in the prior sections can be implemented in digital circuits with 7400-series standard logic chips.

The implementation is straightforward, with connections made between appropriate pins of the logic chips.

Four standard logic chips are shown here.

The 7404 chip is a hex inverter: it includes six NOT gates.

The 7407 chip is a hex buffer: it includes six buffers.

The 7408 chip includes four 2-input AND gates.

The 7432 chip includes four 2-input OR gates.

Example. Wire together the 7400-series standard logic chips to implement the function f = (x1 + x2')·x3.


2.7 Logic network implementation technology.

Transistors. A transistor has three terminals:

I. source, which can be thought of as the input terminal,

II. drain, which can be thought of as the output terminal, and

III. gate, the terminal to which an electrical signal is applied to control current flow from the source to the drain.

Consider the NMOS transistor realization of the NOT gate. We state the logic relating the input variable, x, and output function, f, and sketch the NMOS transistor circuit that forms this logic relationship.

x | f = x'
0 | 1
1 | 0

NMOS realization (pull-down):

Consider the NMOS transistor realizations of the AND gate and NAND gate. We relate the input variables, x1 and

x2, to the output function, f, for an AND gate and a NAND gate, and then sketch the NMOS transistor circuits that

form these logic relations.

AND gate

x1 x2 | f = x1 · x2
0  0  | 0
0  1  | 0
1  0  | 0
1  1  | 1

NAND gate

x1 x2 | f = (x1 · x2)'
0  0  | 1
0  1  | 1
1  0  | 1
1  1  | 0

NMOS realization (pull-down):

Consider the NMOS transistor realizations of the OR gate and NOR gate. We first state the logic relationships

between input variables, x1 and x2, and output function, f, for an OR gate and a NOR gate and then sketch the

NMOS transistor circuits that can form these logic relationships.

OR gate

x1 x2 | f = x1 + x2
0  0  | 0
0  1  | 1
1  0  | 1
1  1  | 1

NOR gate

x1 x2 | f = (x1 + x2)'
0  0  | 1
0  1  | 0
1  0  | 0
1  1  | 0

NMOS realization (pull-down):

A note on programmable logic.


MODULE 3.0

3.0 Logic function minimization

3.1 Karnaugh Map (K-Map)

3.2 2-, 3-, and 4-variable K-Map

3.3 Don’t Care Conditions


3.0 Logic function minimization.

In this module, we will look at optimization techniques to create minimized designs for logic functions. Before we

begin, it is best to define some terminology that is needed to do this minimization of logic functions.

Literal. A given product term may contain several variables, and each appearance of a variable, either in complemented or uncomplemented form, is defined as a literal. The product term x1·x2'·x3 contains three literals, while the product term x1·x2'·x3·x4' contains four literals.

Objective: In our previous analysis we considered implementing Boolean functions as a sum of minterms (or a minimized SOP) using two-level AND-OR gates.

• 3-input and 4-input gates are in practice implemented using two, three, or more 2-input gates, so reducing the number of inputs reduces the number of gates.

• Given two or more Boolean functions for the same design, our goal is to implement the simplified one that has fewer product terms (fewer AND gates and hence fewer inputs to the OR gate).

Therefore, in our minimization analysis, we aim not only to eliminate minterms/product terms, but also to reduce the number of literals in the remaining product terms.

This is not always easy through algebraic simplification. In the next sections, we will see that the minimized SOP logic function can be found easily through the use of a Karnaugh map.

3.1 Multivariable SOP Karnaugh maps.

3.1.1 Two-variable SOP Karnaugh maps.

Consider the truth table and sum of minterms for a function having two input variables, f(x, y) = Σ mi. We can organize the sum of minterms within a grid, and the result is the two-variable Karnaugh map.

The two-variable map is shown below.

There are four minterms for two variables; hence, the map consists of four squares, one for each minterm. The map is redrawn to show the relationship between the squares and the two variables x and y.

The 0 and 1 marked in each row and column designate the values of variables. Variable x appears primed in row 0

and unprimed in row 1. Similarly, y appears primed in column 0 and unprimed in column 1. We mark the squares

whose minterms belong to a given function with 1.


Step 1: Mapping of a given sum of minterms into K-Map

As an example, let us say we want to minimize the function

f (x, y ) = m1 + m2 + m3 = x' y + xy'+ xy

The marking of xy is shown in Fig. (a) below. Since xy is equal to m3, a 1 is placed inside the square that belongs to

m3. Fig. (b) shows the marking of all terms by three squares marked with 1’s

Step 2: Combine the maximum number of 1's, following these rules:

1. Only adjacent squares can be combined

2. All 1's must be covered

3. Covering rectangles must be of size 1, 2, 4, 8, …, 2^n

4. Check that every covering is really needed

We combine 1's in adjacent cells with circled groups. We look for adjacent (horizontal or vertical, but not diagonal) 1's in the grid and circle the 1's in groups of one, two, or four. All 1's must be circled, and we always draw a circled group of 1's with the largest possible size (containing one, two, or four 1's).

Step 3: Write minimized product terms for each circled group. We write a product term for each circled group:

· a circled group with one 1 is specified by a product term containing two input variables;

· a circled group with two 1's is specified by a product term containing one input variable;

· a circled group with four 1's is specified by a product term that is simply 1 (as the output function is always 1).

The horizontal circle lies on the row x = 1, so x is part of the product term. The other variable, y, changes from 0 to 1 across the circle, so y is not part of the product term. As a result, the grouping of m2 and m3 in one circle simplifies to a product term with the single literal x.

Similarly, the vertical circle that covers m1 and m3 simplifies to a product term with the single literal y.

The simplified SOP is the OR of both product terms: f = x + y.

Compare the number of gates required to implement the original sum of minterms and the simplified SOP.
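The two-variable result can be verified directly. The sketch below compares the original sum of minterms with the simplified form on all four inputs; the function names are illustrative.

```python
from itertools import product

def sum_of_minterms(x, y):
    # m1 + m2 + m3 = x'y + xy' + xy
    return ((1 - x) & y) | (x & (1 - y)) | (x & y)

def simplified(x, y):
    # Result of the two circled groups: x + y
    return x | y

def equivalent():
    return all(sum_of_minterms(x, y) == simplified(x, y)
               for x, y in product((0, 1), repeat=2))
```

In gate terms, the original form needs two inverters, three 2-input AND gates, and one 3-input OR gate, while the simplified form needs a single 2-input OR gate.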


3.1.2 Three-variable SOP Karnaugh maps.

Consider the truth table and SOP minterms for a function having three input variables, f(x, y, z) = Σ mi. There are eight minterms for three binary variables; therefore, the map consists of eight squares. Note that the minterms are not arranged in binary sequence: the columns follow the order 00, 01, 11, 10 (a Gray code), so that only one bit changes in value from one column to the next. The map drawn in part (b) is marked with numbers in each row and each column to show the relationship between the squares and the three variables.
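The column ordering can be generated rather than memorized. The sketch below builds the minterm layout of the three-variable map, assuming rows are indexed by x and columns by yz; the function names are illustrative.

```python
def gray_code(n_bits):
    # Reflected Gray code: consecutive values differ in exactly one bit.
    return [i ^ (i >> 1) for i in range(2 ** n_bits)]

def kmap_3var_layout():
    # Rows are x = 0, 1; columns are yz in the order 00, 01, 11, 10.
    columns = gray_code(2)
    return [[(x << 2) | yz for yz in columns] for x in (0, 1)]
```

`kmap_3var_layout()` returns `[[0, 1, 3, 2], [4, 5, 7, 6]]`, the minterm numbers in map order. The first and last columns (00 and 10) also differ in one bit, which is why groups may wrap around the sides.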

Step 1: Mapping of a given sum of minterms into K-Map

as before

Step 2: Combine maximum number of 1’s following rules:

As before but we combine 1's in adjacent cells with circled groups. We look for adjacent (horizontal or vertical,

but not diagonal) 1's in the grid and circle groups of the 1's in groups of one, two, four, or eight. All 1's must be

circled, and we always draw a circled group of 1's with the largest possible size (containing one, two, four, or

eight 1's). Circled groupings can wrap off the top, bottom, sides and corners of the grid, onto opposing sides.

Step 3: Write minimized product terms for each circled group.

We write a product term for each circled group:

· a circled group with one 1 is specified by a product term containing three input variables;

· a circled group with two 1's is specified by a product term containing two input variables;

· a circled group with four 1's is specified by a product term containing one input variable;

· a circled group with eight 1's is specified by a product term that is simply 1 (as the output function is always 1).


Example

Simplify the Boolean function f(x, y, z) = Σ(2, 3, 4, 5)

Step 1: Mark 1's on each square representing a minterm of the function.

Step 2: Circle groups of 8, 4, or 2 ones (only groups of 2 ones exist here).

Step 3: Write simplified product terms:

For the first circle, on the first row, x is complemented (= 0) → x' is in the product term, y = 1 → y is in the product term, z changes → removed. Therefore, the first product term = x'y.

For the second circle, on the second row, x = 1 → x is in the product term, y is complemented → y' is in the product term, z changes → removed. Therefore, the second product term = xy'.

Therefore, the simplified SOP is x'y + xy'

Is it easy to come to the same result using Boolean algebra? How? Compare the number of gates required to implement the original sum of minterms and the simplified SOP.

Example

Simplify the Boolean function f(x, y, z) = Σ(3, 4, 6, 7)

Note that m4 and m6 are considered adjacent squares. Why? Between them only one variable changes (y), while the others stay the same (x and z), so the map wraps around from the last column to the first. On the second row, x = 1 → x is in the product term, y changes → removed, z is complemented → z' is in the product term. Therefore, the first product term = xz'. The group of m3 and m7 gives the second product term yz, so the simplified SOP is xz' + yz.

Is it easy to come to the same result using Boolean algebra? How?

Compare the number of gates required to implement the original sum of minterms and the simplified SOP.


Example

Simplify the Boolean function f(x, y, z) = Σ(0, 2, 4, 5, 6)

Note that m0, m2, m4 and m6 form one group of four adjacent squares. Within this group, x changes → removed, y changes → removed, z is 0 (complemented) → the product term has the single literal z'. The second product term, from the group of m4 and m5, is xy'.

Therefore, the simplified SOP is z' + xy'

Notice that m4 has been circled in more than one group. This is allowed as long as there are no redundant groups. Notice also that the only 1 adjacent to m5 is m4.

To better understand this concept, suppose we add m7 to the previous function. What does the group containing m5 look like now? m5 and m7 are now adjacent, and together with m4 and m6 they fill the entire row x = 1, so they form a group of four with the single literal x. Keeping m4 and m5 as a group of two, as in the previous example, would now be redundant: its product term xy' is already covered by the larger group, and the simplified SOP becomes z' + x.
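The three-variable examples above can be checked by listing the minterms that each simplified expression covers. This is a sketch with an illustrative helper `minterms_of`; the expression yz + xz' for the second example and z' + x for the extended third example are the covers the groupings lead to, stated here as assumptions because the maps themselves are not reproduced.

```python
from itertools import product

def minterms_of(expr, n_vars=3):
    # Set of minterm indices where expr is 1; the first variable is the MSB.
    result = set()
    for bits in product((0, 1), repeat=n_vars):
        if expr(*bits):
            result.add(int("".join(map(str, bits)), 2))
    return result

ex1 = minterms_of(lambda x, y, z: (not x and y) or (x and not y))  # x'y + xy'
ex2 = minterms_of(lambda x, y, z: (y and z) or (x and not z))      # yz + xz'
ex3 = minterms_of(lambda x, y, z: (not z) or (x and not y))        # z' + xy'
ex4 = minterms_of(lambda x, y, z: (not z) or x)                    # z' + x (m7 added)
```

Each set should equal the minterm list of the corresponding example, which gives a quick way to catch a wrong grouping.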

What if we are given a function that is not in the form of sum of minterms?

Example

Simplify the Boolean function f ( A, B, C ) = A' C + A' B + AB ' C + BC

The first step is to rewrite the function as a sum of minterms so the terms can be mapped to squares on the K-map. This can be achieved by multiplying each product term by (missing literal + its complement), which is equal to 1.

f ( A, B, C ) = A' ( B + B' )C + A' B(C + C ' ) + AB' C + ( A + A' ) BC

= A' BC + A' B' C + A' BC + A' BC '+ AB' C + ABC + A' BC

= m3 + m1 + m2 + m5 + m7

Remember that X + X = X; that is why we keep only one copy of m3. Remember also to keep the order of the literals in each minterm as A, followed by B, followed by C.
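The expansion into minterms can also be done mechanically: a product term covers every input combination that agrees with its literals. The sketch below assumes a hypothetical representation in which a term is a dictionary from variable name to required value.

```python
from itertools import product

def expand(term, variables="ABC"):
    # term maps a variable to its required value, e.g. {"A": 0, "C": 1} is A'C.
    indices = set()
    for bits in product((0, 1), repeat=len(variables)):
        assignment = dict(zip(variables, bits))
        if all(assignment[v] == val for v, val in term.items()):
            indices.add(int("".join(map(str, bits)), 2))
    return indices

# f = A'C + A'B + AB'C + BC
terms = [{"A": 0, "C": 1}, {"A": 0, "B": 1}, {"A": 1, "B": 0, "C": 1}, {"B": 1, "C": 1}]
minterms = sorted(set().union(*(expand(t) for t in terms)))
```

Using a set removes the repeated copies of m3 automatically, mirroring X + X = X.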


3.1.3 Four-variable SOP Karnaugh maps.

The map for Boolean functions of four binary variables f(w, x, y, z) is shown in the figure below. Fig. (a) lists the 16 minterms and the squares assigned to each. In Fig. (b), the map is redrawn to show the relationship between the squares and the four variables.

Notice the order of the minterms in the third and fourth rows and columns.

Step 1: Mapping of a given sum of minterms into K-Map

as before

Step 2: Combine maximum number of 1’s following rules:

As before but we combine 1's in adjacent cells with circled groups. We look for adjacent (horizontal or vertical,

but not diagonal) 1's in the grid and circle groups of 1's in groups of one, two, four, eight, or sixteen. All 1's must

be circled, and we always draw the circled group of 1's with the largest possible size (with one, two, four, eight, or

sixteen 1's). Circled groupings can wrap off the top, bottom, sides and corners of the grid, onto opposing sides.

Step 3: Write minimized product terms for each circled group.

We write a product term for each circled group:

· a circled group with one 1 is specified by a product term containing four input variables;

· a circled group with two 1's is specified by a product term containing three input variables;

· a circled group with four 1's is specified by a product term containing two input variables;

· a circled group with eight 1's is specified by a product term containing one input variable;

· a circled group with sixteen 1's is specified by a product term that is simply 1 (as the output function is always 1).


Example

Simplify the Boolean function f(w, x, y, z) = Σ(0, 1, 2, 4, 5, 6, 8, 9, 12, 13, 14)

For the circle of eight ones, w and x change → both are removed, y = 0 → y' is in the product term, z changes → removed. This group therefore contributes the single literal y'.

Example

Simplify the Boolean function f(A, B, C, D) = Σ(0, 1, 2, 6, 8, 9, 10)

Notice that the four minterms in the four corners can be circled as one group.

The simplified SOP = B'C' + B'D' + A'CD'
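Both four-variable examples can be checked the same way as before. The cover y' + w'z' + xz' used for the first example is an assumption on my part (the notes show only the group of eight); the second cover is the one stated above. The helper name `minterms_of` is illustrative.

```python
from itertools import product

def minterms_of(expr):
    # Set of minterm indices where expr is 1; the first variable is the MSB.
    return {
        int("".join(map(str, bits)), 2)
        for bits in product((0, 1), repeat=4)
        if expr(*bits)
    }

# First example, assumed cover: y' + w'z' + xz'
ex1 = minterms_of(lambda w, x, y, z: (not y) or (not w and not z) or (x and not z))

# Second example: B'C' + B'D' + A'CD'
ex2 = minterms_of(lambda a, b, c, d:
                  (not b and not c) or (not b and not d) or (not a and c and not d))
```

The corner group of the second example corresponds to the term B'D', which covers m0, m2, m8, and m10.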


Don't-care condition.

In some cases, the output of the function (1 or 0) is not specified for certain input combinations either because:

- The input combination never occurs (Example BCD codes), or

- We don’t care about the output of this particular combination

Such a situation leads to a don't-care condition, and the unspecified minterms are called don't cares. While minimizing a K-map with don't-care minterms, it is to our advantage to leave the output function value unspecified and treat it as a 0 or a 1, whichever yields the simpler design.

Don't-care values are specified as a set in the shorthand notation of minterms:

f(x1, x2, ..., xn) = Σ mi + Σ Dj

Designing with don't-care conditions is straightforward. A don't-care condition is labeled within a Karnaugh map

with a "d". We go about our Karnaugh map creation and optimization using the don't-care d values to our best

advantage. Consider this process in the following example.

Example: Simplify the function with the don't-care conditions:

f(A, B, C) = Σm(1, 3, 7) + Σd(0, 5)

Notice that if we consider d5 as 1, then we can circle the four minterms m1, m3, d5 and m7 as one group with product term = C. For d0, being 1 or 0 will not simplify the product terms any further, so we ignore it (treat it as 0).

The simplified SOP = C

Example: Simplify the function with the don't-care conditions:

f(A, B, C, D) = Σm(1, 3, 7, 11, 15) + Σd(0, 2, 5)

There are two possible solutions. Both are acceptable, as all 1's are grouped.
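A cover that uses don't cares only has to agree with the specification on the inputs that are actually specified. The sketch below checks that property; the two four-variable covers, CD + A'B' and CD + A'D, are what I believe the two solutions to be (an assumption, since the maps are not reproduced here), and the helper name is illustrative.

```python
from itertools import product

def agrees_on_care_set(expr, ones, dont_cares, n_vars):
    # expr must be 1 on every required minterm and 0 on every required zero;
    # its value on don't-care inputs is unconstrained.
    for bits in product((0, 1), repeat=n_vars):
        index = int("".join(map(str, bits)), 2)
        if index in dont_cares:
            continue
        if bool(expr(*bits)) != (index in ones):
            return False
    return True

ok_3var = agrees_on_care_set(lambda a, b, c: c, {1, 3, 7}, {0, 5}, 3)
sol_1 = agrees_on_care_set(lambda a, b, c, d: (c and d) or (not a and not b),
                           {1, 3, 7, 11, 15}, {0, 2, 5}, 4)
sol_2 = agrees_on_care_set(lambda a, b, c, d: (c and d) or (not a and d),
                           {1, 3, 7, 11, 15}, {0, 2, 5}, 4)
```

The two four-variable covers are not equal as Boolean functions (they differ on the don't-care inputs 0, 2, and 5), yet both satisfy the specification.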


MODULE 4.0

4.1 Introduction

4.2 Combinational Circuits: Analysis & Design Procedures

4.3 Half- and Full-Adders

4.4 Design Using Standard Building Blocks (Components):

4.4.1 Binary Adders (Ripple Carry Adders)

4.4.2 Subtractors

4.4.3 Decoders

4.4.4 Encoders

4.4.5 Priority Encoders

4.4.6 Multiplexers


4.1 Introduction

Logic circuits for digital systems may be combinational or sequential. A combinational circuit consists of logic

gates whose outputs at any time are determined from only the present combination of inputs. A combinational

circuit performs an operation that can be specified logically either by a set of Boolean functions or by a truth

table. In contrast, sequential circuits employ storage elements in addition to logic gates. Their outputs are a

function of the inputs and the state of the storage elements. Because the state of the storage elements is a

function of previous inputs, the outputs of a sequential circuit depend not only on present values of inputs, but

also on past inputs, and the circuit behavior must be specified by a time sequence of inputs and internal states.

Sequential circuits are the building blocks of digital systems and are discussed in modules 5 and 6 .

4.2 Combinational Circuits

A combinational circuit consists of an interconnection of logic gates. Combinational logic gates react to the values

of the signals at their inputs and produce the value of the output signal, transforming binary information from the

given input data to a required output data. A block diagram of a combinational circuit is shown below. The n

input binary variables come from an external source; the m output variables are produced by the internal

combinational logic circuit and go to an external destination. Each input and output variable exists as a binary signal that represents logic 1 or logic 0. The relation between the inputs and the outputs is given as an explicit Boolean function or as a word problem that needs to be mapped to a truth table.

Block diagram: n inputs → Combinational Circuit → m outputs

4.2.1 Analysis Procedure

The analysis of a combinational circuit requires that we determine the function that the circuit implements. This

task starts with a given logic diagram and it is required to derive the Boolean functions.

Example: What are the functions of the logic diagram shown below?

Logic diagram: inputs A, B, C; outputs F1 and F2.

F1 = AB'C' + A'BC' + A'B'C + ABC

F2 = AB + AC + BC
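Once the Boolean functions are derived, evaluating them over the full truth table reveals what the circuit does. The sketch below shows that F1 and F2 behave as the sum and carry of a one-bit addition of A, B, and C; the function names are illustrative.

```python
from itertools import product

def f1(a, b, c):
    na, nb, nc = 1 - a, 1 - b, 1 - c
    return (a & nb & nc) | (na & b & nc) | (na & nb & c) | (a & b & c)

def f2(a, b, c):
    return (a & b) | (a & c) | (b & c)

def behaves_like_full_adder():
    # Reading (F2, F1) as a two-bit number gives A + B + C.
    return all(2 * f2(a, b, c) + f1(a, b, c) == a + b + c
               for a, b, c in product((0, 1), repeat=3))
```

F1 is 1 when an odd number of inputs are 1, and F2 is 1 when at least two inputs are 1.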


4.2.2 Design Procedure

The design/implementation of a combinational circuit requires that we draw the logic diagram that implements a

given design specifications/word problem. This task starts with defining and labeling inputs/output, then deriving

the truth table, simplifying the Boolean functions using K-map, and drawing the resultant logic diagram.

1. Specification

• Write a specification for the circuit if one is not already available

• Specify/Label input and output

2. Formulation

• Derive a truth table or initial Boolean equations that define the required relationships between the

inputs and outputs, if not in the specification

• Apply hierarchical design if appropriate

3. Optimization

• Apply two-level optimization (K-map)

• Draw a logic diagram for the resulting circuit using 2-level AND-OR schematic.

4. Verification (Using CAD Tools)

• Verify the correctness of the final design using simulation

Practical Considerations:

• Number of gates (size, area, power, and cost)

• Maximum allowed delay

• Maximum consumed power

• Working conditions (temp., vibration, water resistance, etc.)


Example: Design a circuit that has a 3-bit input and a single output (F) specified as follows:

• F = 0, when the input is less than (5)10

• F = 1, otherwise

Step 1 (Specification):

Label the inputs (3 bits) as X, Y, Z, where X is the most significant bit and Z is the least significant bit.

The output (1 bit) is F:

F = 1 → (101)2, (110)2, (111)2

F = 0 → other inputs

Step 2 (Formulation): Obtain the truth table, which gives the sum of minterms

F = XY'Z + XYZ' + XYZ

Step 3 (Optimization): K-map

X \ YZ | 00 01 11 10
0      | 0  0  0  0
1      | 0  1  1  1

Logic diagram: two AND gates, with inputs (X, Z) and (X, Y), feed an OR gate that produces F, i.e. F = XZ + XY.
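The design can be verified against the word specification directly. The sketch below assumes the minimized form F = XZ + XY, which is what the K-map groups and the logic diagram inputs suggest; the function names are illustrative.

```python
def f_canonical(x, y, z):
    # F = XY'Z + XYZ' + XYZ
    return (x & (1 - y) & z) | (x & y & (1 - z)) | (x & y & z)

def f_minimized(x, y, z):
    # Assumed minimized form: F = XZ + XY
    return (x & z) | (x & y)

def matches_spec(f):
    # F must be 1 exactly when the 3-bit input value is 5 or more.
    return all(f(n >> 2 & 1, n >> 1 & 1, n & 1) == (1 if n >= 5 else 0)
               for n in range(8))
```

Checking both forms with `matches_spec` plays the role of the verification step in the design procedure.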

Example: Design a BCD to Excess-3 Code Converter

Code converters convert from one code to another (BCD to Excess-3 in this example)

The inputs are defined by the code that is to be converted (BCD in this example)

The outputs are defined by the converted code (Excess-3 in this example)

Excess-3 code is a decimal digit plus three converted into binary,

i.e. 0 → 0011, 1 → 0100, and so on

Step 1 (Specification)

⚫ 4-bit BCD input (A,B,C,D)

⚫ 4-bit E-3 output (W,X,Y,Z)

Step 2 (Formulation)

Obtain Truth table

Step 3 (Optimization)
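Because the truth table and maps are not reproduced here, the sketch below regenerates the table from the definition (Excess-3 = BCD digit + 3) and checks one candidate set of minimized outputs. The expressions in `candidate` are an assumption: they are a commonly quoted result when the six unused BCD codes are treated as don't cares, and may differ from the maps in the original figure.

```python
def excess3_table():
    # Rows of (A, B, C, D, W, X, Y, Z) for the ten valid BCD digits.
    rows = []
    for digit in range(10):
        bcd = [(digit >> k) & 1 for k in (3, 2, 1, 0)]
        ex3 = [((digit + 3) >> k) & 1 for k in (3, 2, 1, 0)]
        rows.append(tuple(bcd + ex3))
    return rows

def candidate(a, b, c, d):
    # Assumed minimized outputs (don't cares used for inputs 10 to 15).
    w = a | (b & c) | (b & d)
    x = ((1 - b) & c) | ((1 - b) & d) | (b & (1 - c) & (1 - d))
    y = (c & d) | ((1 - c) & (1 - d))
    z = 1 - d
    return (w, x, y, z)

def candidate_is_valid():
    return all(candidate(*row[:4]) == row[4:] for row in excess3_table())
```

Only the ten valid BCD inputs are checked, since the outputs for the remaining six codes are unspecified.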


Example: Design a BCD-to-Seven-Segment Decoder

A BCD-to-Seven-Segment decoder is a combinational circuit that:

Accepts a decimal digit in BCD (input)

Generates appropriate outputs for the segments to display the input decimal digit (output)

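Step 1 (Specification):

4 inputs (w, x, y, z)

7 outputs (a, b, c, d, e, f, g)

Segment layout: a (top), b (upper right), c (lower right), d (bottom), e (lower left), f (upper left), g (middle).

Step 2 (Formulation): Obtain the truth table (x marks a don't-care output for the six unused input codes).

w x y z | a b c d e f g
0 0 0 0 | 1 1 1 1 1 1 0
0 0 0 1 | 0 1 1 0 0 0 0
0 0 1 0 | 1 1 0 1 1 0 1
0 0 1 1 | 1 1 1 1 0 0 1
0 1 0 0 | 0 1 1 0 0 1 1
0 1 0 1 | 1 0 1 1 0 1 1
0 1 1 0 | 1 0 1 1 1 1 1
0 1 1 1 | 1 1 1 0 0 0 0
1 0 0 0 | 1 1 1 1 1 1 1
1 0 0 1 | 1 1 1 1 0 1 1
1 0 1 0 | x x x x x x x
1 0 1 1 | x x x x x x x
1 1 0 0 | x x x x x x x
1 1 0 1 | x x x x x x x
1 1 1 0 | x x x x x x x
1 1 1 1 | x x x x x x x

Step 3 (Optimization)

a = w + y + xz + x'z'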

We show the steps required to design/implement output a only. Another 6 K-maps are required; one for each of

the 6 outputs (b through g). They are left as an exercise.
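The expression for output a can be checked against the truth table for the ten valid digits. The sketch below copies the segment patterns from the table in these notes; the names `SEGMENTS`, `seg_a`, and `a_matches_table` are illustrative.

```python
# Digit -> segment pattern "abcdefg", copied from the truth table.
SEGMENTS = {
    0: "1111110", 1: "0110000", 2: "1101101", 3: "1111001", 4: "0110011",
    5: "1011011", 6: "1011111", 7: "1110000", 8: "1111111", 9: "1111011",
}

def seg_a(w, x, y, z):
    # a = w + y + xz + x'z'
    return w | y | (x & z) | ((1 - x) & (1 - z))

def a_matches_table():
    return all(
        seg_a(d >> 3 & 1, d >> 2 & 1, d >> 1 & 1, d & 1) == int(SEGMENTS[d][0])
        for d in range(10)
    )
```

The same pattern, with a different index into the segment string, can be used to test expressions for the remaining outputs b through g.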


4.3 Half- and Full-Adders.

Digital computers perform a variety of information-processing tasks. Among the functions encountered are the

various arithmetic operations. The most basic arithmetic operation is the addition of two binary digits. This

simple addition consists of four possible elementary operations: 0 + 0 = 0, 0 + 1 = 1, 1 + 0 = 1, and 1 + 1 = 10. The

first three operations produce a sum of one digit, but when both augend and addend bits are equal to 1, the

binary sum consists of two digits. The higher significant bit of this result is called a carry. When the augend and

addend numbers contain more significant digits, the carry obtained from the addition of two bits is added to the

next higher order pair of significant bits. A combinational circuit that performs the addition of two bits is called a

half adder. One that performs the addition of three bits (two significant bits and a previous carry) is a full adder.

4.3.1 Half Adder (HA)

The HA needs two binary inputs and two binary outputs. The input variables designate the augend and addend

bits; the output variables produce the sum and carry. We assign symbols x and y to the two inputs and S (for sum)

and C (for carry) to the outputs. The truth table for the half adder is listed below.

The C output is 1 only when both inputs are 1. The S output represents the least significant bit of the sum. The

simplified Boolean functions for the two outputs can be obtained directly from the truth table. The simplified SOP

as well as the logic diagram are shown;

4.3.2 Full Adder (FA)

Addition of n-bit binary numbers requires the use of a full adder, and the process of addition proceeds on a bit-by-bit basis, right to left, beginning with the least significant bit. After the least significant bit, addition at each

position adds not only the respective bits of the words, but must also consider a possible carry bit from addition

at the previous position.

A full adder is a combinational circuit that forms the arithmetic sum of three bits. It consists of three inputs and

two outputs. Two of the input variables, denoted by x and y, represent the two significant bits to be added. The

third input, z, represents the carry from the previous lower significant position. Two outputs are necessary

because the arithmetic sum of three binary bits ranges in value from 0 to 3, and binary representation of 2 or 3

needs two bits. The two outputs are designated by the symbols S for sum and C for carry. The binary variable S

gives the value of the least significant bit of the sum. The binary variable C gives the output carry formed by

adding the input carry and the bits of the words.

The truth table of the full adder is listed in


The simplified Boolean functions of C and S as well as the corresponding logic diagrams are shown below;

The FA can also be implemented by cascading two HAs as shown below;
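As the figures are not reproduced here, the following sketch models the half adder and the two-half-adder construction of the full adder. It uses the standard half adder equations S = x ⊕ y and C = xy; the function names are illustrative.

```python
from itertools import product

def half_adder(x, y):
    # S = x XOR y, C = x AND y
    return x ^ y, x & y

def full_adder(x, y, z):
    # First HA adds x and y; second HA adds the carry-in z to that sum.
    s1, c1 = half_adder(x, y)
    s, c2 = half_adder(s1, z)
    # At most one of the two half adders can produce a carry.
    return s, c1 | c2

def full_adder_is_correct():
    return all(
        (lambda sc: 2 * sc[1] + sc[0])(full_adder(x, y, z)) == x + y + z
        for x, y, z in product((0, 1), repeat=3)
    )
```

The OR gate on the two carries is sufficient because c1 and c2 are never 1 at the same time.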


4.4 Design Using Standard Building Blocks (Components)

4.4.1 Binary Adder (Ripple-Carry Adder)

A binary adder is a digital circuit that produces the arithmetic sum of two binary numbers. It can be constructed with full adders connected in cascade, with the output carry from each full adder connected to the input carry of the next full adder in the chain. Addition of n-bit numbers requires a chain of n full adders, with the input carry to the least significant position fixed at 0. The figure below shows the interconnection of four full-adder (FA) circuits to provide a four-bit binary ripple-carry adder.

Why don’t we simply use the design procedure we have learned for designing combinational circuits?

The four-bit adder is a typical example of a standard component (or modular design). It can be used in many applications involving arithmetic operations. Observe that the design of this circuit by the classical method would require a truth table with 2^9 = 512 entries, since there are nine inputs to the circuit. By using an iterative method of cascading a standard function, it is possible to obtain a simple and straightforward implementation.
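The cascading idea can be sketched in a few lines: one full adder function is reused for every bit position, and the carry is passed from stage to stage. Bit lists are assumed to be least significant bit first; the function names are illustrative.

```python
def full_adder(x, y, c_in):
    s = x ^ y ^ c_in
    c_out = (x & y) | (x & c_in) | (y & c_in)
    return s, c_out

def ripple_carry_add(a_bits, b_bits, c0=0):
    # a_bits and b_bits are lists of bits, least significant bit first.
    carry, sum_bits = c0, []
    for a, b in zip(a_bits, b_bits):
        s, carry = full_adder(a, b, carry)   # carry ripples to the next stage
        sum_bits.append(s)
    return sum_bits, carry
```

For example, `ripple_carry_add([1, 1, 0, 1], [0, 1, 1, 0])` adds 11 and 6 and returns `([1, 0, 0, 0], 1)`, that is, sum bits 0001 with an output carry of 1 (17 in total). The same loop works for any word length, which is the point of the modular design.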

Carry Propagation

The addition of two binary numbers in parallel implies that all the bits of the augend and addend are available for computation at the same time. As in any combinational circuit, the signal must propagate through the gates before the correct output sum is available at the output terminals. The total propagation time is equal to the propagation delay of a typical gate, times the number of gate levels in the circuit. The longest propagation delay time in an adder is the time it takes the carry to propagate through the full adders (C4 in the 4-bit adder shown above). Since each bit of the sum output depends on the value of the input carry, the value of Si at any given stage in the adder will reach its steady-state final value only after the input carry to that stage has been propagated (S3 waits the longest, for C3 to arrive). From the logic diagram of the FA shown before, the signal from the input carry Ci to the output carry Ci+1 propagates through an AND gate and an OR gate, which constitute two gate levels. If there are four FAs in the adder, the output carry C4 would have 2 × 4 = 8 gate levels from C0 to C4. For an n-bit adder, there are 2n gate levels for the carry to propagate from input to output.


Even though designing binary adders from FAs is simple and regular, the carry propagation delay grows with the size of the adder and becomes a design issue, especially if high performance is required. However, other adder architectures exist that reduce the delay at the price of increased complexity. Examples are the carry look-ahead adder and the carry-select adder, which will be discussed in detail in the ENGR468 course.

4.4.2 Binary Subtractor

The subtraction of unsigned binary numbers (A – B) can be done by taking the 2’s complement of B and adding it

to A. The 2’s complement can be obtained by taking the 1’s complement and adding 1 to the least significant pair

of bits. The 1’s complement can be implemented with inverters, and a 1 can be added to the sum through the

input carry.

The addition and subtraction operations can be combined into one circuit with one common binary adder by

including an exclusive-OR gate with each full adder. A four-bit adder–subtractor circuit is shown below.

Mode input M: 0 = add, 1 = subtract.

The mode input M controls the operation. When M = 0, the circuit is an adder, and when M = 1, the circuit becomes a subtractor. Each exclusive-OR gate receives input M and one of the inputs of B. When M = 0, we have B ⊕ 0 = B. The full adders receive the value of B, the input carry is 0, and the circuit performs A plus B. When M = 1, we have B ⊕ 1 = B' and C0 = 1. The B inputs are all complemented and a 1 is added through the input carry. The circuit performs the operation A plus the 2's complement of B.
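The behaviour of the mode input can be modelled bit by bit. In the sketch below, M is XORed with each bit of B and also drives the input carry, as described above; the function name `add_sub_4bit` and the integer-based interface are assumptions made for illustration.

```python
def add_sub_4bit(a, b, m):
    # m = 0: A + B.  m = 1: A + B' + 1, i.e. A - B modulo 16.
    carry, result = m, 0                     # the input carry C0 equals M
    for i in range(4):
        ai = (a >> i) & 1
        bi = ((b >> i) & 1) ^ m              # XOR gate on each B input
        s = ai ^ bi ^ carry
        carry = (ai & bi) | (ai & carry) | (bi & carry)
        result |= s << i
    return result, carry
```

For example, `add_sub_4bit(9, 5, 1)` returns `(4, 1)`, while `add_sub_4bit(5, 9, 1)` returns `(12, 0)`, which is -4 in 4-bit 2's complement.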


4.4.3 Decoders

In this section, we will consider the operation of decoders. A decoder is a combinational circuit that converts binary information from n input lines to a maximum of 2^n unique output lines. That is, at any time exactly one output bit is set to 1 (activated) while all others are equal to 0. (In an active-low output decoder, one output is equal to 0 at any time while the others are equal to 1.) If the n-bit coded information has unused combinations, the decoder may have fewer than 2^n outputs.

In particular, we will look at the n-bit binary decoder. The n-bit binary decoder has n inputs and 2^n outputs. There is also an enable input, En, controlling the decoder operation, such that En = 0 yields no active output and En = 1 lets the input valuation activate the appropriate output.

Let's analyze the decoder operation for the case of a 2-bit (2-to-4) decoder. The inputs I1 and I0 are treated as a

two-bit integer, the value of which specifies the precise output, Y0, Y1, Y2 or Y3, to be made equal to 1. We can

represent the relationship between the outputs and inputs with a truth table, graphical symbol, and logic circuit:

I1 I0 | Y3 Y2 Y1 Y0
0  0  | 0  0  0  1
0  1  | 0  0  1  0
1  0  | 0  1  0  0
1  1  | 1  0  0  0

Graphical symbol: inputs I1, I0; outputs Y3, Y2, Y1, Y0.

The 3-bit (3-to-8) decoder is shown below.


Combinational Circuits Implementation (Logic Function Synthesis) Using Decoders

A decoder provides the 2^n minterms of n input variables. Each asserted output of the decoder is associated with a unique pattern of input bits. Since any Boolean function can be expressed in sum-of-minterms form, a decoder that generates the minterms of the function, together with an external OR gate that forms their logical sum, provides a hardware implementation of the function. In this way, any combinational circuit with n inputs and m outputs can be implemented with an n-to-2^n decoder and m OR gates (one OR gate per output function).

In order to implement a combinational circuit by means of a decoder and OR gates, it is required that the

Boolean function for the circuit be expressed as a sum of minterms. A decoder is then chosen that generates all

the minterms of the input variables. The inputs to each OR gate are selected from the decoder outputs according

to the list of minterms of each function.

This procedure will be illustrated by an example that implements a full-adder circuit. From the truth table of the

full adder shown before, we obtain the functions for the combinational circuit in sum-of-minterms form:

S(x, y, z) = Σ(1, 2, 4, 7)

C(x, y, z) = Σ(3, 5, 6, 7)

Since there are three inputs and a total of eight minterms, we need a three-to-eight-line decoder. The

implementation is shown below. The decoder generates the eight minterms for x , y , and z . The OR gate for

output S forms the logical sum of minterms 1, 2, 4, and 7. The OR gate for output C forms the logical sum of

minterms 3, 5, 6, and 7.
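The decoder-plus-OR-gate construction of the full adder can be checked with a small Python model. The function names `decoder3to8` and `full_adder` are hypothetical, and x is assumed to be the most significant decoder input, so that minterm k corresponds to the binary value of xyz.

```python
def decoder3to8(x, y, z):
    """Return the eight minterm outputs [m0, ..., m7] for inputs x, y, z (x is the MSB)."""
    index = (x << 2) | (y << 1) | z
    return [1 if k == index else 0 for k in range(8)]


def full_adder(x, y, z):
    """Full adder built from a 3-to-8 decoder and two OR gates."""
    m = decoder3to8(x, y, z)
    s = m[1] | m[2] | m[4] | m[7]  # OR gate for S = sum of minterms 1, 2, 4, 7
    c = m[3] | m[5] | m[6] | m[7]  # OR gate for C = sum of minterms 3, 5, 6, 7
    return s, c


# Compare against ordinary integer addition for all eight input combinations.
for x in (0, 1):
    for y in (0, 1):
        for z in (0, 1):
            s, c = full_adder(x, y, z)
            assert 2 * c + s == x + y + z
```

The loop at the end confirms that the two minterm lists reproduce binary addition of three bits, with C as the weight-2 bit and S as the weight-1 bit.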


4.4.4 Encoders

An encoder is a digital circuit that performs the inverse operation of a decoder. An encoder has 2^n (or fewer)

input lines and n output lines. The output lines, as an aggregate, generate the binary code corresponding to the

input value. An example of an encoder is the 8-to-3 encoder whose truth table is given below. It has eight inputs

(one for each of the input digits) and three outputs that generate the corresponding binary number. It is assumed

that only one input has a value of 1 at any given time.

The encoder can be implemented with OR gates whose inputs are determined directly from the truth table.

Output Z is equal to 1 when the input digit is 1, 3, 5, or 7. Output Y is 1 for octal digits 2, 3, 6, or 7, and output X is

1 for digits 4, 5, 6, or 7. These conditions can be expressed by the following Boolean output functions:

Output functions:

X = D4 + D5 + D6 + D7

Y = D2 + D3 + D6 + D7

Z = D1 + D3 + D5 + D7
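As a quick check of these output functions, the following Python sketch evaluates them for each one-hot input. The function name `encoder8to3` and the list ordering [D0, ..., D7] are assumptions made for this illustration.

```python
def encoder8to3(d):
    """8-to-3 encoder sketch.

    d is a list [D0, D1, ..., D7]; exactly one entry is assumed to be 1.
    Returns (X, Y, Z), with X the most significant output bit.
    """
    x = d[4] | d[5] | d[6] | d[7]
    y = d[2] | d[3] | d[6] | d[7]
    z = d[1] | d[3] | d[5] | d[7]
    return x, y, z


# Apply each one-hot input in turn and print the resulting code X Y Z.
for k in range(8):
    d = [1 if i == k else 0 for i in range(8)]
    print(f"D{k} = 1 ->", *encoder8to3(d))
```

Note that D0 does not appear in any of the three OR expressions, so the all-zero input and the input D0 = 1 both produce the output 000.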

[Figure: 8-to-3 encoder block symbol with inputs D7 (I7) through D0 (I0) and outputs X (Y2), Y (Y1), Z (Y0).]

4.4.5 Priority Encoders

The encoder described above assumes that only one input has a value of 1 at any given time. But

what if two or more inputs happen to have a value 1 at the same time?

A priority encoder is an encoder circuit that includes the priority function. The operation of the priority encoder is

such that if two or more inputs are equal to 1 at the same time, the input having the highest priority will take

precedence. The truth table of a four-input priority encoder is given below.

In addition to the two outputs x and y, the circuit has a third output designated by V; this is a valid bit indicator

that is set to 1 when one or more inputs are equal to 1. If all inputs are 0, there is no valid input and V is equal to

0. The other two outputs are not inspected when V equals 0 and are specified as don't-care conditions. Note that

whereas X's in output columns represent don't-care conditions, the X's in the input columns are useful for

representing a truth table in condensed form. Instead of listing all 16 minterms of four variables, the truth table

uses an X to represent either 1 or 0. For example, X100 represents the two minterms 0100 and 1100.

According to the table, the higher the subscript number, the higher the priority of the input. Input D3 has the highest

priority, so, regardless of the values of the other inputs, when this input is 1, the output for xy is 11 (binary 3). D2

has the next priority level. The output is 10 if D2 = 1, provided that D3 = 0, regardless of the values of the other

two lower priority inputs. The output for D1 is generated only if higher priority inputs are 0, and so on down the

priority levels.
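The priority rule can be modelled by testing the inputs from highest to lowest priority. In this Python sketch the function name `priority_encoder4` is hypothetical, don't-care outputs are represented by `None`, and the codes 01 for D1 and 00 for D0 are assumed to follow the same binary-index pattern as the codes stated for D3 and D2.

```python
def priority_encoder4(d3, d2, d1, d0):
    """Four-input priority encoder sketch.

    Returns (x, y, v). When no input is 1, v is 0 and x, y are
    don't-care values, represented here as None.
    """
    if d3:
        return 1, 1, 1   # D3 wins regardless of D2, D1, D0
    if d2:
        return 1, 0, 1   # D2 wins only when D3 = 0
    if d1:
        return 0, 1, 1   # assumed code 01 for D1
    if d0:
        return 0, 0, 1   # assumed code 00 for D0
    return None, None, 0  # no valid input


print(priority_encoder4(0, 1, 0, 1))  # D2 takes precedence over D0
```

Each `if` is reached only when all higher-priority inputs are 0, which corresponds to the X entries in the condensed truth table.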


4.4.6 Multiplexers

A 2^m-to-1 multiplexer operates with 2^m data inputs and m select inputs. The select inputs route data from one of

the 2^m inputs to the single output.

Simple data input selection with multiplexers

Consider simple data input selection with 2-to-1, 4-to-1, and 16-to-1 multiplexers. We can use our knowledge of SOP

expressions and truth tables to characterize the data input selection process with multiplexers.

2-to-1 multiplexer

f = s0'w0 + s0 w1

s0 | f
 0 | w0
 1 | w1

4-to-1 multiplexer

f = s1's0'w0 + s1's0 w1 + s1 s0'w2 + s1 s0 w3

16-to-1 multiplexer

f = s3's2's1's0'w0 + ... + s3 s2 s1 s0 w15

s3 s2 s1 s0 | f
 0  0  0  0 | w0
 0  0  0  1 | w1
 0  0  1  0 | w2
 0  0  1  1 | w3
 0  1  0  0 | w4
 0  1  0  1 | w5
 0  1  1  0 | w6
 0  1  1  1 | w7
 1  0  0  0 | w8
 1  0  0  1 | w9
 1  0  1  0 | w10
 1  0  1  1 | w11
 1  1  0  0 | w12
 1  1  0  1 | w13
 1  1  1  0 | w14
 1  1  1  1 | w15
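The selection process can be sketched in Python in two ways: as an index lookup, which mirrors the truth table, and as a direct evaluation of the 4-to-1 SOP expression. The names `mux` and `mux4_sop` and the MSB-first ordering of the select bits are assumptions made for this example.

```python
def mux(w, s):
    """2^m-to-1 multiplexer sketch.

    w: data inputs [w0, w1, ..., w(2^m - 1)]
    s: select bits from most significant to least significant, e.g. (s1, s0)
    """
    assert len(w) == 2 ** len(s)
    # Interpret the select bits as an unsigned integer index into w.
    index = 0
    for bit in s:
        index = (index << 1) | bit
    return w[index]


def mux4_sop(w, s1, s0):
    """4-to-1 multiplexer evaluated directly from its SOP expression."""
    n1, n0 = 1 - s1, 1 - s0  # complemented select inputs s1' and s0'
    return (n1 & n0 & w[0]) | (n1 & s0 & w[1]) | (s1 & n0 & w[2]) | (s1 & s0 & w[3])


# Both models agree for every select combination of a 4-to-1 multiplexer.
w = [0, 1, 1, 0]
for s1 in (0, 1):
    for s0 in (0, 1):
        assert mux(w, (s1, s0)) == mux4_sop(w, s1, s0)
```

For the 16-to-1 case, `mux(w, (s3, s2, s1, s0))` with a 16-element list `w` returns the entry in the matching row of the truth table.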