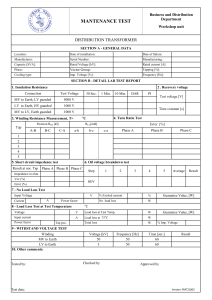

Principles of Deep Learning I
Itthi Chatnuntawech
Nanoinformatics and Artificial Intelligence (NAI)
November 14, 2022

Outline
❖ Deep learning components
  ❖ Fully connected layer
  ❖ Computation graph
  ❖ Activation functions
  ❖ Loss function
❖ Model optimization
  ❖ Gradient descent
  ❖ Backpropagation
  ❖ Stochastic gradient descent (SGD)
❖ Regularization
  ❖ $\ell_2$ and $\ell_1$ regularization
  ❖ Dropout
  ❖ Batch normalization
❖ Convolutional layer
❖ Pooling layer
❖ Convolutional Neural Network (CNN)

From Last Time: Image Classification Using Deep Learning
❖ Training phase: Optimize the weights of a deep neural network
❖ Test phase: Perform prediction using the trained neural network (e.g., "orange!")
[Figure: prepared inputs are fed to the network; the estimated outputs (0.7, 0.2, 0.1, 0.9) are compared with the prepared outputs (1, 0, 0, 1); training loss and validation loss are plotted against iteration.]

Deep Learning Components
❖ Fully connected layer (Dense)
❖ Convolutional layer: Conv1D, 2D, 3D, …, separable Conv
❖ Pooling layer: max-pooling, average-pooling
❖ Activation function: sigmoid, softmax, ReLU, ESP (swish)
❖ Loss function: categorical crossentropy, binary crossentropy, mean squared error, mean absolute error
❖ Optimizer: SGD, Adam, RMSprop
❖ Regularization: Dropout, data augmentation, l1 and l2 regularizations
❖ Evaluation metric: accuracy, F1-score, AUC, confusion matrix
Combine basic components to build a neural network.
• More components → "more" representative power

Artificial Neuron
A single neuron takes inputs $x_1, \dots, x_N$, multiplies them by weights $w_1, \dots, w_N$, adds a bias $w_0$, and applies a non-linear function $a$ (the activation):

$$y = a\left(\sum_{n=1}^{N} x_n w_n + w_0\right)$$

Different weights give rise to different behavior:
❖ If we set all the weights to 0, then $y = a(0)$, which is 0 for activations such as ReLU.
❖ If we set $w_1 = 1$ and the rest to 0, then $y = a(x_1)$.
❖ If we set $w_0 = 0$ and the rest to 1, then $y = a(x_1 + x_2 + \dots + x_N)$.
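The three weight settings above can be checked with a minimal sketch of a single neuron. The function names (`neuron`, `relu`, `sigmoid`) and the input values are illustrative assumptions, not part of the slides.

```python
import math

def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid activation: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, w0, activation=relu):
    """Single artificial neuron: y = a(sum_n x_n * w_n + w0)."""
    z = sum(xn * wn for xn, wn in zip(x, w)) + w0
    return activation(z)

x = [0.5, -1.0, 2.0]  # hypothetical input values

# All weights (and the bias) set to 0: y = a(0).
y_all_zero = neuron(x, [0.0, 0.0, 0.0], 0.0)

# w1 = 1 and the rest 0: y = a(x1).
y_first_only = neuron(x, [1.0, 0.0, 0.0], 0.0)

# w0 = 0 and the rest 1: y = a(x1 + x2 + ... + xN).
y_sum = neuron(x, [1.0, 1.0, 1.0], 0.0)

print(y_all_zero, y_first_only, y_sum)
```

Note that with all weights at 0 the output is a(0), so it depends on the activation: ReLU gives 0, whereas a sigmoid would give 0.5.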
Source: The Essence of Artificial Neural Networks by Ivan Liljeqvist (Medium)

Fully Connected Layer (Dense)
keyword: Multi-Layer Perceptron (MLP)
Each neuron computes $a(\mathbf{w}^T \mathbf{x})$. The example network has an input $x$ with 4 nodes (1 to 4), a first hidden layer with 5 neurons (A to E), weight matrices $W_1$, $W_2$, $W_3$, and an output $y$. Ignoring the biases for simplicity, hidden layer 1 computes

$$\begin{bmatrix} out_A \\ out_B \\ out_C \\ out_D \\ out_E \end{bmatrix} = a_1(W_1 x) = a_1\left(\begin{bmatrix} w_{A,1} & w_{A,2} & w_{A,3} & w_{A,4} \\ w_{B,1} & w_{B,2} & w_{B,3} & w_{B,4} \\ w_{C,1} & w_{C,2} & w_{C,3} & w_{C,4} \\ w_{D,1} & w_{D,2} & w_{D,3} & w_{D,4} \\ w_{E,1} & w_{E,2} & w_{E,3} & w_{E,4} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix}\right)$$

and the whole network computes

$$y = a_3(W_3\, a_2(W_2\, a_1(W_1 x)))$$

Representing Neural Network
keyword: Multi-Layer Perceptron (MLP)
The same network, $y = a_3(W_3 a_2(W_2 a_1(W_1 x)))$, drawn as a computation graph:
x → Fully connected 1 (5 nodes, $a_1$) → Fully connected 2 (7 nodes, $a_2$) → Fully connected 3 (3 nodes, $a_3$) → y

Activation Functions
[Figure: plots of common activation functions. Source: Introduction to Exponential Linear Unit]

Two-class Classification
The output is the probability of being class 1, a value between 0 and 1 (e.g., 0.99).
Fully connected 1 (3 nodes, ReLU) → Fully connected 2 (2 nodes, ReLU) → Fully connected 3 (1 node, sigmoid)

    model.add(tf.keras.layers.Dense(3, activation='relu'))
    model.add(tf.keras.layers.Dense(2, activation='relu'))
    model.add(tf.keras.layers.Dense(1, activation='sigmoid'))

Three-class Classification
The outputs are the probabilities of being class 1, class 2, and class 3.
Fully connected 1 (3 nodes, ReLU) → Fully connected 2 (2 nodes, ReLU) → Fully connected 3 (3 nodes, sigmoid)
What if our model outputs something like 0.99 0.99 0.99 0.1 0.02? Not good: each sigmoid output is computed independently, so the outputs need not sum to 1 the way class probabilities should.

Softmax function

$$\sigma(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

Example with $K = 3$, $z_1 = 5$, $z_2 = 2$, $z_3 = 1$:

$$\frac{e^{z_1}}{e^{z_1} + e^{z_2} + e^{z_3}} = \frac{e^{5}}{e^{5} + e^{2} + e^{1}} = 0.936$$

$$\frac{e^{z_2}}{e^{z_1} + e^{z_2} + e^{z_3}} = \frac{e^{2}}{e^{5} + e^{2} + e^{1}} = 0.047$$

$$\frac{e^{z_3}}{e^{z_1} + e^{z_2} + e^{z_3}} = \frac{e^{1}}{e^{5} + e^{2} + e^{1}} = 0.017$$

$$0.936 + 0.047 + 0.017 = 1$$

Extend the model to K-class Classification
keywords: multi-class classification ≠ multi-label classification
Fully connected 1 (3 nodes, ReLU) → Fully connected 2 (2 nodes, ReLU) → Fully connected 3 (K nodes, softmax) → P(class 1), P(class 2), …, P(class K)

    model.add(tf.keras.layers.Dense(3, activation='relu'))
    model.add(tf.keras.layers.Dense(2, activation='relu'))
    model.add(tf.keras.layers.Dense(K, activation='softmax'))
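The softmax example with z = (5, 2, 1) from the three-class classification slides can be reproduced with a short sketch. The helper names are illustrative, and subtracting the maximum before exponentiating is a common numerical-stability trick assumed here; it is not something the slides mention.

```python
import math

def softmax(z):
    """Softmax: exp(z_i) / sum_j exp(z_j), computed in a numerically stable way."""
    m = max(z)  # subtracting the max does not change the result
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(v):
    """Sigmoid applied to a single score."""
    return 1.0 / (1.0 + math.exp(-v))

z = [5.0, 2.0, 1.0]

probs = softmax(z)                        # approximately [0.936, 0.047, 0.017]
independent = [sigmoid(v) for v in z]     # each value is in (0, 1) on its own

print([round(p, 3) for p in probs], round(sum(probs), 3))
print([round(s, 3) for s in independent], round(sum(independent), 3))
```

The softmax outputs sum to 1, so they can be read as a distribution over the K classes; the independent sigmoid outputs generally do not sum to 1.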
Loss function (also called cost function or error function)
❖ A function $L$ that maps values of variable(s) onto a real number. For example, $L(y, \hat{y})$ gives us a number that tells us how "different" $y$ and $\hat{y}$ are.
❖ We have used it to monitor how our model performs during the training phase (training loss and validation loss plotted against iteration).
❖ Mean squared error (MSE) and mean absolute error (MAE) are two popular choices for regression:

$$L(y, \hat{y}) = \frac{1}{N}\sum_{i=1}^{N} (y_i - \hat{y}_i)^2 \quad \text{(MSE)}$$

$$L(y, \hat{y}) = \frac{1}{N}\sum_{i=1}^{N} |y_i - \hat{y}_i| \quad \text{(MAE)}$$

❖ Cross-entropy is the most common choice for a classification task.

Cross-entropy
❖ Well suited to comparing probability distributions

$$L(y, \hat{y}) = -\sum_{k=1}^{K} y_k \log \hat{y}_k$$

Example: 3-class classification. The deep learning layers end with a fully connected layer (3 nodes, softmax) that outputs the predicted probability distribution $\hat{y} = [0.7, 0.1, 0.2]$. The true probability distribution is the one-hot encoding $y = [1, 0, 0]$. By definition ($K = 3$):

$$L(y, \hat{y}) = -(1 \times \log(0.7) + 0 \times \log(0.1) + 0 \times \log(0.2)) = -1 \times \log(0.7) = 0.15$$

Assume that we have updated the weights of our model and got $\hat{y} = [0.9, 0.1, 0]$. Then

$$L(y, \hat{y}) = -1 \times \log(0.9) + 0 + 0 = 0.046$$

These values use the base-10 logarithm; with the natural logarithm they are 0.357 and 0.105, and the comparison is unchanged. Lower loss: $\hat{y}$ has become more similar to $y$ than in the previous case. Our model performs better!

Optimizing Our Model
❖ To train our model $f_W$, we adjust its weights $W$ in a way that decreases our loss function.
❖ In particular, we minimize the loss function with respect to $W$:

$$L(y, \hat{y}) = L(y, f_W(x))$$

where $x$ is the prepared input, $y$ is the prepared output (labels/targets), $\hat{y} = f_W(x)$ is the predicted output, and $W$ are the weights.
Calculus! Compute the derivative of $L(y, f_W(x))$ with respect to $W$ and set it to 0.

Example: Optimization
❖ Goal: Find $w$ that minimizes the loss function $L(w) = w^2$
Method 1: Compute the derivative (gradient) and set it to 0

$$\frac{dL(w)}{dw} = \frac{d w^2}{dw} = 2w = 0 \;\Rightarrow\; w = 0$$

In our case, we have $L(y, f_W(x))$, and it is not simple to compute the gradient with respect to $W$. Even when we have a way to compute the gradient and manage to set it to zero, we might not be able to get a closed-form solution for it.
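The cross-entropy example from the 3-class classification slide can be checked numerically. This is a minimal sketch with a hypothetical helper `cross_entropy`; it evaluates the loss with both the natural and the base-10 logarithm, since the slide's values (0.15 and 0.046) match base 10.

```python
import math

def cross_entropy(y_true, y_pred, log=math.log):
    """Cross-entropy L = -sum_k y_k * log(yhat_k).

    Terms with y_k = 0 are skipped, which also avoids evaluating log(0).
    """
    return -sum(t * log(p) for t, p in zip(y_true, y_pred) if t > 0)

y = [1, 0, 0]                 # one-hot true distribution
y_hat_before = [0.7, 0.1, 0.2]
y_hat_after = [0.9, 0.1, 0.0]

# Natural logarithm
loss_before_ln = cross_entropy(y, y_hat_before)
loss_after_ln = cross_entropy(y, y_hat_after)

# Base-10 logarithm (matches the numbers on the slide)
loss_before_log10 = cross_entropy(y, y_hat_before, log=math.log10)
loss_after_log10 = cross_entropy(y, y_hat_after, log=math.log10)

print(round(loss_before_ln, 3), round(loss_after_ln, 3))
print(round(loss_before_log10, 3), round(loss_after_log10, 3))
```

Whichever base is used, the loss drops after the update, which is the point of the example.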
Example: Optimization (gradient descent)
keyword: gradient descent
❖ Goal: Find $w$ that minimizes the loss function $L(w) = w^2$
Method 2: Iteratively solve for $w$
• Randomly start somewhere
• Gradually move to the minimum of the function
[Figure source: Gradient Descent for Logistic Regression Simplified – Step by Step Visual Guide]

The sign of the derivative $\frac{dL(w)}{dw}$ tells us which way to move:
❖ positive value (positive slope) → move left to decrease the function
❖ zero → we are at the minimum
❖ negative value (negative slope) → move right to decrease the function
The negative of the gradient, $-\frac{dL(w)}{dw}$, tells us the direction we should move to decrease the function.

Algorithm:
1. Guess an initial solution $w_{(0)}$ and pick the step size (learning rate) $\alpha$, which indicates how far we want to move.
For iteration $k = 0, 1, 2, \dots$
2. Compute $\left.\frac{dL(w)}{dw}\right|_{w = w_{(k)}} = 2w_{(k)}$
3. Compute a new solution $w_{(k+1)} := w_{(k)} - \alpha \left.\frac{dL(w)}{dw}\right|_{w = w_{(k)}} = w_{(k)} - \alpha \cdot 2w_{(k)}$
4. Repeat steps 2 and 3 until convergence.

Worked runs:
❖ $w_{(0)} = -2$, $\alpha = 0.5$: $w_{(1)} = -2 - 0.5 \cdot 2 \cdot (-2) = 0$, $w_{(2)} = 0$, $w_{(3)} = 0$
❖ $w_{(0)} = 2$, $\alpha = 0.5$: $w_{(1)} = 2 - 0.5 \cdot 2 \cdot 2 = 0$, $w_{(2)} = 0$, $w_{(3)} = 0$
❖ $w_{(0)} = 2$, $\alpha = 0.25$: $w_{(1)} = 2 - 0.25 \cdot 2 \cdot 2 = 1$, $w_{(2)} = 1 - 0.25 \cdot 2 \cdot 1 = 0.5$, $w_{(3)} = 0.5 - 0.25 \cdot 2 \cdot 0.5 = 0.25$, $w_{(4)} = 0.125$, $w_{(5)} = 0.0625$
❖ $w_{(0)} = 2$, $\alpha = 1$: $w_{(1)} = 2 - 1 \cdot 2 \cdot 2 = -2$, $w_{(2)} = -2 - 1 \cdot 2 \cdot (-2) = 2$, $w_{(3)} = -2$, $w_{(4)} = 2$, $w_{(5)} = -2$. The iterates oscillate and never converge.

Example: Gradient
keywords: local minimum/maximum, global minimum/maximum
[Figure source: Deep Learning, NeuroEvolution & Extreme Learning Machines]

Optimization: Learning Rate
[Figure: fixed learning rate vs. adaptive learning rate. Source: Deep Learning, NeuroEvolution & Extreme Learning Machines]

Gradient
1-dimensional case: $L(w_1) = w_1^2$
Derivative of $L(w_1)$:

$$\frac{dL(w_1)}{dw_1} = \frac{d w_1^2}{dw_1} = 2w_1$$

Weight update:

$$w_{1,(k+1)} := w_{1,(k)} - \alpha \left.\frac{dL(w_1)}{dw_1}\right|_{w_1 = w_{1,(k)}} = w_{1,(k)} - \alpha \cdot 2w_{1,(k)}$$

2-dimensional case: $L(w_1, w_2) = w_1^2 + w_2^2$
Partial derivatives of $L(w_1, w_2)$:

$$\frac{\partial L(w_1, w_2)}{\partial w_1} = \frac{\partial}{\partial w_1}(w_1^2 + w_2^2) = 2w_1, \qquad \frac{\partial L(w_1, w_2)}{\partial w_2} = \frac{\partial}{\partial w_2}(w_1^2 + w_2^2) = 2w_2$$

Weight update, using the gradient $\nabla_w L(w_1, w_2)$:

$$\begin{bmatrix} w_{1,(k+1)} \\ w_{2,(k+1)} \end{bmatrix} = \begin{bmatrix} w_{1,(k)} \\ w_{2,(k)} \end{bmatrix} - \alpha \begin{bmatrix} \left.\frac{\partial L(w_1, w_2)}{\partial w_1}\right|_{w_1 = w_{1,(k)}} \\ \left.\frac{\partial L(w_1, w_2)}{\partial w_2}\right|_{w_2 = w_{2,(k)}} \end{bmatrix} = \begin{bmatrix} w_{1,(k)} \\ w_{2,(k)} \end{bmatrix} - \alpha \begin{bmatrix} 2w_{1,(k)} \\ 2w_{2,(k)} \end{bmatrix}$$

Optimization vs. Real Life
❖ Optimization: no local minima problem (global minimum = local minimum)
❖ Real life: could get stuck in local minima
[Figure: loss surfaces over $w_1$ and $w_2$. Source: O'Reilly Media]
Furthermore, how do we compute the gradient of a complicated loss function such as the one in our example, $L(y, f_W(x))$?
@#Q#@%!!!!!! Backpropagation!
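The gradient descent runs on L(w) = w² with different learning rates can be reproduced with a few lines of code. This is a minimal sketch: the function name `gradient_descent` and the fixed number of steps (instead of a convergence test) are assumptions made for illustration.

```python
def gradient_descent(w0, alpha, steps):
    """Run gradient descent on L(w) = w**2 and return all iterates w(0), w(1), ..."""
    w = w0
    history = [w]
    for _ in range(steps):
        grad = 2 * w            # dL/dw evaluated at the current w
        w = w - alpha * grad    # w(k+1) := w(k) - alpha * dL/dw
        history.append(w)
    return history

run_half = gradient_descent(-2.0, alpha=0.5, steps=3)     # reaches 0 in one step
run_quarter = gradient_descent(2.0, alpha=0.25, steps=5)  # halves w at every step
run_one = gradient_descent(2.0, alpha=1.0, steps=5)       # oscillates between 2 and -2

print(run_half)
print(run_quarter)
print(run_one)
```

For this particular loss each update multiplies w by (1 - 2α), which explains the three behaviors: the factor is 0 for α = 0.5, 0.5 for α = 0.25, and -1 for α = 1.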
(Yoshua Bengio)

Backpropagation
Computation graph: $w_1$ and $w_2$ enter an addition node that outputs $z = w_1 + w_2$; $z$ and $w_3$ enter a multiplication node that outputs $L = z \times w_3$.

$$L(w_1, w_2, w_3) = z \times w_3 = (w_1 + w_2) \times w_3$$

Exercise: Compute the gradient vector. Use the chain rule:

$$\frac{\partial L}{\partial w_1} = \frac{\partial L}{\partial z}\frac{\partial z}{\partial w_1} = \frac{\partial (w_3 z)}{\partial z}\frac{\partial (w_1 + w_2)}{\partial w_1} = w_3(1 + 0) = w_3$$

$$\frac{\partial L}{\partial w_2} = \frac{\partial L}{\partial z}\frac{\partial z}{\partial w_2} = \frac{\partial (w_3 z)}{\partial z}\frac{\partial (w_1 + w_2)}{\partial w_2} = w_3(0 + 1) = w_3$$

$$\frac{\partial L}{\partial w_3} = \frac{\partial (z w_3)}{\partial w_3} = z = w_1 + w_2$$

$$\nabla_w L(w_1, w_2, w_3) = \begin{bmatrix} w_3 \\ w_3 \\ w_1 + w_2 \end{bmatrix}$$

Backpropagate
Annotate each edge of the graph with its local derivative and multiply them from the output back toward the inputs:

$$\frac{\partial z}{\partial w_1} = 1, \qquad \frac{\partial z}{\partial w_2} = 1, \qquad \frac{\partial L}{\partial z} = w_3, \qquad \frac{\partial L}{\partial w_3} = w_1 + w_2$$

Modified from CS231n Convolutional Neural Networks for Visual Recognition

With actual numbers
Let $w_1 = -2$, $w_2 = 5$, $w_3 = -4$.
Forward pass: we calculate the values of the variables in the computation graph.

$$z = -2 + 5 = 3, \qquad L = -4 \times 3 = -12$$

Backward pass: we calculate the gradients and evaluate them using the values from the forward pass.

$$\frac{\partial L}{\partial z} = w_3 = -4, \qquad \frac{\partial L}{\partial w_3} = w_1 + w_2 = z = 3$$

$$\frac{\partial L}{\partial w_1} = \frac{\partial L}{\partial z}\frac{\partial z}{\partial w_1} = -4 \times 1 = -4, \qquad \frac{\partial L}{\partial w_2} = \frac{\partial L}{\partial z}\frac{\partial z}{\partial w_2} = -4 \times 1 = -4$$

$$\nabla_w L = \begin{bmatrix} -4 \\ -4 \\ 3 \end{bmatrix}$$

Update the weights
Given $w_{(0)} = [-2, 5, -4]^T$, $\nabla_w L = [-4, -4, 3]^T$ at $w_{(0)}$, $\alpha = 1$, and $L(w_{(0)}) = -12$, the weight update is

$$w_{(1)} = w_{(0)} - \alpha \nabla_w L = \begin{bmatrix} -2 \\ 5 \\ -4 \end{bmatrix} - 1 \begin{bmatrix} -4 \\ -4 \\ 3 \end{bmatrix} = \begin{bmatrix} 2 \\ 9 \\ -7 \end{bmatrix}, \qquad L(w_{(1)}) = -77$$

$$w_{(2)} = w_{(1)} - \alpha \nabla_w L = \;?, \qquad L(w_{(2)}) = \;?$$

Modified from CS231n Convolutional Neural Networks for Visual Recognition

Backpropagation: a larger example

$$f(w, x) = \frac{1}{1 + e^{-(w_0 x_0 + w_1 x_1 + w_2)}}$$

Taken from CS231n Convolutional Neural Networks for Visual Recognition

Exploding/Vanishing Gradients
❖ $0.05^5 = 3.125 \times 10^{-7}$: very small
❖ $15^5 = 759375$: very large
Taken from Recurrent Neural Networks Tutorial, Part 3 – Backpropagation Through Time and Vanishing Gradients

Training Our Model
The prepared inputs $x_1, x_2, \dots, x_N$ are fed to the deep learning model $f_W$, which produces the predicted outputs $\hat{y}_1, \hat{y}_2, \dots, \hat{y}_N$. Comparing them with the prepared outputs $y_1, y_2, \dots, y_N$ gives the loss values $L(y_1, \hat{y}_1), L(y_2, \hat{y}_2), \dots, L(y_N, \hat{y}_N)$.
Total loss:

$$L(y, \hat{y}) = \sum_{i=1}^{N} L(y_i, \hat{y}_i) = \sum_{i=1}^{N} L(y_i, f_W(x_i))$$

Update the weights:
❖ Compute the gradient of the total loss w.r.t. $W$ using backpropagation: $\nabla_W L = \sum_{i=1}^{N} \nabla_W L(y_i, f_W(x_i))$
❖ Assign the new weights: $W \leftarrow W - \alpha \nabla_W L$

Stochastic Gradient Descent
❖ Every time we want to update $W$, we need to compute the loss functions and their gradients for all samples: $\nabla_W L = \sum_{i=1}^{N} \nabla_W L(y_i, f_W(x_i))$
❖ For very large $N$ (14,000,000 images?), we might not be able to do it due to both time and memory constraints.
❖ For stochastic gradient descent (SGD), we update $W$ based on subsets of samples called mini-batches.
Training data: batch 1, batch 2, batch 3, …, batch B
1 epoch = the model has seen the whole training data once (i.e., B batches in this case). In this example, the weight matrix $W$ is updated B times per epoch.

Optimizers
❖ Popular choices are
  ❖ Stochastic gradient descent (SGD)
  ❖ SGD with momentum
  ❖ RMSprop
  ❖ Adam
  ❖ ADADELTA
  ❖ AdaGrad
[Figure: optimizer trajectories on a loss landscape over $w_1$ and $w_2$ (bright = low, dark = high). Source: Interactive Visualization of Optimization Algorithms in Deep Learning]

Putting the components together
x → ReLU → ReLU → Softmax → y (probabilities of being class 1, class 2, and class 3)

    # Import necessary modules
    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import layers

    # Create the model
    model = keras.Sequential()
    model.add(layers.Dense(3, activation="relu"))
    model.add(layers.Dense(2, activation="relu"))
    model.add(layers.Dense(3, activation="softmax"))

    # Compile the model (loss function and optimizer)
    model.compile(
        optimizer='adam',
        loss=tf.keras.losses.CategoricalCrossentropy(),
    )

    # Train the model for 100 epochs with a batch size of 32
    model.fit(x_train, y_train, batch_size=32, epochs=100, validation_data=(x_val, y_val))

[Figure: training loss and validation loss plotted against iteration.]
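Going back to the "With actual numbers" backpropagation example, the forward pass, the backward pass, and one weight update can be traced in code. This is a minimal sketch for the specific graph L = (w1 + w2) × w3; the function names `forward` and `backward` are illustrative assumptions, and the gradients are written out by hand rather than produced by an automatic differentiation library.

```python
def forward(w1, w2, w3):
    """Forward pass: compute the intermediate value z and the output L."""
    z = w1 + w2
    L = z * w3
    return z, L

def backward(w1, w2, w3, z):
    """Backward pass: chain rule, reusing z from the forward pass."""
    dL_dz = w3            # derivative of the multiplication node w.r.t. z
    dL_dw3 = z            # derivative of the multiplication node w.r.t. w3
    dL_dw1 = dL_dz * 1.0  # dz/dw1 = 1 for the addition node
    dL_dw2 = dL_dz * 1.0  # dz/dw2 = 1 for the addition node
    return [dL_dw1, dL_dw2, dL_dw3]

w = [-2.0, 5.0, -4.0]
alpha = 1.0

z, loss0 = forward(*w)       # z = 3, L = -12
grad = backward(*w, z)       # [-4, -4, 3]

# One gradient descent step: w(1) = w(0) - alpha * gradient
w_new = [wi - alpha * gi for wi, gi in zip(w, grad)]
_, loss1 = forward(*w_new)   # L(w(1))

print(z, loss0, grad, w_new, loss1)
```

The loss drops from -12 to -77 after one step. Since this toy loss has no lower bound, further steps keep decreasing it; it is meant to illustrate the mechanics, not a realistic training objective.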
Regularization
[Figure: training loss and validation loss plotted against iteration; in the overfitting case the validation loss rises while the training loss keeps decreasing.]
❖ Regularization is frequently used to mitigate overfitting
❖ Add a regularization term to the loss function
  ❖ $\ell_2$ regularization:

$$\min \sum_{i=1}^{N} L(y_i, f_W(x_i)) \;\rightarrow\; \min \sum_{i=1}^{N} L(y_i, f_W(x_i)) + \lambda \|w\|_2$$

  ❖ $\ell_1$ regularization:

$$\min \sum_{i=1}^{N} L(y_i, f_W(x_i)) \;\rightarrow\; \min \sum_{i=1}^{N} L(y_i, f_W(x_i)) + \lambda \|w\|_1$$

❖ Use dropout (much more popular)

Dropout
keyword: dropblock for CNN
❖ Dropout can be used to reduce overfitting
❖ Randomly omit some neurons/units
[Figure: training and test loss and accuracy, with and without dropout. Source: Dropout for Deep Learning Regularization, explained with Examples]

Batch Normalization
keywords: layer normalization, instance normalization, group normalization, standardization
❖ Normalize every batch
❖ Reduces internal covariate shift problems*
❖ Can be thought of as a form of implicit regularization, so it can help reduce overfitting
❖ More robust to bad initialization
*This has been challenged by more recent work
[Figures: histograms (counts vs. values) before and after normalization; normalization across the batch size and # of features axes. Sources: Batch Normalization — Speed up Neural Network Training; Lecture 7 (Stanford, CS231n, 2018)]

Data Augmentation
[Figure source: Data augmentation-assisted deep learning of hand-drawn partially colored sketches for visual search]

Number of weights in a fully connected layer
For hidden layer 1 of the earlier example (4 inputs, 5 hidden nodes A to E, biases ignored), $[out_A, \dots, out_E]^T = a_1(W_1 x)$, where $W_1$ holds one weight per pair of input node and hidden node.
❖ # of weights for hidden layer 1 = 4 x 5 = 20
❖ # of weights per layer = # of incoming nodes x # of hidden nodes
What if our input dimension is 256 x 256 = 65,536 and we use 1024 hidden nodes? 67 M trainable parameters for just one layer!

Do we need that many parameters?
Would it be the case that
❖ Only neighboring neurons talk to each other?
❖ Furthermore, they talk to their neighbors in the same way?
[Figure: two neurons separated by 1M nodes, one with its own weights $w_{g,1}, w_{g,2}, w_{g,3}$ and the other with $w_{o,1}, w_{o,2}, w_{o,3}$, are replaced by neurons that share the same weights $w_1, w_2, w_3$.]
Far fewer parameters!

2D Convolution
❖ Similarly, for images, maybe only neighboring pixels talk to each other?
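To put numbers on the parameter-count argument (# of weights = # of incoming nodes × # of hidden nodes), the following sketch compares a fully connected layer with a layer of small shared filters. The helper names are hypothetical, biases are ignored as in the slides, and the single-channel input and the 3 × 3 filter size are assumptions made for the comparison.

```python
def dense_weight_count(n_inputs, n_hidden):
    """Weights in a fully connected layer (biases ignored): one per input-hidden pair."""
    return n_inputs * n_hidden

def conv2d_weight_count(kernel_h, kernel_w, in_channels, n_filters):
    """Weights in a 2D convolutional layer (biases ignored).

    Each filter has kernel_h * kernel_w * in_channels weights, shared across
    all positions of the image.
    """
    return kernel_h * kernel_w * in_channels * n_filters

small_dense = dense_weight_count(4, 5)                 # the 4-input, 5-node example
large_dense = dense_weight_count(256 * 256, 1024)      # 256 x 256 input, 1024 hidden nodes

# Assumption: a single-channel image processed by 3 x 3 filters.
one_filter = conv2d_weight_count(3, 3, in_channels=1, n_filters=1)
many_filters = conv2d_weight_count(3, 3, in_channels=1, n_filters=32)

print(small_dense, large_dense, one_filter, many_filters)
```

Because the filter weights are shared across positions, the count does not depend on the image size, which is where the large saving comes from.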
[Figure: 2D convolution and 2D pooling. Source: Deep learning radiology: the secret of convolutional neural networks]

2D Convolution

$$y[n_1, n_2] = x[n_1, n_2] * h[n_1, n_2] = \sum_{k_1=-\infty}^{\infty} \sum_{k_2=-\infty}^{\infty} x[k_1, k_2]\, h[n_1 - k_1, n_2 - k_2]$$

Input $x$ and filter/kernel $h$ (the kernel defines the relationship between neighboring pixels):

$$x = \begin{bmatrix} 0 & 2 & 1 & 1 & 0 \\ 0 & 1 & 1 & 1 & 0 \\ -3 & -1 & 1 & 0 & 1 \\ 0 & 6 & 0 & 0 & 1 \\ 0 & 4 & 1 & 7 & 0 \end{bmatrix}, \qquad h = \begin{bmatrix} 0 & 1 & 0 \\ 1 & 1 & 1 \\ 0 & 1 & 0 \end{bmatrix}$$

The kernel slides over the input. The first three output values are
❖ 0*0 + 2*1 + 1*0 + 0*1 + 1*1 + 1*1 + -3*0 + -1*1 + 1*0 = 3
❖ 2*0 + 1*1 + 1*0 + 1*1 + 1*1 + 1*1 + -1*0 + 1*1 + 0*0 = 5
❖ 1*0 + 1*1 + 0*0 + 1*1 + 1*1 + 0*1 + 1*0 + 0*1 + 1*0 = 3
Continuing over all positions gives a smaller output:

$$y = \begin{bmatrix} 3 & 5 & 3 \\ 4 & 1 & 3 \\ 9 & 8 & 8 \end{bmatrix}$$

Zero-padding
Surrounding $x$ with a border of zeros (giving a 7 x 7 input) produces an output of the same size as the original input:

$$y = \begin{bmatrix} 2 & 4 & 5 & 3 & 1 \\ -2 & 3 & 5 & 3 & 2 \\ -4 & 4 & 1 & 3 & 2 \\ 3 & 9 & 8 & 8 & 2 \\ 4 & 11 & 12 & 8 & 8 \end{bmatrix}$$

Example filters/kernels
❖ Low-pass filter: an all-ones kernel
❖ "Gradient image": $\begin{bmatrix} -1 & 1 \\ -1 & 1 \end{bmatrix}$ and $\begin{bmatrix} -1 & -1 \\ 1 & 1 \end{bmatrix}$
❖ "Plus-sign detector": $\begin{bmatrix} 0 & 1 & 0 \\ 1 & 1 & 1 \\ 0 & 1 & 0 \end{bmatrix}$
[Figures: an image convolved with each kernel and the resulting output.]

❖ Different filters/kernels process images differently
  ❖ Low-pass filter (blurring/denoising)
  ❖ High-pass filter (sharpening)
  ❖ Shape detector
  ❖ Refer to Image Kernels for a good demo (complementary information)
❖ Instead of defining the filter ourselves, we let the neural network come up with good ones for us: the kernel entries $w_{11}, w_{12}, \dots, w_{33}$ become trainable weights.

    model.add(Conv2D(32, (3, 3), padding="same", activation="relu"))  # 32 = # of filters, (3, 3) = filter size

❖ 2D convolution with more than one channel
[Figure: an RGB input convolved with 1 filter and with 128 filters. Source: A Comprehensive Introduction to Different Types of Convolutions in Deep Learning]

Pooling Layers

    model.add(layers.MaxPooling2D((2,2)))

[Figure source: Mixed Pooling for Convolutional Neural Networks]

Convolutional Neural Network (CNN)
keywords: feature maps, activation maps, receptive field, backbone
❖ The input passes through successive layers that produce feature maps, and the network outputs the probability of being a cat and the probability of being a dog.
❖ Same filter size (e.g., 3 x 3 filter), but different coverages for different "resolutions".
❖ The network can be viewed as two parts: feature extraction, followed by classification/regression on the extracted features (feature 1, feature 2).
[Figure source: Disadvantages of CNN models]

ImageNet
ImageNet Large Scale Visual Recognition Challenge (ILSVRC) winners, compared by top-5 classification error, from the SVM era (traditional ML) to the deep learning era. Key ideas of the successive deep learning winners, as annotated on the chart:
❖ AlexNet: Conv, Pooling, Dense, Dropout, ReLU, SGD with momentum
❖ ZFNet: optimized "AlexNet" in terms of # of filters, kernel sizes, etc.
❖ 19 layers with filter size = 3x3
❖ Introduce 1x1 Conv to reduce the dimensions; multiple losses to help with gradients; Inception module
❖ Residual connection: skip connection to help with the vanishing gradient problem; batch normalization
❖ Network ensemble
❖ Squeeze-and-Excitation (SE) block: adaptive feature map reweighting
More networks: Xception, ResNeXt, DenseNet, MobileNet, NASNet, EfficientNet, SqueezeNet, Feature Pyramid Network
The last annual ImageNet competition was held in 2017.
Source: Stanford CS231n (Spring 2022): Lecture 6.

Model complexity
Floating-point operations (FLOPs) required for a single forward pass.
[Figures: benchmark results on Titan Xp and Jetson TX1.]
Bianco, Simone, et al. "Benchmark analysis of representative deep neural network architectures." IEEE access 6 (2018): 64270-64277.
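The worked 2D convolution example from the convolution slides (5 × 5 input, plus-shaped 3 × 3 kernel) can be reproduced with a small pure-Python sketch. The function `conv2d` and its `pad` argument are illustrative assumptions; the kernel is flipped before sliding so that the code follows the convolution formula, although for this symmetric kernel the flip has no effect.

```python
def conv2d(x, h, pad=0):
    """2D convolution of image x with kernel h, with `pad` rings of zeros around x."""
    kh, kw = len(h), len(h[0])
    # True convolution flips the kernel in both directions before sliding it.
    flipped = [row[::-1] for row in h[::-1]]
    width = len(x[0]) + 2 * pad
    padded = (
        [[0] * width for _ in range(pad)]
        + [[0] * pad + list(row) + [0] * pad for row in x]
        + [[0] * width for _ in range(pad)]
    )
    out_h = len(padded) - kh + 1
    out_w = width - kw + 1
    return [
        [
            sum(padded[i + a][j + b] * flipped[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

x = [
    [0, 2, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [-3, -1, 1, 0, 1],
    [0, 6, 0, 0, 1],
    [0, 4, 1, 7, 0],
]
h = [
    [0, 1, 0],
    [1, 1, 1],
    [0, 1, 0],
]

y_valid = conv2d(x, h)         # no padding: 3 x 3 output
y_same = conv2d(x, h, pad=1)   # one ring of zeros: 5 x 5 output

for row in y_valid:
    print(row)
for row in y_same:
    print(row)
```

With `pad=1` the output has the same size as the input, which corresponds to `padding="same"` in the Keras `Conv2D` call for a 3 × 3 filter.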
Understanding CNN - Filter Visualization
Learned weights of the filters at the first layer (AlexNet conv1, applied directly to the input image):
❖ 64 filters, filter size = 11 x 11 with 3 channels
❖ What these filters look for (through template matching/inner product): oriented edges and opposing colors
❖ Similar to the human visual system!
The weights of conv2 tell us what type of activation patterns after conv1 would cause conv2 to maximally activate. These filters are not connected directly to the input image, so they are not directly interpretable. We need more complicated techniques to get a sense of what is going on.
Source: Stanford CS231n (Spring 2022): Lecture 8.

Understanding CNN - Last Layer
❖ The output of fc7 is a feature vector (last layer) of size 4096 computed from the input image.
❖ L2 nearest neighbors in pixel space of a test image.
❖ Apply a machine learning algorithm to the output of fc7, e.g., Barnes-Hut t-SNE: https://cs.stanford.edu/people/karpathy/cnnembed/
Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "Imagenet classification with deep convolutional neural networks." Advances in neural information processing systems 25 (2012).
Source: Stanford CS231n (Spring 2022): Lecture 8.

Understanding CNN - Other Techniques
❖ Saliency maps: gradient of the class score w.r.t. image pixels
Simonyan, Karen, Andrea Vedaldi, and Andrew Zisserman. "Deep inside convolutional networks: Visualising image classification models and saliency maps." arXiv preprint arXiv:1312.6034 (2013).
❖ Occlusion experiments
Zeiler, Matthew D., and Rob Fergus. "Visualizing and understanding convolutional networks." European conference on computer vision. Springer, Cham, 2014.
❖ …and many more, such as
  ❖ Guided Backpropagation
  ❖ Grad-CAM, Score-CAM, HiResCAM
  ❖ Attention map visualization
Source: Stanford CS231n (Spring 2022): Lecture 8.

Trained CNN as a Feature Extractor
The first-layer filters look for oriented edges and opposing colors (through template matching/inner product). These patterns are important for processing other types of images as well, so we can reuse trained weights when we encounter a related task or the same task but with a different dataset.
Source: Stanford CS231n (Spring 2022): Lecture 8.
❖ Previous task: cat-vs-dog classification (probability of being a cat? probability of being a dog?). Train all the weights of this network.
❖ New task: watermelon-vs-orange classification. Take parts of the trained network and use them as a pretrained feature extractor, and only train the weights of the to-be-trained classifier (watermelon or orange?).

Transfer Learning and Fine-Tuning
Source network: trained on ImageNet (1000 classes, 14M images), with layers layer 1, layer 2, …, layer L-1, layer L, FC-4096, FC-4096, FC-1000. Earlier layers are more "reusable"; later layers are less "reusable".
❖ AI-vs-Artists (2 classes), assume small dataset: clone layer 1 through FC-4096 along with their weights and do not modify the weights; replace the classification layer (FC-1000 → FC-2) and train it.
❖ Dog-vs-cat (2 classes), assume dataset of moderate size: clone the earlier layers along with their weights and do not modify the weights; clone the later layers along with their weights and retrain them with an initially low learning rate; replace the final fully connected layers (FC-n_filters, FC-2) and train them.