
In

science ,

form hypotheses

we

All datasets will have

to explain

observations

•

accuracy

•

•

•

. .

s

.

measurement

to accepted

is

"

are

close to true

values

"

inaccurate

value

÷

actual

fake

!

calibrate

probability

confidence

equipment should be properly calibrated to ensure readings

how close a

accurate

results

in

by your apparatus

determined

is

accuracy

.

"

•

confident

must be

we

the reliability of results

need to evaluate

•

But

.

variability in results affecting

leads to

degree of variation

some

by testing them

you

are

in known

by weighing

using apparatus

pH solution

Known

" ""

buffer

;

* ensure

"

mass

"

read manuals !

scale is level

ensure

"

I

systematic error

:

consistent repeatable

,

equipment

error

flawed design

,

,

faulty

associated with

human

error

Preventable

.

.

mL

Precision

"

.

.

'

of

consistency

.

.

measurements

close same

precise

are

to

-

20.0 ± 0.5

-

.

20

125 ± 12.5 mL

'

how

I

5

other

imprecise

,

repeats / trials

variance :

/

error

± uncertainty of apparatus

:

add

uncertainties

standard deviation :

10 ± 0.2

within

-

within a group

error :

unknown , unpredictable variations

ex :

5.6 ± 0.1

ex :

10.1 ± 0.1

-

can

-

5.6 ± 0.1

mean

0

a

a

powerful statistical measure

repeatable methodology

,

Outliers increase variability and St. Dev / CV

/°bit

I

high precision

within

*

Generally

5 repeats /trials per

high variability

low

precision

:

group variation

error bar overlap:

overlap :

between group variation

suggests no difference between groups

inconclusive : does

do

ex : conc

mean

not support hypothesis

further tests are required to determine significance
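
The error bar notes above can be checked numerically. This is a minimal sketch, assuming the error bars show mean ± 1 St. Dev; the function names and the data are hypothetical. Overlap only hints at "no difference", so a statistical test is still needed to determine significance.

```python
import statistics

def error_bar(values):
    """Return the (low, high) ends of a mean +/- 1 St. Dev error bar."""
    mean = statistics.mean(values)
    st_dev = statistics.stdev(values)  # sample St. Dev (n - 1)
    return mean - st_dev, mean + st_dev

def bars_overlap(group_1, group_2):
    """True when the two error bars share any part of their range."""
    low_1, high_1 = error_bar(group_1)
    low_2, high_2 = error_bar(group_2)
    return low_1 <= high_2 and low_2 <= high_1

# Hypothetical data: 5 repeats per group
control = [4.0, 4.2, 4.1, 3.9, 4.3]
treatment = [6.0, 6.1, 5.8, 6.2, 5.9]
print(bars_overlap(control, treatment))
```

Small, non-overlapping bars may support the hypothesis; overlapping bars are inconclusive on their own.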

strong controls and many repeats result in ↓ variability within groups

*

uncertainty

4.5 ± 0.2

group variation

its own ,

Cannot be

groups can have

=

difference between groups

on

.

reduced

* IV

St. Dev

CV=

be

-

is not

uncertainty

✓ 10.1 ± 0.2

large error bars

may support hypothesis

note : St Dev

✗ 10.25 ± 0.2

between group variation

suggests

as

50

small error bars :

no error bar

,

✗

-

-

coefficient of variation : measure of relative variability
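
A short sketch of the St. Dev and CV calculations, assuming CV = St. Dev ÷ mean × 100 as in these notes. The trial values and the function name are hypothetical.

```python
import statistics

def coefficient_of_variation(values):
    """Return CV as a percentage: (sample St. Dev / mean) * 100."""
    mean = statistics.mean(values)
    st_dev = statistics.stdev(values)  # sample St. Dev (n - 1)
    return st_dev / mean * 100

# Hypothetical data: 5 repeats, the minimum suggested per experimental group
trials = [10.1, 10.3, 9.9, 10.0, 10.2]
print("mean:", round(statistics.mean(trials), 2))
print("St. Dev:", round(statistics.stdev(trials), 2))
print("CV (%):", round(coefficient_of_variation(trials), 1))
```

A low CV means low relative variability, i.e. high precision.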

variation

,

* value must have same Sig fig

100

of the spread of most data surrounding the

measure

bar :

Generally

"

prevented but

visually show

*

I

-

precision :

Added /subtracted values :
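
A minimal sketch of the rule for added or subtracted values (add the absolute uncertainties), using the 10.1 ± 0.1 and 5.6 ± 0.1 values that appear in these notes. The function names are hypothetical.

```python
def add_measurements(a, b):
    """a and b are (value, uncertainty) pairs; uncertainties are added."""
    return a[0] + b[0], a[1] + b[1]

def subtract_measurements(a, b):
    """Values are subtracted, but the uncertainties are still added."""
    return a[0] - b[0], a[1] + b[1]

final = (10.1, 0.1)
initial = (5.6, 0.1)
value, uncertainty = subtract_measurements(final, initial)
# Report the value to the same decimal place as the uncertainty
print(f"{value:.1f} ± {uncertainty:.1f}")
```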

Mean

"

f

"

± 10

-

,

15

random

Raw data

precise your measurement tools

.

± 0.001

Calculating

more

the less uncertainty in measurements

-

measurements

each

The

"

.

,

and probability

i. e.

low

of effect

STD / CV

Do NOT exclude them but rather highlight them and discuss their potential cause and significance

experimental group is the MINIMUM

,

otherwise

St .D is

not reliable

more

in

FAQ

.


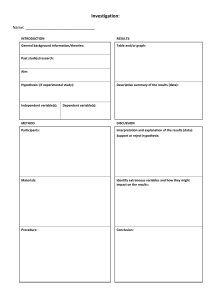

The

type of data you collect and your RQ

Relationship

between

2

your statistical

determines

analysis

Is there

hypothesis being tested

continuous variables

difference ?

a

comparing groups

1

I

Regression

1

discrete data

continuous

categorical data

1

1

data

12¥,

{

groups

dependent

t

:

✓

equal variance +

✓

normally distributed

✓

groups

✓

1

:

-

U

rank test

-

-

two

way

|

distribution

\

10=-0.05

compare all groups

compare groups to control

Tukey post hoc

Dunnett post hoc

Whitney

χ² goodness of fit test

-

-

test

equal variance and/or

✗

normally distributed

Kruskal

✗

only valid when certain assumptions concerning the data

are

.

does group fall

within an expected

way

-

ANOVA

groups :

Many statistical tests

testa

l

ANOVA

equal variance and/or

✗

normally distributed

Mann

groups

independence

equal variance

normally distributed

✗

Wilcoxon

selected

an

equal probability of being

every sample must

this ensures the sample is more representative of

population

have

:

☒


one

groups :

NOT

sampling

2 or more

IVs

two

l

1

random

association between

unpaired t-test

normally distributed

signed

groups

2- sample

paired t-test

-

IV

one

independent

2- sample

data

3+ groups

groups

data normally

distributed

(counts , frequencies )

,

i. e.

no

-

-

10=-0.05

Wallis

test

are

bias

Dunn

post

-

hoc
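
The decision tree for comparing groups can be sketched as a small helper. This is only an illustration of the flow chart in these notes; the function name, parameters and returned labels are hypothetical, and it covers a continuous DV only (categorical counts go to a χ² test, and two continuous variables go to regression).

```python
def choose_test(n_groups, paired=False, n_ivs=1, assumptions_met=True):
    """Suggest a test for comparing groups on a continuous DV.

    assumptions_met: True when the data are normally distributed
    with equal variance (checked beforehand).
    """
    if n_groups < 2:
        raise ValueError("need at least 2 groups to compare")
    if n_groups == 2:
        if paired:  # dependent groups, e.g. before + after
            return "paired t-test" if assumptions_met else "Wilcoxon signed rank test"
        return "unpaired t-test" if assumptions_met else "Mann-Whitney U test"
    if n_ivs >= 2:  # 3+ groups with 2 or more IVs
        return "two-way ANOVA"
    if assumptions_met:
        return "one-way ANOVA (Tukey or Dunnett post hoc)"
    return "Kruskal-Wallis test (Dunn post hoc)"

print(choose_test(2, paired=True))
print(choose_test(4, assumptions_met=False))
```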

true

during selection

a

normal distribution : symmetrical distribution of random variables

where

independent groups

:

samples

i. e.

equal variance :

50% of values

I

~

50% of values

I

\

-

distinct without

(St. Dev) within

.

each

of thumb : if no group has

another,

:

tests for normality

in

a

sample

Ho : population is normally distributed

p <

contain different sets of items in each sample

groups are

variance

rule

~

Shapiro-Wilk Test

0.05

reject null not normally distributed

,

overlapping items among groups

group is

~

equal

Levene's Test :

tests the

2x the variance of

variance is assumed

to be equal
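
The equal variance rule of thumb can be sketched as below, assuming "2x the variance" means the largest group variance is at least double the smallest. The function name and data are hypothetical, and this quick check does not replace Levene's test.

```python
import statistics

def variances_roughly_equal(groups, max_ratio=2.0):
    """True when no group has max_ratio times the variance of another."""
    variances = [statistics.variance(g) for g in groups]  # sample variance
    return max(variances) < max_ratio * min(variances)

# Hypothetical data
group_a = [4.1, 4.3, 3.9, 4.0, 4.2]
group_b = [5.0, 5.3, 4.8, 5.1, 4.9]
print(variances_roughly_equal([group_a, group_b]))
```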

equality of variance amongst groups

Ho : variances among groups are equal

.

p <

0.05

reject null

,

variance

not equal

among groups

Regression

y = a + bx

i. e.

DV ( y-axis )

of the relation /association between

measure

:

average

r2

.

of y

value

is

function of

a

amount of variance between

-

( x-axis )

IV

and

generates regression line

I

"

y = ab^x

p=

←

i

'

'

DV

accounts for

'

-

how well a

i. e-

,

linear

'

Allows you to make predictions

affect y ?

✗

Alternative hypothesis ( Ha)

:

,

distribution of

Either A associates with B ( positive association )

null

hypothesis ( Ho )

Chi

squared (χ²) goodness of fit test

A does

or

,

:

N

exponential regression

logistic regression

*

cannot

:

e.

r

use

for non linear

'

regressions

-

or

causality

association is likely

e.

not associate

with

any association is

tests if categorical variables fall within

HA sample data do not follow the expected

:

N

species A and species B

statistically significant association between variables i.

no

example

.

This does not determine correlation

.

statistically significant association between variables i.

:

:

:

.

tests if a relationship between categorical variables exists

Ecology example significant association between

Ho : sample

-

.

of the nature of a relationship between continuous variables

squared (χ²) test for independence

\ Genetics

I

variability of y is explained by variability in x

other 11% of variance is explained by other factors
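
A minimal least-squares sketch of linear regression (y = a + bx) and r², written in plain Python so each step is visible. The function name and the data are hypothetical. An r² of 0.89 would mean 89% of the variability in y is explained by variability in x.

```python
def linear_regression(x, y):
    """Return intercept a, slope b and r^2 for the line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx               # slope
    a = mean_y - b * mean_x     # intercept
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r2 = 1 - ss_res / ss_tot    # proportion of variance in y explained by x
    return a, b, r2

# Hypothetical data: IV on the x-axis, DV on the y-axis
iv = [1, 2, 3, 4, 5]
dv = [2.1, 3.9, 6.2, 7.8, 10.1]
a, b, r2 = linear_regression(iv, dv)
print(f"y = {a:.2f} + {b:.2f}x, r2 = {r2:.3f}")
```

The fitted line allows predictions of y for a given x, but a high r² describes association, not causality.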

understanding

How does

\

.

r

89% of

regression

Allows an

i

r² = 0.89

ex :

P

.

-

O

Iv

DV

or

the variation in the data

-

•

Chi

fits '

regression line

'

(a + bx)

-

-

2 variables

It e-

a

non

-

random

species are dependent

B ( negative association )

likely due to chance

specified distribution

sampled population

is not

representative

of entire population

distribution

expected ( Mendelian ) phenotypic ratios

difference between observed and

sampling error

or

difference

suggests gene linkage

suggests genes

data follow the expected distribution

unlinked

are

Both χ² tests are calculated the same
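
A short sketch of the χ² statistic, assuming the usual form χ² = Σ (observed − expected)² ÷ expected. The counts are hypothetical and compare a dihybrid cross against the expected Mendelian 9:3:3:1 phenotypic ratio; the function names are also hypothetical.

```python
def chi_squared(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def expected_from_ratio(ratio, total):
    """Split a total count according to an expected ratio, e.g. 9:3:3:1."""
    parts = sum(ratio)
    return [total * r / parts for r in ratio]

# Hypothetical phenotype counts from 160 offspring
observed = [95, 28, 27, 10]
expected = expected_from_ratio([9, 3, 3, 1], sum(observed))
statistic = chi_squared(observed, expected)
degrees_of_freedom = len(observed) - 1  # number of groups - 1
print(round(statistic, 3), degrees_of_freedom)
```

The calculated χ² is then compared with the critical value for the chosen significance level and degrees of freedom.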

p

-

value : probability of

obtaining the observed effect

\

significance =p

data obtained

χ² < CV: fail to reject null hypothesis

χ² > CV: reject null hypothesis

we

an

don't accept Ho

calculated ✗ 2

as

we

,

evidence

allows us to

=

probability

extreme) results

where

strength

reject

or

| degrees of freedom | 0.05 | 0.01 | 0.001 |
|---|---|---|---|
| 1 | 12.71 | 63.66 | 636.62 |
| 2 | 4.30 | 9.93 | 31.60 |
| 3 | 3.18 | 5.84 | 12.94 |

reject null hypothesis

at p= 0.01

12.3 (DF = 2)

haven't proven

effect doesn't exist; rather, the

of the

*

reject null hypothesis

ex:

*

of obtaining similar ( or more

5% or less likelihood

the

Ho

is

correct

Ho

is

true

degrees of freedom

significance level (a)

(number of groups - 1) 0.05 0.01 0.001

expected

expected

XZ

i. e.

Not

data expected

observed

=

0.05

Ho is true

dataset if

in the

not

.

Since

I > 9.93

Assuming

in

1%

no

we

effect

of studies

,

can

we

due

would obtain

to random error

}

observed differences or

critical

values

more

(CV)

t-test:

2- sample

HA

:

there

Ho :

no

is

used to determine

significant difference

a

if there is a significant difference between the

between

2

$$t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$$

compare the calculated t with the CV

s = St. Dev
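
A minimal sketch of the 2-sample t statistic, assuming the unpaired form built from the means, St. Dev and sample size of two groups: t = (mean₁ − mean₂) ÷ √(s₁²/n₁ + s₂²/n₂). The function name and data are hypothetical.

```python
import math
import statistics

def two_sample_t(group_1, group_2):
    """t = difference in means / combined standard error of the two groups."""
    mean_1, mean_2 = statistics.mean(group_1), statistics.mean(group_2)
    s_1, s_2 = statistics.stdev(group_1), statistics.stdev(group_2)
    n_1, n_2 = len(group_1), len(group_2)
    return (mean_1 - mean_2) / math.sqrt(s_1 ** 2 / n_1 + s_2 ** 2 / n_2)

# Hypothetical data: control vs treatment
control = [5.1, 4.9, 5.3, 5.0, 5.2]
treatment = [6.0, 6.3, 5.9, 6.1, 6.2]
print(round(two_sample_t(treatment, control), 2))
```

The size of t is compared with the critical value; the sign only shows which group has the larger mean.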

( is A larger than B)

tailed : compares differences in only 1 direction

n =

sample size

two tailed : compares differences

-

paired

related /dependent groups

compares

:

\ assumes : ✓random

(is A smaller

either direction

in

-

reject null hypothesis

:

-

mean

fail to reject null hypothesis

:

CV

✗

difference due to treatment and not chance or sampling error

-

one

(x̄₁ − x̄₂)

i. e.

.

or

larger than B)

( before + after )

test same group 2x

sampling ✓ DV continuous + normally distributed

> 20)

for

✓

groups are

dependent

n=

)

unpaired : compares unrelated /independent groups

\ assumes : ✓random

test separate groups (control vs treatment)

sampling ✓ DV continuous + normally distributed

> 15 )

( or

✓

ANOVA (Analysis of variance): used to determine if there is a significant difference

why can't

we

HA at least

:

do

multiple t tests

one

group is significantly different

Type

increases

-

Ho : no statistically significant difference between

$$F = \frac{\text{MS between-group variance}}{\text{MS within-group variance}}$$
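
A plain-Python sketch of the one-way ANOVA F ratio, assuming F = MS between ÷ MS within, where each mean square (MS) is a sum of squares divided by its degrees of freedom. The function name and data are hypothetical.

```python
def one_way_f(groups):
    """Return F = MS between-group variance / MS within-group variance."""
    k = len(groups)                       # number of groups
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within

# Hypothetical data: 3 groups, 3 repeats each
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
print(one_way_f(groups))
```

A large F means the variation between groups is large relative to the variation within groups.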

independent

error :

the

PIO 05

.

any groups

all

random

One

way

error

F < F crit: fail to reject null hypothesis

F > F crit: reject null hypothesis

there

3 or

more

groups

probability that you incorrectly rejected null hypothesis
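
A quick sketch of why multiple t tests increase the Type I error. It counts the pairwise comparisons for 4 groups and estimates the chance of at least one false positive, assuming the comparisons are independent (a simplification) and each uses a significance level of 0.05. The function name is hypothetical.

```python
from itertools import combinations

groups = ["A", "B", "C", "D"]
pairs = list(combinations(groups, 2))  # every possible pair of groups
print(len(pairs), pairs)

def familywise_error(n_comparisons, alpha=0.05):
    """Chance of at least one Type I error across independent comparisons."""
    return 1 - (1 - alpha) ** n_comparisons

print(round(familywise_error(len(pairs)), 3))
```

ANOVA followed by a post hoc test keeps the overall error rate controlled instead.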

means

-

equal

is a difference

amongst groups

ANOVA :

\ assumes :

Types of ANOVAs

-

way ANOVA

\ Post hoc tests control

-

Tukey 's Post hoc

:

-

4 groups : A

,

what

for Type 1 errors

✓random

groups differ specifically ( post

,

so

even

the

B C, D

requires

comparisons :

among

B and

are

,

treatment pair

only statistically significant difference

these groups

✓

groups have

equal variance

-

hoc

"

=

A

between

A

-

-

-

B

providing

p

where two

examine

✓

after this

an

value

IV

interaction between

residuals ( value

-

mean

are

considered

IVs

) have equal variance

sampling

if many comparisons are made, the

A

i.

also

\ assumes : ✓ DV continuous

all possible combinations between groups

6

+

compares 3+ groups

can

compares

,

:

,

-

-

considered

IV is

one

normally distributed ✓ groups are

✓random

> 15 )

( or

independent

sampling

✓ DV continuous

post hoc

Post hoc test : used to determine

where

compares 3+ groups

n=

to determine which groups differ

"

error

i. e.

following

a

statistically significant ANOVA )

constant

rate remains

adjusted p value for

each

treatment pair

| treatment pair (grouping) | p value |
|---|---|
| A - B | 0.89 |
| A - C | 0.21 |
| A - D | 0.11 |
| B - C | 0.01 * |
| B - D | 0.04 * |
| C - D | 0.25 |

}

only

B and C

B and D

are

and

significantly

different from each other

C (p = 0.01) and B and D (p = 0.04)
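
Reading a post hoc table can be sketched as a simple filter. The adjusted p values below are the Tukey example values as reconstructed from these notes, and the 0.05 cut-off is the significance level used throughout; the variable names are hypothetical.

```python
# Adjusted p value for each treatment pair (Tukey example from the notes)
tukey_p = {
    ("A", "B"): 0.89,
    ("A", "C"): 0.21,
    ("A", "D"): 0.11,
    ("B", "C"): 0.01,
    ("B", "D"): 0.04,
    ("C", "D"): 0.25,
}

alpha = 0.05
significant = [pair for pair, p in tukey_p.items() if p < alpha]
print(significant)
```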

-

-

* the

Dunnett 's Post hoc : compares all treatment groups to

-

ex :

equal variance

from the rest

Two

ex :

of

means

groups have

-

=

-

if

1

between the

✓

groups are

n=

*

groups

Types of t tests

statistically significant

difference between both groups

t

groups

and St. Dev of two

means

1 control (A), 3 treatment groups (B, C, D)

,

more

comparisons

made, the less

statistical power

only control group

| treatment pair | p value |
|---|---|
| A - B | 0.03 * |
| A - C | 0.11 |
| A - D | 0.10 |

only group B

*

differed from control significantly

because fewer comparisons,

higher statistical power

a) how

,

data within groups

examine

likely

Trials

µ

.

unusually high or low

values

are

identified ?

be

they

can

\

point that differs significantly from others making them unusual in dataset

data

Outlier :

1

outliers

|

outliers

|   | 1 | 2 | 3 |
|---|---|---|---|
| a | 8 | 8 | 2 |
| b | 9 | 10 | 8 |
| c | 2 | 4 | 5 |

•

} compared to other

bars

•

:

•

in trial ,

values

↑ error

•

outlier

'

✓

very low

alters LOBF

•

•

b) why are they important ?

\ outlier

c) how

\

mean

(↑ or ↓)

error

in

error in

measurement and entry

data

sampling

outliers

any time

measured in incorrect

sample

2

outliers

-

occur ,

occur

can

identify them clearly

significant

:

the

it does not mean the

✗

-

evidence is not

fail to reject

we

null

null

\

an

error :

remeasure

( if possible ) or

as we

know its

an

error :

remeasure

( if possible ) or remove

an

do not

are

\

p

mean

value

your

can

as

removed ,

clearly justify why

?

0.05

p

an

effect exists

hypothesis is true

an

look important , but

③ what does it

\

mean

if

it means that

.

:

we

\ the

reject

value

p

the

the

\

be

may

is

,

and

but

alternative

or

it's possible :

is

false

effect size

i. e.

too small for

is

size

proof there is

no

you to

effect

detect

too small

is

variability is too high for hypothesis test to detect

didn't work

random

significant

error

10=-0.05

?

strong enough to suggest

an

effect

may exist in the population

hypothesis

stronger the evidence against the Ho

lower the p value , the

✗ it does not mean

population

important caution against drawing conclusions based on differences that

evidence is

null

in the

hypothesis

investigation failed

be

data

doing so skews

remove ,

sample

✗ it does not

remove

analysis without as a comparison
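
Running the analysis with and without a suspected outlier can be sketched as below. The data are hypothetical (four consistent repeats and one unusually low value), and the outlier is picked out by hand rather than by a formal rule.

```python
import statistics

def summarise(values):
    """Return (mean, sample St. Dev) for a list of repeats."""
    return statistics.mean(values), statistics.stdev(values)

# Hypothetical data: the last trial is unusually low
trials = [8.0, 8.2, 7.9, 8.1, 2.0]
suspected_outlier = 2.0
without_outlier = [v for v in trials if v != suspected_outlier]

mean_all, sd_all = summarise(trials)
mean_without, sd_without = summarise(without_outlier)
print("with outlier:    mean", round(mean_all, 2), "St. Dev", round(sd_all, 2))
print("without outlier: mean", round(mean_without, 2), "St. Dev", round(sd_without, 2))
```

Reporting both results shows how much the outlier alters the mean and increases within group variation, without quietly deleting data.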

strong enough to suggest

effect may exist

an

know its

outliers

if

*

is non

.

do ?

we

as we

-

what does it mean if p value

the

-

naturally

doing

it means that

statistical power

conditions

consider

\

do

reduces

.

outliers cannot be removed,

*

increases within group variation

-

sample not of target pop

-

unusual

\ natural variation

if

this

.

d) what

-

*

St D

and increases

they caused ?

are

\

alters

the

null

to replicate

need

we

hypothesis is false and

or

the lower the probability of

and

alternative

is

true

i. e.

proof of

statistically significant results several times before

we

an

effect

can

have

a

false positive

confidence

"

s,

✗ it does not relate to the

strength

of the

investigation

or

its

importance ,

i. e.

low p value ≠

Ronald

Fisher

good investigation

Further reading

Frost, J. 2020. Hypothesis Testing: An Intuitive Guide for Making Data Driven Decisions. Statistics By Jim Publishing.

Biologyforlife.com: statistics html

Statisticssolutions.com (free resources: directory of statistical analyses)