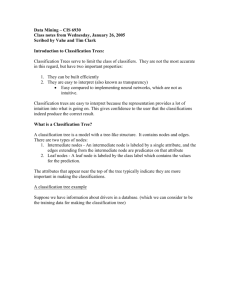

What is a Decision Tree?

A decision tree is a supervised learning algorithm that can be used for both classification and regression tasks. It recursively splits the data into subsets based on the most informative attribute at each step. The goal is to create a model that predicts the value of a target variable from several input variables.

How does it work?

Tree Construction:
- It starts with the entire dataset at the root node.
- It selects the best attribute using a splitting criterion (e.g., information gain, Gini impurity) and splits the dataset into subsets.
- This process repeats recursively for each child node until a stopping criterion is met (e.g., maximum depth, minimum number of samples per node).

Attribute Selection Measures:
- Information Gain: Measures the reduction in entropy (uncertainty) after a dataset is split on an attribute.
- Gini Impurity: Measures the probability of incorrectly classifying a randomly chosen element if it were labeled at random according to the distribution of labels in the subset.
- Gain Ratio: Similar to information gain, but normalizes it by the split information (the entropy of the partition itself), which penalizes attributes that split the data into many small branches.

Tree Pruning: After the tree is constructed, it may be pruned to improve generalization and reduce overfitting. Pruning removes branches that are not statistically significant and add little predictive power.

Advantages:
- Interpretability: Decision trees produce easily interpretable if-then rules.
- Handles Non-linearity: They can model non-linear relationships between features and the target variable.
- Handles Mixed Data: They can handle both numerical and categorical data.
- Feature Importance: Decision trees can show which features contribute most to the predictions.

Disadvantages:
- Overfitting: Decision trees are prone to overfitting, especially when tree depth is not properly limited.
- Instability: Small variations in the data can lead to very different tree structures, which makes the model unstable.
- Bias: Shallow or heavily pruned trees can have high bias and underfit, a risk that is more pronounced with small datasets, where the tree often has to be kept small.
- Not Suitable for Some Problems: They may perform poorly when the relationship between features and the target is very complex or smooth, because axis-aligned splits can only approximate it with many small steps.

Applications:
- Classification Problems: spam detection, medical diagnosis, customer churn prediction.
- Regression Problems: predicting house prices, demand forecasting.

Variants:
- Random Forests: An ensemble learning method that builds many decision trees (each on a bootstrap sample of the data, using random subsets of features) and combines their predictions by voting or averaging.
- Gradient Boosted Trees: An ensemble learning method in which trees are built sequentially, with each new tree correcting the errors made by the previously trained trees.

Decision trees are versatile and widely used because of their simplicity and effectiveness, especially where interpretability is crucial. However, they require careful tuning to avoid common pitfalls such as overfitting.

Tell me about purity and how it is measured while training a Decision Tree.

ChatGPT

Purity describes how homogeneous a node in a decision tree is, that is, how well it represents a single class or category. It is usually quantified through its opposite, impurity: the more mixed the labels in a node, the higher its impurity. Decision tree algorithms use these measures to choose the best splits when constructing the tree.

The most common measures used in decision tree algorithms are:

Gini Impurity: Gini impurity measures the probability of incorrectly classifying a randomly chosen element in a dataset if it were randomly labeled according to the distribution of labels in the subset. Mathematically, it can be expressed as: