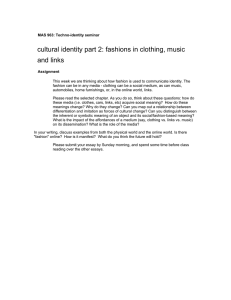

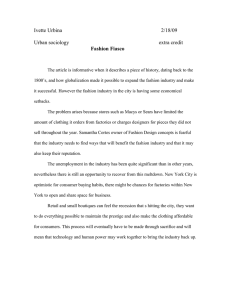

TUTORIAL

A Survey of Artificial Intelligence in Fashion

Hung-Jen Chen, Hong-Han Shuai, and Wen-Huang Cheng

The fashion industry is on the verge of an unprecedented change. Fashion applications are benefiting greatly from the development of machine learning, computer vision, and artificial intelligence. In this article, we present an overview of three major topics of fashion and associated state-of-the-art techniques: 1) fashion analysis, including popularity prediction and fashion trend analysis; 2) fashion recommendation, including fashion compatibility and outfit matching; and 3) fashion synthesis, including makeup transfer and virtual try-on. Problem formulations, method comparisons, and evaluation metrics are illustrated for each topic of fashion research. Additionally, promising directions for development in each area are outlined to inspire future research.

Digital Object Identifier 10.1109/MSP.2022.3233449
Date of current version: 1 May 2023
IEEE SIGNAL PROCESSING MAGAZINE | May 2023 | 64
1053-5888/23©2023IEEE
Authorized licensed use limited to: The University of Hong Kong Libraries. Downloaded on November 26,2023 at 23:24:07 UTC from IEEE Xplore. Restrictions apply.

Introduction

Fashion has become an indispensable part of the economy, society, and individuals. People are interested in fashion and want to know what looks best on them and how they can improve their style and the impression they make. As such, the issue of how to help people quickly and accurately find their own beauty products has gradually become a research hotspot. With the advance of machine learning, there has been a trend of using machine learning techniques in the fashion industry for various applications, e.g., in improving revenue (https://www.statista.com/outlook/dmo/ecommerce/fashion/worldwide#revenue), increasing customer loyalty (https://www.firstinsight.com/knowledge-base/machine-learning-ai-for-retail-fashion), and staying ahead of trends (https://www.vogue.co.uk/tags/fashion-trends).

In this article, we introduce three important research topics (fashion analysis, recommendation, and synthesis), as shown in Figure 1.

FIGURE 1. An overview of the fashion applications introduced in this work. Fashion research is divided into fashion analysis (popularity prediction, fashion trend analysis), fashion recommendation (fashion compatibility, outfit matching), and fashion synthesis (makeup transfer, virtual try-on).

Specifically, fashion analysis consists of the two major research areas of popularity prediction and fashion trend analysis. The study of popularity prediction on social media has become important with the explosive growth of social media content (i.e., text, images, audio, and video) and interactive behavior among web users. Therefore, extensive efforts have been expended in the past few years to predict social media content popularity, understand its variation, and evaluate its growth. This popularity reflects user interests and provides opportunities to understand user interaction with online content as well as information diffusion through social media platforms. Hence, an accurate popularity prediction of online content may improve user experience and service effectiveness. Moreover, it can significantly enhance several important applications, such as online advertising and online product marketing. Most research on popularity prediction focuses on exploring the correlation between popularity and user-item factors, such as item content, user cues, social relations, and user-item interactions. In fact, time also exerts a crucial impact on popularity but is often overlooked. Hence, Wu et al. [1] first proposed investigating popularity prediction by factoring popularity into two contextual associations, i.e., user-item context and time-sensitive context.

Fashion trend analysis is also of great significance and aims to master the changeability of fashion.
Despite rapid technological changes, the desire to convey a sense of self through one’s appearance has not changed. As such, fashion guidance to develop good taste and catch up with trends is needed. Additionally, for the fashion industry, valid forecasting enables fashion companies to establish marketing strategies wisely and to continuously anticipate and fulfill their consumers’ wants and needs. In particular, fashion trends reflect what’s “in” and what’s “out” for the season. To date, fashion trend analysis is mainly performed by fashion experts who have intensive fashion knowledge. However, even for fashion experts, it is time consuming to collect and analyze fashion trends since there are numerous fashion shows each year. With this in mind, several recent studies aim to automate this process. For example, Hidayati et al. [2] first introduced a framework for discovering visual style elements that well represent the fashion trends at New York Fashion Week (NYFW) that are 1) frequently occurring within the given fashion week event and 2) unique for the latest fashion week. For fashion recommendation, the goal is to help users find products relevant to their interests as they navigate large collections of fashion products by using information from product views, followed or ignored items, purchases, and webpages visited to determine exactly how, when, and what to recommend to customers. For example, if you consider an outfit as a set of clothes with a specific style or a good outfit composition, we need to not only follow a dress code but also balance color and styles creatively. Although there is a substantial number of studies on clothing retrieval and recommendation, none of them considers the problem of fashion outfit composition. Thus, dressing with style is more than just clothing; it is about how we carry ourselves to reflect our attitude and personality. Nevertheless, dressing with style takes strategy. 
A number of key principles need to be considered to dress with style, including the flattering styles for our body shape, the occasion, and the combination of our clothing items. Body shape is the first thing to consider when choosing the perfect clothing styles. Hidayati et al. [3] proposed the first framework for learning the compatibility of clothing styles and body shapes from social big data, with the goal of giving users better recommendations about what to wear in relation to their essential body attributes.

Finally, fashion synthesis generates a realistic-looking person with different makeup or clothing styles. Makeup transfer is a new application of virtual reality technology in images. However, applying makeup is time consuming, and it takes even longer to find a suitable makeup for each individual. The ability to quickly see virtual makeup effects on images has become a necessity for many young people. Therefore, makeup transfer technology has received increasing attention. The ideal makeup transfer method needs to maintain the face appearance of the plain face image, transferring only the makeup style of the reference image, and the final output generated image should automatically present the perfect combination of the plain face portrait and the reference makeup. Nevertheless, since people’s facial expressions, face shapes, lip shapes, eyebrow shapes, eye shapes, and distances between eyebrows and eyes are different, the output generated image may not be well integrated or may even be distorted. Moreover, a makeup style is changeable and irregular, and it is also affected by age and race. Different works leverage generative models to handle these challenges. For example, Li et al. [4] utilized generative adversarial networks (GANs) with cycle consistency between inputs and outputs to produce makeup and antimakeup looks simultaneously in a single forward pass.
In addition to makeup, despite the convenience online fashion shopping provides, consumers are concerned about how a particular fashion item in a product image would look on them when buying apparel online. Thus, allowing consumers to virtually try on clothes not only enhances their shopping experience, transforming the way people shop for clothes, but also saves costs for retailers. Virtual try-on enables customers to try on products using their camera-equipped devices. Image-based virtual try-on is among the most promising approaches of virtual fitting that show target clothes on a customer’s image. However, it is difficult to design an algorithm that can synthesize the results under different conditions, e.g., different body shapes and different poses with some occlusions, as well as preserve the details of the clothing itself, e.g., cloth texture, logo, and text. Therefore, recent work has also leveraged generative models to tackle this challenging task. For instance, Han et al. [5] presented an image-based virtual try-on network (VITON) without using 3D information in any form, which seamlessly transfers the desired clothing item onto the corresponding region of a person using a coarse-to-fine strategy.

In the following sections, we introduce several classic works, present the evaluation metrics, and provide future directions for each category. Overall, the contributions of our work can be summarized as follows:
1) We provide the latest survey of the current progress in the fashion domain and categorize fashion research topics into three important categories: analysis, recommendation, and synthesis.
2) For each category of fashion research, we provide an organized review of the significant methods
and their contributions. Moreover, evaluation metrics for different problems are introduced.
3) Several possible future directions for each category in the area of fashion research are provided, which may benefit the research community.

Fashion analysis

Popularity prediction

In recent years, popularity prediction on social media has attracted extensive attention because of its widespread applications, such as online marketing and trend detection. Popularity prediction on social media is usually defined as the problem of regressing the rating scores, view counts, or click-throughs of a post [1]. Given the image and corresponding post-context information, the goal is to predict the popularity score. Figure 2 shows an example framework of image popularity prediction [6], which takes the visual content features and social context features as the input and then fuses them to predict the popularity score.

FIGURE 2. Diagram of the proposed framework for image popularity prediction as proposed by Abousaleh et al. [6]. Diagram labels: visual content features (low-level features, high-level features, and deep learning features from VGG19), social context features (user features, post metadata features, and time features), dimensionality reduction using PCA, visual network, social network, fusion network, and predicted popularity. PCA: principal component analysis; MSE: mean square error; FC: fully connected layers.

Specifically, Wu et al.
[1] presented the novel approach of multiscale temporal decomposition (MTD) to decompose popularity into user-item context and time-sensitive context to explore the mechanism of dynamic popularity. The proposed MTD models time-sensitive context on different time scales, which is beneficial for automatically learning temporal patterns. However, the essential multiscale characteristic of popularity dynamics is not considered. Wu et al. [7] further presented a general framework named multiscale temporalization for modeling dynamic popularity in social media from multiple time-scale views. Specifically, they proposed constructing a structured decomposition model at different time scales instead of computing the whole popularity tensor directly with only intrinsic time information. Wu et al. [8] proposed a novel view on temporal context modeling that considers both temporal and sequential coherence during prediction. They proposed a novel prediction framework called a deep temporal context network that jointly integrated embedding, temporal context learning, and predicting with temporal attention. Moreover, Massip et al. [9] focused on image popularity prediction by analyzing early popularity patterns of posts in the same content category, fused with user information data. Lo et al. [10] proposed a deep temporal sequence learning framework to predict the fine-grained fashion popularity of an outfit look. In addition, they considered not only visual dependence but also temporal coherence for predicting fashion popularity over time. More specifically, they extracted both contextual and visual features from the outfits and transferred them into a unified latent sequence using a two-stream, multimodal embedding structure.
However, most of the aforementioned studies rely on only a few of the useful features for image popularity prediction and do not consider the interplay between other pertinent types of features. For example, image popularity prediction can be significantly influenced by various factors (and features), such as visual content, aesthetic quality, user, post metadata, and time; therefore, considering all of this multimodal information is crucial for an efficient prediction. Moreover, it is nontrivial to design an appropriate model that can make better use of the various features for predicting image popularity. Abousaleh et al. [6] proposed a novel convolutional neural network-based visual–social computational model for image popularity prediction. As shown in Figure 2, this model used two individual networks to process the input data with different modalities (i.e., visual and social features) independently, and the outputs from these networks were then integrated into a fusion network to learn joint multimodal features for estimating the popularity score.

Performance evaluations

The performance of a popularity prediction is primarily measured by the mean absolute percentage error (MAPE), mean absolute error (MAE), mean square error (MSE), and Spearman’s ranking correlation (SRC). The MAPE, MAE, and MSE are used to calculate the difference between the predicted scores and the ground truth scores. However, for some applications (e.g., ranking), only relative scores are important. As such, SRC is used to measure the ranking correlation between the ground truth popularity set $P$ and the predicted popularity set $\hat{P}$, varying from -1 to 1. SRC is defined as

$$\mathrm{SRC} = \frac{1}{k-1} \sum_{i=1}^{k} \left( \frac{P_i - \bar{P}}{\sigma_P} \right) \left( \frac{\hat{P}_i - \bar{\hat{P}}}{\sigma_{\hat{P}}} \right) \tag{1}$$

where $k$ is the number of items in each popularity set, and $\bar{P}$ and $\sigma_P$ are the mean and standard deviation of the corresponding popularity set, respectively.

Future opportunities

The influence of other aspects on image popularity could be investigated, e.g., geographical location, cultural background, and gender difference.
Moreover, most existing works focus on using images to predict popularity. However, external factors may also affect image popularity. For example, the hashtags (similar to keywords) used in a post also strongly affect its popularity. This external textual information could be incorporated to investigate whether it could improve image popularity prediction. Finally, learning popularity prediction under the setting of multiple competitive sets of information spreading on networks might be an interesting direction. For example, it could be interesting to examine the product containment problem, i.e., limiting the spread of product-related posts in online social networks by posting competing posts.

Fashion trend analysis

In addition to predicting popularity, fashion trend analysis focuses on discovering fashion cycles and fashion temporal patterns by analyzing the key elements in fashion. For example, pioneering research in automatic fashion trend analysis was presented by Hidayati et al. [2]. They collected a fashion style image dataset that was extracted from the videos of 10 different seasons of NYFW (https://www.fashiontv.com/). Afterward, fashion trends were examined by analyzing four visual style elements, i.e., color, cut, head decor, and pattern, in terms of their coherence (to co-occur frequently) and uniqueness (to be sufficiently different from other fashion shows). Furthermore, Chen and Luo [11] analyzed the best-selling clothing attributes corresponding to a specific season via online shopping website statistics, e.g., 1) winter: gray, black, and sweater; 2) spring: white, red, and V neckline. They designed a machine learning-based method using the fashion item sales information and the user transaction history to measure the real impact of the item attributes on customers. Moreover, Chang et al. [12] first investigated the world’s fashion landscape in modern times through the visual analysis of big social data.
Their proposed “fashion world map” framework exploited a collection of geotagged street fashion photos from an image-centered social media site named Lookbook (https://lookbook.nu/). They devised a metric based on deep neural networks to select the potential iconic outfits for each city and formulated the detection problem of the iconic fashion items of a city as the prize-collecting Steiner tree problem, whereby a visually intuitive summary of the world’s iconic street fashion could be created.

Performance evaluations

The objective evaluation metrics used to measure the performance of existing fashion trend learning models are the MAPE, MAE, MSE, and SRC, which were introduced in the section “Popularity Prediction.” For the street-fashion prediction task, the agreement ratio (AR) is widely used as the evaluation metric. In this task, the iconic items of different cities are predicted. Suppose there are $m$ participants in the user study to evaluate whether the extracted items are associated with $k$ cities. For each city, if $n$ participants prefer one method, the AR performance of this method for this city is calculated by $n/m$. Given $k$ cities in total, the average agreement ratio (AAR) of the method can be calculated as

$$\mathrm{AAR} = \frac{1}{k} \sum_{i=1}^{k} \mathrm{AR}_i. \tag{2}$$

Future opportunities

In addition to fashion elements, user group relations could be used to better understand the connections among fashion trend signals, e.g., city, gender, occupation, and age. Moreover, in addition to the existing media sources, other sources, such as fashion magazines, bloggers, and brands, should be considered in the massive fashion trend prediction. As such, multisource learning is also a promising solution for better predicting fashion trends.
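The evaluation metrics used in the two fashion analysis subsections (MAPE, MAE, MSE, SRC in (1), and AAR in (2)) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the evaluation code of any cited work. The function names are hypothetical, and two assumptions are made: SRC applies (1) to the ranks of the scores (the conventional reading of Spearman's correlation) with no tied scores, and the normalizer in (1) is taken to be the sample standard deviation.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error between ground truth and predicted scores."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mse(y_true, y_pred):
    """Mean square error between ground truth and predicted scores."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))


def mape(y_true, y_pred):
    """MAPE in percent; assumes no ground truth score is zero."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


def _ranks(x):
    """Rank positions 0..k-1 in ascending order; assumes no tied scores."""
    x = np.asarray(x, float)
    ranks = np.empty(len(x), float)
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks


def src(p_true, p_pred):
    """Eq. (1) applied to ranks, with the sample standard deviation (assumption)."""
    r, r_hat = _ranks(p_true), _ranks(p_pred)
    k = len(r)
    z = (r - r.mean()) / r.std(ddof=1)
    z_hat = (r_hat - r_hat.mean()) / r_hat.std(ddof=1)
    return float(np.sum(z * z_hat) / (k - 1))


def average_agreement_ratio(votes_per_city, m):
    """Eq. (2): votes_per_city[i] is n for city i, out of m participants."""
    return float(np.mean([n / m for n in votes_per_city]))


if __name__ == "__main__":
    y_true, y_pred = [1.0, 2.0, 4.0], [1.0, 3.0, 2.0]
    print(mae(y_true, y_pred), mse(y_true, y_pred), mape(y_true, y_pred))
    print(src([1, 2, 3, 4], [10, 20, 30, 40]))
    print(average_agreement_ratio([8, 6], m=10))
```

Because SRC depends only on the ordering of the scores, a predictor can have a large MAE and still reach a perfect SRC, which is why the two families of metrics are usually reported together.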
Fashion recommendation

Research work on fashion recommendation [3], [13], [14], [15] can be classified into fashion compatibility and outfit matching, which are defined as measuring the ability of items of different types to coordinate to form fashionable outfits as well as generating harmonious fashion matching based on individual preferences.

Fashion compatibility

A fashion recommendation is made according to fashion compatibility, which measures how well items of different types can coordinate to form fashionable outfits. The goal of fashion recommendation is to automatically provide users with advice on what looks best on them and how to improve their style. Specifically, given a set of human body measurements that can clarify the type of body shapes and clothing items in each image, the model aims to learn an accurate relationship between clothing styles and body shape types for recommending the correct clothing styles. As such, the key technology is to learn both visual similarity and visual compatibility among different clothing types.

To learn the compatibility between clothing styles and body shapes, Hidayati et al. [3] first proposed a novel intelligent fashion analysis framework to model the correlation between human body shapes and their most suitable clothing styles, which could be further applied to improve fashion recommendations. They used two kinds of data, i.e., human body measurements and clothing styles. By incorporating both clothing style and body shape information, they mined the auxiliary clothing features to discover semantically important styles for each body shape. Then, they constructed graphs of images with visual and body shape information and selected the relevant styles across the visual and body shape graphs. Moreover, style transformations and style failures are two key rules for style recommendations.
The concept of style transformation refers to a fashion style that inspires positive change in those who follow it, while style failure is regarded with little acceptance because of its lack of sophistication (inelegance). If there are any common styles that are incompatible with the previous rules, the recommendation results might be less reliable [16]. Bridging the gap between the diversity of target styles and fashion style rules in an unsupervised manner remains an issue to be resolved. To effectively capture and represent the detailed semantics of style concepts, Hidayati et al. [16] proposed analyzing fashion-related knowledge from social big data. That is, they utilized online available fashion knowledge rules, including the clothing styles of top stylish celebrities and their corresponding body measurements as well as the clothing styles that fashion experts recommend for each body type. Instead of mining clothing images and body shapes straightforwardly, Hidayati et al. [3] first filtered out clothing images that met the fashion rules engineered by fashion experts. Then, they investigated a joint embedding of clothing styles and body measurements with a multimodal representation learning algorithm. As a result, the semantic features of clothing style and body shape graphs were discovered through propagation and selection. Furthermore, they presented five basic female body shapes, i.e., hourglass, inverted triangle, rectangle, round, and triangle, as new style references. A novel dataset of style references was also constructed for these five body shapes. It consists of 249,298 clothing images of five categories (dress, outerwear, pants, skirt, and top) worn by 270 stylish female celebrities, the body attributes of 3,150 female celebrities together with the associated body types, and 2,160 images of basic styles for female body shape types.
Performance evaluations

To measure the performance of fashion compatibility, the area under the receiver operating characteristic curve (AUC), recall, and mean average precision (MAP) are used. The AUC measures the probability that the evaluated work would recommend higher compatibility for the positive set than the negative set [17]. Recall measures the proportion of relevant items that are included in the recommendation list to the total number of relevant items for a given user [17]. The MAP is calculated by determining the mean of average precision at the points where relevant products or items are found, which can be expressed as follows:

$$\mathrm{MAP} = \frac{1}{N_u} \sum_{u} \frac{1}{|R_u|} \sum_{k=1}^{n} \mathbb{1}\left(L_n[k] \in R_u\right) P_u@k \tag{3}$$

where $u$, $N_u$, and $R_u$ indicate the specific user, the total number of users, and the set of items rated by the user, respectively. $L_n$ is the ranked list of length $n$ for the user $u$, $k$ represents the position of the item found in the list, and $\mathbb{1}(\cdot)$ is the indicator function. $P_u@k$ represents the precision in selecting relevant items for the user within the top $k$ positions.

Future opportunities

There has been limited research on combining product images with user photos and text (reviews and comments). Thus, future research should be conducted to develop hyperpersonalized recommendation models based on sentiment analysis and user images. To achieve this goal, hybrid and hyperpersonalized filtering techniques could be combined to develop a recommendation system. Since the final goal is to help users find the most suitable clothing that can make them look gorgeous, investigating multiple personal factors will be helpful for enhancing the recommendation system performance. For example, in addition to body measurements, building a fine-grained user graph that includes other factors, such as age and skin color, might be useful.

Outfit matching

In general, each outfit includes several complementary items, such as tops, bottoms, shoes, and accessories.
However, generating harmonious fashion matching is challenging for three main reasons. First, the concept of fashion is subtle and subjective. Second, there are numerous attributes for describing fashion. Third, the concept of fashion item compatibility crosses categories and involves complex relationships. To bridge the gap between fashion compatibility and personalized preference, several methods have been developed. Sanchez-Riera et al. [18] proposed a personalized clothing recommendation system, namely i-Stylist, through the analysis of personal images in social networks. Using the user’s personalized graph model, the probability distribution was calculated using visual information (deep learning features) and semantic information (clothing properties, such as fabric, pattern, and so on). Additionally, the user graph models can be dynamically modified when items from the retrieved search list are selected or discarded. To address personalized capsule wardrobe creation, Dong et al. [13] measured user preference and body shape to assess garment compatibility. In particular, they proposed a framework for personalized capsule wardrobe creation utilizing dual compatibility modeling, called PCW-DC, which evaluated both garment–garment compatibility and user–garment compatibility. Nevertheless, they overlooked attributes that are fundamental to characterizing and delivering items’ preferences to users. To alleviate such a problem, Guan et al. [19] considered attributes associated with fashion items and explored all of the related entities (i.e., users, items, and attributes) and their various relations (i.e., user–item interactions, item–item matching relations, and item–attribute association relations) to improve the performance.
However, the heterogeneous contents of different entities, embeddings for new users, and various high-order relationships make this challenging. Therefore, Guan et al. [19] first presented a novel metapath-guided personalized fashion compatibility model, dubbed MG-PFCM, to solve these issues. In particular, the multiple semantic-enhanced embedding of users and items was obtained by combining the various fashion entities and relations into a single heterogeneous graph.

Performance evaluations

Most outfit matching methods use the same evaluation protocols as those for fashion compatibility, i.e., AUC, recall, and MAP (as introduced in the section “Fashion Compatibility”). Some methods are evaluated with the normalized discounted cumulative gain (NDCG). The NDCG is calculated by determining the graded relevance and positional information of the recommended items, which can be expressed as follows:

$$\mathrm{NDCG}_u = \frac{\sum_{k=1}^{n} G(u, n, k)\, D(k)}{\sum_{k=1}^{n} G^{*}(u, n, k)\, D(k)} \tag{4}$$

where $D(k)$ is a discounting function, $G(u, n, k)$ is the gain obtained from recommending an item found at the $k$th position from the list $L$, and $G^{*}(u, n, k)$ is the gain related to the $k$th item in the ideal ranking of size $n$ for the user $u$.

Future opportunities

Existing methods are only able to measure the compatibility score of a bottom (top) from the given top (bottom) for a specific user. In reality, an outfit usually involves not only the top and bottom but also other items, such as shoes and accessories. Current models thus assume a fixed number of items or dimensions as input, which is not realistic. There is a need for a model that can deal with different numbers of items as input and consider the outfit with an arbitrary number of items. Moreover, in the real-world scenario, overlapping category labels for fashion items might exist. Thus, future directions should explore how to learn compatibility without category labels.
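The ranking metrics used for fashion compatibility and outfit matching (AUC, recall, MAP in (3), and NDCG in (4)) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the protocol of any cited method: the function names are hypothetical, the AUC is estimated by pairwise comparison of scores with ties counted as one half, and the discounting function is taken to be $D(k) = 1/\log_2(k+1)$, a common choice that the text does not specify.

```python
import math


def precision_at_k(ranked, relevant, k):
    """P_u@k: fraction of the top-k ranked items that are relevant."""
    return sum(item in relevant for item in ranked[:k]) / k


def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant items that appear in the top-k list."""
    return sum(item in relevant for item in ranked[:k]) / len(relevant)


def average_precision(ranked, relevant):
    """Inner part of Eq. (3) for one user: mean of P_u@k over relevant hits."""
    if not relevant:
        return 0.0
    hits = sum(precision_at_k(ranked, relevant, k)
               for k, item in enumerate(ranked, start=1) if item in relevant)
    return hits / len(relevant)


def mean_average_precision(rankings, relevants):
    """Eq. (3): average of per-user average precision (dicts keyed by user)."""
    return sum(average_precision(rankings[u], relevants[u])
               for u in rankings) / len(rankings)


def ndcg(ranked, gains):
    """Eq. (4) with D(k) = 1 / log2(k + 1) (assumed); gains maps item -> relevance."""
    dcg = sum(gains.get(item, 0.0) / math.log2(k + 1)
              for k, item in enumerate(ranked, start=1))
    ideal = sorted(gains.values(), reverse=True)[:len(ranked)]
    idcg = sum(g / math.log2(k + 1) for k, g in enumerate(ideal, start=1))
    return dcg / idcg if idcg > 0 else 0.0


def auc(pos_scores, neg_scores):
    """Probability that a positive outfit scores above a negative one."""
    wins = sum((p > q) + 0.5 * (p == q)
               for p in pos_scores for q in neg_scores)
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    ranked, relevant = ["a", "b", "c", "d"], {"a", "c"}
    print(recall_at_k(ranked, relevant, 2))
    print(average_precision(ranked, relevant))
    print(ndcg(ranked, {"a": 1.0, "c": 1.0}))
    print(auc([0.9, 0.8], [0.7, 0.85]))
```

Unlike MAP, which treats relevance as binary, NDCG accepts graded gains, so it can distinguish a strongly compatible item from a merely acceptable one at the same rank.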
Fashion synthesis

Makeup transfer

Finding the most suitable makeup for a particular human face is challenging because of the tedious trial-and-error try-on process and the complicated combinations of faces and makeup. As such, a recent line of studies focuses on how to automatically synthesize the effects of makeup with one’s facial appearance [21], which provides an efficient way to achieve virtual makeup try-on and helps users select the most suitable makeup style. Generally, facial makeup transfer refers to translating the makeup from a given face to another face while preserving the identity of the user. On the other hand, as users may upload their photos with makeup, another line of study focuses on makeup removal [22], which is also a key technology for makeup transfer. For example, Wang and Fu [22] proposed a makeup detector and remover framework based on locality-constrained dictionary learning.

Unlike the aforementioned facial makeup synthesis methods that treat makeup transfer and removal as separate problems, recent works [4], [20], [23], [24] performed makeup transfer and makeup removal simultaneously. For example, Li et al. [4] proposed a dual input/output generative adversarial network called BeautyGAN for instance-level facial makeup transfer. Specifically, BeautyGAN first transferred a non-makeup face to the makeup domain with a couple of discriminators that distinguish generated images from real face images with makeup. In addition, it achieved instance-level style transfer by successfully applying pixel-level histogram losses on local regions. To improve the performance of applying and removing makeup, Gu et al. [23] focused on local facial detail transfer and designed a local adversarial disentangling network containing multiple and overlapping local adversarial discriminators. However, this kind of approach has two limitations. First, it cannot handle the misalignment of images and can overfit on frontal images.
Second, the existing methods cannot perform customizable makeup transfer that allows for shade-controllable transfer, in which the shade of the makeup can be changed from light to heavy. To solve these problems, Jiang et al. [25] proposed a pose and expression robust spatial-aware GAN (PSGAN), which computed the dense correspondence attention between two images to consolidate component-to-component transfer. Nevertheless, apart from the large computational overhead of pixelwise correspondence, PSGAN suffers from two main issues. First, the predicted pixel-to-region attention is ambiguous. Second, it is cumbersome to implement local transfer from multiple references as it requires computing dense correspondences for every image and reconstructing a new attentive matrix in a pixelwise manner. To solve these issues, Deng et al. [24] overcame the spatial misalignment barrier from a completely different perspective. Their proposed style-based controllable GAN consists of two “extraction” modules and one “assignment” module. Specifically, a part-specific style encoder encodes the makeup style, component by component, into an intermediate latent style code. This style code discarded spatial information and was therefore invariant to spatial misalignment. At the same time, the style code embeds componentwise information that enables partial makeup editing from multiple references. A makeup fusion decoder equipped with multiple AdaIN layers was used to generate the final result using this style code and source identity features.

As opposed to GANs, flow-based generative models have the advantage of being reversible and supporting different applications by manipulating latent space and reserving it for real-time input.
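To make the reversibility of flow-based models concrete, the following NumPy sketch implements a single affine coupling layer, the kind of invertible building block used in Glow-style flows, together with its exact inverse. It is a toy illustration and not the architecture of any cited work: the 4D input, the random weights `W_S` and `W_T`, and the `tanh` bound on the log-scale are all assumptions chosen to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy parameters standing in for a learned network.
W_S = rng.normal(scale=0.1, size=(2, 2))
W_T = rng.normal(scale=0.1, size=(2, 2))


def scale_and_shift(x_b):
    """Compute a log-scale and a shift from the untouched half of the input."""
    log_s = np.tanh(x_b @ W_S)  # bounded log-scale keeps exp() well behaved
    t = x_b @ W_T
    return log_s, t


def forward(x):
    """Encode: transform the first half conditioned on the second half."""
    x_a, x_b = x[:, :2], x[:, 2:]
    log_s, t = scale_and_shift(x_b)
    y_a = x_a * np.exp(log_s) + t
    return np.concatenate([y_a, x_b], axis=1)


def inverse(y):
    """Decode: the second half is unchanged, so scale and shift are recomputable."""
    y_a, y_b = y[:, :2], y[:, 2:]
    log_s, t = scale_and_shift(y_b)
    x_a = (y_a - t) * np.exp(-log_s)
    return np.concatenate([x_a, y_b], axis=1)


x = rng.normal(size=(3, 4))   # three toy "images" with four features each
z = forward(x)                # latent codes
x_rec = inverse(z)            # exact reconstruction from the latent codes
print(np.allclose(x, x_rec))
```

Because every layer can be inverted exactly, a latent code that has been edited can be decoded straight back into an image without a separately trained decoder.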
Exploiting this ability to manipulate the latent space, Chen et al. [20] proposed an unsupervised on-demand makeup transfer approach, namely BeautyGlow, based on the Glow architecture [26]. Specifically, Glow is a general framework for learning a generative network with invertible functions that encode input images into a meaningful latent space and enable modification of existing data points. Although Glow allows for the manipulation of the latent vectors, it is challenging to transfer the local makeup details of the reference image to the target image because makeup and facial identity are entangled in the latent vector. One possible solution is to find the average latent vector of makeup images and the average latent vector of non-makeup images and then use the difference as the direction of manipulation. However, this approach has two major issues: 1) it can only find a general makeup direction but not the user-specified makeup, and 2) it requires many images of the same person or the same makeup to find the correct average latent vector. To address these issues, as shown in Figure 3, BeautyGlow defines a transformation matrix that decomposes each latent vector into a latent vector of makeup features and a latent vector of facial identity features. Compared with other methods based on GANs, BeautyGlow does not need to train two large networks, i.e., a generator and a discriminator, which makes training more stable. Most importantly, the invertible, meaningful latent space together with the transformation matrix facilitates on-demand makeup transfer; that is, users can freely adjust the makeup from light to heavy.

Performance evaluations

The evaluation of makeup transfer is based mostly on user studies. That is, participants rate each result on a graded scale, such as “Very bad,” “Bad,” “Fine,” “Good,” and “Very good.” The percentage of ratings at each level is then calculated to quantify the quality of the results.
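The tally described above amounts to counting the ratings at each level and dividing by the number of ratings. A small sketch follows; the vote list is made-up illustrative data, and the function name `rating_percentages` is hypothetical.

```python
from collections import Counter

# The five rating levels quoted in the text, from worst to best.
LEVELS = ["Very bad", "Bad", "Fine", "Good", "Very good"]

def rating_percentages(ratings):
    """Return the percentage of ratings that fall at each level."""
    if not ratings:
        raise ValueError("at least one rating is required")
    counts = Counter(ratings)
    total = len(ratings)
    return {level: 100.0 * counts[level] / total for level in LEVELS}

# Hypothetical votes from eight participants for one transferred result.
votes = ["Good", "Very good", "Fine", "Good", "Bad", "Good", "Very good", "Fine"]
result = rating_percentages(votes)
# result["Good"] == 37.5, result["Very bad"] == 0.0
```

Reporting all five percentages, rather than a single mean score, keeps the shape of the rating distribution visible when methods are compared.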
In addition, quantitative comparisons can be made using the Fréchet inception distance (FID) [27] and the inception score (IS) [28]. The FID measures the effect of the transferred makeup style, whereas the IS quantitatively evaluates the synthesis quality of images.

Future opportunities

The preceding methods focus on transferring the makeup of specific parts (e.g., the lips and eyes). However, transferring the local facial pattern remains unsolved. This problem may be addressed by modeling faces in the 3D domain. In addition, several new lines of generative models have emerged in recent years. Several articles [29], [30], [31] have shown that diffusion models can produce superior images compared to existing generative models. Thus, makeup transfer could also benefit from diffusion models.

FIGURE 3. The framework of BeautyGlow. (Source: [20].)

Virtual try-on

Clothing image synthesis is a relatively new research topic that is gaining increasing attention. The basic version of virtual try-on aims at generating a new image of a specific person wearing a target clothing item while retaining body parts and pose information. The virtual try-on task is challenging since synthesizing try-on images involves the estimation of a 3D transformation from 2D images, which is an ill-posed problem. Han et al. [5] proposed the VITON framework, which focuses on trying an in-shop clothing image on a person’s image. It first generates a coarse try-on result and predicts the mask for the clothing item.
Based on the mask and coarse result, a refinement network for the clothing region is employed to synthesize a more detailed result. However, this approach fails to handle large deformations, especially for clothes with rich texture details, because of the imperfect shape-context matching used to align the clothes with the body shape. Wang et al. [33] proposed the characteristic-preserving virtual try-on network (CP-VTON) to improve on VITON by combining the warped clothes with a generated person through a generated composition mask instead of using a refinement generator. More specifically, spatial deformation is better handled by a geometric matching module, which explicitly aligns the input clothing with the body shape.

In addition to synthesizing try-on results with a fixed pose, Hsieh et al. [34] first proposed a network, Fit-Me, which generates virtual try-on images with arbitrary customer poses. They designed a coarse-to-fine architecture for both pose transformation and virtual try-on. Furthermore, FashionOn [35] applies semantic segmentation for detailed part-level learning and focuses on refining the facial part and clothing region to present more realistic results. It succeeds in preserving detailed facial and clothing information, handles dramatic posture changes, and resolves the human limb occlusion problem in CP-VTON [33].

Such virtual try-on results, however, still contain some artifacts in the clothing region (e.g., missing buttons and distorted plaids), which undermine the fidelity of the results. Moreover, the work in Hsieh et al. [35] requires users to assign the target poses instead of directly recommending suitable poses based on clothing style. A virtual try-on application that automatically synthesizes a suitable pose for the target clothing is therefore necessary for a convenient and practical virtual try-on service. As such, Chou et al.
[36] proposed a template-free try-on image synthesis (TF-TIS) framework for synthesizing high-quality try-on images with automatically synthesized poses. By learning the relationship between in-shop clothes and try-on poses, TF-TIS synthesizes a suitable try-on pose. Because poses are synthesized automatically, a user-friendly platform can be created without requiring users to upload a target pose, and customers can experience better virtual try-on results. In addition, TF-TIS preserves critical human information and clothing characteristics by using both global and local discriminators in the clothing refinement network. As a result, TF-TIS addresses several challenging problems (for example, preserving detailed logos and generating tiny but essential details).

Earlier works also required preprocessed semantic segmentation, which is time consuming, and the quality of the segmentation strongly affects the follow-up results. To reduce the time cost, Issenhuth et al. [37] proposed a parsing-free virtual try-on method. However, this method cannot transfer information from one view to another as the user’s position changes. Since users usually strike different poses when trying on clothes to assess whether a garment is suitable, this capability is crucial for emulating real-world try-on scenarios. To address these issues, Chen et al. [32] proposed a co-attention feature-remapping try-on framework, FashionMirror, which generates try-on results according to a driven-pose sequence. Figure 4 shows the architecture of FashionMirror, which consists of two stages: 1) parsing-free co-attention mask prediction and 2) human and clothing feature remapping. In the first stage, FashionMirror relies directly on a co-attention mechanism to learn the relation between consecutive human frames and the target try-on clothes in order to locate the regions related to the clothes.
FashionMirror predicts the removed mask based on the co-attended results and the target try-on clothing mask based on the target wearable region. The second stage synthesizes the target try-on results based on the removed and target try-on clothing masks. To achieve realistic try-on results, the human and clothing information are warped at the feature level, since remapping the visual information at the pixel level causes different-view and instability issues. Specifically, skeleton flow extraction learns feature-level optical flows among consecutive frames. To transfer the current-frame human to the next pose, the current-frame human features are warped with the extracted feature flows. In addition, FashionMirror enhances the source human feature and the target clothing feature within every frame to provide more detail.

Performance evaluations

Most virtual try-on methods are evaluated by subjective assessment or user study. In addition, there are several objective comparisons in terms of IS [28], structural similarity (SSIM) [38], and learned perceptual image patch similarity (LPIPS) [39]. The purpose of IS is to measure the quality and diversity of images. A model that produces visually diverse and semantically meaningful images obtains a higher score. SSIM measures the similarity between the reference image and the generated image, with scores typically reported from 0 (dissimilar) to 1 (identical). SSIM, which compares luminance, contrast, and structure information, is also widely used to assess state-of-the-art pose transfer methods. LPIPS measures perceptual similarity by comparing the reconstruction results to the ground truth.
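As a rough illustration of the SSIM comparison described above, the sketch below computes a single-window (global) SSIM over two whole grayscale images, combining the luminance, contrast, and structure terms in the usual closed form. It is a simplification under stated assumptions: the metric in [38] is computed over local windows and averaged, the constants 0.01 and 0.03 are the commonly used defaults, and the test images are random, hypothetical data.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over two same-sized grayscale images."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2      # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2      # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

rng = np.random.default_rng(0)
reference = rng.random((64, 64))                        # hypothetical ground truth
noise = rng.normal(scale=0.2, size=reference.shape)
generated = np.clip(reference + noise, 0.0, 1.0)        # hypothetical model output

score_same = global_ssim(reference, reference)          # identical images
score_noisy = global_ssim(reference, generated)         # degraded image scores lower
```

For reported results, a windowed implementation from an established image-processing library is the safer choice, since the global version ignores where in the image the differences occur.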
Future opportunities

While existing works demonstrate significant progress using learning-based image generation tools, such as GANs, they are limited in terms of visual quality, producing artifacts such as blurry details, loss of facial identity, distorted body parts and garments, and severe changes in textures. Therefore, discovering how to synthesize virtual try-on with high resolution could be the focus of future studies. Moreover, in real-world shopping scenarios, it is quite important to select a suitable clothing size. The ability to synthesize different clothing sizes is also a future direction for virtual try-on research.

FIGURE 4. An overview of FashionMirror. STN: spatial transformer network. (Source: [32].)

Conclusions

With the advance of deep learning, deep fashion has become a hot topic and has received a great deal of attention. In this survey, we introduced a taxonomy of fashion research that categorizes work according to the task, i.e., fashion analysis, fashion recommendation, and fashion synthesis. For each category, we not only provided a review of the renowned approaches but also introduced the evaluation metrics for different problems. Despite recent progress, developing intelligent fashion solutions remains challenging in regard to investigating and modeling complex real-world problems.
We have listed possible directions for future development in each section to promote the integration of fashion and computer vision and to accelerate fashion’s development.

Acknowledgment

This work was supported in part by the National Science and Technology Council of Taiwan under grants NSTC-109-2223-E-002-005-MY3, NSTC-111-2634-F-007-002, and NSTC-109-2221-E-009-114-MY3.

Authors

Hung-Jen Chen (hjc.eed07g@nctu.edu.tw) received his B.S. degree from the Department of Electrical and Computer Engineering, National Yang Ming Chiao Tung University (NYCU), Hsinchu 300 Taiwan, in 2018. He is now a Ph.D. candidate at NYCU. His research interests are computer vision, deep learning, and artificial intelligence. His works have been accepted in several top-tier conferences, such as the Computer Vision and Pattern Recognition Conference, European Conference on Computer Vision, and International Conference on Intelligent Robots and Systems.

Hong-Han Shuai (hhshuai@nctu.edu.tw) received his B.S. degree from the Department of Electrical Engineering, National Taiwan University (NTU), Taipei, Taiwan, in 2007, his M.S. degree in computer science from NTU in 2009, and his Ph.D. degree from the Graduate Institute of Communication Engineering, NTU, in 2015. He is now an assistant professor at National Yang Ming Chiao Tung University, Hsinchu 300 Taiwan. His research interests are in the areas of multimedia processing, machine learning, social network analysis, and data mining.
His works have appeared in top-tier conferences, such as the European Conference on Computer Vision, and top-tier journals, such as IEEE Transactions on Knowledge and Data Engineering (TKDE). Moreover, he has served as a program committee member for international conferences, including the International Joint Conference on Artificial Intelligence, and an invited reviewer of journals, including TKDE. He is a Member of IEEE.

Wen-Huang Cheng (wenhuang@csie.ntu.edu.tw) received his Ph.D. degree from the Graduate Institute of Networking and Multimedia, National Taiwan University (NTU), Taipei, Taiwan, in 2008. He is a professor with the Department of Computer Science and Information Engineering, and Graduate Institute of Networking and Multimedia at NTU. His research interests include multimedia, artificial intelligence, computer vision, and machine learning. He has participated in international events and played leading roles in journals, conferences, and professional organizations, e.g., as associate editor of IEEE Transactions on Multimedia and general cochair of the 2022 IEEE International Conference on Multimedia and Expo (ICME). He has received numerous awards, including the Best Paper Award of the 2021 IEEE ICME and the 2017 Ta-Yu Wu Memorial Award from Taiwan’s Ministry of Science and Technology. He is an IEEE Distinguished Lecturer.

References

[1] B. Wu, T. Mei, W.-H. Cheng, and Y. Zhang, “Unfolding temporal dynamics: Predicting social media popularity using multi-scale temporal decomposition,” in Proc. 30th AAAI Conf. Artif. Intell., 2016, pp. 272–278, doi: 10.1609/aaai.v30i1.9970.
[2] S. C. Hidayati, K.-L. Hua, W.-H. Cheng, and S.-W. Sun, “What are the fashion trends in New York?” in Proc. 22nd ACM Int. Conf. Multimedia, 2014, pp. 197–200, doi: 10.1145/2647868.2656405.
[3] S. C. Hidayati, C.-C. Hsu, Y.-T. Chang, K.-L. Hua, J. Fu, and W.-H. Cheng, “What dress fits me best? Fashion recommendation on the clothing style for personal body shape,” in Proc. 26th ACM Int. Conf. Multimedia, 2018, pp. 438–446.
[4] T. Li et al., “BeautyGAN: Instance-level facial makeup transfer with deep generative adversarial network,” in Proc. 26th ACM Int. Conf. Multimedia, 2018, pp. 645–653, doi: 10.1145/3240508.3240618.
[5] X. Han, Z. Wu, Z. Wu, R. Yu, and L. S. Davis, “VITON: An image-based virtual try-on network,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 7543–7552, doi: 10.1109/CVPR.2018.00787.
[6] F. S. Abousaleh, W.-H. Cheng, N.-H. Yu, and Y. Tsao, “Multimodal deep learning framework for image popularity prediction on social media,” IEEE Trans. Cogn. Devel. Syst., vol. 13, no. 3, pp. 679–692, Sep. 2021, doi: 10.1109/TCDS.2020.3036690.
[7] B. Wu, W.-H. Cheng, Y. Zhang, and T. Mei, “Time matters: Multi-scale temporalization of social media popularity,” in Proc. 24th ACM Int. Conf. Multimedia, 2016, pp. 1336–1344, doi: 10.1145/2964284.2964335.
[8] B. Wu, W.-H. Cheng, Y. Zhang, Q. Huang, J. Li, and T. Mei, “Sequential prediction of social media popularity with deep temporal context networks,” 2017, arXiv:1712.04443.
[9] E. Massip, S. C. Hidayati, W.-H. Cheng, and K.-L. Hua, “Exploiting category-specific information for image popularity prediction in social media,” in Proc. IEEE Int. Conf. Multimedia Expo Workshops, 2018, pp. 45–46, doi: 10.1109/ICMEW.2018.8551545.
[10] L. Lo, C.-L. Liu, R.-A. Lin, B. Wu, H.-H. Shuai, and W.-H. Cheng, “Dressing for attention: Outfit based fashion popularity prediction,” in Proc. IEEE Int. Conf. Image Process., 2019, pp. 3222–3226, doi: 10.1109/ICIP.2019.8803461.
[11] K.-T. Chen and J. Luo, “When fashion meets big data: Discriminative mining of best selling clothing features,” in Proc. 26th Int. Conf. World Wide Web Companion, 2017, pp. 15–22.
[12] Y.-T. Chang, W.-H. Cheng, B. Wu, and K.-L. Hua, “Fashion world map: Understanding cities through streetwear fashion,” in Proc. 25th ACM Int. Conf. Multimedia, 2017, pp. 91–99, doi: 10.1145/3123266.3123268.
[13] X. Dong, X. Song, F. Feng, P. Jing, X.-S. Xu, and L. Nie, “Personalized capsule wardrobe creation with garment and user modeling,” in Proc. 27th ACM Int. Conf. Multimedia, 2019, pp. 302–310, doi: 10.1145/3343031.3350905.
[14] Y. Hou, E. Vig, M. Donoser, and L. Bazzani, “Learning attribute-driven disentangled representations for interactive fashion retrieval,” in Proc. IEEE/CVF Int. Conf. Comput. Vis., 2021, pp. 12,147–12,157, doi: 10.1109/ICCV48922.2021.01193.
[15] G. Bhattacharya et al., “DAtRNet: Disentangling fashion attribute embedding for substitute item retrieval,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 2283–2287, doi: 10.1109/CVPRW56347.2022.00253.
[16] S. C. Hidayati et al., “Dress with style: Learning style from joint deep embedding of clothing styles and body shapes,” IEEE Trans. Multimedia, vol. 23, pp. 365–377, 2021, doi: 10.1109/TMM.2020.2980195.
[17] D. Valcarce, A. Bellogín, J. Parapar, and P. Castells, “Assessing ranking metrics in top-n recommendation,” Inf. Retrieval J., vol. 23, no. 4, pp. 411–448, Jun. 2020, doi: 10.1007/s10791-020-09377-x.
[18] J. Sanchez-Riera, J.-M. Lin, K.-L. Hua, W.-H. Cheng, and A. W. Tsui, “i-stylist: Finding the right dress through your social networks,” in Proc. Int. Conf. Multimedia Model., Springer-Verlag, 2017, pp. 662–673, doi: 10.1007/978-3-319-51811-4_54.
[19] W. Guan, F. Jiao, X. Song, H. Wen, C.-H. Yeh, and X. Chang, “Personalized fashion compatibility modeling via metapath-guided heterogeneous graph learning,” in Proc. 45th Int. ACM SIGIR Conf. Res. Develop. Inf. Retrieval, 2022, pp. 482–491, doi: 10.1145/3477495.3532038.
[20] H.-J. Chen, K.-M. Hui, S.-Y. Wang, L.-W. Tsao, H.-H. Shuai, and W.-H. Cheng, “BeautyGlow: On-demand makeup transfer framework with reversible generative network,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2019, pp. 10,042–10,050, doi: 10.1109/CVPR.2019.01028.
[21] S. Liu, X. Ou, R. Qian, W. Wang, and X. Cao, “Makeup like a superstar: Deep localized makeup transfer network,” 2016, arXiv:1604.07102.
[22] S. Wang and Y. Fu, “Face behind makeup,” in Proc. 30th AAAI Conf. Artif. Intell., 2016, pp. 58–64, doi: 10.1609/aaai.v30i1.10002.
[23] Q. Gu, G. Wang, M. T. Chiu, Y.-W. Tai, and C.-K. Tang, “LADN: Local adversarial disentangling network for facial makeup and de-makeup,” in Proc. IEEE/CVF Int. Conf. Comput. Vis., 2019, pp. 10,481–10,490, doi: 10.1109/ICCV.2019.01058.
[24] H. Deng, C. Han, H. Cai, G. Han, and S. He, “Spatially-invariant style-codes controlled makeup transfer,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2021, pp. 6549–6557, doi: 10.1109/CVPR46437.2021.00648.
[25] W. Jiang et al., “PSGAN: Pose and expression robust spatial-aware GAN for customizable makeup transfer,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2020, pp. 5194–5202, doi: 10.1109/CVPR42600.2020.00524.
[26] D. P. Kingma and P. Dhariwal, “Glow: Generative flow with invertible 1x1 convolutions,” in Proc. Adv. Neural Inf. Process. Syst., 2018, vol. 31, pp. 10,215–10,224.
[27] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs trained by a two time-scale update rule converge to a local Nash equilibrium,” in Proc. Adv. Neural Inf. Process. Syst., 2017, vol. 30, pp. 6629–6640.
[28] T. Salimans, I. Goodfellow, W. Zaremba, V. Cheung, A. Radford, and X. Chen, “Improved techniques for training GANs,” in Proc. Adv. Neural Inf. Process. Syst., 2016, vol. 29, pp. 2234–2242.
[29] P. Dhariwal and A. Nichol, “Diffusion models beat GANs on image synthesis,” in Proc. Adv. Neural Inf. Process. Syst., 2021, vol. 34, pp. 8780–8794.
[30] G. Kim, T. Kwon, and J. C. Ye, “DiffusionCLIP: Text-guided diffusion models for robust image manipulation,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 2426–2435, doi: 10.1109/CVPR52688.2022.00246.
[31] A. Lugmayr, M. Danelljan, A. Romero, F. Yu, R. Timofte, and L. Van Gool, “RePaint: Inpainting using denoising diffusion probabilistic models,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 11,461–11,471, doi: 10.1109/CVPR52688.2022.01117.
[32] C.-Y. Chen, L. Lo, P.-J. Huang, H.-H. Shuai, and W.-H. Cheng, “FashionMirror: Co-attention feature-remapping virtual try-on with sequential template poses,” in Proc. IEEE/CVF Int. Conf. Comput. Vis., 2021, pp. 13,809–13,818, doi: 10.1109/ICCV48922.2021.01355.
[33] B. Wang, H. Zheng, X. Liang, Y. Chen, L. Lin, and M. Yang, “Toward characteristic-preserving image-based virtual try-on network,” in Proc. Eur. Conf. Comput. Vis., 2018, pp. 589–604, doi: 10.1007/978-3-030-01261-8_36.
[34] C.-W. Hsieh, C.-Y. Chen, C.-L. Chou, H.-H. Shuai, and W.-H. Cheng, “Fit-me: Image-based virtual try-on with arbitrary poses,” in Proc. IEEE Int. Conf. Image Process., 2019, pp. 4694–4698, doi: 10.1109/ICIP.2019.8803681.
[35] C.-W. Hsieh, C.-Y. Chen, C.-L. Chou, H.-H. Shuai, J. Liu, and W.-H. Cheng, “FashionOn: Semantic-guided image-based virtual try-on with detailed human and clothing information,” in Proc. 27th ACM Int. Conf. Multimedia, 2019, pp. 275–283, doi: 10.1145/3343031.3351075.
[36] C.-L. Chou, C.-Y. Chen, C.-W. Hsieh, H.-H. Shuai, J. Liu, and W.-H. Cheng, “Template-free try-on image synthesis via semantic-guided optimization,” IEEE Trans. Neural Netw. Learn. Syst., vol. 33, no. 9, pp. 4584–4597, Sep. 2022, doi: 10.1109/TNNLS.2021.3058379.
[37] T. Issenhuth, J. Mary, and C. Calauzènes, “Do not mask what you do not need to mask: A parser-free virtual try-on,” in Proc. Eur. Conf. Comput. Vis., Springer-Verlag, 2020, pp. 619–635, doi: 10.1007/978-3-030-58565-5_37.
[38] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. Image Process., vol. 13, no. 4, pp. 600–612, Apr. 2004, doi: 10.1109/TIP.2003.819861.
[39] R. Zhang, P. Isola, A. A. Efros, E. Shechtman, and O. Wang, “The unreasonable effectiveness of deep features as a perceptual metric,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 586–595, doi: 10.1109/CVPR.2018.00068.