Research Designs: Experimental, Cross-Sectional, Longitudinal

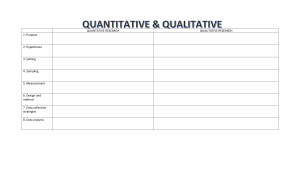

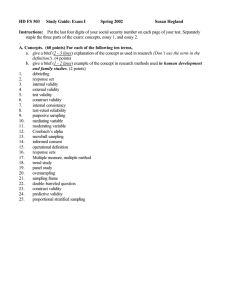

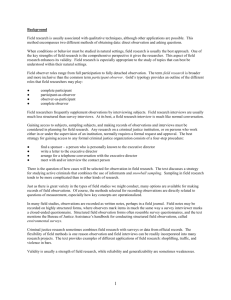

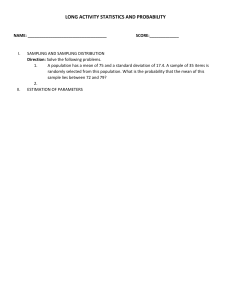

Week 4 Research Designs

Research Design
What is a research design?
A research design provides a framework for the collection and analysis of data. A choice of research design reflects decisions about the priority being given to a range of dimensions of the research process. These include the importance attached to:
• expressing causal connections between variables;
• generalizing to larger groups of individuals than those actually forming part of the investigation;
• understanding behaviour and the meaning of that behaviour in its specific social context;
• having a temporal (that is, over time) appreciation of social phenomena and their interconnections.

Research Method
What is a research method?
A research method is simply a technique for collecting data. It can involve a specific instrument, such as a self-completion questionnaire or a structured interview schedule, or participant observation whereby the researcher listens to and watches others.

Criteria in social research
• Reliability: are measures consistent?
• Replication/replicability: is the study repeatable?
• Validity: are conclusions well founded?

Types of validity
• Measurement (or construct) validity: do measures reflect concepts?
• Internal validity: are causal relations between variables real?
• External validity: can results be generalized beyond the research setting?
• Ecological validity: are findings applicable to everyday life?

Alternative criteria in qualitative research
Trustworthiness (Lincoln and Guba 1985):
• Credibility, parallels internal validity, i.e. how believable are the findings?
• Transferability, parallels external validity, i.e. do the findings apply to other contexts?
• Dependability, parallels reliability, i.e. are the findings likely to apply at other times?
• Confirmability, parallels objectivity, i.e. has the investigator allowed his or her values to intrude to a high degree?

Variable
What is a variable?
A variable is simply an attribute on which cases vary.
'Cases' can obviously be people, but they can also include things such as households, cities, organizations, schools, and nations. If an attribute does not vary, it is a constant. If all manufacturing organizations had the same ratio of male to female managers, this attribute of such organizations would be a constant and not a variable. Constants are rarely of interest to social researchers.

It is common to distinguish between different types of variable. The most basic distinction is between independent variables and dependent variables. The former are deemed to have a causal influence on the latter. In addition, it is important to distinguish between variables, whether independent or dependent, in terms of their measurement properties. This is an important issue in the context of quantitative data analysis.

Types of research design
1. Experimental
2. Cross-sectional
3. Longitudinal
4. Case study
5. Comparative

Experimental design elements
1. Random assignment of subjects to experimental and control groups
2. Pre-testing of both groups
3. Independent variable manipulated; all other variables held constant
4. Post-testing of both groups
5. Computation and analysis of group differences

Threats to validity
1. Other (non-experimental) events may have caused the changes observed (‘history’)
2. Subjects may become sensitized to ‘testing’
3. People change over time in any event (‘maturation’)
4. Non-random ‘selection’ could explain differences
5. ‘Ambiguity about the direction of causal influence’, because sometimes the temporal sequence is unclear

Cross-sectional design
“A cross-sectional design entails the collection of data on more than one case (usually quite a lot more than one) and at a single point in time in order to collect a body of quantitative or quantifiable data in connection with two or more variables (usually many more than two), which are then examined to detect patterns of association.”
• A sample of cases
• At a single point in time
• Qualitative or quantitative data
• Patterns of association

Evaluating cross-sectional research
• Reliability and measurement validity are not connected to the design as such
• Replicability will be high as long as the researcher specifies all the procedures
• Internal validity is weak, because correlations are much more likely to be found than causality
• External validity will be strong if the sample is truly random
• Ecological validity may be compromised by the instruments used

Longitudinal design
• A survey of the same sample that is repeated on more than one occasion over time
• Typically used to map change in social research
• Either a panel study (e.g. the TUIK Income and Living Conditions Panel Survey)
• Or a cohort study (e.g. NCDS, the National Child Development Study, a sample of children born in 1958)
• Surveys like the Crime Survey for England and Wales and the British Social Attitudes Survey should not be considered longitudinal, as they do not involve the same people; they are better thought of as repeated cross-sectional designs

Evaluating longitudinal research
• Attrition, because people die, move home, or withdraw from the study
• Knowing when is the right time for the next wave of data collection
• The first round may have been badly thought out, or not originally designed as longitudinal, which leaves the later rounds in a bit of a mess
• A panel conditioning effect may creep into the research

Case study design
• Detailed and intensive analysis of one case, e.g. a single community, school, family, person, event, or organization
• Often involves qualitative research, but not necessarily so
• Approach is usually idiographic
• Types of case: critical, unique, exemplifying, revelatory, longitudinal
• e.g. Holdaway (1982, 1983): an ethnography of occupational culture in a particular police force (overall, the thesis demonstrates the continuing dominance of lower police ranks, who maintain freedom from legal and organizational constraints to practise police work as they define it)

Evaluating case-study research
• The biggest issue concerns external validity, because it is impossible to generalize the findings
• Many case-writers argue, though, that the point of the research is to examine particulars rather than attempt to generalize
• Instead, the quality of theoretical reasoning is given precedence
• Cases may be extended longitudinally or through a comparative design

Comparative design
• Using the same methods to compare two or more meaningfully contrasting cases
• Can be qualitative or quantitative
• Often cross-cultural comparisons
• e.g. Gallie’s (1978) study of the impact of automation on industrial workers in England and France (it shows that national traditions of industrial relations were more important than technology in explaining worker attitudes and management–worker relations)
• Problem of translating research instruments and finding comparable samples
• Includes multiple case studies

Evaluating comparative design
• The comparative design is essentially two or more cross-sectional studies carried out at the same point in time, so the problems are very similar
• Familiar issues of reliability, validity, and replicability then apply
• Observed differences between cases need to be treated with caution, and followed up with further research, as they might not be attributable to the distinguishing features of the case
• Causality cannot be assumed
• However, comparing two or more cases can show circumstances in which a particular theory will or will not hold

Week 5 The Nature of Quantitative Research

What is a concept?
Concepts are:
• Building blocks of theory
• Labels that we give to elements of the social world
• Categories for the organization of ideas and observations
Concepts are useful for:
• Providing an explanation of a certain aspect of the social world
• Standing for things we want to explain
• Giving a basis for measuring variation

Why measure?
• To delineate fine differences between people, organizations, or any other unit of analysis
• To provide a consistent device for gauging distinctions
• To produce precise estimates of the degree of the relationship between concepts

Indicators
What is an indicator?
It is worth making two distinctions here. First, there is a distinction between an indicator and a measure. The latter can be taken to refer to things that can be relatively unambiguously counted, such as personal income, household income, age, number of children, or number of years spent at school. Measures, in other words, are quantities. If we are interested in some of the causes of variation in personal income, the latter can be quantified in a reasonably direct way. We use indicators to tap concepts that are less directly quantifiable. If we are interested in the causes of variation in job satisfaction, we will need indicators that will stand for the concept. These indicators will allow job satisfaction to be measured, and we can treat the resulting quantitative information as if it were a measure. An indicator, then, is something that is devised or already exists and that is employed as though it were a measure of a concept. It is viewed as an indirect measure of a concept, like job satisfaction. We see here a second distinction, between direct and indirect indicators of concepts.

A multiple-indicator measure of a concept
The research on the effects of redundancy by Westergaard et al. (1989), which was referred to in Chapters 2 and 3, was conducted by structured interview with 378 steel workers who had been made redundant. One of the authors' interests was whether their respondents' commitment to work varied according to whether they were still unemployed at the time of the interview, had found work, or had retired. In order to measure commitment to employment, the authors gave their respondents ten statements and asked them to indicate their level of agreement or disagreement on a seven-point scale running from 'Yes, I strongly agree' to 'No, I strongly disagree'. There was a middle point on the scale that allowed for a neutral response. This approach to investigating a cluster of attitudes is known as a Likert scale, though in many cases researchers use a five-point rather than a seven-point scale for responses. See Key concept 7.2 for a description of what a Likert scale entails. The ten statements were as follows.
1. Work is necessary but rarely enjoyable.
2. Having a job is not very important to me.
3. I regard time spent at work as time taken away from the things I want to do.
4. Having a job is/was important to me only because it brings in money.
5. Even if I won a great deal of money on the pools I'd carry on working.
6. If unemployment benefits were really high, I would still prefer to work.
7. I would hate being on the dole.
8. I would soon get bored if I did not go out to work.
9. The most important things that have happened to me involved work.
10. Any feelings I've had in the past of achieving something worthwhile have usually come through things I've done at work.
The authors found that their respondents' replies did not differ a great deal in terms of whether they had found work since being made redundant, were still unemployed, or had taken retirement.

Likert scale
What is a Likert scale?
The investigation of attitudes is a prominent area in much survey research.
One of the most common techniques for conducting such an investigation is the Likert scale, named after Rensis Likert, who developed the method. The Likert scale is essentially a multiple-indicator or multiple-item measure of a set of attitudes relating to a particular area. The goal of the Likert scale is to measure intensity of feelings about the area in question. In its most common format, it comprises a series of statements (known as 'items') that focus on a certain issue or theme. Each respondent is then asked to indicate his or her level of agreement with each statement. Usually, the format for indicating level of agreement is a five-point scale going from 'strongly agree' to 'strongly disagree', but seven-point scales and other formats are used too. There is usually a middle position of 'neither agree nor disagree' or 'undecided' indicating neutrality on the issue. Each respondent's reply on each item is scored, and then the scores for each item are aggregated to form an overall score. Normally, since the scale measures intensity, the scoring is carried out so that a high level of intensity of feelings in connection with each indicator receives a high score (for example, on a five-point scale, a score of 5 for very strong positive feelings about an issue and a score of 1 for very negative feelings). The measure of commitment to work referred to in Research in focus 7.2 is an example of a Likert scale. Variations on the typical format of indicating degrees of agreement are scales referring to frequency (for example, 'never' through to 'always') and evaluation (for example, 'very poor' through to 'very good').

There are several points to bear in mind about the construction of a Likert scale. The following are particularly important.
• The items must be statements and not questions.
• The items must all relate to the same object (job, organization, ethnic groups, unemployment, sentencing of offenders, etc.).
• The items that make up the scale should be interrelated (see the discussion of internal reliability in this chapter and Key concept 7.3).
It is useful to vary the phrasing so that some items imply a positive view of the phenomenon of interest and others a negative one. Thus, in the example in Research in focus 7.2, some items imply a negative view of work (for example, 'Having a job is not very important to me') and others a positive view of work (for example, 'I would soon get bored if I did not go out to work'). This variation is advised in order to identify respondents who exhibit response sets (see the sections on 'Response sets' in Chapters 9 and 10).

Reliability
What is reliability?
Reliability refers to the consistency of a measure of a concept. The following are three prominent factors involved when considering whether a measure is reliable:
• Stability. This consideration entails asking whether a measure is stable over time, so that we can be confident that the results relating to that measure for a sample of respondents do not fluctuate. This means that, if we administer a measure to a group and then readminister it, there will be little variation over time in the results obtained. In February 2010, the then Shadow Home Secretary, Chris Grayling, was roundly criticized by the UK Statistics Authority for comparing Home Office statistics from the late 1990s with current figures to suggest that there had been a big increase in violent crimes since Labour took office in 1997. The reason for the criticism was that there had been a change to the definition of violent crime, which had produced an immediate 35 per cent increase in recorded violent crime. In this case, the measure of violent crime was not reliable from the point of view of inferring a change over time. For this story, see 'Chris Grayling Accused of Damaging Public Trust over Crime Figures', www.thetimes.co.uk/tto/news/politics/article2030815.ece (accessed 9 August 2010).
• Internal reliability. The key issue is whether the indicators that make up the scale or index are consistent; in other words, whether respondents' scores on any one indicator tend to be related to their scores on the other indicators.
• Inter-observer consistency. When a great deal of subjective judgement is involved in such activities as the recording of observations or the translation of data into categories, and where more than one 'observer' is involved in such activities, there is the possibility of a lack of consistency in their decisions. This can arise in a number of contexts, for example: in content analysis, where decisions have to be made about how to categorize media items; when answers to open questions have to be categorized; or in structured observation, when observers have to decide how to classify subjects' behaviour.

What does validity mean?
Does the indicator measure the concept? It does if it has:
• Face validity (right for the concept?)
• Concurrent validity (supported by a relevant criterion today?)
• Predictive validity (likely to be supported by a relevant criterion tomorrow?)
• Construct validity (are useful hypotheses produced?)
• Convergent validity (supported by results from other methods?)

Assessing the internal reliability and the concurrent and predictive validity of a measure of organizational climate
1. human relations model: feelings of belonging and trust in the organization and the degree to which there is training, good communication, and supervisory support;
2. internal process model: the degree of emphasis on formal rules and on traditional ways of doing things;
3. open systems model: the extent to which flexibility and innovativeness are valued;
4. rational goal model: the degree to which clearly defined objectives and the norms and values associated with efficiency, quality, and high performance are emphasized.
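
As an illustration of the Likert scoring and internal reliability ideas described in these notes, the sketch below reverse-scores a negatively worded item, aggregates item scores into an overall score, and computes Cronbach's alpha, a commonly used coefficient of internal reliability. The answers, the five-point scale, the choice of which item is negatively worded, and all function names are hypothetical and chosen only for illustration; they are not data from any study cited here.

```python
from statistics import variance

POINTS = 5  # assumed five-point scale: 1 = strongly disagree ... 5 = strongly agree


def reverse_score(score, points=POINTS):
    """Flip a score on a negatively worded item so that high always means 'more'."""
    return points + 1 - score


def score_respondent(answers, negative_items):
    """Return one respondent's item scores with negative items reverse-scored."""
    return [reverse_score(a) if i in negative_items else a
            for i, a in enumerate(answers)]


def cronbach_alpha(scored_rows):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(scored_rows[0])
    item_columns = list(zip(*scored_rows))  # one tuple of scores per item
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in scored_rows])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical raw answers: 5 respondents x 3 items; item index 1 is negatively worded.
raw_answers = [
    [5, 1, 4],
    [4, 2, 4],
    [3, 3, 3],
    [2, 4, 2],
    [1, 5, 1],
]
NEGATIVE_ITEMS = {1}

scored = [score_respondent(row, NEGATIVE_ITEMS) for row in raw_answers]
totals = [sum(row) for row in scored]  # overall Likert score per respondent
alpha = cronbach_alpha(scored)

print("overall scores:", totals)
print("Cronbach's alpha:", round(alpha, 2))
```

In this made-up data the three items move together almost perfectly once the negative item is reversed, so alpha comes out close to 1. Skipping the reverse-scoring step would make the items appear to disagree with one another and would drag the coefficient down, which is one practical reason to track which items are negatively worded.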
A factor analysis (see Key concept 7.6) was conducted to explore the extent to which there were distinct groupings of items that tended to go together. This procedure yielded seventeen scales, such as autonomy, involvement, innovation and flexibility, and clarity of organizational goals. The internal reliability of the scales was assessed using Cronbach's alpha, showing that all scales were at a level of 0.73 or above. This suggests that the measure's constituent scales were internally reliable.

Concurrent validity was assessed following semi-structured interviews with each company's managers in connection with their organization's practices. The interview data were coded to provide criteria against which the validity of the scales could be gauged. In most cases, the scales were found to be concurrently valid. For example, the researchers examined the correlation between a scale designed to measure the emphasis on tradition and the degree to which practices associated with the 'new manufacturing paradigm' (Patterson et al. 2005: 397) were adopted, as revealed by the interview data. The correlation was -0.42, implying that those firms that were perceived as rooted in tradition tended to be less likely to adopt new manufacturing practices. Here the adoption of new manufacturing practices was treated as a criterion to assess the extent to which the scale measuring perceptions of tradition really was addressing tradition. If the correlation had been small or had been positive, the concurrent validity of the scale would have been in doubt.

To assess predictive validity, the researchers asked a senior key informant at each company to complete a questionnaire one year after the main survey had been conducted. The questionnaire was meant to address two of the measure's constituent scales, one of which was the innovation and flexibility scale. It asked the informants to assess their company in terms of its innovativeness in a number of areas.
For example, the correlation between the innovation and flexibility scale and informants' assessments of their companies' innovativeness with respect to products was 0.53. This implies that there was indeed a correlation between perceptions of innovativeness and flexibility and a subsequent indicator of innovativeness.

The main preoccupations of quantitative researchers
• Measurement
• Causality
  - Explanation of why things are the way they are
  - Direction of causal influence between dependent and independent variables (DV and IV)
  - Confidence in the researcher's causal inferences

Sampling

Basic terms and concepts: 1
• Population: the universe of units from which the sample is to be selected
• Sample: the segment of the population that is selected for investigation
• Sampling frame: list of all units
• Representative sample: a sample that reflects the population accurately
• Sample bias: distortion in the representativeness of the sample

Basic terms and concepts: 2
• Probability sample: sample selected using random selection
• Non-probability sample: sample selected without using a random selection method
• Sampling error: difference between sample and population
• Non-sampling error: differences between sample and population that arise from the conduct of the research rather than from sampling itself (e.g. an inadequate sampling frame or non-response)
• Non-response: when members of the sample are unable or refuse to take part
• Census: data collected from the entire population

Sampling error
• Difference between sample and population
• A biased sample does not represent the population: some groups are over-represented; others are under-represented
• Sources of bias: non-probability sampling, inadequate sampling frame, non-response
• Probability sampling reduces sampling error and allows for inferential statistics

4 types of probability sample
• Simple random sample
• Systematic sample
• Stratified random sample
• Multi-stage cluster sample

Simple random sampling
• Each unit has an equal probability of selection
• Sampling fraction: n/N, where n = sample size and N = population size
• List all units and number them consecutively
• Use a random numbers table to select units

Systematic sampling
• Select units directly from the sampling frame
• From a random starting point, choose every nth unit (e.g. every 4th name)
• Make sure the sampling frame has no inherent ordering; if it has, rearrange it to remove bias

Stratified random sampling
• Starting point is to categorize the population into ‘strata’ (relevant divisions, or departments of companies, for example)
• So the sample can be proportionately representative of each stratum
• Then, randomly select within each category as for a simple random sample

Multi-stage cluster sampling
• Useful for widely dispersed populations
• First, divide the population into groups (clusters) of units, like geographic areas or industries, for example
• Sub-clusters (sub-groups) can then be sampled from these clusters, if appropriate
• Now randomly select units from each (sub)cluster
• Collect data from each cluster of units, consecutively

Qualities of a probability sample
• Representative: allows for generalization from sample to population
• Inferential statistical tests
• Sample means can be used to estimate population means
• Standard error (SE): estimate of the discrepancy between sample mean and population mean
• 95% of sample means fall within ±1.96 SE of the population mean

Sample size
• Absolute size matters more than relative size
• The larger the sample, the more precise and representative it is likely to be
• As sample size increases, sampling error decreases
• Important to be honest about the limitations of your sample

Factors affecting sample size: 1
• Time and cost
  - After a certain point (n=1000), increasing sample size produces less noticeable gains in precision
  - Very large samples are decreasingly cost-efficient (Hazelrigg, 2004)
• Non-response
  - Response rate = % of sample who agree to participate (or % who provide usable data)
  - Responders and non-responders may differ on a crucial variable

Factors affecting sample size: 2
• Heterogeneity of the population
  - The more varied the population is, the larger the sample will have to be
• Kind of analysis to be carried out
  - Some techniques require a large sample (e.g. contingency table; inferential statistics)

Types of non-probability sampling: 1
1. Convenience sampling
  - the most easily accessible individuals
  - useful when piloting a research instrument
  - may be a chance to collect data that is too good to miss
2. Snowball sampling
  - researcher makes initial contact with a small group
  - these respondents introduce others in their network

Week 6 Data Collection Techniques – I

What is a structured interview?
• Useful tool of quantitative research
• Often used in social surveys
• Standardized interview schedule
• Each interviewee gets the same questions, in the same way, in the same order
• Closed, pre-coded or fixed choice questions
• Minimizes variation between interviews

Advantages of structured interviewing: 1
Reduces error due to interviewer variability:
• differences in responses are due to ‘true variation’, not inconsistencies in the conduct of interviews
• potential sources of error are reduced by standardization (question wording, memory, misunderstanding)
• reduces intra-interviewer and inter-interviewer variability
• greater validity of data?

Advantages of structured interviewing: 2
Accuracy and ease of data processing:
• closed ended, pre-coded or fixed choice questions (limited choice of possible answers)
• interviewer does not interpret responses before recording them
• standardized coding frame reduces variability in coding procedure
• reduces intra-coder and inter-coder variability

Conducting structured interviews: 1
• Know your way around the schedule
• Introduce the research
  - spoken or written rationale
  - identify yourself, your employer, purposes of research and procedure of interview
  - ethical issues: anonymity, confidentiality, right to withdraw
  - opportunity for interviewee to ask questions

Conducting structured interviews: 2
• Building rapport
  - can be difficult if limited time and little opportunity for discussion (closed questions)
• Asking questions
  - keep to the schedule: even small variations in wording can affect responses
• Recording answers
  - write exact words used by interviewee, or use fixed choice questions

Conducting structured interviews: 3
• Clear instructions
  - some questions are not relevant to every interviewee
  - filter questions help the interviewer navigate the schedule
• Question order
  - every interviewee must get questions in the same order
  - general questions before specific questions
  - earlier questions may affect salience of later ones
  - first questions should be directly related to the topic
  - potentially embarrassing or sensitive questions towards the end

Conducting structured interviews: 4
• Probing
  - when respondent does not understand the question or gives an insufficient answer
  - non-directive probes: “mmm”, “can you say a bit more about that?”
  - repeat fixed choice alternatives
• Prompting
  - interviewer suggests possible answers
  - show cards
• Leaving the interview
• Training and supervision
  - necessary if researcher hires interviewer(s)

Problems with structured interviewing: 1
• Characteristics of interviewers
  - gender, age, ethnicity, class (rapport)
  - can evoke socially desirable responses
• Response sets
  - people may respond in consistent but irrelevant ways, by acquiescence (agreeing or disagreeing to all questions) or for reasons of social desirability (interviewees reflect on the way their answers might be perceived)

What is a self-administered questionnaire?
• Also called a self-completion questionnaire
• No interviewer present
• Respondent writes answers on form
• Returned to researcher or deposited for collection
• Usually postal questionnaires
• Can be distributed in person or by email

Self-completion questionnaires have advantages
• Cheaper and quicker to administer (to widely dispersed populations)
• No interviewer variability
• Convenience for respondents

but many disadvantages
• Cannot probe or prompt
• Can only ask salient questions
• Few open-ended or complex questions
• Respondent can see the whole questionnaire before answering (question order effects)
• Cannot ensure that the ‘right’ person answers
• Cannot collect additional data
• Respondent fatigue if too many questions
• Excludes people with limited literacy skills
• Greater risk of missing data
• Lower response rates

Online social surveys
There has been a huge growth in online administration of surveys. They come in different forms.
• Email surveys
  - Embedded: in the body of an email, with respondents using an x or deleting alternatives, typing in answers, etc. Easy to manage, higher response rate than an attached survey.
  - Attached: as an email attachment, with the email explaining it; again respondents type answers.
• Web surveys
  - Respondents are invited to visit a website where the questionnaire can be completed.
  - The questionnaire can be widely embellished in appearance, filter questions can be used, and answers can be programmed to download into a database, eliminating the coding stage.

Week 7 Data Collection Techniques – II

Asking Questions: Open questions
Advantages
• Respondents answer in their own terms
• Allow for new, unexpected responses
• Exploratory: generate fixed answer questions
Disadvantages
• Time-consuming for interviewer and respondent
• Difficult to code
• More effort required from respondent
• Interviewer variation in recording answers

Asking Questions: Closed questions
Advantages
• Quicker and easier to complete (better response rate and less missing data)
• Easy to process data (pre-coded)
• Easy to compare answers (inter-coder reliability)
Disadvantages
• Restrictive range of answers: no spontaneity
• Difficult to make fixed choice answers exhaustive
• Respondents may interpret questions differently

Designing questions: general rules
• Remember your research questions
• Decide exactly what you want to find out
• Imagine yourself as a respondent
  - How would you answer the questions?
  - Identify any vague or misleading questions

Things to avoid
• Ambiguous terms: ‘often’, ‘regularly’, ‘frequently’
• Long questions
• Double-barreled questions: there may be different answers to each part (e.g. How satisfied are you with the pay and conditions in your job?)
• Very general questions: because they lack a frame of reference
• Leading questions: hinting at a preferred response
• Asking two questions in one
• Negative terms: ‘not’, ‘never’, and especially double negatives
• Technical terms (jargon and acronyms)

Using existing questions
• Common practice in survey research
• Questions have already been piloted
• Known properties of reliability and validity
• Helps you to draw comparisons with other studies
• ‘Question banks’: repositories of questions used in previous surveys
• Consult TURKSTAT (https://www.tuik.gov.tr/)

What is structured observation?
• A method of systematically observing people’s behaviour
• By grouping behaviour into categories
• Direct observation, not reports or inference, makes it an alternative to survey methods
• Aggregates and compares behaviour of everyone in the sample
• Uses a cross-sectional research design

The observation schedule
• Must have a clear focus and be easy to use
• Categories must be inclusive (cover all options) and mutually exclusive
• It must be easy to operate
• Specifies categories of behaviour to be observed and how to allocate behaviour to a category
• Clear guidelines needed so that observers can distinguish between behaviour categories
• A pilot study is useful to iron out any problems

Strategies for Observing Behaviour & Sampling
• Record incidents, events, and interventions
• Observe for short periods of time, repeatedly
• Observe for long periods of time, continuously
• Time sampling: record whatever is happening every x minutes
• Ad libitum sampling: record whatever is happening at the time
• Focal sampling (most common form): observe a specific individual for a set period of time
• Scan sampling: record behaviour of everyone in the group at regular time intervals
• Behaviour sampling: observe the whole group to see who was involved in a particular behaviour

What is secondary analysis?
Primary data is data we collect ourselves, and secondary data is data collected by others.
Secondary analysis is done on secondary data In other words, someone else gathered the data for their own purposes and then we analyse it for our own purposes Advantages of secondary analysis • Saves money and time • Offers high quality data • Gives an opportunity for longitudinal analysis • Allows subgroup or subset analysis • Gives an opportunity for cross-cultural studies • Allows more time for data analysis • Enables the application of recent theory to old data • Gets more value from the original data …but there is a down-side… • You need to become familiar with how the data was collected, coded and managed • The data can be very large and complex • The quality of the data should never be taken for granted • Variables important to your analysis might be missing Official statistics • Collected by agencies of the state, in the course of their business – e.g. TURKSTAT compiles data for the level of unemployment • Advantages over quantitative data from surveys – reduced time and cost – no problem of reactivity – cross-sectional and longitudinal analysis – cross-cultural analysis • Disadvantages of official statistics - Only reveal ‘tip of the iceberg’: the ‘dark figure’ of unrecorded events e.g. 
unemployed people who do not claim benefits are not officially listed as unemployed
- The process used for data collection needs interpretation
Week 7 Quantitative Data Analysis – I
Descriptive and Inferential Statistics
Two branches of statistics:
• Descriptive statistics: graphical and numerical procedures to summarize and process data
• Inferential statistics: using data to make predictions, forecasts, and estimates to assist decision making
Descriptive Statistics
• Collect data, e.g., survey
• Present data, e.g., tables and graphs
• Summarize data, e.g., sample mean $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$
Inferential Statistics
• Estimation, e.g., estimate the population mean weight using the sample mean weight
• Hypothesis testing, e.g., test the claim that the population mean weight is 140 pounds
• Inference is the process of drawing conclusions or making decisions about a population based on sample results
[Figures not reproduced: Classification of Variables; Measurement Levels]
Graphical Presentation of Data
• Data in raw form are usually not easy to use for decision making
• Some type of organization is needed: a table or a graph
• The type of graph to use depends on the variable being summarized
Univariate analysis (analysis of one variable at a time)
• Frequency tables
  – Number of people or cases in each category
  – Often expressed as percentages of the sample
  – Interval/ratio data need to be grouped
• Diagrams
  – Bar chart or pie chart (nominal or ordinal variables)
  – Histogram (interval/ratio variables)
Describing Data Numerically
• Mean: the arithmetic mean is the most common measure of central tendency
• Median: the middle value of the ordered data; not affected by extreme values
• Mode: a measure of central tendency; the value that occurs most often. There may be no mode, or there may be several modes
Measures of Variability
Measures of variation give information on the spread or variability of the data values.
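As a rough illustration of the numerical summaries in this part of the notes (mean, median, mode, and the variance and standard deviation defined next), here is a minimal sketch using Python's standard `statistics` module. The weights are made-up values chosen only to show how one extreme value pulls the mean but not the median; they do not come from the course material.

```python
import statistics

# Hypothetical sample of body weights in pounds (illustrative values only).
weights = [128, 135, 140, 140, 152, 160, 210]

# Measures of central tendency
mean = statistics.mean(weights)        # arithmetic mean
median = statistics.median(weights)    # middle value of the sorted data
modes = statistics.multimode(weights)  # list of the most frequent value(s)

# Measures of variability
pop_var = statistics.pvariance(weights)  # population variance: divides by N
samp_var = statistics.variance(weights)  # sample variance: divides by n - 1
pop_sd = statistics.pstdev(weights)      # population standard deviation

print(f"mean={mean:.2f} median={median} modes={modes}")
print(f"population variance={pop_var:.2f} sample variance={samp_var:.2f}")
print(f"population standard deviation={pop_sd:.2f} (same units as the data)")
```

The single large value (210) raises the mean above the median, which is why the median is described as unaffected by extreme values. `multimode` returns a list, so it can report several modes when values tie for most frequent.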
Population Variance
• Average of squared deviations of values from the mean
Sample Variance
• Average (approximately) of squared deviations of values from the mean: the sum of squared deviations is divided by n − 1 rather than n
Population Standard Deviation
• The square root of the population variance
• Most commonly used measure of variation
• Shows variation about the mean
• Has the same units as the original data
Week 9 Quantitative Data Analysis – II
Bivariate analysis (analysis of two variables at a time)
• Explores relationships between variables
• Searches for co-variance and correlations
• Cannot establish causality
• Can sometimes infer the direction of a causal relationship, if one variable is obviously independent of the other
Contingency tables
• Connect the frequencies of two variables
• Help you identify any patterns of association
Pearson’s r: relationship between two interval/ratio variables
• Coefficient shows the strength and direction of the relationship
• Lies between -1 (perfect negative relationship) and +1 (perfect positive relationship)
• Relationships must be linear for the method to work, so plot a scatter diagram first
• Coefficient of determination is found by squaring the value of r; it shows how much of the variation in one variable is accounted for by the other variable
Multivariate analysis (three or more variables)
• The relationship between two variables might be spurious: each variable could be related to a separate, third variable
• There might be an intervening variable
• A third variable might be moderating the relationship, e.g. the correlation between age and exercise could be moderated by gender
Statistical significance
• How confident can we be that the findings from a sample can be generalized to the population as a whole?
• How risky is it to make this inference?
• Only applies to probability samples
Testing procedure for statistical significance
1. Set up a null hypothesis suggesting no relationship between the examined variables in the population from which the sample was drawn
2. Decide on an acceptable level of statistical significance
3. Use a statistical test
4.
If the acceptable level is attained, reject the null hypothesis; if it is not attained, do not reject it (often described as accepting it). Either way, we might be wrong to accept or reject the null hypothesis.
Independent Samples t-test
The independent samples t-test is used to decide whether the difference between the means of the same variable in two independent samples is statistically significant.
Correlation and Significance
How confident can we be about a relationship between two variables? Whether a correlation coefficient is statistically significant depends on:
o the size of the coefficient (the larger in absolute value, the better)
o the size of the sample (the larger the better)
Result and Interpretation: The relationship between communication quality and job satisfaction was tested through correlation analysis. There is a statistically significant, positive relationship between communication quality and job satisfaction (r(155) = .73, p < .01). H1 is supported by the correlation test. The variables move together: higher communication quality is associated with higher job satisfaction scores.
Comparing means
• Tests the statistical significance of the relationship between two variables by comparing group means
• Total variation in the dependent variable = error variance (variation within subgroups of the IV) + explained variance (variation between subgroups of the IV)
• The F statistic expresses the amount of explained variance in relation to the amount of error variance
Regression Analysis
Regression analysis is used when you want to predict a continuous dependent variable from a number of independent variables. The independent variables used in regression can be either continuous or dichotomous. Regression analysis is usually used with naturally occurring variables, as opposed to experimentally manipulated variables.
Simple Regression Analysis
Simple linear regression is used when you want to predict values of one variable, given values of another variable.
Week11 Data Sources in Qualitative Research: Observation–Interview–Focus Groups–Documents
What is ethnography?
Researcher immersed in the social setting for an extended period. Participant observation, often with interviews and documentary methods. Participant observation is usually used with respect to the method of investigation only. Understanding the culture, norms and values of the group. It is both a research method and the written product of research. Gaining Access & Key informants Gaining access to the field requires permission and authorization in advance for the researcher to be present. Use friends, colleagues and contacts to help you. Be clear and honest about your aims and methods. Need time to gain trust of wary participants. Key informants are sponsors and/or gatekeepers who help ethnographer to collect data. They provide advice on playing the role of insider. Different roles of observers Taking field notes Write down notes as quickly as possible. Write up full field notes at the very latest at the end of the day. Use a tape recorder if you prefer. Notes must be vivid and clear -you should not have to ask at a later date ‘what did I mean by that?’ You need to take copious notes, so, if in doubt, write it down. Types of field notes Mental notes–particularly useful when it is inappropriate to be seen taking notes Jotted notes(also called Scratch notes) –very brief notes written down on pieces of paper or in small notebooks to jog one's memory about events that should be written up later Full field notes–as soon as possible make detailed notes, which will be your main data source Methodological notes –notes devoted to methodological decisions, that help to document the process of research Key Points Interviews-Differences between structured and qualitative interviews Qualitative interviews…are less structured/standardized, take the participant’s viewpoint, encourage ‘rambling’ off the topic, are more flexible, seek rich, detailed answers, aim to understand rather than to generalize. Preparing an interview guide Have a logical but flexible order of topics. 
Focus on research questions: ‘what do I need to know about?’ Use comprehensible and relevant language. Avoid leading questions. Record ‘facesheet’ information (name, age, gender, position etc.).
Kinds of questions
• Introducing (“Tell me about…”)
• Follow-up (“What do you mean by that?”)
• Probing (“Could you tell me more about…?”)
• Specifying (“What happened next?”)
• Direct (“Do you think that…?”)
• Indirect (“What do most people think about…?”)
• Structuring (“Let’s move on to…”)
• Silence
• Interpreting (“Do you mean that…?”)
Recording and transcription
• Audio-recording and transcribing: the researcher is not distracted by note-taking and can focus on listening and interpreting.
• Corrects limitations of memory and intuitive glosses.
• Provides a detailed and accurate record of the interviewee’s account.
• Opens the data to public scrutiny.
• Good-quality digital recorders are now widely available.
• Transcriber or transcription software?
• Selective transcription saves time.
Getting it taped and transcribed: an illustration of two problems
Online interviewing
• Online personal interviews for qualitative research
• Textual in nature: email exchanges, direct messaging, forums.
• Asynchronous/synchronous.
• Are questions and answers delivered one at a time, in small batches, or all at once?
• Editing: issues of reliability.
• ‘Spamming’: issues of validity.
Participant observation vs Interviews Advantages of participant observation over qualitative interviewing Seeing through others’ eyes Learning the native language Taken for granted ideas more likely to be revealed Access to deviant or hidden activities Sensitivity to context of action Flexibility in encountering the unexpected Naturalistic emphasis Embodied nature of the experience Advantages of qualitative interviewing over participant observation Finding out about issues resistant to observation Interviewees reflect on past events / life course More ethically defensible Fewer reactive effects Less intrusive Longitudinal research (follow-up interviews) Greater breadth of coverage Specific focus What is a focus group? Form of group interview Several participants and a moderator Individuals in a group/social context Discussion of specific issue Study interaction between group members How are opinions expressed and modified through group discussions? Uses of Focus Groups & Recording To examine the way people (collectively) construct and organize knowledge. To understand why people hold (and retain) certain views. Participants probe and challenge each other. To elicit a wide range of views. Keep a tape/digital record rather than take notes of how the topic was discussed(nuances of language, collective defining etc) Need to study not only what people say but who says what How many groups? 
• More than one but not too many
• Continue until the theoretical saturation point is reached
• Socio-demographic characteristics: use stratifying criteria (age, gender, etc.)
• A larger number of groups is needed to represent a diverse range of viewpoints
• Running more groups increases the volume of data and the complexity of analysis
Size of focus groups
• Average 6-10 members per group
• Over-recruit in anticipation of ‘no-shows’
• Use smaller groups when: the topics are sensitive or controversial; each person will have plenty to say; you want to glean personal, detailed accounts
• Use larger groups when: you want to hear numerous brief suggestions
Level of moderator involvement
• Try to be unobtrusive and non-directive
• Ask a small number of general questions to stimulate discussion
• Allow a ‘free rein’ to participants
• Discover what they define as important
• Intervene if the discussion wanders ‘off track’ or there is a long silence
• Respond to potentially interesting points that are not picked up by the participants
Selecting participants
• Usually on the basis of a shared experience or characteristic
• Socio-demographic factors: stratifying criteria
• Look for systematic variation between groups
• Strangers versus ‘natural groups’: which makes it easier to discuss the topic?
• Natural groups use taken-for-granted assumptions
Limitations of focus groups
• Researcher has less control over proceedings
• Data are difficult to analyse
• Produce a large volume of data
• Need to analyse for themes in what people say as well as patterns of interaction
• Difficult to organize / risk of no-shows
• Very time-consuming to transcribe
• Tendency for more agreement than disagreement in discussions
• Potential for causing participant discomfort
Documents available for research
These are ‘documents’ that… can be read (but not just text: visuals as well!)
have not been produced specifically for the purposes of research are preserved so that they become available for analysis are relevant to the concerns of the social researcher Types of the documents Personal-Official State documents-Official company documents-Mass media outputs-Virtual documents Personal documents: diaries, letters, autobiographies, photographs Could be the primary source of data within a qualitative study Could be used as adjuncts to other methods, such as interviews or participant observation Perhaps to trace the history of an organization Diaries may also be used as a method of data collection –in turn, available to other researchers Official documents State documents: From the state, in the form of legislation, reports of public enquiries Can be a very time-consuming form of research Question of credibility because the original report may be biased Official company documents In the public domain: annual reports mission statements reports to shareholders transcripts of chief executives’ speeches press releases, advertisements public relations material in printed form and on the internet Not in the public domain: company newsletters organizational charts external consultancy reports minutes of meetings memos internal and external correspondence manuals for new recruits policy statements company regulations Mass media outputs Newspapers, magazines, radio, TV, film Problem with authenticity when authorship unclear Problem with credibility because of the possibilities of image distortion Much contextual awareness is often required The reality of documents Documents exist in their own right We should examine them in terms of their context and intended readership Inter-textuality:documents are linked to other documents Researchers are usually more interested in the content of documents but must keep the document’s original purpose in mind Week12 Qualitative Data Analysis What is content analysis? 
• Approach to the analysis of documents and texts
• Quantifies content in terms of predetermined categories
• Systematic and reliable
• Different from semiotics and ethnographic content analysis
• Most commonly focuses on mass media communications
• Decide which dimensions of texts to quantify, e.g. news coverage of an issue: who, what, how much, where and why?
Selecting a sample
If working with the mass media (the most likely case), two dimensions are possible for random sampling:
The media itself
• Which types of text? Printed or visual data? Documents? Mass media?
• If mass media, which kind? TV, radio, newspapers, magazines… More than one type?
• For each type of text, which examples? E.g. tabloid or broadsheet newspapers
Dates of publication
• Starting dates may be predetermined by a historical event (like the terrorist attack on the World Trade Center on 11 September 2001)
• More open if the study concerns an ongoing, general phenomenon
• End dates can be a matter of judgment
What is to be counted?
• Significant actors: protagonists and alternative voices
• Words: frequency of words or phrases (e.g. ‘hooligan’ or ‘non-smoker’); can use computer software for analysis
• Subjects and themes
• Dispositions: values, bias and ideology
The coding schedule
• A tabular form onto which coded data will be entered
• Each column represents a dimension to be analysed
• Each row represents a unit of analysis (item of text)
• Codes are written into the blank cells in the table
• E.g. crime reporting in a local newspaper
Portions of a coding manual
Advantages of content analysis
• Transparency
• Ease of longitudinal analysis
• Flexibility
• Ease of access
Disadvantages of content analysis
• Questions of authenticity, credibility and representativeness of documents
• Interpretation by coders
• Inability to answer ‘why?’ questions
• Has an atheoretical approach
Qualitative data analysis
1. General strategies: Analytic induction, Grounded theory
2.
Coding: Steps, considerations, problems Thematic analysis Analytic induction A rigorous search for universal explanation of phenomena: 1. Rough definition of research question 2. Hypothetical explanation 3. Data collection (examination of cases) 4. If any deviant cases found, redefine or reformulate hypothesis 5. Continue until all cases fit hypothesis Grounded theory Theory is derived from the data, which are systematically gathered and analysed Iterative process Repetitive interplay between data collection and analysis / theory building Developments in grounded theory Straussian model more prescriptive Considerations in developing codes Of what general category is this item of data an instance? What does this item of data represent? What is this item of data about? Of what topic is this item of data an instance? What question about a topic does this item of data suggest? What sort of answer to a question about a topic does this item of data imply? What is happening here? What are people doing? What do people say they are doing? What kind of event is going on? Steps and considerations in coding 1. Code as soon as possible 2. Read through your initial set of transcripts 3. Do it again!! 4. Review your codes 5. Consider more general theoretical ideas in relation to codes and data 6. Any one item or slice of data can and often should be coded in more than one way 7. Do not worry about generating what seem to be too many codes 8. Keep coding in perspective –it is not analysis Problems with coding Losing the context of what was said (extracting sections of data) Fragmentation of data –loss of narrative flow Narrative analysis as solution Risk of only providing descriptive account of data rather than theorizing Thematic analysis One of the most common approaches to qualitative data analysis Not an approach to analysis that has an identifiable heritage or that has been outlined in terms of a distinctive cluster of techniques e.g. 
Disney study
Narrative analysis
• Concerned with how people ‘make sense’ and emphasises the storied nature of human recounting of lives and events
• Elicited personal narratives
• Life history / biographical approach
• Secondary data
• Explores narrative accounts produced in interviews and other forms of data (i.e. documents)
• Narrative analysis of extant material
• Deliberately asking certain kinds of question that tend to elicit a narrative
Week13 Research Ethics and Referencing
What is “ethical” research?
• No harm should come to research participants
• They should agree to participate and know what the research is about
• Their privacy should not be invaded
• They should not be lied to or cheated
How could you harm research participants?
• Physically
• By damaging their development or self-esteem
• By causing stress
• By hurting their career prospects or employment opportunities
• By breaking confidentiality
• By revealing their identity
Invasion of privacy
• Privacy is very much linked to the notion of informed consent
• The research participant does not abrogate the right to privacy entirely by providing informed consent
• Covert methods are usually deemed to be violations of the privacy principle
• The issue of privacy is invariably linked to issues of anonymity and confidentiality in the research process
Informed consent
Lies, deception and research
• Deception usually means we represent our research as something other than it is, so that participants will respond more naturally
• This means it is quite a widespread practice
• So deception means not telling the whole truth, while not actually telling a lie
• We must be vigilant in keeping deception to a minimum and, when it is necessary to the research, mitigating its degree and effects as much as possible
• Apart from moral objections to deception, research participants and funders can become wary of being fooled or tricked into providing data
What does your own institution require?
Most colleges and universities have developed their own guidelines for ethical research. Some of the questions they might pose are as follows: 1. Is the study funded? 2. Is the research compromised by the source of funding? 3. Are there potential conflicts of interest in the financial or organisational arrangements? 4. Will confidentiality be maintained appropriately at all stages of enquiry? 5. Will human rights and dignities be actively respected? 6. Will highly personal, intimate, or other private or confidential information be sought? 7. Will there be any harm, discomfort, physical, or psychological risks? 8. Will participants be involved whose ability to give informed voluntary consent may be limited? 9. Will the study involve obtaining or processing personal data relating to living individuals? Citation–APA style APA Style uses the author–date citation system, in which a brief in-text citation directs readers to a full reference list entry. The in-text citation appears within the body of the paper (or in a table, figure, footnote, or appendix) and briefly identifies the cited work by its author and date of publication. This enables readers to locate the corresponding entry in the alphabetical reference list at the end of the paper. Each work cited must appear in the reference list, and each work in the reference list must be cited in the text (or in a table, figure, footnote, or appendix). Both paraphrases and quotations require citations. Appropriate Level of Citation The number of sources you cite in your paper depends on the purpose of your work. For most papers, cite one or two of the most representative sources for each key point. Literature review papers, however, typically include a more exhaustive list of references. 
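The author–date rule above (every cited work appears in the reference list, and every listed work is cited) can be checked roughly by machine. The sketch below is only an illustration under simplifying assumptions: it recognises parenthetical citations of the form "(Author, 2020)" or "(Author et al., 2019; Other, 2020)", ignores narrative citations, and identifies a work by first-author surname and year. The function names, the sample sentence, and the "Smith" and "Jones" entries are hypothetical.

```python
import re

# Text inside parentheses that contains a four-digit year.
PAREN = re.compile(r"\(([^()]*\d{4}[^()]*)\)")
# One citation inside the parentheses: first surname, anything up to a comma, then the year.
PART = re.compile(r"^\s*([A-Z][A-Za-z'-]+)[^,]*,\s*(\d{4})\s*$")


def parenthetical_keys(text):
    """Return (first_author, year) pairs found in parenthetical citations."""
    keys = set()
    for group in PAREN.findall(text):
        for part in group.split(";"):
            match = PART.match(part)
            if match:
                keys.add((match.group(1), match.group(2)))
    return keys


def cross_check(text, reference_keys):
    """Compare cited works with reference-list entries in both directions."""
    cited = parenthetical_keys(text)
    listed = set(reference_keys)
    return {
        "cited_but_not_listed": cited - listed,
        "listed_but_not_cited": listed - cited,
    }


# Hypothetical draft text and reference list.
draft = (
    "Talent develops within domains (Aron et al., 2019; Dillard, 2020). "
    "Other work disagrees (Smith, 2018)."
)
references = {("Aron", "2019"), ("Dillard", "2020"), ("Jones", "2015")}

report = cross_check(draft, references)
print(report)
```

A check like this only flags candidates for manual review; it does not replace reading the reference list against the text.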
Provide appropriate credit to the source (e.g., by using an in-text citation) whenever you do the following: paraphrase (i.e., state in your own words) the ideas of others directly quote the words of others refer to data or data sets Plagiarism Plagiarism is the act of presenting the words, ideas, or images of another as your own; it denies authors or creators of content the credit they are due. Whether deliberate or unintentional, plagiarism violates ethical standards in scholarship. Writers who plagiarize disrespect the efforts of original authors by failing to acknowledge their contributions, stifle further research by preventing readers from tracing ideas back to their original sources, and unfairly disregard those who exerted the effort to complete their own work. Paraphrasing A paraphrase restates another’s idea (or your own previously published idea) in your own words. Paraphrasing allows you to summarize and synthesize information from one or more sources, focus on significant information, and compare and contrast relevant details. Quotations A direct quotation reproduces words verbatim from another work or from your own previously published work. It is best to paraphrase sources rather than directly quoting them because paraphrasing allows you to fit material to the context of your paper and writing style. Use direct quotations rather than paraphrasing: when reproducing an exact definition when an author has said something memorably or succinctly when you want to respond to exact wording Basic Principles of Reference List Entries A reference list entry generally has four elements: the author, date, title, and source. Each element answers a question: author: Who is responsible for this work? date: When was this work published? title: What is this work called? source: Where can I retrieve this work? Consistency in reference formatting allows readers to understand the types of works you consulted and the important reference elements with ease. 
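To make the four-element structure concrete, here is a small sketch that joins author, date, title, and source into one reference entry and derives the matching in-text citations. It is a simplification under stated assumptions, not a full APA formatter: the function names are hypothetical, italics are ignored, and the only author rules encoded are the common ones (one author, two authors, and "et al." for three or more). The example data are taken from the book-chapter reference given in these notes.

```python
def reference_entry(author, date, title, source):
    """Join the four reference elements: author. (date). title. source."""
    return f"{author} ({date}). {title}. {source}"


def author_label(surnames, narrative=False):
    """Author part of an in-text citation, built from surnames in order."""
    if len(surnames) == 1:
        return surnames[0]
    if len(surnames) == 2:
        joiner = "and" if narrative else "&"
        return f"{surnames[0]} {joiner} {surnames[1]}"
    return f"{surnames[0]} et al."


def parenthetical(surnames, year):
    """Parenthetical citation, e.g. (Author, 2020)."""
    return f"({author_label(surnames)}, {year})"


def narrative(surnames, year):
    """Narrative citation, e.g. Author (2020)."""
    return f"{author_label(surnames, narrative=True)} ({year})"


entry = reference_entry(
    author="Dillard, J. P.",
    date="2020",
    title="Currents in the study of persuasion",
    source=(
        "In M. B. Oliver, A. A. Raney, & J. Bryant (Eds.), "
        "Media effects: Advances in theory and research "
        "(4th ed., pp. 115–129). Routledge."
    ),
)

print(entry)
print(parenthetical(["Aron", "Botella", "Lubart"], 2019))
print(narrative(["Dillard"], 2020))
```

Keeping the four elements as separate fields mirrors the questions each element answers (who, when, what, where) and makes it harder to omit one of them by accident.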
Journal article
Whole book
Whole edited book
Chapter in an edited book
Aron, L., Botella, M., & Lubart, T. (2019). Culinary arts: Talent and their development. In R. F. Subotnik, P. Olszewski-Kubilius, & F. C. Worrell (Eds.), The psychology of high performance: Developing human potential into domain-specific talent (pp. 345–359). American Psychological Association. https://doi.org/10.1037/0000120-016
Dillard, J. P. (2020). Currents in the study of persuasion. In M. B. Oliver, A. A. Raney, & J. Bryant (Eds.), Media effects: Advances in theory and research (4th ed., pp. 115–129). Routledge.
Parenthetical citations: (Aron et al., 2019; Dillard, 2020)
Narrative citations: Aron et al. (2019), Dillard (2020)
Unpublished Dissertation or Thesis References
Report by a Government Agency References
Week14 Writing Up the Thesis & Abstract
Issues in writing up social research
• Do quantitative and qualitative research reveal divergent approaches? The similarities are frequently more striking and apparent than the differences
• Many people find writing up research more difficult than carrying it out
• You must convince your readers about the credibility of the knowledge claims you make
• Good writing is to do with developing your style so that it is persuasive and convincing
Typical ways of constructing an argument
Checklist for writing up your research: 1.
• Have you clearly specified your research questions?
• Have you clearly indicated how the literature you have read relates to your research questions?
• Is your discussion of the literature critical and organized so that it is not just a summary of what you have read?
• Have you clearly outlined your research design and your research methods?
Checklist for writing up your research: 2.
• Have you presented your data in a manner that relates to your research questions?
• Does your discussion of your findings show how they relate to your research questions, and how they shed light on the literature that you presented?
• Are the interpretations of the data that you offer fully supported with tables, figures, or segments from transcripts?
Checklist for writing up your research: 3.
• Does your writing avoid sexist, racist, and disablist language?
• Have you included all appendices that you need (interview schedule, communications with research participants)?
• Have you checked that your list of references includes all the items referred to in your text, and that it follows precisely the style that your institution requires?
Checklist for writing up your research: 4.
• Have you ensured that your institution’s requirements for submitting projects are fully met in terms of word length (neither too long nor too short) and whether an abstract and table of contents are required?
• Have you ensured that you do not quote excessively when presenting the literature?
• Have you fully acknowledged the work of others so that you cannot be accused of plagiarism?