Ceph Version 15 - Octopus

Installation Guide for CentOS 7

Ceph-Ansible-45d Release Version 5.0.6

Nov 2020 - v5.0.4

Ceph Octopus Installation Guide for CentOS 7 - Release v5.0.6

Chapter 1 - What Is Ceph?
1.1 - Ansible Administrator Node
1.2 - Monitor Nodes
1.3 - OSD Nodes
1.4 - Manager Nodes
1.5 - Metadata Server (MDS) Nodes
1.6 - Object Gateway Nodes
1.7 - Filesystem Gateway Nodes
1.8 - System Diagram

CHAPTER 2 - Requirements For Installing Ceph
2.1 - Hardware Requirements
2.2 - Operating System
2.3 - Network Configuration
2.4 - Configuring Firewalls
2.4.1 Automatic Configuration
2.4.2 Manual Configuration
2.5 - Configuring Passwordless SSH
2.6 - Install ceph-ansible-45d

CHAPTER 3 - Deploying Ceph Octopus
3.1 - Prerequisites
3.2 - Configure Cluster Ansible Variables
3.3 - Configure OSD Variables
3.4 - Deploy Core Ceph Cluster
3.5 - Installing Metadata Servers (CephFS)
3.5.1 Configuring the SMB/CIFS Gateways
3.5.1.1 CephFS + Samba + Active Directory Integration
3.5.1.2 CephFS + Samba + Local Users
3.5.1.3 Overriding Default Samba Settings and Centralized Share Management
3.5.2 Configuring the NFS Gateways
3.5.2.1 Active-Passive Configuration
3.5.2.2 Active-Active Configuration
3.6 - Installing the Ceph Object Gateway
3.6.1 Configure Haproxy for RGW Load Balancing
3.7 - Configuring RBD + iSCSI
3.7.1 Ceph iSCSI Installation
3.7.2 Ceph iSCSI Configuration

CHAPTER 4 - Configuring Ceph Dashboard
4.1 Installing Ceph Dashboard

CHAPTER 5 - UPGRADING A CEPH CLUSTER

Chapter 1 - What Is Ceph?

Ceph is a license free, open source, Software Defined Storage platform that ties together

multiple storage servers to provide a horizontally scalable storage cluster, with no single point

of failure. Storage can be consumed at the object, block, and file level.

The core software components of a Ceph cluster are explained below.

This document will define the terms and acronyms it uses as they relate to the whole 45Drives

Ceph Storage Solution. A full glossary of Ceph specific terms can be found here.

1.1 - Ansible Administrator Node

This type of node is where ansible will be configured and run from. Any node in the cluster can

function as the ansible node. This node provides the following functions:

● Centralized storage cluster management

● Ceph configuration files and keys

1.2 - Monitor Nodes

Each monitor node runs the monitor daemon (ceph-mon). The Monitor daemons are

responsible for maintaining an accurate and up to date cluster map. The cluster map is an

iterative history of the cluster members, state, changes, and the overall health of the Ceph

Storage Cluster. Monitors delegate detailed statistics of the cluster to the Manager service,

documented below. Clients can interact and consume ceph storage by retrieving a copy of the

cluster map from the Ceph Monitor.

● A Ceph cluster can function with only one monitor; however, at least three monitors are required to ensure high availability.

● Do not use an even number of monitors. Quorum requires a majority of monitors, so an even count tolerates no more failures than the next lower odd count.
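The monitor-count guidance above follows from simple majority arithmetic. The sketch below is illustrative only; the function names are hypothetical and are not part of Ceph or ceph-ansible. It prints how many monitors form a majority and how many monitor failures each cluster size can absorb.

```shell
#!/bin/sh
# Monitors needed to form a majority (quorum) out of N monitors.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# Monitor failures that can be absorbed while a majority still remains.
failures_tolerated() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    printf 'monitors=%s quorum=%s tolerated_failures=%s\n' \
        "$n" "$(quorum_needed "$n")" "$(failures_tolerated "$n")"
done
```

Four monitors tolerate one failure, the same as three, which is why the extra even-numbered monitor adds no availability.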


1.3 - OSD Nodes

Each Object Storage Daemon (OSD) node runs the Ceph OSD daemon (ceph-osd), which

interacts with logical disks attached to the node. Simply put, an OSD node is a server, and an

OSD itself is an HDD/SSD/NVMe inside the server. Ceph stores data on these OSDs.

● A Ceph cluster can run with very few OSD nodes, where the minimum is three, but

production clusters realize better redundancy and performance as node count

increases.

● A minimum of one storage device is required in each OSD node.

1.4 - Manager Nodes

Each Manager node runs the MGR daemon (ceph-mgr), which maintains detailed information

about placement groups, process metadata and host metadata in lieu of the Ceph

Monitor—significantly improving performance at scale. The Ceph Manager handles execution

of many of the read-only Ceph CLI queries, such as placement group statistics. The Ceph

Manager also provides the RESTful monitoring APIs. The manager node is also responsible for

dashboard hosting, giving the user real time metrics, as well as the capability to create new

pools, exports, etc.

1.5 - Metadata Server (MDS) Nodes

Each Metadata Server (MDS) node runs the MDS daemon (ceph-mds), which manages

metadata related to files stored on the Ceph File System (CephFS). The MDS daemon also

coordinates access to the shared cluster. The MDS daemon maintains a cache of CephFS

metadata in system memory to accelerate IO performance. This cache size can be grown or

shrunk based on workload, allowing linear scaling of performance as data grows. The service

is required for CephFS to function.

1.6 - Object Gateway Nodes

Each Ceph Object Gateway node runs the Ceph RADOS Gateway daemon (ceph-radosgw), an object storage interface built on top of librados that provides applications with a RESTful gateway to Ceph Storage Clusters. The Ceph Object Gateway supports two interfaces:

● S3 - Provides object storage functionality with an interface that is compatible with a

large subset of the Amazon S3 RESTful API.

● Swift - Provides object storage functionality with an interface that is compatible with a

large subset of the OpenStack Swift API.


1.7 - Filesystem Gateway Nodes

Filesystem gateway nodes provide SMB/CIFS and NFS access into the cluster.


1.8 - System Diagram

Below is a diagram showing the architecture of the above services, and how they communicate

on the networks.

The cluster network relieves OSD replication and heartbeat traffic from the public network.


CHAPTER 2 - Requirements For Installing Ceph

Before starting the installation and configuration of your Ceph cluster, there are a few

requirements that need to be met.

2.1 - Hardware Requirements

As mentioned before, there are minimum quantities required for the different types of nodes.

Below is a table showing the minimum number required to achieve a highly available Ceph

cluster. It is important to note that the MONs, MGRs, MDSs, FSGWs, and RGWs can be

either virtualized or on physical hardware.

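| Pool Type     | OSD | MON | MGR | MDS | FSGW | RGW |
|---------------|-----|-----|-----|-----|------|-----|
| 2 Rep / 3 Rep | 3   | 3   | 2   | 2   | 2    | 2   |
| Erasure Coded | 3   | 3   | 2   | 2   | 2    | 2   |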

2.2 - Operating System

45Drives requires that Ceph Octopus be deployed on a minimal installation of

CentOS 7.9 or newer. Every node in the cluster should be running the same version to ensure

uniformity.

2.3 - Network Configuration

As seen in the Figure in Chapter 1, all Ceph nodes require a public network. It is required to

have a network interface card configured to a public network where Ceph clients can reach

Ceph monitors and Ceph OSD nodes.

45Drives recommends having a second network interface configured to communicate on a backend, private cluster network so that Ceph can conduct heart-beating, peering, replication, and recovery on a network separate from the public network. It is important to note that only the OSD nodes need the cluster backend network configured.


2.4 - Configuring Firewalls

2.4.1 Automatic Configuration

By default, installing Ceph with these Ansible packages opens the required firewall ports on the appropriate nodes using firewalld. This behavior can be overridden by disabling “configure_firewall” in group_vars/all.yml and manually opening the ports outlined in section 2.4.2 below.

2.4.2 Manual Configuration

If using iptables or requiring manual firewall configuration, the following is a table for reference

showing the default ports / ranges which are required for each ceph daemon as well as services

used for real time metrics. These must be open before you begin installing the cluster.

Note the Cluster Role column, which determines which hosts need the ports opened. It corresponds to the group names in the Ansible inventory file.


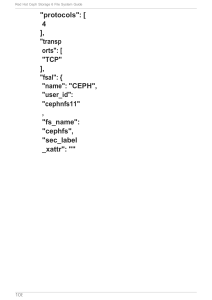

| Ceph Daemon / Service    | Firewall Port | Protocol | Firewalld Service Name | Cluster Role |
|--------------------------|---------------|----------|------------------------|--------------|
| ceph-osd                 | 6800-7300     | TCP      | ceph                   | osds         |
| ceph-mon                 | 6789, 3300    | TCP      | ceph, ceph-mon         | mons         |
| ceph-mgr                 | 6800-7300     | TCP      | ceph                   | mgrs         |
| ceph-mds                 | 6800          | TCP      | ceph                   | mdss         |
| ceph-radosgw [1]         | 8080          | TCP      |                        | rgws         |
| Ceph Prometheus Exporter | 9283          | TCP      |                        | mgrs         |
| Node Exporter            | 9100          | TCP      |                        | metric       |
| Prometheus Server        | 9090          | TCP      |                        | metric       |
| Alertmanager             | 9091          | TCP      |                        | metric       |
| Grafana Server           | 3000          | TCP      |                        | metric       |
| nfs                      | 2049          | TCP      | nfs                    | nfss         |
| rpcbind                  | 111           | TCP/UDP  | rpc-bind               | nfss         |
| corosync                 | 5404-5406     | UDP      |                        | nfss         |
| pacemaker                | 2224          | TCP      |                        | nfss         |
| samba                    | 137, 138      | UDP      | samba                  | smbs         |
| samba                    | 139, 445      | TCP      | samba                  | smbs         |
| CTDB                     | 4379          | TCP/UDP  | ctdb                   | smbs         |
| iSCSI Target             | 3260          | TCP      |                        | iscsigws     |
| iSCSI API Port           | 5000          | TCP      |                        | iscsigws     |
| iSCSI Metric Exporter    | 9287          | TCP      |                        | iscsigws     |

[1] This port is user specified. It defaults to 8080.
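For hosts that run firewalld but are configured by hand, the port list in section 2.4.2 translates into firewall-cmd calls. The following is a minimal sketch, not part of ceph-ansible: the function names are hypothetical, only a few cluster roles are covered, and the commands are printed rather than executed so they can be reviewed first. It relies on the standard firewalld options --permanent, --add-port and --reload.

```shell
#!/bin/sh
# Ports per inventory group, copied from the manual configuration table.
# Only a subset of roles is covered in this sketch.
ports_for_role() {
    case "$1" in
        osds)     echo "6800-7300/tcp" ;;
        mons)     echo "6789/tcp 3300/tcp" ;;
        mgrs)     echo "6800-7300/tcp 9283/tcp" ;;
        mdss)     echo "6800/tcp" ;;
        rgws)     echo "8080/tcp" ;;
        iscsigws) echo "3260/tcp 5000/tcp 9287/tcp" ;;
        *)        return 1 ;;
    esac
}

# Print (do not run) the firewall-cmd commands for one role.
firewall_commands() {
    ports=$(ports_for_role "$1") || { echo "unknown role: $1" >&2; return 1; }
    for p in $ports; do
        echo "firewall-cmd --permanent --add-port=$p"
    done
    echo "firewall-cmd --reload"
}

firewall_commands mons
```

Once the printed commands look right, they can be run on each host in the matching inventory group.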

2.5 - Configuring Passwordless SSH

Ansible needs to be able to SSH into each Ceph node without prompting for a password. Only the server running the Ansible playbooks needs passwordless SSH access to every node; the Ceph nodes themselves do not.

These SSH keys can be removed after installation if necessary; note, however, that the playbooks will not function without them.

Generate an SSH key pair on the Ansible administrator node and distribute the public key to all

other nodes in the storage cluster so that Ansible can access the nodes without being prompted

for a password.

Perform the following steps from the Ansible administrator node, as the root user.

1. Generate the SSH key pair, accept the default filename and leave the passphrase empty:

[root@cephADMIN ~]$ ssh-keygen

2. Copy the public key to all nodes in the storage cluster:

[root@cephADMIN ~]$ ssh-copy-id root@$HOST_NAME

Replace $HOST_NAME with the host name of the Ceph nodes.

Example

[root@cephADMIN ~]$ ssh-copy-id root@cephOSD1

Verify this process by SSHing into one of the Ceph nodes; you should not be prompted for a password. If you are, troubleshoot before moving on.
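With more than a handful of nodes, the copy and verify steps can be generated in a loop. This is a sketch only: the function name is hypothetical, the host names cephOSD2 and cephOSD3 are assumed for illustration, and the commands are printed instead of run. The ssh option BatchMode=yes makes ssh fail rather than prompt, so a non-zero exit marks a host where key login is not working.

```shell
#!/bin/sh
# Print the key-distribution and verification commands for each host.
# Host names are passed as arguments; nothing is executed here.
ssh_setup_commands() {
    for host in "$@"; do
        echo "ssh-copy-id root@$host"
        # BatchMode=yes makes ssh fail instead of asking for a password.
        echo "ssh -o BatchMode=yes root@$host true"
    done
}

ssh_setup_commands cephOSD1 cephOSD2 cephOSD3
```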


2.6 - Install ceph-ansible-45d

45Drives provides modified ceph-ansible packages forked from the official ceph-ansible repository. This guide requires that you work from the 45Drives-provided ceph-ansible packages.

From the Ansible administrator node, the first thing to do is to pull down the ceph-ansible

repository file.

[root@cephADMIN ~]$ cd /etc/yum.repos.d/
[root@cephADMIN ~]$ curl -LO http://images.45drives.com/ceph/rpm/ceph_45drives_el7.repo
[root@cephADMIN ~]$ yum install ceph-ansible-45d
[root@cephADMIN ~]$ cp /usr/share/ceph-ansible/hosts.sample /usr/share/ceph-ansible/hosts
[root@cephADMIN ~]$ ln -s /usr/share/ceph-ansible/hosts /etc/ansible

This will set up the Ansible environment for a 45Drives Ceph cluster.


CHAPTER 3 - Deploying Ceph Octopus

This chapter describes how to use ceph-ansible to deploy the core services of a Ceph cluster:

● Monitors

● OSDs

● Mgrs

It also covers the services required for filesystem, block, and object access:

● MDSs (Cephfs)

○ Samba (SMB/CIFS)

○ NFS-ganesha (NFS)

● iSCSI Gateway (iSCSI)

● Rados Gateway (S3, Swift)

3.1 - Prerequisites

Prepare the cluster nodes. On each node verify:

● Passwordless SSH configured from Ansible Node to all other nodes

● Networking configured

○ All nodes must be reachable from each other on the public network

○ If a cluster network is present, all OSD nodes must be reachable on both the cluster network and the public network


3.2 - Configure Cluster Ansible Variables

1. Navigate to the directory containing the deploy playbooks

[root@cephADMIN ~]$ cd /usr/share/ceph-ansible/

2. Edit the ansible inventory file and place the hostnames under the correct group blocks.

It is common to collocate the Ceph Manager (mgrs) with the Ceph Monitor nodes

(mons).

If you have a lengthy list with sequential naming you can use a range such as

OSD_[1:10]. See hosts.sample for a full list of host groups available.

[mons]

MONITOR_1

MONITOR_2

MONITOR_3

[mgrs]

MONITOR_1

MONITOR_2

MONITOR_3

[osds]

OSD_1

OSD_2

OSD_3

3. Ensure that Ansible can reach the Ceph hosts. Until this command finishes with success,

stop here and verify the connectivity to each host in your host file. Be aware, if it hangs

without returning to the command prompt, ansible is failing to reach one of your nodes.

[root@cephADMIN ceph-ansible]# ansible all -m ping

4. Edit the group_vars/all.yml file:

[root@cephADMIN ceph-ansible]# vim group_vars/all.yml
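The range notation mentioned in step 2 is inclusive at both ends, so OSD_[1:10] stands for ten hosts. The sketch below only mimics that expansion to show which host names the inventory would contain; the function name is hypothetical and Ansible performs the real expansion itself.

```shell
#!/bin/sh
# Expand a prefix plus an inclusive numeric range, mimicking PREFIX[first:last].
expand_range() {
    prefix=$1
    i=$2
    last=$3
    while [ "$i" -le "$last" ]; do
        echo "${prefix}${i}"
        i=$((i + 1))
    done
}

echo "[osds]"
expand_range OSD_ 1 10
```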


Below is a table that includes the minimum parameters that have to be updated to successfully

build a cluster.

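| Option            | Value                                                             | Required                       | Notes                                                                                                                    |
|-------------------|-------------------------------------------------------------------|--------------------------------|--------------------------------------------------------------------------------------------------------------------------|
| monitor_interface | The interface that the Monitor nodes listen to (eth0, bond0, etc) | Yes                            | If the monitor interface is different on each server, specify monitor_interface in the inventory file instead of all.yml |
| public_network    | The IP address and netmask of the Ceph public network             | Yes                            | In the form of: 192.168.0.0/16                                                                                           |
| cluster_network   | The IP address and netmask of the Ceph cluster network            | No, defaults to public_network | In the form of: 10.0.0.0/24                                                                                              |
| hybrid_cluster    | Are there SSD and HDD OSDs in the cluster?                        | No, defaults to false          | In the form of: true or false                                                                                            |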
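As an illustration of the four settings in the table above, the sketch below prints the corresponding all.yml lines for a given interface and pair of networks. The function name is hypothetical and the values are the sample values from the table, not recommendations; the real file shipped with ceph-ansible-45d contains many more options, so treat this as a reading aid rather than a generator for the file.

```shell
#!/bin/sh
# Print the minimum all.yml settings from the table above.
# Arguments: monitor interface, public network CIDR, optional cluster network CIDR.
all_yml_fragment() {
    echo "monitor_interface: $1"
    echo "public_network: $2"
    # cluster_network is optional and defaults to public_network when omitted.
    if [ -n "${3:-}" ]; then
        echo "cluster_network: $3"
    fi
    echo "hybrid_cluster: false"
}

all_yml_fragment eth0 192.168.0.0/16 10.0.0.0/24
```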


3.3 - Configure OSD Variables

1. Run the device-alias.yml playbook to configure device aliases.

[root@cephADMIN ceph-ansible]# ansible-playbook device-alias.yml

2. Run the generate-osd-vars.yml playbook to populate device variables. This will use every disk present in the chassis. If you want to exclude certain drives manually, see here.

[root@cephADMIN ceph-ansible]# ansible-playbook generate-osd-vars.yml

3.4 - Deploy Core Ceph Cluster

Run the ceph-ansible “core.yml” playbook to build the cluster.

[root@cephADMIN ceph-ansible]# ansible-playbook core.yml

Verify the status of the core cluster

[root@cephADMIN ceph-ansible]# ssh cephOSD1 "ceph -s"

If you encounter errors during this playbook:

● Double check the variables configured in the above section. Particularly the mandatory

ones.

● Verify all nodes in the cluster can communicate with each other, take a look for packet

drops or any other network issues.

● You can run ansible in verbose mode for more details on the failed step

○ ansible-playbook core.yml -vvvv

● The cluster can be purged back to a fresh state with

○ ansible-playbook infrastructure-playbooks/purge-cluster.yml
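The status check after the core.yml run can also be scripted. The sketch below assumes the one-line output of the ceph health command, which begins with HEALTH_OK, HEALTH_WARN or HEALTH_ERR. The function name is hypothetical and the sample strings are made up for illustration, so the block runs without a cluster.

```shell
#!/bin/sh
# Return success only when the text passed in starts with HEALTH_OK.
health_is_ok() {
    case "$1" in
        HEALTH_OK*) return 0 ;;
        *)          return 1 ;;
    esac
}

# Canned strings for illustration; on a real cluster the argument could be
# "$(ssh cephOSD1 'ceph health')".
for sample in "HEALTH_OK" "HEALTH_WARN 1 osds down"; do
    if health_is_ok "$sample"; then
        echo "ok: $sample"
    else
        echo "not ok: $sample"
    fi
done
```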


3.5 - Installing Metadata Servers (CephFS)

Metadata Server daemons are necessary for deploying a Ceph File System. This section shows how to install a Ceph Metadata Server (MDS) using Ansible.

1. Add a new section [mdss] to the /usr/share/ceph-ansible/hosts file:

[mdss]

cephMDS1

cephMDS2

cephMDS3

2. Verify you can communicate with each MDS node

[root@cephADMIN ceph-ansible]# ansible -m ping mdss

3. Run the cephfs.yml playbook and install and configure the Ceph Metadata Servers.

[root@cephADMIN ceph-ansible]# ansible-playbook cephfs.yml

4. Verify the file system from one of the cluster nodes

[root@cephADMIN ~]# ssh OSD1 "ceph fs status"

3.5.1 Configuring the SMB/CIFS Gateways

There are two cases that will be covered in this section. They are:

1. CephFS + Samba + Active Directory Integration

2. CephFS + Samba + Local Users

The SMB/CIFS Gateways can be physical hardware or virtual machines. CTDB is used to provide

a floating IP for High Availability access to the Samba share.

Two SMB/CIFS share types are available: kernel and native (VFS). Shares can be mixed, i.e. one or more native shares alongside one or more kernel shares.

● Kernel - Allows for faster single client transfers and lower write latency. Useful for when

you have few clients that need maximum performance.


● Native (VFS) - Enables native Samba to communicate with CephFS. Allows for better parallelism in and out of the cluster. Useful when you have a lot of SMB/CIFS clients hitting the cluster.

Edit the hosts file to include the SMB/CIFS Gateways in the [smbs] block.

[smbs]

smb1

smb2

Verify you can communicate with each SMB/CIFS gateway

[root@cephADMIN ceph-ansible]# ansible -m ping smbs

3.5.1.1 CephFS + Samba + Active Directory Integration

3. Edit the group_vars/smbs.yml file to choose the samba role

# Roles

samba_server: true

samba_cluster: true

domain_member: true

4. Edit group_vars/smbs.yml to edit active_directory_info

a. The domain join user must be an existing Domain User with the rights to join

computers to the domain it is a member of. The password is required for initial

setup and should be removed from this file after the SMB/CIFS gateways are

configured.

active_directory_info:
  workgroup: 'SAMDOM'
  idmap_range: '100000 - 999999'
  realm: 'SAMDOM.COM'
  winbind_enum_groups: no
  winbind_enum_users: no
  winbind_use_default_domain: no
  domain_join_user: ''
  domain_join_password: ''

5. Edit group_vars/smbs.yml to edit ctdb_public_addresses.

a. This is the floating IP(s) your SMB/CIFS clients will connect to.

b. The vip_interface is often the same network interface the gateway is

communicating on currently. It is possible to use a separate Network Interface if

you wish to segregate traffic.

c. You can specify as many floating IPs as you would like, but it is recommended not to use more IPs than the number of gateways.

ctdb_public_addresses:
  - vip_address: '192.168.103.10'
    vip_interface: 'eth0'
    subnet_mask: '16'
  - vip_address: '192.168.103.11'
    vip_interface: 'eth0'
    subnet_mask: '16'

6. To manage share permissions from the Windows side, set enable_windows_acl to true. This is enabled by default, but can be changed by editing group_vars/smbs.yml.

# Samba Share (SMB) Definitions
# Path is relative to cephfs root
configure_shares: true
enable_windows_acl: true

7. Edit group_vars/smbs.yml to edit samba_shares

a. name - Name of the share

b. path - Path to be shared out.

i. The path is always relative to the root of the cephfs filesystem

c. type - Type of share, kernel or vfs

d. windows_acl - When true, share permissions are managed on Windows clients; when false, share permissions are managed server side. This value is ignored when “enable_windows_acl” is false.

e. writeable - When false, the share is always read only

f. guest_ok - When true, no password is required to connect to the service. Privileges will be those of the guest account.


samba_shares:
  - name: 'public'
    path: '/fsgw/public'
    type: 'vfs'
    windows_acl: false
    writeable: 'yes'
    guest_ok: 'yes'
    comment: "public rw access"
  - name: 'secure'
    path: '/fsgw/secure'
    type: 'kernel'
    windows_acl: true
    writeable: 'yes'
    guest_ok: 'no'
    comment: "secure rw access"

8. Run the samba.yml playbook

[root@cephADMIN ceph-ansible]# ansible-playbook samba.yml

9. SMB/CIFS access is now available at the public IPs defined above.

a. Permissions can be configured by logging into the share on a Windows Machine.

More info on how to configure permissions windows side can be found here.

b. You can place your public IPs in a DNS round robin record to achieve some load

balancing across gateways.

3.5.1.2 CephFS + Samba + Local Users

1. Edit the group_vars/smbs.yml file to choose the samba role. Set domain_member to

false as we are not joining a Domain.

# Roles
samba_server: true
samba_cluster: true
domain_member: false

2. Edit group_vars/smbs.yml to edit ctdb_public_addresses.

a. This is the floating IP(s) your SMB/CIFS clients will connect to.

b. The vip_interface is often the same network interface the gateway is

communicating on currently. It is possible to use a separate Network Interface if

you wish to segregate traffic.

c. You can specify as many floating IPs as you would like, but it is recommended not to use more IPs than the number of gateways.

ctdb_public_addresses:
  - vip_address: '192.168.103.10'
    vip_interface: 'eth0'
    subnet_mask: '16'
  - vip_address: '192.168.103.11'
    vip_interface: 'eth0'
    subnet_mask: '16'

3. Edit group_vars/smbs.yml to disable Windows ACLs. Because no domain is being joined, permissions cannot be managed from the Windows side. Set enable_windows_acl to false.

# Samba Share (SMB) Definitions

# Path is relative to cephfs root

configure_shares: true

enable_windows_acl: false

4. Edit group_vars/smbs.yml to edit samba_shares

a. name - Name of the share

b. path - Path to be shared out.

i. If using type:vfs, the path is relative to the root of the cephfs share

ii. If using type:kernel, the path is relative to the root of the filesystem

c. type - Type of share, kernel or vfs

d. windows_acl - When true, share permissions are managed on Windows clients; when false, share permissions are managed server side. This value is ignored when “enable_windows_acl” is false.

e. writeable - When false, the share is always read only

f. guest_ok - When true, no password is required to connect to the service. Privileges will be those of the guest account.

samba_shares:
  - name: 'public'
    path: '/fsgw/public'
    type: 'vfs'
    windows_acl: false
    writeable: 'yes'
    guest_ok: 'yes'
    comment: "public rw access"
  - name: 'secure'
    path: '/fsgw/secure'
    type: 'kernel'
    windows_acl: true
    writeable: 'yes'
    guest_ok: 'no'
    comment: "secure rw access"

5. Run the samba.yml playbook

[root@cephADMIN ceph-ansible]# ansible-playbook samba.yml

6. SMB/CIFS access is now available at the public IPs defined above.

a. Permissions need to be managed on the server side.

i. Create local users/groups on ALL SMB/CIFS gateways

ii. Create an smbpasswd for each user on ALL SMB/CIFS gateways

iii. Set the permissions on the underlying storage and in the share

1. chown/chmod on the underlying cephfs storage.

2. Add “write list” and “valid users” to each share definition with “net conf”

iv. Complete instructions can be found in the 45Drives Knowledge Base.

b. You can place your public IPs in a DNS round robin record to achieve some load

balancing across gateways.


3.5.1.3 Overriding Default Samba Settings and Centralized Share Management

Samba offers a registry based configuration system to complement the original text-only

configuration via smb.conf. The "net conf" command offers a dedicated interface for reading

and modifying the registry based configuration.

Using this interface, default global and share settings can be fine-tuned. Do not edit smb.conf directly.

To edit a global option, for example to increase the log level for debugging:

net conf setparm global "log level" 3

To edit a share option, for example add the “valid users” parameter to “share1” to allow

groups readonly and trusted.

net conf setparm share1 "valid users" "@readonly,@trusted"

You can view all configured Samba options with “testparm”, regardless of whether they are defined in smb.conf or via net conf.
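When several shares need the same kind of restriction, the net conf calls can be generated rather than typed. A minimal sketch follows; the function name is hypothetical, and the share and group names are the ones used in the example above. It prints a setparm call followed by a getparm call that reads the value back; both are standard net conf subcommands. Nothing is executed.

```shell
#!/bin/sh
# Print net conf commands that restrict a share to the given users/groups.
# Arguments: share name, comma-separated list such as "@readonly,@trusted".
share_acl_commands() {
    share=$1
    members=$2
    printf 'net conf setparm %s "valid users" "%s"\n' "$share" "$members"
    # Read the value back to confirm it was stored in the registry.
    printf 'net conf getparm %s "valid users"\n' "$share"
}

share_acl_commands share1 "@readonly,@trusted"
```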

3.5.2 Configuring the NFS Gateways

There are two configuration options for the NFS gateways. By default the playbooks are

configured to use Active-Passive mode.

● Active-Passive

○ Floating IP. Service only running on 1 of the gateways at a time.

○ HA possible for all clients.

○ Uses Pacemaker/Corosync HA stack.

● Active-Active

○ No floating IP. Shares accessible from every gateway IP.

○ HA only possible if application can multipath.

○ Useful for highly concurrent use cases.

Edit the hosts file to include the NFS Gateways in the [nfss] block.


[nfss]

cephNFS1

cephNFS2

Verify you can communicate with each NFS gateway

[root@cephADMIN ceph-ansible]# ansible -m ping nfss

Edit the /usr/share/ceph-ansible/group_vars/nfss.yml file to enable the nfs_file_gw. This is

enabled by default.

nfs_file_gw: true

3.5.2.1 Active-Passive Configuration

1. Set the “ceph_nfs_rados_backend_driver” to “rados_ng” in

/usr/share/ceph-ansible/group_vars/nfss.yml. This is set by default.

# backend mode, either rados_ng, or rados_cluster

# Default (rados_ng) is for single gateway/active-passive use.

# rados_cluster is for active-active nfs cluster. Requires ganesha-grace-db to be initialized

ceph_nfs_rados_backend_driver: "rados_ng"

2. Specify the floating IP and subnet the NFS-Ganesha Gateway will be reachable from in

/usr/share/ceph-ansible/group_vars/nfss.yml.

ceph_nfs_floating_ip_address: '192.168.18.73'

ceph_nfs_floating_ip_cidr: '16'

3. Run the nfs.yml playbook

[root@cephADMIN ceph-ansible]# ansible-playbook nfs.yml


4. In case of errors, the NFS configuration can be purged back to a fresh state. Note that no data is removed; only the NFS configuration is reset.

[root@cephADMIN ceph-ansible]# ansible-playbook infrastructure-playbooks/nfs.yml

5. NFS exports are created via the ceph Dashboard which is set up in the following section.


3.5.2.2 Active-Active Configuration

1. Set the “ceph_nfs_rados_backend_driver” to “rados_cluster” in

/usr/share/ceph-ansible/group_vars/nfss.yml.

# backend mode, either rados_ng, or rados_cluster

# Default (rados_ng) is for single gateway/active-passive use.

# rados_cluster is for active-active nfs cluster. Requires ganesha-grace-db to be initialized

ceph_nfs_rados_backend_driver: "rados_cluster"

2. Run the nfs.yml playbook

[root@cephADMIN ceph-ansible]# ansible-playbook nfs.yml

3. In case of errors, the NFS configuration can be purged back to a fresh state. Note that no data is removed; only the NFS configuration is reset.

[root@cephADMIN ceph-ansible]# ansible-playbook infrastructure-playbooks/nfs.yml

4. NFS exports are created via the ceph Dashboard which is set up in the following section.
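The two NFS modes differ in a single nfss.yml setting. As a quick reference, the sketch below maps a mode name to the line that sections 3.5.2.1 and 3.5.2.2 ask for. The function name and the mode labels are hypothetical; only the variable name and its two values come from this guide.

```shell
#!/bin/sh
# Map an NFS gateway mode to the matching nfss.yml backend driver line.
nfs_backend_driver() {
    case "$1" in
        active-passive) echo 'ceph_nfs_rados_backend_driver: "rados_ng"' ;;
        active-active)  echo 'ceph_nfs_rados_backend_driver: "rados_cluster"' ;;
        *)              echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

nfs_backend_driver active-passive
nfs_backend_driver active-active
```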


3.6 - Installing the Ceph Object Gateway

The Ceph Object Gateway, also known as the RADOS gateway, is an object storage interface

built on top of the librados API to provide applications with a RESTful gateway to Ceph storage

clusters.

1. Add a new section, [rgws] to the /usr/share/ceph-ansible/hosts file. Be sure to define

the IP for each rgw as well.

[rgws]

cephRGW1 radosgw_address=192.168.18.53

cephRGW2 radosgw_address=192.168.18.56

2. Verify you can communicate with each RGW

[root@cephADMIN ceph-ansible]# ansible -m ping rgws

3. The default port is 8080. To use another port, change the following line in group_vars/all.yml:

radosgw_civetweb_port: 8080

4. Below is an example section of the group_vars/all.yml.

## Rados Gateway options

#

radosgw_frontend_type: beast

radosgw_civetweb_port: 8080

radosgw_civetweb_num_threads: 512

radosgw_civetweb_options: "num_threads={{ radosgw_civetweb_num_threads }}"

5. Run the radosgw.yml playbook to install the RGWs.

[root@cephADMIN ceph-ansible]# ansible-playbook radosgw.yml


6. Verify with:

[root@cephADMIN ceph-ansible]# curl -g http://cephRGW1:8080

<?xml version="1.0" encoding="UTF-8"?><ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner><Buckets></Buckets></ListAllMyBucketsResult>
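The curl check in step 6 can be turned into a pass/fail test by looking for the ListAllMyBucketsResult element in the response. This is a sketch under stated assumptions: the function name is hypothetical, and the response is a canned copy of the output shown above so the block runs without a gateway.

```shell
#!/bin/sh
# Succeed when the response body looks like an anonymous S3 bucket listing.
rgw_response_ok() {
    case "$1" in
        *"<ListAllMyBucketsResult"*) return 0 ;;
        *)                           return 1 ;;
    esac
}

# Canned response for illustration; on a live system the argument could be
# "$(curl -s http://cephRGW1:8080)".
sample='<?xml version="1.0" encoding="UTF-8"?><ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner><Buckets></Buckets></ListAllMyBucketsResult>'

if rgw_response_ok "$sample"; then
    echo "RGW answered with a bucket listing"
else
    echo "unexpected response"
fi
```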

3.6.1 Configure Haproxy for RGW Load Balancing

Since each object gateway instance has its own IP address, HAProxy and keepalived can be used

to balance the load across Ceph Object Gateway servers.

Another use case for HAProxy and keepalived is to terminate HTTPS at the HAProxy server and use HTTP between the HAProxy server and the RGWs.

1. Add a new section, [rgwloadbalancers] to the /usr/share/ceph-ansible/hosts file. The

RGW nodes themselves can be used or other CentOS servers

[rgwloadbalancers]

cephRGW1

cephRGW2

2. Verify you can communicate with each load balancer

[root@cephADMIN ceph-ansible]# ansible -m ping rgwloadbalancers


3. Edit group_vars/rgwloadbalancers.yml and specify

a. Virtual IP(s)

b. Virtual IP Netmask

c. Virtual IP interface

d. Path to SSL certificate (optional). The path is local to each RGW; ‘.pem’ format is required.

###########

# GENERAL #

###########

haproxy_frontend_port: 80

haproxy_frontend_ssl_port: 443

haproxy_frontend_ssl_certificate:

haproxy_ssl_dh_param: 4096

haproxy_ssl_ciphers:

- EECDH+AESGCM

- EDH+AESGCM

haproxy_ssl_options:

- no-sslv3

- no-tlsv10

- no-tlsv11

- no-tls-tickets

virtual_ips:

- 192.168.18.57

virtual_ip_netmask: 16

virtual_ip_interface: eth0

4. Run the radosgw-lb.yml playbook to install the RGW load balancer

[root@cephADMIN ceph-ansible]# ansible-playbook radosgw-lb.yml


3.7 - Configuring RBD + iSCSI

3.7.1 Ceph iSCSI Installation

See the Knowledge Base article “Ceph iSCSI Configuration” for more detail.

Add the iSCSI gateway hostnames to /usr/share/ceph-ansible/hosts:

[iscsigws]

iscsi1

iscsi2

iscsi3

Run the iscsi.yml playbook

[root@cephADMIN ceph-ansible]# ansible-playbook iscsi.yml

3.7.2 Ceph iSCSI Configuration

Configuration of the iSCSI nodes is done on the iSCSI gateways and in the Ceph dashboard.

From one of the iSCSI nodes, create the initial iSCSI gateways with gwcli. Note that the first time gwcli is run you will be prompted with the warning below. It can be ignored, as gwcli will create an initial preferences file if one is not present.

[root@iscsi1 ~]# gwcli

Warning: Could not load preferences file /root/.gwcli/prefs.bin.

>


Create the iSCSI target for the cluster

[root@iscsi1 ~]# gwcli

> /> cd /iscsi-targets

> /iscsi-targets> create iqn.2003-01.com.45drives.iscsi-gw:iscsi-igw

Create the first iSCSI gateway. It has to be the node you are running this command on.

[root@iscsi1 ~]# gwcli

> cd /iscsi-targets/iqn.2003-01.com.45drives.iscsi-gw:iscsi-igw/gateways

/iscsi-target...7283/gateways> create iscsi1.45lab.com 192.168.*.*

The Ceph Administration dashboard is recommended to finish iSCSI configuration. See Chapter 4 (Configuring Ceph Dashboard) before proceeding here.


CHAPTER 4 - Configuring Ceph Dashboard

4.1 Installing Ceph Dashboard

Using Ansible, the steps below install and configure the metric collection/alert stack. They also configure and start the Ceph management UI.

By default the metric stack is installed on the first node running the manager service in your cluster. Optionally, to specify another server to host this stack, use the group label “metrics”:

[metrics]

metric1

Run the /usr/share/ceph-ansible/dashboard.yml playbook:

[root@cephADMIN ceph-ansible]# ansible-playbook dashboard.yml

Below is a table of the network ports used by the dashboard services.

| Name           | Default Port |
|----------------|--------------|
| Grafana        | 3000/tcp     |
| Prometheus     | 9090/tcp     |
| Alertmanager   | 9091/tcp     |
| Ceph Dashboard | 8234/tcp     |


CHAPTER 5 - UPGRADING A CEPH CLUSTER

Minor updates are minor bug fixes released every 4-6 months. These are quick updates that can

be done safely by simply running a yum update.

If a new kernel is installed, a reboot will be required to take effect. If there is no kernel update

you can stop here.

If there is a new kernel, set osd flag noout and norebalance to prevent the cluster from trying to

heal itself while the nodes reboot one by one.

ceph osd set noout

ceph osd set norebalance

Then reboot each node one at a time. Do not reboot the next node until the prior one is up and back in the cluster. After every node has been rebooted, unset the flags set earlier.

ceph osd unset noout

ceph osd unset norebalance
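The reboot procedure can be written out as an ordered plan before anything is touched. The sketch below prints the sequence for a list of nodes. The function name is hypothetical and the host names are examples. The flag commands use the standard Ceph CLI form ceph osd set <flag> and ceph osd unset <flag>. The wait step is left as a comment because how long a node takes to rejoin depends on the cluster.

```shell
#!/bin/sh
# Print the command sequence for a rolling reboot of the given nodes.
rolling_reboot_plan() {
    echo "ceph osd set noout"
    echo "ceph osd set norebalance"
    for node in "$@"; do
        echo "ssh root@$node reboot"
        echo "# wait until $node is back up and in the cluster (check: ceph -s)"
    done
    echo "ceph osd unset noout"
    echo "ceph osd unset norebalance"
}

rolling_reboot_plan cephOSD1 cephOSD2 cephOSD3
```

Printing the plan first makes it easy to confirm that the flags are set once, before the first reboot, and cleared once, after the last.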
