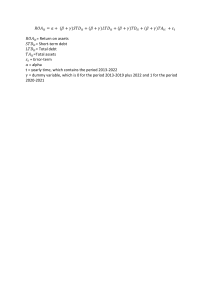

Is There Evidence of Wage Discrimination?
◼ Three Seton Hall professors recently learned in a court decision that they could pursue their lawsuit alleging the University paid higher salaries to younger instructors and male professors.
◼ Mary Schweitzer works in human resources at another college and has been asked by the college to test for age and gender discrimination in salaries.
◼ She gathers data on 42 professors, including the salary, experience, gender, and age of each.
◼ Using this data set, Mary hopes to:
1. Test whether salary differs by a fixed amount between males and females.
2. Determine whether there is evidence of age discrimination in salaries.
3. Determine if the salary difference between males and females increases with experience.

17.1 Dummy Variables
LO 17.1 Use dummy variables to capture a shift of the intercept.
◼ In previous chapters, all the variables used in regression applications have been quantitative.
◼ In empirical work it is common to have some variables that are qualitative: the values represent categories that may have no implied ordering.
◼ We can include these factors in a regression through the use of dummy variables.
◼ A dummy variable for a qualitative variable with two categories assigns a value of 1 for one of the categories and a value of 0 for the other.

Variables with Two Categories
◼ For example, suppose we are interested in determining the impact of gender on salary.
◼ We might first define a dummy variable d that has the following structure: let d = 1 if gender = “female” and d = 0 if gender = “male.”
◼ This allows us to include a measure for gender in a regression model and quantify the impact of gender on salary.

Regression with a Dummy Variable
◼ Graphically, we can see how the dummy variable shifts the intercept of the regression line.
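
The intercept shift can be illustrated with a minimal sketch. The data below are hypothetical and noise-free (they are not Mary's 42 professors), and the helper names solve and ols are assumptions introduced only for this illustration. The model is salary = b0 + b1 × experience + b2 × d, with d = 1 for female and d = 0 for male, so b2 is the fixed difference between the two parallel regression lines.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(rows, y):
    """OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical data: salary (in $1,000s) = 40 + 1.5 * experience - 5 * d
experience = [2, 5, 8, 12, 20, 3, 6, 10, 15, 25]
female = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]  # dummy variable d
salary = [40 + 1.5 * x - 5 * d for x, d in zip(experience, female)]

# Design matrix columns: intercept, experience, dummy
X = [[1.0, x, d] for x, d in zip(experience, female)]
b0, b1, b2 = ols(X, salary)

# Same slope for both groups; only the intercept differs, by b2
print(f"male line:   salary = {b0:.2f} + {b1:.2f} * experience")
print(f"female line: salary = {b0 + b2:.2f} + {b1:.2f} * experience")
```

Because the hypothetical data contain no noise, the estimates reproduce the coefficients used to generate them. With real data, b2 would be an estimate with a standard error, and its t-test would indicate whether the shift is significant.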
Salaries, Gender, and Age

Estimation Results

Testing the Significance of Dummy Variables
LO 17.2 Test for differences between the categories of a qualitative variable.
◼ The statistical tests discussed in Chapter 15 remain valid for dummy variables as well.
◼ We can perform a t-test for individual significance, form a confidence interval using the parameter estimate and its standard error, and conduct a partial F test for joint significance.

Example 17.2

Multiple Categories

Avoiding the Dummy Variable Trap
◼ Given the intercept term, we exclude one of the dummy variables from the regression; the excluded variable represents the reference category against which the others are assessed.
◼ If we included as many dummy variables as categories, the dummy variables would sum to the intercept column, creating perfect multicollinearity, and such a model cannot be estimated.
◼ So, we include one fewer dummy variable than the number of categories of the qualitative variable.

17.2 Interactions with Dummy Variables
LO 17.3 Use dummy variables to capture a shift of the intercept and/or slope.

Modeling Interaction

Shifts in the Intercept and the Slope
◼ Graphically, we can see how both the intercept and the slope might be impacted.

Testing for Significance

Example 17.4
◼ Our introductory case is about the impact of gender on salary. A further question is: “Does additional experience get a higher reward for one gender over the other?”
◼ Since age was not significant, we shall consider three models: one with a dummy variable for gender, one with an interaction variable between gender and experience, and one with both a dummy variable and an interaction variable.
◼ As before, we keep experience as a quantitative explanatory variable.
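
The third model in Example 17.4, with both a dummy variable and an interaction variable, can be sketched as follows. The data are hypothetical and noise-free (not the case data), and the helpers solve and ols are assumptions introduced for this illustration. The model is salary = b0 + b1 × x + b2 × d + b3 × (x × d), so the line for d = 0 is b0 + b1 × x and the line for d = 1 is (b0 + b2) + (b1 + b3) × x.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(rows, y):
    """OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical data: salary (in $1,000s) = 40 + 1.5*x - 2*d - 0.4*(x*d)
experience = [2, 5, 8, 12, 20, 3, 6, 10, 15, 25]
female = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]  # dummy variable d
salary = [40 + 1.5 * x - 2 * d - 0.4 * x * d for x, d in zip(experience, female)]

# Design matrix columns: intercept, experience, dummy, interaction (experience * dummy)
X = [[1.0, x, d, x * d] for x, d in zip(experience, female)]
b0, b1, b2, b3 = ols(X, salary)

male_intercept, male_slope = b0, b1
female_intercept, female_slope = b0 + b2, b1 + b3

print(f"male line:   salary = {male_intercept:.2f} + {male_slope:.2f} * experience")
print(f"female line: salary = {female_intercept:.2f} + {female_slope:.2f} * experience")
```

Dropping the dummy column gives the interaction-only model (slope shift, common intercept), and dropping the interaction column gives the dummy-only model (intercept shift, common slope).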
Predicted Salaries
◼ The interaction term allows for male professors to have a different slope coefficient than female professors.
◼ Conceptually, experience impacts the salary of each gender differently.

17.3 Binary Choice Models
LO 17.4 Use a linear probability model to estimate a binary response variable.
◼ So far, we have been considering models where dummy variables are used as explanatory variables.
◼ There are, however, many applications where the variable of interest, the response variable, is binary.
◼ The consumer choice literature has many applications, including whether to buy a house, join a health club, or go to graduate school.

The Linear Probability Model

Weakness of LPM

Approval of Loan Application
◼ Here 0.0188 means that a 1 percentage point increase in the down payment (DP) increases the predicted probability of loan approval by 0.0188.
◼ Similarly, a 1 percentage point increase in the income-to-loan ratio (IL) increases the predicted probability of loan approval by 0.0258.
◼ If DP = 30% and IL = 30%, then the predicted probability = 1.0338, which does not make sense because a probability cannot exceed 1.

The Logit Model
◼ To address the problem that predicted probabilities from the LPM may be negative or greater than 1, we consider an alternative called the logistic model, often referred to as a logit model.
◼ A logit model uses a nonlinear regression function that ensures that the predicted probability always lies between 0 and 1.
◼ However, interpreting the coefficients becomes more complicated, and estimation cannot be done by OLS.

Logistic Regression
LO 17.5 Interpret the results from a logit model.

Logit versus Linear Probability Model

Example 17.6
◼ An educator wants to determine if a student’s interest in science is linked with the student’s GPA.
◼ She uses Minitab to estimate a logit model where a student’s choice of field (1 = science, 0 = other) is predicted by GPA.
◼ With a p-value of 0.0012, GPA is indeed a significant factor in predicting whether a student chooses science.

Predicted Field Choice

Example 17.7

Prediction Comparison
◼ Unlike the linear probability model, the logit model does not predict probabilities less than zero or greater than one.
◼ Therefore, whenever possible, it is generally preferable to use the logit model rather than the linear probability model.

Chapter 17 Learning Objectives (LOs)
LO 17.1: Use dummy variables to capture a shift of the intercept.
LO 17.2: Test for differences between the categories of a qualitative variable.
LO 17.3: Use dummy variables to capture a shift of the intercept and/or slope.
LO 17.4: Use a linear probability model to estimate a binary response variable.
LO 17.5: Interpret the results from a logit model.
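
The prediction comparison between the two binary choice models can be illustrated with a minimal sketch. All coefficients below are hypothetical and chosen only for illustration; they are not estimates from the chapter's examples, and the function names are assumptions. The linear probability form is b0 + b1 × x, while the logit form is exp(b0 + b1 × x) / (1 + exp(b0 + b1 × x)).

```python
import math


def lpm_prediction(b0, b1, x):
    """Linear probability model: the prediction is linear in x and unbounded."""
    return b0 + b1 * x


def logit_prediction(b0, b1, x):
    """Logit model: exp(z) / (1 + exp(z)), written in the equivalent form 1 / (1 + exp(-z))."""
    z = b0 + b1 * x
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients (assumptions, not estimates from the slides)
LPM_B0, LPM_B1 = -0.50, 0.03
LOGIT_B0, LOGIT_B1 = -5.0, 0.15

# x could stand for a down payment in percent, for example
xs = [0, 10, 20, 30, 40, 50, 60]

print("  x     LPM   logit")
for x in xs:
    p_lpm = lpm_prediction(LPM_B0, LPM_B1, x)
    p_logit = logit_prediction(LOGIT_B0, LOGIT_B1, x)
    flag = "  <- outside [0, 1]" if not 0.0 <= p_lpm <= 1.0 else ""
    print(f"{x:3d}  {p_lpm:6.3f}  {p_logit:6.3f}{flag}")
```

With these assumed coefficients, the linear form falls below 0 at small x and rises above 1 at large x, while the logit form stays strictly between 0 and 1 for every x.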