Exam 2 - BYU Department of Economics

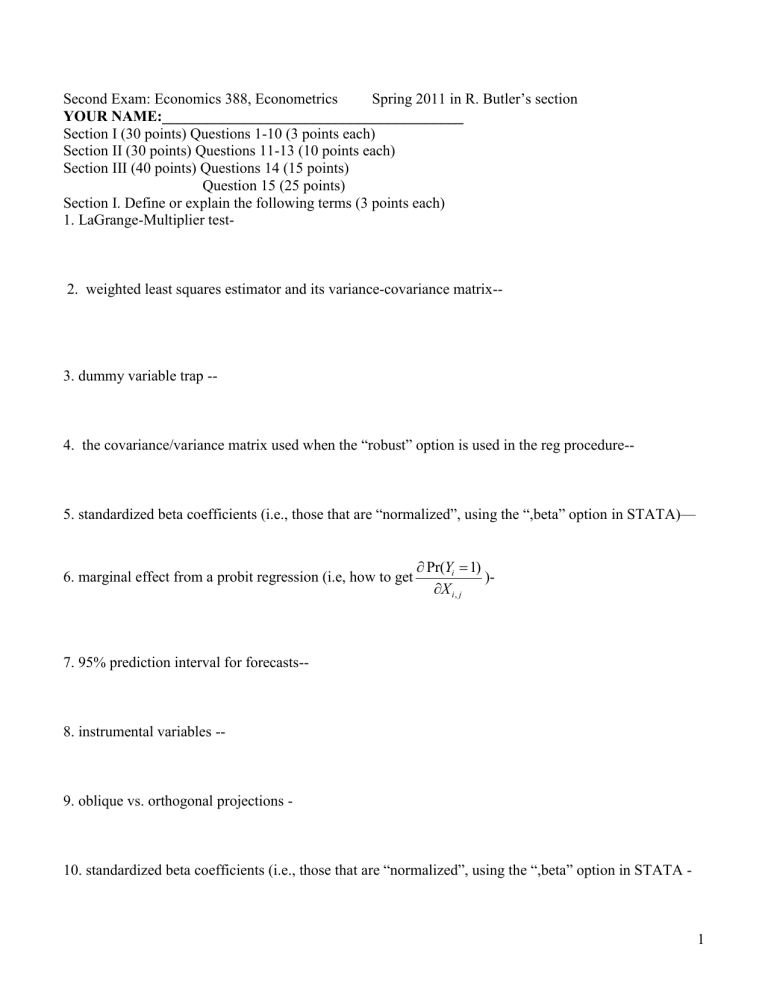

Second Exam: Economics 388, Econometrics Spring 2011 in R. Butler’s section

YOUR NAME:________________________________________

Section I (30 points) Questions 1-10 (3 points each)

Section II (30 points) Questions 11-13 (10 points each)

Section III (40 points) Question 14 (15 points), Question 15 (25 points)

Section I. Define or explain the following terms (3 points each)

1. Lagrange multiplier test --

2. weighted least squares estimator and its variance-covariance matrix--

3. dummy variable trap --

4. the covariance/variance matrix used when the “robust” option is used in the reg procedure--

5. standardized beta coefficients (i.e., those that are “normalized”, using the “,beta” option in STATA)—

6. marginal effect from a probit regression (i.e., how to get $\partial \Pr(Y_i = 1)/\partial X$) --

7. 95% prediction interval for forecasts--

8. instrumental variables --

9. oblique vs. orthogonal projections -

10. standardized beta coefficients (i.e., those that are “normalized”, using the “,beta” option in STATA) --


II. Some Fun Stuff:

11. True, False or Uncertain: you are graded on your explanation, not on whether you guessed T or F correctly. “The output below, from the regression of the squared residuals on experience and the square of experience (the original regression, from which the residuals were calculated, was professors’ salaries regressed on experience for 10 faculty randomly chosen from the FHSS college), indicates heteroskedasticity by the Goldfeld-Quandt test:

RESID_SQUARE = 2446043 + 3254402 experien - 93642 exper_sq

Predictor Coef StDev T P

Constant 2446043 42419907 0.06 0.956

experien 3254402 7404898 0.44 0.674

exper_sq -93642 260656 -0.36 0.730

S = 36042350 R-Sq = .041 R-Sq(adj) = 0.0%”
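One common way to read an auxiliary regression of squared residuals is through an n·R² (Lagrange multiplier type) statistic. The sketch below assumes that form, which is not necessarily the test named in the statement, and takes n = 10 and R-Sq = .041 from the output above. Treating the statistic as chi-square with two degrees of freedom (one per slope) is also an assumption of the sketch.

```python
import math

# Values read from the output above.
n = 10             # faculty in the sample
r_squared = 0.041  # R-Sq of the squared-residual regression

# LM-type statistic: n times the auxiliary R-squared.
lm_stat = n * r_squared

# Assumed reference distribution: chi-square with 2 degrees of freedom
# (one per slope: experien, exper_sq). For exactly 2 degrees of freedom the
# upper-tail probability has the closed form exp(-x / 2).
p_value = math.exp(-lm_stat / 2)

print(f"n*R^2 = {lm_stat:.2f}, p-value = {p_value:.3f}")
```

The closed-form tail probability holds only for two degrees of freedom; any other count would need a statistics library.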

12. True, False or Uncertain, “Height is an important omitted variable in my regression, but it is missing from the sample information (though it was originally available, but has since been lost). Fortunately, I know that all the individuals in the sample were arranged in ascending order by height (so that the individual with the smallest sample ID is the shortest, and the individual with the largest sample ID is the tallest) AND that height is uniformly distributed. Therefore, if I difference adjacent individuals in the sample and then run a regression (so that $y_i - y_{i-1}$ is regressed on $X_i - X_{i-1}$), I will get better estimates than if I just do the regression of $y_i$ on $X_i$; in particular, I avoid a heteroskedasticity problem due to omitted variables.”


13. Your company is considering adopting an assembly line schedule of four 10-hour days per week instead of five 8-hour days. Management is concerned about a potential loss in productivity due to fatigue if the company goes to longer shifts. So an experiment is planned, in which individuals will be randomly assigned to the 10-hour shift, and then the relationship between assembly time, y, and time since lunch, x, will be measured. Management’s concern is that initially after lunch, productivity will be relatively high (assembly times low) but that assembly time will increase as the workers tire. The following model is estimated given the experimental data (with the absolute values of the t-statistics given in parentheses):

$$\text{assembly time (in hours)} = \underset{(3.4)}{.5} - \underset{(4.5)}{.100}\,(\text{time since lunch}) + \underset{(2.9)}{.025}\,(\text{time since lunch})^2$$

R-square = 60 percent. What does this imply about the optimal length of a workday? In particular, if it takes an assembly rate of two per hour to break even, should they consider going to the 10-hour shifts?
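The arithmetic behind the fitted quadratic can be sketched as follows. This is only an illustration under two assumptions that the question does not state outright: that time since lunch is measured in hours, and that a rate of two per hour corresponds to an assembly time of 0.5 hours per unit. The names `assembly_time`, `fastest_at`, and `crossings` are invented for the sketch.

```python
import math

# Coefficients as reported in the question.
B0, B1, B2 = 0.5, -0.100, 0.025

def assembly_time(hours_since_lunch):
    """Fitted assembly time (hours per unit) from the estimated quadratic."""
    return B0 + B1 * hours_since_lunch + B2 * hours_since_lunch ** 2

# The quadratic opens upward (B2 > 0), so its vertex is where the
# fitted assembly time is lowest.
fastest_at = -B1 / (2 * B2)

# Assumption: two units per hour means at most 0.5 hours per unit.
break_even_time = 1 / 2

# Solve B2*x^2 + B1*x + (B0 - break_even_time) = 0 for the points where
# the fitted assembly time equals the break-even time.
a, b, c = B2, B1, B0 - break_even_time
root = math.sqrt(b * b - 4 * a * c)
crossings = sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])

print("lowest fitted time at x =", fastest_at, "->", assembly_time(fastest_at))
print("fitted time equals break-even time at x =", crossings)
```

Mapping these values of x onto shift lengths still depends on when lunch falls within the shift, which the sketch leaves open.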


14. We have nine observations in total, three for each of three college educated women (the first three observations are for Callie, each from a different year; the next three for Bella, and the last three for Lizzy, each from a different year). Regress their wage rates ($Y$) on three dummy variables (with no constant in the model), so that the matrix of independent variables (the $D$ matrix) looks like

$$D = \begin{pmatrix} 1 & 0 & 0 \\ 1 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 1 \\ 0 & 0 & 1 \end{pmatrix}$$

a) Then what do the predicted wages look like, that is, what is $P_D Y = D(D'D)^{-1}D'Y$?

b) What do the residuals look like in this model with just 9 observations, that is, what is $M_D Y = (I - P_D)Y$?
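The two projections can be checked numerically with a small sketch in plain Python. The wage values in `Y` are hypothetical, invented purely for illustration, and the helpers `transpose`, `matmul`, and `inverse` are written for this sketch rather than taken from any library.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def inverse(a):
    """Gauss-Jordan inverse with partial pivoting for a small square matrix."""
    n = len(a)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

# D from the question: one dummy column per woman, three rows each.
D = [[1, 0, 0]] * 3 + [[0, 1, 0]] * 3 + [[0, 0, 1]] * 3

# Hypothetical wages (not from the exam), one column vector of nine values.
Y = [[10.0], [12.0], [14.0], [20.0], [22.0], [24.0], [30.0], [33.0], [36.0]]

Dt = transpose(D)
P = matmul(matmul(D, inverse(matmul(Dt, D))), Dt)   # P_D = D (D'D)^{-1} D'
fitted = [row[0] for row in matmul(P, Y)]           # P_D Y
residuals = [y[0] - f for y, f in zip(Y, fitted)]   # M_D Y = (I - P_D) Y

print(fitted)
print(residuals)
```

Comparing `fitted` with each woman's three wages in `Y` is a quick way to see the pattern the question asks about.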


15. STATA Output and interpretation: In the FHSS college professors example (from lecture 1, and several times since then), I regress wages on years of teaching experience, plus a new “dummy” variable for the 11th observation, defined implicitly as follows (namely, -1 for the 11th observation, zero for everyone else):

input obs salary experience dummy;

1 45000 2 0;

2 60600 7 0;

3 70000 10 0;

4 85000 18 0;

5 50800 6 0;

6 64000 8 0;

7 62500 8 0;

8 87000 15 0;

9 92000 25 0;

10 89500 22 0;

11 0 12 -1;

end;

reg salary experience dummy;

estat vif;

estat hettest, rhs;

Source | SS df MS Number of obs = 11

-------------+------------------------------ F( 2, 8) = 117.47

Model | 6.8515e+09 2 3.4257e+09 Prob > F = 0.0000

Residual | 233299765 8 29162470.6 R-squared = 0.9671

-------------+------------------------------ Adj R-squared = 0.9588

Total | 7.0848e+09 10 708477636 Root MSE = 5400.2

------------------------------------------------------------------------------

salary | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

experience | 2128.714 238.9154 8.91 0.000 1577.774 2679.654

dummy | 70427.13 5663.858 12.43 0.000 57366.25 83488.01

_cons | 44882.56 3357.591 13.37 0.000 37139.94 52625.18

------------------------------------------------------------------------------

Variable | VIF 1/VIF

-------------+----------------------

dummy | 1.00 0.999982

experience | 1.00 0.999982

Breusch-Pagan / Cook-Weisberg test

chi2(2) = 0.79

Prob > chi2 = 0.6721

a) What do the “dummy” variable coefficient and its standard error in the regression indicate?

b) What do the VIF scores indicate?

c) What does the last chi-square test at the bottom of the output indicate?
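As a cross-check on the regression table, the fit can be reproduced outside Stata. The following is a minimal sketch in plain Python, not the Stata commands above: it solves the normal equations for the eleven data rows given in the question and compares the dummy coefficient with the fitted line evaluated at 12 years of experience. The helper `inverse` and all variable names are assumptions of the sketch.

```python
import math

# (experience, salary) as entered in the question; observation 11 is last.
rows = [(2, 45000), (7, 60600), (10, 70000), (18, 85000), (6, 50800),
        (8, 64000), (8, 62500), (15, 87000), (25, 92000), (22, 89500),
        (12, 0)]
# Regressors: constant, experience, dummy (-1 for observation 11, else 0).
X = [[1.0, float(x), -1.0 if i == 10 else 0.0] for i, (x, _) in enumerate(rows)]
y = [float(s) for _, s in rows]

def inverse(a):
    """Gauss-Jordan inverse with partial pivoting for a small square matrix."""
    n = len(a)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

k = 3
xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
xtx_inv = inverse(xtx)

# OLS coefficients: (X'X)^{-1} X'y.
beta = [sum(xtx_inv[i][j] * xty[j] for j in range(k)) for i in range(k)]
const, b_exp, b_dummy = beta

# Residuals, residual variance, and the dummy's conventional standard error.
resid = [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(X, y)]
s2 = sum(e * e for e in resid) / (len(y) - k)
se_dummy = math.sqrt(s2 * xtx_inv[2][2])

# Fitted salary at 12 years of experience, using constant and slope only.
fit_at_12 = const + 12 * b_exp

print(f"const={const:.2f} experience={b_exp:.3f} dummy={b_dummy:.2f}")
print(f"fit at 12 years={fit_at_12:.2f} se(dummy)={se_dummy:.3f}")
```

Setting `b_dummy` beside `fit_at_12`, and `resid[10]` beside zero, is one way to explore part a).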
