Emotion Recognition using Facial Expressions in Children using the NAO Robot

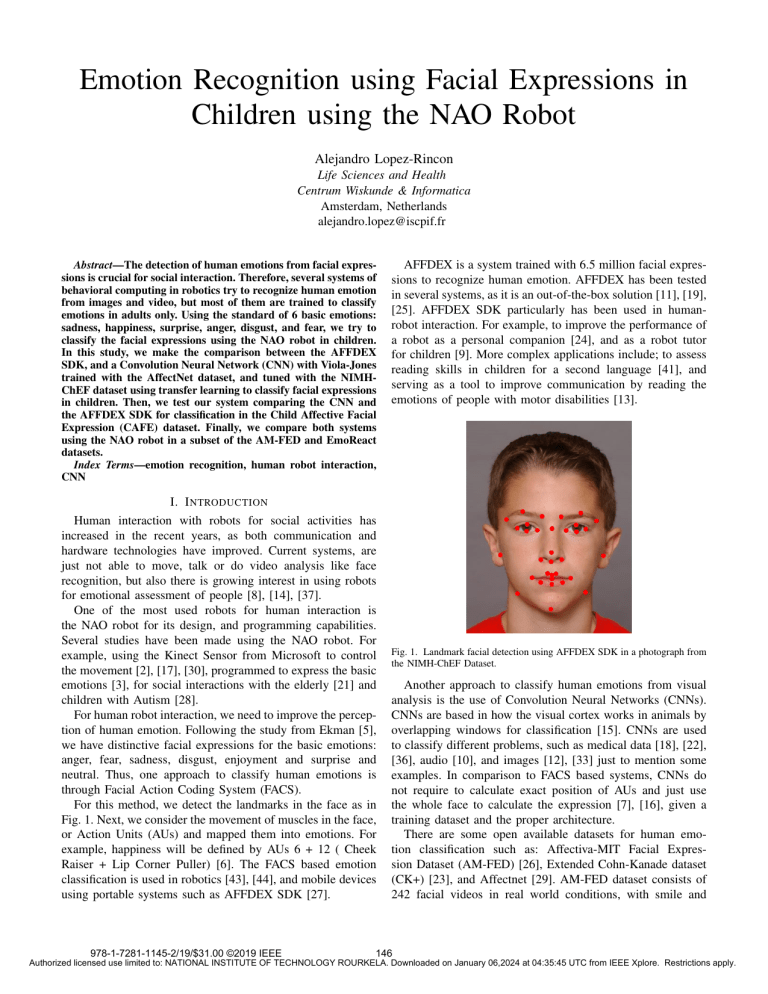

Alejandro Lopez-Rincon
Life Sciences and Health, Centrum Wiskunde & Informatica, Amsterdam, Netherlands
alejandro.lopez@iscpif.fr

Abstract: The detection of human emotions from facial expressions is crucial for social interaction. Several behavioral computing systems in robotics therefore try to recognize human emotion from images and video, but most of them are trained to classify emotions in adults only. Using the standard 6 basic emotions (sadness, happiness, surprise, anger, disgust, and fear), we try to classify the facial expressions of children using the NAO robot. In this study, we compare the AFFDEX SDK with a Convolutional Neural Network (CNN) with Viola-Jones face detection, trained with the AffectNet dataset and tuned with the NIMH-ChEF dataset using transfer learning. Then, we test our system by comparing the CNN and the AFFDEX SDK for classification on the Child Affective Facial Expression (CAFE) dataset. Finally, we compare both systems using the NAO robot on a subset of the AM-FED and EmoReact datasets.

Index Terms: emotion recognition, human robot interaction, CNN

I. INTRODUCTION

Human interaction with robots for social activities has increased in recent years, as both communication and hardware technologies have improved. Current systems are not just able to move, talk, or perform video analysis such as face recognition; there is also growing interest in using robots for the emotional assessment of people [8], [14], [37]. One of the most used robots for human interaction is the NAO robot, because of its design and programming capabilities. Several studies have been made using the NAO robot, for example using the Kinect sensor from Microsoft to control its movement [2], [17], [30], programming it to express the basic emotions [3], and using it for social interactions with the elderly [21] and with children with autism [28].
For human-robot interaction, we need to improve the perception of human emotion. Following the study by Ekman [5], there are distinctive facial expressions for the basic emotions (anger, fear, sadness, disgust, enjoyment, and surprise) and for neutral. One approach to classifying human emotions is therefore the Facial Action Coding System (FACS). In this method, we detect the landmarks in the face as in Fig. 1. Next, we consider the movements of the muscles in the face, or Action Units (AUs), and map them to emotions. For example, happiness is defined by AUs 6 + 12 (Cheek Raiser + Lip Corner Puller) [6]. FACS-based emotion classification is used in robotics [43], [44] and in mobile devices, using portable systems such as the AFFDEX SDK [27].

978-1-7281-1145-2/19/$31.00 ©2019 IEEE

AFFDEX is a system trained with 6.5 million facial expressions to recognize human emotion. AFFDEX has been tested in several systems, as it is an out-of-the-box solution [11], [19], [25]. The AFFDEX SDK in particular has been used in human-robot interaction, for example to improve the performance of a robot as a personal companion [24] and as a robot tutor for children [9]. More complex applications include assessing children's reading skills in a second language [41] and serving as a tool to improve communication by reading the emotions of people with motor disabilities [13].

Fig. 1. Landmark facial detection using AFFDEX SDK in a photograph from the NIMH-ChEF Dataset.

Another approach to classifying human emotions from visual analysis is the use of Convolutional Neural Networks (CNNs). CNNs are based on how the visual cortex works in animals, using overlapping windows for classification [15]. CNNs are used for different classification problems, such as medical data [18], [22], [36], audio [10], and images [12], [33], to mention a few examples.
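As a small illustration of the FACS-style mapping from Action Units to emotions described above, the following sketch checks which emotions have all of their required AUs active. Only the happiness rule (AUs 6 + 12) comes from the text; the table name `AU_RULES` and the function name are hypothetical, and a real FACS-based system such as AFFDEX uses far richer rules and intensities.

```python
# Rule table: emotion -> set of Action Units that must all be active.
# Only the happiness entry is taken from the text (AU 6 + AU 12);
# other emotions are deliberately left out rather than guessed.
AU_RULES = {
    "happiness": frozenset({6, 12}),  # Cheek Raiser + Lip Corner Puller
}


def emotions_from_action_units(active_aus):
    """Return every emotion whose required AUs are all present."""
    active = set(active_aus)
    return [emotion for emotion, required in AU_RULES.items()
            if required <= active]


if __name__ == "__main__":
    # AU 25 is an extra active unit that does not affect the happiness rule.
    print(emotions_from_action_units([6, 12, 25]))
    print(emotions_from_action_units([12]))
```

A set-inclusion test keeps the rule table declarative, so adding an emotion would only mean adding one entry.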
In comparison to FACS-based systems, CNNs do not need to calculate the exact position of AUs; they use the whole face to calculate the expression [7], [16], given a training dataset and a proper architecture. There are some openly available datasets for human emotion classification, such as the Affectiva-MIT Facial Expression Dataset (AM-FED) [26], the Extended Cohn-Kanade dataset (CK+) [23], and AffectNet [29]. The AM-FED dataset consists of 242 facial videos in real-world conditions, with smile and some FACS AU information. CK+ has 593 sequences from 123 subjects for the 6 basic emotions. The AffectNet dataset is made of images collected from the internet in normal conditions using 1250 emotion-related keywords, half labelled by humans and half by computer, for the 6 basic emotions plus contempt, uncertain, and no-face.

Most of the available datasets were created mostly with adults; therefore, the studies do not focus on children. Nevertheless, there are some datasets that focus on the analysis of children's facial expressions, such as the Child Affective Facial Expression (CAFE) dataset [20], the NIMH Child Emotional Faces Picture Set (NIMH-ChEF) [4], and EmoReact [31]. The CAFE dataset consists of 1192 photographs of children aged 2-8 years for the 6 basic expressions and neutral, with human classification. EmoReact is an expression dataset that consists of 1102 videos of children aged 4-14 years; it records whether Curiosity, Uncertainty, Excitement, Happiness, Surprise, Disgust, Fear, and Frustration are present in each video and gives a measure of valence. The NIMH-ChEF dataset has 534 photographs of fearful, angry, happy, sad, and neutral child facial expressions, with gaze directed at the camera and averted.

Authorized licensed use limited to: NATIONAL INSTITUTE OF TECHNOLOGY ROURKELA. Downloaded on January 06,2024 at 04:35:45 UTC from IEEE Xplore. Restrictions apply.
In this study, we compare a CNN with Viola-Jones face detection, trained with a subset of AffectNet and re-trained with the NIMH-ChEF dataset, against a FACS-based system using the AFFDEX SDK, for human emotion recognition based on facial expressions. We compare both systems by measuring the classification accuracy on the CAFE dataset. Then, we put the system into the NAO robot and use its video capture to detect smiles in individuals from 5 videos of the AM-FED dataset, and to process the reactions in 5 videos from the EmoReact dataset, in order to simulate emotion recognition in real time.

In our overall system, we map instructions from a controller to the control of the NAO robot, as explained in Fig. 2. Besides the movement instructions from the controller, we add the emotion recognition, and the reaction to it, to the system. To avoid race conditions in the robot, we send the instructions to the robot as in Fig. 3. In summary, we have three main subsystems: the movement and walking of the NAO robot, the emotion recognition using the NAO camera, and the reaction to the emotion.

II. METHODOLOGY

We programmed a light version of the NAOqi [35] library for .NET, to make it compatible with Visual Studio 2017, the AFFDEX SDK, and EMGUCV [39]. This light version includes the basic motion of the arms, hands, and head, walking, text-to-speech (TTS), and the video input of the NAO robot. The video input is configured to 320x240 color images with automatic head tracking. Next, for each frame, we run an analysis using AFFDEX or the CNN.

Fig. 2. Control of the NAO robot in the overall system.

A. NAOqi .NET lite version

As current versions of NAOqi are not Visual Studio compatible, or require specific versions of Python libraries to run, we developed a lite version of NAOqi to control the NAO robot. This NAOqi .NET lite version is able to interface with the AFFDEX SDK, Python 3.0, TensorFlow, Accord.NET [40], and EMGUCV under Visual Studio 2017 and Visual Studio 2015.
We tested our framework on NAO robot V4 and V5. For this purpose, we include the instructions for moving the following joints: LShoulderPitch, RShoulderPitch, LShoulderRoll, RShoulderRoll, LElbowYaw, RElbowYaw, LHand, RHand, HeadPitch, HeadYaw. In addition, we include the instructions for video frame calls, TTS, and moveTo.

Fig. 3. Clock signal of the NAO robot control.

B. CNN Architecture with Viola-Jones

The CNN we use has a 4-layer architecture with hyperparameters chosen by Bayesian optimization [38], trained with a subset of AffectNet. The subset of AffectNet consists of 283,901 faces for the 6 basic emotions and neutral, labeled by humans. The CNN architecture has 4 layers with a Softmax output, as in Fig. 4. The activation units are Rectified Linear Units (ReLUs).

Fig. 4. CNN structure with 3 convolution layers and fully connected layer with a Softmax for emotion recognition.

Fig. 6. Hyperparameter explanation in each of the CNN layers.

The input is a 100x100 greyscale image of a face detected using the Viola-Jones algorithm with Haar feature-based cascade classifiers [42] from the EMGUCV framework. The Viola-Jones algorithm is a machine learning technique based on detecting specific characteristics, such as edges, in an image. In a cascade classifier, the algorithm evaluates these characteristics in sequence to detect an object. In the case of face detection, the algorithm has to be trained using several positive images (with faces) and negative images (without faces). The EMGUCV library already includes pretrained face detection cascade classifiers. In a first stage, we used the haar cascade default classifier, but in order to improve the performance and the detection of faces, we added the haar cascade alt2 classifier.
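The text does not spell out how two cascade classifiers are combined, so the following is only a minimal sketch of one plausible scheme: try each detector in order and fall back to the next when no face is found. The detector functions `default_cascade` and `alt2_cascade` are hypothetical stand-ins that read precomputed boxes from a dictionary; with OpenCV's Python bindings each one could instead wrap a `cv2.CascadeClassifier` and its `detectMultiScale` method.

```python
from typing import Callable, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]               # (x, y, width, height)
Detector = Callable[[object], Sequence[Box]]  # image -> detected face boxes


def detect_face(image: object, detectors: Sequence[Detector]) -> Optional[Box]:
    """Return the largest face found by the first detector that succeeds.

    Assumption: detectors are tried in order (fallback), which is one
    possible reading of "using both classifiers", not the confirmed method.
    """
    for detector in detectors:
        boxes = list(detector(image))
        if boxes:
            # Keep the largest box, assuming it is the main subject.
            return max(boxes, key=lambda box: box[2] * box[3])
    return None


# Hypothetical stand-ins for the two pretrained cascades.
def default_cascade(image):
    return image.get("default", [])


def alt2_cascade(image):
    return image.get("alt2", [])


if __name__ == "__main__":
    # The first detector finds nothing, so the second one is used.
    fake_image = {"default": [], "alt2": [(0, 0, 20, 20), (10, 10, 50, 50)]}
    print(detect_face(fake_image, [default_cascade, alt2_cascade]))
```

The returned box would then be cropped, converted to greyscale, and resized to 100x100 before being passed to the CNN.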
Our system therefore uses both classifiers, as in Fig. 5. Finally, to improve our face detection even further, we use the cascade classifier available in the AFFDEX SDK in addition to the haar cascade alt2 classifier present in EMGUCV.

Fig. 5. CNN structure with 3 convolution layers and fully connected layer with a Softmax for emotion recognition, and 2 input cascade classifiers.

The first three layers of the CNN have the form shown in Fig. 6, where i is the number of the layer, v the size of the convolution window, w the number of output channels, and h the max pooling window size. There is an extra hyperparameter, w4, for the size of the fully connected layer. The training of the CNN is done using the TensorFlow library [1]. We chose these hyperparameters using Bayesian optimization, taking the best result after 65 architectures on a 115,864-face subset of AffectNet. The hyperparameters are the following: w1 = 255, w2 = 255, w3 = 255, w4 = 256, h1 = 4, h2 = 3, h3 = 2, v1 = 12, v2 = 3, v3 = 2.

On the 283,901 faces available in AffectNet, we tried different combinations of the cascade classifiers available in EMGUCV and the AFFDEX SDK. Then we divided the dataset into 95% training and 5% validation. The best combination uses the cascade classifier available in the AFFDEX SDK. Using this, we recognize 267,864 faces out of 283,901. Then, we divide this subset into 254,470 faces for training and 13,394 for validation. The system reaches 68.4% accuracy on the validation set. The reported accuracy on the AffectNet dataset using a ResNext neural network is 65%. The resulting confusion matrix for the validation set is in Table I.

TABLE I. CONFUSION MATRIX OF THE VALIDATION SET (13,394 FACES).

| | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Neutral | 2797 | 705 | 761 | 382 | 161 | 81 | 594 |
| Happy | 431 | 5597 | 137 | 130 | 36 | 38 | 98 |
| Sad | 45 | 40 | 188 | 16 | 21 | 6 | 27 |
| Surprise | 12 | 15 | 2 | 83 | 41 | 1 | 10 |
| Fear | 0 | 0 | 0 | 3 | 15 | 0 | 1 |
| Disgust | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Anger | 172 | 62 | 99 | 22 | 26 | 58 | 481 |

To improve the accuracy of our system on the CAFE dataset, we use the NIMH-ChEF database to fine-tune our pre-trained model. As the new dataset is small (534 faces) in comparison to the original (283,901 faces), we reduce our learning rate by a factor of 100 to avoid over-fitting, and retrain the system. This is known as weight tuning with transfer learning in Convolutional Neural Networks [32]. A summary of how we use the data is given in Fig. 7.

Fig. 7. Summary of the datasets used for training the CNN system.

To train the system, we assign a label to each of the basic emotions and neutral. The number of cases for each emotion in each dataset is given in Table II.

TABLE II. SUMMARY OF CASES FOR EACH EMOTION IN EACH DATASET.

| Emotion | Label | CAFE | Affectnet | NIMH-ChEF |
|---|---|---|---|---|
| Neutral | 0 | 230 | 70473 | 108 |
| Happy | 1 | 215 | 128064 | 104 |
| Sad | 2 | 108 | 23354 | 107 |
| Surprise | 3 | 103 | 13139 | 0 |
| Fear | 4 | 140 | 5861 | 104 |
| Disgust | 5 | 191 | 3573 | 0 |
| Anger | 6 | 205 | 23400 | 111 |
| Total | | 1192 | 267864 | 534 |

C. Emotion Recognition System

For our analysis, we have two possible systems, as explained in Fig. 8. The system requests a 320x240 frame from the camera in the robot. System 1 uses the AFFDEX SDK and system 2 uses the CNN to classify the emotions from the captured frames. The frame rate is 6.32 frames per second (fps) for the CNN and 3.38 fps for AFFDEX, under the same conditions on a computer with an Intel i7-7700HQ processor, 16.0 GB of RAM, and a 6.0 GB NVIDIA graphics card.

Fig. 8. Summary of the emotion recognition systems.

III. TESTS

A. Test 1

For this test, we compare the emotion recognition on the CAFE dataset. The dataset has 1,192 photos of children for the 6 basic emotions and neutral. We do not do any preprocessing to adjust the images. For AFFDEX, the considered emotion has to surpass the threshold value (threshold = 50) and be the maximum value among the emotions. In any other case, we consider the emotion to be neutral, as in the SDK examples. The results are shown in the confusion matrix in Table III.

TABLE III. CONFUSION MATRIX FOR THE AFFDEX SYSTEM IN THE CAFE DATASET.

| | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Neutral | 203 | 38 | 82 | 37 | 42 | 75 | 62 |
| Happy | 2 | 159 | 7 | 1 | 5 | 41 | 47 |
| Sad | 0 | 0 | 5 | 0 | 0 | 1 | 0 |
| Surprise | 20 | 11 | 1 | 59 | 90 | 2 | 1 |
| Fear | 4 | 0 | 1 | 0 | 2 | 1 | 1 |
| Disgust | 1 | 5 | 9 | 1 | 0 | 67 | 79 |
| Anger | 0 | 0 | 2 | 0 | 0 | 4 | 11 |

For the CNN case, we compare the classification using different cascade classifiers for the face detection: first, the default classifier in EMGUCV; next, 2 available classifiers in EMGUCV; and then the cascade classifier in the AFFDEX SDK. Finally, we retrain the CNN using the NIMH-ChEF dataset. The confusion matrix for the best system is in Table IV.

TABLE IV. CONFUSION MATRIX FOR THE CNN SYSTEM IN THE CAFE DATASET.

| | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Neutral | 173 | 2 | 49 | 4 | 38 | 12 | 8 |
| Happy | 20 | 196 | 14 | 25 | 42 | 50 | 71 |
| Sad | 3 | 12 | 16 | 4 | 6 | 22 | 18 |
| Surprise | 23 | 2 | 4 | 53 | 42 | 0 | 5 |
| Fear | 0 | 0 | 0 | 5 | 2 | 0 | 1 |
| Disgust | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Anger | 10 | 3 | 22 | 8 | 7 | 105 | 95 |

The results from the AFFDEX SDK and the CNN systems are summarized in Table V. The table also shows the number of faces detected in the CAFE dataset.

TABLE V. COMPARISON IN ACCURACY FOR THE CAFE DATASET. DF = DETECTED FACES IN THE TEST SET (CAFE), TRAIN SET (AFFECTNET), AND RETRAIN SET (NIMH CHILD).

| Classifier | Accuracy Test | DF Test | DF Train | DF Retrain |
|---|---|---|---|---|
| CNN-Viola-Jones | 0.396812 | 1105 | 115632 | |
| CNN-2-Viola-Jones | 0.407718 | 1147 | 255346 | |
| CNN-2-Viola-Jones Re-Trained | 0.425336 | 1147 | 255346 | 484 |
| CNN-AFFDEX Viola Jones | 0.43959731543 | 1172 | 267864 | |
| CNN-AFFDEX Viola Jones Re-Trained | 0.44882550335 | 1172 | 267864 | 534 |
| AFFDEX SDK | 0.42449664429 | 1179 | - | - |

As far as we know, the only available classification study on the CAFE dataset is the study by Roa [34]. In contrast to that study, we do not use the CAFE dataset as part of the training, only as the test set.

B. Test 2

In this test, we place the robot apart from the monitor, as in Fig. 9. The robot processes every frame using either the AFFDEX SDK or the CNN. The analysis we report is performed on what the robot sees through its camera, not on the video directly in the computer, to evaluate the quality of the system on the robot itself and under natural lighting conditions. The objective is to simulate measuring reactions in real time using the NAO robot.

Fig. 9. NAO robot watching the screen; the analysis is done through the visual capture of the robot.

We use 5 videos from the AM-FED dataset whose smile metric reaches a value close to 1.0. Next, we process each video with the AFFDEX SDK (Fig. 10) and the CNN (Fig. 11). For the comparison, we consider joy intensity as a measure of happiness. Although this is not a children-based dataset, we analyze it because, as far as we know, it is the only available open-source database that has a frame-by-frame analysis of one of the six basic emotions.

Fig. 10. Example of a processed image from the NIMH-ChEF dataset using AFFDEX SDK.

Fig. 11. Example of a processed image from the NIMH-ChEF dataset using a CNN.

Fig. 12. Comparison between the smile metrics from the AM-FED dataset id 09e3a5a1-824e-4623-813b-b61af2a59f7c, AFFDEX SDK, and the CNN measurements of joy.

Fig. 13. Comparison between the smile metrics from the AM-FED dataset id 2f88bbb8-51ef-42bf-82c7-e0f341df1d88, AFFDEX SDK, and the CNN measurements of joy.

The comparison of the different systems on the 5 videos is shown in Figs. 12-16. To assess the accuracy of the methods, we calculate the error between each system and the smile metrics (Table VI).
The error is given by

$$\text{error} = \frac{\sum (v_m - v_c)^2}{\#\text{frames}}, \qquad (1)$$

where $v_m$ is the smile metrics value and $v_c$ is the calculated value for joy.

Fig. 14. Comparison between the smile metrics from the AM-FED dataset id 3c06b2cd-62bb-4308-aefd-b79d9de748b7, AFFDEX SDK, and the CNN measurements of joy.

Fig. 15. Comparison between the smile metrics from the AM-FED dataset id 0d48e11a-2f87-4626-9c30-46a2e54ce58e, AFFDEX SDK, and the CNN measurements of joy.

Fig. 16. Comparison between the smile metrics from the AM-FED dataset id 1e7bf94c-02a5-48de-92bd-b0234354dbd5, AFFDEX SDK, and the CNN measurements of joy.

C. Test 3

In this test, we compare the classification of both systems on 5 videos from the EmoReact dataset. The EmoReact dataset differs from the AM-FED dataset in that it only gives a summary of the reactions in a video. The measurements in the EmoReact dataset are Curiosity, Uncertainty, Excitement, Happiness, Surprise, Disgust, Fear, Frustration, and Valence, with 1 or 0 indicating whether each emotion is present and a percentage for Valence. From this set, we consider only Happiness, Surprise, Disgust, Fear, and Frustration, as these are a subset of the basic emotions. As in Test 2, we separate the robot from the screen, and analyze the first 5 videos of the EmoReact test set frame by frame using AFFDEX and the CNN, as in Figs. 17 and 18. Then, we calculate the average of each of the 5 emotions over the whole video. Finally, we calculate the error between the given values and the calculated values. The calculated and given values are summarized in Table VII.

Fig. 17. Frame analysis of the EmoReact Dataset, from the NAO Robot using AFFDEX.

Fig. 18. Frame analysis of the EmoReact Dataset, from the NAO Robot using CNN.
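The two error measures used in Tests 2 and 3 can be sketched as follows. The first function is a direct reading of Eq. (1). The text does not state the formula for Test 3, so the second function is an assumption: a sum of absolute differences over the 5 emotions, chosen because it reproduces the CNN entry for Video 1 in Table VIII (0.718133) from the Video 1 values in Table VII. The function and variable names are illustrative only.

```python
def mean_squared_error(reference, measured):
    """Eq. (1): sum of squared per-frame differences over the frame count."""
    if len(reference) != len(measured) or len(reference) == 0:
        raise ValueError("both series must be non-empty and of equal length")
    squared = [(vm - vc) ** 2 for vm, vc in zip(reference, measured)]
    return sum(squared) / len(squared)


def summed_absolute_error(given, averaged):
    """Assumed Test 3 error: sum over emotions of |given - video average|."""
    return sum(abs(given[name] - averaged[name]) for name in given)


# Video 1, Table VII: labels given by EmoReact and per-video CNN averages.
given_video1 = {"happiness": 0, "surprise": 0, "disgust": 0,
                "fear": 0, "frustration": 0}
cnn_video1 = {"happiness": 0.622983, "surprise": 0.039917,
              "disgust": 0.011083, "fear": 0.007167,
              "frustration": 0.036983}

if __name__ == "__main__":
    print(round(summed_absolute_error(given_video1, cnn_video1), 6))
    # Toy smile-metric series (made-up numbers, not from AM-FED).
    print(mean_squared_error([1.0, 0.0], [0.5, 0.0]))
```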
TABLE VI. ERROR COMPARISON BETWEEN THE MEASUREMENT OF JOY OR HAPPINESS IN THE DIFFERENT SYSTEMS AND THE SMILE METRIC FROM THE AM-FED DATASET.

| | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 | Average |
|---|---|---|---|---|---|---|
| AFFDEX | 0.002728 | 0.363869 | 0.030868 | 0.072483 | 0.279876 | 0.149965 |
| CNN | 0.006761 | 0.180762 | 0.073629 | 0.219818 | 0.227063 | 0.141606 |

TABLE VII. RESULTS BY THE GIVEN VALUE AND THE MEASURED VALUE FOR HAPPINESS, SURPRISE, DISGUST, FEAR AND ANGER IN 5 VIDEOS OF THE EMOREACT DATASET.

| | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 |
|---|---|---|---|---|---|
| Happiness | 0 | 1 | 1 | 1 | 1 |
| CNN Joy | 0.622983 | 0.085684 | 0.669042 | 1 | 0.688294 |
| Affdex Joy | 0.45577 | 1.45E-05 | 0.162013 | 0.927167 | 0.436498 |
| Surprise | 0 | 0 | 0 | 0 | 0 |
| CNN Surprise | 0.039917 | 0.123789 | 0.030722 | 0 | 0.114235 |
| Affdex Surprise | 0.003736 | 0.052033 | 0.003821 | 0.013467 | 0.039121 |
| Disgust | 0 | 0 | 0 | 0 | 0 |
| CNN Disgust | 0.011083 | 0.016421 | 0.008528 | 0 | 0.021529 |
| Affdex Disgust | 0.006335 | 0.003793 | 0.002452 | 4.52E-05 | 0.000201 |
| Fear | 0 | 0 | 0 | 0 | 0 |
| CNN Fear | 0.007167 | 0.055684 | 0.007125 | 0 | 0.007824 |
| Affdex Fear | 2.97E-05 | 1.7E-05 | 0.013329 | 4.58E-08 | 6.15E-05 |
| Frustration | 0 | 0 | 0 | 0 | 0 |
| CNN Anger | 0.036983 | 0.076684 | 0.036903 | 0 | 0.013647 |
| Affdex Anger | 0.00028 | 0.000148 | 0.002232 | 4.8E-07 | 5.03E-05 |

Finally, we calculate the error for both systems, for each video (Table VIII).

TABLE VIII. ERROR COMPARISON BETWEEN AFFDEX AND THE CNN FOR 5 VIDEOS IN THE EMOREACT DATASET.

| | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 | Average |
|---|---|---|---|---|---|---|
| Affdex | 0.466151 | 1.055977 | 0.859821 | 0.086346 | 0.602936 | 0.614246 |
| CNN | 0.718133 | 1.186895 | 0.414236 | 0 | 0.468941 | 0.557641 |

IV. CONCLUSION AND FUTURE WORK

In this study, we compare a FACS-based system and a CNN with cascade classifiers for classifying emotion in children, on the CAFE dataset and using the NAO robot. From the results in Table V, the global emotion classification accuracy of the CNN is better than that of the AFFDEX system, but the face detection in AFFDEX is slightly better. The best classification was 535 correct out of 1192 faces for the CNN, detecting 1172 faces. In contrast, AFFDEX classifies 506 faces correctly and detects 1179 faces. Nevertheless, it is important to remember that the CNN-based system has room for improvement, as the number of faces used for training is only 3% of the number used for the FACS system. From the confusion matrices in Tables I and IV, we can see that the CNN system is not good at detecting disgust. One reason is that the AffectNet dataset has very few examples of disgust in comparison to the other classes.

In Test 2 and Test 3, the CNN results are slightly better than those of AFFDEX. This is probably because the CNN analysis is almost twice as fast as AFFDEX, at 6.32 fps and 3.38 fps respectively. The difference in the error is around 0.8359% for detecting joy in the AM-FED videos and 5.66% for the classification of reactions in EmoReact.

Both systems require that the individual look directly into the camera, but our .NET version of NAOqi allows us to easily add several cameras besides the camera in the NAO, or other inputs such as audio. Thus, future analysis can explore ensemble methods, using several cameras and inputs in real time. For example, if we combine both systems for Test 1, the accuracy increases to 46.05% (549 faces correct). To combine them, we first run the AFFDEX SDK; if it finds disgust, that is the output; in any other case, we run the CNN system. The confusion matrix for the ensemble system is in Table IX.

TABLE IX. CONFUSION MATRIX FOR ENSEMBLE SYSTEM IN TEST 1.

| | Neutral | Happy | Sad | Surprise | Fear | Disgust | Anger |
|---|---|---|---|---|---|---|---|
| Neutral | 174 | 2 | 51 | 7 | 40 | 12 | 9 |
| Happy | 19 | 193 | 15 | 25 | 42 | 42 | 58 |
| Sad | 3 | 11 | 13 | 4 | 6 | 18 | 9 |
| Surprise | 23 | 1 | 4 | 54 | 43 | 0 | 4 |
| Fear | 0 | 0 | 0 | 4 | 2 | 0 | 0 |
| Disgust | 1 | 5 | 9 | 1 | 0 | 67 | 79 |
| Anger | 10 | 3 | 16 | 8 | 7 | 52 | 46 |

Combining both systems increases the accuracy, but this system still needs to be tested on the NAO to see how the frame rate is affected. Thus, by improving the CNN system and using ensemble methods, we will be able to improve emotion recognition even further in the future.

ACKNOWLEDGMENT

This work is part of a project¹ (#15198) that is included in the research program Technology for Oncology, which is financed by the Netherlands Organization for Scientific Research (NWO), the Dutch Cancer Society (KWF), the TKI Life Sciences & Health, Asolutions, Brocacef, Cancer Health Coach, and Focal Meditech. The research consortium consists of the Centrum Wiskunde & Informatica, Delft University of Technology, the Academic Medical Center, and the Princess Maxima Center.

¹ Improving Childhood Cancer Care when Parents Cannot be There – Reducing Medical Traumatic Stress in Childhood Cancer Patients by Bonding with a Robot Companion.

ANNEX

Code available at: https://github.com/steppenwolf0/emotioNAO

REFERENCES

[1] Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al. Tensorflow: a system for large-scale machine learning. In OSDI (2016), vol. 16, pp. 265–283.
[2] Cheng, L., Sun, Q., Su, H., Cong, Y., and Zhao, S. Design and implementation of human-robot interactive demonstration system based on kinect. In Control and Decision Conference (CCDC), 2012 24th Chinese (2012), IEEE, pp. 971–975.
[3] Cohen, I., Looije, R., and Neerincx, M. A. Child's recognition of emotions in robot's face and body. In Proceedings of the 6th international conference on Human-robot interaction (2011), ACM, pp. 123–124.
[4] Egger, H. L., Pine, D. S., Nelson, E., Leibenluft, E., Ernst, M., Towbin, K. E., and Angold, A. The nimh child emotional faces picture set (nimh-chefs): a new set of children's facial emotion stimuli. International journal of methods in psychiatric research 20, 3 (2011), 145–156.
[5] Ekman, P. An argument for basic emotions. Cognition & emotion 6, 3-4 (1992), 169–200.
[6] Ekman, P., and Rosenberg, E. L. What the face reveals: Basic and applied studies of spontaneous expression using the Facial Action Coding System (FACS). Oxford University Press, USA, 1997.
[7] Fan, Y., Lu, X., Li, D., and Liu, Y. Video-based emotion recognition using cnn-rnn and c3d hybrid networks. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (2016), ACM, pp. 445–450.
[8] Ge, S. S., Samani, H. A., Ong, Y. H. J., and Hang, C. C. Active affective facial analysis for human-robot interaction. In Robot and Human Interactive Communication, 2008. RO-MAN 2008. The 17th IEEE International Symposium on (2008), IEEE, pp. 83–88.
[9] Gordon, G., Spaulding, S., Westlund, J. K., Lee, J. J., Plummer, L., Martinez, M., Das, M., and Breazeal, C. Affective personalization of a social robot tutor for children's second language skills. In AAAI (2016), pp. 3951–3957.
[10] Hershey, S., Chaudhuri, S., Ellis, D. P., Gemmeke, J. F., Jansen, A., Moore, R. C., Plakal, M., Platt, D., Saurous, R. A., Seybold, B., et al. Cnn architectures for large-scale audio classification. In Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on (2017), IEEE, pp. 131–135.
[11] Jaques, N., McDuff, D., Kim, Y. L., and Picard, R. Understanding and predicting bonding in conversations using thin slices of facial expressions and body language. In International Conference on Intelligent Virtual Agents (2016), Springer, pp. 64–74.
[12] Jmour, N., Zayen, S., and Abdelkrim, A. Convolutional neural networks for image classification. In 2018 International Conference on Advanced Systems and Electric Technologies (IC ASET) (2018), IEEE, pp. 397–402.
[13] Kashii, A., Takashio, K., and Tokuda, H. Ex-amp robot: Expressive robotic avatar with multimodal emotion detection to enhance communication of users with motor disabilities. In Robot and Human Interactive Communication (RO-MAN), 2017 26th IEEE International Symposium on (2017), IEEE, pp. 864–870.
[14] Kollar, T., Tellex, S., Roy, D., and Roy, N. Toward understanding natural language directions. In Proceedings of the 5th ACM/IEEE international conference on Human-robot interaction (2010), IEEE Press, pp. 259–266.
[15] LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 11 (1998), 2278–2324.
[16] Levi, G., and Hassner, T. Emotion recognition in the wild via convolutional neural networks and mapped binary patterns. In Proceedings of the 2015 ACM on international conference on multimodal interaction (2015), ACM, pp. 503–510.
[17] Li, C., Yang, C., Liang, P., Cangelosi, A., and Wan, J. Development of kinect based teleoperation of nao robot. In 2016 International Conference on Advanced Robotics and Mechatronics (ICARM) (2016), IEEE, pp. 133–138.
[18] Li, Q., Cai, W., Wang, X., Zhou, Y., Feng, D. D., and Chen, M. Medical image classification with convolutional neural network. In Control Automation Robotics & Vision (ICARCV), 2014 13th International Conference on (2014), IEEE, pp. 844–848.
[19] Liu, R., Salisbury, J. P., Vahabzadeh, A., and Sahin, N. T. Feasibility of an autism-focused augmented reality smartglasses system for social communication and behavioral coaching. Frontiers in pediatrics 5 (2017), 145.
[20] LoBue, V., and Thrasher, C. The child affective facial expression (cafe) set: validity and reliability from untrained adults. Frontiers in psychology 5 (2015), 1532.
[21] López Recio, D., Márquez Segura, E., Márquez Segura, L., and Waern, A. The nao models for the elderly. In Proceedings of the 8th ACM/IEEE international conference on Human-robot interaction (2013), IEEE Press, pp. 187–188.
[22] Lopez-Rincon, A., Tonda, A., Elati, M., Schwander, O., Piwowarski, B., and Gallinari, P. Evolutionary optimization of convolutional neural networks for cancer mirna biomarkers classification. Applied Soft Computing 65 (2018), 91–100.
[23] Lucey, P., Cohn, J. F., Kanade, T., Saragih, J., Ambadar, Z., and Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2010 IEEE Computer Society Conference on (2010), IEEE, pp. 94–101.
[24] Lui, J. H., Samani, H., and Tien, K.-Y. An affective mood booster robot based on emotional processing unit. In Automatic Control Conference (CACS), 2017 International (2017), IEEE, pp. 1–6.
[25] McDuff, D. Discovering facial expressions for states of amused, persuaded, informed, sentimental and inspired. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (2016), ACM, pp. 71–75.
[26] McDuff, D., Kaliouby, R., Senechal, T., Amr, M., Cohn, J., and Picard, R. Affectiva-mit facial expression dataset (am-fed): Naturalistic and spontaneous facial expressions collected. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (2013), pp. 881–888.
[27] McDuff, D., Mahmoud, A., Mavadati, M., Amr, M., Turcot, J., and Kaliouby, R. E. Affdex sdk: a cross-platform real-time multi-face expression recognition toolkit. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (2016), ACM, pp. 3723–3726.
[28] Miskam, M. A., Shamsuddin, S., Samat, M. R. A., Yussof, H., Ainudin, H. A., and Omar, A. R. Humanoid robot nao as a teaching tool of emotion recognition for children with autism using the android app. In 2014 International Symposium on Micro-NanoMechatronics and Human Science (MHS) (2014), IEEE, pp. 1–5.
[29] Mollahosseini, A., Hasani, B., and Mahoor, M. H. Affectnet: A database for facial expression, valence, and arousal computing in the wild. arXiv preprint arXiv:1708.03985 (2017).
[30] Mukherjee, S., Paramkusam, D., and Dwivedy, S. K. Inverse kinematics of a nao humanoid robot using kinect to track and imitate human motion. In Robotics, Automation, Control and Embedded Systems (RACE), 2015 International Conference on (2015), IEEE, pp. 1–7.
[31] Nojavanasghari, B., Baltrušaitis, T., Hughes, C. E., and Morency, L.-P. Emoreact: a multimodal approach and dataset for recognizing emotional responses in children. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (2016), ACM, pp. 137–144.
[32] Oquab, M., Bottou, L., Laptev, I., and Sivic, J. Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (2014), pp. 1717–1724.
[33] Rawat, W., and Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural computation 29, 9 (2017), 2352–2449.
[34] Roa Barco, L. Analysis of facial expressions in children: Experiments based on the db child affective facial expression (cafe).
[35] Robotics, A. Naoqi framework. Consulted in http://doc.aldebaran.com/1-14/dev/naoqi/index.html. Google Scholar.
[36] Sahiner, B., Chan, H.-P., Petrick, N., Wei, D., Helvie, M. A., Adler, D. D., and Goodsitt, M. M. Classification of mass and normal breast tissue: a convolution neural network classifier with spatial domain and texture images. IEEE transactions on Medical Imaging 15, 5 (1996), 598–610.
[37] Schaaff, K., and Schultz, T. Towards an eeg-based emotion recognizer for humanoid robots. In Robot and Human Interactive Communication, 2009. RO-MAN 2009. The 18th IEEE International Symposium on (2009), IEEE, pp. 792–796.
[38] Shahriari, B., Swersky, K., Wang, Z., Adams, R. P., and De Freitas, N. Taking the human out of the loop: A review of bayesian optimization. Proceedings of the IEEE 104, 1 (2016), 148–175.
[39] Shi, S. Emgu CV Essentials. Packt Publishing Ltd, 2013.
[40] Souza, C. R. The accord .net framework. São Carlos, Brazil: http://accord-framework.net (2014).
[41] Spaulding, S., Gordon, G., and Breazeal, C. Affect-aware student models for robot tutors. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems (2016), International Foundation for Autonomous Agents and Multiagent Systems, pp. 864–872.
[42] Viola, P., and Jones, M. Rapid object detection using a boosted cascade of simple features. In Computer Vision and Pattern Recognition, 2001. CVPR 2001. Proceedings of the 2001 IEEE Computer Society Conference on (2001), vol. 1, IEEE, pp. I–I.
[43] Zhang, L., Jiang, M., Farid, D., and Hossain, M. A. Intelligent facial emotion recognition and semantic-based topic detection for a humanoid robot. Expert Systems with Applications 40, 13 (2013), 5160–5168.
[44] Zhang, L., Mistry, K., Jiang, M., Neoh, S. C., and Hossain, M. A. Adaptive facial point detection and emotion recognition for a humanoid robot. Computer Vision and Image Understanding 140 (2015), 93–114.