International Journal of Human–Computer Interaction

ISSN: (Print) (Online) Journal homepage: https://www.tandfonline.com/loi/hihc20

An Improved Human-Computer Interaction Content Recommendation Method Based on Knowledge Graph

Zhu He

To cite this article: Zhu He (22 Dec 2023): An Improved Human-Computer Interaction Content Recommendation Method Based on Knowledge Graph, International Journal of Human–Computer Interaction, DOI: 10.1080/10447318.2023.2295734

To link to this article: https://doi.org/10.1080/10447318.2023.2295734

Published online: 22 Dec 2023.



Zhu He

Global Business School, UCSI University, Kuala Lumpur, Malaysia

ABSTRACT

Since human-to-human communication is contentful and coherent, people naturally hope that a computer’s replies during human-computer interaction (HCI) will be contentful and coherent as well. How to enable computers to recognize, understand, and generate high-quality, coherent responses like humans has drawn a great deal of interest from both industry and academia. To address the lack of robot background knowledge and the low consistency of responses in current open-domain HCI systems, we present a content recommendation method based on a knowledge graph ripple network. To realize an HCI system with better content and stronger coherence, the model simulates the real process of human-to-human communication. First, the emotional friendliness of HCI is obtained by calculating the emotional confidence level together with the emotional evaluation value of HCI. After that, an external knowledge graph is introduced as the robot’s background knowledge, and the dialogue entities are embedded in the ripple network of the knowledge graph to access the content of entities that may be of interest to participants. Lastly, the robot’s reply is produced by comprehensively considering the content and the emotional friendliness. The experimental results indicate that, in comparison with baseline modeling approaches such as MECs models, robots with emotional measures and background knowledge can effectively enhance their emotional consistency and friendliness when performing HCI.

KEYWORDS

Human-computer interaction; knowledge graph; ripple network; deep learning

1. Introduction

The evolution of wireless communication technologies and the spread of social networks have driven transformations in the field of human-computer interaction (HCI). Every change in HCI sets off a wave of changes in the information industry, which in turn promotes the development of the communications industry. Human-computer interaction technology is committed to dissolving the boundaries of dialogue between interactive systems and achieving smooth and natural information exchange between humans and machines. It has progressed from the initial command-line interaction to traditional graphical interface interaction, and then to various forms of voice control interaction (Shackel, 1997). Nowadays, innovation in the aesthetics of interactive interfaces alone no longer meets people’s needs. During the interaction process, participants hope for more convenient interaction methods that better match their habits (Ren et al., 2023). In the era of mobile Internet, human-computer interaction is gradually changing from computers passively accepting, processing, and replying to information to actively understanding, processing, and replying to information, further improving the user’s interactive experience.

CONTACT Zhu He, julylitbri@outlook.com

© 2023 Taylor & Francis Group, LLC

With the emergence and popularization of various human-computer interaction systems, the barriers between humans and machines, data, and information have been broken down. Human-computer interaction is developing in the direction of natural human conversation, towards more intelligent and natural interaction. Conventional passive interaction logic is very simple: the user gives instructions to the machine, and the machine executes them and returns the results to the user. While the entire process is straightforward and efficient, it is neither natural nor intelligent. Truly intelligent and natural interaction is achieved when the machine actively provides services to users through perception and prediction. Human-computer interaction systems are therefore developing from passively accepting user information to actively understanding user intentions. This change in interaction methods will enable machines to understand user intentions or emotions more naturally and accurately in order to provide services for users. Such a change requires intelligent robots to have stronger emotional perception and intelligent understanding capabilities. To achieve this goal, more and more researchers in academia and industry have launched studies on robot cognitive and emotional computing (Zhou and Wang, 2017), robot personalization (Irfan et al., 2019), robot anthropomorphism (Spatola et al., 2019), robot intelligent dialogue (Jokinen, 2018), and related topics.

This article focuses on knowledge graph-based content recommendation techniques for HCI. The proposed model replicates the real human-to-human communication process in an open-domain interactive system. First, the knowledge graph is taken to represent the robot’s background knowledge. Second, the ripple network is employed to imitate the awakening of locally relevant background information that occurs during human-to-human contact. The resulting knowledge graph-based HCI content recommendation offers a useful exploration towards implementing more natural and intelligent HCI systems in both closed and open domains.

2. Related work

Giving robots capabilities similar to those of the human brain is the eventual goal of HCI, and it is a long and arduous task. Since 1950, academia and industry have widely adopted the ideas proposed by Turing in “Computing Machinery and Intelligence” and used human-machine dialogue to test the intelligence level of robots. Usually, humans perceive the intelligence or emotional intelligence level of a robot through the content of its replies during human-computer interaction. Human-computer interaction systems are divided into task-driven closed-domain dialogue systems and open-domain dialogue systems without specific tasks. In industry, the next generation of human-computer interaction mainly takes the form of dialogue systems, and many technology companies have invested in research and development and have released products with commercial value (Adamopoulou and Moussiades, 2020; Hoy, 2018). We analyze the current research status of robot content recommendation in open-domain and closed-domain interactive systems in turn.

2.1. Research status of robot content recommendation in open domain

The human-computer interaction system in the open domain has two distinctive characteristics. First, participants can input any content of interest, and there are no clear rules for the robot’s replies; the reply content only needs to take into account the participants’ emotions and the relevance of the content. Second, the robot, which plays the role of a companion in the human-computer interaction process, lacks background knowledge, so its replies are not very relevant. Many researchers have studied robot content recommendation in open-domain human-computer interaction systems. Liu et al. (2017) argued that changes in participants’ expressions generate external emotional stimuli for the robot and presented a continuous cognitive emotion regulation model that can endow the robot with certain emotional cognitive capabilities. To realize intelligent emotional expression, Rodríguez et al. (2016) presented an emotion generation framework that allows robots to generate the same emotional state as the participants, which can build emotional trust between both parties during the dialogue. Jokinen and Wilcock (2013) mapped real-life emotional states into the three-dimensional PAD space, where the value on each axis is given by a psychological attribute. The PAD emotional space model is applied to express the various continuous emotional states of a robot.

To fulfill the emotional demands of participants during HCI, robots are gradually acquiring the emotional intelligence to be “sad when you are sad, and happy when you are happy”. Zhou et al. (2018) proposed an Emotional Chatting Machine (ECM). They quantified distinct human emotions and introduced them into a generative dialogue system so that the robot can not only extract emotions from the participants’ dialogue content but also respond empathetically in an emotionally consistent direction. The purpose of this research is to enable robots to respond to participants’ emotions. Adamopoulou and Moussiades (2020) improved the sequence-to-sequence model to handle emotional issues in dialogue. First, three-dimensional word vectors carrying emotional information are introduced. Second, for better results, the emotional dissonance loss function is minimized and the emotional content is maximized. Finally, emotional factors are considered comprehensively so that the decoded content can express a variety of different emotional responses. This series of improvements is designed to enable emotionally diverse replies to be decoded from the candidate replies. Liu et al. (2017) presented an emotion regulation model based on guided cognitive reappraisal under continuous external emotional stimulation. This model is able to achieve positive emotional responses from the robot. Even when participants are in a state of weak emotional involvement, they can still respond positively to the reply, which improves the participants’ positive emotional tendencies.

To achieve contextual coherence and natural content connection during human-computer interaction, robots are gradually being endowed with external knowledge. Lowe et al. (2015) first select unstructured external text knowledge relevant to the conversation context with TF-IDF (term frequency–inverse document frequency) and then use a recurrent neural network (RNN) encoder to represent the knowledge. Lastly, the candidate responses are scored according to the external knowledge and contextual semantics to ensure the accuracy of the robot’s responses. Young et al. (2018) proposed storing a common-sense knowledge graph in an external memory module. The authors encode queries, responses, and common sense through the Tri-LSTM (Triggered Long Short-Term Memory) model. They then combine the relevant common sense with a retrieval dialogue model to obtain more precise responses from the robot, addressing the lack of background common sense in HCI.

Yang et al. (2018) presented a learning framework based on deep neural matching networks. Utilizing question-and-answer pairs and pseudo-relevance feedback, the authors score the response set of a retrieval dialogue system based on external knowledge. This has the advantage of incorporating outside information into the deep neural network, which enhances contextual coherence in HCI.

2.2. Research status of robot content recommendation in closed domain

Human-computer interaction systems in closed domains have two distinguishing features. First, the participants’ input is limited to a particular domain, and the robot’s reply content is limited to a specific field. Replies in specific fields often require standardized answers. Second, robots usually play the role of facilitator in the interaction process, in which the relevance of the robot’s interaction context is essential. The major goal of a closed-domain dialogue system is to guide participants to complete their demands in a specific field while making them feel that the communication is similar to human communication and that the process is natural and smooth. Closed-domain task-based dialogue model frameworks are divided into two major categories: frameworks based on a pipeline model and frameworks based on an end-to-end model.

In frameworks based on the pipeline model, intent recognition has always been a research focus. Jeong and Lee (2008) suggested a joint probabilistic model, the triangular-chain conditional random field. The model represents meta-sequence labels and sequence labels jointly in a single graph structure, which explicitly encodes the association between them while retaining the uncertainty between the sequences. By extending the linear-chain conditional random field into a triangular-chain probabilistic model, it enables efficient inference and evaluation methods.

Xu and Sarikaya (2013) suggested a neural network version of the triangular conditional random field model. In this model, intent tags and slot sequences are jointly modeled using their correlation, and their features are automatically extracted through a convolutional neural network layer and shared by the intent model, achieving clearer intent discovery. Guo et al. (2014) implemented a task-oriented dialogue system that completes various online shopping-related tasks through natural language dialogue. The authors established a task-based dialogue system for e-commerce using natural language processing technology and data resources, and it performed well in practical applications. Yan et al. (2017) implemented generalization via continuous-space representations and applied arithmetic operations to these representations to realize compositionality, including feedforward and recurrent neural network language models. This model provides an elegant mechanism for merging discrete syntactic structures with the continuous-space representations of phrases and words into a powerful compositional model that provides additional feature information for intent detection.

In the last few years, with the boom in social networks and deep learning, end-to-end closed-domain dialogue systems have developed rapidly. Liu and Lane (2017) proposed a deep reinforcement learning framework for optimizing end-to-end task-oriented dialogue systems. The dialogue agent and the user simulator are jointly optimized through deep reinforcement learning. The authors simulate the dialogue between the two agents and implement a reliable user simulator that achieves good results in a movie ticket booking scenario. Wen et al. (2016) proposed a goal-oriented trainable dialogue method, built on a new pipeline framework with neural network text input and end-to-end text output, together with a new approach to collecting dialogue data. The authors construct a dialogue system that achieves good results in restaurant search applications. Su et al. (2016) presented an active learning Gaussian process model. The Gaussian process operates on continuous-space dialogue representations, which are produced in an unsupervised way using a recurrent neural network encoder-decoder. This framework can greatly decrease user feedback noise and the cost of data annotation in dialogue policy learning.

While the aforementioned studies do, to some extent, take into account the two primary factors of external knowledge and emotional state during HCI, some consider only the impact of a single interaction round and neglect the coherence of the context; others consider only the robot’s reaction to the emotional state in the context, or only the effect of contextually relevant external knowledge.

To address the lack of background knowledge and the low coherence of responses in current HCI models, we propose an HCI model based on the ripple network of a knowledge graph, with the goal of enhancing the emotional friendliness of the robot during the HCI process. We evaluate the emotional friendliness of the interaction with the participants and replicate the process of background knowledge awakening during a human-to-human conversation by introducing an external knowledge graph as the robot’s background knowledge. According to the experimental data, robots with emotional measurements and background knowledge can clearly raise their emotional friendliness and coherence during HCI compared with baseline model techniques such as MECs models.

3. Content recommendation method based on knowledge graph ripple network

Human experiences of learning, life, and work are stored in the brain as associated memories, and these memories can be viewed as an individual’s background knowledge (Richmond and Nelson, 2009). Communication among people can be seen as a process in which background knowledge is constantly awakened. During communication, one party’s strong emotional desire to communicate will usually keep the conversation going. Correspondingly, during human-computer interaction, humans naturally hope to communicate with a knowledgeable and emotional robot. How to enable robots to use background knowledge to achieve human-like emotional communication during HCI is the focus of many scholars. The model proposed in this section simulates an interaction process between people that considers both content and emotion. The ripple network of the knowledge graph enables the discovery of content that may be of interest to participants, and the emotional friendliness of HCI captures the emotional process of the participants.

2.

basis of this. An overall raising tendency in the partici­

pants’ emotional states during the interaction process is

indicative of a healthy interactive relationship.

Assessment of the content connection between HCI:

People communicate with one other through a process

of ongoing background knowledge awakening, and the

contents of communications are correlated. We subse­

quently employ this information to perform a correl­

ation analysis on the content of the human-computer

dialogue and identify any potentially fascinating partici­

pant content on the knowledge network.

3.1. Problem description

When two engaging individuals converse on a specific prob­

lem in an open domain, the topic’s substance will progres­

sively expand as the conversation goes on, and both parties’

prior knowledge will gradually come to light. The party with

prior knowledge of the interaction process is referred to as

the participant in the present HCI system. When carrying

out multiple rounds of dialogue, the participants’ willingness

to participate will be substantially diminished through the

dialogue system, which will eventually happen in the end of

the dialogue, if the system fails to present new content or if

the content introduced is not relevant enough or is not

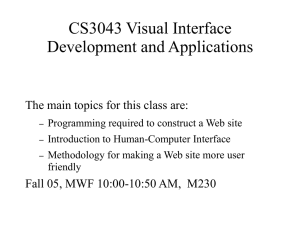

emotionally expected by the participants. In Figure 1, the

process of HCI is displayed. The participants in robot and

HCI are represented by R and H, respectively.

3.1.1. Problem formalization

From Figure 1, it can be observed that the participant’s

k

input in the k-th interaction system is CH

: Supposing that

the friendliness of the emotional interaction between the

robot and the participant is R(k), what requires to be

gotten is the content CRk of the robot replies to the partici­

pant in the k-th conversation. Its concrete mathematical

expression is:

�

�

k

f : RðkÞ, G, CH

! CRk

(1)

3.1.2. Emotional friendliness of HCI

HCI is an ongoing process. The current emotional state is

not only associated with the content of the current interactive

dialog, but also with the content of the historical interactive

dialog. The update function that defines the human-computer

interaction emotional friendliness R(k) based on the current

and historical interactive session content is:

RðkÞ ¼ minð1, maxðRðk − 1Þ þ WðkÞ � CðkÞ, 0ÞÞ

(2)

Among them, R(k) is the emotional friendliness of the

kth human-computer interaction, and the range of value is

[0,1]. The lower the value, the poorer the emotional inter­

action state. Conversely, the larger the value, the better the

emotional interaction state. The initial value of R(0) is 0.5,

which suggests that the HCI association is indeterminate.

W(k) is the emotional evaluation value of the interaction

input, with an initial value of 0 and a range of [−1]. When

their values were positive and plural, the participants’ emo­

tional states were positive and negative, respectively. With

an initial value of 0, C(k) is the emotional confidence of

HCI and denotes the reinforcement impact of persistently

negative or positive feelings. In other words, both the emo­

tional certainty and the value of C(k) rise when the two

conversations have the same emotional tendency. The degree

of confidence declines when the two conversation’s emotions

diverge. We will define C(k) and W(k) in detail below.

Input and output:

Knowledge graph G is being introduced when the system

is initialized. During the interaction, the input of the partici­

k

, and the

pant is the content of the k-th conversation CH

output of the system is the content of the k-th robot’s

reply CRk :

Considerations:

3.1.2.1. Human-computer interaction emotion assessment.

Interactive input emotions were quantified into vectors with

numerical values according to the literature (Park et al.,

2011) in order to more accurately quantify and compute

emotions. The six fundamental emotion states that make up

the PAD emotion space’s emotion vector are happiness, dis­

gust, surprise, sadness, fear, and anger. Its specific definition

is as follows:

1.

hl ¼ ðEp − El ÞCl ðEp − El ÞT l ¼ 1, 2, 3, 4, 5, 6

(3)

�

�

Among them, Ep ¼ pp , ap , dp represents the emotion of

interactive input, that is, combining the PAD emotion

model in psychology to analyze the semantic sense to obtain

the input emotion. The values of l are 1, 2, 3, 4, 5, and 6,

respectively representing the six emotions of happiness, dis­

gust, surprise, sadness, fear, and anger. El represents the

coordinate set of fundamental emotional states in PAD

space, which is used as a standard emotion measurement

vector. Cl represents the set of covariance matrices of

Assessment of emotional connection in HCI: HCI is an

ongoing process of interaction, and the participants’

emotional attachment to the material influences the dia­

logue’s continuity. We first assess the human-computer

dialogue’s friendliness of emotional interaction on the

Figure 1. Diagram of content input and output during HCI.

INTERNATIONAL JOURNAL OF HUMAN–COMPUTER INTERACTION

underlying emotional states in the PAD space. Later, hl

denotes the separation between the basic emotion El and

interactive input emotion Ep acquired under Cl constraint.

This distance corresponds to 6 emotional states.

The interactive input sentiment evaluation function was

defined as P(Ep), and normalize its corresponding emotion

intensity l, as shown in Equation (4). To make sure that the

formula makes sense, we especially define hl not to be zero,

which is expressed as follows by Equation (5).

$$
\begin{cases}
p_l = \dfrac{1/h_l}{\sum_{k=1}^{6} 1/h_k}, & h_l \neq 0 \\[2ex]
p_l = 1,\ \sum_{i=1}^{l-1} p_i = \sum_{i=l+1}^{6} p_i = 0, & h_l = 0
\end{cases}
\tag{4}
$$

$$P(E_p) = [p_1, p_2, p_3, p_4, p_5, p_6] \tag{5}$$

For the k-th conversation, the emotion evaluation of HCI can be defined as follows:

$$W(k) = p_1 + 0.6\,p_2 + 0.2\,p_3 - 0.2\,p_4 - 0.6\,p_5 - p_6 \tag{6}$$

The range of the emotional evaluation value W(k) is [−1, 1]. A positive value means that the emotional state of the participant is positive; conversely, a negative value means that the emotional state is negative.
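The chain from Equation (3) to Equation (6) can be sketched in a few lines of Python. This is only an illustrative sketch: the PAD anchor coordinates in `ANCHORS`, the identity matrices used for $C_l$, and all function names are assumptions introduced here, since the text does not list the actual coordinates or covariance matrices.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]

# Weights of Equation (6), in the order happiness, disgust, surprise,
# sadness, fear, anger.
EQ6_WEIGHTS = (1.0, 0.6, 0.2, -0.2, -0.6, -1.0)

# Hypothetical PAD anchors E_l and covariance matrix C_l (placeholders only;
# the real values are not given in the text).
ANCHORS = [
    (0.8, 0.5, 0.4),     # happiness
    (-0.6, 0.3, 0.2),    # disgust
    (0.3, 0.8, -0.1),    # surprise
    (-0.7, -0.4, -0.5),  # sadness
    (-0.6, 0.7, -0.6),   # fear
    (-0.5, 0.6, 0.5),    # anger
]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def quadratic_distance(e_p: Vector, e_l: Vector, c_l: Matrix) -> float:
    """Equation (3): h_l = (E_p - E_l) C_l (E_p - E_l)^T."""
    diff = [a - b for a, b in zip(e_p, e_l)]
    size = len(diff)
    # Row vector times matrix, then dot product with the difference vector.
    row = [sum(diff[i] * c_l[i][j] for i in range(size)) for j in range(size)]
    return sum(r * d for r, d in zip(row, diff))


def normalize_intensities(h: Sequence[float]) -> List[float]:
    """Equation (4): inverse-distance weights; h_l = 0 is handled separately."""
    for index, value in enumerate(h):
        if value == 0:
            # The input coincides with anchor l: p_l = 1, all others 0.
            return [1.0 if i == index else 0.0 for i in range(len(h))]
    inverse = [1.0 / value for value in h]
    total = sum(inverse)
    return [value / total for value in inverse]


def emotion_evaluation(p: Sequence[float]) -> float:
    """Equation (6): weighted sum of the six normalized intensities."""
    return sum(w * x for w, x in zip(EQ6_WEIGHTS, p))


def evaluate_input(e_p: Vector) -> Tuple[List[float], float]:
    """Return P(E_p) from Equation (5) and W(k) from Equation (6)."""
    h = [quadratic_distance(e_p, e_l, IDENTITY) for e_l in ANCHORS]
    p = normalize_intensities(h)
    return p, emotion_evaluation(p)
```

Calling `evaluate_input` with a PAD triple returns the six normalized intensities and the resulting evaluation value. With identity matrices, $h_l$ reduces to a squared Euclidean distance, so an input that sits exactly on one anchor receives intensity 1 for that emotion.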

3.1.2.2. Emotional confidence in human-computer interaction. Emotional confidence represents the influence of previous emotional states on subsequent emotional states. When the same emotion appears continuously, that emotion is strengthened. The dynamic change of C(k) is defined as follows.

$$
C(k) =
\begin{cases}
\min\left(1,\ \max\left(0,\ C(k-1) + 1 - \dfrac{\left|W(k) - W(k-1)\right|}{2}\right)\right), & W(k)\,W(k-1) \geq 0 \\[2ex]
\min\left(1,\ \max\left(0,\ C(k-1) - \dfrac{\left|W(k) - W(k-1)\right|}{2}\right)\right), & W(k)\,W(k-1) < 0
\end{cases}
\tag{7}
$$

The interactive emotional confidence C(k) is closely related to the interactive emotional evaluation value W(k). Continuous positive emotional evaluation values reinforce emotional confidence and make the human-computer interaction state more positive. Continuous negative emotional evaluation values likewise reinforce emotional confidence, which strengthens the negativity of the entire interaction state. This evaluation of emotional confidence is therefore closer to the psychological process of genuine interpersonal communication.
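As a minimal sketch, the two update rules in Equations (2) and (7) can be run round by round as below. The function names and the helper `run_dialogue` are assumptions introduced for illustration; the starting values R(0) = 0.5, C(0) = 0, and W(0) = 0 follow the initial values stated in the text.

```python
from typing import Iterable, List, Tuple


def clamp01(value: float) -> float:
    """Clip a value to the interval [0, 1]."""
    return min(1.0, max(0.0, value))


def update_confidence(c_prev: float, w_now: float, w_prev: float) -> float:
    """Equation (7): reinforce C(k) when consecutive evaluations agree in sign."""
    gap = abs(w_now - w_prev) / 2
    if w_now * w_prev >= 0:
        return clamp01(c_prev + 1 - gap)
    return clamp01(c_prev - gap)


def update_friendliness(r_prev: float, w_now: float, c_now: float) -> float:
    """Equation (2): R(k) = min(1, max(R(k-1) + W(k) * C(k), 0))."""
    return clamp01(r_prev + w_now * c_now)


def run_dialogue(
    w_values: Iterable[float], r0: float = 0.5, c0: float = 0.0, w0: float = 0.0
) -> List[Tuple[float, float]]:
    """Return (C(k), R(k)) for each round, given the evaluation values W(k)."""
    r, c, w_prev = r0, c0, w0
    history = []
    for w_now in w_values:
        c = update_confidence(c, w_now, w_prev)  # C(k) is needed by Equation (2)
        r = update_friendliness(r, w_now, c)
        history.append((c, r))
        w_prev = w_now
    return history
```

For example, `run_dialogue([0.5, -0.5])` first raises the confidence (same sign as the neutral start) and then lowers it when the evaluation flips sign, so the friendliness rises in the first round and falls in the second.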

3.1.3. Knowledge graph ripple network content recommendation method

3.1.3.1. Knowledge graph ripple network. The Knowledge Graph (KG) is a type of heterogeneous graph in which the edges represent relationships and the nodes represent entities. Relationships and entities take the form of triples (head entity, relationship, tail entity) to construct a heterogeneous network. Integrating knowledge graphs into recommendation systems brings the following benefits. First, a knowledge graph provides rich semantic information, allowing the recommendation system to recommend richer and more diverse content. For example, a recommendation system based on knowledge graphs can semantically link movies, music, and other items that users have previously liked, thereby recommending more relevant content. Second, it semantically links various kinds of information so that recommender systems can better understand the interests and needs of users, thereby improving the recommendation effect. By analyzing the entities, relationships, and attributes in the knowledge graph, more precise personalized recommendations can be realized. Third, it provides a structured model that expresses the recommendation logic more clearly, making the recommendation results easier to understand and explain. Currently, there are three main categories of recommendation methods based on knowledge graphs: embedding-based, path-based, and hybrid methods.

This complex relationship in the knowledge graph pro­

vides us with a deeper and broader content perspective,

which corresponds to the relevance of the real objective

world and provides prerequisites for the expansion of par­

ticipant dialogue content in human-computer interaction

systems. A common representation of knowledge graphs is

triples, namely G ¼ (H, R, T). Here, H ¼ {e1, e2, … ,eN}

denotes the set of head entities in the knowledge base, N

represents the entities number, R ¼ {r1, r2, … , rM} denotes

the combination of connections in knowledge base, M is the

entity relationships number, T is the set of tail entities in

knowledge base, and T � H � R � H:H(head), R(relation),

T(tail) form a triplet, namely head entity-relationship-tail

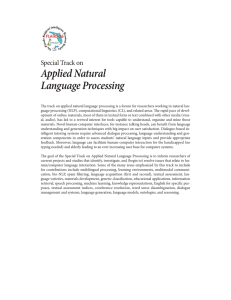

entity. A simple triplet link is from Figure 2.

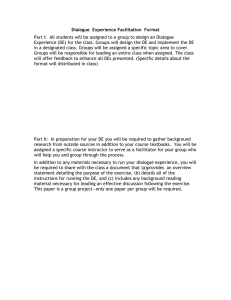

Figure 3 illustrates how dialogue entities activate entities of potential interest to participants, in which entities A and B are the entities that appear in the participants’ dialogue content in a certain human-computer interaction process. The first-level associated entities of entity A are numbered (1, 4, 5), and those of entity B are numbered (1, 8, 9). The common first-level associated entity of entity A and entity B is 1. The entities involved are the shaded parts in Figure 3(b). The second-level associated entities of entity A are numbered (2, 3, 6, 7), and those of entity B are numbered (2, 7). The common second-level associated entities of entities A and B are (2, 7); the entities involved are shown in the shaded part in Figure 3(c).

Following the same reasoning, we can obtain the entities at deeper association levels of entity A or entity B (the higher the level number, the weaker the relationship). Content that may be of interest to a participant is activated through conversation entities and propagated along hierarchical connections in the knowledge graph from strong to weak and from near to far. As the number of associated entities rises, the interest of the user decreases, which corresponds to the shaded areas in Figure 3. This process is similar to the propagation of water ripples from strong to weak and from near to far. The amplitude of the water ripples progressively weakens as they propagate outward. Similarly, the influence of dialogue entities on entities at weaker association levels gradually becomes smaller, and during the ripple propagation process, interference superposition effects occur at certain entities (the common associated entities) and highlight them. Finally, the entity content that the participants are interested in is optimally selected based on their emotional tendencies. The entire process above describes the HCI model.

Figure 2. Example of knowledge graph perspective.

Figure 3. Knowledge graph ripple network propagation model diagram.

To use the knowledge graph to mine content of potential interest to the participants, entity extraction and disambiguation are performed on the participants’ dialogue content through entity linking (Sil and Yates, 2013) to obtain the set of dialogue entities in the interaction process; this entity set is then embedded into the ripple network of the knowledge graph. The relevant sets in the ripple network of the knowledge graph are specified below.

According to the propagation model of the ripple network of the knowledge graph, the set of participant dialogue entities acquired in the k-th conversation is defined as:

$$H_k = \{ h_k \mid h_k \in G \} \tag{8}$$

where G represents the known knowledge graph, and $h_k$ and k denote the dialogue entity and the dialogue round, respectively. The ripple set of triples for the participant dialogue entity set $H_k$ is:

$$S_k^n = \{ (h, r, t) \mid (h, r, t) \in G,\ h \in H_k \}, \quad n = 1, 2, \ldots, N \tag{9}$$

Here, n stands for the level of the associated entities; e.g., $S_1^1$ denotes the first-level associated entities of the dialogue entities in the first conversation. The set of replies to the participants is then derived from the retrieval formula, and a sentence feature vector $v \in K^d$ is obtained for the content of the reply set by using the word2vec method (Mikolov et al., 2013) and the Embedding Average (vector mean) method, where K and d denote the feature representation space and the word vector dimension, respectively. We compute the association probability $p_i$ between the sentence feature vector v and each triple $(h_i, r_i, t_i)$ in the first-level ripple set $S_k^1$ of the k-th conversation with the formula:

$$p_i = \mathrm{softmax}\left(v^{T} R_i h_i\right) = \frac{\exp\left(v^{T} R_i h_i\right)}{\sum_{(h, r, t) \in S_k^1} \exp\left(v^{T} R h\right)} \tag{10}$$

Here, $h_i \in K^d$ and $R_i \in K^{d \times d}$ stand for the embeddings of the head entity $h_i$ and the relation $r_i$ of the ripple set, respectively. The vectors of the ripple set triple $(h_i, r_i, t_i)$ are acquired by TransD, a feature learning method for knowledge graphs. $p_i$ can be viewed as a probability that gauges the similarity between the entity $h_i$ and the vector v in the space of relation $R_i$. After getting the correlation probability, we compute the effect of the dialog entity on the tail entities t of the first-level correlated entities, which yields the vector $o_1$:

$$o_1 = \sum_{(h_i, r_i, t_i) \in S_k^1} p_i t_i \tag{11}$$

Vector $o_1$ is the first-order response of the sentence feature vector v, in which $t_i \in K^d$. With Equations (10) and (11), the content of interest to the participants propagates outward along the hierarchical relationships of the knowledge graph. The second-order response $o_2$ is acquired by replacing the sentence vector v in Equation (10) with the vector $o_1$ from Equation (11) and repeating the process on the next ripple set, i.e., the values of n in $S_k^n$ are 1 and 2, respectively. This stands for the secondary propagation of the participant's conversational entities on the knowledge graph ripple network. Since too high a response order dilutes valuable information, the value of N in Equation (9) is 2 or 3. In summary, the participant's response with respect to vector v can be represented as:

$$O = \sum_{i=1}^{H} o_i \tag{12}$$

The normalized content probability is:

$$y = \sigma\left(O^{T} v\right) \tag{13}$$
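Equations (10) to (13) can be illustrated with a small pure-Python sketch. It is not the author's implementation: the embeddings are hand-written toy values rather than TransD or word2vec outputs, the upper limit H in Equation (12) is taken to be the number of ripple levels supplied, and the names `bilinear`, `hop_response` and `content_friendliness` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
# One embedded triple: (head vector h_i, relation matrix R_i, tail vector t_i).
EmbeddedTriple = Tuple[Vector, Matrix, Vector]


def bilinear(v: Sequence[float], R: Matrix, h: Sequence[float]) -> float:
    """Compute the scalar v^T R h."""
    return sum(v[a] * R[a][b] * h[b] for a in range(len(v)) for b in range(len(h)))


def hop_response(v: Vector, ripple_set: List[EmbeddedTriple]) -> Vector:
    """Equations (10)-(11): softmax over v^T R_i h_i, then weighted sum of tails."""
    scores = [bilinear(v, R, h) for (h, R, _) in ripple_set]
    peak = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    probs = [w / total for w in weights]
    return [sum(p * t[j] for p, (_, _, t) in zip(probs, ripple_set)) for j in range(len(v))]


def content_friendliness(v: Vector, ripple_sets: List[List[EmbeddedTriple]]) -> float:
    """Equations (12)-(13): y = sigmoid(O^T v), where O sums the per-level responses."""
    query = v
    O = [0.0] * len(v)
    for ripple_set in ripple_sets:
        o = hop_response(query, ripple_set)
        O = [acc + x for acc, x in zip(O, o)]
        query = o  # the next level replaces v with the previous response
    return 1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(O, v))))


# Toy two-dimensional example with identity relation matrices (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
hop1 = [([1.0, 0.0], identity, [1.0, 0.0]), ([0.0, 1.0], identity, [0.0, 1.0])]
y = content_friendliness([1.0, 0.0], [hop1])
```

In this toy case the triple whose head matches v receives the larger softmax weight, so the response vector leans toward that triple's tail, and y stays strictly between 0 and 1.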

Equation (13) is defined as the content friendliness of the participant, where the sigmoid function is as below:

$$\sigma(x) = \frac{1}{1 + \exp(-x)} \tag{14}$$

In combination with Equations (2) and (13), we add content- and sentiment-friendliness weights to the content of candidate responses, normalized as below.

$$y_v = aR(k) + by \tag{15}$$

where a and b are constraint factors with a + b = 1 (the default is a = b = 0.5, which is described in more detail later in the experimental discussion section). $y_v$ takes a value in the range [0, 1]. As the value gets closer to 1, the participant is more satisfied with the response.

3.1.3.2. Construction of knowledge graph ripple network interaction model. First of all, a knowledge graph G is given (in this paper, we adopt CN-DBpedia, a Chinese general encyclopedia knowledge graph developed and maintained by Fudan University). The input to the model is the content $C_H^k$ of the participant's k-th interaction input, and the output is the content $C_R^{k+1}$ of the robot's (k+1)-th reply. Next, the ripple network of the knowledge graph is employed to assess the content friendliness of the participant's dialogue material, and emotional friendliness is applied to establish the participant's continuous emotional state vector. Ultimately, the robot's best response is identified by taking into account both the friendliness of the content and the participant's emotional state vector. In particular, Table 1 illustrates how the knowledge graph ripple network interaction model is built.

4. Experimental design and result analysis

4.1. Experimental framework

To perform an efficient experimental comparison of the suggested HCI content recommendation approach, we modified ChatterBot in Python to implement the suggested model and simulated the interaction as text chat. In the model process structure presented in Figure 4, the ChatterBot framework is represented by the solid line, while the expanded content is represented by the dotted line. During HCI, facial expressions, voice, gestures, as well as other cues are employed to evaluate emotional friendliness.

4.2. Experimental data and comparative models

The experimental data is derived from the conversation material of the 2018 NLPCC task Open Domain Question Answering, i.e., Chinese dialogue question answering. The corpus contains 24,479 question-answer pairs. We designate 2500 pairs at random to serve as the verification set, another 2500 pairs at random to serve as the test set, and the remaining 19,479 pairs to serve as the model training set.

We have chosen the following four models for comparison tests in this section.

1. Literature (Sutskever et al., 2014) is a dialogue model that automatically generates replies based on a Seq2Seq LSTM network.

2. Literature (Gunther, 2019) is a ChatterBot interaction model that sorts answers and outputs them based on confidence level.

3. Literature (Zhang et al., 2018) takes into account the “empathy” of the participants in the interaction and chooses emotionally similar replies as responses, thereby realizing an emotional MECs dialogue cognitive model.

Table 1. Modeling of human-computer dialogues on the basis of knowledge graph ripple network.

Input: the content $C_H^k$ of the participant's k-th input; the known knowledge graph G.

Output: the content $C_R^{k+1}$ of the robot's (k+1)-th response.

(1) Repeat:

(2) Return the n replies with the highest semantic confidence based on the participant's k-th interactive input, and vectorize the reply sentences to obtain their feature representations; perform entity extraction and disambiguation of the dialogue content through entity linking;

(3) Calculate the emotional friendliness R(k) of the participant's k-th interactive input according to Equations (2)–(7);

(4) According to Equations (8) and (9), obtain the ripple set of associated entities;

(5) According to Equations (10)–(14), obtain the content response probability of each candidate reply;

(6) According to Equation (15), obtain the normalized value of content friendliness and emotional friendliness, that is, the satisfaction value of each candidate reply;

(7) Select the candidate with the maximum satisfaction value $y_v$ as the reply content;

(8) k = k + 1;

(9) Until the participant stops interactive input;

(10) The human-computer interaction session is terminated.
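Steps (6) and (7) of Table 1 can be sketched as follows. This is only an illustration under stated assumptions: each candidate reply is assumed to already carry an emotional friendliness score R and a content friendliness score y, both in [0, 1], and the names `satisfaction` and `select_reply` as well as the example scores are hypothetical.

```python
from typing import List, Tuple

# (reply text, emotional friendliness R, content friendliness y); assumed layout.
Candidate = Tuple[str, float, float]


def satisfaction(emotional: float, content: float, a: float = 0.5) -> float:
    """Equation (15): y_v = a * R(k) + b * y, with b = 1 - a."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    return a * emotional + (1.0 - a) * content


def select_reply(candidates: List[Candidate], a: float = 0.5) -> str:
    """Step (7) of Table 1: return the candidate with the largest satisfaction value."""
    best = max(candidates, key=lambda c: satisfaction(c[1], c[2], a))
    return best[0]


# Hypothetical scores for three candidate replies.
example_candidates = [
    ("reply A", 0.9, 0.4),
    ("reply B", 0.6, 0.8),
    ("reply C", 0.2, 0.9),
]
chosen = select_reply(example_candidates)
```

With the default a = b = 0.5, the candidate that balances both scores is preferred over candidates that are strong on only one of them.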

Figure 4. Content recommendation method process framework according to the ripple network of knowledge graph.

4. Literature (Lowe et al., 2015) is a ConceptNet cognitive model that stores general knowledge in an external memory module and incorporates the associated general knowledge into the retrieval dialog.

4.3. Evaluation metrics

The two measures we employ to objectively assess the accuracy of the model responses are Mean Average Precision (MAP) and Mean Reciprocal Rank (MRR). Whereas MAP represents the accuracy of a particular statistic, MRR reflects overall accuracy. Both indicators are defined as below:

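$$\mathrm{MRR} = \frac{1}{|k|} \sum_{i=1}^{k} \frac{1}{\mathrm{rank}_i^{q}} \tag{16}$$

$$\begin{cases} \mathrm{MAP} = \dfrac{1}{|k|} \displaystyle\sum_{i=1}^{k} \mathrm{Ave}(A_i) \\[2ex] \mathrm{Ave}(A_i) = \dfrac{\sum_{j=1}^{n} \left( r(j) / p(j) \right)}{n} \end{cases} \tag{17}$$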

where k stands for the number of times a participant has engaged in a conversation and $\mathrm{rank}_i^{q}$ stands for the rank of the i-th participant's response in the reply set. Ave($A_i$) denotes the mean accuracy of the ordering of the responses in the i-th conversation. p(j) denotes the rank of the j-th answer in the adjusted response set, after the model has reordered the candidate reply set taking into account the given constraints; r(j) denotes the rank of the j-th answer in the standard reply set; and n indicates the number of replies in the standard reply set. MAP expresses the mean of the single-valued accuracy of response performance.
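The two indicators can be computed with a few lines of Python. The sketch below follows Equations (16) and (17) under one interpretation that the text does not state explicitly: the standard answers are taken in reference order, so r(j) = j, and p(j) is the 1-based position that the model assigns to the j-th standard answer after sorting. The function names and the sample ranks are hypothetical.

```python
from typing import Sequence


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Equation (16): average of 1 / rank over all conversations (ranks start at 1)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def average_precision(model_positions: Sequence[int]) -> float:
    """Ave(A_i) in Equation (17): mean of r(j) / p(j) over the standard answers.

    Assumption: positions are sorted in increasing order, so the j-th entry is
    p(j) and r(j) = j.
    """
    ordered = sorted(model_positions)
    return sum(j / p for j, p in enumerate(ordered, start=1)) / len(ordered)


def mean_average_precision(all_positions: Sequence[Sequence[int]]) -> float:
    """MAP in Equation (17): mean of Ave(A_i) over all conversations."""
    return sum(average_precision(p) for p in all_positions) / len(all_positions)


# Hypothetical ranks of the correct reply in three conversations.
mrr = mean_reciprocal_rank([1, 2, 4])
# Hypothetical positions of three standard answers in two model rankings.
map_score = mean_average_precision([[1, 2, 3], [1, 3, 4]])
```

A model that places every standard answer at its reference position obtains Ave(A_i) = 1, and pushing standard answers further down the ranking lowers the value.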

To confirm the model's efficacy, a manual assessment technique was also employed: 40 participants were enlisted to engage with chatbots using the different cognitive models. First, the length of the conversation between a person and a computer was determined, measuring each model's efficacy in terms of time. Next, the volunteers were asked to rate the models using the assessment criteria for Sentiment and Fluency. Table 2 displays the evaluation criteria.

We assume that there are 10 candidate responses in the standard reply set, that is, the number of replies in the standard reply set is 10. This allows us to compute the outcomes of the two objective evaluation indicators, MRR and MAP. Table 3 displays the results of the computation.

Table 2. Evaluation indicators of ripple network content recommendation method based on knowledge graph.

| Criterion | Score | Evaluation index |
|---|---|---|
| Fluency | +2 | The content is relevant, grammatically fluent, and consistent with human communication. |
| Fluency | +1 | The content logic is barely relevant, and the grammatical expression is acceptable. |
| Fluency | +0 | Content lacks logical relevance, the answers are incorrect, and the expressions are confusing. |
| Sentiment | +2 | Responses are emotionally appropriate, expressive, and interesting. |
| Sentiment | +1 | Responding with appropriate emotion. |
| Sentiment | +0 | Vague expressions, meaningless replies, etc. |

Table 3. Objective assessment results of various cognitive models.

| Models | MRR | MAP |
|---|---|---|
| Seq2Seq | 0.3729 | 0.4123 |
| ChatterBot | 0.4514 | 0.4871 |
| MECs | 0.5820 | 0.6114 |
| ConceptNet | 0.6317 | 0.6513 |
| Ours | 0.6492 | 0.6696 |

Table 3 shows that our suggested model outperformed the other four comparison models. The primary explanation for this is that the model sorts the candidate reply set based on both content and emotional friendliness. It constrains the reply content not only in terms of the coherence of objective things but also in terms of subjective emotional friendliness. Compared with the ChatterBot and Seq2Seq models, the ConceptNet and MECs models performed better. The rationale is that ConceptNet introduces an external knowledge graph as common-sense knowledge, and MECs takes empathy into account during the interaction process; that is, to improve model performance, they take into account background knowledge and emotional elements, respectively. Seq2Seq has the lowest score because it produces a large number of nonsensical responses while accounting for the security of the interaction process. Again, the objective assessment confirmed the efficacy of our suggested strategy in raising response accuracy.

The impact of different gender and age groups on the assessment of the dialogue system's interaction effect must be taken into account throughout the process of manual evaluation. Forty participants, varying in age and gender, were asked to engage with each model, and the duration of each interaction as well as the number of rounds were recorded. Table 4 exhibits the statistical results. We then collected the 40 volunteers' satisfaction ratings for Fluency and Sentiment by gender group and by age group. The gender groups consist of 20 males (group 1) and 20 females (group 2). Table 5 displays the statistical results.

For the age groups, participants aged 19–23 form group 3 and participants aged 24–28 form group 4. Each age group consisted of 2 females and 2 males (those aged 19–22 are undergraduates, and those aged 23–25 are undergraduates). Those aged between 26 and 28 are master's students, and those between 26 and 28 are social workers. Table 6 displays the statistical results.

Table 4. Statistics on the number of rounds and time of interaction between models and volunteers.

| Models | Average number of interaction rounds/person | Average interaction time/person |
|---|---|---|
| Seq2Seq | 6.350 | 71.32 |
| ChatterBot | 6.850 | 72.57 |
| MECs | 7.925 | 83.26 |
| ConceptNet | 9.850 | 109.63 |
| Ours | 11.250 | 122.45 |

Table 5. Statistics on the Fluency and Sentiment scores of each model by volunteers in the gender group.

| Models | Fluency (Group 1) | Fluency (Group 2) | Sentiment (Group 1) | Sentiment (Group 2) |
|---|---|---|---|---|
| Seq2Seq | 0.82 | 0.75 | 0.63 | 0.68 |
| ChatterBot | 1.26 | 1.18 | 0.84 | 0.97 |
| MECs | 1.47 | 1.41 | 1.42 | 1.46 |
| ConceptNet | 1.48 | 1.46 | 1.36 | 1.43 |
| Ours | 1.55 | 1.48 | 1.50 | 1.53 |

Table 6. Statistics on the Fluency and Sentiment scores of each model by volunteers in the age groups.

| Models | Fluency (Group 3) | Fluency (Group 4) | Sentiment (Group 3) | Sentiment (Group 4) |
|---|---|---|---|---|
| Seq2Seq | 0.68 | 0.62 | 0.67 | 0.74 |
| ChatterBot | 1.32 | 1.27 | 0.82 | 0.85 |
| MECs | 1.39 | 1.32 | 1.50 | 1.50 |
| ConceptNet | 1.50 | 1.41 | 1.43 | 1.49 |
| Ours | 1.54 | 1.45 | 1.54 | 1.53 |

Table 4 shows that, in contrast to the other models, the model proposed in this paper achieved longer interactions with volunteers and a larger number of interaction rounds. Tables 5 and 6 indicate that the proposed model received higher Sentiment and Fluency scores than the other models from volunteers of varying ages and genders. The manual evaluation thus demonstrates that the proposed model, which considers emotional friendliness and coherence of content, is capable of extending both the number and the duration of dialogue rounds in HCI.

4.4. Experimental discussion

We examine the constraint parameters a and b in Equation (15) to study the real effects of content and emotional friendliness on the model in more detail. Because b = 1 − a, we only need to discuss how a in [0, 1] affects the model. The two indicators, MAP and MRR, are still measured by objective assessment, and their values are again computed with a standard answer set of size n = 10.

Table 7. Evaluation results of different knowledge perception models.

| Models | MRR | MAP |
|---|---|---|
| ChatterBot | 0.4512 | 0.4354 |
| DISAN | 0.5638 | 0.5775 |
| BIMPM | 0.5711 | 0.5628 |
| Ours | 0.6223 | 0.6316 |

Figure 5. MAP objective evaluation results.

Figure 6. MRR objective evaluation results.

Figure 7. Satisfaction survey of volunteers interacting with customer service robots.

Figures 5 and 6 indicate that, when a approaches 0, the model in this paper outperforms the MECs and ChatterBot models in the objective assessment of MRR and MAP, while the difference from the ConceptNet model is negligible. The reason is that, under this restriction, both the ConceptNet model and our model take only content coherence into account. When a approaches 1, our model also achieves better results than the ChatterBot and ConceptNet models in the objective assessment of MRR and MAP; however, the difference from the MECs model is small. The reason is that, under this limitation, both the MECs model and our model take only emotional elements into account. When a is near 0.5, our model outperforms the comparison models in the objective assessment of MRR and MAP. This is because, at that point, both content and emotional friendliness are considered, which may substantially raise response accuracy.
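The limiting behaviour described above can be reproduced with a small worked example of Equation (15). The scores below are invented purely for illustration, and the assumption that each candidate reply has its own emotional friendliness R and content friendliness y is ours rather than something the paper states.

```python
# Hypothetical (R, y) scores per candidate reply: emotional and content friendliness.
scores = {
    "reply A": (0.9, 0.4),  # emotionally strong, weak content match
    "reply B": (0.6, 0.8),  # balanced
    "reply C": (0.2, 0.9),  # strong content match, emotionally weak
}


def best_reply(a: float) -> str:
    """Return the reply maximizing y_v = a * R + (1 - a) * y."""
    b = 1.0 - a
    return max(scores, key=lambda name: a * scores[name][0] + b * scores[name][1])


# Sweep the constraint factor over its two extremes and the default value.
choices = {a: best_reply(a) for a in (0.0, 0.5, 1.0)}
```

At a = 0 only content friendliness matters, at a = 1 only emotional friendliness matters, and at a = 0.5 the balanced candidate is selected, which mirrors the three regimes discussed for Figures 5 and 6.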

We also employed a manual evaluation technique to confirm the model's efficacy, in which 30 online shopping volunteers interacted with chatbots based on different cognitive models. The classic Likert scale from psychology was used to allow volunteers to rate the accuracy, friendliness, and acceptability of each interaction model. The scoring details are as follows: very satisfied, five points; satisfied, four points; unsure, three points; dissatisfied, two points; and extremely dissatisfied, one point. The indicator evaluation uses the two indicators MRR and MAP. In order to compute the two indicator values, we assume a reply set of size n = 10. Table 7 presents the results.

Table 7 illustrates that the knowledge perception model presented in this section achieves superior outcomes in contrast to the other methods. The reason is that this method provides a more profound representation of the participants' dialogue content. First, we extract knowledge from the participants' dialogue content to obtain the participant dialogue entity set. After obtaining the dialogue entities, we link the entities to form word link entities; in addition, we perform first-order external expansion on each entity to form word expansion entities. Secondly, we vectorize the obtained participant conversations and obtain a set of participant knowledge vectors through the knowledge-aware deep learning network. Finally, we dynamically obtain the focus of the participants' attention on the basis of the attention mechanism and give the optimal content reply. Therefore, the modeling approach presented in this section obtains better assessment results.

In the manual evaluation, we invited 30 volunteers to interact with each model and then score it. Volunteers rated each model on three aspects: acceptability, accuracy, and friendliness. The averaged results are presented in Figure 7. Figure 7 shows that, in the volunteer scoring, the modeling approach in this paper is superior to the other models: it is slightly superior to the BIMPM and DISAN models, and also superior to the ChatterBot model. Volunteers gave all four models good scores for acceptability, accuracy, and friendliness during the interaction process. For the ChatterBot model, volunteers rated all three items close to the satisfied level. Volunteers gave the BIMPM and DISAN models scores between 4 and 4.5, and gave the model in this paper a score of around 4.5. The scores thus reflect how satisfied the volunteers are with each model.

The acceptability of the four models was affirmed by the volunteers. Accuracy focuses on the quality of responses during the customer service human-computer interaction process, while friendliness focuses on the dialogue coherence during that process. From the scoring, we can see that the dialogue quality and coherence of the BIMPM and DISAN models have reached a satisfactory level, and that, in contrast to these two models, the dialogue quality and coherence of the model in this section have reached a better level of satisfaction.

5. Conclusions

Humans' learning, work, life, and other experiences are held in the brain as associations. These memories can be considered as individual background knowledge. The process of interpersonal communication is the process of awakening locally relevant background knowledge under the influence of emotional factors. The content recommendation method we proposed, based on the knowledge graph ripple network, treats the knowledge graph as the robot's background knowledge. We then extract the entities that are potentially interesting to the participants and thoroughly consider the participants' subjective emotional friendliness to make the best decision for the robot's dialogue reply. We accomplish this by using the ripple network to simulate the awakening of locally associated background knowledge during human-to-human communication. According to the comparative testing data, our suggested model can successfully enhance the emotional friendliness and coherence of robots during HCI. Our suggested HCI model offers valuable possibilities for the development of a more intelligent and realistic HCI system, while also simulating real-world human-to-human communication. The knowledge graph ripple network-based HCI model presented in this work achieves natural and intelligent interaction in the open-domain interaction system. However, real conversations are complex and full of changes. The robot not only needs to have a “brain” but also needs to follow the rhythm of the conversation, which is usually maintained jointly by both parties. How to continue the conversation rhythmically is one of our next research goals.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

Adamopoulou, E., & Moussiades, L. (2020). Chatbots: History, technol­

ogy, and applications. Machine Learning with Applications, 2(53),

100006. https://doi.org/10.1016/j.mlwa.2020.100006

Gunther, C. (2019). Tutorial-ChatterBot 1.0.2 documentation. https://readthedocs.org/projects/chatterbot/downloads/pdf/stable/

Guo, D., Tur, G., Yih, W., & Zweig, G. (2014). Joint semantic utterance classification and slot filling with recursive neural networks. 2014 IEEE Spoken Language Technology Workshop (SLT) (pp. 554–559). IEEE.

Hoy, M. B. (2018). Alexa, Siri, Cortana, and more: An introduction to

voice assistants. Medical Reference Services Quarterly, 37(1), 81–88.

https://doi.org/10.1080/02763869.2018.1404391

Irfan, B., Ramachandran, A., Spaulding, S., Glas, D. F., Leite, I., & Koay, K. L. (2019). Personalization in long-term human-robot interaction. 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 685–686). IEEE.

Jeong, M., & Lee, G. G. (2008). Triangular-chain conditional random

fields. IEEE Transactions on Audio, Speech, and Language Processing,

16(7), 1287–1302. https://doi.org/10.1109/TASL.2008.925143

Jokinen, K. (2018). Dialogue models for socially intelligent robots.

Social Robotics: 10th International Conference, ICSR 2018, Qingdao,

China, November 28–30 (pp. 127–138). Springer International

Publishing.

Jokinen, K., & Wilcock, G. (2013). Multimodal open-domain conversa­

tions with the NaoRobot. In Natural interaction with robots, know­

bots and smartphones: Putting spoken dialog systems into practice

(pp. 213–224). Springer Verlag.

Liu, B., & Lane, I. (2017). Iterative policy learning in end-to-end train­

able task-oriented neural dialog models. 2017 IEEE Automatic

Speech Recognition and Understanding Workshop (ASRU) (pp. 482–

489). IEEE. https://doi.org/10.1109/ASRU.2017.8268975

Liu, X., Xie, L., & Wang, Z. (2017). Empathizing with emotional robot

based on cognition reappraisal. China Communications, 14(9), 100–

113. https://doi.org/10.1109/CC.2017.8068769

Lowe, R., Pow, N., Serban, I., Charlin, L., & Pineau, J. (2015). Incorporating unstructured textual knowledge sources into neural dialogue systems. Neural Information Processing Systems Workshop on Machine Learning for Spoken Language Understanding (pp. 1–7).

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., & Dean, J. (2013).

Distributed representations of words and phrases and their composi­

tionality. Advances in Neural Information Processing Systems, 26,

3111–3119.

Park, J. W., Kim, W. H., Lee, W. H., Kim, J. C., & Chung, M. J. (2011,

December). How to completely use the PAD space for socially inter­

active robots. In 2011 IEEE International Conference on Robotics

and Biomimetics (pp. 3005–3010). IEEE.

Ren, M., Chen, N., & Qiu, H. (2023). Human-machine collaborative

decision-making: An evolutionary roadmap based on cognitive intel­

ligence. International Journal of Social Robotics, 15(7), 1–14. https://

doi.org/10.1007/s12369-023-01020-1

Richmond, J., & Nelson, C. A. (2009). Relational memory during

infancy: Evidence from eye tracking. Developmental Science, 12(4),

549–556. https://doi.org/10.1111/j.1467-7687.2009.00795.x

Rodríguez, L. F., Gutierrez-Garcia, J. O., & Ramos, F. (2016). Modeling the interaction of emotion and cognition in autonomous agents. Biologically Inspired Cognitive Architectures, 17, 57–70. https://doi.org/10.1016/j.bica.2016.07.008

Shackel, B. (1997). Human-computer interaction—Whence and whither? Journal of the American Society for Information Science, 48(11), 970–986. https://doi.org/10.1002/(SICI)1097-4571(199711)48:11<970::AID-ASI2>3.0.CO;2-Z

Sil, A., & Yates, A. (2013). Re-ranking for joint named-entity recogni­

tion and linking. The 22nd ACM International Conference on

Information & Knowledge Management (pp. 2369–2374). ACM.

https://doi.org/10.1145/2505515.2505601

Spatola, N., Belletier, C., Chausse, P., Augustinova, M., Normand, A., Barra, V., Ferrand, L., & Huguet, P. (2019). Improved cognitive control in presence of anthropomorphized robots. International Journal of Social Robotics, 11(3), 463–476. https://doi.org/10.1007/s12369-018-00511-w

Su, P. H., Gasic, M., Mrksic, N., Rojas-Barahona, L., Ultes, S., Vandyke, D., Wen, T. H., & Young, S. (2016). On-line active reward learning for policy optimisation in spoken dialogue systems. arXiv preprint arXiv:1605.07669.

Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence

learning with neural networks. Advances in Neural Information

Processing Systems, 27, 3104–3112.

Wen, T. H., Vandyke, D., Mrksic, N., Gasic, M., Rojas-Barahona, L. M., Su, P. H., Ultes, S., & Young, S. (2016). A network-based end-to-end trainable task-oriented dialogue system. arXiv preprint arXiv:1604.04562.

Xu, P., & Sarikaya, R. (2013). Convolutional neural network based tri­

angular CRF for joint intent detection and slot filling. 2013 IEEE

workshop on automatic speech recognition and understanding (pp.

78–83). IEEE.

Yan, Z., Duan, N., Chen, P., Zhou, M., Zhou, J., & Li, Z. (2017). Building task-oriented dialogue systems for online shopping. Proceedings of the AAAI Conference on Artificial Intelligence, 31(1), 4618–4625. https://doi.org/10.1609/aaai.v31i1.11182

Yang, L., Qiu, M., Qu, C., Guo, J., Zhang, Y., Croft, W. B., Huang, J., & Chen, H. (2018). Response ranking with deep matching networks and external knowledge in information-seeking conversation systems. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval (pp. 245–254). https://doi.org/10.1145/3209978.3210011

Young, T., Cambria, E., Chaturvedi, I., Zhou, H., Biswas, S., & Huang,

M. (2018). Augmenting end-to-end dialogue systems with common­

sense knowledge. Proceedings of the AAAI Conference on Artificial

Intelligence, 32(1), 4970–4977. https://doi.org/10.1609/aaai.v32i1.11923

Zhang, R., Wang, Z., & Mai, D. (2018). Building emotional conversa­

tion systems using multi-task Seq2Seq learning. Natural Language

Processing and Chinese Computing 6th CCF International

Conference, NLPCC 2017, Dalian, China, November 8–12 (pp. 612–

621). Springer International Publishing.

Zhou, H., Huang, M., Zhang, T., Zhu, X., & Liu, B. (2018). Emotional

chatting machine: Emotional conversation generation with internal and

external memory. Proceedings of the AAAI Conference on Artificial

Intelligence, 32(1), 1–9. https://doi.org/10.1609/aaai.v32i1.11325

Zhou, X., & Wang, W. Y. (2017). Mojitalk: Generating emotional responses at scale. arXiv preprint arXiv:1711.04090.

About the author

Zhu He is a Ph.D. student currently pursuing her degree in UCSI

University – Business and Management program. She is currently

researching how responsible and lovable AI-based agents, both tangible

and intangible, provide values to the business community. Her research

interests are AI in marketing and human-AI interactions.