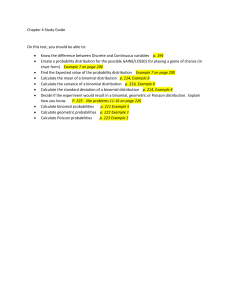

PROBABILITY DISTRIBUTIONS

LECTURE ONE

INTRODUCTION

This lecture involves the use of the laws of probability and how those laws relate to experiments. It analyses the following probability distributions: binomial, hypergeometric, Poisson, exponential and normal. Probability distributions show how the entire set of events or outcomes of an experiment can be represented and expressed as a random variable.

A random variable is a variable whose value is the result of a random (uncertain, chance) event. For example, suppose a coin is tossed. The specific outcome of a single toss (either Head or Tail) is an observation of the random variable, and the value it takes is the result of chance.

LECTURE OBJECTIVES

By the end of the lesson the learner should be able to:

- Define probability distributions.
- Distinguish the various forms of probability distributions.
- Use and apply discrete probability distributions.

A probability distribution is a display or a list of all possible outcomes of an experiment, along with the probabilities associated with each outcome, presented in the form of a table, a graph or a formula. For example, suppose we toss (flip) an unbiased coin three times. The sample space is:

𝐸 = {𝐻𝐻𝐻, 𝐻𝐻𝑇, 𝐻𝑇𝐻, 𝑇𝐻𝐻, 𝐻𝑇𝑇, 𝑇𝐻𝑇, 𝑇𝑇𝐻, 𝑇𝑇𝑇}

From the above sample space, the probability of getting:

1. Zero heads i.e. P(TTT) =1/8

2. One head i.e. P(HTT or THT or TTH) = 3/8

3. Two heads i.e. P(HHT or HTH or THH) = 3/8

4. All heads i.e. P(HHH) = 1/8

Listing all the possible outcomes, along with the probability associated with each outcome, gives the probability distribution:

| Outcome x (number of heads) | Probability P(x) |
|---|---|
| 0 | 1/8 |
| 1 | 3/8 |
| 2 | 3/8 |
| 3 | 1/8 |

The probabilities of all possible outcomes sum to one:

$$\sum_{i=1}^{n} P_i = 1$$

The probability that the random variable X takes on some specific value $x_i$ is written as $P(X = x_i)$. Thus, the probability that two heads are obtained above is

$$P(X = 2) = \frac{3}{8}$$

Note that

$$0 \le P(X = x_i) \le 1 \quad \text{and} \quad \sum_i P(X = x_i) = 1$$
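The coin-tossing distribution can be checked by brute force. The minimal Python sketch below, assuming only the standard library (`outcomes` and `distribution` are illustrative names, not from the notes), enumerates the eight equally likely outcomes of three tosses, counts the heads in each, and confirms that every probability lies between 0 and 1 and that they sum to one.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate the sample space of three tosses of an unbiased coin: HHH, HHT, ...
outcomes = ["".join(t) for t in product("HT", repeat=3)]

# X = number of heads; each of the 8 outcomes has probability 1/8
counts = Counter(o.count("H") for o in outcomes)
distribution = {x: Fraction(c, len(outcomes)) for x, c in sorted(counts.items())}

for x, p in distribution.items():
    print(x, p)   # 0 1/8, 1 3/8, 2 3/8, 3 1/8

# Each probability lies in [0, 1] and together they sum to one
assert all(0 <= p <= 1 for p in distribution.values())
assert sum(distribution.values()) == 1
```

Exact fractions are used instead of floats so that the sum-to-one check is exact.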

NB: A distribution in which all outcomes have the same probability is referred to as a uniform probability distribution. For example, suppose you toss a die. The outcome is a random variable and can take any value from one to six, that is, 1, 2, 3, 4, 5 or 6. Each outcome has probability 1/6.

Hence:

| Outcome | Probability |
|---|---|
| 1 | 1/6 |
| 2 | 1/6 |
| 3 | 1/6 |
| 4 | 1/6 |
| 5 | 1/6 |
| 6 | 1/6 |

Discrete Vs continuous probability distributions

There are two types of probability distributions i.e. discrete and continuous distributions.

A discrete probability distribution is one whose random variable can take only a distinct (countable) number of values, usually whole numbers. It is most often the result of counting or enumeration, for example, the number of customers, the number of units sold or the number of heads after tossing a coin. In such cases, the random variable cannot be a fraction.

A continuous probability distribution is one whose random variable can take an infinite number of values within a given range. There are no gaps in the observations since, no matter how close two observations might be, a third can be found which will fall between the first two. A continuous probability distribution is usually the result of measurement.

DISCRETE PROBABILITY DISTRIBUTION

The Mean and the Variance

Mean

The mean of a probability distribution is referred to as the expected value of the random variable. The expected value of a random variable X is written as E(X).

It is the sum of the products of each possible outcome and its probability. That is,

$$\mu = E(X) = \sum x_i P(x_i)$$

The expected value of a discrete random variable is the weighted mean of all possible outcomes,

in which the weights are the respective probabilities of those outcomes.

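For example,

i. The expected value of the number of heads when a coin is tossed three times is

$$\mu = E(X) = 0\left(\tfrac{1}{8}\right) + 1\left(\tfrac{3}{8}\right) + 2\left(\tfrac{3}{8}\right) + 3\left(\tfrac{1}{8}\right) = \tfrac{12}{8} = 1.5$$

ii. The expected value of tossing a die is

$$\mu = E(X) = 1\left(\tfrac{1}{6}\right) + 2\left(\tfrac{1}{6}\right) + 3\left(\tfrac{1}{6}\right) + 4\left(\tfrac{1}{6}\right) + 5\left(\tfrac{1}{6}\right) + 6\left(\tfrac{1}{6}\right) = 3.5$$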

Variance

Variance is the mean of the squared deviations from the mean (expected value):

$$\sigma^2 = \sum (x_i - \mu)^2 P(x_i)$$

or, equivalently,

$$\sigma^2 = \sum x_i^2 P(x_i) - \mu^2$$

For example, the variance of tossing a die is

$$\sigma^2 = (1-3.5)^2\left(\tfrac{1}{6}\right) + (2-3.5)^2\left(\tfrac{1}{6}\right) + (3-3.5)^2\left(\tfrac{1}{6}\right) + (4-3.5)^2\left(\tfrac{1}{6}\right) + (5-3.5)^2\left(\tfrac{1}{6}\right) + (6-3.5)^2\left(\tfrac{1}{6}\right) = 2.92$$
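The two definitions of variance, the mean of squared deviations and the sum of $x^2 P(x)$ minus $\mu^2$, always give the same answer. The short Python sketch below, assuming only the standard library (the helper names `mean`, `variance` and `variance_shortcut` are hypothetical), computes the expected value and both forms of the variance for the die and for the three-coin distribution using exact fractions.

```python
from fractions import Fraction

def mean(dist):
    """Expected value: sum of each outcome times its probability."""
    return sum(x * p for x, p in dist.items())

def variance(dist):
    """Mean of the squared deviations from the expected value."""
    mu = mean(dist)
    return sum((x - mu) ** 2 * p for x, p in dist.items())

def variance_shortcut(dist):
    """Equivalent form: sum of x^2 * P(x), minus mu^2."""
    return sum(x ** 2 * p for x, p in dist.items()) - mean(dist) ** 2

die = {x: Fraction(1, 6) for x in range(1, 7)}
coin3 = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

print(float(mean(coin3)))               # 1.5
print(float(mean(die)))                 # 3.5
print(round(float(variance(die)), 2))   # 2.92 (exactly 35/12)
```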

EXAMPLES OF DISCRETE PROBABILITY DISTRIBUTIONS

1. BINOMIAL DISTRIBUTION

In a binomial process, each trial or observation can assume only one of two states; that is, each trial results in one of only two mutually exclusive outcomes, one of which is identified as a success and the other as a failure. The probability of each outcome remains constant from one trial to the next.

Experiments of this type follow a binomial distribution or Bernoulli process, named after the Swiss mathematician Jacob Bernoulli. A binomial distribution or Bernoulli process must fulfill the following conditions:

1. There must be only two possible outcomes. One is identified as a success, the other as a failure (do not attach any connotation of 'good' or 'bad' to the terms. They are quite objective, and a success does not necessarily imply a desirable outcome).
2. The probability of a success, π, remains constant from one trial to the next, as does the probability of a failure, (1 − π).
3. The outcome of any one trial is independent of the outcome of any other trial.
4. The experiment can be repeated many times.

NB: Condition 3 above states that the trials or experiments must be independent in order for a random variable to be considered a binomial random variable. This can be assured in a finite population if the sampling is performed with replacement.

However, sampling with replacement is the exception rather than the rule in business applications. Most often, the sampling is performed without replacement. Strictly speaking, when sampling is performed without replacement, the conditions for the binomial distribution cannot be satisfied. However, the conditions are approximately satisfied if the sample selected is quite small relative to the size of the population from which it is selected. A commonly used rule of thumb is that the binomial distribution can be applied if $n/N < 1/20$. Thus, if the sample is less than 5% of the size of the population, the conditions for the binomial will be approximately satisfied.

The probability that there will be x successes in n trials is given by

$$P(X = x) = {}_{n}C_{x}\,\pi^{x}(1-\pi)^{n-x}$$

where n = sample size or the number of trials,
π = probability of success,
x = number of successes,
1 − π = probability of failure.

For example, tossing a coin is a binomial process since:

1. There are only two possible outcomes, head or tail.
2. The probability of a success (head or tail) remains constant at π = 0.5 for all tosses.
3. The probability of a success on any toss is not affected by the results of any other toss.
4. A coin may be tossed many times.

Examples

a) Suppose a coin is tossed 10 times. What is the probability that there will be 3 tails?

A tail is a success. Then x = 3, n = 10 and π = 0.5.

$$P(X = x) = {}_{n}C_{x}\,\pi^{x}(1-\pi)^{n-x}$$

$$P(X = 3) = {}_{10}C_{3}(0.5)^{3}(0.5)^{7} = 120(0.5)^{10} = 0.1172$$

b) A credit manager for American Express has found that 10% of card users do not pay the full amount of their indebtedness during any given month. She wants to determine the probability that, if 20 accounts are randomly selected, 5 of them are not paid.

$$P(X = 5 \mid n = 20), \quad \pi = 0.1$$

$$P(X = x) = {}_{n}C_{x}\,\pi^{x}(1-\pi)^{n-x}$$

$$P(X = 5) = {}_{20}C_{5}(0.1)^{5}(0.9)^{15} = 0.0319$$
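The binomial formula translates directly into code. The following is a minimal Python sketch (standard library only; `binomial_pmf` is a hypothetical helper name) that evaluates ${}_{n}C_{x}\,\pi^{x}(1-\pi)^{n-x}$ with `math.comb` (Python 3.8+) and reproduces examples (a) and (b).

```python
from math import comb

def binomial_pmf(x, n, pi):
    """P(X = x) = nCx * pi^x * (1 - pi)^(n - x) for x successes in n trials."""
    return comb(n, x) * pi ** x * (1 - pi) ** (n - x)

# a) 3 tails in 10 tosses of a fair coin (a tail is a success, pi = 0.5)
print(round(binomial_pmf(3, 10, 0.5), 4))   # 0.1172

# b) 5 unpaid accounts out of 20 when pi = 0.1
print(round(binomial_pmf(5, 20, 0.1), 4))   # 0.0319
```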

When the probability of some event is measured over a range of values, it is known as a cumulative binomial probability:

$$P(X \le x) = P(X = 0) + P(X = 1) + P(X = 2) + \dots + P(X = x)$$

For example,

$$P(X \le 2) = P(X = 0) + P(X = 1) + P(X = 2)$$

while

$$P(X > x) = 1 - P(X \le x)$$

For example,

$$P(X > 2) = 1 - P(X \le 2)$$

Worked example

Salespeople for widgets make a sale to 15% of the customers on whom they call. If a member of the sales staff calls on 15 customers today, what is the probability that he/she will sell:

i. exactly two widgets
ii. at most two widgets
iii. at least three widgets

$n = 15$ and $\pi = 0.15$

i. $P(X = 2)$

$$P(X = 2) = {}_{15}C_{2}(0.15)^{2}(0.85)^{13} = 0.2856$$

ii. $P(X \le 2) = P(X = 0) + P(X = 1) + P(X = 2)$

$$P(X \le 2) = {}_{15}C_{0}(0.15)^{0}(0.85)^{15} + {}_{15}C_{1}(0.15)^{1}(0.85)^{14} + {}_{15}C_{2}(0.15)^{2}(0.85)^{13}$$

$$P(X \le 2) = 0.0874 + 0.2312 + 0.2856 = 0.6042$$

iii. $P(X > 2)$

$$P(X > 2) = 1 - P(X \le 2) = 1 - 0.6042 = 0.3958$$
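Cumulative probabilities are just running sums of the point probabilities, and the "at least" case uses the complement rule. The minimal Python sketch below (standard library only; `binomial_pmf` and `binomial_cdf` are hypothetical helper names) answers all three parts of the widget example.

```python
from math import comb

def binomial_pmf(x, n, pi):
    """P(X = x) for a binomial distribution."""
    return comb(n, x) * pi ** x * (1 - pi) ** (n - x)

def binomial_cdf(x, n, pi):
    """P(X <= x): sum the point probabilities for 0, 1, ..., x."""
    return sum(binomial_pmf(k, n, pi) for k in range(x + 1))

n, pi = 15, 0.15
exactly_two = binomial_pmf(2, n, pi)
at_most_two = binomial_cdf(2, n, pi)
at_least_three = 1 - at_most_two   # P(X > 2) = 1 - P(X <= 2)

print(round(exactly_two, 4), round(at_most_two, 4), round(at_least_three, 4))
# 0.2856 0.6042 0.3958
```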

Mean & Variance of Binomial Distribution

The mean and variance of a binomial distribution are calculated as

$$\mu = n\pi$$

$$\sigma^2 = n\pi(1-\pi)$$

where n is the number of trials (sample size) and π is the probability of a success on any given trial. That is, we would expect that, on average, there are $n\pi$ successes out of n trials.

From the previous example of sales of widgets, we had π = 0.15 and n = 15. The sales personnel should average

$$\mu = 15(0.15) = 2.25$$

sales for every 15 sales calls. That is, if a salesperson makes 15 calls a day for many days, he/she will average, over the long run, 2.25 sales per day. The variance in the number of daily sales is

$$\sigma^2 = 15(0.15)(0.85) = 1.91$$

and the standard deviation is $\sigma = \sqrt{1.91} = 1.38$.
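The shortcut formulas $\mu = n\pi$ and $\sigma^2 = n\pi(1-\pi)$ can be checked against the general definitions of mean and variance applied to the full binomial distribution. The Python sketch below (standard library only; variable names are illustrative) does this for the widget example, n = 15 and π = 0.15.

```python
from math import comb, sqrt

n, pi = 15, 0.15

# Shortcut formulas for the binomial distribution
mu = n * pi
var = n * pi * (1 - pi)

# The same quantities computed directly from the probability distribution
pmf = {x: comb(n, x) * pi ** x * (1 - pi) ** (n - x) for x in range(n + 1)}
mu_direct = sum(x * p for x, p in pmf.items())
var_direct = sum((x - mu_direct) ** 2 * p for x, p in pmf.items())

print(round(mu, 2), round(var, 2), round(sqrt(var), 2))   # 2.25 1.91 1.38
```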

SHAPE OF THE BINOMIAL DISTRIBUTION

i. If π = 0.5, the binomial distribution will be perfectly symmetrical.
ii. If π < 0.5, the distribution will be skewed to the right.
iii. If π > 0.5, the distribution will be skewed to the left.
iv. Holding π constant, as n increases, the binomial distribution approaches the shape of a normal distribution.
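The symmetry and skewness rules can be seen by printing the distribution for a few values of π. In the Python sketch below (standard library only; n = 10 is an arbitrary choice for illustration and `binomial_pmf` is a hypothetical helper), the list for π = 0.5 reads the same forwards and backwards, the list for π = 0.2 has most of its mass at small x with a long tail to the right, and the list for π = 0.8 is its mirror image.

```python
from math import comb

def binomial_pmf(x, n, pi):
    """P(X = x) for a binomial distribution."""
    return comb(n, x) * pi ** x * (1 - pi) ** (n - x)

n = 10
for pi in (0.2, 0.5, 0.8):
    # Probabilities of 0, 1, ..., n successes, rounded for display
    probs = [round(binomial_pmf(x, n, pi), 3) for x in range(n + 1)]
    print(pi, probs)
```

The mirror relationship holds in general: $P(X = x)$ with success probability π equals $P(X = n - x)$ with success probability $1 - \pi$.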

THE POISSON DISTRIBUTION

It was developed by the French mathematician Simeon Poisson. The Poisson distribution measures the probability of a random event over some interval of time or space; that is, it measures the relative frequency of an event over some unit of time or space.

For example,

- The number of arrivals of customers per hour.

- Number of industrial accidents per month.

- Number of defective electrical connections per mile of wiring, etc.

In each of these cases, the random variable (customers, accidents, defects, machines etc.) is measured per unit of time or space (distance).

Two assumptions are necessary for the application of the Poisson distribution:

i) The probability of the occurrence of the event is constant for any two intervals of time or space of equal length.
ii) The occurrence of the event in any interval is independent of the occurrence in any other interval.

Given these assumptions, the Poisson distribution can be expressed as

$$P(X = x) = \frac{\mu^{x} e^{-\mu}}{x!}$$

where x is the frequency, or the number of times the event occurs,
μ is the mean number of occurrences per unit of time or space,
e = 2.71828 is the base of the natural logarithm system.

Worked example

A simple observation over the last 80 hours has shown that 800 customers entered a certain bank. What is the probability that 5 customers will arrive at the bank during the next hour?

$$\mu = \frac{800}{80} = 10 \text{ customers per hour}, \quad x = 5$$

$$P(X = x) = \frac{\mu^{x} e^{-\mu}}{x!}$$

$$P(X = 5) = \frac{10^{5} e^{-10}}{5!} = 0.0378$$

A local paving company obtained a contract with the city council to maintain roads

servicing a large urban centre. The roads recently paved by this company revealed an

average of two defects per mile after being used for one year. If the council retains this

company, what is the probability of one defect in any given mile of road after carrying

traffic for one year?

$$P(X = x) = \frac{\mu^{x} e^{-\mu}}{x!}$$

With μ = 2 defects per mile and x = 1,

$$P(X = 1) = \frac{2^{1} e^{-2}}{1!} = 0.2707$$
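Both Poisson worked examples follow from a one-line function. The Python sketch below (standard library only; `poisson_pmf` is a hypothetical helper name) evaluates $\mu^{x} e^{-\mu} / x!$ for the bank example, where μ = 800/80 = 10 per hour, and for the road example, where μ = 2 defects per mile.

```python
from math import exp, factorial

def poisson_pmf(x, mu):
    """P(X = x) = mu^x * e^(-mu) / x!, with mu the mean rate per unit of time or space."""
    return mu ** x * exp(-mu) / factorial(x)

# Bank: 800 customers in 80 hours gives mu = 10 per hour
mu_bank = 800 / 80
print(round(poisson_pmf(5, mu_bank), 4))   # 0.0378

# Road defects: mu = 2 per mile
print(round(poisson_pmf(1, 2), 4))         # 0.2707
```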

POISSON VS BINOMIAL DISTRIBUTIONS

The Poisson distribution is a useful approximation for binomial probabilities. This approximation is often necessary if the number of trials is large, since binomial tables for large values of n are often not available. The approximation is permitted only if n is large and π is small. As a rule of thumb, the approximation is reasonably accurate if $n \ge 20$ and $\pi \le 0.10$. If π is large, simply reverse the definition of a success and a failure so that π becomes small.

For example, assume that industry records show that 10% of employees steal from their employers. The personnel manager of a firm in that industry wishes to determine the probability that, in a sample of 20 employees, three have illegally taken company property.

Using the binomial distribution,

$$P(X = 3) = {}_{20}C_{3}(0.10)^{3}(0.9)^{17} = 0.1901 \approx 0.2$$

Using the Poisson distribution,

$$\mu = n\pi = 20(0.1) = 2$$

$$P(X = 3) = \frac{2^{3} e^{-2}}{3!} = 0.1804 \approx 0.2$$

For larger values of n and/or smaller values of π, the approximation may be even closer. Assume n = 100:

$$\mu = n\pi = 100(0.1) = 10$$

$$P(X = 3) = \frac{10^{3} e^{-10}}{3!} = 0.0076$$
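The comparison can be reproduced by computing the exact binomial probability alongside its Poisson approximation with $\mu = n\pi$. The Python sketch below (standard library only; `binomial_pmf` and `poisson_pmf` are hypothetical helper names) does this for the employee example.

```python
from math import comb, exp, factorial

def binomial_pmf(x, n, pi):
    """Exact binomial probability P(X = x)."""
    return comb(n, x) * pi ** x * (1 - pi) ** (n - x)

def poisson_pmf(x, mu):
    """Poisson probability P(X = x) with mean mu."""
    return mu ** x * exp(-mu) / factorial(x)

# 3 employees out of 20 with pi = 0.10; the Poisson uses mu = n * pi
n, pi, x = 20, 0.10, 3
exact = binomial_pmf(x, n, pi)
approx = poisson_pmf(x, n * pi)
print(round(exact, 4), round(approx, 4))    # 0.1901 0.1804

# Poisson value for n = 100 (mu = 10)
print(round(poisson_pmf(x, 100 * pi), 4))   # 0.0076
```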

THE HYPERGEOMETRIC DISTRIBUTION

If a sample is selected without replacement from a known finite population and contains a

relatively large proportion of the population, such that the probability of a success is measurably

altered from one selection to the other, the hypergeometric distribution should be used.

The Binomial distribution is appropriate only if the probability of a success remains constant for

each trial. This will occur if the sampling is done from an infinite (or very large) population.

However, if the population is rather small, or the sample contains a large portion of the

population, the probability of a success will vary between trials.

For example, suppose we have a group of 30 people, of whom 10 are female. The probability of choosing a female (success) on the first trial is 10/30. The probability of choosing a female on the second trial, without replacement, will be 9/29 if the first was a success or 10/29 if the first was a failure. Hence, the probability cannot remain constant. That is, for N = 30, 10/30 and 9/29 or 10/29 could be significantly different. As N increases, the difference becomes negligible.

Therefore, if the probability of a success is not constant, the hypergeometric distribution is given by

$$P(X = x) = \frac{{}_{r}C_{x} \cdot {}_{N-r}C_{n-x}}{{}_{N}C_{n}}$$

where N = population size,
n = sample size,
r = number in the population identified as a success,
x = number in the sample identified as a success.

Example

In a population of 10 racing horses, 4 are known to have a contagious disease. What is the probability that, out of 3 horses selected, 2 have the disease?

$$N = 10,\quad n = 3,\quad r = 4,\quad x = 2$$

$$P(X = 2) = \frac{{}_{4}C_{2} \cdot {}_{6}C_{1}}{{}_{10}C_{3}} = \frac{6 \times 6}{120} = 0.3$$
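The hypergeometric formula is a ratio of combinations, so it is straightforward to evaluate. The Python sketch below (standard library only; `hypergeometric_pmf` is a hypothetical helper name) reproduces the racing-horse answer and checks that the probabilities for x = 0, 1, 2, 3 sum to one.

```python
from math import comb

def hypergeometric_pmf(x, N, n, r):
    """P(X = x) = rCx * (N - r)C(n - x) / NCn.

    N: population size, n: sample size,
    r: successes in the population, x: successes in the sample.
    """
    return comb(r, x) * comb(N - r, n - x) / comb(N, n)

print(hypergeometric_pmf(2, N=10, n=3, r=4))   # 0.3
```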

Variance of the hypergeometric distribution

The variance is equal to the number of trials multiplied by the proportion of successes in the population, multiplied by the proportion of failures in the population, and multiplied again by a correction factor:

$$\sigma^2 = n\left(\frac{r}{N}\right)\left(\frac{N-r}{N}\right)\left(\frac{N-n}{N-1}\right)$$

This is similar to the variance of the binomial, since $r/N = \pi$ and $(N-r)/N = 1 - \pi$. The only difference is the last factor, $(N-n)/(N-1)$, known as the finite population correction factor.
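To see that the variance formula (including the correction factor) is right, it can be compared with the variance computed directly from the full distribution. The Python sketch below (standard library only; helper names are hypothetical) does this for the racing-horse example and also prints the uncorrected binomial value $n\pi(1-\pi)$ for contrast.

```python
from math import comb

def hypergeometric_pmf(x, N, n, r):
    """P(X = x) = rCx * (N - r)C(n - x) / NCn."""
    return comb(r, x) * comb(N - r, n - x) / comb(N, n)

def hypergeometric_variance(N, n, r):
    """n * (r/N) * ((N - r)/N) * ((N - n)/(N - 1))."""
    pi = r / N                  # proportion of successes in the population
    fpc = (N - n) / (N - 1)     # finite population correction factor
    return n * pi * (1 - pi) * fpc

N, n, r = 10, 3, 4              # racing-horse example
xs = range(min(n, r) + 1)       # possible numbers of successes in the sample

# Mean and variance computed directly from the probability distribution
mean = sum(x * hypergeometric_pmf(x, N, n, r) for x in xs)
var_direct = sum((x - mean) ** 2 * hypergeometric_pmf(x, N, n, r) for x in xs)

print(round(var_direct, 4), round(hypergeometric_variance(N, n, r), 4))  # 0.56 0.56
print(round(n * (r / N) * (1 - r / N), 4))   # binomial variance, no correction: 0.72
```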

HYPERGEOMETRIC VS BINOMIAL DISTRIBUTION

We have noted that the means and variances of the binomial and hypergeometric distributions are identical except for the finite population correction factor $(N-n)/(N-1)$. If, however, the sample size n is small relative to the population size N, then the finite population correction factor approaches 1 and can be ignored. As a rule of thumb, whenever $n \le 5\%$ of a finite population, or when the population size N approaches infinity, the finite population correction factor can be ignored and the binomial distribution can be used to approximate the hypergeometric distribution.

For example, N = 40, n = 2, r = 4. Since $n/N = 2/40 = 0.05$, we can use the binomial distribution with $\pi = r/N = 4/40 = 0.1$ and n = 2.
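How good is the approximation in this example? The Python sketch below (standard library only; helper names are hypothetical) prints the exact hypergeometric probability next to the binomial one with π = r/N for every possible x; for N = 40, n = 2, r = 4 the two agree to within 0.005 at each x.

```python
from math import comb

def hypergeometric_pmf(x, N, n, r):
    """Exact probability when sampling without replacement."""
    return comb(r, x) * comb(N - r, n - x) / comb(N, n)

def binomial_pmf(x, n, pi):
    """Binomial approximation with constant success probability pi."""
    return comb(n, x) * pi ** x * (1 - pi) ** (n - x)

N, n, r = 40, 2, 4
for x in range(n + 1):
    exact = hypergeometric_pmf(x, N, n, r)
    approx = binomial_pmf(x, n, r / N)
    print(x, round(exact, 4), round(approx, 4))
```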

THE EXPONENTIAL DISTRIBUTION

The Poisson distribution is a discrete probability distribution which measures the number of occurrences of some event over time or space, for example, the number of customers who might arrive during some given period. The exponential distribution measures the passage of time between those occurrences. Therefore, while the Poisson distribution describes arrival rates of units (people, trucks, telephone calls etc.) within some time period, the exponential distribution estimates the lapse of time between arrivals. It can measure the time lapse as:

i) The time that passes between two successive arrivals, or
ii) The amount of time that it takes to complete one action, for example, serving one customer, loading one truck or handling one call.

Exponential probability distributions are depicted by a steadily decreasing (decaying) curve, which shows that the larger the value of the random variable, as measured in units of elapsed time, the less likely it is to occur.

If the arrival process is Poisson distributed, then the lapse of time between arrivals is exponentially distributed.

Let μ be the mean number of arrivals in a given time period. Then the mean lapse of time between arrivals is $1/\mu$.

For example, if an average of 4 customers arrive at a bank in 1 hour (μ = 4), then on average one customer arrives every $1/\mu = 1/4 = 0.25$ hr.

Based on the relationship between Poisson and Exponential distributions, it is possible to

determine the probability that a specified time period will lapse given knowledge of the average

arrival rate.

The probability that no more than t units of time elapse between successive occurrences is

$$P(X \le t) = 1 - e^{-\mu t}$$

where μ is the mean rate of occurrence.

Worked examples

Trucks arrive at a loading dock at a mean rate of 1.5 per hour. What is the probability that no more than 2 hours will lapse between the arrivals of successive trucks?

$$P(X \le 2) = 1 - e^{-(1.5)(2)} = 1 - e^{-3} = 1 - 0.0498 = 0.9502$$

Hence, the probability that a second truck will arrive within 2 hours of the first one is 95.02%.

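Taxis arrive at the airport at the rate of 12 per hour. You have just landed at the airport and must get to town as fast as possible for a business deal. What is the probability that you will get a taxi in the next 5 minutes?

Here μ = 12 per hour and, similarly, t = 5 minutes = 1/12 hr, so

$$P\left(X \le \tfrac{1}{12}\right) = 1 - e^{-12\left(\frac{1}{12}\right)} = 1 - e^{-1} = 0.6321$$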
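Both exponential examples use the same formula, $1 - e^{-\mu t}$, with t expressed in the same time unit as the rate μ. The Python sketch below (standard library only; `prob_within` is a hypothetical helper name) reproduces the truck and taxi answers.

```python
from math import exp

def prob_within(t, mu):
    """P(X <= t) = 1 - e^(-mu * t): probability that no more than t units of
    time elapse between successive occurrences, where mu is the mean rate of
    occurrence per unit of time (t and mu must use the same time unit)."""
    return 1 - exp(-mu * t)

print(round(prob_within(2, 1.5), 4))      # trucks, t = 2 hours: 0.9502
print(round(prob_within(5 / 60, 12), 4))  # taxis, t = 5 minutes = 1/12 hour: 0.6321
```

Converting 5 minutes to hours before calling the function matters: passing `t=5` with a per-hour rate would treat it as 5 hours.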

NB: The mean of the hypergeometric distribution is

$$\mu = \frac{nr}{N}$$

and the standard deviation is

$$\sigma = \sqrt{n\left(\frac{r}{N}\right)\left(\frac{N-r}{N}\right)\left(\frac{N-n}{N-1}\right)}$$

Note.

The expected value of a discrete random variable is the weighted mean of all possible outcomes, in which the weights are the respective probabilities of those outcomes. It is the sum of the products of each possible outcome and its probability. That is,

$$\mu = E(X) = \sum x_i P(x_i)$$

Variance is the mean of the squared deviations from the mean (expected value):

$$\sigma^2 = \sum (x_i - \mu)^2 P(x_i)$$

SELF-TEST QUESTIONS

i. An investment firm employs 20 investment analysts. Every morning each analyst is assigned up to five stocks to evaluate. The assignments that were made on a certain day are:

| Outcome $x_i$ (No. of stocks) | Frequency of $x_i$ (No. of analysts) |
|---|---|
| 1 | 4 |
| 2 | 2 |
| 3 | 3 |
| 4 | 5 |
| 5 | 6 |

Determine the:

- Probability distribution
- Mean
- Variance

ii. Over the last 100 business days, Harry has had 20 customers on 20 of those days, 25 customers on 20 days, 35 customers on 30 days, 40 customers on 10 days and 45 customers on 10 days. How many customers might be expected today, and what is the variance in the number of customers?
