CONTINUOUS AND DISCRETE

SIGNALS AND SYSTEMS

SECOND EDITION

SAMIR S. SOLIMAN

QUALCOMM Incorporated

San Diego, California

MANDYAM D. SRINATH

Southern Methodist University

Dallas, Texas

Prentice-Hall of India Private Limited

New Delhi-110 001

CONTINUOUS AND DISCRETE SIGNALS AND SYSTEMS, 2nd Ed.

by Samir S. Soliman and Mandyam D. Srinath

Copyright by Prentice-Hall, Inc. (now known as Pearson Education, Inc.), One Lake Street, Upper Saddle River, New Jersey 07458, U.S.A. All rights reserved. No part of this book may be reproduced in any form, by mimeograph or any other means, without permission in writing from the publisher.


The author and publisher of this book have used their best efforts in preparing this book. These efforts include the development, research, and testing of the theories and programs to determine their effectiveness. The author and publisher make no warranty of any kind, expressed or implied, with regard to these programs or the documentation contained in this book. The author and publisher shall not be liable in any event for incidental or consequential damages in connection with, or arising out of, the furnishing, performance, or use of these programs.

tsBN€t-20&zu€

Published by Asoke K. Ghosh, Prentice-Hall of India Private Limited, M-97, Connaught Circus, New Delhi-110001 and Printed by V.K. Batra at Pearl Offset Press Private Limited, New Delhi-110015.

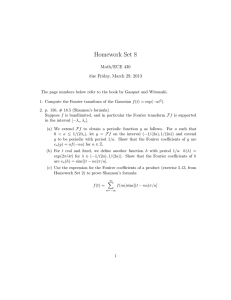

Contents

PREFACE

1 REPRESENTING SIGNALS

1.1 Introduction

1.2 Continuous-Time vs. Discrete-Time Signals

1.3 Periodic vs. Aperiodic Signals

1.4 Energy and Power Signals

1.5 Transformations of the Independent Variable

1.5.1 The Shifting Operation

1.5.2 The Reflection Operation

1.5.3 The Time-Scaling Operation

1.6 Elementary Signals

1.6.1 The Unit Step Function

1.6.2 The Ramp Function

1.6.3 The Sampling Function

1.6.4 The Unit Impulse Function

1.6.5 Derivatives of the Impulse Function

1.7 Other Types of Signals

1.8 Summary

1.9 Checklist of Important Terms

1.10 Problems

2 CONTINUOUS-TIME SYSTEMS

2.1 Introduction

2.2 Classification of Continuous-Time Systems

2.2.1 Linear and Nonlinear Systems

2.2.2 Time-Varying and Time-Invariant Systems

2.2.3 Systems with and without Memory

2.2.4 Causal Systems

2.2.5 Invertibility and Inverse Systems

2.2.6 Stable Systems

2.3 Linear Time-Invariant Systems

2.3.1 The Convolution Integral

2.3.2 Graphical Interpretation of Convolution

2.4 Properties of Linear, Time-Invariant Systems

2.4.1 Memoryless LTI Systems

2.4.2 Causal LTI Systems

2.4.3 Invertible LTI Systems

2.4.4 Stable LTI Systems

2.5 Systems Described by Differential Equations

2.5.1 Linear, Constant-Coefficient Differential Equations

2.5.2 Basic System Components

2.5.3 Simulation Diagrams for Continuous-Time Systems

2.5.4 Finding the Impulse Response

2.6 State-Variable Representation

2.6.1 State Equations

2.6.2 Time-Domain Solution of the State Equations

2.6.3 State Equations in First Canonical Form

2.6.4 State Equations in Second Canonical Form

2.6.5 Stability Considerations

2.7 Summary

2.8 Checklist of Important Terms

2.9 Problems

3 FOURIER SERIES

3.1 Introduction

3.2 Orthogonal Representations of Signals

3.3 The Exponential Fourier Series

3.4 Dirichlet Conditions

3.5 Properties of Fourier Series

3.5.1 Least Squares Approximation Property

3.5.2 Effects of Symmetry

3.5.3 Linearity

3.5.4 Product of Two Signals

3.5.5 Convolution of Two Signals

3.5.6 Parseval's Theorem

3.5.7 Shift in Time

3.5.8 Integration of Periodic Signals

3.6 Systems with Periodic Inputs

3.7 The Gibbs Phenomenon

3.8 Summary

3.9 Checklist of Important Terms

3.10 Problems

3.11 Computer Problems

4 THE FOURIER TRANSFORM

4.1 Introduction

4.2 The Continuous-Time Fourier Transform

4.2.1 Development of the Fourier Transform

4.2.2 Existence of the Fourier Transform

4.2.3 Examples of the Continuous-Time Fourier Transform

4.3 Properties of the Fourier Transform

4.3.1 Linearity

4.3.2 Symmetry

4.3.3 Time Shifting

4.3.4 Time Scaling

4.3.5 Differentiation

4.3.6 Energy of Aperiodic Signals

4.3.7 Convolution

4.3.8 Duality

4.3.9 Modulation

4.4 Applications of the Fourier Transform

4.4.1 Amplitude Modulation

4.4.2 Multiplexing

4.4.3 The Sampling Theorem

4.4.4 Signal Filtering

4.5 Duration-Bandwidth Relationships

4.5.1 Definitions of Duration and Bandwidth

4.5.2 The Uncertainty Principle

4.6 Summary

4.7 Checklist of Important Terms

4.8 Problems

5 THE LAPLACE TRANSFORM

5.1 Introduction

5.2 The Bilateral Laplace Transform

5.3 The Unilateral Laplace Transform

5.4 Bilateral Transforms Using Unilateral Transforms

5.5 Properties of the Unilateral Laplace Transform

5.5.1 Linearity

5.5.2 Time Shifting

5.5.3 Shifting in the s Domain

5.5.4 Time Scaling

5.5.5 Differentiation in the Time Domain

5.5.6 Integration in the Time Domain

5.5.7 Differentiation in the s Domain

5.5.8 Modulation

5.5.9 Convolution

5.5.10 Initial-Value Theorem

5.5.11 Final-Value Theorem

5.6 The Inverse Laplace Transform

5.7 Simulation Diagrams for Continuous-Time Systems

5.8 Applications of the Laplace Transform

5.8.1 Solution of Differential Equations

5.8.2 Application to RLC Circuit Analysis

5.8.3 Application to Control

5.9 State Equations and the Laplace Transform

5.10 Stability in the s Domain

5.11 Summary

5.12 Checklist of Important Terms

5.13 Problems

6 DISCRETE-TIME SYSTEMS

6.1 Introduction

6.1.1 Classification of Discrete-Time Signals

6.1.2 Transformations of the Independent Variable

6.2 Elementary Discrete-Time Signals

6.2.1 Discrete Impulse and Step Functions

6.2.2 Exponential Sequences

6.3 Discrete-Time Systems

6.4 Periodic Convolution

6.5 Difference-Equation Representation of Discrete-Time Systems

6.5.1 Homogeneous Solution of the Difference Equation

6.5.2 The Particular Solution

6.5.3 Determination of the Impulse Response

6.6 Simulation Diagrams for Discrete-Time Systems

6.7 State-Variable Representation of Discrete-Time Systems

6.7.1 Solution of State-Space Equations

6.7.2 Impulse Response of Systems Described by State Equations

6.8 Stability of Discrete-Time Systems

6.9 Summary

6.10 Checklist of Important Terms

6.11 Problems

7 FOURIER ANALYSIS OF DISCRETE-TIME SYSTEMS

7.1 Introduction

7.2 Fourier-Series Representation of Discrete-Time Periodic Signals

7.3 The Discrete-Time Fourier Transform

7.4 Properties of the Discrete-Time Fourier Transform

7.4.1 Periodicity

7.4.2 Linearity

7.4.3 Time and Frequency Shifting

7.4.4 Differentiation in Frequency

7.4.5 Convolution

7.4.6 Modulation

7.4.7 Fourier Transform of Discrete-Time Periodic Sequences

7.5 Fourier Transform of Sampled Continuous-Time Signals

7.5.1 Reconstruction of Sampled Signals

7.5.2 Sampling-Rate Conversion

7.5.3 A/D and D/A Conversion

7.6 Summary

7.7 Checklist of Important Terms

7.8 Problems

8 THE Z-TRANSFORM

8.1 Introduction

8.2 The Z-Transform

8.3 Convergence of the Z-Transform

8.4 Properties of the Z-Transform

8.4.1 Linearity

8.4.2 Time Shifting

8.4.3 Frequency Scaling

8.4.4 Differentiation with Respect to z

8.4.5 Initial Value

8.4.6 Final Value

8.4.7 Convolution

8.5 The Inverse Z-Transform

8.5.1 Inversion by a Power-Series Expansion

8.5.2 Inversion by Partial-Fraction Expansion

8.6 Z-Transfer Functions of Causal Discrete-Time Systems

8.7 Z-Transform Analysis of State-Variable Systems

8.8 Relation Between the Z-Transform and the Laplace Transform

8.9 Summary

8.10 Checklist of Important Terms

8.11 Problems

9 THE DISCRETE FOURIER TRANSFORM

9.1 Introduction

9.2 The Discrete Fourier Transform and Its Inverse

9.3 Properties of the DFT

9.3.1 Linearity

9.3.2 Time Shifting

9.3.3 Alternative Inversion Formula

9.3.4 Time Convolution

9.3.5 Relation to the Discrete-Time Fourier and Z-Transforms

9.3.6 Matrix Interpretation of the DFT

9.4 Linear Convolution Using the DFT

9.5 Fast Fourier Transforms

9.5.1 The Decimation-in-Time Algorithm

9.5.2 The Decimation-in-Frequency Algorithm

9.6 Spectral Estimation of Analog Signals Using the DFT

9.7 Summary

9.8 Checklist of Important Terms

9.9 Problems

10 DESIGN OF ANALOG AND DIGITAL FILTERS

10.1 Introduction

10.2 Frequency Transformations

10.3 Design of Analog Filters

10.3.1 The Butterworth Filter

10.3.2 The Chebyshev Filter

10.4 Digital Filters

10.4.1 Design of IIR Digital Filters Using Impulse Invariance

10.4.2 IIR Design Using the Bilinear Transformation

10.4.3 FIR Filter Design

10.4.4 Computer-Aided Design of Digital Filters

10.5 Summary

10.6 Checklist of Important Terms

10.7 Problems

APPENDIX A COMPLEX NUMBERS

A.1 Definition

A.2 Arithmetic Operations

A.2.1 Addition and Subtraction

A.2.2 Multiplication

A.2.3 Division

A.3 Powers and Roots of Complex Numbers

A.4 Inequalities

APPENDIX B MATHEMATICAL RELATIONS

B.1 Trigonometric Identities

B.2 Exponential and Logarithmic Functions

B.3 Special Functions

B.3.1 Gamma Functions

B.3.2 Incomplete Gamma Functions

B.3.3 Beta Functions

B.4 Power-Series Expansion

B.5 Sums of Powers of Natural Numbers

B.5.1 Sums of Binomial Coefficients

B.5.2 Series of Exponentials

B.6 Definite Integrals

B.7 Indefinite Integrals

APPENDIX C ELEMENTARY MATRIX THEORY

C.1 Basic Definition

C.2 Basic Operations

C.2.1 Matrix Addition

C.2.2 Differentiation and Integration

C.2.3 Matrix Multiplication

C.3 Special Matrices

C.4 The Inverse of a Matrix

C.5 Eigenvalues and Eigenvectors

C.6 Functions of a Matrix

APPENDIX D PARTIAL FRACTIONS

D.1 Case I: Nonrepeated Linear Factors

D.2 Case II: Repeated Linear Factors

D.3 Case III: Nonrepeated Irreducible Second-Degree Factors

D.4 Case IV: Repeated Irreducible Second-Degree Factors

BIBLIOGRAPHY

INDEX

Preface

The second edition of Continuous and Discrete Signals and Systems is a modified version of the first edition based on our experience in using it as a textbook in the introductory course on signals and systems at Southern Methodist University, as well as the comments of numerous colleagues who have used the book at other universities. The result, we hope, is a book that provides an introductory, but comprehensive treatment of the subject of continuous and discrete-time signals and systems. One change that we have made to enhance the quality of the book is to move the section on orthogonal representations of signals from Chapter 1 to the beginning of Chapter 3 on Fourier series, which permits us to treat Fourier series as a special case of more general representations. Other features are the addition of sections on practical reconstruction filters, sampling-rate conversion, and A/D and D/A converters to Chapter 7. We have also added several problems in various chapters, emphasizing computer usage. However, we have not suggested or required the use of any specific mathematical software packages, as we feel that this choice should be left to the preference of the instructor. Overall, about a third of the problems and about a fifth of the examples in the book have been changed.

As noted in the first edition, the aim of building complex systems that perform sophisticated tasks imposes on engineering students a need to enhance their knowledge of signals and systems, so that they are able to use effectively the rich variety of analysis and synthesis techniques that are available. Thus signals and systems is a core course in the Electrical Engineering curriculum in most schools. In writing this book we have tried to present the most widely used techniques of signal and system analysis in an appropriate fashion for instruction at the junior or senior level in electrical engineering. The concepts and techniques that form the core of the book are of fundamental importance and should prove useful also to engineers wishing to update or extend their understanding of signals and systems through self-study.

The book is divided into two major parts. In the first part, a comprehensive treatment of continuous-time signals and systems is presented. In the second part, the results are extended to discrete-time signals and systems. In our experience, we have found that covering both continuous-time and discrete-time systems together frequently confuses students, and they often are not clear as to whether a particular concept or technique applies to continuous-time or discrete-time systems, or both. The result is that they often use solution techniques that simply do not apply to particular problems. Since most students are familiar with continuous-time signals and systems in the basic courses leading up to this course, they are able to follow the development of the theory and analysis of continuous-time systems without difficulty. Once they have become familiar with this material, which is covered in the first five chapters, students should be ready to handle discrete-time signals and systems.

The book is organized such that all the chapters are distinct but closely related with smooth transitions between chapters, thereby providing considerable flexibility in course design. By appropriate choice of material, the book can be used as a text in several courses such as transform theory (Chapters 1, 3, 4, 5, 7, and 8), continuous-time signals and systems (Chapters 1, 2, 3, 4, and 5), discrete-time signals and systems (Chapters 6, 7, 8, and 9), and signals and systems: continuous and discrete (Chapters 1, 2, 3, 4, 6, 7, and 8). We have been using the book at Southern Methodist University for a one-semester course covering both continuous-time and discrete-time systems and it has proved successful.

Normally, a signals and systems course is taught in the third year of a four-year undergraduate curriculum. Although the book is designed to be self-contained, a knowledge of calculus through integration of trigonometric functions, as well as some knowledge of differential equations, is presumed. A prior exposure to matrix algebra as well as a course in circuit analysis is preferable but not necessary. These prerequisite skills should be mastered by all electrical engineering students by their junior year. No prior experience with system analysis is required. While we use mathematics extensively, we have done so, not rigorously, but in an engineering context. We use examples extensively to illustrate the theoretical material in an intuitive manner.

As with all subjects involving problem solving, we feel that it is imperative that a student sees many solved problems related to the material covered. We have included a large number of examples that are worked out in detail to illustrate concepts and to show the student the application of the theory developed in the text. In order to make the student aware of the wide range of applications of the principles that are covered, applications with practical significance are mentioned. These applications are selected to illustrate key concepts, stimulate interest, and bring out connections with other branches of electrical engineering.

It is well recognized that the student does not fully understand a subject of this nature unless he or she is given the opportunity to work out problems in using and applying the basic tools that are developed in each chapter. This not only reinforces the understanding of the subject matter, but, in some cases, allows for the extension of various concepts discussed in the text. In certain cases, even new material is introduced via the problem sets. Consequently, over 260 end-of-chapter problems have been included. These problems are of various types, some being straightforward applications of the basic ideas presented in the chapters, and are included to ensure that the student understands the material fully. Some are moderately difficult, and other problems require that the student apply the theory he or she learned in the chapter to problems of practical importance.

The relative amount of "Design" work in various courses is always a concern for the engineering faculty. The inclusion in this text of analog- and digital-filter design as well as other design-related material is in direct response to that concern.

At the end of each chapter, we have included an item-by-item summary of all the important concepts and formulas covered in that chapter as well as a checklist of all important terms discussed. This list serves as a reminder to the student of material that deserves special attention.

Throughout the book, the emphasis is on linear time-invariant systems. The focus in Chapter 1 is on signals. This material, which is basic to the remainder of the book, considers the mathematical representation of signals. In this chapter, we cover a variety of subjects such as periodic signals, energy and power signals, transformations of the independent variable, and elementary signals.

Chapter 2 is devoted to the time-domain characterization of continuous-time (CT) linear time-invariant (LTIV) systems. The chapter starts with the classification of continuous-time systems and then introduces the impulse-response characterization of LTIV systems and the convolution integral. This is followed by a discussion of systems characterized by linear constant-coefficient differential equations. Simulation diagrams for such systems are presented and used as a stepping stone to introduce the state-variable concept. The chapter concludes with a discussion of stability.

To this point the focus is on the time-domain description of signals and systems. Starting with Chapter 3, we consider frequency-domain descriptions. We begin the chapter with a consideration of the orthogonal representation of arbitrary signals. The Fourier series are then introduced as a special case of the orthogonal representation for periodic signals. Properties of the Fourier series are presented. The concept of line spectra for describing the frequency content of such signals is given. The response of linear systems to periodic inputs is discussed. The chapter concludes with a discussion of the Gibbs phenomenon.

Chapter 4 begins with the development of the Fourier transform. Conditions under which the Fourier transform exists are presented and its properties discussed. Applications of the Fourier transform in areas such as amplitude modulation, multiplexing, sampling, and signal filtering are considered. The use of the transfer function in determining the response of LTIV systems is discussed. The Nyquist sampling theorem is derived from the impulse-modulation model for sampling. The several definitions of bandwidth are introduced and duration-bandwidth relationships discussed.

Chapter 5 deals with the Laplace transform. Both unilateral and bilateral Laplace transforms are defined. Properties of the Laplace transform are derived and examples are given to demonstrate how these properties are used to evaluate new Laplace transform pairs or to find the inverse Laplace transform. The concept of the transfer function is introduced and other applications of the Laplace transform such as for the solution of differential equations, circuit analysis, and control systems are presented. The state-variable representation of systems in the frequency domain and the solution of the state equations using Laplace transforms are discussed.

The treatment of continuous-time signals and systems ends with Chapter 5, and a course emphasizing only CT material can be ended at this point. By the end of this chapter, the reader should have acquired a good understanding of continuous-time signals and systems and should be ready for the second half of the book, in which discrete-time signals and systems analysis are covered.

We start our consideration of discrete-time systems in Chapter 6 with a discussion of elementary discrete-time signals. The impulse-response characterization of discrete-time systems is presented and the convolution sum for determining the response to arbitrary inputs is derived. The difference equation representation of discrete-time systems and their solution is given. As in CT systems, simulation diagrams are discussed as a means of obtaining the state-variable representation of discrete-time systems.

Chapter 7 considers the Fourier analysis of discrete-time signals. The Fourier series for periodic sequences and the Fourier transform for arbitrary signals are derived. The similarities and differences between these and their continuous-time counterparts are brought out and their properties and applications discussed. The relation between the continuous-time and discrete-time Fourier transforms of sampled analog signals is derived and used to obtain the impulse-modulation model for sampling that is considered in Chapter 4. Reconstruction of sampled analog signals using practical reconstruction devices such as the zero-order hold is considered. Sampling-rate conversion by decimation and interpolation of sampled signals is discussed. The chapter concludes with a brief description of A/D and D/A conversion.

Chapter 8 discusses the Z-transform of discrete-time signals. The development follows closely that of Chapter 5 for the Laplace transform. Properties of the Z-transform are derived and their application in the analysis of discrete-time systems developed. The solution of difference equations and the analysis of state-variable systems using the Z-transform are also discussed. Finally, the relation between the Laplace and the Z-transforms of sampled signals is derived and the mapping of the s-plane into the z-plane is discussed.

Chapter 9 introduces the discrete Fourier transform (DFT) for analyzing finite-length sequences. The properties of the DFT are derived and the differences with the other transforms discussed in the book are noted. The interpretation of the DFT as a matrix operation on a data vector is used to briefly note its relation to other orthogonal transforms. The application of the DFT to linear system analysis and to spectral estimation of analog signals is discussed. Two popular fast Fourier transform (FFT) algorithms for the efficient computation of the DFT are presented.

The final chapter, Chapter 10, considers some techniques for the design of analog and digital filters. Techniques for the design of two low-pass analog filters, namely, the Butterworth and the Chebyshev filters, are given. The impulse invariance and bilinear techniques for designing digital IIR filters are derived. Design of FIR digital filters using window functions is also discussed. An example to illustrate the application of FIR filters to approximate nonconventional filters is presented. The chapter concludes with a very brief overview of computer-aided techniques.

In addition, four appendices are included. They should prove useful as a readily available source for some of the background material in complex variables and matrix algebra necessary for the course. A somewhat extensive list of frequently used formulas is also included.

We wish to acknowledge the many people who have helped us in writing this book, especially the students on whom much of this material was classroom tested, and the reviewers whose comments were very useful. We have tried to incorporate most of their comments in preparing this second edition of the book. We wish to thank Dyan Muratalla, who typed a substantial part of the manuscript. Finally, we would like to thank our wives and families for their patience during the completion of this book.

S. Soliman

M.D. Srinath

Chapter 1

Representing Signals

1.1 INTRODUCTION

Signals are detectable physical quantities or variables by means of which messages or information can be transmitted. A wide variety of signals are of practical importance in describing physical phenomena. Examples include the human voice, television pictures, teletype data, and atmospheric temperature. Electrical signals are the most easily measured and the most simply represented type of signals. Therefore, many engineers prefer to transform physical variables to electrical signals. For example, many physical quantities, such as temperature, humidity, speech, wind speed, and light intensity, can be transformed, using transducers, to time-varying current or voltage signals. Electrical engineers deal with signals that have a broad range of shapes, amplitudes, durations, and perhaps other physical properties. For example, a radar-system designer analyzes high-energy microwave pulses, a communication-system engineer who is concerned with signal detection and signal design analyzes information-carrying signals, a power engineer deals with high-voltage signals, and a computer engineer deals with millions of pulses per second.

Mathematically, signals are represented as functions of one or more independent variables. For example, time-varying current or voltage signals are functions of one variable (time), the vibration of a rectangular membrane can be represented as a function of two spatial variables ($x$ and $y$ coordinates), the electrical field intensity can be looked upon as a function of two variables (time and space), and finally, an image signal can be regarded as a function of two variables ($x$ and $y$ coordinates). In this introductory course of signals and systems, we focus attention on signals involving one independent variable, which we take to be time, although it can be different in some specific applications.

We begin this chapter with an introduction to two classes of signals that we are concerned with throughout the text, namely, continuous-time and discrete-time signals. Then, in Section 1.3, we define periodic signals. Section 1.4 deals with the issue of power and energy signals. A number of transformations of the independent variable are discussed in Section 1.5. In Section 1.6, we introduce several important elementary signals that not only occur frequently in applications, but also serve as a basis for representing other signals. Other types of signals that are of importance to engineers are mentioned in Section 1.7.

1.2 CONTINUOUS-TIME VS. DISCRETE-TIME SIGNALS

One way to classify signals is according to the nature of the independent variable. If the independent variable is continuous, the corresponding signal is called a continuous-time signal and is defined for a continuum of values of the independent variable. A telephone or radio signal as a function of time and an atmospheric pressure as a function of altitude are examples of continuous-time signals. (See Figure 1.2.1.)

Corresponding to any instant $t_1$ and an infinitesimally small positive real number $\epsilon$, let us denote the instants $t_1 - \epsilon$ and $t_1 + \epsilon$ by $t_1^-$ and $t_1^+$, respectively. If $x(t_1^-) = x(t_1) = x(t_1^+)$, we say that $x(t)$ is continuous at $t = t_1$. Otherwise it is discontinuous at $t_1$, and the amplitude of signal $x(t)$ has a jump at that point. Signal $x(t)$ is said to be continuous if it is continuous for all $t$. A signal that has only a finite or a countably infinite number of discontinuities is said to be piecewise continuous if the jump in amplitude at each discontinuity is finite.

There are many continuous-time signals of interest that are not continuous. An example is the rectangular pulse function $\operatorname{rect}(t/\tau)$ (see Figure 1.2.2), which is defined as

$$\operatorname{rect}(t/\tau) = \begin{cases} 1, & |t| < \tau/2 \\ 0, & |t| > \tau/2 \end{cases} \tag{1.2.1}$$

Figure 1.2.1 Examples of continuous-time signals.

Figure 1.2.2 A rectangular pulse signal.

Figure 1.2.3 A pulse train.

This signal is piecewise continuous, since it is continuous everywhere except at $t = \pm\tau/2$ and the magnitude of the jump at these points is 1. Another example is the pulse train shown in Figure 1.2.3. This signal is continuous at all $t$ except $t = 0, \pm 1, \pm 2, \ldots$

At a point of discontinuity $t_1$, the value of the signal $x(t)$ is usually considered to be undefined. However, in order to be able to consider both continuous and piecewise continuous signals in a similar manner, we will assign the value

$$x(t_1) = \tfrac{1}{2}\left[x(t_1^+) + x(t_1^-)\right] \tag{1.2.2}$$

to $x(t)$ at the point of discontinuity $t = t_1$.
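As a rough illustration of Equations (1.2.1) and (1.2.2), the sketch below evaluates the rectangular pulse and assigns the midpoint value at its two jumps. The function names `rect` and `midpoint_value`, the default width, and the finite offset `eps` that stands in for the infinitesimal $\epsilon$ are assumptions made for this example, not notation from the text.

```python
def rect(t: float, tau: float = 1.0) -> float:
    """Rectangular pulse rect(t/tau) of width tau, centred at t = 0."""
    if tau <= 0:
        raise ValueError("tau must be positive")
    half_width = tau / 2.0
    if abs(t) < half_width:
        return 1.0          # inside the pulse
    if abs(t) > half_width:
        return 0.0          # outside the pulse
    # Exactly on a jump: average of the left and right limits, Eq. (1.2.2).
    return 0.5


def midpoint_value(x, t1: float, eps: float = 1e-9) -> float:
    """Approximate 0.5 * [x(t1+) + x(t1-)] using a small finite offset eps."""
    return 0.5 * (x(t1 + eps) + x(t1 - eps))


if __name__ == "__main__":
    tau = 2.0
    for t in (-1.5, -1.0, 0.0, 1.0, 1.5):
        print(t, rect(t, tau))
    # Numerical check of the midpoint convention at the jump t = tau/2.
    print(midpoint_value(lambda t: rect(t, tau), tau / 2.0))
```

With `tau = 2.0` the jumps sit at $t = \pm 1$, where the function returns 0.5, which matches the numerical average of the one-sided values.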

If the independent variable takes on only discrete values $t = kT_s$, where $T_s$ is a fixed positive real number and $k$ ranges over the set of integers (i.e., $k = 0, \pm 1, \pm 2$, etc.), the corresponding signal $x(kT_s)$ is called a discrete-time signal. Discrete-time signals arise naturally in many areas of business, economics, science, and engineering. Examples are the amount of a loan payment in the $k$th month, the weekly Dow Jones stock index, and the output of an information source that produces one of the digits $1, 2, \ldots, M$ every $T_s$ seconds. We consider discrete-time signals in more detail in Chapter 6.
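The relationship between a continuous-time signal and the discrete-time signal $x(kT_s)$ can be sketched in a few lines. The helper `sample`, its parameter names, and the cosine test signal are hypothetical choices for illustration only.

```python
import math


def sample(x, Ts: float, k_min: int, k_max: int) -> dict:
    """Return {k: x(k * Ts)} for the integers k_min <= k <= k_max."""
    if Ts <= 0:
        raise ValueError("Ts must be a positive real number")
    return {k: x(k * Ts) for k in range(k_min, k_max + 1)}


# Assumed test signal: a cosine with a period of one second,
# sampled every quarter of a second.
samples = sample(lambda t: math.cos(2.0 * math.pi * t), 0.25, -2, 2)

if __name__ == "__main__":
    for k, value in samples.items():
        print(k, round(value, 6))
```

The dictionary is indexed by the integer $k$ rather than by time, which mirrors the way a discrete-time signal is defined only at the instants $t = kT_s$.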

1.3 PERIODIC VS. APERIODIC SIGNALS

Any continuous-time signal that satisfies the condition

$$x(t) = x(t + nT), \quad n = 1, 2, 3, \ldots \tag{1.3.1}$$

where $T > 0$ is a constant known as the fundamental period, is classified as a periodic signal. A signal $x(t)$ that is not periodic is referred to as an aperiodic signal. Familiar examples of periodic signals are the sinusoidal functions. A real-valued sinusoidal signal can be expressed mathematically by a time-varying function of the form

$$x(t) = A \sin(\omega_0 t + \phi) \tag{1.3.2}$$

where

$A$ = amplitude

$\omega_0$ = radian frequency in rad/s

$\phi$ = initial phase angle with respect to the time origin in rad

This sinusoidal signal is periodic with fundamental period $T = 2\pi/\omega_0$ for all values of $\omega_0$.
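A quick numerical check of the periodicity claim for Equation (1.3.2) might look like the following. The function names and the particular values of $A$, $\omega_0$, and $\phi$ are assumptions chosen for the example, and $\omega_0$ is taken to be nonzero.

```python
import math


def sinusoid(t: float, A: float, omega0: float, phi: float) -> float:
    """Evaluate x(t) = A sin(omega0 * t + phi), Eq. (1.3.2)."""
    return A * math.sin(omega0 * t + phi)


def fundamental_period(omega0: float) -> float:
    """Fundamental period T = 2*pi/omega0 of the sinusoid (omega0 != 0)."""
    if omega0 == 0:
        raise ValueError("omega0 must be nonzero")
    return 2.0 * math.pi / abs(omega0)


if __name__ == "__main__":
    A, omega0, phi = 2.0, 3.0, 0.4   # arbitrary illustrative values
    T = fundamental_period(omega0)
    for t in (0.0, 0.7, 1.9):
        # x(t) and x(t + T) should agree up to rounding error.
        print(t, sinusoid(t, A, omega0, phi), sinusoid(t + T, A, omega0, phi))
```

Shifting by half of `T` instead flips the sign of the sinusoid, which is one way to see that no shorter positive shift reproduces the signal.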

The sinusoidal time function described in Equation (1.3.2) is usually referred to as a sine wave. Examples of physical phenomena that approximately produce sinusoidal signals are the voltage output of an electrical alternator and the vertical displacement of a mass attached to a spring under the assumption that the spring has negligible mass and no damping. The pulse train shown in Figure 1.2.3 is another example of a periodic signal, with fundamental period $T = 2$. Notice that if $x(t)$ is periodic with fundamental period $T$, then $x(t)$ is also periodic with period $2T, 3T, 4T, \ldots$ The fundamental frequency in radians (radian frequency) of the periodic signal $x(t)$ is related to the fundamental period by the relationship

$$\omega_0 = \frac{2\pi}{T} \tag{1.3.3}$$

Engineers and most mathematicians refer to the sinusoidal signal with radian frequency $\omega_k = k\omega_0$ as the $k$th harmonic. For example, the signal shown in Figure 1.2.3 has a fundamental radian frequency $\omega_0 = \pi$, a second harmonic radian frequency $\omega_2 = 2\pi$, and a third harmonic radian frequency $\omega_3 = 3\pi$. Figure 1.3.1 shows the first, second, and third harmonics of signal $x(t)$ in Eq. (1.3.2) for specific values of $A$, $\omega_0$, and $\phi$. Note that the waveforms corresponding to each harmonic are distinct. In theory, we can associate an infinite number of distinct harmonic signals with a given sinusoidal waveform.

Figure 1.3.1 Harmonically related sinusoids.

Periodic signals occur frequently in physical problems. In this section, we discuss the mathematical representation of such signals. In Chapter 3, we show how to represent any periodic signal in terms of simple ones, such as sine and cosine.

Example 1.3.1

Harmonically related continuous-time exponentials are sets of complex exponentials with fundamental frequencies that are all multiples of a single positive frequency $\omega_0$. Mathematically,

$$\phi_k(t) = \exp[jk\omega_0 t], \quad k = 0, \pm 1, \pm 2, \ldots \tag{1.3.4}$$

We show that for $k \neq 0$, $\phi_k(t)$ is periodic with fundamental period $2\pi/|k\omega_0|$ or fundamental frequency $|k\omega_0|$.

In order for signal $\phi_k(t)$ to be periodic with period $T > 0$, we must have

$$\exp[jk\omega_0(t + T)] = \exp[jk\omega_0 t]$$

or, equivalently,

$$T = \frac{2\pi}{|k\omega_0|} \tag{1.3.5}$$

Note that since a signal that is periodic with period $T$ is also periodic with period $lT$ for any positive integer $l$, all signals $\phi_k(t)$ have a common period of $2\pi/\omega_0$.

The sum of two periodic signals may or may not be periodic. Consider the two periodic signals $x(t)$ and $y(t)$ with fundamental periods $T_1$ and $T_2$, respectively. We investigate under what conditions the sum

$$z(t) = a x(t) + b y(t)$$

is periodic and what the fundamental period of this signal is if the signal is periodic.

Since $x(t)$ is periodic with period $T_1$, it follows that

$$x(t) = x(t + kT_1)$$

Similarly,

$$y(t) = y(t + lT_2)$$

where $k$ and $l$ are integers such that

$$z(t) = a x(t + kT_1) + b y(t + lT_2)$$

In order for $z(t)$ to be periodic with period $T$, one needs

$$a x(t + T) + b y(t + T) = a x(t + kT_1) + b y(t + lT_2)$$

We therefore must have

$$T = kT_1 = lT_2$$

or, equivalently,

$$\frac{T_1}{T_2} = \frac{l}{k}$$

In other words, the sum of two periodic signals is periodic only if the ratio of their respective periods can be expressed as a rational number.
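The condition $T = kT_1 = lT_2$ can be checked mechanically when the two periods are known exactly. The following is a minimal sketch, not part of the original text: the helper name `common_period` is hypothetical, and the periods are assumed to be given as exact rational numbers so that the ratio $T_1/T_2$ is rational by construction.

```python
from fractions import Fraction


def common_period(t1: Fraction, t2: Fraction) -> Fraction:
    """Smallest T > 0 with T = k*t1 = l*t2 for positive integers k and l.

    Assumes both periods are exact rationals, so t1/t2 = l/k is rational.
    """
    ratio = t1 / t2                      # Fraction keeps l/k in lowest terms
    k = ratio.denominator                # ratio.numerator plays the role of l
    return k * t1                        # equals l * t2


# Illustrative periods only: 15/13 and 15/7.
T = common_period(Fraction(15, 13), Fraction(15, 7))
print(T)
```

Because `Fraction` reduces $l/k$ to lowest terms, the returned value is the smallest period shared by the two components. When one period is an irrational multiple of the other (for example 3 and $2\pi/3$), no such integers exist and the sum is not periodic.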

Example 1.3.2

We wish to determine which of the following signals are periodic.

(a) $x_1(t) = \sin \frac{2\pi}{3} t$

(b) $x_2(t) = \sin \frac{2\pi}{5} t \, \cos \frac{4\pi}{3} t$

(c) $x_3(t) = \sin 3t$

(d) $x_4(t) = x_1(t) - 2x_3(t)$

For these signals, $x_1(t)$ is periodic with period $T_1 = 3$. We write $x_2(t)$ as the sum of two sinusoids with periods $T_{21} = 15/13$ and $T_{22} = 15/7$. Since $13T_{21} = 7T_{22}$, it follows that $x_2(t)$ is periodic with period $T_2 = 15$. $x_3(t)$ is periodic with period $T_3 = 2\pi/3$. Since we cannot find integers $k$ and $l$ such that $kT_1 = lT_3$, it follows that $x_4(t)$ is not periodic.

Note that if $x(t)$ and $y(t)$ have the same period $T$, then $z(t) = x(t) + y(t)$ is periodic with period $T$; i.e., linear operations (addition in this case) do not affect the periodicity of the resulting signal. Nonlinear operations on periodic signals (such as multiplication) produce periodic signals with different fundamental periods. The following example demonstrates this fact.

Example 1.3.3

Let $x(t) = \cos \omega_1 t$ and $y(t) = \cos \omega_2 t$. Consider the signal $z(t) = x(t)y(t)$. Signal $x(t)$ is periodic with period $2\pi/\omega_1$, and signal $y(t)$ is periodic with period $2\pi/\omega_2$. The fact that $z(t) = x(t)y(t)$ has two components, one with radian frequency $\omega_2 - \omega_1$ and the other with radian frequency $\omega_2 + \omega_1$, can be seen by rewriting the product $x(t)y(t)$ as

$$\cos \omega_1 t \, \cos \omega_2 t = \frac{1}{2}\left[\cos(\omega_2 - \omega_1)t + \cos(\omega_2 + \omega_1)t\right]$$

If $\omega_1 = \omega_2 = \omega$, then $z(t)$ will have a constant term ($1/2$) and a second-harmonic term ($\frac{1}{2}\cos 2\omega t$). In general, nonlinear operations on periodic signals can produce higher order harmonics.

Since a periodic signal is a signal of infinite duration that should start at $t = -\infty$ and go on to $t = \infty$, it follows that all practical signals are aperiodic. Nevertheless, the study of the system response to periodic inputs is essential (as we shall see in Chapter 4) in the process of developing the system response to all practical inputs.

1.4 ENERGY AND POWER SIGNALS

Let $x(t)$ be a real-valued signal. If $x(t)$ represents the voltage across a resistance $R$, it produces a current $i(t) = x(t)/R$. The instantaneous power of the signal is $R\,i^2(t) = x^2(t)/R$, and the energy expended during the incremental interval $dt$ is $[x^2(t)/R]\,dt$. In general, we do not know whether $x(t)$ is a voltage or a current signal, and in order to normalize power, we assume that $R = 1$ ohm. Hence, the instantaneous power associated with signal $x(t)$ is $x^2(t)$. The signal energy over a time interval of length $2L$ is defined as

$$E_{2L} = \int_{-L}^{L} |x(t)|^2 \, dt \tag{1.4.1}$$

and the total energy in the signal over the range $t \in (-\infty, \infty)$ can be defined as

$$E = \lim_{L \to \infty} \int_{-L}^{L} |x(t)|^2 \, dt \tag{1.4.2}$$

The average power can then be defined as

$$P = \lim_{L \to \infty} \left[ \frac{1}{2L} \int_{-L}^{L} |x(t)|^2 \, dt \right] \tag{1.4.3}$$

Although we have used electrical signals to develop Equations (1.4.2) and (1.4.3), these equations define the energy and power, respectively, of any arbitrary signal $x(t)$.

When the limit in Equation (1.4.2) exists and yields $0 < E < \infty$, signal $x(t)$ is said to be an energy signal. Inspection of Equation (1.4.3) reveals that energy signals have zero power. On the other hand, if the limit in Equation (1.4.3) exists and yields $0 < P < \infty$, then $x(t)$ is a power signal. Power signals have infinite energy.

As stated earlier, periodic signals are assumed to exist for all time from $-\infty$ to $+\infty$ and, therefore, have infinite energy. If it happens that these periodic signals have finite average power (which they do in most cases), then they are power signals. In contrast, bounded finite-duration signals are energy signals.

Example 1.4.1

In this example, we show that for a periodic signal with period $T$, the average power is

$$P = \frac{1}{T} \int_0^T |x(t)|^2 \, dt \tag{1.4.4}$$

If $x(t)$ is periodic with period $T$, then the integral in Equation (1.4.3) is the same over any interval of length $T$. Allowing the limit to be taken in a manner such that $2L$ is an integral multiple of the period (i.e., $2L = mT$), we find that the total energy of $x(t)$ over an interval of length $2L$ is $m$ times the energy over one period. The average power is then

$$P = \lim_{m \to \infty} \frac{1}{mT} \, m \int_0^T |x(t)|^2 \, dt = \frac{1}{T} \int_0^T |x(t)|^2 \, dt$$

Figure 1.4.1 Signals for Example 1.4.2: (a) $x_1(t)$; (b) $x_2(t)$.

Example 1.4.2

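Consider the signals in Figure 1.4.1. We wish to determine whether these signals are energy or power signals. The signal in Figure 1.4.1(a) is aperiodic with total energy

$$E = \int_0^{\infty} A^2 \exp[-2t] \, dt = \frac{A^2}{2}$$

which is finite. Therefore this signal is an energy signal with energy $A^2/2$. The average power is

$$P = \lim_{L \to \infty} \frac{1}{2L} \int_0^L A^2 \exp[-2t] \, dt = \lim_{L \to \infty} \frac{A^2 (1 - \exp[-2L])}{4L} = 0$$

and is zero as expected.

The energy in the signal in Figure 1.4.1(b) is found as

$$E = \lim_{L \to \infty} \left[ \int_{-L}^{0} A^2 \, dt + \int_0^L A^2 \exp[-2t] \, dt \right] = \lim_{L \to \infty} \left[ A^2 L + \frac{A^2}{2}\left(1 - \exp[-2L]\right) \right]$$

which is clearly unbounded. Thus this signal is not an energy signal. Its power can be found as

$$P = \lim_{L \to \infty} \frac{1}{2L} \left[ \int_{-L}^{0} A^2 \, dt + \int_0^L A^2 \exp[-2t] \, dt \right] = \frac{A^2}{2}$$

so that this is a power signal with average power $A^2/2$.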

Example 1.4.3

Consider the sinusoidal signal

$$x(t) = A \sin(\omega_0 t + \phi)$$

This signal is periodic with period

$$T = \frac{2\pi}{\omega_0}$$

The average power of the signal is

$$P = \frac{1}{T} \int_0^T A^2 \sin^2(\omega_0 t + \phi) \, dt = \frac{A^2}{T} \int_0^T \left[ \frac{1}{2} - \frac{1}{2} \cos(2\omega_0 t + 2\phi) \right] dt = \frac{A^2}{2}$$

The last step follows because the signal $\cos(2\omega_0 t + 2\phi)$ is periodic with period $T/2$ and the area under a cosine signal over any interval of length $lT$, where $l$ is a positive integer, is always zero. (You should have no trouble confirming this result if you draw two complete periods of $\cos(2\omega_0 t + 2\phi)$.)
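The result $P = A^2/2$ can also be confirmed numerically by averaging $x^2(t)$ over one period. The sketch below is illustrative only: the helper `average_power` and the values of `A`, `w0`, and `phi` are assumptions chosen for the demonstration, and the integral is approximated with a simple midpoint rule.

```python
import math


def average_power(x, period, n=100_000):
    """Approximate (1/T) * integral over one period of x(t)^2 (midpoint rule)."""
    dt = period / n
    total = sum(x((i + 0.5) * dt) ** 2 for i in range(n))
    return total * dt / period


A, w0, phi = 3.0, 2.0 * math.pi * 5.0, 0.7   # illustrative values only
T = 2.0 * math.pi / w0
P = average_power(lambda t: A * math.sin(w0 * t + phi), T)
print(P, A ** 2 / 2)
```

Changing `phi` leaves the computed power unchanged, which matches the observation that the cosine term integrates to zero over a full period regardless of phase.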

Example 1.4.4

Consider the two aperiodic signals shown in Figure 1.4.2. These two signals are examples of energy signals. The rectangular pulse shown in Figure 1.4.2(a) is strictly time limited, since $x_1(t)$ is identically zero outside the duration of the pulse. The other signal is asymptotically time limited in the sense that $x_2(t) \to 0$ as $t \to \pm\infty$. Such signals may also be described loosely as "pulses." In either case, the average power equals zero. The energy for signal $x_1(t)$ is

$$E_1 = \lim_{L \to \infty} \int_{-L}^{L} x_1^2(t) \, dt = \int_{-\tau/2}^{\tau/2} A^2 \, dt = A^2 \tau$$

For $x_2(t)$,

$$E_2 = \lim_{L \to \infty} \int_{-L}^{L} A^2 \exp[-2a|t|] \, dt = \lim_{L \to \infty} \frac{A^2}{a}\left(1 - \exp[-2aL]\right) = \frac{A^2}{a}$$

Figure 1.4.2 Signals for Example 1.4.4: (a) $x_1(t)$, a rectangular pulse of amplitude $A$ on $-\tau/2 \le t \le \tau/2$; (b) $x_2(t) = A \exp[-a|t|]$.

Since $E_1$ and $E_2$ are finite, $x_1(t)$ and $x_2(t)$ are energy signals. Almost all time-limited signals of practical interest are energy signals.

1.5 TRANSFORMATIONS OF THE INDEPENDENT VARIABLE

A number of important operations are often performed on signals. Most of these operations involve transformations of the independent variable. It is important that the reader know how to perform such operations and understand the physical meaning of each one. The three operations we discuss in this section are shifting, reflecting, and time scaling.

1.5.1 The Shifting Operation

Signal $x(t - t_0)$ represents a time-shifted version of $x(t)$; see Figure 1.5.1. The shift in time is $t_0$. If $t_0 > 0$, then the signal is delayed by $t_0$ seconds. Physically, $t_0$ cannot take on negative values, but from the analytical viewpoint, $x(t - t_0)$, $t_0 < 0$, represents an advanced replica of $x(t)$. Signals that are related in this fashion arise in applications such as radar, sonar, communication systems, and seismic signal processing.

Figure 1.5.1 The shifting operation.

Example 1.5.1

Consider the signal $x(t)$ shown in Figure 1.5.2. We want to plot $x(t - 2)$ and $x(t + 3)$. It can easily be seen that

$$x(t) = \begin{cases} t + 1, & -1 \le t \le 0 \\ 1, & 0 < t \le 2 \\ -t + 3, & 2 < t \le 3 \\ 0, & \text{otherwise} \end{cases}$$

To perform the time-shifting operation, replace $t$ by $t - 2$ in the expression for $x(t)$:

$$x(t - 2) = \begin{cases} (t - 2) + 1, & -1 \le t - 2 \le 0 \\ 1, & 0 < t - 2 \le 2 \\ -(t - 2) + 3, & 2 < t - 2 \le 3 \\ 0, & \text{otherwise} \end{cases}$$

or, equivalently,

$$x(t - 2) = \begin{cases} t - 1, & 1 \le t \le 2 \\ 1, & 2 < t \le 4 \\ -t + 5, & 4 < t \le 5 \\ 0, & \text{otherwise} \end{cases}$$

The signal $x(t - 2)$ is plotted in Figure 1.5.3(a) and can be described as $x(t)$ shifted two units to the right on the time axis. Similarly, it can be shown that

$$x(t + 3) = \begin{cases} t + 4, & -4 \le t \le -3 \\ 1, & -3 < t \le -1 \\ -t, & -1 < t \le 0 \\ 0, & \text{otherwise} \end{cases}$$

The signal $x(t + 3)$ is plotted in Figure 1.5.3(b) and represents a version of $x(t)$ shifted three units to the left.

Example 1.5.2

Vibration sensors are mounted on the front and rear axles of a moving vehicle to pick up vibrations due to the roughness of the road surface. The signal from the front sensor is $x(t)$ and is shown in Figure 1.5.4. The signal from the rear axle sensor is modeled as $x(t - 120)$, with $t$ in milliseconds. If the sensors are placed 6 ft apart, it is possible to determine the speed of the vehicle by comparing the signal from the rear axle sensor with the signal from the front axle sensor. Figure 1.5.5 illustrates the time-delayed version of $x(t)$ where the delay is 120 ms, or 0.12 s. The delay $\tau$ between the sensor signals from the front and rear axles is related to the distance $d$ between the two axles and the speed $v$ of the vehicle by

$$d = v\tau$$

so that

$$v = \frac{d}{\tau} = \frac{6}{0.12} = 50 \text{ ft/s}$$

Figure 1.5.2 Plot of $x(t)$ for Example 1.5.1.

Figure 1.5.3 The shifting of $x(t)$ of Example 1.5.1: (a) $x(t - 2)$; (b) $x(t + 3)$.

Figure 1.5.4 Front axle sensor signal for Example 1.5.2 (time axis in ms).

Figure 1.5.5 Rear axle sensor signal for Example 1.5.2 (time axis in ms).

Example 1.5.3

A radar placed to detect aircraft at a range $R$ of 45 nautical miles (nmi) (1 nautical mile = 6076.115 ft) transmits the pulse-train signal shown in Figure 1.5.6. If there is a target, the transmitted signal is reflected back to the radar's receiver. The radar operates by measuring the time delay between each transmitted pulse and the corresponding return, or echo. The velocity of propagation of the radar signal, $C$, is equal to 161,875 nmi/s. The round-trip delay is

$$\tau = \frac{2R}{C} = \frac{2 \times 45}{161{,}875} = 0.556 \text{ ms}$$

Therefore, the received pulse train is the same as the transmitted pulse train, but shifted to the right by 0.556 ms; see Figure 1.5.7.

Figure 1.5.6 Radar-transmitted signal for Example 1.5.3 (time axis in μs).

Figure 1.5.7 Transmitted and received pulse train of Example 1.5.3 (time axis in μs).

1.5.2 The Reflection Operation

The signal $x(-t)$ is obtained from the signal $x(t)$ by a reflection about $t = 0$ (i.e., by reversing $x(t)$), as shown in Figure 1.5.8. Thus, if $x(t)$ represents a signal out of a video recorder, then $x(-t)$ is the signal out of a video player when the rewind switch is pushed on (assuming that the rewind and play speeds are the same).

Example 1.5.4

We want to draw $x(-t)$ and $x(3 - t)$ if $x(t)$ is as shown in Figure 1.5.9(a). The signal $x(t)$ can be written as

$$x(t) = \begin{cases} t + 1, & -1 \le t \le 0 \\ 1, & 0 < t \le 2 \\ 0, & \text{otherwise} \end{cases}$$

The signal $x(-t)$ is obtained by replacing $t$ by $-t$ in the last equation so that

$$x(-t) = \begin{cases} -t + 1, & -1 \le -t \le 0 \\ 1, & 0 < -t \le 2 \\ 0, & \text{otherwise} \end{cases}$$

or, equivalently,

$$x(-t) = \begin{cases} -t + 1, & 0 \le t \le 1 \\ 1, & -2 \le t < 0 \\ 0, & \text{otherwise} \end{cases}$$

The signal $x(-t)$ is illustrated in Figure 1.5.9(b) and can be described as $x(t)$ reflected about the vertical axis. Similarly, it can be shown that

$$x(3 - t) = \begin{cases} 4 - t, & 3 \le t \le 4 \\ 1, & 1 \le t < 3 \\ 0, & \text{otherwise} \end{cases}$$

The signal $x(3 - t)$ is shown in Figure 1.5.9(c) and can be viewed as $x(t)$ reflected and then shifted three units to the right. This result is obtained as follows:

$$x(3 - t) = x(-(t - 3))$$

Note that if we first shift $x(t)$ by three units and then reflect the shifted signal, the result is $x(-t - 3)$, which is shown in Figure 1.5.9(d). Therefore, the operations of shifting and reflecting are not commutative.

Figure 1.5.8 The reflection operation.

Figure 1.5.9 Plots of $x(-t)$ and $x(3 - t)$ for Example 1.5.4.

In addition to its use in representing physical phenomena such as that in the video recorder example, reflection is extremely useful in examining the symmetry properties that the signal may possess. A signal $x(t)$ is referred to as an even signal, or is said to be even symmetric, if it is identical to its reflection about the origin, that is, if

$$x(-t) = x(t) \tag{1.5.1}$$

A signal is referred to as odd symmetric if

$$x(-t) = -x(t) \tag{1.5.2}$$

An arbitrary signal $x(t)$ can always be expressed as a sum of even and odd signals as

$$x(t) = x_e(t) + x_o(t) \tag{1.5.3}$$

where $x_e(t)$ is called the even part of $x(t)$ and is given by (see Problem 1.14)

$$x_e(t) = \frac{1}{2}\left[x(t) + x(-t)\right] \tag{1.5.4}$$

and $x_o(t)$ is called the odd part of $x(t)$ and is expressed as

$$x_o(t) = \frac{1}{2}\left[x(t) - x(-t)\right] \tag{1.5.5}$$
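The even and odd parts can be computed directly from their definitions. The sketch below is a minimal illustration rather than anything taken from the text: the helper names `even_part` and `odd_part` are hypothetical, and the test signal (a one-sided decaying exponential with the midpoint value 1/2 at the discontinuity) is chosen only for demonstration.

```python
import math


def even_part(x):
    """Return x_e(t) = [x(t) + x(-t)] / 2 as a new function."""
    return lambda t: 0.5 * (x(t) + x(-t))


def odd_part(x):
    """Return x_o(t) = [x(t) - x(-t)] / 2 as a new function."""
    return lambda t: 0.5 * (x(t) - x(-t))


def x(t):
    """Illustrative signal: exp(-t) for t > 0, zero for t < 0."""
    if t > 0:
        return math.exp(-t)
    if t < 0:
        return 0.0
    return 0.5  # midpoint value at the discontinuity


xe, xo = even_part(x), odd_part(x)
for t in (-2.0, -0.5, 0.0, 0.5, 2.0):
    assert math.isclose(xe(t) + xo(t), x(t))             # parts sum back to x(t)
    assert math.isclose(xe(-t), xe(t))                   # even symmetry
    assert math.isclose(xo(-t), -xo(t), abs_tol=1e-12)   # odd symmetry
print(xe(0.5), xo(-0.5))
```

Returning functions rather than sampled arrays keeps the sketch independent of any particular time grid.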

Example 1.5.5

Consider the signal $x(t)$ defined by

$$x(t) = \begin{cases} 1, & t > 0 \\ 0, & t < 0 \end{cases}$$

The even and odd parts of this signal are, respectively,

$$x_e(t) = \frac{1}{2}, \quad \text{all } t \text{ except } t = 0$$

$$x_o(t) = \begin{cases} -\frac{1}{2}, & t < 0 \\ \frac{1}{2}, & t > 0 \end{cases}$$

The only problem here is the value of these functions at $t = 0$. If we define $x(0) = 1/2$ (the definition here is consistent with our definition of the signal at a point of discontinuity), then

$$x_e(0) = \frac{1}{2} \quad \text{and} \quad x_o(0) = 0$$

Signals $x_e(t)$ and $x_o(t)$ are plotted in Figure 1.5.10.

Figure 1.5.10 Plots of $x_e(t)$ and $x_o(t)$ for $x(t)$ in Example 1.5.5.

Example 1.5.6

Consider the signal

$$x(t) = \begin{cases} A \exp[-\alpha t], & t > 0 \\ 0, & t < 0 \end{cases}$$

The even part of the signal is

$$x_e(t) = \begin{cases} \frac{1}{2} A \exp[-\alpha t], & t > 0 \\ \frac{1}{2} A \exp[\alpha t], & t < 0 \end{cases} \;=\; \frac{1}{2} A \exp[-\alpha |t|]$$

The odd part of $x(t)$ is

$$x_o(t) = \begin{cases} \frac{1}{2} A \exp[-\alpha t], & t > 0 \\ -\frac{1}{2} A \exp[\alpha t], & t < 0 \end{cases}$$

Signals $x_e(t)$ and $x_o(t)$ are as shown in Figure 1.5.11.

Figure 1.5.11 Plots of $x_e(t)$ and $x_o(t)$ for Example 1.5.6.

Figure 1.5.12 The time-scaling operation.

1.5.3 The Time-Scaling Operation

Consider the signals $x(t)$, $x(3t)$, and $x(t/2)$, as shown in Figure 1.5.12. As is seen in the figure, $x(3t)$ can be described as $x(t)$ contracted by a factor of 3. Similarly, $x(t/2)$ can be described as $x(t)$ expanded by a factor of 2. Both $x(3t)$ and $x(t/2)$ are said to be time-scaled versions of $x(t)$. In general, if the independent variable is scaled by a parameter $\eta$, then $x(\eta t)$ is a compressed version of $x(t)$ if $|\eta| > 1$ (the signal exists in a smaller time interval) and is an expanded version of $x(t)$ if $|\eta| < 1$ (the signal exists in a larger time interval). If we think of $x(t)$ as the output of a videotape recorder, then $x(3t)$ is the signal obtained when the recording is played back at three times the speed at which it was recorded, and $x(t/2)$ is the signal obtained when the recording is played back at half speed.

Example 1.5.7

Suppose we want to plot the signal $x(3t - 6)$, where $x(t)$ is the signal shown in Figure 1.5.2. Using the definition of $x(t)$ in Example 1.5.1, we obtain

$$x(3t - 6) = \begin{cases} 3t - 5, & \frac{5}{3} \le t \le 2 \\ 1, & 2 < t \le \frac{8}{3} \\ -3t + 9, & \frac{8}{3} < t \le 3 \\ 0, & \text{otherwise} \end{cases}$$

A plot of $x(3t - 6)$ versus $t$ is illustrated in Figure 1.5.13 and can be viewed as $x(t)$ compressed by a factor of 3 (or time scaled by a factor of 1/3) and then shifted two units of time to the right. Note that if $x(t)$ is shifted first and then time scaled by a factor of 1/3, we will obtain a different signal; therefore, shifting and time scaling are not commutative. The result we did get can be justified as follows:

$$x(3t - 6) = x(3(t - 2))$$

This equation indicates that we perform the scaling operation first and then the shifting operation.

Figure 1.5.13 Plot of $x(3t - 6)$ of Example 1.5.7.

Example 1.5.8

We often encounter signals of the type

$$x(t) = 1 - A \exp[-\alpha t] \cos(\omega_0 t + \phi)$$

Figure 1.5.14 shows $x(t)$ for typical values of $A$, $\alpha$, and $\omega_0$. As can be seen, this signal eventually goes to a steady state value of 1 as $t$ becomes infinite. In practice, it is assumed that the signal has settled down to a final value when it stays within a specified percentage of its final theoretical value. This percentage is usually chosen to be 5%, and the time $t_s$ after which the signal stays within this range is defined as the settling time. As can be seen from Figure 1.5.14, $t_s$ can be determined by solving

$$1 + A \exp[-\alpha t_s] = 1.05$$

so that

$$t_s = -\frac{1}{\alpha} \ln\left[\frac{0.05}{A}\right]$$

Figure 1.5.14 Signal $x(t)$ for Example 1.5.8.

Let

$$x(t) = 1 - 2.3 \exp[-10.356t] \cos[5t]$$

We will find $t_s$ for $x(t)$, $x(t/2)$, and $x(2t)$.

For $x(t)$, since $A = 2.3$ and $\alpha = 10.356$, we get $t_s = 0.3697$ s.

Since

$$x(t/2) = 1 - 2.3 \exp[-5.178t] \cos[2.5t]$$

and

$$x(2t) = 1 - 2.3 \exp[-20.712t] \cos[10t]$$

we get $t_s = 0.7394$ s and $t_s = 0.1849$ s for $x(t/2)$ and $x(2t)$, respectively. These results are expected, since $x(t)$ is expanded by a factor of 2 in the first case and is compressed by the same factor in the second case.
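The three settling times follow from the same closed-form expression with the decay rate scaled by the time-scaling factor. The following sketch is illustrative only; the function name `settling_time` and its `band` parameter are assumptions introduced here, with `band = 0.05` standing for the 5% criterion.

```python
import math


def settling_time(A, alpha, band=0.05):
    """Solve A * exp(-alpha * ts) = band for ts (envelope enters the band)."""
    return -math.log(band / A) / alpha


A, alpha = 2.3, 10.356
for label, scale in (("x(t)", 1.0), ("x(t/2)", 0.5), ("x(2t)", 2.0)):
    # Replacing t by scale*t multiplies the decay rate by the same factor.
    print(label, round(settling_time(A, alpha * scale), 4))
```

Halving the decay rate doubles the settling time and doubling it halves the settling time, which is the expansion and compression by a factor of 2 described in the example.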

In conclusion, for any general signal $x(t)$, the transformation $\alpha t + \beta$ on the independent variable can be performed as follows:

$$x(\alpha t + \beta) = x(\alpha(t + \beta/\alpha)) \tag{1.5.6}$$

where $\alpha$ and $\beta$ are assumed to be real numbers. The operations should be performed in the following order:

1. Scale by $\alpha$. If $\alpha$ is negative, reflect about the vertical axis.

2. Shift to the right by $|\beta/\alpha|$ if $\beta$ and $\alpha$ have different signs, and to the left by $|\beta/\alpha|$ if $\beta$ and $\alpha$ have the same sign.

Note that the operation of reflecting and time scaling is commutative, whereas the operation of shifting and reflecting or shifting and time scaling is not.
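The ordering rule above (scale first, then shift) can be sketched with two small function combinators. This is an illustrative sketch, not the text's own procedure: the helper names `scale` and `shift` are hypothetical, and the trapezoidal test signal is assumed to be the one defined piecewise in Example 1.5.1.

```python
def x(t):
    """Trapezoidal signal assumed from Example 1.5.1 (nonzero on -1 <= t <= 3)."""
    if -1 <= t <= 0:
        return t + 1
    if 0 < t <= 2:
        return 1.0
    if 2 < t <= 3:
        return -t + 3
    return 0.0


def scale(sig, alpha):
    """Step 1: time scaling (includes reflection when alpha < 0)."""
    return lambda t: sig(alpha * t)


def shift(sig, t0):
    """Step 2: shift to the right by t0 (to the left when t0 < 0)."""
    return lambda t: sig(t - t0)


alpha, beta = 3.0, -6.0
y = shift(scale(x, alpha), -beta / alpha)       # scale first, then shift
wrong = scale(shift(x, -beta / alpha), alpha)   # shift first: a different signal

for t in (1.5, 1.9, 2.5, 2.9, 3.1):
    assert abs(y(t) - x(alpha * t + beta)) < 1e-12
print(y(2.5), wrong(2.5))
```

Here `y` reproduces $x(3t - 6)$, whereas `wrong` evaluates $x(3t - 2)$, which illustrates why shifting and time scaling do not commute.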

1.6 ELEMENTARY SIGNALS

Several important elementary signals that occur frequently in applications also serve as a basis for representing other signals. Throughout the book, we will find that representing signals in terms of these elementary signals allows us to better understand the properties of both signals and systems. Furthermore, many of these signals have features that make them particularly useful in the solution of engineering problems and, therefore, of importance in our subsequent studies.

1.6.1 The Unit Step Function

The continuous-time unit step function is defined as

$$u(t) = \begin{cases} 1, & t > 0 \\ 0, & t < 0 \end{cases} \tag{1.6.1}$$

and is shown in Figure 1.6.1.

Figure 1.6.1 Continuous-time unit step function.

This signal is an important signal for analytic studies, and it also has many practical applications. Note that the unit step function is continuous for all $t$ except at $t = 0$, where there is a discontinuity. According to our earlier discussion, we define $u(0) = 1/2$. An example of a unit step function is the output of a 1-V dc voltage source in series with a switch that is turned on at time $t = 0$.

Example 1.6.1

The rectangular pulse signal shown in Figure 1.6.2 is the result of an on-off switching operation of a constant voltage source in an electric circuit.

In general, a rectangular pulse that extends from $-a$ to $+a$ and has an amplitude $A$ can be written as a difference between appropriately shifted step functions, i.e.,

$$A \,\mathrm{rect}(t/2a) = A[u(t + a) - u(t - a)] \tag{1.6.2}$$

In our specific example,

$$2 \,\mathrm{rect}(t/2) = 2[u(t + 1) - u(t - 1)]$$

Figure 1.6.2 Rectangular pulse signal of Example 1.6.1.

Example 1.6.2

Consider the signum function (written sgn) shown in Figure 1.6.3. The unit sgn function is defined by

$$\mathrm{sgn}\, t = \begin{cases} 1, & t > 0 \\ 0, & t = 0 \\ -1, & t < 0 \end{cases} \tag{1.6.3}$$

Figure 1.6.3 The signum function.

The signum function can be expressed in terms of the unit step function as

$$\mathrm{sgn}\, t = -1 + 2u(t)$$

The signum function is one of the most often used signals in communication and in control theory.
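Both constructions can be written directly in terms of a unit step. The sketch below is illustrative: the function names `u`, `rect_pulse`, and `sgn` are chosen here for the demonstration, and the step uses the midpoint convention $u(0) = 1/2$ adopted in the text.

```python
def u(t):
    """Unit step with the midpoint convention u(0) = 1/2."""
    if t > 0:
        return 1.0
    if t < 0:
        return 0.0
    return 0.5


def rect_pulse(t, A, a):
    """A * rect(t / 2a) written as A * [u(t + a) - u(t - a)]."""
    return A * (u(t + a) - u(t - a))


def sgn(t):
    """Signum written as -1 + 2 u(t)."""
    return -1.0 + 2.0 * u(t)


print([rect_pulse(t, 2.0, 1.0) for t in (-2.0, -1.0, 0.0, 1.0, 2.0)])
print([sgn(t) for t in (-3.0, 0.0, 3.0)])
```

With the midpoint convention, the pulse takes half its amplitude at the two edges and `sgn(0)` evaluates to zero, consistent with Equation (1.6.3).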

1.6.2 The Ramp Function

The ramp function shown in Figure 1.6.4 is defined by

$$r(t) = \begin{cases} t, & t \ge 0 \\ 0, & t < 0 \end{cases} \tag{1.6.4}$$

The ramp function is obtained by integrating the unit step function:

$$\int_{-\infty}^{t} u(\tau) \, d\tau = r(t)$$

The device that accomplishes this operation is called an integrator. In contrast to both the unit step and the signum functions, the ramp function is continuous at $t = 0$. Time scaling a unit ramp by a factor $\alpha$ corresponds to a ramp function with slope $\alpha$. (A unit ramp function has a slope of unity.) An example of a ramp function is the linear-sweep waveform of a cathode-ray tube.

Example 1.6.3

Let $x(t) = u(t + 2) - 2u(t + 1) + 2u(t) - u(t - 2) - 2u(t - 3) + 2u(t - 4)$. Let $y(t)$ denote its integral. Then

$$y(t) = r(t + 2) - 2r(t + 1) + 2r(t) - r(t - 2) - 2r(t - 3) + 2r(t - 4)$$

Signal $y(t)$ is sketched in Figure 1.6.5.

Figure 1.6.4 The ramp function.

Figure 1.6.5 The signal used in Example 1.6.3.

1.6.3 The Sampling Function

A function frequently encountered in spectral analysis is the sampling function $\mathrm{Sa}(x)$, defined by

$$\mathrm{Sa}(x) = \frac{\sin x}{x} \tag{1.6.5}$$

Since the denominator is an increasing function of $x$ and the numerator is bounded ($|\sin x| \le 1$), $\mathrm{Sa}(x)$ is simply a damped sine wave. Figure 1.6.6(a) shows that $\mathrm{Sa}(x)$ is an even function of $x$ having its peak at $x = 0$ and zero-crossings at $x = \pm n\pi$. The value of the function at $x = 0$ is established by using l'Hôpital's rule. A closely related function is $\mathrm{sinc}\, x$, which is defined by

$$\mathrm{sinc}\, x = \frac{\sin \pi x}{\pi x} = \mathrm{Sa}(\pi x) \tag{1.6.6}$$

and is shown in Figure 1.6.6(b). Note that $\mathrm{sinc}\, x$ is a compressed version of $\mathrm{Sa}(x)$; the compression factor is $\pi$.
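The two definitions translate into a few lines of code once the removable singularity at the origin is handled explicitly. The sketch below is illustrative; the function names `Sa` and `sinc` simply mirror the notation of the text and do not refer to any library routine.

```python
import math


def Sa(x):
    """Sampling function sin(x)/x, with the limiting value 1 at x = 0."""
    return 1.0 if x == 0 else math.sin(x) / x


def sinc(x):
    """sinc x = sin(pi x)/(pi x) = Sa(pi x)."""
    return Sa(math.pi * x)


print(Sa(0.0), sinc(0.5))
print([abs(sinc(n)) < 1e-12 for n in (1, 2, 3)])
```

The zero crossings of `Sa` fall at nonzero multiples of $\pi$, while those of `sinc` fall at the nonzero integers, which is the compression by $\pi$ noted above.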

1.6.4 The Unit Impulse Function

The unit impulse signal $\delta(t)$, often called the Dirac delta function or, simply, the delta function, occupies a central place in signal analysis. Many physical phenomena such as point sources, point charges, concentrated loads on structures, and voltage or current sources acting for very short times can be modeled as delta functions. Mathematically, the Dirac delta function is defined by

$$\int_{t_1}^{t_2} x(t)\delta(t) \, dt = x(0), \quad t_1 < 0 < t_2 \tag{1.6.7}$$

provided that $x(t)$ is continuous at $t = 0$. The function $\delta(t)$ is depicted graphically by a spike at the origin, as shown in Figure 1.6.7, and possesses the following properties:

1. $\delta(0) \to \infty$

2. $\delta(t) = 0, \quad t \ne 0$

3. $\int_{-\infty}^{\infty} \delta(t) \, dt = 1$

4. $\delta(t)$ is an even function; i.e., $\delta(t) = \delta(-t)$

Figure 1.6.6 The sampling function: (a) $\mathrm{Sa}(x)$; (b) $\mathrm{sinc}(x)$.

Figure 1.6.7 Representation of the unit impulse function $\delta(t)$.

As just defined, the $\delta$ function does not conform to the usual definition of a function. However, it is sometimes convenient to consider it as the limit of a conventional function as some parameter $\epsilon$ approaches zero. Several examples are shown in Figure 1.6.8; all such functions have the following properties for "small" $\epsilon$:

1. The value at $t = 0$ is very large and becomes infinity as $\epsilon$ approaches zero.

2. The duration is relatively very short and becomes zero as $\epsilon$ becomes zero.

3. The total area under the function is constant and equal to 1.

4. The functions are all even.

Figure 1.6.8 Engineering models for $\delta(t)$.

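Example 1.6.4

Consider the function defined as

$$p(t) = \lim_{\epsilon \to 0} \frac{\epsilon}{\pi} \left( \frac{\sin(t/\epsilon)}{t} \right)^2$$

This function satisfies all the properties of a delta function, as can be shown by rewriting it as

$$p(t) = \lim_{\epsilon \to 0} \frac{1}{\pi\epsilon} \left( \frac{\sin(t/\epsilon)}{t/\epsilon} \right)^2$$

so that

1. $p(0) = \lim_{\epsilon \to 0} (1/\pi\epsilon) = \infty$. Here we used the well-known limit $\lim_{t \to 0} (\sin t)/t = 1$.

2. For values of $t \ne 0$,

$$p(t) = \lim_{\epsilon \to 0} \frac{\epsilon}{\pi t^2} \sin^2(t/\epsilon) = \left( \lim_{\epsilon \to 0} \frac{\epsilon}{\pi t^2} \right) \left( \lim_{\epsilon \to 0} \sin^2(t/\epsilon) \right)$$

The second limit is bounded by 1, but the first limit vanishes as $\epsilon \to 0^+$; therefore,

$$p(t) = 0, \quad t \ne 0$$

3. To show that the area under $p(t)$ is unity, we note that

$$\int_{-\infty}^{\infty} p(t) \, dt = \lim_{\epsilon \to 0} \frac{\epsilon}{\pi} \int_{-\infty}^{\infty} \frac{\sin^2(t/\epsilon)}{t^2} \, dt = \frac{1}{\pi} \int_{-\infty}^{\infty} \frac{\sin^2 \tau}{\tau^2} \, d\tau$$

where the last step follows from the change of variable $\tau = t/\epsilon$. Since (see Appendix B)

$$\int_{-\infty}^{\infty} \frac{\sin^2 \tau}{\tau^2} \, d\tau = \pi$$

it follows that

$$\int_{-\infty}^{\infty} p(t) \, dt = 1$$

4. It is clear that $p(t) = p(-t)$; therefore, $p(t)$ is an even function.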

Three important properties repeatedly used when operating with delta functions are the sifting property, the sampling property, and the scaling property.

Sifting Property. The sifting property is expressed in the equation

$$\int_{t_1}^{t_2} x(t)\delta(t - t_0) \, dt = \begin{cases} x(t_0), & t_1 < t_0 < t_2 \\ 0, & \text{otherwise} \end{cases} \tag{1.6.8}$$

This can be seen by using the change of variables $\tau = t - t_0$ to obtain

$$\int_{t_1}^{t_2} x(t)\delta(t - t_0) \, dt = \int_{t_1 - t_0}^{t_2 - t_0} x(\tau + t_0)\delta(\tau) \, d\tau = x(t_0), \quad t_1 < t_0 < t_2$$

by Equation (1.6.7). Notice that the right-hand side of Equation (1.6.8) can be looked at as a function of $t_0$. This function is discontinuous at $t_0 = t_1$ and $t_0 = t_2$. Following our notation, the value of the function at $t_1$ or $t_2$ should be given by

$$\int_{t_1}^{t_2} x(t)\delta(t - t_0) \, dt = \frac{1}{2} x(t_0), \quad t_0 = t_1 \text{ or } t_0 = t_2 \tag{1.6.9}$$

The sifting property is usually used in lieu of Equation (1.6.7) as the definition of a delta function located at $t_0$. In general, the property can be written as

$$x(t) = \int_{-\infty}^{\infty} x(\tau)\delta(t - \tau) \, d\tau \tag{1.6.10}$$

which implies that the signal $x(t)$ can be expressed as a continuous sum of weighted impulses. This result can be interpreted graphically if we approximate $x(t)$ by a sum of rectangular pulses, each of width $\Delta$ seconds and of varying heights, as shown in Figure 1.6.9. That is,

$$\hat{x}(t) = \sum_{k=-\infty}^{\infty} x(k\Delta) \,\mathrm{rect}((t - k\Delta)/\Delta)$$

which can be written as

$$\hat{x}(t) = \sum_{k=-\infty}^{\infty} x(k\Delta) \left[ \frac{1}{\Delta} \mathrm{rect}((t - k\Delta)/\Delta) \right] [k\Delta - (k - 1)\Delta]$$

Figure 1.6.9 Approximation of signal $x(t)$.

Now, each term in the sum represents the area under the kth pulse in the approximation $\hat{x}(t)$. We thus let $\Delta \to 0$ and replace $k\Delta$ by $\tau$, so that $k\Delta - (k - 1)\Delta = d\tau$, and the summation becomes an integral. Also, as $\Delta \to 0$, $(1/\Delta)\,\mathrm{rect}((t - k\Delta)/\Delta)$ approaches $\delta(t - \tau)$, and Equation (1.6.10) follows. The representation of Equation (1.6.10), along with the superposition principle, is used in Chapter 2 to study the behavior of a special and important class of systems known as linear time-invariant systems.
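The limiting argument can be illustrated numerically by replacing the impulse with a rectangular pulse of width $\Delta$ and height $1/\Delta$ and letting the width shrink. The sketch below is illustrative only: the helper name `sift`, the test signal `math.cos`, and the location 0.5 are assumptions made for the demonstration.

```python
import math


def sift(x, t0, width):
    """Integrate x(t) * (1/width) * rect((t - t0)/width) by the midpoint rule."""
    n = 1000
    dt = width / n
    start = t0 - width / 2
    return sum(x(start + (i + 0.5) * dt) for i in range(n)) * dt / width


for width in (1.0, 0.1, 0.01):
    print(width, sift(math.cos, 0.5, width), math.cos(0.5))
```

As the pulse narrows, the weighted integral approaches the sample value of the signal at the pulse location, which is the behavior the sifting property describes.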

Sampling Property. If $x(t)$ is continuous at $t_0$, then

$$x(t)\delta(t - t_0) = x(t_0)\delta(t - t_0) \tag{1.6.11}$$

Graphically, this property can be illustrated by approximating the impulse signal by a rectangular pulse of width $\Delta$ and height $1/\Delta$, as shown in Figure 1.6.10, and then allowing $\Delta$ to approach zero, to obtain

$$\lim_{\Delta \to 0} x(t) \frac{1}{\Delta} \mathrm{rect}((t - t_0)/\Delta) = x(t_0)\delta(t - t_0)$$

Figure 1.6.10 The sampling property of the impulse function.

Mathematically, two functions $f_1(\delta(t))$ and $f_2(\delta(t))$ are equivalent over the interval $(t_1, t_2)$ if, for any continuous function $y(t)$,

$$\int_{t_1}^{t_2} y(t) f_1(\delta(t)) \, dt = \int_{t_1}^{t_2} y(t) f_2(\delta(t)) \, dt$$

Therefore, $x(t)\delta(t - t_0)$ and $x(t_0)\delta(t - t_0)$ are equivalent, since

$$\int_{t_1}^{t_2} y(t)x(t)\delta(t - t_0) \, dt = y(t_0)x(t_0) = \int_{t_1}^{t_2} y(t)x(t_0)\delta(t - t_0) \, dt$$

Note the difference between the sifting property and the sampling property: The right-hand side of Equation (1.6.8) is the value of the function evaluated at some point, whereas the right-hand side of Equation (1.6.11) is still a delta function with strength equal to the value of $x(t)$ evaluated at $t = t_0$.

Scaling Property. The scaling property is given by

$$\delta(at + b) = \frac{1}{|a|} \delta\left(t + \frac{b}{a}\right) \tag{1.6.12}$$

This result is interpreted by considering $\delta(t)$ as the limit of a unit area pulse $p(t)$ as some parameter $\epsilon$ tends to zero. The pulse $p(at)$ is a compressed (expanded) version of $p(t)$ if $a > 1$ ($a < 1$), and its area is $1/|a|$. (Note that the area is always positive.) By taking the limit as $\epsilon \to 0$, the result is a delta function with strength $1/|a|$. We show this by considering the two cases $a > 0$ and $a < 0$ separately. For $a > 0$, we have to show that

$$\int_{t_1}^{t_2} x(t)\delta(at + b) \, dt = \int_{t_1}^{t_2} \frac{1}{|a|} x(t) \delta\left(t + \frac{b}{a}\right) dt, \quad t_1 < -\frac{b}{a} < t_2$$

Applying the sifting property to the right-hand side yields

$$\frac{1}{a} x\left(\frac{-b}{a}\right)$$

To evaluate the left-hand side, we use the transformation of variables

$$\tau = at + b$$

Then $dt = (1/a)\,d\tau$, and the range $t_1 < t < t_2$ becomes $at_1 + b < \tau < at_2 + b$. The left-hand side now becomes

$$\int_{t_1}^{t_2} x(t)\delta(at + b) \, dt = \int_{at_1 + b}^{at_2 + b} x\left(\frac{\tau - b}{a}\right) \delta(\tau) \frac{1}{a} \, d\tau = \frac{1}{a} x\left(\frac{-b}{a}\right)$$

which is the same as the right-hand side.

When $a < 0$, we have to show that

$$\int_{t_1}^{t_2} x(t)\delta(at + b) \, dt = \int_{t_1}^{t_2} \frac{1}{|a|} x(t) \delta\left(t + \frac{b}{a}\right) dt, \quad t_1 < -\frac{b}{a} < t_2$$

Using the sifting property, we evaluate the right-hand side. The result is

$$\frac{1}{|a|} x\left(\frac{-b}{a}\right)$$

For the left-hand side, we use the transformation $\tau = at + b$, so that

$$dt = \frac{1}{a} \, d\tau = -\frac{1}{|a|} \, d\tau$$

and the range of $\tau$ becomes $-|a|t_2 + b < \tau < -|a|t_1 + b$, resulting in

$$\int_{t_1}^{t_2} x(t)\delta(at + b) \, dt = \int_{-|a|t_1 + b}^{-|a|t_2 + b} x\left(\frac{\tau - b}{a}\right) \delta(\tau) \left(-\frac{1}{|a|}\right) d\tau = \frac{1}{|a|} \int_{-|a|t_2 + b}^{-|a|t_1 + b} x\left(\frac{\tau - b}{a}\right) \delta(\tau) \, d\tau = \frac{1}{|a|} x\left(\frac{-b}{a}\right)$$

Notice that before using the sifting property in the last step, we interchanged the limits of integration and changed the sign of the integrand, since

$$-|a|t_2 + b < -|a|t_1 + b$$

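Example 1.6.5

Consider the Gaussian pulse

$$p(t) = \frac{1}{\sqrt{2\pi\epsilon^2}} \exp\left[\frac{-t^2}{2\epsilon^2}\right]$$

The area under this pulse is always 1; that is,

$$\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\epsilon^2}} \exp\left[\frac{-t^2}{2\epsilon^2}\right] dt = 1$$

It can be shown that $p(t)$ approaches $\delta(t)$ as $\epsilon \to 0$. (See Problem 1.19.) Let $a > 1$ be any constant. Then $p(at)$ is a compressed version of $p(t)$. It can be shown that the area under $p(at)$ is $1/a$, and as $\epsilon$ approaches 0, $p(at)$ approaches $\delta(at)$.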
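The area claims can be checked numerically. The sketch below assumes the pulse is the standard unit-area Gaussian with width parameter $\epsilon$; the helper names `gaussian_pulse` and `area`, and the values of `eps` and `a`, are illustrative choices rather than anything specified in the text.

```python
import math


def gaussian_pulse(t, eps):
    """Unit-area Gaussian pulse with width parameter eps (assumed form)."""
    return math.exp(-t * t / (2 * eps * eps)) / math.sqrt(2 * math.pi * eps * eps)


def area(f, lo, hi, n=200_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    dt = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * dt) for i in range(n)) * dt


eps, a = 0.05, 4.0   # illustrative values only
area_p = area(lambda t: gaussian_pulse(t, eps), -1, 1)
area_pa = area(lambda t: gaussian_pulse(a * t, eps), -1, 1)
print(area_p, area_pa, 1 / a)
```

The integration range of $\pm 1$ covers many pulse widths for the chosen `eps`, so truncating the infinite limits has a negligible effect on the result.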

llrerrple

1.0.6

Suppose we want to evaluate the following integrals:

a.

.t

I

t-2

ra

0+t2yt1t-z1ar

b. J-2

I (r+12)6(r-3)d,

Sec.

1.6

Elementary Slgnals

t3

c. I

Jo

d.

exp[r -216(a

29

- gdt

ll

J_-6(t)dt

a- Using the sifting property yields

?l

J_r(,+,')to-3)dr=o

since

b.

l = 3 is not in the interval -2 < t <

Using the sifting property yields

i_r(,

since

c.

* r1oc -

I = 3 is within the interval

3)dt = 3 +

32

= t2

-2<t<4.

Using the scaling property and then the sifting properly yields

f

"ro[

-

216ra

-

4)dt

=/ *oU =

d.

7.

l*Ptol

:

z1la1r

-

zlar

I

Consider the following two cases:

Case

l: t <o

In this case, point

gral is zero.

r:0is

not within the interval

-€ < r (

l,and the result of the inte-

Cose2:t>0

In this

case,

r = 0lies within the interval

-co

(r(

l, and the value of the integral is 1.

Summarizing, we obtain

f_,r"r*

=

{i;

;:3

But this is by definition a unit step function; therefore, the functions $\delta(t)$ and $u(t)$ form an integral-derivative pair. That is,

$$\frac{d}{dt}\,u(t) = \delta(t) \tag{1.6.13}$$

$$\int_{-\infty}^{t} \delta(\tau)\,d\tau = u(t) \tag{1.6.14}$$
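The four integrals of Example 1.6.6 can be approximated numerically by substituting a narrow unit-area Gaussian pulse for the impulse. The sketch below is illustrative only: the pulse width `1e-3`, the finite lower limit `-5` standing in for $-\infty$ in part d, and the helper names are assumptions.

```python
import math

def p(t, eps=1e-3):
    """Narrow unit-area Gaussian pulse used as a stand-in for the unit impulse."""
    return math.exp(-t * t / (2.0 * eps * eps)) / math.sqrt(2.0 * math.pi * eps * eps)

def integrate(f, t1, t2, n=200_000):
    """Midpoint-rule approximation of the integral of f over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(f(t1 + (k + 0.5) * h) for k in range(n))

# Parts a and b: same integrand, different intervals.
part_a = integrate(lambda t: (t + t * t) * p(t - 3.0), -2.0, 1.0)  # t = 3 outside the interval
part_b = integrate(lambda t: (t + t * t) * p(t - 3.0), -2.0, 4.0)  # t = 3 inside the interval

# Part c: scaled impulse delta(2t - 4), located at t = 2 with weight 1/2.
part_c = integrate(lambda t: math.exp(t - 2.0) * p(2.0 * t - 4.0), 0.0, 3.0)

# Part d: running integral of the pulse, with -5 as an assumed stand-in for -infinity.
def running_integral(t):
    return integrate(p, -5.0, t)

print(part_a, part_b, part_c, running_integral(-0.5), running_integral(0.5))
```

The printed values should be close to 0, 12, 1/2, 0, and 1, in agreement with parts a through d.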

The unit impulse is one of a class of functions known as singularity functions. Note that the definition of $\delta(t)$ in Equation (1.6.7) does not make sense if $\delta(t)$ is an ordinary function. It is meaningful only if $\delta(t)$ is interpreted as a functional, i.e., as a process of assigning the value $x(0)$ to the signal $x(t)$. The integral notation is used merely as a convenient way of describing the properties of this functional, such as linearity, shifting, and scaling. We now consider how to represent the derivatives of the impulse function.

1.6.6 Derivatives of the Impulse Function

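The (first) derivative of the impulse function, or unit doublet, denoted by $\delta'(t)$, is defined by

$$\int_{t_1}^{t_2} x(t)\,\delta'(t - t_0)\,dt = -x'(t_0), \qquad t_1 < t_0 < t_2 \tag{1.6.15}$$

provided that $x(t)$ possesses a derivative $x'(t_0)$ at $t = t_0$. This result can be demonstrated using integration by parts as follows:

$$\begin{aligned}
\int_{t_1}^{t_2} x(t)\,\delta'(t - t_0)\,dt
&= \int_{t_1}^{t_2} x(t)\,d[\delta(t - t_0)] \\
&= x(t)\,\delta(t - t_0)\Big|_{t_1}^{t_2} - \int_{t_1}^{t_2} x'(t)\,\delta(t - t_0)\,dt \\
&= 0 - 0 - x'(t_0)
\end{aligned}$$

since $\delta(t) = 0$ for $t \neq 0$. It can be shown that $\delta'(t)$ possesses the following properties:

1. $x(t)\,\delta'(t - t_0) = x(t_0)\,\delta'(t - t_0) - x'(t_0)\,\delta(t - t_0)$

2. $\int_{-\infty}^{t} \delta'(\tau - t_0)\,d\tau = \delta(t - t_0)$

3. $\delta'(at + b) = \dfrac{1}{a|a|}\,\delta'\!\left(t + \dfrac{b}{a}\right)$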
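The defining relation of the doublet and its scaling property can be checked numerically by using the derivative of a narrow Gaussian pulse in place of $\delta'(t)$. This is a minimal sketch; the signal $x(t) = \sin t$, the point $t_0 = 0.3$, the values $a = -2$, $b = 1$, the width `EPS`, and the helper names are assumptions made for illustration.

```python
import math

EPS = 1e-2  # assumed width of the Gaussian stand-in for the impulse

def p(t):
    """Unit-area Gaussian pulse approximating the unit impulse."""
    return math.exp(-t * t / (2.0 * EPS * EPS)) / math.sqrt(2.0 * math.pi * EPS * EPS)

def dp(t):
    """Derivative of p with respect to its argument; approximates the doublet."""
    return -t / (EPS * EPS) * p(t)

def integrate(f, t1, t2, n=200_000):
    """Midpoint-rule approximation of the integral of f over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(f(t1 + (k + 0.5) * h) for k in range(n))

x, dx = math.sin, math.cos  # assumed test signal and its derivative

# Definition: integral of x(t) delta'(t - t0) dt = -x'(t0).
t0 = 0.3
doublet_val = integrate(lambda t: x(t) * dp(t - t0), -1.0, 2.0)
print(doublet_val, -dx(t0))

# Scaling: delta'(at + b) = (1 / (a|a|)) delta'(t + b/a), so the integral is -x'(-b/a) / (a|a|).
a, b = -2.0, 1.0
scaled_val = integrate(lambda t: x(t) * dp(a * t + b), -1.0, 2.0)
print(scaled_val, -dx(-b / a) / (a * abs(a)))
```

Each pair of printed values should agree to several decimal places; the small residual comes from the finite pulse width.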

Higher order derivatives of $\delta(t)$ can be defined by extending the definition of $\delta'(t)$. For example, the $n$th-order derivative of $\delta(t)$ is defined by

$$\int_{t_1}^{t_2} x(t)\,\delta^{(n)}(t - t_0)\,dt = (-1)^n x^{(n)}(t_0), \qquad t_1 < t_0 < t_2 \tag{1.6.16}$$

provided that such derivative exists at $t = t_0$. The graphical representation of $\delta'(t)$ is shown in Figure 1.6.11.
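The alternating sign $(-1)^n$ in the definition of the $n$th-order derivative can be illustrated for $n = 2$ and $n = 3$ using derivatives of a Gaussian pulse. The sketch below assumes the test signal $x(t) = t^3$, the point $t_0 = 0.5$, and a pulse width of 0.02; none of these come from the text.

```python
import math

EPS = 0.02  # assumed width of the Gaussian stand-in for the impulse

def p(t):
    """Unit-area Gaussian pulse approximating the unit impulse."""
    return math.exp(-t * t / (2.0 * EPS * EPS)) / math.sqrt(2.0 * math.pi * EPS * EPS)

def d2p(t):
    """Second derivative of p with respect to its argument."""
    return (t * t / EPS**4 - 1.0 / EPS**2) * p(t)

def d3p(t):
    """Third derivative of p with respect to its argument."""
    return (3.0 * t / EPS**4 - t**3 / EPS**6) * p(t)

def integrate(f, t1, t2, n=200_000):
    """Midpoint-rule approximation of the integral of f over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(f(t1 + (k + 0.5) * h) for k in range(n))

t0 = 0.5
x = lambda t: t**3  # assumed test signal: x''(t) = 6t and x'''(t) = 6

second = integrate(lambda t: x(t) * d2p(t - t0), -1.0, 2.0)  # expect (+1) * x''(t0) = 3
third = integrate(lambda t: x(t) * d3p(t - t0), -1.0, 2.0)   # expect (-1) * x'''(t0) = -6
print(second, third)
```

With these assumed values, the even-order case keeps the sign of the derivative of $x(t)$ and the odd-order case reverses it.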

Figure 1.6.11 Representation of $\delta'(t)$.

Example 1.6.7

The current through an inductor of 1 mH is $i(t) = 10\exp[-2t]\,u(t) - \delta(t)$ amperes. The voltage drop across the inductor is given by

$$\begin{aligned}
v(t) &= 10^{-3}\,\frac{d}{dt}\Big[10\exp[-2t]\,u(t) - \delta(t)\Big] \\
&= -2 \times 10^{-2}\exp[-2t]\,u(t) + 10^{-2}\exp[-2t]\,\delta(t) - 10^{-3}\,\delta'(t) \ \text{volts} \\
&= -2 \times 10^{-2}\exp[-2t]\,u(t) + 10^{-2}\,\delta(t) - 10^{-3}\,\delta'(t) \ \text{volts}
\end{aligned}$$

where the last step follows from Equation (1.6.11).
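The ordinary-function part of the inductor voltage in Example 1.6.7 can be checked with a finite-difference derivative, and the weight of the impulse term can be read off from the jump of the current at $t = 0$. This is a sketch only; the step size `h`, the sample times, and the function names are assumptions.

```python
import math

L = 1e-3  # inductance in henries (1 mH)

def i_smooth(t):
    """Ordinary-function part of the current, 10 exp[-2t] u(t), in amperes."""
    return 10.0 * math.exp(-2.0 * t) if t >= 0.0 else 0.0

def v_smooth(t):
    """Ordinary-function part of the voltage for t > 0, in volts."""
    return -2e-2 * math.exp(-2.0 * t)

# Central-difference estimate of L di/dt away from t = 0.
h = 1e-6
errors = []
for t in (0.1, 0.5, 1.0):
    numeric = L * (i_smooth(t + h) - i_smooth(t - h)) / (2.0 * h)
    errors.append(abs(numeric - v_smooth(t)))
    print(t, numeric, v_smooth(t))

# The weight of delta(t) in v(t) is L times the jump of the current at t = 0.
impulse_weight = L * (i_smooth(0.0) - i_smooth(-h))
print(impulse_weight)
```

The finite-difference values should match $-2 \times 10^{-2}\exp[-2t]$ for $t > 0$, and the jump gives an impulse weight of $10^{-2}$. The doublet term, which arises from differentiating the $-\delta(t)$ component of the current, is not captured by this check.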

Figures 1.6.12(a) and (b) demonstrate the behavior of the inductor current $i(t)$ and the voltage $v(t)$, respectively.

Figure 1.6.12 (a) Inductor current $i(t)$ and (b) voltage $v(t)$ for Example 1.6.7.

Note that the derivative of $x(t)u(t)$ is obtained using the product rule of differentiation, i.e.,

$$\frac{d}{dt}\big[x(t)\,u(t)\big] = x(t)\,\delta(t) + x'(t)\,u(t)$$

whereas the derivative of $x(t)\delta(t)$ is

$$\frac{d}{dt}\big[x(t)\,\delta(t)\big] = \frac{d}{dt}\big[x(0)\,\delta(t)\big] = x(0)\,\delta'(t)$$

This result cannot be obtained by direct differentiation of the product, because $\delta(t)$ is interpreted as a functional rather than an ordinary function.
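The product rule for $x(t)u(t)$ can be illustrated by smoothing the step so that every quantity is an ordinary function. In the sketch below, the step is replaced by an error-function ramp whose derivative is a narrow Gaussian pulse; the width `EPS`, the signal $x(t) = \cos t$, and all function names are assumptions for illustration only.

```python
import math

EPS = 0.01  # assumed width of the smoothed step

def u_eps(t):
    """Smoothed unit step; it approaches u(t) as EPS -> 0."""
    return 0.5 * (1.0 + math.erf(t / (EPS * math.sqrt(2.0))))

def p_eps(t):
    """Derivative of u_eps: a unit-area Gaussian pulse approximating the impulse."""
    return math.exp(-t * t / (2.0 * EPS * EPS)) / math.sqrt(2.0 * math.pi * EPS * EPS)

def x(t):
    return math.cos(t)   # assumed test signal

def dx(t):
    return -math.sin(t)  # its derivative

def product_rule(t):
    """x(t) p_eps(t) + x'(t) u_eps(t), the smoothed form of x(t) delta(t) + x'(t) u(t)."""
    return x(t) * p_eps(t) + dx(t) * u_eps(t)

def finite_difference(t, h=1e-6):
    """Central-difference derivative of x(t) u_eps(t)."""
    return (x(t + h) * u_eps(t + h) - x(t - h) * u_eps(t - h)) / (2.0 * h)

worst = max(abs(product_rule(t) - finite_difference(t)) for t in (-0.02, 0.0, 0.01, 0.5))
print(worst)
```

The printed discrepancy should be small at every sample point, including those inside the transition region where the pulse term dominates.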

Example 1.6.8