Random variables

Discrete RVs on a discrete S

Discrete probability models

STAT 391

Discrete Random Variables

Emanuela Furfaro

Winter 2023

These slides are based on Chapter 3 and Chapter 8 of the textbook “Probability and Statistics for Computer Science” by Marina Meila.

1 / 32

Random variables

• A random variable (r.v.) is defined as a function that associates a number to each element of the outcome space. Hence, any function $r : S \to (-\infty, +\infty)$ is a random variable.

• In general, for a random variable $Y$ on an outcome space $S$, with $Y : S \to S_Y \subset (-\infty, +\infty)$, the range $S_Y$ of $Y$ is called the outcome space of the random variable $Y$.

• $S_Y$ cannot have more elements than the original $S$.

• If the range (i.e. the outcome space) $S_Y$ of a RV $Y$ is discrete, then $Y$ is called a discrete random variable.

• If $S_Y$ is continuous, then the RV is continuous.

• On a discrete $S$ one can have only discrete RVs.

• If $S$ is continuous, one can construct both discrete and continuous RVs.

2 / 32

Probability distribution of a RV

• The probability distribution of a RV $Y$, denoted by $P_Y$, is a probability over $S_Y$:

$$P_Y(y) = P(\{s \in S \mid Y(s) = y\})$$

• We will indicate random variables by capital letters and their values by the same letter in lower case.

• $P_Y(y)$ is the probability of the event $Y(s) = y$, and we will often simply write $P(y)$.

3 / 32

Example

• We toss a fair coin (a coin with $P(H) = P(T) = 0.5$).

• The sample space is $S = \{H, T\}$.

• Let $Y$ be the random variable that counts the number of tails observed. Then $Y$ takes values in $S_Y = \{0, 1\}$.
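The coin example can be sketched in a few lines of Python, treating the random variable as an ordinary function on the sample space. The helper name `distribution` is an assumption made for this illustration and does not come from the textbook.

```python
from fractions import Fraction

# Sample space S of a single fair coin toss, with the probability of each outcome.
P = {"H": Fraction(1, 2), "T": Fraction(1, 2)}

def Y(s):
    """Random variable: number of tails observed in a single toss."""
    return 1 if s == "T" else 0

def distribution(rv, prob):
    """Return P_Y as a dict, using P_Y(y) = P({s in S : rv(s) = y})."""
    p_y = {}
    for s, p in prob.items():
        p_y[rv(s)] = p_y.get(rv(s), 0) + p
    return p_y

P_Y = distribution(Y, P)
print(sorted(P_Y))   # the outcome space S_Y of Y
print(P_Y)           # probability of each value of Y
```

The keys of `P_Y` form the outcome space of Y, and the values are the probabilities of the events Y(s) = y.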

4 / 32

Repeated independent trials

• A coin tossed $n$ times and a series of $n$ die rolls are both examples of experiments with repeated trials.

• With repeated trials, the outcome space is $S^n = S \times S \times \dots \times S$ ($n$ times).

• The elements of $S^n$ are length-$n$ sequences of elements of $S$.

• If, in a set of repeated trials, the outcome of a trial is not influenced in any way by the outcomes of the other trials, either taken together or separately, we say that the trials are independent.

• For independent trials, the probability of a sequence is obtained by multiplying the probabilities of the individual trial outcomes.
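As a rough illustration, the product space and the product rule can be enumerated directly. The biased-coin probabilities below (0.6 and 0.4) are hypothetical values chosen only so that different sequences get different probabilities.

```python
from itertools import product
from math import prod

# Single-trial outcome space S with hypothetical probabilities for a biased coin.
p_single = {"H": 0.6, "T": 0.4}
n = 3

# S^n: all length-n sequences of elements of S.
sequences = list(product(p_single, repeat=n))

# Under independence, P(sequence) is the product of the single-trial probabilities.
p_seq = {seq: prod(p_single[s] for s in seq) for seq in sequences}

print(len(sequences))            # number of sequences, |S|^n
print(p_seq[("H", "T", "H")])    # 0.6 * 0.4 * 0.6, up to floating-point rounding
print(sum(p_seq.values()))       # the probabilities add up to 1, up to rounding
```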

5 / 32

Example

• A fair coin ($P(T) = P(H) = 0.5$) is tossed 3 times.

• The following 2 numbers that can be associated with each outcome of the experiment are random variables on this space:

  • $Y$: the number of heads in 3 tosses

  • $X$: the position of the first toss that is heads (0 if no heads appear)

| outcome | Y | X | P(outcome) |
|---------|---|---|------------|
| TTT     | 0 | 0 | 1/8        |
| HTT     | 1 | 1 | 1/8        |
| THT     | 1 | 2 | 1/8        |
| TTH     | 1 | 3 | 1/8        |
| HHT     | 2 | 1 | 1/8        |
| HTH     | 2 | 1 | 1/8        |
| THH     | 2 | 2 | 1/8        |
| HHH     | 3 | 1 | 1/8        |

• We can use the definition of independent events to compute P(outcome).

• $P(\text{outcome}) = \frac{1}{2} \cdot \frac{1}{2} \cdot \frac{1}{2} = \frac{1}{8}$ for all outcomes.

6 / 32

Example (ctd.)

• It follows that $S_Y = \{0, 1, 2, 3\}$ and $S_X = \{0, 1, 2, 3\}$.

• An important question that will often occur is “What is the probability that a RV takes a certain value?”

• Since the outcomes are disjoint, the probability of each value is the sum of the probabilities of the outcomes that produce it:

| value | $P_Y(y)$                           | $P_X(x)$                                 |
|-------|------------------------------------|------------------------------------------|
| 0     | $P_Y(0) = 1/8$                     | $P_X(0) = 1/8$                           |
| 1     | $P_Y(1) = 1/8 + 1/8 + 1/8 = 3/8$   | $P_X(1) = 1/8 + 1/8 + 1/8 + 1/8 = 4/8$   |
| 2     | $P_Y(2) = 1/8 + 1/8 + 1/8 = 3/8$   | $P_X(2) = 1/8 + 1/8 = 2/8$               |
| 3     | $P_Y(3) = 1/8$                     | $P_X(3) = 1/8$                           |

• The events $Y = y$ for $y = 0, 1, 2, 3$ are disjoint events, and their union is equal to the whole sample space $S$.

• Similarly, the events $X = x$ for $x = 0, 1, 2, 3$ are disjoint events, and their union is equal to the whole sample space $S$.

• If we are interested only in $Y$ (or only in $X$) instead of the experiment's outcome itself, then we can ignore the original outcome space $S$ and instead look at the outcome space $S_Y$ of $Y$ or $S_X$ of $X$.
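The two distributions can also be obtained by brute-force enumeration. This is only a sketch under the assumptions of the example (three independent tosses of a fair coin); the function names are illustrative.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# All 8 outcomes of three tosses of a fair coin, each with probability 1/8.
outcomes = ["".join(seq) for seq in product("HT", repeat=3)]
p_outcome = Fraction(1, 8)

def Y(s):
    """Number of heads in the three tosses."""
    return s.count("H")

def X(s):
    """Position (1-based) of the first head, or 0 if no head appears."""
    return s.find("H") + 1  # str.find returns -1 when "H" is absent

def distribution(rv):
    """Add up the probabilities of the disjoint outcomes mapped to each value."""
    dist = defaultdict(Fraction)
    for s in outcomes:
        dist[rv(s)] += p_outcome
    return dict(dist)

P_Y = distribution(Y)
P_X = distribution(X)
for value in range(4):
    print(value, P_Y[value], P_X[value])
```

Using `Fraction` keeps the probabilities exact, so the results can be compared directly with the fractions in the table.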

7 / 32

Expectation of a discrete RV

• The expectation of a discrete RV $Y$ is a real number computed as:

$$E[Y] = \sum_{y \in S_Y} y \cdot P_Y(y)$$

• The expectation is often also called the expected value, average, or mean.

• It is the “center of balance” of a distribution.

8 / 32

Properties

NOTE: This is valid for continuous and discrete RVs, but here we

only refer to discrete RVs.

1. The expectation of a constant random variable is the constant itself.

2. Let $X$ be a random variable, $c$ a real constant, and $Y = cX$. Then $E[Y] = cE[X]$.

3. The expectation of the sum of $n$ r.v.'s $Y_1, Y_2, \dots, Y_n$ is equal to the sum of their expectations:

$$E\left[\sum_{i=1}^{n} Y_i\right] = \sum_{i=1}^{n} E[Y_i]$$

9 / 32

Expectation of a discrete RV (ctd.)

NOTE: This is valid for continuous and discrete RVs, but here we

only refer to discrete RVs.

• Given a random variable $Y$ with probability distribution $P_Y(y)$, and a function $g(Y)$:

$$E[g(Y)] = \sum_{y \in S_Y} g(y) P_Y(y)$$

• In other words, the expectation of the transformed random variable $g(Y)$ can be found without finding the distribution of the transformed random variable, simply by applying the probability weights of the original random variable to the transformed values.

• This theorem is sometimes referred to as the “Law of the unconscious statistician” (LOTUS).
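A small numerical check may make the statement concrete. The sketch below uses the distribution of Y (number of heads in three fair tosses) and a hypothetical transformation g(y) = (y − 1)², picked only because it maps two different values of y to the same value.

```python
from fractions import Fraction

# Distribution of Y = number of heads in three fair coin tosses.
P_Y = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def g(y):
    """Hypothetical transformation, deliberately not one-to-one."""
    return (y - 1) ** 2

# LOTUS: weight g(y) by the probabilities of the original variable Y.
e_lotus = sum(g(y) * p for y, p in P_Y.items())

# Long way: first build the distribution of Z = g(Y), then take its expectation.
P_Z = {}
for y, p in P_Y.items():
    P_Z[g(y)] = P_Z.get(g(y), 0) + p
e_direct = sum(z * p for z, p in P_Z.items())

print(e_lotus, e_direct)  # both routes give the same expectation
```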

10 / 32

Example (slide 6)

Calculate the expected value of X and the expected value of Y.
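One way to work through this exercise is to apply the definition of expectation to the probabilities $P_Y(y)$ and $P_X(x)$ found for the three-toss experiment:

```latex
\begin{align*}
E[Y] &= 0\cdot\tfrac{1}{8} + 1\cdot\tfrac{3}{8} + 2\cdot\tfrac{3}{8} + 3\cdot\tfrac{1}{8}
      = \tfrac{12}{8} = 1.5, \\
E[X] &= 0\cdot\tfrac{1}{8} + 1\cdot\tfrac{4}{8} + 2\cdot\tfrac{2}{8} + 3\cdot\tfrac{1}{8}
      = \tfrac{11}{8} = 1.375.
\end{align*}
```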

11 / 32

Variance

• The variance is defined as

$$\operatorname{Var}[Y] = E\left[(Y - E[Y])^2\right]$$

• It can be thought of as a special kind of expectation which measures the average squared deviation from the expected value $E[Y]$.

• The variance is often computed using the following formula:

$$\operatorname{Var}[Y] = E[Y^2] - E[Y]^2$$

which for the discrete case translates into

$$\sum_{y \in S_Y} y^2 P_Y(y) - \left(\sum_{y \in S_Y} y P_Y(y)\right)^2.$$

• The square root of the variance is called the standard deviation.

12 / 32

Properties of the variance

1. The variance is always ≥ 0. When the variance is 0, the RV is deterministic (in other words, it takes one value only).

2. Given a RV $Y$ and a real constant $c$, $\operatorname{Var}[cY] = c^2 \operatorname{Var}[Y]$.

3. Given two RVs $X$ and $Y$, the variance of their sum is $\operatorname{Var}[X + Y] = \operatorname{Var}[X] + \operatorname{Var}[Y] + 2\operatorname{Cov}[X, Y]$.

  • If $X$ and $Y$ are independent: $\operatorname{Var}[X + Y] = \operatorname{Var}[X] + \operatorname{Var}[Y]$.

  • Given a sequence of independent variables $Y_1, Y_2, \dots, Y_n$:

$$\operatorname{Var}\left[\sum_{i=1}^{n} Y_i\right] = \sum_{i=1}^{n} \operatorname{Var}[Y_i].$$
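Properties 2 and 3 can be checked numerically on the three-toss experiment, where X (position of the first head) and Y (number of heads) are defined on the same outcomes and are not independent. The sketch assumes the usual identity Cov[X, Y] = E[XY] − E[X]E[Y], which is not stated on these slides; the helper names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Eight equally likely outcomes of three fair coin tosses.
outcomes = ["".join(seq) for seq in product("HT", repeat=3)]
p = Fraction(1, 8)

def Y(s):
    """Number of heads."""
    return s.count("H")

def X(s):
    """Position of the first head, 0 if there is none."""
    return s.find("H") + 1

def E(f):
    """Expectation of f(outcome) over the outcome space."""
    return sum(f(s) * p for s in outcomes)

def var(f):
    """Variance via E[f^2] - E[f]^2."""
    return E(lambda s: f(s) ** 2) - E(f) ** 2

# Assumed identity: Cov[X, Y] = E[XY] - E[X] E[Y].
cov_xy = E(lambda s: X(s) * Y(s)) - E(X) * E(Y)
var_sum = var(lambda s: X(s) + Y(s))

print(var_sum, var(X) + var(Y) + 2 * cov_xy)   # the two sides of property 3
print(var(lambda s: 3 * Y(s)), 9 * var(Y))     # property 2 with c = 3
```

Because the covariance here is not zero, dropping the 2Cov[X, Y] term would give the wrong variance for X + Y in this example.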

13 / 32

Example (slide 6)

Calculate the variance of X and the variance of Y.
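A possible way to check a hand computation is the short script below, which applies Var = E[V²] − E[V]² to the two distributions from the three-toss experiment. The function names are illustrative.

```python
from fractions import Fraction

# Distributions of Y (number of heads) and X (position of the first head).
P_Y = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}
P_X = {0: Fraction(1, 8), 1: Fraction(4, 8), 2: Fraction(2, 8), 3: Fraction(1, 8)}

def expectation(dist):
    """Sum of value times probability."""
    return sum(v * p for v, p in dist.items())

def variance(dist):
    """Second moment minus squared mean."""
    second_moment = sum(v ** 2 * p for v, p in dist.items())
    return second_moment - expectation(dist) ** 2

print(variance(P_Y))  # 3/4
print(variance(P_X))  # 47/64
```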

14 / 32

Discrete probability models

• In this section we describe a few naturally occurring families of discrete probability models that arise out of certain idealized random experiments.

• We will say that a random variable belongs to a certain family of probability models if it follows a specific distribution.

• The term “family” here refers to the fact that the distributions of these random variables have specific functional forms that can be described in terms of one or more parameters.

15 / 32

Bernoulli trials and Binomial experiments

The term Bernoulli trials, or Binomial experiment, refers to the following random experiment:

• the experiment consists of a sequence of independent trials,

• each trial results in one of two possible outcomes, success or failure (this is often called a binary experiment),

• the probability of success in each trial is a fixed number $\theta$ with $0 < \theta < 1$.

16 / 32

The Binomial distribution

• Suppose we conduct a binomial experiment consisting of $n$ trials, where $n$ is predetermined, and count the number of successes in these $n$ trials.

• This count $Y$ is said to be a Binomial random variable with parameters $(n, \theta)$ if it follows the Binomial distribution given by:

$$P_Y(y) = P(Y = y) = \binom{n}{y} \theta^y (1 - \theta)^{n-y}, \qquad y = 0, 1, 2, \dots, n.$$

• The expectation and variance are given by:

$$E[Y] = n\theta \quad \text{and} \quad \operatorname{Var}[Y] = n\theta(1 - \theta)$$
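The formula translates almost directly into code. The sketch below uses only the standard library, with `math.comb` for the binomial coefficient; the parameters n = 3 and θ = 0.5 are chosen to match the three-coin-toss experiment.

```python
from math import comb

def binomial_pmf(y, n, theta):
    """P(Y = y) for Y ~ Binomial(n, theta)."""
    return comb(n, y) * theta**y * (1 - theta) ** (n - y)

n, theta = 3, 0.5
pmf = [binomial_pmf(y, n, theta) for y in range(n + 1)]

# Mean and variance computed from the pmf, to compare with n*theta and n*theta*(1-theta).
mean = sum(y * p for y, p in enumerate(pmf))
var = sum(y**2 * p for y, p in enumerate(pmf)) - mean**2

print(pmf)        # [0.125, 0.375, 0.375, 0.125]
print(mean, var)  # 1.5 0.75
```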

17 / 32

Example (slide 6)

In the experiment described in slide 6, we obtained the following table:

| $y$ | $P_Y(y)$                           |
|-----|------------------------------------|
| 0   | $P_Y(0) = 1/8$                     |
| 1   | $P_Y(1) = 1/8 + 1/8 + 1/8 = 3/8$   |
| 2   | $P_Y(2) = 1/8 + 1/8 + 1/8 = 3/8$   |
| 3   | $P_Y(3) = 1/8$                     |

Once we establish that $Y \sim \text{Binomial}(3, 0.5)$, we can readily compute the probability distribution without having to construct $S$ and $S_Y$. For instance:

$$P_Y(1) = \binom{3}{1}\, 0.5^{1}\, (1 - 0.5)^{3-1} = 0.375$$

18 / 32

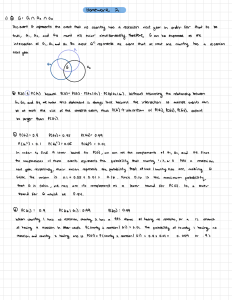

The Binomial distribution

3

4

p(x)

0

2

3

4

5

1

2

4

6

8

10

7

10 13 16 19 22

19

9

12 15 18 21

x

23

25

27

29

0.12

p(x)

0.00

6

21

0.00

p(x)

0.04

0.12

0.06

3

5

x

Y~Binom(p=0.9,

n=100)

0.08

x

Y~Binom(p=0.5,

n=100)

0.00

0

4

0.20

4

x

Y~Binom(p=0.1,

n=100)

3

0.00

p(x)

0.10

p(x)

0

2

x

Y~Binom(p=0.9,

n=30)

0.00

p(x)

0.00 0.10 0.20

1

x

Y~Binom(p=0.5,

n=30)

0.10

2

0.06

1

x

Y~Binom(p=0.1,

n=30)

0.0 0.2 0.4 0.6

0.30

0.15

p(x)

0

p(x)

Y~Binom(p=0.9, n=5)

0.00

p(x)

Y~Binom(p=0.5, n=5)

0.0 0.2 0.4 0.6

Y~Binom(p=0.1, n=5)

31

37

43

49

x

55

61

67

78

82

86

90

x

94

98

19 / 32

The Bernoulli distribution

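• The special case where $Y \sim \text{Binomial}(n, \theta)$ and $n = 1$ is referred to as the Bernoulli distribution.

• A Bernoulli RV has only one parameter $\theta$, and its probability distribution is given by:

$$P_Y(y) = \theta^{y} (1 - \theta)^{1-y}, \qquad y \in \{0, 1\}.$$

• $E[Y] = \theta$

• $\operatorname{Var}[Y] = \theta(1 - \theta)$

• The Binomial RV is often derived as the sum of $n$ independent and identically distributed Bernoulli RVs.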

20 / 32

Exercise: Binomial RV as the sum of Bernoullis

The Binomial RV is often derived as the sum of $n$ independent and identically distributed Bernoulli RVs. Let $X_1, X_2, \dots, X_n$ be independent and identically distributed Bernoulli($\theta$) random variables, and let $Y = \sum_{i=1}^{n} X_i$. Find $E[Y]$ and $\operatorname{Var}[Y]$.
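One possible route through the exercise uses the sum rules for expectation and for the variance of independent variables. Each $X_i$ takes the value 1 with probability $\theta$ and 0 with probability $1 - \theta$, and $X_i^2 = X_i$ because $X_i$ only takes the values 0 and 1:

```latex
\begin{align*}
E[X_i] &= 0\cdot(1-\theta) + 1\cdot\theta = \theta, \\
\operatorname{Var}[X_i] &= E[X_i^2] - E[X_i]^2 = \theta - \theta^2 = \theta(1-\theta), \\
E[Y] &= \sum_{i=1}^{n} E[X_i] = n\theta, \\
\operatorname{Var}[Y] &= \sum_{i=1}^{n} \operatorname{Var}[X_i] = n\theta(1-\theta)
  \quad \text{(by independence)}.
\end{align*}
```

These match the expectation and variance of a Binomial($n$, $\theta$) random variable.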

21 / 32

Historical fact (Ross, 2005)

Independent trials having a common probability of success were first

studied by the Swiss mathematician Jacques Bernoulli (1654–1705). His

book Ars Conjectandi (The Art of Conjecturing) was published by his nephew

Nicholas eight years after his death in 1713. Jacques Bernoulli was from the

first generation of the most famous mathematical family of all time.

Altogether, there were between 8 and 12 Bernoullis, spread over three

generations, who made fundamental contributions to probability, statistics,

and mathematics. One difficulty in knowing their exact number is the fact

that several had the same name. (For example, two of the sons of Jacques’s

brother Jean were named Jacques and Jean.) Another difficulty is that

several of the Bernoullis were known by different names in different places.

Our Jacques (sometimes written Jaques) was, for instance, also known as

Jakob (sometimes written Jacob) and as James Bernoulli. But whatever their

number, their influence and output were prodigious.

22 / 32

Multinomial distribution

• The multinomial distribution is a generalization of the binomial distribution.

• The generalization allows us to model experiments that are not binary.

• Let’s consider a trial that has $m$ outcomes or $m$ categories, with each category having a given fixed success probability.

• For $n$ independent trials, each of which leads to a success for exactly one of the $m$ categories, the multinomial distribution gives the probability of any particular combination of numbers of successes for the various categories.

23 / 32

Multinomial distribution: Example

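• Let’s start with the simple example of a binary experiment.

• Let’s assume a box contains 5 red balls and 15 yellow balls.

• We draw 3 balls with replacement.

• The following 2 numbers that can be associated with each outcome of the experiment are random variables on this space:

  • $n_1$: the number of red balls in 3 draws

  • $n_2$: the number of yellow balls in 3 draws

• Let $\theta_1 = 5/20 = 1/4$ indicate the probability of a red ball and $\theta_2 = 15/20 = 3/4$ the probability of a yellow ball.

| outcome | $n_1$ | $n_2$ | P(outcome)                |
|---------|-------|-------|---------------------------|
| YYY     | 0     | 3     | $(3/4)^3 = 27/64$         |
| RYY     | 1     | 2     | $(1/4)(3/4)^2 = 9/64$     |
| YRY     | 1     | 2     | $(1/4)(3/4)^2 = 9/64$     |
| YYR     | 1     | 2     | $(1/4)(3/4)^2 = 9/64$     |
| RRY     | 2     | 1     | $(1/4)^2(3/4) = 3/64$     |
| RYR     | 2     | 1     | $(1/4)^2(3/4) = 3/64$     |
| YRR     | 2     | 1     | $(1/4)^2(3/4) = 3/64$     |
| RRR     | 3     | 0     | $(1/4)^3 = 1/64$          |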

24 / 32

Multinomial distribution: Example

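• Note that $n = n_1 + n_2$, and therefore the combination of counts $n_1, n_2$ can occur $\frac{n!}{n_1!\, n_2!} = \binom{n}{n_1} = \binom{n}{n_2}$ times.

• The probability of observing the counts $n_1$ and $n_2$ is then given by:

$$\binom{n}{n_1} \theta_1^{n_1} \theta_2^{n_2}$$

• Let’s now assume that the box contains 5 red balls, 10 yellow balls and 5 blue balls.

• We then have $\theta_1 = 5/20 = 1/4$, $\theta_2 = 10/20 = 1/2$ and $\theta_3 = 5/20 = 1/4$:

| outcome | $n_1$ | $n_2$ | $n_3$ | P(outcome)                  |
|---------|-------|-------|-------|-----------------------------|
| RRR     | 3     | 0     | 0     | $(1/4)^3 = 1/64$            |
| YYY     | 0     | 3     | 0     | $(1/2)^3 = 1/8$             |
| BBB     | 0     | 0     | 3     | $(1/4)^3 = 1/64$            |
| RYY     | 1     | 2     | 0     | $(1/4)(1/2)^2 = 1/16$       |
| RYB     | 1     | 1     | 1     | $(1/4)(1/2)(1/4) = 1/32$    |
| ...     | ...   | ...   | ...   | ...                         |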

25 / 32

Multinomial distribution: Example

• Note that $n = n_1 + n_2 + n_3$, and therefore the combination of counts $n_1, n_2, n_3$ can occur $\frac{n!}{n_1!\, n_2!\, n_3!} = \binom{n}{n_1, n_2}$ times.

• The combination of counts $n_1, n_2, n_3$ has probability:

$$\binom{n}{n_1, n_2} \theta_1^{n_1} \theta_2^{n_2} \theta_3^{n_3}$$

• Generally speaking, the probability of observing a set of counts $(n_1, \dots, n_m)$ is obtained by multiplying the probability of one sequence exhibiting those counts, given by $\prod_{i=1}^{m} \theta_i^{n_i}$, by the total number of sequences exhibiting those counts:

$$P(n_1, n_2, \dots, n_m) = \frac{n!}{n_1!\, n_2! \cdots n_m!} \prod_{i=1}^{m} \theta_i^{n_i}$$

26 / 32

Multinomial distribution

• Denote by $Y_i$ the number of successes in category $i$ ($i = 1, \dots, m$), and by $\theta_i$ the probability that a given extraction is a success in category $i$. The probability distribution of a multinomial random variable is then:

$$P_{Y_1, Y_2, \dots, Y_m}(y_1, y_2, \dots, y_m) = \frac{n!}{y_1!\, y_2! \cdots y_m!} \prod_{i=1}^{m} \theta_i^{y_i}, \qquad y_1 + y_2 + \dots + y_m = n.$$

• For $m = 2$, the above equation is the binomial distribution.
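A minimal sketch of the multinomial probability, using exact fractions and the box with 5 red, 10 yellow and 5 blue balls (so the category probabilities are 1/4, 1/2 and 1/4). The function name `multinomial_pmf` is an assumption of this illustration.

```python
from fractions import Fraction
from math import factorial, prod

def multinomial_pmf(counts, thetas):
    """P(Y_1 = y_1, ..., Y_m = y_m) for n = sum(counts) independent trials."""
    n = sum(counts)
    coefficient = factorial(n)
    for y in counts:
        coefficient //= factorial(y)  # exact: every partial quotient is an integer
    return coefficient * prod(t**y for t, y in zip(thetas, counts))

thetas = (Fraction(1, 4), Fraction(1, 2), Fraction(1, 4))  # red, yellow, blue
p_one_each = multinomial_pmf((1, 1, 1), thetas)
p_all_red = multinomial_pmf((3, 0, 0), thetas)

# With m = 2 categories the same function gives a binomial probability.
p_binary = multinomial_pmf((1, 2), (Fraction(1, 4), Fraction(3, 4)))

print(p_one_each, p_all_red, p_binary)  # 3/16 1/64 27/64
```

The value 3/16 for one ball of each colour is 3! = 6 orderings times the probability 1/32 of any single ordering such as RYB.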

27 / 32

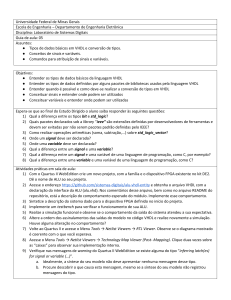

Geometric distribution

• Suppose that independent trials, each having a probability $\theta$, $0 < \theta < 1$, of being a success, are performed until a success occurs. If we let $Y$ equal the number of trials required, then

$$P_Y(y) = (1 - \theta)^{y-1}\, \theta, \qquad y = 1, 2, \dots$$

• Any random variable $Y$ whose probability distribution is given by the equation above is said to be a geometric random variable with parameter $\theta$.

• This model is useful when we are interested in the number of trials needed to obtain the first success.

• $E[Y] = \dfrac{1}{\theta}$ and $\operatorname{Var}[Y] = \dfrac{1 - \theta}{\theta^2}$
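The mean and variance formulas can be checked numerically by truncating the infinite sum. This is only a sketch: the cut-off at 200 trials is an arbitrary choice that is more than enough for θ = 0.5, and a smaller θ would need a longer range.

```python
def geometric_pmf(y, theta):
    """P(Y = y): y - 1 failures followed by the first success on trial y."""
    return (1 - theta) ** (y - 1) * theta

theta = 0.5
# Truncate the infinite support; the tail beyond 200 is negligible for theta = 0.5.
ys = range(1, 201)
total = sum(geometric_pmf(y, theta) for y in ys)
mean = sum(y * geometric_pmf(y, theta) for y in ys)
var = sum(y**2 * geometric_pmf(y, theta) for y in ys) - mean**2

print(total)  # close to 1
print(mean)   # close to 1 / theta
print(var)    # close to (1 - theta) / theta**2
```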

28 / 32

Geometric distribution

[Figure: three panels showing the geometric probability mass function (y_dgeom against Index) for Y ~ geom(p=0.8), Y ~ geom(p=0.5) and Y ~ geom(p=0.1).]

29 / 32

The Poisson distribution

• A random variable $Y$ is said to be a Poisson RV with parameter $\lambda$ if it follows the Poisson distribution given by:

$$P_Y(y) = P(Y = y) = e^{-\lambda}\, \frac{\lambda^{y}}{y!}, \qquad y = 0, 1, 2, \dots$$

• The mean and variance are given by $E[Y] = \lambda$ and $\operatorname{Var}[Y] = \lambda$.

• $\lambda$ is called the rate parameter, and it represents the average number of occurrences in a given interval.

• The distribution expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event.

• It can be derived as a limit of the Binomial distribution when $n \to \infty$ and $\theta \to 0$ with $n\theta \to \lambda$.

30 / 32

The Poisson approximation to Binomial

Consider a large number $n$ of Bernoulli trials, and let the probability of success $\theta$ for each trial be such that $\theta \to 0$ and $n\theta \to \lambda > 0$ as $n \to \infty$. Then the probability distribution of $Y$, the number of successes in these $n$ trials (which has a Binomial($n$, $\theta$) distribution), is approximately Poisson($\lambda$), in the sense that, for every fixed nonnegative integer $y$,

$$P_Y(y) = \binom{n}{y} \theta^{y} (1 - \theta)^{n-y} \;\to\; e^{-\lambda}\, \frac{\lambda^{y}}{y!} \qquad \text{as } n \to \infty.$$
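The convergence can be observed numerically. In the sketch below, λ = 2 and y = 3 are arbitrary illustrative choices, and θ is set to λ/n so that nθ stays equal to λ while θ shrinks.

```python
from math import comb, exp, factorial

def binomial_pmf(y, n, theta):
    """P(Y = y) for Y ~ Binomial(n, theta)."""
    return comb(n, y) * theta**y * (1 - theta) ** (n - y)

def poisson_pmf(y, lam):
    """P(Y = y) for Y ~ Poisson(lam)."""
    return exp(-lam) * lam**y / factorial(y)

lam, y = 2.0, 3
ns = (10, 100, 1000, 10000)

# theta = lam / n keeps n * theta = lam while theta goes to 0 as n grows.
errors = [abs(binomial_pmf(y, n, lam / n) - poisson_pmf(y, lam)) for n in ns]

for n, err in zip(ns, errors):
    print(n, binomial_pmf(y, n, lam / n), poisson_pmf(y, lam), err)
```

The gap between the two probabilities shrinks as n grows, which is the sense in which Binomial(n, θ) is approximately Poisson(λ).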

31 / 32

The Poisson distribution

[Figure: six panels showing the Poisson probability mass function (y_dpois against Index) for Y ~ Pois(0.5), Y ~ Pois(2), Y ~ Pois(5), Y ~ Pois(10), Y ~ Pois(20) and Y ~ Pois(30).]

32 / 32