Semantic Communication for SWIPT systems

Nizar Khalfet, Costas Psomas, Symeon Chatzinotas, and Ioannis Krikidis

IRIDA Research center for Communication Technologies

Department of Electrical and Computer Engineering

University of Cyprus

IRIDA Workshop


1. Introduction/Motivation
2. Contributions
3. DM channel with SWIPT and semantics
   Achievable region for the DM channel
   Converse semantic information-energy region for the DM channel
4. Gaussian channel with SWIPT and semantics
5. Numerical results
6. Conclusion/Extension


Introduction/Motivation

It is predicted that the global amount of data generated by network nodes will increase to 175 zettabytes in 2025.

Semantic communication (SemCom) transmits only the key information to the destination: information irrelevant to the specific task can be safely removed without causing any performance degradation.

Existing research contributions reveal that SemCom is promising when the SNR is low and/or the available wireless resources are limited.

SemCom generally requires less power/bandwidth than conventional bit-level communication (BitCom).

Semantic transmission (SemTrans) enables users to achieve performance comparable to bit-level transmission (BitTrans) while consuming less transmit power and causing less interference.


Contributions

A novel framework is proposed for studying the fundamental limits of simultaneous wireless information and power transfer (SWIPT) with semantic communication over a discrete memoryless (DM) channel and a Gaussian channel.

An achievable information-energy region, as well as its converse, is characterized for both the DM channel and the Gaussian channel by incorporating the semantic context into the communication.

Higher performance than conventional communication approaches (i.e., without semantics) is observed when a low semantic ambiguity code is used.


DM channel with SWIPT and semantics

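[Figure: system model with the semantic channel P(Q|W); the transmitter's input X reaches the information receiver (IR) through P_{Y|X} (output Y) and the energy receiver (ER) through P_{S|X} (output S).]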

The information receiver (IR) observes the output y with probability

$$P(\mathbf{Y} = \mathbf{y} \mid \mathbf{X} = \mathbf{x}) = \prod_{i=1}^{n} P(y_i \mid x_i), \tag{1}$$

where P(y_i | x_i) is the transition probability distribution of the channel.

Q: semantic context random variable, distributed according to P(Q|W), with

$$\sum_{q \in \mathcal{Q}} P(Q = q \mid W = w) = 1. \tag{2}$$
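As a rough illustration of (1) and (2), the sketch below evaluates the sequence likelihood of a memoryless channel as a product of per-symbol transition probabilities and checks that each row of a semantic channel P(Q|W) sums to one. The binary channel, the word/context labels and the function names are hypothetical choices made only for this example.

```python
import math

def sequence_likelihood(x, y, P):
    """Eq. (1): P(Y = y | X = x) = prod_i P(y_i | x_i) for a memoryless channel.

    P is a transition matrix with P[a][b] = P(y_i = b | x_i = a).
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length n")
    return math.prod(P[xi][yi] for xi, yi in zip(x, y))

def is_valid_context_channel(P_q_given_w, tol=1e-12):
    """Eq. (2): every row P(Q = . | W = w) must sum to one over the context set."""
    return all(abs(sum(row.values()) - 1.0) <= tol for row in P_q_given_w.values())

# Hypothetical binary channel with crossover 0.1 and a toy semantic channel.
P_bsc = [[0.9, 0.1], [0.1, 0.9]]
P_q_given_w = {"car": {"q1": 1.0, "q2": 0.0}, "crane": {"q1": 0.5, "q2": 0.5}}

print(sequence_likelihood([0, 1, 1], [0, 1, 0], P_bsc))  # equals 0.9 * 0.9 * 0.1
print(is_valid_context_channel(P_q_given_w))
```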


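The semantic distance between two words is defined as

$$d(w, \hat{w}) = 1 - \mathrm{sim}(w, \hat{w}), \tag{3}$$

where 0 ≤ sim(w, ŵ) ≤ 1 is the semantic similarity between w and ŵ, the word recovered at the receiver.

The average semantic error, denoted by P_SE, is given by

$$P_{SE}(q) = \sum_{w \in \mathcal{W},\, q \in \mathcal{Q},\, \mathbf{y} \in \mathcal{Y}^n,\, \mathbf{x} \in \mathcal{X}^n} P(\mathbf{Y} = \mathbf{y} \mid \mathbf{X} = \mathbf{x})\, P(Q = q \mid W = w)\, P(W = w)\, d(w, \hat{w}). \tag{4}$$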
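The sum in (4) can be evaluated by brute force for tiny examples. The sketch below assumes a deterministic encoder x = encode(w) (so the sum over x collapses) and a hypothetical context-aware decoder ŵ = decode(y, q); the codebook, the decoder and the similarity score sim(car, automobile) = 0.9 are illustrative assumptions, not values from the slides. Decoding "car" as "automobile" costs only d = 0.1, while decoding it as "bird" costs d = 1.

```python
import itertools
import math

def semantic_distance(w, w_hat, sim):
    """Eq. (3): d(w, w_hat) = 1 - sim(w, w_hat); sim(w, w) = 1, unknown pairs get 0."""
    if w == w_hat:
        return 0.0
    return 1.0 - sim.get((w, w_hat), sim.get((w_hat, w), 0.0))

def average_semantic_error(P_w, P_q_given_w, encode, decode, P_y_given_x, n, sim):
    """Eq. (4) for a deterministic encoder x = encode(w) and a context-aware
    decoder w_hat = decode(y, q); both are hypothetical choices."""
    total = 0.0
    for w, pw in P_w.items():
        x = encode(w)
        for q, pq in P_q_given_w[w].items():
            for y in itertools.product(range(len(P_y_given_x[0])), repeat=n):
                py = math.prod(P_y_given_x[xi][yi] for xi, yi in zip(x, y))
                total += py * pq * pw * semantic_distance(w, decode(y, q), sim)
    return total

codebook = {"car": (0, 0), "bird": (1, 1)}
sim = {("car", "automobile"): 0.9}  # hypothetical similarity score

def decode(y, q):
    if sum(y) == 0:
        return "car"
    if sum(y) == 2:
        return "bird"
    # Ambiguous output: the context decides between a vehicle and an animal.
    if q == "q1":
        return "automobile" if y == (0, 1) else "car"
    return "bird"

P_bsc = [[0.9, 0.1], [0.1, 0.9]]
P_w = {"car": 0.5, "bird": 0.5}
P_q_given_w = {"car": {"q1": 1.0}, "bird": {"q2": 1.0}}
print(average_semantic_error(P_w, P_q_given_w, codebook.get, decode, P_bsc, 2, sim))
```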


The energy harvester (EH) at the energy receiver (ER) observes the output s with probability

$$P(\mathbf{S} = \mathbf{s} \mid \mathbf{X} = \mathbf{x}) = \prod_{i=1}^{n} P(s_i \mid x_i), \tag{5}$$

E(s): the average harvested energy, given by

$$E(\mathbf{s}) = \frac{1}{n} \sum_{i=1}^{n} g(s_i), \tag{6}$$

where g : S → R+ gives the energy harvested from each output symbol.
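A minimal sketch of (5) and (6), assuming a binary EH output alphabet. The energy map g (only symbol 1 carries one unit of energy) and the EH transition matrix are hypothetical values chosen for illustration.

```python
import math

def harvest_sequence_prob(x, s, P_s_given_x):
    """Eq. (5): P(S = s | X = x) = prod_i P(s_i | x_i)."""
    return math.prod(P_s_given_x[xi][si] for xi, si in zip(x, s))

def average_harvested_energy(s, g):
    """Eq. (6): E(s) = (1/n) * sum_i g(s_i), with g : S -> R+."""
    return sum(g[si] for si in s) / len(s)

g = {0: 0.0, 1: 1.0}                      # hypothetical energy per output symbol
P_s_given_x = [[0.8, 0.2], [0.2, 0.8]]    # hypothetical EH channel
s = [1, 1, 0, 1]
print(average_harvested_energy(s, g))     # 3 energetic symbols out of 4
print(harvest_sequence_prob([1, 1, 0, 1], s, P_s_given_x))
```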


Achievable rates

From a semantic communication standpoint, an information-energy rate pair (R, b) is achievable if the probability of misinterpreting the message w given the context Q, P_SE(q), satisfies P_SE(q) → 0 as n → ∞, and the energy shortage probability P_ES(b) = P[E(y) ≤ b] satisfies P_ES(b) → 0 as n → ∞.
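To see why the energy shortage condition is reasonable, consider a toy case (an assumption for illustration): the EH outputs S_i are i.i.d. with P(S_i = 1) = p, g(1) = 1 and g(0) = 0, so the average harvested energy is K/n with K ~ Binomial(n, p). The sketch computes the shortage probability exactly; when p > b it decays to zero as n grows, whereas if b exceeds the mean harvested energy it would tend to one instead.

```python
import math

def energy_shortage_probability(n, p_one, b):
    """P[E(S) <= b] for i.i.d. binary S_i with P(S_i = 1) = p_one and
    g(1) = 1, g(0) = 0, so that E(S) = K/n with K ~ Binomial(n, p_one)."""
    k_max = math.floor(b * n)
    return sum(math.comb(n, k) * p_one ** k * (1 - p_one) ** (n - k)
               for k in range(0, k_max + 1))

for n in (10, 100, 500):
    print(n, energy_shortage_probability(n, p_one=0.6, b=0.5))
```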


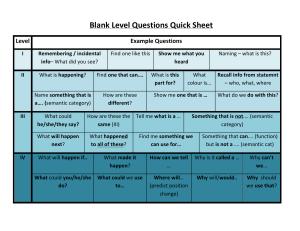

Achievable region for the DM channel

Semantic information-energy achievable region

The information-energy capacity in the case of a non-colocated EH is lower bounded by the function C : [b_1, b_0] → R+, i.e.,

$$C(b) \ge \max_{\rho,\, P(X \mid W):\, E(\mathbf{y}) \ge b} \; I(X;Y) - H(X \mid W) + H(Q),$$

where:

I(X;Y) = H(X) − H(X|Y) is the mutual information between X and Y.

H(X|W) is the equivocation of the semantic encoder, i.e., the uncertainty about X that remains given the message W.

H(Q) measures the semantic context source, i.e., the local information available at the transmitter and receiver.

A higher H(Q) indicates a stronger ability of the receiver to interpret the received messages.
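The objective inside the maximization can be evaluated numerically for a fixed choice of P(X|W). The sketch below does only that: the maximization over ρ and P(X|W) and the energy constraint are left out, and the toy distributions (two equiprobable words, one bit per word, a BSC with crossover 0.1, and a context that identifies the word) are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a probability vector."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def achievable_objective(P_w, P_x_given_w, P_y_given_x, P_q_given_w):
    """Evaluate I(X;Y) - H(X|W) + H(Q) for one fixed choice of P(X|W).

    Conditional laws are row-stochastic lists: P_x_given_w[w][x] = P(x|w), etc.
    """
    words = range(len(P_w))
    nx, ny, nq = len(P_y_given_x), len(P_y_given_x[0]), len(P_q_given_w[0])
    p_x = [sum(P_w[w] * P_x_given_w[w][x] for w in words) for x in range(nx)]
    p_y = [sum(p_x[x] * P_y_given_x[x][y] for x in range(nx)) for y in range(ny)]
    p_q = [sum(P_w[w] * P_q_given_w[w][q] for w in words) for q in range(nq)]
    # I(X;Y) computed as H(Y) - H(Y|X), which equals H(X) - H(X|Y).
    h_y_given_x = sum(p_x[x] * entropy(P_y_given_x[x]) for x in range(nx))
    mutual_info = entropy(p_y) - h_y_given_x
    h_x_given_w = sum(P_w[w] * entropy(P_x_given_w[w]) for w in words)
    return mutual_info - h_x_given_w + entropy(p_q)

P_w = [0.5, 0.5]
P_x_given_w = [[1.0, 0.0], [0.0, 1.0]]
P_bsc = [[0.9, 0.1], [0.1, 0.9]]
P_q_given_w = [[1.0, 0.0], [0.0, 1.0]]
print(achievable_objective(P_w, P_x_given_w, P_bsc, P_q_given_w))  # 2 - h2(0.1), about 1.531
```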


Proof of Achievable region: Jointly typical sequences

Definition

The set A_ε^(n) of jointly typical sequences {(x^n, y^n)} with respect to p(x, y) is the set of n-sequences whose empirical entropies are ε-close to the true entropies, i.e., the pairs (x^n, y^n) ∈ X^n × Y^n such that

$$\left| -\frac{1}{n} \log p(x^n) - H(X) \right| < \epsilon, \tag{7}$$

$$\left| -\frac{1}{n} \log p(y^n) - H(Y) \right| < \epsilon, \tag{8}$$

$$\left| -\frac{1}{n} \log p(x^n, y^n) - H(X, Y) \right| < \epsilon, \tag{9}$$

where

$$p(x^n, y^n) = \prod_{i=1}^{n} p(x_i, y_i). \tag{10}$$
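Conditions (7) to (9) can be checked directly for a given pair of sequences. In this sketch, logs are base 2, the joint law is passed as a dictionary, and the example law (uniform input through a BSC with crossover 0.1) and the sequences are hypothetical. A pair whose flip fraction matches the crossover probability is typical; a pair with no flips at all is not.

```python
import math

def is_jointly_typical(xs, ys, p_xy, eps):
    """Check conditions (7)-(9) for (x^n, y^n) under the i.i.d. law (10).

    p_xy maps (x, y) pairs to probabilities; logs are base 2.
    """
    if any(p_xy.get((a, b), 0.0) == 0.0 for a, b in zip(xs, ys)):
        return False  # a zero-probability pair can never be typical
    n = len(xs)
    p_x, p_y = {}, {}
    for (a, b), p in p_xy.items():
        p_x[a] = p_x.get(a, 0.0) + p
        p_y[b] = p_y.get(b, 0.0) + p

    def H(dist):
        return -sum(p * math.log2(p) for p in dist.values() if p > 0)

    # Empirical entropies -(1/n) log p(.) of the observed sequences.
    emp_x = -sum(math.log2(p_x[a]) for a in xs) / n
    emp_y = -sum(math.log2(p_y[b]) for b in ys) / n
    emp_xy = -sum(math.log2(p_xy[(a, b)]) for a, b in zip(xs, ys)) / n
    return (abs(emp_x - H(p_x)) < eps
            and abs(emp_y - H(p_y)) < eps
            and abs(emp_xy - H(p_xy)) < eps)

p_xy = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}
xs = [0] * 10 + [1] * 10
ys = list(xs)
ys[0], ys[10] = 1, 0          # 2 flips out of 20, matching the crossover 0.1
print(is_jointly_typical(xs, ys, p_xy, eps=0.05))
print(is_jointly_typical(xs, list(xs), p_xy, eps=0.05))  # no flips at all
```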


Theorem

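P{(X^n, Y^n) ∈ A_ε^(n)} → 1 as n → ∞.

|A_ε^(n)| ≤ 2^{n(H(X,Y)+ε)}.

If (X̃^n, Ỹ^n) has the distribution p(x^n)p(y^n), i.e., X̃^n and Ỹ^n are independent with the same marginals as X^n and Y^n, then

$$P\{(\tilde{X}^n, \tilde{Y}^n) \in A_\epsilon^{(n)}\} \le 2^{-n(I(X;Y) - 3\epsilon)}. \tag{11}$$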
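For a concrete feel of these bounds, take a uniform binary input through a BSC with crossover ρ (a hypothetical example). Then H(X,Y) = H(X) + H(Y|X) = 1 + h2(ρ) and, since Y is also uniform, I(X;Y) = 1 − h2(ρ). The sketch evaluates the size bound (in log2 form) and the bound on the probability that an independent pair looks jointly typical.

```python
import math

def h2(p):
    """Binary entropy in bits."""
    return 0.0 if p in (0.0, 1.0) else -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def joint_aep_bounds(rho, n, eps):
    """BSC(rho) with uniform input: H(X,Y) = 1 + h2(rho), I(X;Y) = 1 - h2(rho).

    Returns (log2 of the bound on |A|, bound on P[independent pair is typical]).
    """
    h_xy = 1 + h2(rho)
    mi = 1 - h2(rho)
    log2_size_bound = n * (h_xy + eps)
    false_typical_bound = 2 ** (-n * (mi - 3 * eps))
    return log2_size_bound, false_typical_bound

print(joint_aep_bounds(rho=0.1, n=100, eps=0.01))
```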


Proof of the Achievable region

A semantic error occurs if a received message is not correctly decoded by the receiver using the context Q.

Let N be sufficiently large, and let Q_1, Q_2, ..., Q_N be the sequence of observed contexts, X_1, X_2, ..., X_N the sequence of transmitted signals, and Y_1, Y_2, ..., Y_N the sequence of received signals.

By the AEP, there are 2^{NH(Q)} typical context sequences.

By the AEP and the channel coding theorem, there are 2^{(I(X;Y)−R)N} typical input sequences.

By the AEP, there are 2^{−NH(X|W)} typical sequences of X given the context.

Hence there are 2^{(I(X;Y)−H(X|W)+H(Q))N} typical input sequences given the context.


Converse region for the DM channel

Semantic information-energy converse region

The information-energy capacity in the case of a non-colocated EH is upper bounded by the function C : [b_1, b_0] → R+, i.e.,

$$C(b) \le \max_{\rho,\, P(X \mid W):\, E(\rho) \ge b} \; H(X) - H(X \mid W) - H(X \mid W, Y) + \sum_{w} p(w)\, H(Q \mid W = w).$$
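The converse expression can likewise be evaluated for fixed distributions. This sketch builds the joint law p(w, x, y) = p(w)p(x|w)p(y|x) of the chain W → X → Y, uses H(X|W,Y) = H(W,X,Y) − H(W,Y), and leaves out the maximization and the energy constraint. The toy inputs below are hypothetical.

```python
import math
from itertools import product

def H(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def converse_objective(P_w, P_x_given_w, P_y_given_x, P_q_given_w):
    """H(X) - H(X|W) - H(X|W,Y) + sum_w p(w) H(Q|W=w) for fixed distributions."""
    W, X, Y = range(len(P_w)), range(len(P_y_given_x)), range(len(P_y_given_x[0]))
    # Joint law p(w, x, y) = p(w) p(x|w) p(y|x) for the chain W -> X -> Y.
    p_wxy = {(w, x, y): P_w[w] * P_x_given_w[w][x] * P_y_given_x[x][y]
             for w, x, y in product(W, X, Y)}
    p_x = [sum(p_wxy[(w, x, y)] for w in W for y in Y) for x in X]
    p_wy = [sum(p_wxy[(w, x, y)] for x in X) for w, y in product(W, Y)]
    h_x = H(p_x)
    h_x_given_w = sum(P_w[w] * H(P_x_given_w[w]) for w in W)
    h_x_given_wy = H(p_wxy.values()) - H(p_wy)  # H(X|W,Y) = H(W,X,Y) - H(W,Y)
    context_term = sum(P_w[w] * H(P_q_given_w[w]) for w in W)
    return h_x - h_x_given_w - h_x_given_wy + context_term

P_w = [0.5, 0.5]
identity = [[1.0, 0.0], [0.0, 1.0]]
P_bsc = [[0.9, 0.1], [0.1, 0.9]]
uniform_ctx = [[0.5, 0.5], [0.5, 0.5]]
print(converse_objective(P_w, identity, P_bsc, uniform_ctx))
```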


Sketch of the Proof

Using Fano's inequality,

$$NR \le \sum_{i=1}^{N} I(X_i; Y_i) + o(N). \tag{12}$$

X, Y and W are random variables forming a Markov chain W → X → Y, i.e., W and Y are conditionally independent given X:

$$I(Y; W \mid X) = 0. \tag{13}$$

By the data processing inequality,

$$I(X; W) \ge I(Y; W). \tag{14}$$
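Properties (13) and (14) can be checked numerically on randomly drawn chains W → X → Y. The sketch draws hypothetical ternary distributions with a seeded generator, builds the joint law, and returns I(X;W), I(Y;W) and I(Y;W|X); the data processing inequality requires the first to be at least the second, and the conditional term should vanish up to rounding.

```python
import math
import random
from itertools import product

def mutual_information(p_ab):
    """I(A;B) in bits from a joint pmf given as {(a, b): p}."""
    p_a, p_b = {}, {}
    for (a, b), p in p_ab.items():
        p_a[a] = p_a.get(a, 0.0) + p
        p_b[b] = p_b.get(b, 0.0) + p
    return sum(p * math.log2(p / (p_a[a] * p_b[b]))
               for (a, b), p in p_ab.items() if p > 0)

def random_pmf(k, rng):
    """Random probability vector of length k (hypothetical test distributions)."""
    v = [rng.random() + 1e-9 for _ in range(k)]
    s = sum(v)
    return [x / s for x in v]

def markov_chain_check(seed=0, k=3):
    """Build W -> X -> Y at random; return I(X;W), I(Y;W) and I(Y;W|X)."""
    rng = random.Random(seed)
    P_w = random_pmf(k, rng)
    P_x_given_w = [random_pmf(k, rng) for _ in range(k)]
    P_y_given_x = [random_pmf(k, rng) for _ in range(k)]
    p_wxy = {(w, x, y): P_w[w] * P_x_given_w[w][x] * P_y_given_x[x][y]
             for w, x, y in product(range(k), repeat=3)}
    p_wx, p_wy, p_xy, p_x = {}, {}, {}, {}
    for (w, x, y), p in p_wxy.items():
        p_wx[(w, x)] = p_wx.get((w, x), 0.0) + p
        p_wy[(w, y)] = p_wy.get((w, y), 0.0) + p
        p_xy[(x, y)] = p_xy.get((x, y), 0.0) + p
        p_x[x] = p_x.get(x, 0.0) + p
    # Eq. (13): I(Y;W|X) = sum p(w,x,y) log[p(w,x,y) p(x) / (p(w,x) p(x,y))]
    i_cond = sum(p * math.log2(p * p_x[x] / (p_wx[(w, x)] * p_xy[(x, y)]))
                 for (w, x, y), p in p_wxy.items() if p > 0)
    return mutual_information(p_wx), mutual_information(p_wy), i_cond

for seed in range(3):
    print(markov_chain_check(seed))
```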


Gaussian channel with SWIPT and semantics

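[Figure: Gaussian SWIPT model with the semantic channel P(Q|W); the transmitted symbol x_t is scaled by h_1 and corrupted by the noise z_t to give y_{1,t} at the information receiver (IR), and scaled by h_2 to give y_{2,t} at the energy receiver (ER).]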

Information decoder: y_{1,t} = h_1 x_t + z_t,

Energy harvester: y_{2,t} = h_2 x_t,

where z_t ∼ N(0, 1) is the Gaussian noise with unit variance.

The conditional probability is

$$p(y \mid x) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{(y - h_1 x)^2}{2}\right). \tag{15}$$
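The channel model and the likelihood (15) translate directly into code. In this sketch the gains h_1 = 0.8, h_2 = 0.5 and the binary candidate set {−1, +1} used for maximum-likelihood detection are hypothetical choices for illustration.

```python
import math
import random

def gaussian_likelihood(y, x, h1):
    """Eq. (15): p(y|x) = exp(-(y - h1*x)^2 / 2) / sqrt(2*pi) for unit-variance noise."""
    return math.exp(-((y - h1 * x) ** 2) / 2) / math.sqrt(2 * math.pi)

def swipt_channel_use(x, h1, h2, rng):
    """One channel use: y1 = h1*x + z at the IR and y2 = h2*x at the ER."""
    z = rng.gauss(0.0, 1.0)
    return h1 * x + z, h2 * x

def ml_detect(y1, candidates, h1):
    """Pick the candidate symbol that maximizes p(y1 | x)."""
    return max(candidates, key=lambda x: gaussian_likelihood(y1, x, h1))

rng = random.Random(1)
y1, y2 = swipt_channel_use(1.0, h1=0.8, h2=0.5, rng=rng)
print(y1, y2, ml_detect(y1, [-1.0, 1.0], h1=0.8))
```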


Semantic loss for the Gaussian case using the maximum likelihood (ML) detector:

$$P_{SE}(q) = \frac{1}{n} \sum_{i=1}^{n} \sum_{j=1,\, j \ne i}^{n} d(x_i, x_j)\, Q\left(\sqrt{\frac{P \|x_i - x_j\|^2}{2}}\right), \tag{16}$$

where Q(·) is the Gaussian Q-function, d(x_i, x_j) = 1 − sim(x_i, x_j), and

$$\mathrm{sim}(x_i, x_j) = \frac{B_\Phi(m_i)\, B_\Phi(m_j)^T}{\|B_\Phi(m_i)\|\, \|B_\Phi(m_j)\|}. \tag{17}$$

B_Φ(·): the pretrained Bidirectional Encoder Representations from Transformers (BERT) model.
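The semantic loss (16) with the cosine similarity (17) can be sketched as below. BERT is not used here: the embedding vectors are hypothetical stand-ins for B_Φ(m_i), chosen with nonnegative entries so that the cosine similarity lies in [0, 1]. The Gaussian Q-function is computed from the complementary error function as Q(x) = erfc(x/√2)/2.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x) = P[N(0,1) > x]."""
    return 0.5 * math.erfc(x / math.sqrt(2))

def cosine_similarity(u, v):
    """Eq. (17) with u, v standing in for the embeddings B_Phi(m_i), B_Phi(m_j)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def semantic_loss(codewords, embeddings, P):
    """Eq. (16): (1/n) sum_i sum_{j != i} d(x_i, x_j) Q(sqrt(P ||x_i - x_j||^2 / 2))."""
    n = len(codewords)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if j == i:
                continue
            dist2 = sum((a - b) ** 2 for a, b in zip(codewords[i], codewords[j]))
            d_ij = 1.0 - cosine_similarity(embeddings[i], embeddings[j])
            total += d_ij * q_function(math.sqrt(P * dist2 / 2))
    return total / n

codewords = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # hypothetical embeddings
for P in (1.0, 10.0):
    print(P, semantic_loss(codewords, embeddings, P))
```

Pairs of semantically close messages (similar embeddings) contribute little to the loss even when their codewords are close, which is the intuition behind low semantic ambiguity codes.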

The average harvested energy:

$$E(Y_2) = \frac{1}{n} \sum_{t=1}^{n} Y_{2,t}^2. \tag{18}$$
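A quick numerical check of (18): with the energy harvester output y_{2,t} = h_2 x_t and i.i.d. zero-mean inputs of power P, the empirical average should be close to h_2² P for large n. The Gaussian input distribution and the values P = 2, h_2 = 0.5 are assumptions made for this sketch.

```python
import random

def average_harvested_energy(y2):
    """Eq. (18): E(Y2) = (1/n) * sum_t Y_{2,t}^2."""
    return sum(v * v for v in y2) / len(y2)

# Hypothetical setup: Gaussian codeword symbols of power P and EH gain h2.
rng = random.Random(0)
P, h2, n = 2.0, 0.5, 100_000
x = [rng.gauss(0.0, P ** 0.5) for _ in range(n)]
y2 = [h2 * xt for xt in x]
print(average_harvested_energy(y2), h2 ** 2 * P)  # empirical vs. expected value
```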


Achievable region for Gaussian channel

The information-energy capacity is lower bounded by the function C : R+ → R+, i.e.,

$$C(b) \ge \max_{P(X \mid W):\, E(Y_2) \ge b} \; \frac{1}{2}\log(1 + \lambda_1 P) - h(X \mid W) + \frac{1}{2}\log(1 + \lambda_2 P), \tag{19}$$

where λ_1 ≥ 0 and λ_2 ≥ 0 denote the fractions of the power dedicated to the information signal component and the semantic context signal, respectively.
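The power split in (19) can be explored on a grid, using the constraint λ_1 + λ_2 = 1 stated in (20). In this sketch, logs are base 2, h(X|W) is treated as a given constant (set to 0 as a hypothetical value), and the energy constraint E(Y_2) ≥ b is not modeled. For this objective the two log terms are concave and symmetric in λ_1 and 1 − λ_1, so the grid search lands on the even split λ_1 = 0.5.

```python
import math

def gaussian_lower_bound(lam1, P, h_x_given_w):
    """Objective of (19) with lambda2 = 1 - lambda1, logs in base 2.

    h_x_given_w is a hypothetical constant supplied by the caller.
    """
    lam2 = 1.0 - lam1
    return (0.5 * math.log2(1 + lam1 * P) - h_x_given_w
            + 0.5 * math.log2(1 + lam2 * P))

P = 10.0
grid = [k / 100 for k in range(101)]
best = max(grid, key=lambda lam1: gaussian_lower_bound(lam1, P, h_x_given_w=0.0))
print(best, gaussian_lower_bound(best, P, h_x_given_w=0.0))
```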


Proof of the achievable region for the Gaussian channel

The context random variable satisfies Q ∼ N(0, λ_2 P) and X ∼ N(0, λ_1 P), with

$$\lambda_1 + \lambda_2 = 1. \tag{20}$$

Codewords are chosen i.i.d. with variance P − ε and satisfy E[I_0(B h_2 |X_i|)] = b + ε.

The context Q is sent through a feedback link using a fraction λ_2 of the power P.

The receiver observes the codeword list and the context sequence Q_1, Q_2, ..., Q_n generated via feedback by the transmitter, and decides on w if {X^n(w), Y} are jointly typical, {Q^n(w)} is a typical sequence, and {X^n(w) | W} is a typical sequence given the context.

Using the AEP, the semantic error probability is upper bounded as follows:

$$P_{SE} \le 2^{N(-I(X;Y) + H(X \mid Q) - H(Q) - 3\epsilon)}. \tag{21}$$
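The bound (21) vanishes as N grows only when its exponent is negative, i.e., when I(X;Y) − H(X|Q) + H(Q) > 3ε. The sketch below evaluates the right-hand side for hypothetical entropy values in bits.

```python
def semantic_error_bound(N, mutual_info, h_x_given_q, h_q, eps):
    """Right-hand side of (21): 2^{N(-I(X;Y) + H(X|Q) - H(Q) - 3*eps)}."""
    exponent = -mutual_info + h_x_given_q - h_q - 3 * eps
    return 2.0 ** (N * exponent)

# Hypothetical entropies in bits; the bound decays only if the exponent is negative.
for N in (10, 50, 100):
    print(N, semantic_error_bound(N, mutual_info=1.0, h_x_given_q=0.5, h_q=0.2, eps=0.01))
```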


Converse region for Gaussian channel

The information-energy capacity region for SWIPT semantic communication is upper bounded by the function C : R+ → R+, i.e.,

$$C(b) \le \max_{E(Y_2) \ge b} \; \frac{1}{2}\log(1 + \mu_1 P_{\mathrm{req}}) + \frac{1}{2}\log(1 + \mu_2 P_{\mathrm{req}}),$$

where µ_1 ≥ 0 and µ_2 ≥ 0 denote the fractions of the power dedicated to the input X and the semantic context Q, respectively.


Proof of the converse region for the Gaussian channel

Assuming that the message indices W ∈ {1, 2, ..., 2^{nR}} are i.i.d. and uniformly distributed, and using Fano's inequality,

$$nR = H(W) = I(W; \hat{W}) + H(W \mid \hat{W}) \tag{22}$$

$$\le \sum_{i=1}^{n} h(Y_i \mid Q_i) - h(Z) + n\epsilon_n, \tag{23}$$

where ε_n → 0 as P_SE → 0.

By using a power splitting technique between the information component and the semantic context Q, i.e.,

$$Y = \mu_1 X + \mu_2 Q + Z, \tag{24}$$

with µ_1 + µ_2 = 1, and by applying Jensen's inequality, we obtain

$$R \le \frac{1}{2}\log(1 + \mu_1 P) + \frac{1}{2}\log(1 + \mu_2 P). \tag{25}$$


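Example: Semantic communication over a binary symmetric channel

Binary symmetric channel (BSC) with crossover probability ρ:

$$p(\mathbf{Y} = \mathbf{y} \mid \mathbf{X} = \mathbf{x}) = \rho^{l(\mathbf{y},\mathbf{x})} (1 - \rho)^{n - l(\mathbf{y},\mathbf{x})}, \tag{26}$$

where l(y, x) is the Hamming distance between y and x.

Two contexts Q = {q_1, q_2}:

q_1 = things originating from non-living beings,

q_2 = things originating from living beings,

with

$$P(Q = q_1 \mid W = w) = \begin{cases} 1, & \text{if } w = \text{car, automobile}, \\ 0, & \text{if } w = \text{bird}, \\ 0.5, & \text{if } w = \text{crane}. \end{cases} \tag{27}$$

The harvested energy is

$$E(\rho) = \frac{1}{n} \sum_{i=1}^{n} \Big( \big[(1 - \rho) P(x_i = 1) + \rho P(x_i = 0)\big] b_1 + \big[\rho P(x_i = 1) + (1 - \rho) P(x_i = 0)\big] b_0 \Big). \tag{28}$$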
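The BSC example can be coded in a few lines. In (28), the first bracket is the probability that output symbol i equals 1 and the second is the probability that it equals 0, so the sketch reads b_1 and b_0 as the energies associated with outputs 1 and 0; that reading and all numeric values below are assumptions for illustration.

```python
def hamming(a, b):
    """Number of positions where a and b differ."""
    return sum(ai != bi for ai, bi in zip(a, b))

def bsc_likelihood(y, x, rho):
    """Eq. (26): rho^l (1 - rho)^(n - l), with l the Hamming distance."""
    l = hamming(y, x)
    return rho ** l * (1 - rho) ** (len(x) - l)

def p_q1_given_w(w):
    """Eq. (27): probability that w belongs to context q1 (non-living beings)."""
    table = {"car": 1.0, "automobile": 1.0, "bird": 0.0, "crane": 0.5}
    return table[w]

def expected_energy(rho, p_one, b1, b0):
    """Eq. (28) with p_one[i] = P(x_i = 1); b1, b0 are per-output energies."""
    total = 0.0
    for p1 in p_one:
        p_y1 = (1 - rho) * p1 + rho * (1 - p1)  # P(y_i = 1)
        total += p_y1 * b1 + (1 - p_y1) * b0    # 1 - p_y1 = P(y_i = 0)
    return total / len(p_one)

print(bsc_likelihood((0, 1, 1), (0, 0, 1), rho=0.1))
print(p_q1_given_w("crane"))
print(expected_energy(0.1, [0.5, 0.8, 1.0], b1=1.0, b0=0.2))
```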


Numerical results: Achievable semantic information-energy region versus converse region over a DM channel


Achievable semantic information-energy region versus conventional capacity over a DM channel


Impact of semantic context on the information-energy capacity region over the Gaussian channel


Conclusion/Extension

For the point-to-point case, we have developed an information-theoretic framework to characterize achievable information-energy regions and their converses for both the DM and the Gaussian channels.

Numerical results indicate that employing low semantic ambiguity codes can lead to improved performance compared to conventional communication approaches.

Extension: the Gaussian multiple access channel (MAC) has also been considered, with a semantic transmitter and a conventional transmitter operating under EH constraints at the receiver.
