UNIVERSITY OF ENGINEERING AND TECHNOLOGY TAXILA

DEPARTMENT OF ELECTRICAL ENGINEERING

DIGITAL IMAGE PROCESSING

CEP REPORT

SUBMITTED TO:

DR JUNAID MIR

SUBMITTED BY:


RABEAH FARRAKH

(20-EE-02)

FARIHA KHAWAJA

(20-EE-03)

AYESHA NASIR

(20-EE-05)

SANA PERVEEN

(20-EE-08)

MUNAZZA JAVED

(20-EE-11)

FATIMA SHAHZAD

(20-EE-14)

Table of Contents

OBJECTIVE
ABSTRACT
LITERATURE REVIEW
INTRODUCTION
    Melanoma
DETECTION OF MELANOMA
    1. Skin Lesion Segmentation Using Convolutional Neural Networks
    2. Recognition System for Diagnosing Melanoma Skin Lesions Using Artificial Intelligence Algorithms
    3. Automatic segmentation and melanoma detection based on color and texture features
PRE-REQUISITES
    Types of Filtering
        1. Low pass filters (Smoothing)
        2. High pass filters (Edge Detection, Sharpening)
    Morphological Image Processing
    Grayscale Morphology
    Grayscale Dilation
    Grayscale Erosion
    Grayscale Opening
    Grayscale Closing
    Morphological operation on color
    Application of Morphology
    Image segmentation
    Mask image
    Jaccard Index
    Dice Coefficient
METHODOLOGY
    Block diagram
    MATLAB codes
    MATLAB PLOTS
RESULTS
REFERENCES

OBJECTIVE:

Design an algorithm in MATLAB to segment the region of interest, i.e., the melanoma region, from a dermoscopic image.

ABSTRACT:

This report presents an algorithm designed to support the automatic diagnosis of skin cancer lesions in dermoscopic images, with the aim of enhancing early detection and reducing melanoma-induced mortality. Image segmentation plays a crucial role in automated skin lesion diagnosis, so the primary focus is on a method for effectively segmenting skin lesions in dermoscopic images. A significant challenge is removing noise, particularly hairs, while accurately segmenting the melanoma region without distorting the original content. Hairs can be filtered out without compromising the required region of the image. The process is as follows: first, a suitable hair removal algorithm is identified for the given image; next, thresholding and morphological image processing are applied to segment the lesion; finally, the segmented region is compared with the provided mask image, and performance is evaluated using standard segmentation metrics, namely the Jaccard Index and the Dice Coefficient.

LITERATURE REVIEW:

INTRODUCTION:

Melanoma:

Melanoma, the most serious type of skin cancer, develops in the cells that produce melanin (the pigment that gives skin its color). Skin is the largest organ of the human body. It protects the body's internal tissues from the external environment and helps keep body temperature at a steady level. Melanoma is an abnormal proliferation of skin cells that, in most cases, arises and develops on skin that is exposed to copious amounts of sunlight. However, it can also develop in areas of skin that are not exposed to much sunlight.

Melanoma is the fifth most common skin cancer lesion. As reported by the Skin Cancer Foundation (SCF), it is considered the most serious form of skin cancer because it can spread to other parts of the body. [1] Once melanoma spreads elsewhere, it becomes difficult to treat, so early detection saves lives. Melanoma is rare, but it is the most dangerous form of skin cancer. In the past couple of years, the incidence of skin cancer has been increasing, and reports indicate that melanoma recurs at regular intervals.

• In 2023, an estimated 97,610 adults (58,120 men and 39,490 women) in the United States will be diagnosed with invasive melanoma of the skin. Worldwide, an estimated 324,635 people were diagnosed with melanoma in 2020. [2]

• In the United States, melanoma is the fifth most common cancer among men. It is also the fifth most common cancer among women. Melanoma is 20 times more common in White people than in Black people. The average age of diagnosis is 65. Before age 50, more women are diagnosed with melanoma than men. After age 50, rates are higher in men. [2]

• The development of melanoma is more common as people grow older. But it also develops in younger people, including those younger than 30 years old. It is one of the most common cancers diagnosed in young adults, particularly for women. In 2020, about 2,400 cases of melanoma were estimated to be diagnosed in people aged 15 to 29. [2]

Many researchers are attempting to develop algorithms for detecting melanoma regions because

of the increase in cases mentioned above. Computer vision is crucial in medical image

diagnosis. Many computer-aided methods for detecting Melanoma Skin Cancer are being

developed, and Image Processing tools are being used for early detection to save lives.


DETECTION OF MELANOMA:

The aim of this CEP is to devise an algorithm in MATLAB that segments the melanoma region from a dermoscopic image. A literature review was conducted to learn about the different approaches to each part of this problem. These approaches include:

1. Skin Lesion Segmentation from Dermoscopic Images Using Convolutional Neural

Network

2. Developing a Recognition System for Diagnosing Melanoma Skin Lesions Using

Artificial Intelligence Algorithms.

3. Automatic segmentation and melanoma detection based on color and texture features in

dermoscopic images.

1. Skin Lesion Segmentation Using Convolutional Neural Networks:

Various methods have been used for lesion boundary segmentation, but their accuracy is too low for proper clinical treatment. In [3], an automated method called Res-Unet, which combines the U-Net and ResNet architectures, is used to segment lesion boundaries. Pre-processing includes hair removal using morphological operations followed by image inpainting, which significantly improves the segmentation results. The model was trained on the ISIC 2017 dataset and validated on the ISIC 2017 test set and the PH2 dataset. It achieved a Jaccard Index of 0.772 on the ISIC 2017 test set and 0.854 on the PH2 dataset, comparable to state-of-the-art techniques. The methodology is reported to outperform other available methods in terms of model accuracy and pixel-by-pixel similarity. Performance was also evaluated using the receiver operating characteristic (ROC) curve, which showed the separability between lesion and non-lesion regions. [3]

2. Recognition System for Diagnosing Melanoma Skin Lesions Using Artificial

Intelligence Algorithms:

Skin cancer is also being diagnosed using deep learning and traditional machine learning algorithms.

The proposed system is divided into feature-based and deep learning approaches. The feature-based system uses methods such as Local Binary Pattern (LBP) and Gray Level Co-occurrence Matrix (GLCM) for texture feature extraction, followed by an artificial neural network (ANN) that processes the features. The deep learning approach uses the pretrained convolutional neural network (CNN) models AlexNet and ResNet50 with transfer learning for efficient classification of skin diseases.

The experimental results show that the proposed method outperforms state-of-the-art methods on the PH2 and ISIC 2018 datasets, with the ANN model achieving the highest accuracy on both datasets. Standard evaluation metrics such as accuracy, specificity, sensitivity, precision, recall, and F-score were used to evaluate the results. [4]

3. Automatic segmentation and melanoma detection based on color and texture

features:

An algorithm has been designed for automatic segmentation and melanoma detection in dermoscopic images based on color and texture features. It uses k-means for automatic segmentation, generating accurate masks for each lesion. The feature extraction technique includes existing and novel color and texture attributes that measure how color and texture vary inside the lesion. The algorithm is evaluated on the PH2 dataset and compared with published articles, showing that the proposed method outperforms the state of the art with high sensitivity, specificity, and accuracy.

The algorithm uses the co-occurrence matrix and the variances of normalized color between classes. With the SVM classifier, it identifies melanomas with high sensitivity, specificity, and accuracy. The color features measure the disorder or variation between classes, which is consistent with the uncontrolled growth of melanomas. Overall, the algorithm shows promise in accurately identifying melanomas by effectively using color and texture features in dermoscopic images. [4]

PRE-REQUISITES:

Thresholding:

Image thresholding is a simple yet effective form of image segmentation that partitions an image into a foreground and a background. It is most effective on images with high levels of contrast.

In MATLAB, the function imbinarize(I) creates a binary image using a global threshold obtained with Otsu's method. This default threshold is identical to the threshold returned by graythresh. However, imbinarize returns only the binary image. To obtain the threshold level or the effectiveness metric, call graythresh first and pass the returned level to imbinarize(I, level).
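The relationship between graythresh and imbinarize can be tried on a small synthetic image. The following is a minimal sketch, assuming the Image Processing Toolbox is installed; the image size and gray levels are made up for illustration and are not taken from the dermoscopic image used in this report.

```matlab
% Synthetic 8-bit grayscale image: a dark square (stand-in for a lesion)
% on a brighter background. Sizes and gray levels are arbitrary assumptions.
I = uint8(200 * ones(64, 64));
I(20:40, 20:40) = 60;

% graythresh returns the normalized Otsu level in [0, 1] and, as a second
% output, an effectiveness metric in [0, 1].
[level, effectiveness] = graythresh(I);

% imbinarize(I) applies Otsu's threshold by default; imbinarize(I, level)
% applies the level passed in explicitly.
bwDefault  = imbinarize(I);
bwExplicit = imbinarize(I, level);

% Pixels brighter than the threshold become 1 (white), so the dark square
% is 0. Invert the result when the object of interest is the darker region.
darkObject = ~bwExplicit;

fprintf('Otsu level = %.3f, effectiveness = %.3f\n', level, effectiveness);
fprintf('Default and explicit results identical: %d\n', ...
        isequal(bwDefault, bwExplicit));
```

The inversion in the last step matters for dermoscopic images, where the lesion is usually darker than the surrounding skin.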

Filtering:

Image filtering changes the appearance of an image by altering the values of its pixels. Applying filters can, for example, increase the contrast of an image or add a variety of special effects.

Types of Filtering

1) Spatial Domain Filtering

2) Frequency Domain Filtering

Spatial domain filters can be grouped into two types according to their effect:

1. Low pass filters (Smoothing)

Low pass filtering (also known as smoothing) is employed to remove high spatial frequency noise from a digital image. Low-pass filters usually employ a moving window operator that affects one pixel of the image at a time, changing its value by some function of a local region (window) of pixels. The operator moves over the image to affect all the pixels in the image.

Types of Smoothing Spatial Filter:

• Mean filters
    - Averaging filter
    - Weighted averaging filter
• Order-statistic filters
    - Minimum filter
    - Median filter
    - Maximum filter

2. High pass filters (Edge Detection, Sharpening)

A high-pass filter can be used to make an image appear sharper. These filters emphasize

fine details in the image - the opposite of the low-pass filter. High-pass filtering works in

the same way as low-pass filtering; it just uses a different convolution kernel.

Types of Sharpening Spatial Filter:

• High Pass Filters (Uni Crisp)
• Laplacian of Gaussian / Mexican Hat filters
• Unsharp Masking

When filtering an image, each pixel is affected by its neighbors, and the net effect of

filtering is moving information around the image.
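The difference between a low-pass and a high-pass kernel can be illustrated with a small convolution example. This is a minimal sketch using only base MATLAB (conv2); the 3x3 averaging kernel and the 3x3 Laplacian kernel are common textbook choices assumed here for illustration, and the test image is synthetic.

```matlab
% Small synthetic grayscale image with a vertical step edge (values in [0, 1]).
I = [zeros(5, 3), ones(5, 3)];

% Low-pass: 3x3 averaging kernel. Each output pixel is the mean of its
% 3x3 neighborhood. 'same' keeps the output size; borders are zero-padded.
hMean = ones(3) / 9;
smoothed = conv2(I, hMean, 'same');

% High-pass: 3x3 Laplacian kernel. The coefficients sum to zero, so flat
% interior regions map to 0 and intensity changes produce a response.
hLap = [0 -1 0; -1 4 -1; 0 -1 0];
edges = conv2(I, hLap, 'same');

% Sharpening: add the high-pass response back to the original image.
sharpened = I + edges;

disp(smoothed(3, :));   % the step is spread over neighboring columns
disp(edges(3, :));      % response at the step (and at the zero-padded border)
```

Both filters use the same sliding-window mechanism; only the kernel coefficients differ.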

Morphological Image Processing:

Morphology is a broad set of image processing operations that process images based on

shapes. In a morphological operation, each pixel in the image is adjusted based on the value of

other pixels in its neighborhood.

Basic Operations of Morphological Image Processing

• Erosion
• Dilation
• Opening
• Closing
• Hit or Miss Transform

Grayscale Morphology:

The elementary binary morphological operations can be extended to grayscale images through the use of min and max operations.

• To perform morphological analysis on a grayscale image, regard the image as a height map.
• Min and max filters attribute to each image pixel a new value equal to the minimum or maximum value in a neighborhood around that pixel.
• The neighborhood represents the shape of the structuring element. [5]

Grayscale Dilation:

The grayscale dilation of an image involves assigning to each pixel the maximum value found over the neighborhood of the structuring element (SE). The dilated value of a pixel x is the maximum value of the image in the neighborhood defined by the SE when its origin is at x.

Figure 1 Example of Dilation [5].

Grayscale Erosion:

The grayscale erosion of an image involves assigning to each pixel the minimum value found over the neighborhood of the structuring element (SE). The eroded value of a pixel x is the minimum value of the image in the neighborhood defined by the SE when its origin is at x.

Figure 2 Example of Erosion [5].
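Grayscale dilation and erosion with a flat structuring element reduce to a neighborhood maximum and a neighborhood minimum. The following is a minimal sketch in base MATLAB, assuming a flat 3x3 square structuring element and a made-up 5x5 test image; the variable names are illustrative only.

```matlab
% Small grayscale image with one bright pixel and one dark pixel.
f = 5 * ones(5, 5);
f(2, 2) = 9;   % bright peak
f(4, 4) = 1;   % dark pit

% Flat 3x3 square structuring element, expressed as a half-width r = 1.
r = 1;
[rows, cols] = size(f);
dilated = zeros(rows, cols);
eroded  = zeros(rows, cols);

for i = 1:rows
    for j = 1:cols
        % Neighborhood of pixel (i, j), clipped at the image border.
        win = f(max(i - r, 1):min(i + r, rows), max(j - r, 1):min(j + r, cols));
        dilated(i, j) = max(win(:));   % dilation: neighborhood maximum
        eroded(i, j)  = min(win(:));   % erosion: neighborhood minimum
    end
end

disp(dilated);   % the bright peak grows to a 3x3 block of 9s
disp(eroded);    % the dark pit grows to a 3x3 block of 1s
```

With the Image Processing Toolbox, imdilate(f, strel('square', 3)) and imerode(f, strel('square', 3)) should give the same result for this example; the explicit loops are shown only to make the min and max operations visible.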

Grayscale Opening:

The grayscale opening of an image involves performing a grayscale erosion, followed by grayscale dilation. The opened value of a pixel is the maximum of the minimum value of the image in the neighborhood defined by the SE.

Figure 3 Example of Opening [5].

Grayscale Closing:

The grayscale closing of an image involves performing a grayscale dilation, followed by grayscale erosion.

Figure 5 Example of Closing [5].
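The four grayscale operations described above can be summarized in one set of formulas. The notation below is an assumption introduced for illustration (it does not come from [5]): f is the grayscale image, B is a flat, symmetric structuring element given as a set of offsets b, and x is a pixel position.

```latex
\begin{align*}
\text{dilation:} \quad (f \oplus B)(x)  &= \max_{b \in B} f(x + b) \\
\text{erosion:}  \quad (f \ominus B)(x) &= \min_{b \in B} f(x + b) \\
\text{opening:}  \quad f \circ B        &= (f \ominus B) \oplus B \\
\text{closing:}  \quad f \bullet B      &= (f \oplus B) \ominus B
\end{align*}
```

Read this way, an opening removes bright details narrower than B, while a closing removes dark details narrower than B, which is why closing is suited to suppressing thin dark structures.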

Morphological operation on color:

Morphological operations on color images can be defined in two main ways: by treating colors as labels associated with each pixel, or by using color values to establish a total ordering in the color space. Most approaches belong to the latter type, where a total ordering is established based on the color values in the image. [6]

In the approach of [6], the total ordering of the colors in an image is induced by its color histogram. To address the limitations of this approach in smoothly colored images, a refinement is introduced that smooths the histogram and uses a joint histogram of several images. The authors also highlight the importance of fulfilling certain conditions for any reasonable generalization of morphological operations. [6]

Application of Morphology:

Dilate an Image to Enlarge a Shape

Dilation adds pixels to boundary of an object. Dilation makes objects more visible and

fills in small holes in the object.

Remove Thin Lines Using Erosion

Erosion removes pixels from the boundary of an object. Erosion removes islands and

small objects so that only substantive objects remain.

Use Morphological Opening to Extract Large Image Features

You can use morphological opening to remove small objects from an image while

preserving the shape and size of larger objects in the image.

Flood-Fill Operations


A flood fill operation assigns a uniform pixel value to connected pixels, stopping at

object boundaries.

Find Image Peaks and Valleys

You can use neighborhood processing to find global and regional minima and maxima in

images.
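The applications listed above can be tried on a small synthetic image. The following is a minimal sketch, assuming the Image Processing Toolbox is available; the test shapes and the 3x3 square structuring element are made up for illustration.

```matlab
% Synthetic binary image: a large square with a one-pixel hole, a thin
% one-pixel-wide line, and an isolated pixel.
BW = false(40, 40);
BW(10:30, 10:30) = true;    % large object
BW(20, 20) = false;         % small hole inside the object
BW(5, 2:38) = true;         % thin horizontal line
BW(36, 36) = true;          % isolated pixel ("island")

se = strel('square', 3);

dilated = imdilate(BW, se);      % grows objects and fills the small hole
eroded  = imerode(BW, se);       % removes the thin line and the island
opened  = imopen(BW, se);        % same removal, but the square keeps its size
filled  = imfill(BW, 'holes');   % flood-fills enclosed background regions

% Regional maxima of a grayscale image: connected plateaus whose
% neighbors all have lower values.
G = zeros(5, 5);
G(2, 2) = 3;
G(4, 4) = 7;
peaks = imregionalmax(G);
```

Note that opening removes the small objects without filling the hole in the large square; filling holes is the job of dilation, closing, or imfill.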

• Image segmentation:

One of the most important tasks in image processing is image segmentation. Segmentation is a

technique for isolating and extracting an image’s region of interest (ROI). It is especially

important for computer vision techniques that involve object classification or recognition.

Segmentation is critical in medical image processing for isolating the ROI, which could be a

tumor, cancer, or any other object of interest. The ROI can be used to extract unique features for

disease classification and detection. The main aim of image segmentation is to optimize the

physician’s diagnosis by automatically detecting suspicious patterns and classifying the

abnormalities. In order to achieve this, several methods are employed.

Mask image:

A mask is a binary image consisting of zeros and ones. If a mask is applied to another binary or grayscale image of the same size, all pixels that are zero in the mask are set to zero in the output image. All others remain unchanged.

In image segmentation, a mask image is used to extract the region of interest from an image. To

get the desired area, we simply multiply the given image by its mask.
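Applying a mask by multiplication can be sketched in a few lines of base MATLAB. The image values and the mask position below are arbitrary assumptions for illustration.

```matlab
% 4x4 RGB image (uint8) with arbitrary values, and a binary mask of the
% same height and width that selects the central 2x2 block.
rgb = uint8(randi([50 250], 4, 4, 3));
mask = false(4, 4);
mask(2:3, 2:3) = true;

% Convert the mask to the image class and multiply element-wise. The 2-D
% mask is expanded across the three color channels (implicit expansion,
% available in MATLAB R2016b or later).
roi = rgb .* uint8(mask);

disp(roi(:, :, 1));   % zeros outside the mask, original values inside
```

In older MATLAB releases without implicit expansion, the mask would need to be replicated across the channels first, for example with repmat(mask, [1 1 3]).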

• Jaccard Index:

The Jaccard index is a measure of similarity between two images.

similarity = jaccard(BW1, BW2) computes the intersection of binary images BW1 and BW2 divided by the union of BW1 and BW2.

The Jaccard similarity coefficient is returned as a numeric scalar or numeric vector with values in the range [0, 1]. A similarity of 1 means that the segmentations in the two images are a perfect match, and 0 means that there is no similarity between the images. The Jaccard similarity coefficient of two sets A and B (also known as intersection over union or IoU) is expressed as:

jaccard(A,B) = | intersection(A,B) | / | union(A,B) |

The Jaccard index is related to the Dice index according to:

jaccard(A,B) = dice(A,B) / (2 - dice(A,B) )
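A small worked example makes the two formulas concrete. This is a minimal sketch in base MATLAB that computes the counts by hand instead of calling the toolbox functions; the two 3x4 masks are made up for illustration.

```matlab
% Two small binary masks standing in for an obtained and a ground-truth mask.
A = logical([1 1 0 0;
             1 1 0 0;
             0 0 0 0]);
B = logical([0 1 1 0;
             0 1 1 0;
             0 0 0 0]);

intersectionCount = nnz(A & B);   % pixels set in both masks: 2
unionCount        = nnz(A | B);   % pixels set in at least one mask: 6

jaccardIndex = intersectionCount / unionCount;              % 2/6 = 0.3333
diceIndex    = 2 * intersectionCount / (nnz(A) + nnz(B));   % 4/8 = 0.5

% Check the stated relationship jaccard = dice / (2 - dice).
relationHolds = abs(jaccardIndex - diceIndex / (2 - diceIndex)) < 1e-12;
fprintf('Jaccard = %.4f, Dice = %.4f, relation holds: %d\n', ...
        jaccardIndex, diceIndex, relationHolds);
```

For the same pair of masks the Dice value is never smaller than the Jaccard value, so the two metrics rank segmentations identically but are not numerically interchangeable.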

• Dice Coefficient:

The Dice coefficient is a statistic used to determine how similar two samples are. It also ranges

from 0 to 1, with 0 indicating no similarity and 1 indicating a perfect match.

Similarity = dice(BW1, BW2) computes the Dice similarity coefficient between binary images

BW1 and BW2. The Dice similarity coefficient of two sets A and B is expressed as:

dice(A,B) = 2 * | intersection(A,B) | / ( | A | + | B | )

The Dice index is related to the Jaccard index according to:

dice(A,B) = 2 * jaccard(A,B) / (1 + jaccard(A,B) )
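The two conversion formulas follow directly from the definitions. The short derivation below writes J for jaccard(A,B) and D for dice(A,B), symbols introduced here for brevity, and assumes the masks overlap in at least one pixel so that D > 0.

```latex
\begin{align*}
|A \cup B| &= |A| + |B| - |A \cap B| \\
D &= \frac{2\,|A \cap B|}{|A| + |B|}
  \quad\Longrightarrow\quad |A| + |B| = \frac{2\,|A \cap B|}{D} \\
J &= \frac{|A \cap B|}{|A \cup B|}
   = \frac{|A \cap B|}{\frac{2\,|A \cap B|}{D} - |A \cap B|}
   = \frac{1}{\frac{2}{D} - 1}
   = \frac{D}{2 - D} \\
D &= \frac{2J}{1 + J}
\end{align*}
```

The last line is obtained by solving J = D / (2 - D) for D.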

METHODOLOGY:

Block diagram:

Read the images provided → Convert RGB image to grayscale → Remove hairs using morphological operations (initial pre-processing) → Convert grayscale image to binary image → Apply morphological operations → Compare via Jaccard index and Dice coefficient → Extract the melanoma region → Extract the melanoma region without hairs

To solve this complex engineering problem, we follow these steps:

1. Read the dermoscopic image containing the melanoma region, named 'G3 Image.jpg', using the 'imread()' command and display it using the 'imshow()' command. Read the provided ground truth image, named 'G3MASK.png', using 'imread()' and display it using 'imshow()'.

2. Convert the original RGB image to a grayscale image using the command 'rgb2gray()' so that morphological operations can be applied.

3. Apply the morphological operation 'imclose()' to remove hairs.

4. Convert the grayscale image to a binary image using 'imbinarize()' with the Otsu threshold returned by 'graythresh()', generating the required mask with the melanoma region in white and the non-melanoma region in black. Then apply the morphological operations 'imdilate()' and 'bwareaopen()' to retain the largest part.

5. Compare the obtained mask with the provided ground truth image using the given segmentation performance metrics, i.e., the Dice Coefficient ('dice()') and the Jaccard Index ('jaccard()').

6. Finally, segment out the melanoma region from the background in the image and apply the morphological operation 'imclose()' to remove hairs in the melanoma region.

Intuitions behind decisions taken:

1. The RGB image and the provided ground truth image are read so that the obtained mask can be compared with the ground truth.

2. Conversion to a grayscale or binary image is necessary because most (if not all) of the morphological operations (e.g. imdilate, imerode, imtophat, imbothat) specify that the input image should be grayscale or binary.

3. We applied the morphological operation 'imclose()' because closing is a dilation followed by an erosion: the dilation removes dark structures narrower than the structuring element, such as hairs, and the erosion restores the remaining regions to their original extent, while the image size is maintained.

4. We used the 'disk' structuring element with a radius R > 10 to remove the hairs. R specifies the distance from the structuring element origin to the edge of the disk. Although the hairs are thin, a radius of at least 10 is required to remove these black hairs from our image; R < 10 removes the hairs only partially. (The hairs can also be removed using another structuring element, such as a sphere or an octagon, with R adjusted accordingly, but the program may become slow if R is too large.)

5. Conversion of the grayscale image to a binary image is necessary to generate the required mask with the melanoma region in white and the non-melanoma region in black. Because the lesion is darker than the surrounding skin, the thresholded image is inverted so that the lesion becomes white.

6. We then applied 'imdilate()', which smooths the boundaries of the mask image and ultimately improves the similarity.

7. We then applied 'bwareaopen()', which removes connected components with fewer than a given number of pixels. Setting that number to the size of the largest component retains only the largest object in the binary image.

8. We compared the ground truth image (provided mask) with the obtained mask to calculate the Jaccard index and Dice similarity coefficient. Both values lie between 0 and 1, and the closer the value is to 1, the more accurate the obtained mask. The similarity depends on the structuring element size, which must be chosen appropriately. The maximum similarity for both coefficients is obtained with the 'diamond' structuring element of size 15.

9. Extraction and enhancement of the melanoma region from the background are then done by multiplying the obtained mask with the original RGB image.

10. The extracted image still contains hairs, so we apply 'imclose()' with an SE size greater than 10 to remove the hairs in the melanoma region.
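The effect of the structuring element size on hair removal can be reproduced on a synthetic image. The following is a minimal sketch, assuming the Image Processing Toolbox; the image, the 3-pixel hair thickness, and the gray levels are made-up stand-ins and are not measurements from the dermoscopic image used in this report.

```matlab
% Synthetic grayscale "skin" image: bright background, a dark disk standing
% in for the lesion, and a thin dark line standing in for a hair.
I = uint8(200 * ones(100, 100));
[X, Y] = meshgrid(1:100, 1:100);
I((X - 50).^2 + (Y - 50).^2 <= 20^2) = 60;   % lesion, about 41 pixels across
I(79:81, 5:95) = 30;                          % hair, 3 pixels thick (assumed)

% Closing = dilation (neighborhood max) followed by erosion (neighborhood min).
% Dark structures narrower than the structuring element are filled in.
closedLarge = imclose(I, strel('disk', 14));  % SE wider than the hair
closedSmall = imclose(I, strel('disk', 1));   % SE narrower than the hair

fprintf('Hair pixel after closing with R = 14: %d\n', closedLarge(80, 50));
fprintf('Hair pixel after closing with R = 1:  %d\n', closedSmall(80, 50));
fprintf('Lesion center after closing with R = 14: %d\n', closedLarge(50, 50));
```

The lesion survives because it is wider than the structuring element, while the hair does not; a structuring element that is too small leaves the hair in place.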

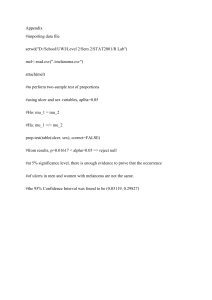

• MATLAB codes:

clc;
clear;
close all;

% Read the dermoscopic image with the melanoma region and display it
rgbImage = imread('G3 Image.jpg');
subplot(231)
imshow(rgbImage)
title('Original RGB Image')

% Read the ground truth image for comparison with the obtained mask
given_mask = imread('G3MASK.png');
subplot(232)
imshow(given_mask)
title('Given Mask Image')

% Convert the RGB image to grayscale
gray = rgb2gray(rgbImage);
subplot(233)
imshow(gray)
title('Gray Scale Image')

% Remove hairs with a grayscale closing (dilation followed by erosion)
se1 = strel('disk', 14);   % R must not be less than 10
Closed = imclose(gray, se1);
subplot(234)
imshow(Closed)
title('Gray image without hairs')

% Binarize with the Otsu threshold; the lesion is darker than the skin,
% so the binary image is inverted to make the lesion white
L = graythresh(Closed)
mask = imbinarize(Closed, L);
se2 = strel('diamond', 15);
mask1 = imdilate(~mask, se2);

% Keep only the largest connected component
b = bwconncomp(mask1)
numPixels = cellfun(@numel, b.PixelIdxList);
m = max(numPixels);
final_mask = bwareaopen(mask1, m);   % removes components with fewer than m pixels
subplot(235)
imshow(final_mask)
title('Obtained Mask Image')

% Segmentation performance metrics against the ground truth
gt_mask = im2bw(given_mask);                        % ground truth as a logical mask
Dice_similarity = dice(final_mask, gt_mask)         % Dice similarity coefficient
Jaccard_similarity = jaccard(final_mask, gt_mask)   % Jaccard similarity index

% Extract the melanoma region and remove the hairs inside it
Extracted_region = double(rgbImage) .* final_mask;  % mask applied to every channel
Extracted_region = mat2gray(Extracted_region);      % rescale to [0, 1] for display
se3 = strel('square', 14);
Extracted_region = imclose(Extracted_region, se3);
subplot(236)
imshow(Extracted_region)
title('Extracted Melanoma Region')

% sgtitle (R2018b or later) is used here in place of the non-standard suptitle
sgtitle('DIP CEP Group 3')
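The step that keeps the largest connected component can also be written with bwareafilt. The following is a minimal sketch on a made-up binary mask, assuming the Image Processing Toolbox; it is an alternative formulation for comparison, not the code used to produce the reported results.

```matlab
% Synthetic binary mask with three connected components of different sizes.
BW = false(20, 20);
BW(2:4, 2:4) = true;       % 9 pixels
BW(8:15, 8:15) = true;     % 64 pixels (largest)
BW(18, 18:19) = true;      % 2 pixels

% Approach used in the code above: find the size of the largest component
% and remove every component with fewer pixels than that.
cc = bwconncomp(BW);
numPixels = cellfun(@numel, cc.PixelIdxList);
largestViaAreaOpen = bwareaopen(BW, max(numPixels));

% Alternative: bwareafilt keeps the n largest components directly.
largestViaAreaFilt = bwareafilt(BW, 1);

sameResult = isequal(largestViaAreaOpen, largestViaAreaFilt);
fprintf('Both approaches give the same mask: %d\n', sameResult);
```

The two approaches can differ when several components tie for the largest size: bwareaopen keeps every component with at least the given number of pixels, so all tied components survive.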

• MATLAB PLOTS:

Command window


Workspace window

Figure obtained:

RESULTS:

Similarity using Dice Coefficient: 0.9304 (i.e., the obtained mask is 93.04% similar)

Similarity using Jaccard Index: 0.8699 (i.e., the obtained mask is 86.99% similar)
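As a consistency check, the reported coefficients 0.9304 and 0.8699 can be compared using the relationship between the Jaccard and Dice indices given in the pre-requisites. Writing D for the Dice coefficient and J for the Jaccard index (symbols introduced here for brevity):

```latex
\[
J = \frac{D}{2 - D} = \frac{0.9304}{2 - 0.9304} = \frac{0.9304}{1.0696} \approx 0.8699
\]
```

The two reported coefficients therefore agree with each other to four decimal places.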


REFERENCES:

[1] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8143893/#B1

[2] https://www.cancer.net/cancer-types/melanoma/statistics

[3] Zafar, Kashan, et al. "Skin Lesion Segmentation from Dermoscopic Images Using Convolutional Neural Network." Sensors (Basel, Switzerland), vol. 20, no. 6, 1601, 13 Mar. 2020, doi:10.3390/s20061601

[4] S. Oukil, Reda Kasmi, K. Mokrani, B. García-Zapirain. "Automatic segmentation and melanoma detection based on color and texture features in dermoscopic images." 25 Sept. 2021, doi:10.1111/srt.13111

[5] https://www.cyto.purdue.edu/cdroms/micro2/content/education/wirth08.pdf

[6] Xaro Benavent, Esther Dura, Francisco Vegara, Juan Domingo. "Mathematical Morphology for Color Images: An Image-Dependent Approach." vol. 2012, Article ID 678326