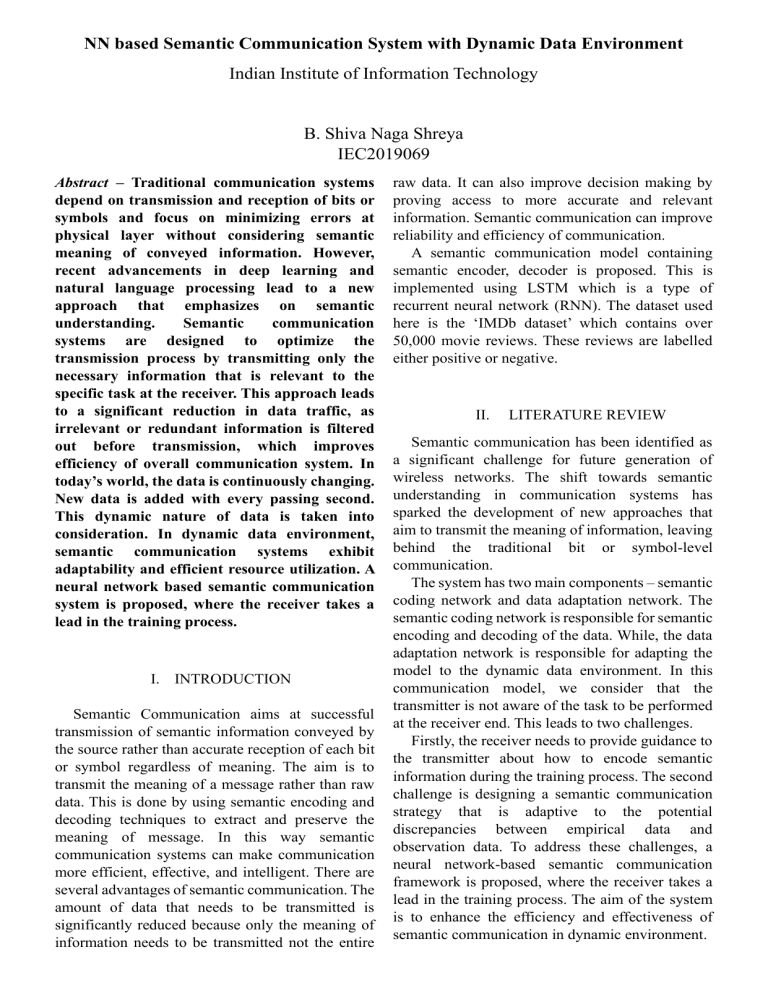

NN based Semantic Communication System with Dynamic Data Environment

Indian Institute of Information Technology

B. Shiva Naga Shreya
IEC2019069

Abstract – Traditional communication systems depend on the transmission and reception of bits or symbols and focus on minimizing errors at the physical layer, without considering the semantic meaning of the conveyed information. However, recent advances in deep learning and natural language processing have led to a new approach that emphasizes semantic understanding. Semantic communication systems are designed to optimize the transmission process by transmitting only the information that is relevant to the specific task at the receiver. This approach leads to a significant reduction in data traffic, as irrelevant or redundant information is filtered out before transmission, which improves the efficiency of the overall communication system. In today's world, data changes continuously, with new data added every second. This work takes the dynamic nature of data into consideration. In a dynamic data environment, semantic communication systems exhibit adaptability and efficient resource utilization. A neural network based semantic communication system is proposed, in which the receiver takes the lead in the training process.

I. INTRODUCTION

Semantic communication aims at the successful transmission of the semantic information conveyed by the source, rather than the accurate reception of each bit or symbol regardless of its meaning. In other words, the aim is to transmit the meaning of a message rather than the raw data. This is done by using semantic encoding and decoding techniques to extract and preserve the meaning of the message. In this way, semantic communication systems can make communication more efficient, effective, and intelligent.

There are several advantages of semantic communication. The amount of data that needs to be transmitted is significantly reduced, because only the meaning of the information needs to be transmitted, not the entire raw data.
It can also improve decision making by providing access to more accurate and relevant information, and it can improve the reliability and efficiency of communication.

A semantic communication model containing a semantic encoder and a semantic decoder is proposed. It is implemented using an LSTM, which is a type of recurrent neural network (RNN). The dataset used is the 'IMDb dataset', which contains 50,000 movie reviews, each labelled either positive or negative.

II. LITERATURE REVIEW

Semantic communication has been identified as a significant challenge for future generations of wireless networks. The shift towards semantic understanding in communication systems has sparked the development of new approaches that aim to transmit the meaning of information, leaving behind traditional bit-level or symbol-level communication.

The system has two main components: a semantic coding network and a data adaptation network. The semantic coding network is responsible for semantic encoding and decoding of the data, while the data adaptation network is responsible for adapting the model to the dynamic data environment.

In this communication model, the transmitter is assumed to be unaware of the task to be performed at the receiver end. This leads to two challenges. First, the receiver needs to guide the transmitter on how to encode semantic information during the training process. Second, the semantic communication strategy must adapt to potential discrepancies between the empirical data and the observation data. To address these challenges, a neural network-based semantic communication framework is proposed, in which the receiver takes the lead in the training process. The aim of the system is to enhance the efficiency and effectiveness of semantic communication in a dynamic environment.

III. PROBLEM STATEMENT

A movie review is passed through a communication system, where the data is encoded and sent through a noisy channel. The semantic decoder must decode and reconstruct the distorted data to recover the original semantic meaning. The model must also work for new, dynamic data.

Objective: To develop a neural network based semantic communication system for text transmission in dynamic data environments. The system should aim to maximize the transmission capacity while minimizing semantic errors, ensuring that the meaning of the transmitted text is accurately conveyed to the receiver. The system should be capable of adapting to changing network conditions and of efficiently utilizing the available resources.

IV. PROPOSED METHODOLOGY

A neural network-based semantic communication system is developed for text transmission. The methodology consists of four steps: pre-processing, encoding, transmission, and decoding of the data. The receiver task for the model is the prediction of the sentiment of reviews.

The first step is data pre-processing. The text data is loaded and pre-processed so that it is in a suitable format for further processing. The pre-processed data is then encoded using a neural network, which learns to represent semantic relationships and patterns within the text data during training. Once the data is encoded, it is transmitted over a noisy channel. Random distortions or noise are introduced, which can alter the meaning of the transmitted text. The aim of the decoding process is to reduce the effects of the noise and restore the meaning of the transmitted information.

Fig. 1. Flow Diagram

Semantic decoding: Semantic decoding is the process of converting a message from its encoded form back into its original meaning. The aim of the decoder is to reconstruct the original message from the distorted data.
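The pre-processing and noisy-channel steps of the methodology above can be illustrated with a minimal sketch. It assumes whitespace tokenization, zero-padding to a fixed length, and additive Gaussian noise on the encoded vector. The source does not specify the tokenizer or the noise model, and the names `build_vocab`, `pad_sequence`, `noisy_channel`, and `MAX_LEN` are hypothetical.

```python
import random

MAX_LEN = 8   # hypothetical fixed input length
PAD_ID = 0    # index reserved for padding
UNK_ID = 1    # index for out-of-vocabulary words


def build_vocab(texts):
    """Map each distinct lowercase word to an integer index (2, 3, ...)."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab) + 2)
    return vocab


def pad_sequence(text, vocab, max_len=MAX_LEN):
    """Convert a review to word indices, truncated or zero-padded to max_len."""
    ids = [vocab.get(w, UNK_ID) for w in text.lower().split()][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))


def noisy_channel(encoded, noise_std=0.1, rng=None):
    """Add zero-mean Gaussian noise (an assumed noise model) to each element."""
    rng = rng or random.Random()
    return [x + rng.gauss(0.0, noise_std) for x in encoded]


vocab = build_vocab(["the movie was great", "the plot was dull"])
padded = pad_sequence("the movie was dull and long", vocab)
received = noisy_channel([0.5, -1.2, 0.3], noise_std=0.1, rng=random.Random(0))
```

Here `padded` has the same length for every review, which is what allows reviews of different lengths to be fed to the same network, and `received` is the distorted vector that the decoder would have to interpret.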
Semantic encoding: Semantic encoding is the process of converting a message into a form that can be understood by the receiver. This is done by extracting the meaning of the message and representing it in a way that the receiver can process.

Long Short-Term Memory (LSTM): LSTMs are a type of recurrent neural network (RNN) that can capture long-term dependencies between the words in a sequence. The LSTM reads the input sequence one word at a time until it reaches the end of the sequence, updating its state at each timestep. After reaching the end of the sequence, the LSTM holds a state that represents the meaning of the entire input sequence. This state is then used by the decoder while decoding the text.

Encoder-Decoder Network: The input data (a movie review from the IMDb dataset) is first loaded into the system. The data is then pre-processed to obtain input vectors of the same length. The pre-processed data is converted into semantic embeddings by the embedding layer, which takes each word in the input sequence and maps it to a fixed-length vector that represents the meaning of the word.

Fig. 2. The Model Architecture

The LSTM then uses these vectors to learn the long-term dependencies between the words in the sequence. The embeddings are read one at a time, and at each timestep the LSTM updates its state using the forget gate, the input gate, and the output gate. At the end of the sequence, the LSTM holds a state that represents the meaning of the sequence. The encoded text is then sent through a noisy channel, where the introduced noise may distort the semantic data. The dense layer, which acts as the decoder, takes the encoded state as input and outputs a probability distribution over the possible next words. It continues to predict the next word until it reaches the end of the sentence.
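The gate-based state update described above can be sketched for a single timestep. This is a minimal, untrained illustration of the standard LSTM cell equations in plain Python, not the model used in this work. The weight layout, the random initialization, and the names `lstm_step`, `encode`, `init_gate`, and `params` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, x, U, h, b):
    """Compute W @ x + U @ h + b for plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + bi
        for W_row, U_row, bi in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM timestep: returns the new hidden state h and cell state c."""
    def pre_activation(name):
        W, U, b = params[name]
        return affine(W, x, U, h_prev, b)

    f = [sigmoid(z) for z in pre_activation("f")]    # forget gate
    i = [sigmoid(z) for z in pre_activation("i")]    # input gate
    o = [sigmoid(z) for z in pre_activation("o")]    # output gate
    g = [math.tanh(z) for z in pre_activation("g")]  # candidate cell values
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def encode(sequence, params, hidden_size):
    """Read the embeddings one at a time and return the final hidden state."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h


def init_gate(hidden, inputs, rng):
    """Random weights for one gate: (input weights, recurrent weights, bias)."""
    W = [[rng.uniform(-0.5, 0.5) for _ in range(inputs)] for _ in range(hidden)]
    U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(hidden)]
    return W, U, [0.0] * hidden


rng = random.Random(0)
params = {name: init_gate(2, 3, rng) for name in ("f", "i", "o", "g")}
embeddings = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4]]  # two 3-dimensional word vectors
state = encode(embeddings, params, hidden_size=2)
```

The vector `state` plays the role of the fixed-length encoded state: its size depends only on the hidden size, not on the number of words in the review.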
In this way, the output sequence is predicted from the distorted input.

To handle new dynamic data without retraining the entire model, online learning techniques such as stochastic gradient descent (SGD) are used. In this approach, the model parameters are updated incrementally from small batches of data as they become available, instead of training the model on the entire dataset at once. The model continuously learns and incorporates new information without forgetting previously learned knowledge, so it adapts to new patterns and adjusts its predictions accordingly.

Fig. 3. The Model Summary

Model Training: Training is done by feeding the LSTM a set of input and output sequences. The LSTM learns to encode the input sequences into a fixed-length vector, and the dense layer learns to decode the encoded state into the output sequences. The network parameters are updated based on the loss between the decoded semantic data and the original semantic data. Through this process, the model improves the overall accuracy and reliability of the communication system. The model is trained with a batch size of 128.

V. RESULTS

We train our semantic communication model for 10 epochs on the "IMDb dataset". We do not use any pretrained word embeddings; instead, we use an embedding layer that is trained along with the model.

Fig. 4. Model Training

The model shows an accuracy of 98%. In the dynamic data environment, when new data is added gradually, we test the model on the new data for accurate sentiment prediction, which is the final task at the receiver end. This happens without retraining the model.

Fig. 5. Accuracy

Fig. 6. Dynamic Data

These are a few examples of accurate outcomes for dynamic data.
Here, the pre-trained model is first loaded and then tested on the dynamic data to check whether it gives accurate outcomes without retraining. This is shown in Fig. 6.

Fig. 7. Loss

VI. OUTCOMES

The neural network architecture is designed to capture semantic relationships between words in the input data and to encode the semantic information. The model effectively recovers the input data from the distorted output of the noisy channel, which facilitates effective communication and understanding. The system's stability is maintained by carefully managing the model updates. A neural network-based semantic communication system is implemented that allows task execution in a dynamic data environment without retraining the model. By incrementally updating the model, the system can handle new data while retaining previously learned knowledge, thus avoiding the issue of catastrophic forgetting.

VII. REFERENCES

1. H. Zhang, S. Shao, M. Tao, X. Bi and K. B. Letaief, "Deep Learning-Enabled Semantic Communication Systems With Task-Unaware Transmitter and Dynamic Data," in IEEE Journal on Selected Areas in Communications, vol. 41, no. 1, pp. 170-185, Jan. 2023.
2. H. Xie, Z. Qin, G. Y. Li and B.-H. Juang, "Deep Learning Enabled Semantic Communication Systems," in IEEE Transactions on Signal Processing, vol. 69, pp. 2663-2675, 2021.
3. H. Xie, Z. Qin, G. Y. Li and B.-H. Juang, "Deep Learning based Semantic Communications: An Initial Investigation," GLOBECOM 2020 - 2020 IEEE Global Communications Conference, Taipei, Taiwan, 2020.
4. G. Shi et al., "A new communication paradigm: From bit accuracy to semantic fidelity," 2021, arXiv:2101.12649.
5. J. Bao et al., "Towards a theory of semantic communication," in Proc. IEEE Netw. Sci. Workshop, Jun. 2011, pp. 110-117.