Concrete compressive strength using artificial neural networks


Neural Computing and Applications (2020) 32:11807–11826

https://doi.org/10.1007/s00521-019-04663-2


ORIGINAL ARTICLE

Concrete compressive strength using artificial neural networks

Panagiotis G. Asteris¹ · Vaseilios G. Mokos¹

Received: 19 June 2019 / Accepted: 3 December 2019 / Published online: 10 December 2019

© Springer-Verlag London Ltd., part of Springer Nature 2019

Abstract

The non-destructive testing of concrete structures with methods such as ultrasonic pulse velocity and Schmidt rebound

hammer test is of utmost technical importance. Non-destructive testing methods do not require sampling, and they are

simple, fast to perform, and efficient. However, these methods result in large dispersion of the values they estimate, with

significant deviation from the actual (experimental) values of compressive strength. In this paper, the application of

artificial neural networks (ANNs) for predicting the compressive strength of concrete in existing structures has been

investigated. ANNs have been systematically used for predicting the compressive strength of concrete, utilizing both the

ultrasonic pulse velocity and the Schmidt rebound hammer experimental results, which are available in the literature. The

comparison of the ANN-derived results with the experimental findings, which are in very good agreement, demonstrates

the ability of ANNs to estimate the compressive strength of concrete in a reliable and robust manner. Thus, the (quantitative) values of weights for the proposed neural network model are provided, so that the proposed model can be readily

implemented in a spreadsheet and accessible to everyone interested in the procedure of simulation.

Keywords: Artificial neural networks · Compressive strength · Concrete · Non-destructive testing methods · Soft computing

List of symbols

B Vector of bias values

fc Compressive strength of concrete

IW Matrix of weight values for input layer

LW Matrix of weight values for hidden layer

R Rebound hammer

Vp Ultrasonic pulse velocity

Abbreviations

ANNs Artificial neural networks

BP Back propagation

RH Rebound hammer

UPV Ultrasonic pulse velocity

✉ Panagiotis G. Asteris (asteris@aspete.gr; panagiotisasteris@gmail.com)

¹ Computational Mechanics Laboratory, School of Pedagogical and Technological Education, 14121 Heraklion, Athens, Greece

1 Introduction

Assessment of the bearing capacity of existing concrete

structures is an important issue, which is attracting the

interest of researchers, especially in recent years. Before

any design or intervention action is carried out, it is necessary to investigate and document the existing concrete

structure to a sufficient extent and depth, so as to obtain the

maximum amount of data with high reliability, on which to

base the assessment or redesign. This involves surveying

the structure and assessing its condition, recording any

wear or damage as well as conducting on site investigation

works and measurements. Note that the tests available for

concrete include (a) completely non-destructive tests,

(b) partially destructive tests, and (c) destructive tests, for

which the concrete surface has to be repaired after the test.

Measurements for concrete strength are usually performed

with non-destructive methods, as they do not require

destructive sampling, while their usage is simple and quick.

The investigation of concrete aims mainly at determining

the compressive strength for each area of the structure.

Other properties, such as modulus of elasticity, tensile

strength, etc., can be determined indirectly based on

compressive strength. The expected systematic differentiation of concrete strength must be taken into account,


depending on its characteristic position in the structure, and

the conditions of concreting, compaction and maintenance.

It is possible that there are significant differences in

strength between slabs, beams, upper and lower parts of

columns, while in cases of poor workmanship in column

concreting, it cannot be ruled out that the lower part may

also develop lower strength due to segregation and

cavitations.

Non-destructive testing can be applied to both old and

new structures. For new structures, the principal applications focus on quality control and the resolution of issues

related to the quality of materials or construction. Testing

existing structures is focused on the assessment of structural integrity or adequacy. The ultrasonic pulse velocity

method and the rebound hammer test are the most commonly used non-destructive techniques for the estimation

of mechanical characteristics of concrete, both in the laboratory and in situ. In the international literature, a number

of relationships have been proposed that correlate the compressive strength of concrete with the ultrasonic pulse

velocity and with the rebound number of the rebound

hammer test. The main drawback of these methods is the

large dispersion of the values they predict, and the significant deviation from the actual (experimental) value of the

compressive strength of the concrete. The lack of appropriate and reliable empirical relationships to estimate the

compressive strength of concrete has attracted the interest

of researchers—over the past decade—toward the application of non-deterministic techniques.

Although research has been mainly concerned with the

determination of compressive strength values through non-destructive techniques, at both the theoretical and experimental levels, this important issue still remains unresolved.

The latter observation is manifested via various facts; most

available proposals result in the estimation of different

values, while predicted values are almost always found

either to be overestimated or underestimated in relation to

experimental values. This fact can be attributed to the

nonlinear behavior which governs the influence of the

parameters on a concrete material’s compressive strength.

Thus, the use of non-deterministic techniques, such as soft

computing techniques, is of utmost importance in order to

achieve an optimum solution and reveal the complex

influence of each parameter (while at the same time taking

all parameters into account).

An artificial neural network (ANN) is a computational

model that is inspired by the biological neural networks in

the human brain, which process information. Neural networks are capable of "learning" and correlating large

datasets obtained from experiments or simulations. The

trained neural network serves as an analytical tool for

qualified prognoses of the actual results. There are efficient


methods for their training and validation, and they can

yield high accuracy scores in their predictions.

In this paper, the application of ANNs for predicting the

compressive strength of concrete structure has been

investigated. For the training of the ANN models, an

experimental database, based on ultrasonic pulse velocity

and Schmidt rebound hammer experimental results (available in the literature) has been utilized, in conjunction with

respective compressive strength tests, conducted on cores

of the same sample. The good agreement of the ANN-derived results with the experimental findings and the

theoretical results demonstrates the ability of ANNs to

approximate the compressive strength of concrete, based

on non-destructive technique results, and in particular

ultrasonic pulse velocity measurements and Schmidt

hammer rebound tests, in a reliable and robust manner.

A MATLAB program has been developed, and representative examples have been studied in order to demonstrate

the efficiency, the accuracy and the range of applications of

the developed method. In addition to the architecture of the

proposed optimum neural system, a supplementary materials section is included which provides a simple design/

education tool that can assist both in teaching and in

the estimation of concrete compressive strength based on

non-destructive testing. By including all the necessary

information, anyone can test the proposed model. In addition to the reliability it ensures, including this information also provides the means for other researchers,

students or field practitioners to further test the reliability

of the proposed model.

2 Literature review

The rebound hammer (RH) and the ultrasonic pulse

velocity (UPV) comprise the most utilized non-destructive

methods in determining the compressive strength of concrete. The results obtained by the RH and UPV tests can be

influenced by a sufficiently large set of factors, depending

on the investigated concrete element.

The rebound (Schmidt) hammer is one of the oldest and

best-known methods. It is used in comparing the concrete

in various parts of a structure and indirectly in assessing the

concrete strength. The rebound of an elastic mass depends

on the hardness of the surface against which its mass

strikes. The results of rebound hammer are significantly

influenced by factors such as: the smoothness of the test

surface; the size, the shape, and the rigidity of the specimens; the age of the specimen; the surface and internal

moisture conditions of the concrete; the type of coarse

aggregate; the type of cement; and the carbonation of

concrete surface [1]. It is worth noticing that the hammer

method can be used in the horizontal, vertically overhead


or vertically downward positions as well as at any intermediate angle, provided that the hammer is perpendicular

to the surface under test. However, the position of the mass

relative to the vertical affects the rebound number due to

the action of gravity on the mass in the hammer. This

method has gained popularity due to its simple use and the

possibility of using it on a single concrete surface without

requiring access to the construction from both sides (which

is necessary for direct ultrasonic testing methods).

The ultrasonic pulse velocity (UPV) method is one of

the most popular non-destructive techniques used in the

assessment of concrete properties. The use of UPV for the

non-destructive assessment of concrete quality has been

extensively investigated for decades. Ultrasonic pulse

velocity testing of concrete is based on the pulse velocity

method to provide information on the uniformity of concrete, cracks and defects. The pulse velocity in a material

depends on its density and its elastic properties, which in

turn are related to the quality and the compressive strength

of the concrete. This test method is applicable to assess the

uniformity and relative quality of concrete, to indicate the

presence of voids and cracks, and to evaluate the effectiveness of crack repairs. It is also applicable to indicate

changes in the properties of concrete, and in the survey of

structures, to estimate the severity of deterioration or

cracking. However, it is difficult to accurately evaluate the

concrete compressive strength with this method since UPV

values are affected by a number of factors, which do not

necessarily influence the concrete compressive strength in

the same way or to the same extent [2]. The UPV test is

described in ASTM C 597-83 [3] and BS 1881-203 [4] in

detail.

It is worth noticing that a plethora of experimental data

and theoretical correlation relationships between compressive concrete strength and pulse velocity (as well as the

rebound hammer results) have been presented and proposed. Table 1, suggested by Whitehurst [5], shows how

the values of the obtained velocity can be utilized to

classify the quality of concrete. In addition, in Table 2 the

most accepted empirical relationships in the international

literature [2, 6–13], which allow the compressive strength

of the concrete to be assessed by using (a) ultrasonic pulse velocity measurements $V_p$, (b) rebound (Schmidt) hammer results $R$ and (c) a combination of measurements by both methods ($V_p$, $R$), are presented. Detailed and in-depth state-of-the-art reports can be found in the works of Erdal [13], Mohammed and Rahman [14], Alwash et al. [15], as well as in the PhD thesis of Alwash [16].

Table 1 Quality of concrete with respect to ultrasonic pulse velocity [5]

| Ultrasonic pulse velocity Vp (m/s) | Quality of concrete |
|---|---|
| Above 4500 | Excellent |
| 3500–4500 | Generally good |
| 3000–3500 | Questionable |
| 2000–3000 | Generally poor |
| Below 2000 | Very poor |

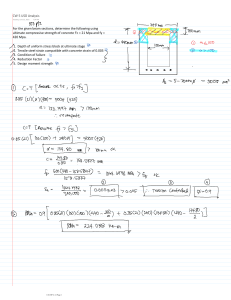
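The velocity bands of Table 1 can be read as a simple lookup. The following is a minimal sketch in Python, not part of the original study; the function name is hypothetical, and the treatment of values that fall exactly on a band limit (each band includes its lower limit) is an assumption, since Table 1 does not specify it.

```python
def classify_concrete_quality(vp_m_per_s):
    """Return the Table 1 quality label for a pulse velocity given in m/s."""
    # Assumption: a value exactly on a band limit is assigned to the band
    # for which it is the lower limit (e.g. 3500 m/s -> "Generally good").
    if vp_m_per_s > 4500:
        return "Excellent"
    if vp_m_per_s >= 3500:
        return "Generally good"
    if vp_m_per_s >= 3000:
        return "Questionable"
    if vp_m_per_s >= 2000:
        return "Generally poor"
    return "Very poor"


# Velocities reported in km/s must be converted to m/s first.
print(classify_concrete_quality(4.61 * 1000))
```

Such a classification only grades the quality of the concrete; it does not by itself provide a compressive strength value.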

Figures 1 and 2 present a comparison between some of

the aforementioned expressions (Table 2) for the evaluation of the concrete compressive strength. Specifically,

Fig. 1 presents a comparison with respect to the ultrasonic

pulse velocity [single-variable equations (E1)–(E6)],

while Fig. 2 presents a comparison with respect to the rebound hammer [single-variable equations (E7)–(E9)]. Furthermore, Fig. 3 shows

the contours of the aforementioned expressions (Table 2)

for the evaluation of the concrete compressive strength

using the multi-variable equations (E10)–(E14). From

Figs. 1, 2 and 3, it is obvious that the concrete compressive

strength calculated based on these expressions shows

considerable variation, revealing the need for further

investigation and refinement of the proposals. The purpose

of this paper is to improve this situation through the use of

appropriate neural networks.
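The variation between the empirical expressions can be illustrated numerically. The sketch below is an illustration only: it evaluates five of the relationships of Table 2 (E1, E6, E8, E9 and E13, with coefficients as read from that table) for a single pair of measurements. The chosen input pair ($V_p$ = 4.55 km/s, $R$ = 30) and all Python names are assumptions made for the example.

```python
import math

# Selected relationships from Table 2.
# Vp in km/s, R is the rebound number, fc in MPa.
EMPIRICAL_RELATIONS = {
    "E1 (Turgut)": lambda vp, r: 1.146 * math.exp(0.77 * vp),
    "E6 (Qasrawi)": lambda vp, r: 36.73 * vp - 129.077,
    "E8 (Kheder)": lambda vp, r: 0.4030 * r ** 1.2083,
    "E9 (Qasrawi)": lambda vp, r: 1.353 * r - 17.393,
    "E13 (Erdal)": lambda vp, r: 0.42 * r + 13.166 * vp - 40.255,
}


def estimate_all(vp, r):
    """Evaluate every selected relationship for one (Vp, R) pair."""
    return {name: f(vp, r) for name, f in EMPIRICAL_RELATIONS.items()}


# Hypothetical measurement pair used only for illustration.
estimates = estimate_all(4.55, 30.0)
spread = max(estimates.values()) - min(estimates.values())

for name, fc in estimates.items():
    print(f"{name}: {fc:.1f} MPa")
print(f"spread between estimates: {spread:.1f} MPa")
```

For this input pair the selected expressions differ by more than 10 MPa, which is the kind of dispersion shown in Figs. 1, 2 and 3.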

3 Materials and methods

3.1 Artificial neural networks

Artificial neural networks (ANNs) are models which process information and make predictions. They are designed

in order to learn from the available experimental or analytical/theoretical data. Such models have the ability to

classify data, predict values, and assist in decision-making

processes. A trained ANN achieves mapping of input

parameters onto a specific output; it presents similarities to

a response surface method. A trained ANN can achieve

more reliable results than conventional numerical analysis

procedures (e.g., regression analysis) and, furthermore, these results are

produced with considerably less computational effort

[17–31].

ANNs work in a manner similar to that of the biological

neural network of the human brain [32–44]. The artificial

neuron is the basic building block of an ANN; in fact, it is a

mathematical model which attempts to mimic the behavior

of the biological neuron (Fig. 4).

Input data is passed into the artificial neuron and, after

being processed via a mathematical function, it can lead to

an output (same as the biological neuron’s fire-or-not situation). Before the input data enters the neuron, weights

are attributed to the input parameters in order to mimic the

random nature of the biological neuron. The ANN is


Table 2 Empirical relationships for estimating compressive strength of concrete ($f_c$)

| Parameters | Equation | Nr. | References |
|---|---|---|---|
| $V_p$ | $f_c(V_p) = 1.146\,e^{0.77 V_p}$ | (E1) | Turgut [6] |
| $V_p$ | $f_c(V_p) = 1.119\,e^{0.715 V_p}$ | (E2) | Nash't et al. [7] |
| $V_p$ | $f_c(V_p) = 0.0854\,e^{1.288 V_p}$ | (E3) | Trtnik et al. [2] |
| $V_p$ | $f_c(V_p) = 176.9 - 96.467 V_p + 13.906 V_p^2$ | (E4) | Logothetis [8] |
| $V_p$ | $f_c(V_p) = 1.2 \times 10^{-5}\,(1000 V_p)^{1.7447}$ | (E5) | Kheder [9] |
| $V_p$ | $f_c(V_p) = 36.73 V_p - 129.077$ | (E6) | Qasrawi [10] |
| $R$ | $f_c(R) = -9.40 + 0.52 R + 0.02 R^2$ | (E7) | Logothetis [8] |
| $R$ | $f_c(R) = 0.4030\,R^{1.2083}$ | (E8) | Kheder [9] |
| $R$ | $f_c(R) = 1.353 R - 17.393$ | (E9) | Qasrawi [10] |
| $V_p$, $R$ | $f_c(V_p, R) = e^{1.78 \ln(V_p) + 0.85 \ln(R) - 0.02} \times 0.0981$ | (E10) | Logothetis [8] |
| $V_p$, $R$ | $f_c(V_p, R) = 18.6\,e^{0.515 V_p + 0.019 R} \times 0.0981$ | (E11) | Arioglu et al. [11] |
| $V_p$, $R$ | $f_c(V_p, R) = \left(0.10983 + 0.00157 R - 0.79315\,(V_p/10) - 0.00002 R^2 + 1.29261\,(V_p/10)^2\right) \times 10^3$ | (E12) | Amini et al. [12] |
| $V_p$, $R$ | $f_c(V_p, R) = 0.42 R + 13.166 V_p - 40.255$ | (E13) | Erdal [13] |
| $V_p$, $R$ | $f_c(V_p, R) = 0.0158\,(1000 V_p)^{0.4254}\,R^{1.1171}$ | (E14) | Kheder [9] |

In the above relations, $V_p$ is in km/s, $R$ is the rebound number and $f_c$ is in MPa

Fig. 1 Comparison of equations related to ultrasonic pulse velocity

for the evaluation of concrete compressive strength

composed of these neurons, in much the same way as biological

neural networks are composed of biological neurons. Three main phases are necessary in

order to set up an ANN: (1) the ANN’s architecture must

be established; (2) the appropriate training algorithm,

which is necessary for the ANN learning phase, must be

defined; and (3) the mathematical functions describing the

mathematical model must be determined. The architecture

or topology of the ANN describes the manner

in which the artificial neurons are organized in the group,

as well as the manner in which information flows within


Fig. 2 Comparison of equations related to rebound hammer for the

evaluation of concrete compressive strength

the network. When neurons are organized in more than one

layer, the network is called a multilayer ANN. The training

phase of the ANN is considered crucial; it is a function

minimization problem, where an error function is minimized, thus assisting in the determination of the optimum

value of weights. Different ANNs use different optimization algorithms for this purpose. The summation functions

and the activation functions are mathematical functions

that define the behavior of each neuron. In the present

study, a back propagation neural network (BPNN) is

implemented and described in the following section.
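The view of training as the minimization of an error function can be sketched with a deliberately simplified example. The code below is an illustration under stated assumptions, not the procedure of the present study: it uses a single linear neuron, plain gradient descent instead of a dedicated training algorithm, and a small set of hypothetical, already normalized samples. All names are illustrative.

```python
def predict(weights, bias, inputs):
    """Output of a single linear neuron: weighted sum of the inputs plus bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def sum_squared_error(weights, bias, samples):
    """Error function: sum of squared differences between targets and outputs."""
    return sum((target - predict(weights, bias, inputs)) ** 2
               for inputs, target in samples)


def train(samples, learning_rate=0.01, epochs=500):
    """Adjust weights and bias by gradient descent on the sum of squared errors."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for inputs, target in samples:
            error = predict(weights, bias, inputs) - target
            for j, x in enumerate(inputs):
                grad_w[j] += 2.0 * error * x
            grad_b += 2.0 * error
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


# Hypothetical normalized samples: ((input 1, input 2), target).
samples = [((0.0, 0.1), 0.05), ((0.3, 0.4), 0.35),
           ((0.6, 0.5), 0.55), ((1.0, 0.9), 0.95)]

initial_error = sum_squared_error([0.0, 0.0], 0.0, samples)
weights, bias = train(samples)
final_error = sum_squared_error(weights, bias, samples)
print(f"error before training: {initial_error:.4f}, after: {final_error:.6f}")
```

A multilayer network follows the same principle, with the gradient of the error propagated backward through the layers.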

[Fig. 3 panels: contour plots of fc (MPa) as a function of Vp (km/s) and R (rebound number) for equations E10, E11, E12, E13 and E14]

Fig. 3 Contours of the concrete compressive strength using multi-variable equations


Fig. 4 Schematic representation

of biological neuron structure

3.2 Architecture of BPNN

A BPNN is a feed-forward, multilayer [38] artificial neural

network. In BPNN, information flows from the input

toward the output with no feedback loops. Furthermore, the

neurons of the same layer are not connected to each other;

however, they are connected with all the neurons of the

previous and subsequent layer. A BPNN has a standard

structure. This structure can be described as

$$N\text{-}H_1\text{-}H_2\text{-}\cdots\text{-}H_{NHL}\text{-}M \qquad (1)$$

where $N$ is the number of input neurons (input parameters);

$H_i$ is the number of neurons in the $i$-th hidden layer for

$i = 1, \ldots, NHL$; $NHL$ is the number of hidden layers; and

$M$ is the number of output neurons (output parameters). For

example, the code 3-5-2-1 means that the model has an

architecture composed of an input layer consisting of 3

neurons, two hidden layers with 5 and 2 neurons, respectively, and an output layer with 1 neuron, i.e., a 3-5-2-1

BPNN.
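The architecture code of Eq. (1) can be turned into layer sizes and a count of adjustable parameters. The following is a minimal sketch with hypothetical function names; it assumes a fully connected feed-forward network with one bias value per hidden and output neuron.

```python
def parse_architecture(code):
    """Split an architecture code such as '3-5-2-1' into a list of layer sizes."""
    return [int(part) for part in code.split("-")]


def count_parameters(layers):
    """Count weights and biases of a fully connected feed-forward network.

    Assumption: every neuron is connected to all neurons of the previous
    layer, and every hidden or output neuron has exactly one bias value.
    """
    weights = sum(a * b for a, b in zip(layers, layers[1:]))
    biases = sum(layers[1:])
    return weights, biases


layers = parse_architecture("3-5-2-1")
n_weights, n_biases = count_parameters(layers)
print(layers, n_weights, n_biases)
```

For the 3-5-2-1 example this gives 3·5 + 5·2 + 2·1 = 27 weights and 5 + 2 + 1 = 8 biases.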

Although multilayer NN models are most frequently

proposed by researchers, it should be noted that ANN

models with only one hidden layer are capable of handling

any prediction problem in a reliable manner.

In Fig. 5, a notation for a single node (with the corresponding R-element input vector) of a hidden layer is

presented.

For each neuron $i$, the individual element inputs $p_1, \ldots, p_R$ are multiplied by the corresponding weights $w_{i,1}, \ldots, w_{i,R}$. The weighted values are fed to the junction of the summation function, in which the dot product ($W \cdot p$) of the weight vector $W = [w_{i,1}, \ldots, w_{i,R}]$ and the input vector $p = [p_1, \ldots, p_R]^T$ is generated. The threshold $b$ (bias) is added to the dot product, forming the net input $n$, which is the argument of the transfer function $f$:

$$n = W \cdot p + b = w_{i,1} p_1 + w_{i,2} p_2 + \cdots + w_{i,R} p_R + b \qquad (2)$$

Fig. 5 A neuron with a single R-element input vector
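The computation performed by a single neuron, i.e., the net input followed by a transfer function, can be sketched as follows. This is an illustrative Python sketch; the function names and the numerical values of the weights, inputs and bias are assumptions, and the two transfer functions shown are common sigmoidal choices.

```python
import math


def net_input(weights, inputs, bias):
    """Net input n: dot product of weights and inputs, plus the bias b."""
    return sum(w * p for w, p in zip(weights, inputs)) + bias


def logistic_sigmoid(n):
    """Logistic sigmoid transfer function, with output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-n))


def hyperbolic_tangent(n):
    """Hyperbolic tangent transfer function, with output in (-1, 1)."""
    return math.tanh(n)


def neuron_output(weights, inputs, bias, transfer=hyperbolic_tangent):
    """Output of one neuron: transfer function applied to the net input."""
    return transfer(net_input(weights, inputs, bias))


# Hypothetical weights, inputs and bias for a neuron with R = 2 inputs.
n = net_input([0.2, -0.1], [1.0, 2.0], 0.05)
print(n, neuron_output([0.2, -0.1], [1.0, 2.0], 0.05))
```

The transfer function is passed as an argument so that different choices can be compared without changing the summation step.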

The transfer (or activation) function $f$ that is chosen

may influence the complexity and performance of the ANN

to a great degree. Even though sigmoidal transfer functions

are the most commonly encountered,

different types of function may be used and may even be

more appropriate for certain problems. A large variety of

alternative transfer functions have been proposed in the

relevant literature [45, 46]. In this study, the Logistic

Sigmoid and the Hyperbolic Tangent transfer functions

were found to be the most appropriate for accurately modeling the problem investigated. The training data are fed into

the network during the training phase; during this phase,

the ANN achieves a mapping between the input values and

the respective output values, by adjusting the weights in order to minimize the following error function:

$$E = \sum_i (x_i - y_i)^2 \qquad (3)$$

where $x_i$ and $y_i$ are the measured and the predicted values of the network, respectively, within an optimization framework.

Table 3 Database

Columns (4) and (5) are the input parameters; column (6) is the output parameter.

| No Sample (1) | Batch Nr. (2) | Specimen Nr. (3) | Vp (km/s) (4) | R (5) | fc (MPa) (6) | Dataset (7) |
|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 4.61 | 26.40 | 20.20 | T |
| 2 | 1 | 2 | 4.44 | 28.50 | 19.91 | T |
| 3 | 1 | 3 | 4.55 | 27.00 | 20.30 | V |
| 4 | 1 | 4 | 4.58 | 26.60 | 22.16 | T |
| 5 | 1 | 5 | 4.62 | 27.00 | 20.89 | T |
| 6 | 1 | 6 | 4.51 | 26.80 | 21.67 | Test |
| 7 | 2 | 1 | 4.32 | 26.00 | 18.63 | T |
| 8 | 2 | 2 | 4.35 | 25.50 | 20.10 | T |
| 9 | 2 | 3 | 4.35 | 27.00 | 20.40 | V |
| 10 | 2 | 4 | 4.37 | 26.00 | 19.22 | T |
| 11 | 2 | 5 | 4.37 | 26.10 | 21.57 | T |
| 12 | 2 | 6 | 4.32 | 27.30 | 19.22 | Test |
| 13 | 3 | 1 | 4.18 | 22.80 | 15.00 | T |
| 14 | 3 | 2 | 4.25 | 25.00 | 17.36 | T |
| 15 | 3 | 3 | 4.19 | 23.00 | 15.30 | V |
| 16 | 3 | 4 | 4.20 | 24.50 | 15.89 | T |
| 17 | 3 | 5 | 4.13 | 23.80 | 14.22 | T |
| 18 | 3 | 6 | 4.26 | 24.00 | 16.67 | Test |
| 19 | 4 | 1 | 4.76 | 32.20 | 32.36 | T |
| 20 | 4 | 2 | 4.76 | 32.00 | 30.60 | T |
| 21 | 4 | 3 | 4.88 | 30.00 | 35.79 | V |
| 22 | 4 | 4 | 4.78 | 31.80 | 31.58 | T |
| 23 | 4 | 5 | 4.73 | 32.50 | 30.89 | T |
| 24 | 4 | 6 | 4.77 | 32.00 | 32.36 | Test |
| 25 | 5 | 1 | 4.88 | 32.80 | 34.62 | T |
| 26 | 5 | 2 | 4.80 | 33.60 | 34.62 | T |
| 27 | 5 | 3 | 4.88 | 33.00 | 35.50 | V |
| 28 | 5 | 4 | 4.85 | 33.80 | 35.50 | T |
| 29 | 5 | 5 | 4.80 | 33.50 | 34.32 | T |
| 30 | 5 | 6 | 4.85 | 34.00 | 37.46 | Test |
| 31 | 6 | 1 | 4.61 | 30.00 | 28.14 | T |
| 32 | 6 | 2 | 4.65 | 30.50 | 27.07 | T |
| 33 | 6 | 3 | 4.66 | 31.00 | 30.11 | V |
| 34 | 6 | 4 | 4.66 | 30.80 | 28.14 | T |
| 35 | 6 | 5 | 4.64 | 29.00 | 28.44 | T |
| 36 | 6 | 6 | 4.64 | 31.00 | 28.44 | Test |
| 37 | 7 | 1 | 4.55 | 30.50 | 27.46 | T |
| 38 | 7 | 2 | 4.65 | 30.00 | 28.64 | T |
| 39 | 7 | 3 | 4.62 | 31.90 | 28.14 | V |
| 40 | 7 | 4 | 4.62 | 30.80 | 28.93 | T |
| 41 | 7 | 5 | 4.65 | 31.30 | 29.62 | T |
| 42 | 7 | 6 | 4.62 | 30.80 | 29.52 | Test |
| 43 | 8 | 1 | 4.55 | 30.10 | 29.62 | T |
| 44 | 8 | 2 | 4.55 | 30.70 | 35.50 | T |
| 45 | 8 | 3 | 4.55 | 32.40 | 34.81 | V |
| 46 | 8 | 4 | 4.55 | 33.00 | 36.19 | T |
| 47 | 8 | 5 | 4.52 | 32.60 | 30.30 | T |
| 48 | 8 | 6 | 4.55 | 33.80 | 35.30 | Test |
| 49 | 9 | 1 | 4.60 | 28.00 | 22.16 | T |
| 50 | 9 | 2 | 4.52 | 28.00 | 22.26 | T |
| 51 | 9 | 3 | 4.55 | 30.00 | 23.63 | V |
| 52 | 9 | 4 | 4.50 | 28.00 | 22.26 | T |
| 53 | 9 | 5 | 4.51 | 28.90 | 22.36 | T |
| 54 | 9 | 6 | 4.51 | 27.50 | 22.16 | Test |
| 55 | 10 | 1 | 4.62 | 31.00 | 27.85 | T |
| 56 | 10 | 2 | 4.67 | 31.20 | 27.26 | T |
| 57 | 10 | 3 | 4.60 | 30.20 | 25.10 | V |
| 58 | 10 | 4 | 4.62 | 30.00 | 28.44 | T |
| 59 | 10 | 5 | 4.65 | 30.80 | 26.77 | T |
| 60 | 10 | 6 | 4.54 | 31.00 | 29.03 | Test |
| 61 | 11 | 1 | 4.74 | 28.60 | 23.63 | T |
| 62 | 11 | 2 | 4.70 | 30.70 | 25.69 | T |
| 63 | 11 | 3 | 4.48 | 28.60 | 23.05 | V |
| 64 | 11 | 4 | 4.51 | 28.50 | 25.20 | T |
| 65 | 11 | 5 | 4.55 | 29.40 | 24.03 | T |
| 66 | 11 | 6 | 4.54 | 29.20 | 25.50 | Test |
| 67 | 12 | 1 | 4.69 | 30.00 | 28.44 | T |
| 68 | 12 | 2 | 4.73 | 30.00 | 25.30 | T |
| 69 | 12 | 3 | 4.77 | 30.00 | 28.05 | V |
| 70 | 12 | 4 | 4.73 | 29.40 | 28.14 | T |
| 71 | 12 | 5 | 4.69 | 30.60 | 27.85 | T |
| 72 | 12 | 6 | 4.77 | 30.30 | 27.36 | Test |
| 73 | 13 | 1 | 4.65 | 25.10 | 17.16 | T |
| 74 | 13 | 2 | 4.48 | 26.00 | 19.61 | T |
| 75 | 13 | 3 | 4.51 | 25.40 | 16.67 | V |
| 76 | 13 | 4 | 4.58 | 24.40 | 16.67 | T |
| 77 | 13 | 5 | 4.54 | 26.00 | 18.34 | T |
| 78 | 13 | 6 | 4.54 | 26.00 | 19.12 | Test |
| 79 | 14 | 1 | 4.48 | 28.60 | 22.16 | T |
| 80 | 14 | 2 | 4.52 | 28.00 | 23.14 | T |
| 81 | 14 | 3 | 4.45 | 28.30 | 22.95 | V |
| 82 | 14 | 4 | 4.48 | 26.30 | 23.05 | T |
| 83 | 14 | 5 | 4.48 | 29.10 | 22.36 | T |
| 84 | 14 | 6 | 4.55 | 27.40 | 22.36 | Test |
| 85 | 15 | 1 | 4.45 | 26.60 | 19.61 | T |
| 86 | 15 | 2 | 4.45 | 24.60 | 16.67 | T |
| 87 | 15 | 3 | 4.44 | 25.50 | 17.65 | V |
| 88 | 15 | 4 | 4.44 | 25.40 | 18.34 | T |
| 89 | 15 | 5 | 4.46 | 26.40 | 17.85 | T |
| 90 | 15 | 6 | 4.53 | 25.40 | 20.10 | Test |
| 91 | 16 | 1 | 4.63 | 29.70 | 24.32 | T |
| 92 | 16 | 2 | 4.58 | 29.30 | 24.52 | T |
| 93 | 16 | 3 | 4.77 | 30.80 | 25.20 | V |
| 94 | 16 | 4 | 4.66 | 29.70 | 26.87 | T |
| 95 | 16 | 5 | 4.62 | 29.00 | 26.87 | T |
| 96 | 16 | 6 | 4.69 | 30.00 | 26.18 | Test |
| 97 | 17 | 1 | 4.17 | 23.00 | 14.51 | T |
| 98 | 17 | 2 | 4.15 | 23.50 | 16.18 | T |
| 99 | 17 | 3 | 4.19 | 23.50 | 14.42 | V |
| 100 | 17 | 4 | 4.08 | 23.10 | 14.22 | T |
| 101 | 17 | 5 | 4.12 | 24.00 | 14.91 | T |
| 102 | 17 | 6 | 4.17 | 22.00 | 15.20 | Test |
| 103 | 18 | 1 | 4.03 | 21.60 | 12.85 | T |
| 104 | 18 | 2 | 3.94 | 20.50 | 12.16 | T |
| 105 | 18 | 3 | 3.90 | 21.80 | 12.45 | V |
| 106 | 18 | 4 | 3.93 | 21.10 | 12.45 | T |
| 107 | 18 | 5 | 3.95 | 20.00 | 12.65 | T |
| 108 | 18 | 6 | 3.95 | 22.00 | 14.32 | Test |
| 109 | 19 | 2 | 4.35 | 24.90 | 15.79 | T |
| 110 | 19 | 3 | 4.36 | 25.80 | 16.18 | T |
| 111 | 19 | 4 | 4.35 | 24.30 | 15.89 | V |
| 112 | 19 | 5 | 4.31 | 24.40 | 15.30 | T |
| 113 | 19 | 6 | 4.40 | 25.60 | 16.38 | T |
| 114 | 20 | 1 | 4.20 | 22.20 | 15.40 | Test |
| 115 | 20 | 2 | 4.17 | 22.00 | 14.42 | T |
| 116 | 20 | 3 | 4.22 | 21.90 | 14.32 | T |
| 117 | 20 | 4 | 4.20 | 22.10 | 15.10 | V |
| 118 | 20 | 5 | 4.17 | 21.80 | 15.49 | T |
| 119 | 20 | 6 | 4.14 | 21.90 | 15.00 | T |
| 120 | 21 | 1 | 4.35 | 27.20 | 20.10 | Test |
| 121 | 21 | 2 | 4.35 | 28.40 | 19.81 | T |
| 122 | 21 | 3 | 4.45 | 27.90 | 20.89 | T |
| 123 | 21 | 4 | 4.48 | 28.60 | 22.85 | V |
| 124 | 21 | 5 | 4.45 | 29.20 | 22.56 | T |
| 125 | 21 | 6 | 4.41 | 29.00 | 21.08 | T |
| 126 | 22 | 1 | 4.62 | 28.70 | 23.05 | Test |
| 127 | 22 | 2 | 4.55 | 28.00 | 24.22 | T |
| 128 | 22 | 3 | 4.58 | 29.80 | 23.54 | T |
| 129 | 22 | 4 | 4.62 | 28.40 | 24.52 | V |
| 130 | 22 | 5 | 4.65 | 29.00 | 23.83 | T |
| 131 | 22 | 6 | 4.55 | 29.40 | 23.73 | T |
| 132 | 23 | 1 | 4.38 | 28.10 | 21.38 | Test |
| 133 | 23 | 2 | 4.45 | 28.20 | 20.99 | T |
| 134 | 23 | 3 | 4.40 | 28.60 | 21.67 | T |
| 135 | 23 | 4 | 4.45 | 28.00 | 24.42 | V |
| 136 | 23 | 5 | 4.10 | 26.20 | 14.42 | T |
| 137 | 23 | 6 | 3.85 | 22.90 | 14.81 | T |
| 138 | 24 | 1 | 5.05 | 38.20 | 43.25 | Test |
| 139 | 24 | 2 | 5.05 | 37.20 | 40.31 | T |
| 140 | 24 | 3 | 5.10 | 39.00 | 43.44 | T |
| 141 | 24 | 4 | 5.10 | 38.00 | 39.32 | V |
| 142 | 24 | 5 | 5.05 | 36.80 | 42.27 | T |
| 143 | 24 | 6 | 5.05 | 37.10 | 41.58 | T |
| 144 | 25 | 1 | 4.80 | 36.00 | 47.56 | Test |
| 145 | 25 | 2 | 4.88 | 34.40 | 42.95 | T |
| 146 | 25 | 3 | 4.93 | 35.40 | 40.70 | T |
| 147 | 25 | 4 | 4.81 | 34.60 | 41.97 | V |
| 148 | 25 | 5 | 4.92 | 35.20 | 44.33 | T |
| 149 | 25 | 6 | 4.92 | 35.50 | 43.64 | T |
| 150 | 26 | 1 | 5.22 | 41.50 | 50.21 | Test |
| 151 | 26 | 2 | 5.22 | 41.50 | 50.01 | T |
| 152 | 26 | 3 | 5.18 | 40.60 | 49.33 | T |
| 153 | 26 | 4 | 5.22 | 42.00 | 50.01 | V |
| 154 | 26 | 5 | 5.22 | 40.00 | 45.40 | T |
| 155 | 26 | 6 | 5.22 | 41.40 | 50.01 | T |
| 156 | 27 | 1 | 5.00 | 41.00 | 50.41 | Test |
| 157 | 27 | 2 | 4.95 | 41.00 | 52.17 | T |
| 158 | 27 | 3 | 4.92 | 40.80 | 50.80 | T |
| 159 | 27 | 4 | 5.00 | 41.00 | 50.01 | V |
| 160 | 27 | 5 | 4.98 | 40.00 | 49.13 | T |
| 161 | 27 | 6 | 4.99 | 41.20 | 50.80 | T |
| 162 | 28 | 1 | 4.96 | 38.90 | 42.95 | Test |
| 163 | 28 | 2 | 4.96 | 37.30 | 40.99 | T |
| 164 | 28 | 3 | 4.96 | 38.50 | 41.68 | T |
| 165 | 28 | 4 | 4.96 | 38.30 | 40.99 | V |
| 166 | 28 | 5 | 4.96 | 37.30 | 41.19 | T |
| 167 | 28 | 6 | 5.00 | 37.00 | 41.78 | T |
| 168 | 29 | 1 | 4.92 | 36.20 | 39.91 | Test |
| 169 | 29 | 2 | 4.95 | 36.20 | 39.72 | T |
| 170 | 29 | 3 | 4.80 | 37.30 | 38.25 | T |
| 171 | 29 | 4 | 4.80 | 38.10 | 40.40 | V |
| 172 | 29 | 5 | 4.78 | 37.20 | 39.72 | T |
| 173 | 29 | 6 | 4.85 | 35.20 | 39.72 | T |
| 174 | 30 | 1 | 4.77 | 36.10 | 38.83 | Test |
| 175 | 30 | 2 | 4.77 | 37.30 | 41.19 | T |
| 176 | 30 | 3 | 4.77 | 37.10 | 39.72 | T |
| 177 | 30 | 4 | 4.73 | 36.80 | 38.05 | V |
| 178 | 30 | 5 | 4.77 | 37.10 | 39.42 | T |
| 179 | 30 | 6 | 4.88 | 36.10 | 40.21 | T |
| 180 | 31 | 1 | 4.84 | 33.00 | 31.87 | Test |
| 181 | 31 | 2 | 4.75 | 33.90 | 33.05 | T |
| 182 | 31 | 3 | 4.72 | 33.80 | 33.93 | T |
| 183 | 31 | 4 | 4.75 | 34.60 | 33.93 | V |
| 184 | 31 | 5 | 4.72 | 33.30 | 34.81 | T |
| 185 | 31 | 6 | 4.75 | 33.80 | 34.81 | T |
| 186 | 33 | 1 | 4.52 | 30.00 | 26.38 | Test |
| 187 | 33 | 2 | 4.42 | 30.00 | 25.01 | T |
| 188 | 33 | 3 | 4.45 | 30.90 | 26.97 | T |
| 189 | 33 | 4 | 4.45 | 30.40 | 26.28 | V |
| 190 | 33 | 5 | 4.45 | 30.90 | 26.58 | T |
| 191 | 33 | 6 | 4.45 | 30.50 | 25.99 | T |
| 192 | 34 | 1 | 4.52 | 28.30 | 26.09 | Test |
| 193 | 34 | 2 | 4.48 | 30.00 | 26.58 | T |
| 194 | 34 | 3 | 4.45 | 30.00 | 27.46 | T |
| 195 | 34 | 4 | 4.45 | 29.90 | 27.46 | V |
| 196 | 34 | 5 | 4.45 | 30.90 | 27.46 | T |
| 197 | 34 | 6 | 4.45 | 31.00 | 27.46 | T |
| 198 | 35 | 1 | 4.26 | 28.00 | 22.85 | Test |
| 199 | 35 | 2 | 4.28 | 28.30 | 23.14 | T |
| 200 | 35 | 3 | 4.28 | 27.00 | 22.36 | T |
| 201 | 35 | 4 | 4.32 | 28.70 | 23.54 | V |
| 202 | 35 | 5 | 4.32 | 28.20 | 23.34 | T |
| 203 | 35 | 6 | 4.11 | 29.00 | 24.52 | T |
| 204 | 36 | 1 | 4.29 | 27.00 | 22.46 | Test |
| 205 | 36 | 2 | 4.26 | 28.50 | 22.16 | T |
| 206 | 36 | 3 | 4.20 | 28.20 | 21.38 | T |
| 207 | 36 | 4 | 4.29 | 29.60 | 22.85 | V |
| 208 | 36 | 5 | 4.29 | 28.00 | 23.54 | T |
| 209 | 36 | 6 | 4.29 | 28.00 | 23.73 | T |

3.3 Experimental database

An extended and reliable database is a prerequisite for the successful function of any artificial neural network. The database must be capable of training the system; it should contain datasets which cover the whole range of possible values of the parameters influencing the problem under examination. Compiling such a database comes with a number of difficulties. For one, it is impossible for one researcher alone to obtain an amount of experimental data large enough to adequately train the ANN. Another issue is related to the reliability of the available data: the optimum developed network is trained on the database, so if the data are incorrect, the trained system will not be able to predict correct values, confirming the Garbage In, Garbage Out (GIGO) expression. Predictive analytics demands good data. A larger amount of data can be harmful if the data are not correct. Prediction requires, above all, accurate data. The optimization of the ANNs proposed for the prediction of the compressive strength of concrete is based on the experimental datasets presented briefly in the following paragraphs.

An experimental database consisting of experimental datasets available in the literature has been prepared. Namely, the database consists of 209 datasets based on experimental results from the PhD thesis by Logothetis [8]. Leonidas Logothetis, under the supervision of Prof. Theodosios Tassios, in order to support his PhD thesis entitled "Combination of three non-destructive methods for the determination of the strength of concrete" at the National Technical University of Athens, Athens, Greece, prepared and tested 36 batches of cubic concrete specimens. Each of the 36 batches (except one) consisted of 6 specimens. Each specimen was initially measured through non-destructive techniques; first ultrasonic pulse velocity measurements were conducted and then Schmidt hammer rebound tests. After the non-destructive measurements were concluded, each specimen was subjected to a uniaxial compressive test in order to measure its compressive strength. This extended database is presented in detail, for the first time, in Table 3. Based on the above database, each input training vector $p$ is of dimension 1 × 2 and consists of the value of the ultrasonic pulse velocity and the value of rebound. The corresponding output training vectors are of dimension 1 × 1 and consist of the value of the compressive strength of the concrete cubic specimens. Their mean values together with the minimum, maximum values, as well as standard deviation (STD) values are listed in Table 4. Moreover, Fig. 6 demonstrates the frequency histograms of the parameters.

Table 4 The input and output parameters used in the development of BPNNs

| Variable | Unit | Parameter type | Min | Average | Max | STD |
|---|---|---|---|---|---|---|
| Ultrasonic pulse velocity ($V_p$) | km/s | Input | 3.85 | 4.57 | 5.22 | 0.28 |
| Rebound ($R$) | – | Input | 20.00 | 30.18 | 42.00 | 5.01 |
| Concrete compressive strength ($f_c$) | MPa | Output | 12.16 | 27.58 | 52.17 | 9.97 |

Fig. 6 Histograms of the parameters

The main advantages of this database are:

1. A sufficient number of experimental data is present

2. All cores have undergone the same environmental conditions

3. All cores have been built and tested by the same researchers

4. All cores have been built at the same time, complying with the same standards

5. The tests were carried out with the same devices. This is particularly important for the reliability of the database, since it has been observed that for the same concrete compositions significant variations/differences in the measured quantities are recorded when the tests are performed by different laboratories, and

6. As shown in Table 4 and Fig. 6, all measured parameters cover almost all possible cases. Thus, values for ultrasonic pulse velocity $V_p$ range from 3.85 to 5.22 km/s, for rebound ($R$) from 20.00 to 42.00 and for the concrete compressive strength ($f_c$) from 12.16 (light concrete) to 52.17 MPa (usual concrete material).

3.4 Training algorithms

A large set of training functions, such as quasi-Newton, resilient, one-step secant, gradient descent with momentum and adaptive learning rate, and Levenberg–Marquardt back-propagation algorithms, has been investigated for the training phase of the BPNN models. Among these algorithms, the Levenberg–Marquardt algorithm implemented by levmar appears to achieve the best prediction, capturing the nonlinear behavior of concrete compressive strength. It should be noted that the difference between the Levenberg–Marquardt algorithm implemented by levmar and the other algorithms is large [47]. This algorithm appears to be the fastest method for training moderate-sized feed-forward neural networks (up to several hundred weights), as well as for nonlinear problems. It also has an efficient implementation in MATLAB® software: the solution of the matrix equation is a built-in function, and therefore its attributes are enhanced within a MATLAB environment.
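For orientation, the weight update usually associated with the Levenberg–Marquardt method can be written as below. The notation is assumed here for illustration and is not taken from the paper: w is the vector of network weights, J the Jacobian of the network errors with respect to the weights, e the vector of errors, I the identity matrix and μ a damping factor.

```latex
\mathbf{w}_{k+1} = \mathbf{w}_{k} - \left( \mathbf{J}^{T}\mathbf{J} + \mu \mathbf{I} \right)^{-1} \mathbf{J}^{T}\mathbf{e}
```

For large μ the step approaches a short gradient-descent step, whereas for μ close to zero it approaches the Gauss–Newton step. The linear system involving the bracketed matrix is presumably the matrix equation whose solution is mentioned above.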


Table 5 Training parameters of BPNN models

Parameter                             Value
Training algorithm                    Levenberg–Marquardt algorithm
Normalization                         Min–Max in the range 0.10–0.90
Number of hidden layers               1; 2
Number of neurons per hidden layer    1 to 30 by step 1
Control random number generation      rand(seed, generator), where generator ranges from 1 to 10 by step 1
Training goal                         0
Epochs                                250
Cost function                         MSE; SSE
Transfer functions                    Tansig (T); Logsig (L); Purelin (P)

MSE: mean square error; SSE: sum square error
Tansig (T): hyperbolic tangent sigmoid transfer function
Logsig (L): log-sigmoid transfer function
Purelin (P): linear transfer function

Table 6 Cases of NN architectures based on the number of input parameters that were used

                                                              Input parameters
Case   Number of input parameters   Number of hidden layers   Vp    R
I      1                            1                         ✓
II     1                            2                         ✓
III    1                            1                               ✓
IV     1                            2                               ✓
V      2                            1                         ✓     ✓
VI     2                            2                         ✓     ✓

3.5 Normalization of data

The normalization of data is considered to be the most crucial step for any type of problem in the field of soft computing techniques, such as artificial neural networks. This is a pre-processing phase. In the present study, the Min–Max [48] and the ZScore normalization methods have been applied during the pre-processing stage. The two input parameters (Table 4) and the single output parameter have been normalized utilizing the Min–Max normalization method. Iruansi et al. [49] stated that, in order to avoid problems associated with low learning rates of the ANN, the normalization of the data should be made within a range defined by appropriate upper and lower limit values of the corresponding parameter. In the present study, the input and output parameters have been normalized in the range [0.10, 0.90].
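As an illustration of the pre-processing step, the sketch below maps a value linearly onto [0.10, 0.90] and back. The linear formula is the usual Min–Max form and is an assumption here, since the exact expression is not reproduced in this section; the function names are hypothetical, and the minimum and maximum values are those reported in Table 4.

```python
# Illustrative Min-Max scaling to [0.10, 0.90].
# The linear mapping is an assumption; min/max values are taken from Table 4.
TABLE4_RANGES = {
    "Vp": (3.85, 5.22),    # ultrasonic pulse velocity, km/s
    "R": (20.00, 42.00),   # rebound number
    "fc": (12.16, 52.17),  # compressive strength, MPa
}


def minmax_scale(value, vmin, vmax, lo=0.10, hi=0.90):
    """Map value from [vmin, vmax] linearly onto [lo, hi]."""
    return lo + (hi - lo) * (value - vmin) / (vmax - vmin)


def minmax_unscale(scaled, vmin, vmax, lo=0.10, hi=0.90):
    """Inverse of minmax_scale: map a scaled value back to physical units."""
    return vmin + (scaled - lo) * (vmax - vmin) / (hi - lo)


# Example: scale the average pulse velocity of Table 4.
vp_scaled = minmax_scale(4.57, *TABLE4_RANGES["Vp"])
print(round(vp_scaled, 4))
```

Keeping the inverse mapping next to the forward one is convenient, because the network output has to be converted back to MPa with the same limits that were used for scaling.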

3.6 Model validation

Three different statistical parameters were employed to evaluate the performance of the derived NN models, as well as of the formulae available in the literature for the estimation of concrete compressive strength based on non-destructive measurements. Namely, the root mean square error (RMSE), the mean absolute percentage error (MAPE), and the Pearson correlation coefficient R² are used. Lower RMSE and MAPE values represent more accurate prediction results. Higher R² values represent a better fit between the analytical and predicted values. The aforementioned statistical parameters have been calculated by the following expressions [50]:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(x_i - y_i\right)^2} \qquad (4)$$

$$\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{x_i - y_i}{x_i}\right| \qquad (5)$$

$$R^2 = 1 - \left(\frac{\sum_{i=1}^{n}\left(x_i - y_i\right)^2}{\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}\right) \qquad (6)$$

where n denotes the total number of datasets, x_i and y_i represent the predicted and target values, respectively, and x̄ is the mean of the x_i values.
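The three expressions can be sketched in a few lines of code. The example below follows Eqs. (4) to (6) as written, with x the predicted and y the target values; the function names and the sample numbers are hypothetical and are not taken from the database.

```python
import math


def rmse(x, y):
    """Root mean square error, Eq. (4)."""
    n = len(x)
    return math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)) / n)


def mape(x, y):
    """Mean absolute percentage error as a fraction, Eq. (5)."""
    n = len(x)
    return sum(abs((xi - yi) / xi) for xi, yi in zip(x, y)) / n


def r_squared(x, y):
    """R^2 as written in Eq. (6); the mean is taken over x."""
    mean_x = sum(x) / len(x)
    ss_res = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((xi - mean_x) ** 2 for xi in x)
    return 1.0 - ss_res / ss_tot


# Hypothetical predicted (x) and target (y) strengths in MPa.
predicted = [10.0, 20.0, 30.0]
target = [12.0, 18.0, 33.0]
print(rmse(predicted, target), mape(predicted, target), r_squared(predicted, target))
```

Note that Eq. (5) returns a fraction; it has to be multiplied by 100 if a percentage is wanted.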

Furthermore, a new engineering index, the a20-index, is proposed for the reliability assessment of the developed ANN models:

$$a20\text{-index} = \frac{m20}{M} \qquad (7)$$

where M is the number of dataset samples and m20 is the number of samples with a value of the ratio Experimental-value/Predicted-value between 0.80 and 1.20. Note that for a perfect predictive model, the a20-index is expected to be 1. The proposed a20-index has the advantage that its value has a physical engineering meaning: it represents the fraction of the samples whose predicted values deviate by no more than ±20% from the experimental values.

4 Results and discussion

4.1 BPNN model development

In this work, a large number of different BPNN models have been developed and implemented. Each one of these ANN models was trained with 140 data-points out of the total of 209 data-points (66.99% of the total number), and the validation and testing of the trained ANN were performed with the remaining 69 data-points. More specifically, 35 data-points (16.75%) were used for the validation of the trained ANN and 34 data-points (16.27%) were used for the testing.

The architecture of the ANNs comprises a number of hidden layers, ranging from 1 to 2, with a number of neurons ranging from 1 to 30 for each hidden layer. Each one of the ANNs is developed and trained for a number of different activation functions, such as the log-sigmoid transfer function (logsig), the linear transfer function (purelin) and the hyperbolic tangent sigmoid transfer function (tansig) [19, 20, 50–58].

The parameters used for the ANN training are summarized in Table 5. In order to achieve a fair comparison of the predictions of the various ANNs used, the datasets are split into training, validation and testing sets, using appropriate indices to state whether the data belongs to the training, validation or testing set. In the general case, the division of the data-points into the three groups is made randomly.

Table 7 Statistical indexes of the optimum BPNN for each one of the six cases of NN architectures based on the input parameters used (see also Table 6)

Case   Optimum BPNN model   Dataset      R        RMSE     a20-index
I      1-28-1               Training     0.9576   2.8655   0.9429
                            Validation   0.8920   4.4661   0.8000
                            Test         0.9040   4.3668   0.7941
                            All          0.9379   3.4558   0.8947
II     1-21-29-1            Training     0.9616   2.7319   0.9500
                            Validation   0.9019   4.2697   0.8286
                            Test         0.9051   4.3270   0.7941
                            All          0.9423   3.3313   0.9043
III    1-26-1               Training     0.9878   1.5480   0.9857
                            Validation   0.9666   2.5857   0.9714
                            Test         0.9868   1.6845   1.0000
                            All          0.9838   1.7850   0.9856
IV     1-21-14-1            Training     0.9911   1.3281   0.9929
                            Validation   0.9683   2.5026   0.9714
                            Test         0.9827   1.9120   0.9706
                            All          0.9857   1.6808   0.9856
V      2-25-1               Training     0.9929   1.1874   1.0000
                            Validation   0.9821   1.8948   1.0000
                            Test         0.9816   1.9344   1.0000
                            All          0.9891   1.4678   1.0000
VI     2-20-14-1            Training     0.9938   1.1094   1.00
                            Validation   0.9849   1.7168   0.9714
                            Test         0.9803   2.0015   0.9412
                            All          0.9900   1.4035   0.9856
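The a20-index of Eq. (7), which is reported alongside R and RMSE in Table 7, can be computed as in the following sketch. The function name and the sample values are hypothetical; only the 0.80 to 1.20 band on the ratio of experimental to predicted value comes from the definition in the text.

```python
def a20_index(experimental, predicted):
    """Fraction of samples with 0.80 <= experimental/predicted <= 1.20, Eq. (7)."""
    if len(experimental) != len(predicted) or not experimental:
        raise ValueError("inputs must be non-empty and of equal length")
    m20 = sum(
        1 for e, p in zip(experimental, predicted) if 0.80 <= e / p <= 1.20
    )
    return m20 / len(experimental)


# Hypothetical strengths in MPa: three of the four ratios fall inside the band.
experimental = [20.0, 30.0, 40.0, 25.0]
predicted = [22.0, 24.0, 41.0, 25.0]
print(a20_index(experimental, predicted))  # prints 0.75
```

Because the index is a simple count ratio, tabulated values can be read back as counts: with the 34 test data-points mentioned above, an a20-index of 0.7941 corresponds to 27 of 34 samples falling inside the ±20% band.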

Fig. 7 Architecture of the optimum 2-25-1 BPNN model with one hidden layer and two input parameters: the value of the ultrasonic pulse velocity Vp and the value of the rebound (R)

Fig. 8 Architecture of the optimum 1-26-1 BPNN model with one hidden layer and one input parameter, the value of the rebound (R). Hidden layer: hyperbolic tangent sigmoid transfer function; output layer: hyperbolic tangent sigmoid transfer function

Fig. 9 Architecture of the optimum 1-28-1 BPNN model with one hidden layer and one input parameter, the value of the ultrasonic pulse velocity Vp. Hidden layer: hyperbolic tangent sigmoid transfer function; output layer: linear transfer function

4.2 Optimum proposed NN model(s)

Based on the above, a total of 1,474,200 different BPNN models have been developed and investigated in order to find the optimum NN model for the prediction of the compressive strength of concrete. Namely, six different structures of ANNs (Table 6) have been developed, based on the combinations of the use of 1 or 2 hidden layers and the use of only the ultrasonic pulse velocity, only the rebound, or both of them as input parameters.

The developed ANN models were ranked based on the RMSE value, and the optimum for each of the six cases is presented in Table 7. Based on this ranking, the optimum BPNN model in terms of the RMSE index for the prediction of the compressive strength is the 2-25-1 model, which corresponds to a NN architecture with two input parameters, the value of the ultrasonic pulse velocity and the value of the rebound, and one hidden layer (Fig. 7). As presented in Fig. 7, the transfer function is the hyperbolic tangent sigmoid for both the hidden and the output layer. In Table 7, the values of the statistical indexes R and RMSE and the value of the proposed engineering index, the a20-index, are presented. At this point, it is worth noting that all three optimal neural networks are useful (Figs. 7, 8, 9), because one may be able to measure only the ultrasonic pulse velocity, only the rebound, or both. Depending on the available data, one uses the corresponding neural network. In particular, where measurements are only available for the rebound method, the optimal neural network is 1-26-1 (Fig. 8), whereas if only ultrasound measurements are available, it is suggested to use 1-28-1 (Fig. 9).
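The total of 1,474,200 models quoted in this section is consistent with the search space of Table 5 under one plausible enumeration, sketched below. The enumeration itself is an assumption and is not stated in the text: one transfer function is chosen per hidden layer and one for the output layer, every combination is trained for each of the 10 random generator values and both cost functions, and the whole search is repeated for the three input configurations (Vp only, R only, both).

```python
# Search-space sizes taken from Table 5; how they combine is an assumption.
NEURON_CHOICES = 30      # 1 to 30 neurons per hidden layer
TRANSFER_FUNCTIONS = 3   # tansig, logsig, purelin
SEEDS = 10               # random generator values 1 to 10
COST_FUNCTIONS = 2       # MSE, SSE
INPUT_CASES = 3          # Vp only, R only, Vp and R


def count_models(hidden_layers):
    """Number of candidate networks with the given number of hidden layers."""
    layer_sizes = NEURON_CHOICES ** hidden_layers
    # Assumed: one transfer function per hidden layer plus one for the output layer.
    transfer_combos = TRANSFER_FUNCTIONS ** (hidden_layers + 1)
    return layer_sizes * transfer_combos * SEEDS * COST_FUNCTIONS


per_input_case = count_models(1) + count_models(2)
total = INPUT_CASES * per_input_case
print(per_input_case, total)  # prints 491400 1474200
```

Under these assumptions the two-hidden-layer architectures account for 486,000 of the 491,400 candidates per input configuration, so they dominate the cost of the search.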


Fig. 11 Experimental versus predicted values of the concrete compressive strength for the test datasets

Fig. 10 Experimental versus predicted values of compressive strength for the training and test process

Figure 10 compares the experimental values with the values predicted by the optimum BPNN model for the training and test datasets. It clearly shows that the proposed optimum 2-25-1 BPNN reliably predicts the compressive strength of concrete materials. It is worth noting that all samples used for the testing process have a deviation of less than ±20% (points between the two dotted lines in Fig. 10). The same conclusion is drawn from Figs. 11 and 12, where the experimental values are compared with the corresponding values provided by the proposed optimum 2-25-1 NN model for the test datasets.

Fig. 12 Experimental versus predicted values of the concrete compressive strength for the test datasets

4.3 Comparisons

In Table 8, the 14 bibliographic suggestions presented in Table 2, as well as the results of the proposed neural network, are ranked in descending order of the a20-index, with the corresponding RMSE values listed alongside.

Additionally, Fig. 13 presents the ratio of the predicted to the experimental value of the compressive strength of the concrete for the 6 proposals that give the best results compared to the experimental values. Based on this classification, the proposed neural network ranks first, followed by the three Logothetis proposals (1978) and by Kheder's two proposals (1999).

Table 8 Ranking of mathematical models for the estimation of concrete compressive strength based on the value of the proposed engineering index a20

Ranking   Mathematical model   Parameters   References            R        RMSE     a20-index (%)
1         ANN 2-25-1           Vp, R        Proposed herein       0.9891   1.4678   100.00
2         E7                   R            Logothetis [8]        0.9521   2.18     96.65
3         E10                  Vp, R        Logothetis [8]        0.9342   4.19     86.60
4         E4                   Vp           Logothetis [8]        0.8198   4.23     80.86
5         E14                  Vp, R        Kheder [9]            0.9759   5.15     72.73
6         E8                   R            Kheder [9]            0.9745   5.90     66.99
7         E9                   R            Qasrawi [10]          0.9732   5.56     64.59
8         E3                   Vp           Trtnik et al. [2]     0.8119   7.71     60.77
9         E2                   Vp           Nash't et al. [7]     0.9032   6.59     53.59
10        E13                  Vp, R        Erdal [13]            0.9421   7.09     51.20
11        E12                  Vp, R        Amini et al. [12]     0.8961   8.27     49.28
12        E5                   Vp           Kheder [9]            0.8942   7.46     45.45
13        E11                  Vp, R        Arioglu et al. [11]   0.9535   8.13     43.06
14        E6                   Vp           Qasrawi [10]          0.8893   12.24    19.14
15        E1                   Vp           Turgut [6]            0.9035   12.84    13.88

Moreover, Fig. 14 presents the distribution of the compressive strength of the concrete with respect to the rebound, as it results from the proposed NN model 1-26-1 (the case with only one input parameter, the value of the rebound), the Logothetis experimental results [8] and the Logothetis E7 relationship [8]. Similarly, Fig. 15 presents the distribution of the compressive strength of the concrete with respect to the ultrasonic pulse velocity, as it results from the proposed NN model 1-28-1 (the case with only one input parameter, the value of the ultrasonic pulse velocity), the Logothetis experimental results [8] and the Logothetis E4 relationship [8]. From Figs. 14 and 15, it can be seen that the proposed models approximate the experimental results better, which supports the reliability of the presented method.

5 Final values of weights and bias of the NN models

Even though it is common practice for authors to present the architecture of an optimum NN model without any information related to the final values of the NN weights, it must be stressed that any architecture which does not present these values is of limited assistance to other researchers and practicing engineers. If, on the other hand, a proposed NN architecture is accompanied by the (quantitative) values of weights, it can be of great use, making it possible for the NN model to be readily implemented in an MS-Excel file and thus available to anyone interested in modeling issues.

With this in mind, the final values of the weights and biases are stated in Table 9. Based on Figs. 7, 8 and 9, by employing the properties defined in Table 4 and applying the weights and bias values between the different layers of the ANN, it is possible to estimate the predicted value of the concrete compressive strength.
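The calculation described above amounts to a forward pass through the network. The sketch below shows it for a network with two inputs, one tansig hidden layer and a tansig output, in the layout of Table 9 (IW, B1, LW, B2). Several points are assumptions rather than statements from the paper: inputs and output are scaled with the Min–Max limits of Table 4 onto [0.10, 0.90], no additional internal mapping of the training software is applied, and the function names are hypothetical. The weights in the example are made up for illustration and are NOT the Table 9 values.

```python
import math

# Min and max values from Table 4, used for scaling (assumed pre/post-processing).
RANGES = {"Vp": (3.85, 5.22), "R": (20.00, 42.00), "fc": (12.16, 52.17)}


def scale(value, vmin, vmax, lo=0.10, hi=0.90):
    """Min-Max scaling onto [lo, hi]."""
    return lo + (hi - lo) * (value - vmin) / (vmax - vmin)


def unscale(scaled, vmin, vmax, lo=0.10, hi=0.90):
    """Inverse of scale."""
    return vmin + (scaled - lo) * (vmax - vmin) / (hi - lo)


def predict_fc(vp, r, iw, b1, lw, b2):
    """Forward pass of a 2-H-1 network; tansig(x) is identical to tanh(x).

    iw: H rows [w_vp, w_r]; b1: H hidden biases; lw: H output weights; b2: output bias.
    """
    p = [scale(vp, *RANGES["Vp"]), scale(r, *RANGES["R"])]
    hidden = [math.tanh(row[0] * p[0] + row[1] * p[1] + b) for row, b in zip(iw, b1)]
    out = math.tanh(sum(w * h for w, h in zip(lw, hidden)) + b2)
    return unscale(out, *RANGES["fc"])


# Hypothetical 2-3-1 weights for illustration only (not the Table 9 values).
IW = [[0.5, -0.3], [-0.8, 0.6], [0.2, 0.9]]
B1 = [0.1, -0.2, 0.05]
LW = [0.4, -0.5, 0.7]
B2 = 0.1
print(predict_fc(4.57, 30.18, IW, B1, LW, B2))
```

The same four arrays map directly onto spreadsheet ranges, which is the reason the text stresses publishing the numerical weights and not only the architecture.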

6 Limitations

The (two) proposed ANN models can be applied only when the researcher or the practitioner has the experimental values of both the ultrasonic pulse velocity and the Schmidt hammer rebound tests (or, for the rebound-only model, the experimental value of the Schmidt hammer rebound test). It should be stressed that the neural network models can be reliably applied only for parameter values ranging between the lowest and highest values of each parameter (as presented in Table 4); otherwise, the predicted value is unreliable.
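In practice, this limitation can be enforced with a simple range check before a model is used. The following sketch is one possible guard; the function name is hypothetical and the limits are the input ranges of Table 4.

```python
# Input ranges from Table 4 (min, max).
TABLE4_INPUT_RANGES = {"Vp": (3.85, 5.22), "R": (20.00, 42.00)}


def out_of_range_inputs(**measurements):
    """Return the names of the measurements that fall outside their Table 4 range."""
    problems = []
    for name, value in measurements.items():
        vmin, vmax = TABLE4_INPUT_RANGES[name]
        if not vmin <= value <= vmax:
            problems.append(name)
    return problems


print(out_of_range_inputs(Vp=4.50, R=30.0))  # prints []
print(out_of_range_inputs(Vp=5.50, R=19.0))  # prints ['Vp', 'R']
```

A prediction would be reported only when the returned list is empty; otherwise the network is being asked to extrapolate beyond the data it was trained on.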

Fig. 13 Comparison of the proposed NN model with analytical formulae available in the literature for the estimation of concrete compressive strength

Fig. 14 Concrete compressive strength versus rebound

Fig. 15 Concrete compressive strength versus ultrasonic pulse velocity

Table 9 Final values of weights and bias of the optimum NN models

       NN 1-26-1                                        NN 2-25-1
       IW{1,1}    LW{2,1}   B{1,1}     B{2,1}           IW{1,1}             LW{2,1}   B{1,1}    B{2,1}
No.    (26×1)     (1×26)    (26×1)     (1×1)            (25×2)              (1×25)    (25×1)    (1×1)
1      -36.4000   -0.3491    36.4000   0.5706           -6.9186   -1.0643   -0.4535   -7.0000   0.8432
2       36.4000    0.3117   -33.4880                    -4.3197    5.5082    0.2887    6.4167
3      -36.4000    0.4148    30.5760                     1.7406    6.7801   -0.2997    5.8333
4      -36.4000    0.3779    27.6640                    -5.4113   -4.4405   -0.2244    5.2500
5       36.4000    0.0993   -24.7520                     4.0614    5.7013    0.3137    4.6667
6       36.4000   -0.0521   -21.8400                     6.9743   -0.5995   -0.1784   -4.0833
7      -36.4000    0.2398    18.9280                     5.7002   -4.0630   -0.1387    3.5000
8      -36.4000   -0.2703    16.0160                     3.2325    6.2089    0.2014    2.9167
9      -36.4000    0.0938    13.1040                    -3.2466   -6.2016    0.2474    2.3333
10      36.4000   -0.0265   -10.1920                     6.9728    0.6166    0.4585    1.7500
11     -36.4000   -0.0293     7.2800                    -4.1596   -5.6301   -0.4624    1.1667
12      36.4000    0.1486    -4.3680                     2.2763   -6.6195    0.2185    0.5833
13     -36.4000   -0.3656     1.4560                    -1.5137   -6.8344    0.3554    0.0000
14     -36.4000   -0.3397    -1.4560                    -0.5814   -6.9758    0.1435    0.5833
15     -36.4000    0.3776    -4.3680                     2.5492    6.5193   -0.1393    1.1667
16      36.4000   -0.2611     7.2800                    -6.9866    0.4336    0.1694   -1.7500
17     -36.4000   -0.2352   -10.1920                    -4.8254    5.0711    0.0915   -2.3333
18     -36.4000   -0.3490   -13.1040                     5.8421   -3.8561   -0.2345    2.9167
19     -36.4000   -0.2410   -16.0160                    -5.8799    3.7982    0.3753    3.5000
20      36.4000    0.4441    18.9280                    -4.0396   -5.7168    0.4255    4.0833
21      36.4000   -0.1849    21.8400                    -6.8016   -1.6549   -0.1955   -4.6667
22      36.4000    0.2360    24.7520                    -6.6740    2.1113   -0.2942   -5.2500
23      36.4000   -0.0627    27.6640                     5.4924   -4.3397   -0.1410   -5.8333
24     -36.4000    0.2329   -30.5760                    -5.7825   -3.9450   -0.2063   -6.4167
25     -36.4000    0.3581   -33.4880                     5.1135    4.7804    0.2007   -7.0000
26      36.4000   -0.2865    36.4000

IW{1,1} = matrix of weight values between the input layer and the hidden layer; LW{2,1} = matrix of weight values between the hidden layer and the output layer (layer 2); the LW{2,1} values are listed column-wise
B{1,1} = bias values for the hidden layer; B{2,1} = bias values for the output layer

7 Conclusions

A plethora of works based on non-destructive methods for the estimation of concrete compressive strength has been presented on the basis of conventional computational techniques, such as multiple regression analysis. However, the issue of the estimation of concrete compressive strength is still open, because the formulae available in the literature exhibit a large dispersion of the values they estimate, as well as a significant deviation from the actual (experimental) value of the compressive strength of the concrete.

In the present work, based on a large experimental database consisting of datasets from non-destructive tests and compressive strength tests performed on the respective concrete cores, three different optimum ANN models are proposed for the estimation of concrete compressive strength. Namely, one optimum ANN model for the case of using only rebound test measurements, one for the case of using only ultrasonic pulse velocity measurements and one for the case where both ultrasonic and rebound measurements are available are developed and presented. The comparison of the derived results with experimental findings, as well as with analytical formulae available in the literature, demonstrates the promising potential of using back-propagation neural networks for the reliable and robust approximation of the compressive strength of concrete based on measurements from non-destructive techniques. Also, the proposed NN models can be continuously re-trained with new data, so that they can conveniently adapt to new data and expand the range of suitability of the ANN.

Using the architecture of the proposed optimum neural network and the resulting final values of the weights (see supplementary materials), a useful tool is developed for researchers and engineers, as well as for supporting the teaching and interpretation of the relationship between non-destructive testing results and compressive strength values.

Compliance with ethical standards

Conflict of interest The authors confirm that this article content has

no conflict of interest.

References

1. Bungey JH, Millard SG (1996) Testing of concrete in structures,

3rd edn. Blackie Academic & Professional, London

2. Trtnik G, Kavčič F, Turk G (2009) Prediction of concrete strength

using ultrasonic pulse velocity and artificial neural networks.

Ultrasonics 49(1):53–60

3. ASTM C 597-83 (1991) Test for pulse velocity through concrete.

ASTM, West Conshohocken

4. BS 1881-203 (1986) Recommendations for measurement of

velocity of ultrasonic pulses in concrete. BSI, London

5. Whitehurst EA (1951) Soniscope tests concrete structures. J Am

Concr Inst 47(2):433–444

6. Turgut P (2004) Evaluation of the ultrasonic pulse velocity data

coming on the field. In: Fourth international conference on NDE

in relation to structural integrity for nuclear and pressurised

components, London, 2004, pp 573–578


7. Nash’t IH, A’bour SH, Sadoon AA (2005) Finding an unified

relationship between crushing strength of concrete and non-destructive tests. In: Middle East nondestructive testing conference

and exhibition, 27–30 Nov 2005 Bahrain, Manama

8. Logothetis LA (1978) Combination of three non-destructive

methods for the determination of the strength of concrete, Ph.D.

thesis, National Technical University of Athens, Athens, Greece

9. Kheder GF (1999) A two stage procedure for assessment of

in situ concrete strength using combined non-destructive testing.

Mater Struct 32:410–417

10. Qasrawi HY (2000) Concrete strength by combined nondestructive methods Simply and reliably predicted. Cem Concr Res

30:739–746

11. Arioglu E, Manzak O (1991) Application of ‘‘Sonreb’’ method to

concrete samples produced in yedpa construction site. Prefabrication Union, 5–12 (in Turkish)

12. Amini K, Jalalpour M, Delatte N (2016) Advancing concrete

strength prediction using non-destructive testing: development

and verification of a generalizable model. Constr Build Mater

102:762–768

13. Erdal M (2009) Prediction of the compressive strength of vacuum

processed concretes using artificial neural network and regression

techniques. Sci Res Essay 4(10):1057–1065

14. Mohammed TU, Rahman MN (2016) Effect of types of aggregate

and sand-to-aggregate volume ratio on UPV in concrete. Constr

Build Mater 125:832–841

15. Alwash M, Breysse D, Sbartaı̈ ZM (2015) Non-destructive

strength evaluation of concrete: analysis of some key factors

using synthetic simulations. Constr Build Mater 99(7179):

235–245

16. Alwash M (2017) Assessment of concrete strength in existing

structures using nondestructive tests and cores: analysis of current

methodology and recommendations for more reliable assessment,

Ph.D. thesis, Université de Bordeaux

17. Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw

2:359–366

18. Asteris PG, Kolovos KG, Douvika MG, Roinos K (2016) Prediction of self-compacting concrete strength using artificial neural networks. Eur J Environ Civ Eng 20:s102–s122

19. Asteris PG, Tsaris AK, Cavaleri L, Repapis CC, Papalou A, Di

Trapani F, Karypidis DF (2016) Prediction of the fundamental

period of infilled rc frame structures using artificial neural networks. Comput Intell Neurosci 2016:5104907

20. Asteris PG, Roussis PC, Douvika MG (2017) Feed-forward

neural network prediction of the mechanical properties of sandcrete materials. Sensors (Switzerland) 17(6):1344

21. Asteris PG, Moropoulou A, Skentou AD, Apostolopoulou M, Mohebkhah A, Cavaleri L, Rodrigues H, Varum H (2019) Stochastic vulnerability assessment of masonry structures: concepts, modeling and restoration aspects. Appl Sci 9(2):243

22. Psyllaki P, Stamatiou K, Iliadis I, Mourlas A, Asteris P, Vaxevanidis N (2018). Surface treatment of tool steels against galling

failure. In: MATEC web of conferences, 188, No 4024

23. Kotsovou GM, Cotsovos DM, Lagaros ND (2017) Assessment of

RC exterior beam-column joints based on artificial neural networks and other methods. Eng Struct 144:1–18

24. Ahmad A, Kotsovou G, Cotsovos DM, Lagaros ND (2018)

Assessing the accuracy of RC design code predictions through the

use of artificial neural networks. Int J Adv Struct Eng

10(4):349–365

25. Momeni E, Jahed Armaghani D, Hajihassani M, Mohd Amin MF

(2015) Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial

neural networks. Meas J Int Meas Confed 60:50–63


26. Momeni E, Nazir R, Jahed Armaghani D, Maizir H (2014) Prediction of pile bearing capacity using a hybrid genetic algorithm-based ANN. Meas J Int Meas Confed 57:122–131

27. Bunawan AR, Momeni E, Armaghani DJ, Nissa Binti Mat Said

K, Rashid ASA (2018) Experimental and intelligent techniques to

estimate bearing capacity of cohesive soft soils reinforced with

soil-cement columns. Meas J Int Meas Confed 124:529–538

28. Wang G-G, Guo L, Gandomi AH, Hao G-S, Wang H (2014)

Chaotic krill herd algorithm. Inf Sci 274:17–34

29. Wang G, Guo L, Duan H, Wang H, Liu L, Shao M (2013)

Hybridizing harmony search with biogeography based optimization for global numerical optimization. J Comput Theor

Nanosci 10(10):2312–2322

30. Wang G, Guo L, Wang H, Duan H, Liu L, Li J (2014) Incorporating mutation scheme into krill herd algorithm for global

numerical optimization. Neural Comput Appl 24(3–4):853–871

31. Asteris PG, Kolovos KG (2018) Self-compacting concrete

strength prediction using surrogate models. Neural Comput Appl.

https://doi.org/10.1007/s00521-017-3007-7

32. McCulloch WS, Pitts W (1943) A logical calculus of the ideas

immanent in nervous activity. Bull Math Biophys 5(4):115–133

33. Rosenblatt F (1958) The perceptron: a probabilistic model for

information storage and organization in the brain. Psychol Rev

65(6):386–408

34. Minsky M, Papert S (1969) Perceptrons: an introduction to computational geometry. MIT Press, Cambridge. ISBN 0-262-63022-2

35. Ackley DH, Hinton GE, Sejnowski TJ (1985) A learning algorithm for Boltzmann machines. Cognit Sci 9(1):147–169

36. Fukushima K (1988) Neocognitron: a hierarchical neural network

capable of visual pattern recognition. Neural Netw 1(2):119–130

37. LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

38. Hinton GE, Osindero S, Teh Y-W (2006) A fast learning algorithm for deep belief nets. Neural Comput 18(7):1527–1554

39. Widrow B, Lehr MA (1990) 30 Years of adaptive neural networks: perceptron, madaline, and backpropagation. Proc IEEE

78(9):1415–1442

40. Cheng B, Titterington DM (1994) Neural networks: a review

from a statistical perspective. Stat Sci 9(1):2–30

41. Ripley BD (1996) Pattern recognition and neural networks.

Cambridge University Press, Cambridge

42. Zhang G, Eddy Patuwo BY, Hu M (1998) Forecasting with

artificial neural networks: the state of the art. Int J Forecast

14(1):35–62

43. Schmidhuber J (2015) Deep learning in neural networks: an

overview. Neural Netw 61:85–117

44. LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature

521(7553):436–444

45. Bartlett PL (1998) The sample complexity of pattern classification with neural networks: the size of the weights is more

important than the size of the network. IEEE Trans Inf Theory

44(2):525–536

46. Karlik B, Olgac AV (2011) Performance analysis of various

activation functions in generalized MLP architectures of neural

networks. Int J Artif Intell Expert Syst 1:111–122


47. Lourakis MIA (2005) A brief description of the Levenberg–Marquardt algorithm implemented by levmar. Institute of Computer Science, Foundation for Research and Technology - Hellas (FORTH), Heraklion

48. Delen D, Sharda R, Bessonov M (2006) Identifying significant

predictors of injury severity in traffic accidents using a series of

artificial neural networks. Accid Anal Prev 38:434–444

49. Iruansi O, Guadagnini M, Pilakoutas K, Neocleous K (2010)

Predicting the shear strength of RC beams without stirrups using

bayesian neural network. In: Proceedings of the 4th international

workshop on reliable engineering computing, robust design—

coping with hazards, risk and uncertainty, Singapore, 3–5 March

2010

50. Asteris PG, Nozhati S, Nikoo M, Cavaleri L, Nikoo M (Article in

Press) Krill herd algorithm-based neural network in structural

seismic reliability evaluation. Mech Adv Mater Struct 26(13):

1146–1153. https://doi.org/10.1080/15376494.2018.1430874

51. Apostolopoulou M, Armaghani DJ, Bakolas A, Douvika MG, Moropoulou A, Asteris PG (2019) Compressive strength of natural hydraulic lime mortars using soft computing techniques. Procedia Structural Integrity 17:914–923

52. Cavaleri L, Chatzarakis GE, Di Trapani FD, Douvika MG, Roinos K, Vaxevanidis NM, Asteris PG (2017) Modeling of surface

roughness in electro-discharge machining using artificial neural

networks. Adv Mater Res (South Korea) 6(2):169–184

53. Armaghani DJ, Hatzigeorgiou GD, Karamani Ch, Skentou A, Zoumpoulaki I, Asteris PG (2019) Soft computing-based techniques for concrete beams shear strength. Procedia Structural Integrity 17:924–933

54. Apostolopoulou M, Douvika MG, Kanellopoulos IN, Moropoulou A, Asteris PG (2018) Prediction of compressive strength

of mortars using artificial neural networks. In: 1st international

conference TMM_CH, transdisciplinary multispectral modelling

and cooperation for the preservation of cultural heritage, 10–13

October, 2018, Athens, Greece

55. Asteris PG, Argyropoulos I, Cavaleri L, Rodrigues H, Varum H,

Thomas J, Lourenço PB (2018) Masonry compressive strength

prediction using artificial neural networks. In: 1st International

conference TMM_CH, transdisciplinary multispectral modelling

and cooperation for the preservation of cultural heritage, 10–13