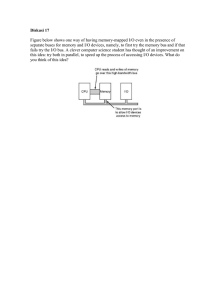

3.1. General categories of functions specified by computer instructions: processor-memory data transfer, processor-I/O data transfer, data processing, and control.

3.2. States of the instruction cycle:

1. Instruction address calculation: Determine the address of the next instruction to be executed.
2. Instruction fetch: Read the instruction from its memory location into the processor.
3. Instruction operation decoding: Analyze the instruction to determine the type of operation to be performed and the operands to be used.
4. Operand address calculation: If the operation involves a reference to an operand in memory or available via I/O, determine the address of the operand.
5. Operand fetch: Fetch the operand from memory or read it in from I/O.
6. Data operation: Perform the operation indicated in the instruction.
7. Operand store: Write the result into memory or out to I/O.

3.3. Two approaches to dealing with multiple interrupts:

Disabling interrupts: The processor can, and will, ignore interrupt requests while interrupts are disabled. Those interrupts remain pending and are checked once the processor has re-enabled interrupts.

Interrupt priorities: Priorities are assigned to the different types of interrupts. An interrupt service routine (ISR) with a higher priority can interrupt one with a lower priority, in which case the state of the lower-priority ISR is put on the stack until the higher-priority ISR has completed.

3.4. Types of transfer that a computer's interconnection structure (e.g., a bus) must support:

1. Memory to processor: The processor reads an instruction or a unit of data from memory.
2. Processor to memory: The processor writes a unit of data to memory.
3. I/O to processor: The processor reads data from an I/O device via an I/O module.
4. Processor to I/O: The processor sends data to the I/O device.
5. I/O to or from memory: For these two cases, an I/O module is allowed to exchange data directly with memory, without going through the processor, using direct memory access (DMA).

3.5. A multiple-bus architecture offers several benefits compared to a single-bus architecture:

1. Increased bandwidth: Multiple buses allow concurrent data transfers. Because data and instructions are divided across several buses, more information can be transferred at the same time, which raises the overall bandwidth of the system.
2. Reduced bottlenecks: In a single-bus architecture, all data transfers share the same bus, which creates a potential bottleneck. With multiple buses, different types of traffic can be assigned to different buses, reducing contention and congestion.
3. Improved scalability: As more components or devices are added to the system, additional buses can be introduced to carry the increased traffic, so that no single bus becomes overcrowded.
4. Enhanced parallelism: Multiple buses allow different components to communicate and operate simultaneously, which can lead to faster execution of tasks.
5. Enhanced fault tolerance: A fault in one bus can be isolated while the other buses remain operational. In a single-bus architecture, a failure of the bus could bring the entire system to a halt.
6. Support for complex systems: Multiple-bus architectures are particularly beneficial in complex systems, such as multiprocessor systems or large-scale servers, where they help manage the communication demands between numerous components.
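
As a rough illustration of the instruction cycle states listed under 3.2, the following Python sketch steps a toy accumulator machine through fetch, decode, operand fetch, data operation, and operand store. Everything in it is an assumption made for this example and not part of the original answers: the four opcodes, the `(Op, addr)` instruction encoding, direct addressing, and the `run` function itself.

```python
from enum import Enum


class Op(Enum):
    """Hypothetical opcodes for a toy accumulator machine."""
    HALT = 0
    LOAD = 1   # AC <- memory[addr]
    ADD = 2    # AC <- AC + memory[addr]
    STORE = 3  # memory[addr] <- AC


def run(memory):
    """Execute the program in `memory`, starting at address 0.

    Instructions are (Op, addr) tuples; data words are plain integers.
    Returns the final accumulator value and a trace of executed opcodes.
    """
    pc = 0      # program counter
    ac = 0      # accumulator
    trace = []
    while True:
        # 1. Instruction address calculation: the PC holds the address.
        # 2. Instruction fetch: read the instruction from memory.
        instruction = memory[pc]
        pc += 1  # next instruction is assumed to be sequential

        # 3. Instruction operation decoding.
        op, addr = instruction
        trace.append(op.name)
        if op is Op.HALT:
            break

        # 4. Operand address calculation: direct addressing is assumed,
        #    so the address field is already the effective address.
        # 5. Operand fetch (only for instructions that read memory).
        operand = memory[addr] if op in (Op.LOAD, Op.ADD) else None

        # 6. Data operation and 7. operand store.
        if op is Op.LOAD:
            ac = operand
        elif op is Op.ADD:
            ac = ac + operand
        elif op is Op.STORE:
            memory[addr] = ac
    return ac, trace


# Program: memory[6] <- memory[4] + memory[5]
memory = [
    (Op.LOAD, 4),
    (Op.ADD, 5),
    (Op.STORE, 6),
    (Op.HALT, 0),
    3,  # address 4: first operand
    2,  # address 5: second operand
    0,  # address 6: result
]
ac, trace = run(memory)
print(memory[6])  # 5
```

A real processor would not visit every state for every instruction; here, for instance, `STORE` skips the operand fetch and `HALT` stops right after decoding.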