Image processing

Nestor Arana Arexolaleiba

narana@mondragon.edu

https://www.linkedin.com/in/nestor-arana-arexolaleiba-26180b8/

2023

Image Loading

Loading Image Dataset – Datasets

Classification

…

Detection or segmentation

Custom

Video classification and prediction

Stereo images

Image captioning

PyTorch: https://pytorch.org/vision/stable/datasets.html

03.10.23

Mondragon Unibertsitatea


Loading Image Dataset

• The MS COCO (Microsoft Common Objects in Context) dataset consists of 328K images. It contains annotations for object detection, keypoint detection, panoptic segmentation, stuff segmentation, image captioning, and dense human pose estimation (DensePose).

https://cocodataset.org/


Loading Image Dataset

• ImageNet is one of the most popular image databases, with more than 14 million hand-annotated images.

https://medium.com/@704/first-summary-imagenet-classification-606c904ecd86

https://www.image-net.org/update-mar-11-2021.php


Loading Image Dataset

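• MNIST, a database of 70,000 grayscale 28×28 images of handwritten digits (60,000 training and 10,000 test images): https://en.wikipedia.org/wiki/MNIST_database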


Loading Image Dataset

• The CIFAR-10 and CIFAR-100 datasets are labeled subsets of the 80 million tiny images dataset, collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton.

• The CIFAR-10 dataset consists of 60,000 32×32 color images in 10 classes, with 6,000 images per class.

• There are 50,000 training images and 10,000 test images.

• CIFAR-100 consists of 100 classes containing 600 images each. There are 500 training images and 100 test images per class.

https://www.cs.toronto.edu/~kriz/cifar.html


Loading Image Dataset

• Torchvision provides many built-in datasets in the torchvision.datasets module, as well as utility classes for building your own datasets.

import torchvision
from torchvision import transforms

transform = transforms.ToTensor()  # convert PIL images to tensors

dataset = torchvision.datasets.MNIST('./data', train=True, download=True,
                                     transform=transform)
dataset = torchvision.datasets.CIFAR10('./data', train=True, download=True,
                                       transform=transform)
# ImageFolder reads local folders, so it has no train/download arguments
dataset = torchvision.datasets.ImageFolder('path/to/data', transform=transform)


Exercise

• Check the result with the code M.6.0.

• What happens if we use shuffle=True?
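To see what `shuffle=True` changes, here is a minimal pure-Python sketch of how a loader could split dataset indices into batches. `make_batches` is a hypothetical helper for illustration, not how `torch.utils.data.DataLoader` is implemented. Without shuffling, every epoch yields the same batches in dataset order. With shuffling, the indices are permuted (the DataLoader does this anew each epoch), so every sample is still seen exactly once per epoch, but the batch contents and their order change.

```python
import random


def make_batches(num_samples, batch_size, shuffle=False, seed=None):
    """Split indices 0..num_samples-1 into batches, optionally shuffled.

    Hypothetical helper that mimics what a data loader's sampler does;
    it is not torch.utils.data.DataLoader itself.
    """
    indices = list(range(num_samples))
    if shuffle:
        # Permute the indices: same samples, different order and grouping.
        random.Random(seed).shuffle(indices)
    # The last batch is smaller when num_samples % batch_size != 0.
    return [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]


print(make_batches(10, 4))  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(make_batches(10, 4, shuffle=True, seed=0))
```

Shuffling matters for training because batches drawn in dataset order can be unrepresentative, for example when a dataset is stored sorted by class.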


Data Augmentation

• A common strategy for training neural networks is to introduce randomness into the input data itself. For example, you can randomly

• rotate,

• mirror,

• scale and/or

• crop

images during training.

• This helps your network generalize, because it sees the same images in different locations, at different sizes, in different orientations, etc.

# Data augmentation
transform = transforms.Compose([
    transforms.Resize(32),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor()])

https://pytorch.org/vision/stable/transforms.html
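`transforms.RandomHorizontalFlip()` mirrors an image left to right with probability `p` (0.5 by default). The pure-Python sketch below imitates that behavior on an image stored as a list of pixel rows. `hflip` and `random_hflip` are hypothetical stand-ins used only to illustrate the idea. They are not torchvision's code.

```python
import random


def hflip(image):
    """Mirror an image (list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def random_hflip(image, p=0.5, rng=random):
    """Flip with probability p; otherwise return the image unchanged."""
    return hflip(image) if rng.random() < p else image


# A tiny 3x4 "image" whose bright pixels (9) sit on the left edge.
img = [
    [9, 0, 0, 0],
    [9, 0, 0, 0],
    [9, 9, 9, 0],
]
print(hflip(img))  # [[0, 0, 0, 9], [0, 0, 0, 9], [0, 9, 9, 9]]
```

Because the flip is random, each epoch sees a different mix of original and mirrored images under the same label. An augmentation is therefore only useful if the mirrored image still belongs to the same class.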


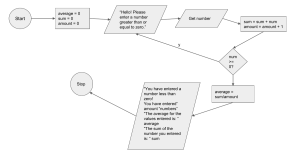

Loading Dataset – MNIST

import torch
import torchvision
from torchvision import transforms

transform = transforms.Compose([
    transforms.Resize(32),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor()])

dataset = torchvision.datasets.MNIST('./data',
                                     train=True,
                                     download=True,
                                     transform=transform)

data_loader = torch.utils.data.DataLoader(dataset,
                                          batch_size=8,
                                          shuffle=True,
                                          num_workers=2)


Exercise

• What happens if we use transforms.RandomHorizontalFlip() with the MNIST images?

• Check the result with the code M.6.0.


Loading Dataset – MNIST

import matplotlib.pyplot as plt

images, labels = next(iter(data_loader))
batch_size = len(images)

plt.figure(figsize=(20, 4))
for index in range(batch_size):
    ax = plt.subplot(1, batch_size, index + 1)
    # each image has shape (1, H, W); squeeze() drops the channel axis
    plt.imshow(images[index].squeeze().numpy(), cmap='gray')
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()

print('GroundTruth: ', ' '.join(f'{labels[j].item():10}' for j in range(batch_size)))

[3 3 2 9 6 1 3 6]


Exercise

1. Get a batch of 4 random images from the CIFAR-10 dataset.

2. Include the following data augmentation transform:

• transforms.RandomHorizontalFlip()


Loading Dataset – Flowers102

1. What happens when the images do not all have the same size?

import torch
import torchvision

train_batch_size = 8  # example value
transform = torchvision.transforms.ToTensor()

train_dataset = torchvision.datasets.Flowers102(
    root="~/torch_datasets", transform=transform, download=True
)
train_loader = torch.utils.data.DataLoader(
    train_dataset, batch_size=train_batch_size, shuffle=True
)

https://www.robots.ox.ac.uk/~vgg/data/flowers/102/categories.html
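With a batch size greater than 1, the `DataLoader` stacks the image tensors of a batch into one tensor. This only works if they all have the same shape, so a batch of differently sized Flowers102 images raises an error unless the transform produces a fixed size. The sketch below works only with image *shapes*. `resize_shape` follows the documented rule for `transforms.Resize`: with an integer size, the shorter edge is matched and the aspect ratio is kept; with an `(h, w)` tuple, the output is exactly that size. `can_batch` is a hypothetical check. The two image sizes are made up for illustration.

```python
def resize_shape(height, width, size):
    """Output (H, W) of a Resize, following torchvision's documented rule."""
    if isinstance(size, tuple):
        # Resize((h, w)): the output is exactly (h, w); aspect ratio may change.
        return size
    # Resize(int): the shorter edge becomes `size`; the longer one is truncated.
    if height <= width:
        return size, int(size * width / height)
    return int(size * height / width), size


def can_batch(shapes):
    """Images can be stacked into one batch tensor only if all shapes match."""
    return len(set(shapes)) == 1


# Two hypothetical images with different aspect ratios.
sizes = [(500, 667), (500, 500)]

# Resize(256) alone keeps the aspect ratio, so the shapes still differ.
after_resize = [resize_shape(h, w, 256) for h, w in sizes]
print(after_resize, can_batch(after_resize))  # [(256, 341), (256, 256)] False

# Resize((224, 224)) forces one common size.
fixed = [resize_shape(h, w, (224, 224)) for h, w in sizes]
print(can_batch(fixed))  # True
```

Adding `transforms.CenterCrop(224)` after `Resize(256)` also gives every image the same 224×224 size, without distorting the aspect ratio.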


Loading Dataset – Custom

• The easiest way to load your own image data is with torchvision's ImageFolder dataset, which expects one subfolder per class:

dataset = torchvision.datasets.ImageFolder('path/to/data', transform=transform)  # e.g. 'ddbb' for the layout below

ddbb/obj1/1.png

ddbb/obj1/2.png

ddbb/obj1/3.png

ddbb/obj2/1.png

ddbb/obj2/2.png

ddbb/obj2/3.png
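`ImageFolder` treats each subfolder of the root as one class and assigns class indices in sorted folder-name order. For the tree above, `obj1` therefore gets index 0 and `obj2` gets index 1. The sketch below reproduces that bookkeeping with the standard library only. `index_image_folder` is a hypothetical helper. The extension list is an assumption (a subset of what torchvision accepts), and unlike torchvision, the sketch does not descend into nested subfolders.

```python
import os

# Subset of the image extensions ImageFolder accepts (assumption for this sketch).
IMG_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp")


def index_image_folder(root):
    """Return (class_to_idx, samples) for a root/<class>/<image> layout."""
    with os.scandir(root) as entries:
        classes = sorted(e.name for e in entries if e.is_dir())
    # Class indices follow the sorted folder names: obj1 -> 0, obj2 -> 1, ...
    class_to_idx = {name: i for i, name in enumerate(classes)}
    samples = []
    for name in classes:
        class_dir = os.path.join(root, name)
        for fname in sorted(os.listdir(class_dir)):
            if fname.lower().endswith(IMG_EXTENSIONS):  # skip non-image files
                samples.append((os.path.join(class_dir, fname), class_to_idx[name]))
    return class_to_idx, samples


# Usage with the layout above (requires the ddbb folder to exist):
#   class_to_idx, samples = index_image_folder("ddbb")
#   class_to_idx == {"obj1": 0, "obj2": 1}
```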


Exercise

1. Create your own dataset:

• 2 categories

• 2-6 images per category

2. Get a batch of 2 random images from your own dataset and show the result.

3. Include one relevant transform function:

• Justify why it is relevant

• Show the result


References

• Beginner’s Guide to Loading Image Data with PyTorch: https://towardsdatascience.com/beginners-guide-to-loading-image-data-with-pytorch-289c60b7afec


Convolutional Neural Network – CNN

Convolutional Neural Network


Convolutional filter


Convolutional filter

https://towardsdatascience.com/intuitively-understanding-convolutions-for-deep-learning-1f6f42faee1
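A convolutional filter slides a small kernel over the image. At each position, it sums the element-wise products of the kernel and the image patch underneath. Like `nn.Conv2d`, the pure-Python sketch below computes a cross-correlation (the kernel is not flipped) for a single channel without padding. The `stride` argument sets how many pixels the kernel moves between positions. This is only an illustration of the arithmetic, not how PyTorch implements convolutions.

```python
def conv2d(image, kernel, stride=1):
    """Single-channel 2D cross-correlation without padding ("valid")."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r, c = i * stride, j * stride  # top-left corner of the current patch
            row.append(sum(image[r + a][c + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [
    [1, 1],
    [1, 1],
]
print(conv2d(image, kernel))            # [[14, 18, 22], [30, 34, 38], [46, 50, 54]]
print(conv2d(image, kernel, stride=2))  # [[14, 22], [46, 54]]
```

With stride 2, the kernel skips every other position, so the 4×4 input produces a 2×2 output instead of 3×3.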


Stride


Max Pooling Layer

https://pub.towardsai.net/introduction-to-pooling-layers-in-cnn-dafe61eabe34
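A max pooling layer keeps only the largest value in each window, which halves the height and width for 2×2 windows with stride 2. The pure-Python sketch below shows that reduction on a single-channel feature map. As with `nn.MaxPool2d`, the stride defaults to the window size, so the windows do not overlap. The function name is illustrative.

```python
def max_pool2d(feature_map, size=2, stride=None):
    """Max pooling over size x size windows (no padding)."""
    if stride is None:
        stride = size  # non-overlapping windows by default
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [max(feature_map[i * stride + a][j * stride + b]
             for a in range(size) for b in range(size))
         for j in range(out_w)]
        for i in range(out_h)
    ]


fm = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(max_pool2d(fm))  # [[6, 5], [7, 9]]
```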


Padding

• Padding
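Padding adds a border of zeros around the input so that the kernel can also be centered on edge pixels. Together with the kernel size k and the stride s, the padding p determines the output size through the formula in the `nn.Conv2d` documentation (with dilation 1): out = floor((in + 2p - k) / s) + 1. The sketch below zero-pads a single-channel image and evaluates that formula. The helper names are illustrative.

```python
def zero_pad(image, p):
    """Surround a single-channel image (list of rows) with p rows/columns of zeros."""
    width = len(image[0]) + 2 * p
    top = [[0] * width for _ in range(p)]
    middle = [[0] * p + list(row) + [0] * p for row in image]
    bottom = [[0] * width for _ in range(p)]
    return top + middle + bottom


def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution with dilation 1, as in nn.Conv2d."""
    return (size + 2 * padding - kernel) // stride + 1


print(zero_pad([[1, 2], [3, 4]], 1))
# [[0, 0, 0, 0], [0, 1, 2, 0], [0, 3, 4, 0], [0, 0, 0, 0]]
print(conv_output_size(32, kernel=5))             # 28: no padding shrinks the map
print(conv_output_size(32, kernel=5, padding=2))  # 32: same size at stride 1
print(conv_output_size(32, kernel=5, stride=2))   # 14
```

With stride 1 and an odd kernel size k, choosing p = (k - 1) / 2 keeps the output the same size as the input.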


Convolutional Neural Network

import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=5)
        self.pool = nn.MaxPool2d(kernel_size=2)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5)
        self.fc1 = nn.Linear(in_features=16 * 5 * 5, out_features=120)
        self.fc2 = nn.Linear(in_features=120, out_features=84)
        self.fc3 = nn.Linear(in_features=84, out_features=10)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = self.pool(x)
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        # flatten all dimensions except batch
        x = torch.flatten(x, 1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x
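The `16 * 5 * 5` input size of `fc1` follows from the output-size rule floor((in + 2p - k) / s) + 1, with p = 0 here, if we assume a 3×32×32 input such as a CIFAR-10 image (the slide does not state the input size). A worked trace of the tensor shape through the network:

```latex
\begin{aligned}
\text{input:}\quad & 3 \times 32 \times 32 \\
\texttt{conv1}\ (k=5,\ s=1):\quad & 32 - 5 + 1 = 28 \;\Rightarrow\; 6 \times 28 \times 28 \\
\texttt{pool}\ (k=2,\ s=2):\quad & \lfloor (28 - 2)/2 \rfloor + 1 = 14 \;\Rightarrow\; 6 \times 14 \times 14 \\
\texttt{conv2}\ (k=5,\ s=1):\quad & 14 - 5 + 1 = 10 \;\Rightarrow\; 16 \times 10 \times 10 \\
\texttt{pool}\ (k=2,\ s=2):\quad & \lfloor (10 - 2)/2 \rfloor + 1 = 5 \;\Rightarrow\; 16 \times 5 \times 5 \\
\texttt{flatten}:\quad & 16 \cdot 5 \cdot 5 = 400 \ \text{features into } \texttt{fc1}
\end{aligned}
```

For a different input size, only the spatial numbers change, and the `in_features` of `fc1` must be updated to match.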


Exercise

• Assuming all the convolutional filters are the same as in the previous code, what will be the output size of the first convolutional layer if the size of the input image is

• Draw its shape.


Example

• Sketch the shape of the data as it flows through the following CNN.

import torch
import torch.nn as nn
import torch.nn.functional as F

class NN(nn.Module):
    def __init__(self, output_dim):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=2)
        self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=2)
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1)
        self.fc1 = nn.Linear(576, 256)
        self.fc2 = nn.Linear(256, output_dim)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = F.relu(self.conv3(x))
        x = torch.flatten(x, start_dim=1)  # flatten all dimensions except batch
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return x
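One possible answer, assuming a 3×32×32 input (the slide does not state the input size): without padding, each convolution maps a spatial size n to floor((n - k) / s) + 1. This gives exactly the 576 features that `fc1` expects. Any input side from 29 to 32 pixels also ends at 3×3.

```latex
\begin{aligned}
\text{input:}\quad & 3 \times 32 \times 32 \\
\texttt{conv1}\ (k=5,\ s=2):\quad & \lfloor (32 - 5)/2 \rfloor + 1 = 14 \;\Rightarrow\; 32 \times 14 \times 14 \\
\texttt{conv2}\ (k=5,\ s=2):\quad & \lfloor (14 - 5)/2 \rfloor + 1 = 5 \;\Rightarrow\; 64 \times 5 \times 5 \\
\texttt{conv3}\ (k=3,\ s=1):\quad & 5 - 3 + 1 = 3 \;\Rightarrow\; 64 \times 3 \times 3 \\
\texttt{flatten}:\quad & 64 \cdot 3 \cdot 3 = 576 \ \text{features into } \texttt{fc1} \\
\texttt{fc1},\ \texttt{fc2}:\quad & 576 \to 256 \to \texttt{output\_dim}
\end{aligned}
```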


Example

• CIFAR-10 tutorial


Exercises

• Update the code to plot the training and validation curves during the training process.
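One way to approach this exercise is to store one training-loss value and one validation-loss value per epoch, then plot both lists. Because the last batch can be smaller, a per-epoch loss is best computed as a mean weighted by batch size. The sketch below assumes your loop can supply per-batch losses and batch sizes. `epoch_loss` and `plot_curves` are hypothetical helpers, and matplotlib is assumed for the plot.

```python
def epoch_loss(batch_losses, batch_sizes):
    """Mean loss over an epoch, weighting each batch by its size."""
    total = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)


def plot_curves(train_history, val_history):
    """Plot one point per epoch for the training and validation losses."""
    import matplotlib.pyplot as plt  # imported here so epoch_loss works without it

    epochs = range(1, len(train_history) + 1)
    plt.plot(epochs, train_history, label="training loss")
    plt.plot(epochs, val_history, label="validation loss")
    plt.xlabel("epoch")
    plt.ylabel("loss")
    plt.legend()
    plt.show()


# Inside the training loop (sketch):
#   train_history.append(epoch_loss(train_batch_losses, train_batch_sizes))
#   val_history.append(epoch_loss(val_batch_losses, val_batch_sizes))
# and after training:
#   plot_curves(train_history, val_history)
```

In a PyTorch loop with a mean-reduced loss, `loss.item()` and `inputs.size(0)` would provide the per-batch loss and batch size. A validation curve that rises while the training curve keeps falling is a typical sign of overfitting.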


Exercise

• Perform CIFAR-10 image classification:

• Load CIFAR-10 using the data loader

• Plot the training and validation curves during the training process


Extra-Exercise

• Perform image classification on a custom dataset


Predefined Neural Network

• Predefined

• Pretrained


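AlexNet Architecture

import torch.nn as nn
from torchvision import models

# use_pretrained, feature_extract, num_classes, inputs and
# set_parameter_requires_grad are assumed to be defined elsewhere.
# Note: torchvision >= 0.13 deprecates pretrained=... in favor of weights=...

# AlexNet
model = models.alexnet(pretrained=use_pretrained)
set_parameter_requires_grad(model, feature_extract)
num_ftrs = model.classifier[6].in_features
model.classifier[6] = nn.Linear(num_ftrs, num_classes)
input_size = 224

# Forward
outputs = model(inputs)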


VGG-Net Architecture

import torch.nn as nn
from torchvision import models

# VGG
model = models.vgg16(pretrained=use_pretrained)
set_parameter_requires_grad(model, feature_extract)
num_ftrs = model.classifier[6].in_features
model.classifier[6] = nn.Linear(num_ftrs, num_classes)
input_size = 224

# Forward
outputs = model(inputs)


ResNet Architecture

import torch.nn as nn
from torchvision import models

# ResNet
model = models.resnet18(pretrained=use_pretrained)
set_parameter_requires_grad(model, feature_extract)
num_ftrs = model.fc.in_features
model.fc = nn.Linear(num_ftrs, num_classes)
input_size = 224

# Forward
outputs = model(inputs)


Inception Architecture

import torch.nn as nn
from torchvision import models

# Inception v3
model = models.inception_v3(pretrained=use_pretrained)
set_parameter_requires_grad(model, feature_extract)
# Handle the auxiliary net
num_ftrs = model.AuxLogits.fc.in_features
model.AuxLogits.fc = nn.Linear(num_ftrs, num_classes)
# Handle the primary net
num_ftrs = model.fc.in_features
model.fc = nn.Linear(num_ftrs, num_classes)
input_size = 299  # Inception v3 expects 299x299 inputs

# Forward (in training mode the model returns main and auxiliary outputs)
outputs = model(inputs)


Summary

https://towardsdatascience.com/the-w3h-of-alexnet-vggnet-resnet-and-inception-7baaaecccc96


Segmentation

• DeepLabV3

• FCN

• LRASPP


Object detection

• Faster R-CNN

• FCOS

• RetinaNet

• SSD

• SSDlite

https://jonathan-hui.medium.com/object-detection-speed-and-accuracy-comparison-faster-r-cnn-r-fcn-ssd-and-yolo-5425656ae359


Skeleton tracking

• Regression problem

• Keypoint R-CNN


Exercise

• Perform CIFAR-10 image classification

• using a ResNet-18 backbone

import torch
import torchvision
from torchvision.models import resnet18, ResNet18_Weights

# ResNet-18 with pretrained ImageNet weights
weights = ResNet18_Weights.DEFAULT
net2 = torchvision.models.resnet18(weights=weights, progress=False)
# Replace the 1000-class ImageNet head with a 10-class CIFAR-10 head
net2.fc = torch.nn.Linear(512, 10)

# Forward
outputs = net2(inputs)


Eskerrik asko

Muchas gracias

Thank you

Nestor Arana-Arexolaleiba

narana@mondragon.edu

Loramendi, 4. Apartado 23

20500 Arrasate – Mondragon

T. 943 71 21 85

info@mondragon.edu