DEEP LEARNING

Pragmatic Deep Learning Model for Forex Forecasting

Using LSTM and TensorFlow on the GBPUSD Time Series for multi-step prediction

Adam Tibi

Published in Towards AI

23 min read · Oct 7, 2020

In an attempt to solve the classical question, “Can machine learning predict the

market?”, I landed on Forex GBPUSD as a challenging financial series with an

abundant and free data set. Although there are tens of stories on this platform on stock

ML prediction and a handful on Forex ML prediction, here you will see me delve into

the peculiarities that are often missed and aim to bring my model closer to reality:

By implementing multi-step predictions such as 30 or 60 steps (minutes in this

case) or more, as opposed to a single step (1 minute) prediction

By consuming the model using an algorithmic trading bot to let the profit, or loss,

be the judge (the next story)

At the end of the story, readers with some Python and ML experience will be able to

use the concepts and modify the linked code to produce their own variation of the

model. In part 2, readers will be able to use a commercial algo trading platform with

the model.

Source Code and Following Along

The model is built in Python 3.8 using TensorFlow/Keras 2.3. To keep this story focused

on concepts, the full source code and the environment preparation, along with the

explanation related to running and changing the code are here:

AdamTibi/LSTM-FX

This is the companion code to Pragmatic Deep Learning Model for

Forex Forecasting. So, if you want to understand the…

github.com

Also, you can view the environment setup and the steps to run the model, visually

explained:

Pragmatic Deep Learning Model for Forex Forecasting

Explaining the environment setup and the steps to run the model

Table of Contents

Forex Trading Primer

What is Forex?

Commission, Spread and Pips

Tick Data

Open High Low Close Data

Candlestick Charts

Forex Trading

Algorithmic Trading

Backtesting

The ML Model: Concept and Plan

Model Choice

Technical Stack Choice

Hardware Choice

The Plan

1 — Data Sourcing

2 — Data Preparation

Time Interval and OHLC

Smoothing

Stationarity

Batch Size

Train, Test Split

Process Summary

Scaling

LSTM Data Input Overview

Window Size

Converting Samples

3 — Model Training

Training Statistics

4 — Predictions

Single-Step Prediction

Multi-Step Prediction

Continuing and Expanding the Research

Date Feature Engineering

Minimising the Effect of Outliers

Very Small Mini Batch Size

Different Smoothing Method

Interval Aggregation

Sequence-to-Sequence Forecasting

Disclaimer

Conclusion

Part Two: Using the Model from a Trading Platform

More Readings

About Me

References

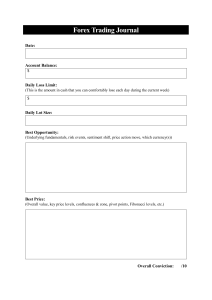

Forex Trading Primer

I will define the basics of Forex Trading in relation to this story. If you are familiar with

Forex basics, then you can skip this section.

GBPUSD exchange rate buy = 1.28820, sell = 1.28816 captured from cTrader

What is Forex?

Forex, Foreign Exchange, is a currency price relationship between two economies, e.g.

British Pound vs US Dollar or GBPUSD. The first three letters in the symbol represent

the first economy called “Base Currency” and the last three letters represent the

second economy called “Quote Currency”. If the exchange rate of GBPUSD is 1.28818 it

means that to buy $1.28818 you pay £1, plus commission and/or Spread.

Commission, Spread and Pips

If you are exchanging with a friend, then you might use two decimal points and

exchange the GBPUSD at 1.29, however, if you are exchanging via a Forex trading

platform through a Forex broker, then there are fees.

Commission: It’s a fixed fee that the broker charges per transaction. The commission

amount is broker-dependent.

Spread: Is the difference between the buying price and selling price. This is how the

broker makes a profit.

Let’s take an example: suppose the GBPUSD buy price is 1.28820 and, conversely, the

sell price is 1.28816. That makes the spread:

Spread = Buy - Sell = 1.28820 - 1.28816 = 0.00004 = 0.4e-4

The change in price in Forex is usually very small unless there is an event affecting the

economy, so traders use PIPs to express the change.

PIP: Price Interest Point is currency specific. For most currencies, including GBPUSD, it

is: Change x 10000

We can consider the spread as a price change, so we can express it as:

Spread = 0.4e-4 = 0.4 pips

For example, if the selling price of GBPUSD changed from 1.28816 to 1.28827, we say

the price moved up by:

1.28827 - 1.28816 = 0.00011 = 1.1 pips
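The pip arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the names `PIP_MULTIPLIER` and `to_pips` are made up for illustration, and the 10000 multiplier is the one stated above for GBPUSD and most other pairs.

```python
# Hypothetical helper, not part of the linked repository.
PIP_MULTIPLIER = 10_000  # as stated above for GBPUSD and most currencies


def to_pips(price_change: float) -> float:
    """Express a raw price change in pips."""
    return price_change * PIP_MULTIPLIER


buy, sell = 1.28820, 1.28816
spread_pips = to_pips(buy - sell)        # the spread example
move_pips = to_pips(1.28827 - 1.28816)   # the price move example

# Round to one decimal to hide floating point noise.
print(round(spread_pips, 1), round(move_pips, 1))
```

Rounding is only there because binary floating point cannot represent these decimals exactly.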

Tick Data

A change in price, also known as “tick”, happens at random times, e.g. multiple

changes per second or a single change in 2 minutes. Forex generates tick data rapidly,

this is an example of GBPUSD tick data for the first 5 seconds of 2020–09–30:

Date                  | Sell    | Buy
20200930 00:00:00.220 | 1.28643 | 1.28654
20200930 00:00:00.322 | 1.28643 | 1.28653
20200930 00:00:01.025 | 1.28641 | 1.28655
20200930 00:00:01.754 | 1.28641 | 1.28654
20200930 00:00:03.403 | 1.28642 | 1.28653
20200930 00:00:04.204 | 1.28642 | 1.28655
20200930 00:00:04.255 | 1.28643 | 1.28654
20200930 00:00:04.356 | 1.28644 | 1.28656
20200930 00:00:05.520 | 1.28645 | 1.28657
20200930 00:00:05.853 | 1.28647 | 1.28657

There is another way to look at the data, especially when you want to check the rate

price on longer periods (minutes, hours, weeks, etc…).

Open High Low Close Data

OHLC is another way to aggregate the data. OHLC can apply to any time interval such

as a minute or an hour. The Open captures the sell price at the beginning of the time

interval and Close captures the price directly before the start of the next interval.

High captures the max that the price reached during the interval and Low captures the

min reached. This is an example of 1 minute OHLC data for the first few minutes of the

GBPUSD on 2020–09–30:

Date           | Open    | High    | Low     | Close
20200930 00:00 | 1.28643 | 1.28663 | 1.28641 | 1.28659
20200930 00:01 | 1.28663 | 1.28675 | 1.28649 | 1.28649
20200930 00:02 | 1.28649 | 1.28650 | 1.28627 | 1.28630
20200930 00:03 | 1.28630 | 1.28648 | 1.28626 | 1.28638
20200930 00:04 | 1.28639 | 1.28647 | 1.28635 | 1.28640
20200930 00:05 | 1.28641 | 1.28654 | 1.28641 | 1.28651
20200930 00:06 | 1.28650 | 1.28655 | 1.28648 | 1.28653
20200930 00:07 | 1.28653 | 1.28654 | 1.28647 | 1.28649
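To illustrate how tick data collapses into OHLC rows, here is a minimal sketch using pandas. It uses only the ten sell ticks listed in the Tick Data section, so it produces a single, partial 00:00 bar rather than the full-minute values in the table; the column names `time` and `sell` are my own choice.

```python
import pandas as pd

# The ten GBPUSD sell ticks from the Tick Data section (first ~6 seconds only).
ticks = pd.DataFrame(
    {
        "time": [
            "20200930 00:00:00.220", "20200930 00:00:00.322",
            "20200930 00:00:01.025", "20200930 00:00:01.754",
            "20200930 00:00:03.403", "20200930 00:00:04.204",
            "20200930 00:00:04.255", "20200930 00:00:04.356",
            "20200930 00:00:05.520", "20200930 00:00:05.853",
        ],
        "sell": [
            1.28643, 1.28643, 1.28641, 1.28641, 1.28642,
            1.28642, 1.28643, 1.28644, 1.28645, 1.28647,
        ],
    }
)
ticks["time"] = pd.to_datetime(ticks["time"], format="%Y%m%d %H:%M:%S.%f")

# Group the ticks into 1-minute buckets and take first/max/min/last per bucket.
ohlc = ticks.set_index("time")["sell"].resample("1min").ohlc()
print(ohlc)
```

The open (1.28643) and low (1.28641) already match the 00:00 row of the table, while the high and close would keep changing as the remaining ticks of that minute arrive.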

Candlestick Charts

Traders usually look at charts with OHLC data, which is why they use a “Candlestick Chart”

to better represent this type of data:

Candlestick Bars. Image by author

Note that the candlestick colours are arbitrary, the convention is to use a colour and an

inverted colour such as black and grey, I used green and orange across this story. Next

is our OHLC table above represented as a candlestick chart:

Candlestick Chart Starting 2020–09–30 midnight. Image by author

Forex Trading

Essentially, if you believe the price is going to increase, you buy the base currency

(GBP in our case) using the quote currency (USD in our case) and if you believe the

price is going to decrease, you sell the base currency.

Trading is associated with a strategy, take this over-simplistic strategy as an example

“Buy if you believe the price will increase by at least 10 pips and sell if you believe the

price will decrease by at least 10 pips.”

Your belief in price change could come from many sources, the sky is the limit,

examples:

You think a political decision would affect one of the pair economies

You expect an out of the ordinary announcement on GDP

You use some technical indicators and base your decision on them

You train an ML model on historic data and ask it to predict future prices

Algorithmic Trading

Algo trading is using a bot, a strategy written in code, and executing the trade

automatically via an API or other means based on the bot recommendation.

An example is using a bot that will push an input data into an ML model and consult

the model about the price change then trade accordingly.

In part 2, I will show how the model in this story will be used in algo trading.

Backtesting

When you have built a bot, you want to make sure the bot can make a profit, one way is

to run this bot using a backtesting platform, preferably the same one as your

production one.

Another level of backtesting is to run it in demo mode (virtual money) on the same

production platform for a while, but with live data.

This way you mitigate the risk of loss, but, you still have other risks such as the risk of

having a market pattern shift.

In part 2, I will show how to backtest the bot that is based on this model.

The ML Model: Concept and Plan

In trading, if we want to know at a particular time whether to buy, sell or do nothing,

we want to forecast if the price will go up or down and by how much.

To make a trade decision, a technical trader uses indicators that analyse a fixed

number of previous time steps (price changes). If the indicators match a particular

pattern this means a buy or a sell signal. The indicators, in essence, are trying to

extract patterns out of the previous prices.

Candlestick chart with Bollinger Bands (Green) and EMA (Cyan) indicators. Captured from cTrader

The idea here is to have our model act as an indicator. We will train our model, using

historic data, on price changes and whether they resulted in the price going up or

down. If there are recurring patterns in the historical data, then we want our model to

recognise them.

In brief, we want our model to recognise price patterns and advise us with the

expected price change when encountering a pattern.

Model Choice

To meet our objective, we will need an ML model that would recognise time series

patterns and forecast the next pattern, so we can narrow down our selection to the

applicable models.

Regression models, such as GARCH, ARIMA and Facebook Prophet, are good for less

sophisticated time series prediction, so I excluded them in favour of deep learning

neural network models such as Attention Networks and Long Short-Term Memory

(LSTM) because they are more suitable for this prediction.

I favoured LSTM as the model is heavily researched compared to the newer Attention

Networks, although I might do another research with the Attention Networks.

Technical Stack Choice

Development: Python 3.8, TensorFlow 2.3 (with built-in Keras), Visual Studio Code

with Jupyter Notebook, Visual Studio, Pandas, NumPy, Scikit-Learn, Matplotlib,

Ubuntu 20.04

Production: Python 3.8, C#, cTrader Algorithmic Trading Platform (cTrader

Automate), Flask Web Server, Windows 10

Hardware Choice

This wasn’t really a choice, this was what I already had.

Laptop (for development): Dell Precision M4800, 32GB RAM, 8 Logical Cores Intel

i7 2.9GHz, 2GB RAM Nvidia Quadro K2100M

Server (for training): Dell Precision Tower 7910, 24GB RAM, 28 Logical Cores Intel

Xeon 2.6GHz, Nvidia GeForce RTX 2080 8GB RAM

The Plan

The Plan. Image by author

We will go through the standard ML supervised learning process, we will source the

data, prepare it in a structure suitable for the model, train the model and then use the

model for predictions.

1 — Data Sourcing

I selected the GBPUSD Forex because there is an abundance of free quality data

available, down to the tick data and I am familiar with the data itself as I live in the UK

(I can blindly pinpoint the 2008 Credit Crunch, Brexit Vote Day and COVID-19

Lockdown).

You can download the GBPUSD data from Python using sources like Quandl or as a

CSV, as I have done. I used a Windows desktop software called Quant Data Manager to

download the GBPUSD 1 minute data from Dukascopy Swiss online bank. This is a

sample data:

Date,High,Low

2010-01-01 00:00,1.61673,1.61659

2010-01-01 00:01,1.61670,1.61670

...

2020-10-01 23:58,1.28852,1.28838

2020-10-01 23:59,1.28853,1.28846

2 — Data Preparation

The Forex data is usually clean, so I have invested little on this front. Also, rather

than focusing on the code, I will put the effort into highlighting the quantitative

finance concepts which will make the linked code self-explanatory.

Time Interval and OHLC

With Forex, you have easy access to tick price. However, tick data is highly volatile and

the price change rate is not predictable and can be many changes per second or a

single change in two minutes. Also, tick price generates too much data and this would

increase ML training time.

I chose the 1 minute OHLC (Open, High, Low, Close) as I think the 1-min is a good

balance between having a good amount of samples and a good training time. It is a

common practice to use the closing price out of the OHLC. However, I don’t think this

is the best representation of the time interval so I took the average price between the

high-low and I called it HLAvg across the code:

df['HLAvg'] = df['High'].add(df['Low']).div(2)

Smoothing

Given the factors affecting Forex rate, I believe that using the smoothed time series

instead of the actual change in price will yield a better prediction accuracy. I stuck

with the basics of smoothing and used the simple moving average (SMA) with 14

periods. I chose 14 as this is the default period used in most technical analysis tools.

SMA with 14 periods on GBPUSD 1-minute chart. Chart is from TradeView.com and the data source is FXCM.

df['MA'] = df['HLAvg'].rolling(window=14).mean()

Note that when calculating the MA, the first (periods minus one) rows will have no

moving average (the first 13 rows in our case). We will delete these rows.
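As a quick check of the two steps above, the following sketch applies them to a small synthetic frame. The random High/Low values are invented for illustration and are not market data; only the two `df[...]` lines come from this story.

```python
import numpy as np
import pandas as pd

# Synthetic High/Low prices, invented for illustration only.
rng = np.random.default_rng(0)
high = 1.28 + rng.random(20) * 1e-3
low = high - 1e-4
df = pd.DataFrame({"High": high, "Low": low})

df["HLAvg"] = df["High"].add(df["Low"]).div(2)
df["MA"] = df["HLAvg"].rolling(window=14).mean()

# A 14-period window needs 14 values, so the first 13 rows are NaN.
n_missing = int(df["MA"].isna().sum())
df = df.dropna(subset=["MA"])
print(n_missing, len(df))
```

With 20 input rows, 13 are dropped and 7 remain.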

Stationarity

Stationarity in plain English means a flat looking series without trend. In brief,

stationarity is the opposite of trending. There are readily available statistical tests that will tell you

whether a particular time series is stationary. For a deeper analysis:

How to Check if Time Series Data is Stationary with Python —

Machine Learning Mastery

Time series is different from more traditional classification and

regression predictive modeling problems. The temporal…

machinelearningmastery.com

However, Forex and stocks are non stationary, based on empirical evidence. So, we will

continue on the assumption that our instrument is non stationary.

While our LSTM deep learning model does not require a time series to be stationary,

many sources are advising to use a stationary time series anyway.

[1] If your series is trending up or down, estimating [the minimum and maximum

observable] values maybe difficult and normalization may not be the best method to use on

your problem.

[1] In time series forecasting, it is good practice to make the series stationary, that is remove

any systematic trends and seasonality from the series before modeling the problem. This is

recommended when working with LSTMs.

We can make a financial instrument stationary by calculating the returns. The quant

finance way is to use the Log Returns. This is the link to a brilliant and classical post

that explains the reasons for using Log Returns:

https://quantivity.wordpress.com/2011/02/21/why-log-returns

Making a series stationary via Log Returns is reversible as we are not losing any data,

unlike smoothing with a simple moving average. This is important as we want to be

able to reconstruct our time series back from the prediction, as you will see later.

Calculating the log returns from the simple moving average MA at time step t:

df['Returns'] = np.log(df['MA']/df['MA'].shift(1))

To calculate the Future Moving Average from the returns, which is needed after

prediction:

df['MA'] = df['MA'].mul(np.exp(df['Returns'].shift(-1))).shift(1)

Time series with the simple moving average and the log returns

In the graphs above, there is not much difference between the first two graphs, as the

effect of smoothing the 1 minute series is not visible at this zoom level.

Note that you won’t be able to calculate the returns of the first row, so we will delete

this row.
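To see that the log-returns transformation is reversible, here is a small round-trip sketch. The five MA values and the `MA_Rebuilt` column name are made up for illustration; the two formulas are the ones shown above.

```python
import numpy as np
import pandas as pd

# Made-up moving-average values, for illustration only.
df = pd.DataFrame({"MA": [1.28640, 1.28645, 1.28650, 1.28642, 1.28648]})

# Forward: log return at step t relative to step t-1 (first row is NaN).
df["Returns"] = np.log(df["MA"] / df["MA"].shift(1))

# Backward: rebuild MA at step t from MA at step t-1 and the return at step t.
df["MA_Rebuilt"] = df["MA"].mul(np.exp(df["Returns"].shift(-1))).shift(1)

print(df)
```

Apart from the first row, which has no previous value, `MA_Rebuilt` matches `MA` up to floating point precision.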

Batch Size

Batch size is the number of model samples used in the training of a neural network

before the gradient gets updated.

For practicality, we need to understand that the batch size:

Is a hyperparameter that affects data training and needs to change to minimise

prediction errors

By convention can take a value between 2 and 32, called a mini batch. Other common values are 64 and 128

The larger it is, the faster the training on a GPU. However, as a downside, this results in more training error than a smaller batch

Best batch size is a debatable topic and I would recommend a trial and error to balance

best training time with fewest errors.

[2] The presented results confirm that using small batch sizes achieves the best training

stability and generalization performance, for a given computational cost, across a wide range

of experiments. In all cases the best results have been obtained with batch sizes m = 32 or

smaller, often as small as m = 2 or m = 4.

After trying numerous batch sizes, I landed on 32. For this size, it took me

around 25 min per single epoch on my hardware (described on my GitHub repo).

Train, Test Split

All ML practitioners are familiar with the Train/Test split. I followed the traditional

approach but added the batch size as an additional constraint.

I restricted my data length to a multiple of my batch size. Given that I had nearly 4

million records, sacrificing a few records from the beginning of the data would not have

an effect. At most batch_size minus one records are dropped, which is fewer than 32

minutes in this case.

df = df[df.shape[0] % batch_size:]

After that, I split my data with the batch size in mind. Note that val_size, test_size and

window_size (we will talk about the window_size later) are also all multiples of

batch_size. I have not used the traditional 80/20 or 90/10 for training/test split.

df_train = df[:- val_size - test_size]

df_val = df[- val_size - test_size - window_size:- test_size]

df_test = df[- test_size - window_size:]

I took this batch size constraint to reduce the complexity of the arithmetic required

when working with the LSTM model.

The three data frames will be saved to three independent CSVs to be used when

training, validating and testing the model.
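The slicing arithmetic is easier to follow with toy numbers. In this sketch the 1000-row frame and the sizes chosen for `val_size`, `test_size` and `window_size` are assumptions for illustration, not the values used in the repository.

```python
import numpy as np
import pandas as pd

batch_size = 32
val_size = 2 * batch_size     # assumed for illustration
test_size = 2 * batch_size    # assumed for illustration
window_size = 1 * batch_size  # assumed for illustration

df = pd.DataFrame({"Returns": np.arange(1000, dtype=float)})

# 1000 % 32 == 8, so the first 8 rows are dropped, leaving 992 rows.
df = df[df.shape[0] % batch_size:]

df_train = df[:- val_size - test_size]
# Validation and test each start window_size rows early, so that the
# first label in each set has a full look-back window before it.
df_val = df[- val_size - test_size - window_size:- test_size]
df_test = df[- test_size - window_size:]

print(len(df), len(df_train), len(df_val), len(df_test))
```

Every resulting length (992, 864, 96, 96) is a multiple of the batch size, which is the constraint described above.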

Process Summary

First step summary of the data preparation process

The earlier data preparation process produced three separate CSV files for training,

validation and testing. This will help splitting our full process into separate Jupyter

Notebooks.

Scaling

Training Set: Scaled Log Returns between 0 and 1

I have used the MinMaxScaler for this research because this was the most efficient

scaler, as with the other scalers a single epoch time was three to four times more than

the MinMaxScaler.

MinMaxScaler normalises the data values to reside between a min and a max, by

default the min and the max are 0 and 1. LSTM performs better when the input values

are scaled to a standard range.

scaler = MinMaxScaler()

train_values = scaler.fit_transform(train_df[['Returns']].values)

...

test_values = scaler.transform(test_df[['Returns']].values)

Later on after prediction, the model will be predicting scaled values, so you will have

to invert the transformation to return it back to a real value:

df['Returns_Prediction'] = scaler.inverse_transform(df[['Returns_Prediction_Scaled']].values)

This scaler should be fit once on the training data and then reused from this point

onward to scale other data sets: validation data, test data, backtesting data and

production data. This is important to note, as if the scaler is fit across all the data set it

will introduce a look-ahead bias.

To reuse the scaler, the quickest way is to persist it (storing it as a file) and loading it

when needed:

joblib.dump(scaler, 'scalers/scaler.bin') # For persisting to file

...

scaler = joblib.load('scalers/scaler.bin') # For loading from file

One caveat to this process is that you need to use the same scikit-learn version for

persisting and for loading.
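The fit-once, reuse-everywhere rule can be sketched end to end as follows. The return values are invented for illustration and the scaler is saved to a temporary directory rather than the `scalers/` folder used above.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Invented log returns, for illustration only.
train_returns = np.array([[-0.002], [0.0], [0.001], [0.003]])
test_returns = np.array([[0.004], [-0.001]])

scaler = MinMaxScaler()
train_scaled = scaler.fit_transform(train_returns)  # fit on training data only
test_scaled = scaler.transform(test_returns)        # reuse the fitted min/max

# Persist and reload, as a later notebook or the production service would.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "scaler.bin")
    joblib.dump(scaler, path)
    loaded_scaler = joblib.load(path)

restored = loaded_scaler.inverse_transform(test_scaled)
print(test_scaled.ravel(), restored.ravel())
```

Note that unseen data may scale outside the 0 to 1 range (the first test value here scales to about 1.2), which is expected when the scaler is fit on the training set alone.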

So far, we have performed the following operations on the raw data:

Calculated the High Low Average (HLAvg)

Calculated the Simple Moving Average (SMA)

Calculated the Log Returns of the SMA

Calculated the Scaled Log Returns

We started from the Date, High and Low and ended with the Scaled Log Returns, this is

a 10-record snapshot of our raw data with our processed data:

LSTM Data Input Overview

What we need from the model is to feed it a fixed number of the most recent samples and get

back a prediction. Let’s use the table above and assume the time now is 2010–01–01

00:23, this is the 6th sample. We want to predict the HLAvg at sample 7, like this:

Previous prices and their prediction. Image by author

The closer our prediction is to the real market value a minute later (Sample 7,

00:24), the more accurate our prediction is.

The LSTM model expects input training data that looks like the earlier one but with all

the data preparation applied. Based on the table above, it is expecting data that looks

like:

A feature and a label. Image by author

In our implementation, the length of the Single Feature is Window Size, in the previous

example, it is 6.

Window Size

A window size, also known as “look-back period” is the amount of past samples, in our

case minutes, that you want to take into consideration at a point of time to predict the

next sample. Think of it as the relevant immediate past samples that you want to rely

on to decide if the financial instrument will go up or down.

To train our model on the whole data set, we have to structure the training set as

Model-Samples of Feature (X) and Label (y), if we take the earlier table as an example

with window size of 6:

An illustration of samples shifted by 1 each. Image by author

Next we will show the code necessary to create this data structure, to simplify the

arithmetic and comply with the LSTM input, I made the window_size as a multiple of

the batch_size.

batch_size = 32

window_size = 8 * batch_size # 256 minutes, 4.3 hours

Converting Samples

As “sample” is a loose term, let’s give it a precise definition by calling it “model-sample”. We need to convert all our processed samples (scaled log returns) to model-samples (a collection of features of window size and labels) in order to train the model.

As a convention, the collection of features is referred to as X and their labels are y.

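import numpy as np

def convert_raw_samples_to_model_samples(scd_log_rtns, window_size):
    X, y = [], []
    len_log_rtns = len(scd_log_rtns)
    for i in range(window_size, len_log_rtns):
        # Feature: the window_size values before index i; label: the value at i
        X.append(scd_log_rtns[i-window_size:i])
        y.append(scd_log_rtns[i])
    X, y = np.asarray(X), np.asarray(y)
    # Reshape to (samples, window size, features) as expected by the LSTM layer
    X = np.reshape(X, (X.shape[0], X.shape[1], 1))
    return X, y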

Explaining the previous function by example, if the scd_log_rtns has 10 data samples

and the window_size=6, the for-loop can be illustrated as:

An illustration of the for-loop. Image by author

LSTM input expects a 3D array of shape: Number of Processed Data Samples, Window Size and Features

Number of Processed Data Samples is the size of the scaled log returns minus the window size. Remember that we cannot use all the training data, because the first window_size samples have no full window before them and so cannot serve as labels.

Features in our case are 1, which is the scaled log returns

The final reshape of X converts X to the LSTM-compatible 3D input.

Also, the same process is applied on the validation data set.
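The 10-sample, window-of-6 example can be reproduced directly. This sketch uses a stand-in function `to_model_samples` with the same loop logic, and also shows a vectorised alternative based on NumPy's `sliding_window_view` (available from NumPy 1.20); the alternative is my own suggestion and is not taken from the repository.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def to_model_samples(values, window_size):
    """Loop-based conversion, equivalent in logic to the function above."""
    X, y = [], []
    for i in range(window_size, len(values)):
        X.append(values[i - window_size:i])
        y.append(values[i])
    X, y = np.asarray(X), np.asarray(y)
    return X.reshape(X.shape[0], X.shape[1], 1), y


values = np.arange(10, dtype=float)  # stand-in for 10 scaled log returns
X, y = to_model_samples(values, window_size=6)
print(X.shape, y)  # 10 - 6 = 4 model-samples

# Vectorised alternative: all windows at once, dropping the last window
# because it has no following value to use as a label.
X_fast = sliding_window_view(values, 6)[:-1][..., np.newaxis]
y_fast = values[6:]
```

On millions of rows the vectorised version avoids building a large Python list, although I have not benchmarked it against the loop.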

3 — Model Training

The dataflow to the model. Image by author

Keras became part of TensorFlow in v2, we are using Keras for our model:

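from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dropout, Dense

model = Sequential()
model.add(LSTM(76, input_shape=(X.shape[1], 1), return_sequences=False))
model.add(Dropout(0.2))
model.add(Dense(1))
model.compile(loss="mse", optimizer='Adam')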

Sequential: Keras way for stacking our layers. For details:

Keras documentation: The Sequential model

Author: fchollet Date created: 2020/04/12 Last modified: 2020/04/12

Description: Complete guide to the Sequential…

keras.io

LSTM: The LSTM networks are well-suited for time series problems. Explaining the

details of this layer is outside the scope of this story, for details:

Illustrated Guide to LSTM’s and GRU’s: A step by step explanation

Hi and welcome to an Illustrated Guide to Long Short-Term Memory

(LSTM) and Gated Recurrent Units (GRU). I’m Michael…

towardsdatascience.com

Dropout: Is a regularisation layer, it is used for LSTM and other RNN networks to

reduce overfitting. It usually comes after every LSTM layer. More details:

How to Reduce Overfitting With Dropout Regularization in Keras Machine Learning Mastery

Last Updated on August 25, 2020 Dropout regularization is a

computationally cheap way to regularize a deep neural…

machinelearningmastery.com

I am using a single layer of LSTM that has 76 neurons. For regularisation, I used a

dropout layer of 20%. In my trial and error process of building the network, I used

multiple pairs of LSTM and Dropout layers, I tried 1, 2, 3 and 4 pairs (making the

network deeper with hidden layers), I also tried varying the number of neurons per

layer and the dropout percentage.

I landed on a single pair, which produced the lowest error and the shortest training time.

Dense: The LSTM layer has multiple neurons (76), so it produces an output of multiple

dimensions. The dense layer creates a weighted linear combination of these outputs

(with bias), which produces a single output. In our case this is the single prediction for

the next minute.
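To get a feel for the size of this network, the trainable parameters can be counted by hand. This is a sketch using the standard parameter-count formulas for an LSTM layer (four gates, each with input weights, recurrent weights and a bias) and a dense layer; the helper names are my own, and the figures should match what `model.summary()` reports.

```python
def lstm_param_count(units: int, input_dim: int) -> int:
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (units * (units + input_dim) + units)


def dense_param_count(inputs: int, outputs: int) -> int:
    """One weight per input-output pair plus one bias per output."""
    return inputs * outputs + outputs


lstm_params = lstm_param_count(units=76, input_dim=1)   # 1 feature per time step
dense_params = dense_param_count(inputs=76, outputs=1)  # single prediction
total_params = lstm_params + dense_params               # Dropout adds none

print(lstm_params, dense_params, total_params)
```

Note that the window size does not appear in the count: the same weights are reused at each of the time steps in the window.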

Training Statistics

I trained on 1-minute data from 2010–01–01 until 2020–10–01. Each epoch took around 25

minutes and 100 epochs seemed to be enough.

The test MSE (Mean Squared Error) was around 3.2e-6 and the validation loss was

around 2e-6. I tried to increase the epochs to 200 as I thought the model was

undertrained, but that didn’t reduce the test MSE. I think this difference is

because the validation set had different patterns from the test set and because

there are fewer patterns in Forex compared to other time series.

4 — Predictions

Remember that your model understands scaled log returns only, as this is what we’ve

trained it on. Now every time we want a prediction, we will have to go through this

process:

Dataflow before and after prediction. Image by author

The code below implements the process above, where X is the data acting as a

feature list:

y_pred = model.predict(X)
df['Pred_Scaled'] = np.pad(y_pred.reshape(y_pred.shape[0]),
                           (window_size, 0), mode='constant', constant_values=np.nan)
df['Pred_Returns'] = scaler.inverse_transform(df[['Pred_Scaled']].values)
df['Pred_MA'] = df['MA'].mul(np.exp(df['Pred_Returns'].shift(-1))).shift(1)

It is worth noting that to reconstruct the SMA from the returns, you will require the

initial capital. As a simple example, if I know you’ve got +£2, +£3 and -£4 returns, I

wouldn’t know your capital, but if you tell me your initial capital, say £1000, I will be

able to construct a full investment (think SMA), this will be £1002, £1005, £1001. The

last line in the code is doing so, but the returns are not arithmetic returns, so I am

using the exp function to reverse the operation.
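The capital analogy and its log-returns counterpart can be written out as a short sketch. The MA values are made up for illustration; the point is only that a starting value plus the sequence of returns is enough to rebuild the whole series.

```python
import numpy as np

# Arithmetic returns: add them cumulatively to the initial capital.
initial_capital = 1000.0
arithmetic_returns = np.array([2.0, 3.0, -4.0])
balance = initial_capital + np.cumsum(arithmetic_returns)
print(balance)  # the £1002, £1005, £1001 from the example above

# Log returns: sum them cumulatively, then exponentiate and multiply.
initial_ma = 1.28640                          # made-up starting value
ma = np.array([1.28645, 1.28650, 1.28642])    # made-up series to recover
previous = np.concatenate(([initial_ma], ma[:-1]))
log_returns = np.log(ma / previous)
rebuilt_ma = initial_ma * np.exp(np.cumsum(log_returns))
print(rebuilt_ma)
```

Because log returns add up over time, the cumulative sum followed by `exp` plays the same role that plain addition plays for arithmetic returns.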

Single-Step Prediction

This is a single-step prediction from the model:

Single Prediction (1 minute). Image by author

In the previous graph, the prediction is not far away from the SMA. This doesn’t mean

much, because we are predicting one minute only and any semi-decent model should

give a good result when it comes to this.

Multi-Step Prediction

The single step prediction is not useful for trading. You need a projection of more than

one minute, because if you plan to hold a trade for only 1 min, the commission and spread will act

against you.

One way to predict on multiple steps is to predict one minute forward, then use that

minute in a new prediction and so forth. In the next graph, I did several multi-step

predictions to visualise more than one case:

Several Multi-Step Predictions. Image by author
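The recursive approach described above can be sketched independently of the model. In this minimal example `predict_multi_step` and `toy_predictor` are hypothetical names, and the toy predictor (the window mean) merely stands in for the trained LSTM; with the real model, I assume `predict_next` would reshape the window to `(1, window_size, 1)` and call `model.predict`.

```python
import numpy as np


def predict_multi_step(predict_next, window, n_steps):
    """Predict n_steps ahead by feeding each prediction back into the window."""
    window = np.asarray(window, dtype=float).copy()
    predictions = []
    for _ in range(n_steps):
        next_value = float(predict_next(window))
        predictions.append(next_value)
        # Slide the window: drop the oldest value, append the prediction.
        window = np.append(window[1:], next_value)
    return np.array(predictions)


def toy_predictor(window):
    """Stand-in for the trained model: predicts the mean of the window."""
    return window.mean()


preds = predict_multi_step(toy_predictor, [1.0, 2.0, 3.0], n_steps=3)
print(preds)
```

Since every step after the first consumes earlier predictions rather than real prices, errors can compound as the horizon grows.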

I was inspired by Jakob Aungiers, in his article which I referenced in the More

Readings section, to have this graph with the several predictions.

There were challenges in convincing Matplotlib to draw these short red lines on the

same graph, so I used a workaround. I added to the plot a normal line, but filled it

from the start of the graph to the point where the red line starts with ‘np.nan’, the code

below is preparing the graph and doing the predictions simultaneously:

Continuing and Expanding the Research

There are more experiment concepts that I haven’t tried, due to time constraints or

hardware limitation. I am listing some here so that interested readers can expand on

this research.

Date Feature Engineering

Forex might have certain patterns depending on the date components like hour, week,

month and/or year.

In this research, I have not considered the date value, I just took the price change of

every minute as one sample. Feature engineering the date components and using

multiple data inputs (Multivariate Time Series Forecasting) might reveal more

patterns.

Minimising the Effect of Outliers

The de-trended data in Log Return have several outliers, most notably the Brexit

period outliers:

A highlight of some outliers. Image by author

I have tried other scalers that are specialised in reducing the impact of outliers but the

model training time increased three- to fourfold.

Taking the decision that this model is not suitable for economic turbulence, I would

physically exclude samples coming from the Credit Crunch, Brexit and COVID-19

lockdown periods.

Very Small Mini Batch Size

A single epoch with 32 batch size for 15 years, 1-min GBPUSD was taking around 45

min. So, running 200 epochs would take around a week (6.25 days).

Reducing the size to less than 32 might yield better predictions, but will increase the

training time.

Different Smoothing Method

I have used the simple moving average with 14 periods to smooth the price. However, the

SMA is less popular with traders than the exponential moving average (EMA),

as the EMA is more sensitive to the most recent data samples.
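For readers who want to try this variation, here is a minimal sketch comparing the two smoothers in pandas. The price series is synthetic, and the choice of `span=14` with `adjust=False` is an assumption on my part, not something tested in this research.

```python
import numpy as np
import pandas as pd

# Synthetic HLAvg-like series, invented for illustration only.
rng = np.random.default_rng(1)
hl_avg = pd.Series(1.28 + rng.normal(0, 1e-4, 50).cumsum())

sma = hl_avg.rolling(window=14).mean()
# span=14 gives a smoothing factor alpha = 2 / (14 + 1); adjust=False uses
# the recursive form, seeded with the first observation.
ema = hl_avg.ewm(span=14, adjust=False).mean()

print(int(sma.isna().sum()), int(ema.isna().sum()))
```

One practical difference is that the EMA produces a value from the first row, so no leading rows need to be deleted.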

Interval Aggregation

I have used the 1 minute time step, however, this can also be 15 sec, 30 sec, 2 min, 5

min, 1 hour, etc…

Different aggregations might be suitable for different trading styles and may reveal

more patterns.
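Coarser intervals can be derived from the existing 1-minute High/Low data without downloading anything new. This sketch reuses the eight High and Low values from the OHLC table earlier in the story; the 5-minute target interval is just an example.

```python
import pandas as pd

index = pd.date_range("2020-09-30 00:00", periods=8, freq="1min")
df = pd.DataFrame(
    {
        "High": [1.28663, 1.28675, 1.28650, 1.28648,
                 1.28647, 1.28654, 1.28655, 1.28654],
        "Low": [1.28641, 1.28649, 1.28627, 1.28626,
                1.28635, 1.28641, 1.28648, 1.28647],
    },
    index=index,
)

# The High of a longer bar is the max of its 1-minute Highs,
# and the Low is the min of its 1-minute Lows.
df_5min = df.resample("5min").agg({"High": "max", "Low": "min"})
print(df_5min)
```

Finer intervals such as 15 or 30 seconds cannot be derived this way and would have to be rebuilt from tick data.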

Sequence-to-Sequence Forecasting

I simulated multi-step predictions, beyond one, by performing multiple single

predictions. There is another interesting approach known as sequence-to-sequence

prediction or seq2seq.

In seq2seq, rather than predicting a single next value, a new sequence of variable

length is predicted. e.g.

1.2752, 1.2751, 1.2754, 1.2756 -> 1.2758, 1.2760, 1.2761

[3] seq2seq learning, at its core, uses recurrent neural networks to map variable-length input

sequences to variable-length output sequences. While relatively new, the seq2seq approach has

achieved state-of-the-art results in not only its original application — machine translation —

(Luong et al., 2015b; Jean et al., 2015a; Luong et al., 2015a; Jean et al., 2015b; Luong &

Manning, 2015), but also image caption generation (Vinyals et al., 2015b), and constituency

parsing (Vinyals et al., 2015a).

Disclaimer

These stories are meant as research on the capabilities of deep learning and are not

meant to provide any financial or trading advice. Do not use this research and/or code

with real money.

Conclusion

Although not all predictions in our multi-step predictions graph were right,

remember two things:

1. This is only the start, and this model has huge room for improvement, as

suggested in the “Continuing and Expanding The Research” section.

2. It is enough to have a certain percentage of predictions right to make a profit.
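
The second point can be illustrated with simple expected-value arithmetic. This is a sketch under simplifying assumptions: every trade is either a win or a loss of a fixed average size in pips, costs such as spread are ignored, and all numbers are hypothetical rather than results from this model.

```python
def break_even_win_rate(avg_win_pips, avg_loss_pips):
    """Fraction of winning trades needed for zero expected profit."""
    return avg_loss_pips / (avg_win_pips + avg_loss_pips)


def expected_pips_per_trade(win_rate, avg_win_pips, avg_loss_pips):
    """Average pips gained per trade for a given win rate."""
    return win_rate * avg_win_pips - (1 - win_rate) * avg_loss_pips


# Hypothetical figures: winners average 20 pips, losers average 10 pips.
print(round(break_even_win_rate(20, 10), 4))            # 0.3333
print(round(expected_pips_per_trade(0.40, 20, 10), 4))  # 2.0
```

Under these made-up figures, being right only 40% of the time would still be profitable on average, because the winners are larger than the losers.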

I feared in the beginning that the results would follow a “mean reversion” trend, where

the prediction would try to go back to the previous price average. But this wasn’t the case.

Part Two: Using the Model from a Trading Platform

In part two of this story, I will use the same model built here in a commercial

algorithmic trading platform, cTrader, to test if it is going to make a profit. I will

describe the remainder of the end-to-end approach to take this model to production. I

will run the model behind a web server that exposes a RESTful API, have the

algo trading platform request predictions in real time, and then show a profit/loss

graph like the one below:

Backtesting of profit simulation of £1000 between 24/08/2020 and 30/08/2020 using this model. To be discussed in

part 2. Captured from cTrader

Using a TensorFlow Deep Learning Model for Forex Trading

Building an algorithmic bot, in a commercial platform, to trade based on

a model’s prediction

medium.com

More Readings

Time Series Prediction Using LSTM Deep Neural Networks, a well-written article

with professional-grade code by Jakob Aungiers.

Long Short-Term Memory Networks With Python, an ebook by Jason Brownlee of

Machine Learning Mastery. This is the best-written book on the LSTM, with

pragmatic and updated Python code.

Practical Time Series Analysis, a book by Aileen Nielsen. This is the best practical

book on the subject, with one small caveat: the book swings between Python and R

from time to time.

Modern Time Series Analysis | SciPy 2019 Tutorial, on YouTube, by Aileen Nielsen.

About Me

My background is 20 years in software engineering with specialisation in finance. I

work as a software architect in the City of London and my favourite languages are C#

and Python. I have a love relationship with practical mathematics and an affair with

machine learning.

References

[1] Jason Brownlee, Long Short-Term Memory Networks With Python (2019)

[2] Dominic Masters and Carlo Luschi, Revisiting Small Batch Training for Deep Neural

Networks, arXiv:1804.07612v1 [cs.LG] 20 Apr 2018

[3] Minh-Thang Luong, Quoc V. Le, Ilya Sutskever, Oriol Vinyals, Lukasz Kaiser, Multi-task Sequence to Sequence Learning, ICLR 2016
