Question 1 :

(8p) In your opinion, which steps are compulsory when conducting Bayesian data analysis? Please explain what

steps one takes when designing models, so that we ultimately can place some confidence in the results.

You can either draw a flowchart and explain each step, or write a numbered list explaining each step. (It’s ok to

write on the backside, if they haven’t printed on the backside again. . . )

Solution: Bayesian data analysis involves several key steps to ensure that we can place confidence in the results. Here's a

numbered list of the essential steps in Bayesian data analysis:

1. Define the Research Question:

Clearly articulate the problem or question you want to address through your analysis.

2. Data Collection:

Gather relevant data that can help answer the research question.

3. Prior Belief Specification:

Define your prior beliefs or prior probability distributions about the parameters of interest. This step reflects your knowledge before observing the data.

4. Likelihood Function:

Describe the likelihood function, which quantifies how your data is generated given the model parameters.

5. Model Selection:

Choose an appropriate probabilistic model that describes the relationship between the data and the parameters. This could be a linear regression, Bayesian network, etc.

6. Bayesian Inference:

Use Bayes' theorem to update your prior beliefs with the observed data, resulting in the posterior distribution of the parameters.

7. Sampling or Computational Methods:

Depending on the complexity of the model, use sampling methods like Markov Chain Monte Carlo (MCMC) or variational inference to approximate the posterior distribution.

8. Model Checking:

Assess the model's goodness of fit by comparing its predictions to the observed data. Diagnostic tests, such as posterior predictive checks, can be helpful.

9. Sensitivity Analysis:

Explore how different choices of priors or model structures affect the results to assess the robustness of your conclusions.

10. Interpretation:

Interpret the posterior distribution to make meaningful inferences or predictions about the parameters.

11. Report Results:

Clearly communicate the results, including point estimates and credible intervals, in a way that is understandable to others.

12. Sensitivity to Assumptions:

Acknowledge the limitations and uncertainties in the analysis, especially regarding the choice of priors and model assumptions.

13. Decision or Inference:

Based on the posterior distribution and the research question, make decisions or inferences. This may involve hypothesis testing, prediction, or parameter estimation.
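
As a minimal sketch of how several of the steps above fit together (prior, likelihood, posterior, and a posterior predictive check), the Python snippet below uses grid approximation. The data (6 successes in 9 trials), the flat prior, the grid size, and the function names are all assumptions chosen for illustration; a real analysis would typically rely on MCMC rather than a grid.

```python
import math
import random

def grid_posterior(successes, trials, grid_size=1001):
    """Grid-approximate the posterior of a binomial probability p."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    prior = [1.0] * grid_size  # flat prior (an assumption for this sketch)
    likelihood = [
        math.comb(trials, successes) * p**successes * (1 - p) ** (trials - successes)
        for p in grid
    ]
    unnormalized = [lik * pr for lik, pr in zip(likelihood, prior)]
    total = sum(unnormalized)
    return grid, [u / total for u in unnormalized]

def posterior_predictive(grid, posterior, trials, draws=2000, seed=1):
    """Simulate new success counts from posterior draws of p (model check)."""
    rng = random.Random(seed)
    p_draws = rng.choices(grid, weights=posterior, k=draws)
    return [sum(rng.random() < p for _ in range(trials)) for p in p_draws]

# Hypothetical data: 6 successes in 9 trials.
grid, posterior = grid_posterior(successes=6, trials=9)
posterior_mean = sum(p * w for p, w in zip(grid, posterior))
simulated = posterior_predictive(grid, posterior, trials=9)
```

Comparing the distribution of `simulated` with the observed count is a simple posterior predictive check: if the observed value sits far in the tail of the simulations, the model is suspect.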

Question 2 :

(12p) Underfitting and overfitting are two concepts not always stressed a lot with black-box machine learning

approaches. In this course, however, you’ve probably heard me talk about these concepts a hundred times. . .

Multilevel models can be one way to handle overfitting, i.e., employing partial pooling. Please design (write down)

three models. The first one should use complete pooling, the second one should employ no pooling, and the

final one should use partial pooling. (Remember to use math notation!) (9p)

Explain the different behaviors of each model. (3p)

Solution:

This question can be answered in many different ways. Here’s one way.

complete pooling

yi ∼ Binomial(n, pi)

logit(pi) = α

α ∼ Normal(0, 2.5)

no pooling

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(0, 2)

partial pooling using hyper-parameters and hyper-priors

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(ᾱ, σ)

ᾱ ∼ Normal(0, 1)

σ ∼ Exponential(1)

Complete Pooling (Pooled Model):

All clusters share a single intercept α. This model assumes that all clusters are essentially identical in terms of the parameter of interest. It is useful when there's strong prior belief that the data is highly homogeneous. However, it ignores variation between clusters, potentially leading to underfitting.

No Pooling (Unpooled Model):

Each cluster j has its own intercept αj with a fixed prior, offering the most flexibility. This model can capture variation between clusters but may lead to overfitting, especially when a cluster has limited data. It does not leverage information from other clusters.

Partial Pooling (Hierarchical Model):

Each cluster j again has its own intercept αj, but the intercepts are drawn from a common distribution, Normal(ᾱ, σ), whose parameters are themselves learned from the data. This model balances between the extremes of complete pooling and no pooling. It allows information to be shared across clusters, shrinking the estimates for clusters with little data toward the overall mean, which reduces overfitting while still accommodating variation between clusters. It is a good choice when there is some heterogeneity in the data.
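
The different behaviors can be sketched numerically. The snippet below is only an illustration under stated assumptions: the cluster data are hypothetical, and the partial-pooling step uses a conjugate Beta-Binomial stand-in with a fixed `strength` value instead of the logit-normal model with hyper-priors written above (in the real multilevel model the amount of shrinkage is learned from the data).

```python
# Hypothetical data: (successes, trials) per cluster; small clusters are noisy.
clusters = [(1, 2), (9, 10), (30, 60), (0, 3)]

total_successes = sum(y for y, n in clusters)
total_trials = sum(n for y, n in clusters)

# Complete pooling: one shared probability for every cluster.
complete = total_successes / total_trials

# No pooling: each cluster is estimated from its own data only.
no_pooling = [y / n for y, n in clusters]

# Partial pooling (Beta-Binomial stand-in): shrink each cluster toward the
# pooled estimate. `strength` acts like a prior sample size and is an
# assumed, fixed value in this sketch.
strength = 10.0
a = complete * strength
b = (1 - complete) * strength
partial = [(y + a) / (n + a + b) for y, n in clusters]

for (y, n), raw, shrunk in zip(clusters, no_pooling, partial):
    print(f"n={n:2d}  no pooling={raw:.3f}  partial={shrunk:.3f}  complete={complete:.3f}")
```

Every partially pooled estimate lies between the no-pooling and complete-pooling estimates, and the clusters with the fewest trials move the furthest toward the pooled value.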

Question 2.1:

(9p) In Bayesian data analysis we have three principled ways of avoiding overfitting.

The first way makes sure that the model doesn’t get too excited by the data. The second way is to use some type of scoring

device to model the prediction task and estimate predictive accuracy. The third, and final way is to, if suitable data is at hand,

design models that actually do something about overfitting.

Which are the three ways? (3p)

Explain and provide examples for each of the three ways (2p+2p+2p)

Paper Solution: In the first way we use regularizing priors to capture the regular features of the data. In particular it might be

wise to contrast this with sample size!
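
The contrast between a regularizing prior and sample size can be sketched with a conjugate Normal model. This is an illustrative assumption, not part of the paper solution: the observation noise `sigma` is treated as known, the prior is Normal(0, `prior_sd`), and the sample mean of 3.0 is made up.

```python
def posterior_mean(sample_mean, n, sigma=1.0, prior_sd=1.0):
    """Posterior mean of a Normal mean with known sigma and a Normal(0, prior_sd) prior."""
    data_precision = n / sigma**2
    prior_precision = 1 / prior_sd**2
    return data_precision * sample_mean / (data_precision + prior_precision)

for n in (2, 20, 200):
    nearly_flat = posterior_mean(3.0, n, prior_sd=100.0)  # barely regularizes
    regularizing = posterior_mean(3.0, n, prior_sd=0.5)   # skeptical of large values
    print(n, round(nearly_flat, 3), round(regularizing, 3))
```

With few observations the regularizing prior pulls the estimate strongly toward zero; as the sample size grows, the data dominate and both priors give nearly the same answer.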

In the second way we rely on information theory (entropy and max ent distributions) and the concept of information criteria,

e.g., BIC, DIC, AIC, LOO, and WAIC.

For the final, third way, the below is a good answer:

complete pooling

yi ∼ Binomial(n, pi)

logit(pi) = α

α ∼ Normal(0, 2.5)

no pooling

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(0, 2)

partial pooling using hyper-parameters and hyper-priors

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(ᾱ, σ)

ᾱ ∼ Normal(0, 1)

σ ∼ Exponential(1)

Solution:

Three Ways to Avoid Overfitting in Bayesian Data Analysis:

Regularization:

Explanation: Regularization techniques are used to prevent models from becoming overly complex or "too excited" by the data.

They introduce constraints on the model parameters to discourage extreme values and reduce overfitting.

Example: In Bayesian regression, regularization comes from the prior rather than from an explicit penalty term added to the likelihood. A Normal prior centered on zero for the coefficients corresponds to L2 regularization (Ridge), and a Laplace prior corresponds to L1 regularization (Lasso); both encourage model parameters to remain close to zero. For example, a narrow Normal prior on the regression coefficients in linear regression discourages the model from assigning excessive importance to any single predictor.

Model Scoring and Cross-Validation:

Explanation: Scoring devices and cross-validation are used to assess the predictive accuracy of a model. These techniques

evaluate how well a model generalizes to unseen data, helping to identify overfitting.

Example: Cross-validation involves splitting the data into training and testing sets multiple times and assessing the model's

performance on the testing data. If a model performs exceptionally well on the training data but poorly on the testing data, it

may be overfitting. Common metrics for scoring include mean squared error, log-likelihood, or the widely used Watanabe-Akaike

Information Criterion (WAIC) in Bayesian analysis.

Hierarchical or Multilevel Models:

Explanation: Hierarchical or multilevel models are designed to address overfitting by explicitly modeling the structure of the

data, accounting for variability at different levels. These models can capture data patterns while controlling for overfitting.

Example: In education research, a multilevel model can be used to analyze student performance in different schools. The model

considers both school-level and student-level factors. By incorporating school-level effects, the model accounts for variations

between schools while still capturing individual student characteristics. This helps prevent overfitting and provides more robust

inferences.

complete pooling

yi ∼ Binomial(n, pi)

logit(pi) = α

α ∼ Normal(0, 2.5)

no pooling

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(0, 2)

partial pooling using hyper-parameters and hyper-priors

yi ∼ Binomial(n, pi)

logit(pi) = αCLUSTER[j]

αj ∼ Normal(ᾱ, σ)

ᾱ ∼ Normal(0, 1)

σ ∼ Exponential(1)

These three ways provide a principled approach to mitigating overfitting in Bayesian data analysis. Regularization constrains

parameter estimates, model scoring helps evaluate predictive accuracy, and hierarchical models account for data structure to

prevent overfitting and improve the robustness of inferences.
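
To make the "scoring device" idea more concrete, here is a hedged sketch of how WAIC can be computed from pointwise log-likelihoods: the log pointwise predictive density (lppd) minus a penalty given by the posterior variance of each observation's log-likelihood. The Bernoulli data are hypothetical, the function name is made up, and the posterior draws come from a conjugate Beta shortcut instead of MCMC.

```python
import math
import random

def waic_bernoulli(observations, posterior_draws):
    """Return (WAIC, lppd, p_waic) for Bernoulli data and posterior draws of p."""
    lppd = 0.0
    p_waic = 0.0
    for y in observations:
        log_liks = [math.log(p if y == 1 else 1 - p) for p in posterior_draws]
        # lppd: log of the posterior-averaged likelihood of this observation.
        lppd += math.log(sum(math.exp(ll) for ll in log_liks) / len(log_liks))
        # Penalty: posterior variance of the log-likelihood of this observation.
        mean_ll = sum(log_liks) / len(log_liks)
        p_waic += sum((ll - mean_ll) ** 2 for ll in log_liks) / (len(log_liks) - 1)
    return -2 * (lppd - p_waic), lppd, p_waic

rng = random.Random(42)
observations = [1, 1, 0, 1, 0, 1, 1, 1, 0]  # hypothetical data
successes = sum(observations)
failures = len(observations) - successes
# Conjugate shortcut: a flat Beta(1, 1) prior gives a Beta posterior to sample from.
posterior_draws = [rng.betavariate(1 + successes, 1 + failures) for _ in range(4000)]
waic, lppd, p_waic = waic_bernoulli(observations, posterior_draws)
```

Lower WAIC indicates better expected out-of-sample prediction; in practice the value is compared across candidate models fitted to the same data.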

Question 3 :

(5p) What is epistemological justification and how does it differ from ontological justification, when we design models and

choose likelihoods/priors? Please provide an example where you list epistemological and ontological reasons for a likelihood.

Solution:

Epistemological Justification:

Epistemological justification is concerned with the epistemological beliefs and knowledge that we have about the data and the

underlying system we are modeling. It relates to our understanding of how data is generated and what we know or believe about

it based on existing information or expertise. In other words, epistemological justification is about what we know and what we

think we know about the problem at hand.

Example of Epistemological Justification for Likelihood:

Suppose you are modeling the height of a certain plant species. Your epistemological justification for the likelihood function

might involve:

Knowledge that plant heights typically follow a normal distribution based on previous studies or domain expertise.

Understanding that external factors like soil quality and sunlight influence plant height, which leads you to choose a normal distribution because it is appropriate for capturing these influences.

Ontological Justification:

Ontological justification, on the other hand, is concerned with the ontological assumptions about the underlying nature of the

system. It pertains to what is true or exists in reality, independent of our beliefs or knowledge. Ontological justification is about

the structure of the world, the actual physical or statistical processes generating the data, and the properties of the system we

are modeling.

Example of Ontological Justification for Likelihood:

Continuing with the plant height example, ontological justification for the likelihood might involve:

The physical properties of plant growth and genetic factors that lead you to consider that the true distribution of plant heights

should be normal.

The belief that the data generation process itself follows certain underlying physical laws, and that these laws imply a normal

distribution of plant heights.

In summary, the key difference between epistemological and ontological justification is in the source and nature of the

justifications:

Epistemological justification is based on our knowledge, beliefs, and understanding of the data and the problem. It reflects what

we think we know.

Ontological justification is grounded in the actual nature of the world and the system we are modeling. It reflects what is true or

exists independently of our beliefs.

Paper Solution: Epistemological, rooted in information theory and the concept of maximum entropy distributions.

Ontological, the way nature works, i.e., a physical assumption about the world.

One example could be the Normal likelihood for real (continuous) numbers where we have additive noise in

the process (ontological), and where we know that in such cases Normal is the maximum entropy distribution.
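
The maximum entropy claim can be checked numerically. The sketch below compares the closed-form differential entropies of three distributions that share the same standard deviation; the choice of Uniform and Laplace as comparison distributions is an assumption made for illustration.

```python
import math

def normal_entropy(sd):
    return 0.5 * math.log(2 * math.pi * math.e * sd**2)

def uniform_entropy(sd):
    width = sd * math.sqrt(12)  # a Uniform of this width has variance sd**2
    return math.log(width)

def laplace_entropy(sd):
    scale = sd / math.sqrt(2)   # Laplace variance is 2 * scale**2
    return 1 + math.log(2 * scale)

entropies = {
    "normal": normal_entropy(1.0),
    "uniform": uniform_entropy(1.0),
    "laplace": laplace_entropy(1.0),
}
most_conservative = max(entropies, key=entropies.get)
```

Among these candidates the Normal has the largest entropy for a fixed variance, which is the epistemological argument: it assumes the least beyond a finite variance.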

Question 4 :

(8p) List and explain the four main benefits of using multilevel models.

Paper Solution:

• Improved estimates for repeat sampling. When more than one observation arises from the same individual,

location, or time, then traditional, single-level models either maximally underfit or overfit the

data.

• Improved estimates for imbalance in sampling. When some individuals, locations, or times are sampled

more than others, multilevel models automatically cope with differing uncertainty across these clusters.

This prevents over-sampled clusters from unfairly dominating inference.

• Estimates of variation. If our research questions include variation among individuals or other groups

within the data, then multilevel models are a big help, because they model variation explicitly.

• Avoid averaging, retain variation. Frequently, scholars pre-average some data to construct variables.

This can be dangerous, because averaging removes variation, and there are also typically several different

ways to perform the averaging. Averaging therefore both manufactures false confidence and introduces

arbitrary data transformations. Multilevel models allow us to preserve the uncertainty and avoid data

transformations.

Solution:

Handling Hierarchical Data Structures:

Multilevel models are well-suited for analyzing data with hierarchical or nested structures, such as students within schools,

patients within hospitals, or measurements taken over time. These models account for the correlation and variability at multiple

levels in the data. For example, in educational research, you can use multilevel models to assess both individual student

performance and school-level effects on academic achievement.

Improved Precision and Efficiency:

By modeling data hierarchically, multilevel models can often provide more precise and efficient parameter estimates compared

to traditional linear models. They "borrow strength" from higher-level units to estimate parameters for lower-level units. This

reduces the risk of overfitting and provides stable estimates, particularly when there are few observations per group. This is

particularly useful in scenarios with limited data at lower levels of the hierarchy.

Handling Variation and Heterogeneity:

Multilevel models account for variation within and between groups or clusters. They allow for the estimation of both fixed effects

(global trends) and random effects (group-specific deviations from those trends). This is useful for capturing heterogeneity in the

data, as it acknowledges that not all groups or individuals are identical. For example, in medical studies, random effects can

capture the differences in patient responses to treatments.

Modeling Changes Over Time:

Multilevel models can handle longitudinal or repeated measures data effectively. They allow for the modeling of temporal

changes within subjects or entities while also considering the variation between subjects. This is vital in various fields, such as

clinical trials, where patients are monitored over time, or in social sciences to study individual trajectories of development.

Question 5 :

(6p) Below you see a Generalized Linear Model

yi ∼ Poisson(λ)

f(λ) = α + βxi

What is f(), and why is it needed? (2p)

Provide at least two examples of f() and when you would use them? (4p)

Paper Solution: Generalized linear models need a link function, because rarely is there a “μ”, a parameter describing the

average outcome, and rarely are parameters unbounded in both directions, like μ is. For example, the shape of the binomial

distribution is determined, like the Gaussian, by two parameters. But unlike the Gaussian, neither of these parameters is the

mean. Instead, the mean outcome is np, which is a function of both parameters. For Poisson we have λ which is both the mean

and the variance. The link function f provides a solution to this common problem. A link function’s job is to map the linear space

of a model like α + βxi onto the non-linear space of a parameter!

Solution: In the context of the given Generalized Linear Model (GLM), the function "f()" is the link function. It's a fundamental

component of GLMs and is needed to establish a connection between the linear combination of predictors (α + βxi) and the

mean parameter (λ) of the Poisson distribution. The link function transforms the linear predictor to ensure that it is within a

valid range and adheres to the assumptions of the distribution.

Two common link functions in GLMs are the identity link and the log link:

Identity Link (f(λ) = λ):

In this case, the linear combination (α + βxi) is directly used as the mean parameter (λ) of the Poisson distribution. This implies that the mean of the response variable (yi) is linearly related to the predictors without any transformation. Note that nothing then prevents α + βxi from becoming negative, which is not a valid value for λ.

Use when modeling count data where the predictor variables have a linear and additive effect on the mean response. For

example, in modeling the number of customer calls as a function of time spent on the website, an identity link could be

appropriate if you believe that each additional minute spent directly increases the number of calls.

Log Link (f(λ) = log(λ)):

Here, the natural logarithm of λ is set equal to the linear combination (α + βxi), so that λ = exp(α + βxi) is always positive. This transformation is often used when modeling data with multiplicative relationships.

Use when dealing with count data where you expect a multiplicative effect of predictors on the mean response. For example, in

epidemiology, when modeling disease rates, a log link might be suitable because it captures relative changes (multiplicative)

rather than absolute changes.

Other common link functions used in GLMs include the logit link for binary data (e.g., logistic regression) and the inverse link for

gamma-distributed data, among others.
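
A small sketch shows why the log link is the usual choice for a Poisson rate. The coefficient values and function names are hypothetical and only chosen so that the linear predictor crosses zero.

```python
import math

def poisson_rate_log_link(alpha, beta, x):
    """Inverse of the log link: lambda = exp(alpha + beta * x), always positive."""
    return math.exp(alpha + beta * x)

def poisson_rate_identity_link(alpha, beta, x):
    """Identity link: lambda = alpha + beta * x, which can become negative."""
    return alpha + beta * x

alpha, beta = 1.0, -0.5  # hypothetical coefficients
xs = [0, 2, 4, 6]
log_rates = [poisson_rate_log_link(alpha, beta, x) for x in xs]
identity_rates = [poisson_rate_identity_link(alpha, beta, x) for x in xs]
```

With the log link every rate stays positive, whereas the identity link produces negative values for larger `x`, which are not valid Poisson rates.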

Question 5.1:

(4p) Below you see a Generalized Linear Model

yi ∼ Binomial(m, pi)

f(pi) = α + βxi

What is f(), and How does it work? Provide at least two examples of f().

Paper Solution:

Generalized linear models need a link function, because rarely is there a “µ”, a parameter describing the average outcome, and

rarely are parameters unbounded in both directions, like µ is. For example, the shape of the binomial distribution is determined,

like the Gaussian, by two parameters. But unlike the Gaussian, neither of these parameters is the mean. Instead, the mean

outcome is np, which is a function of both parameters.

Since n is usually known (but not always), it is most common to attach a linear model to the unknown part,

p. But p is a probability mass, so pi must lie between zero and one. But there’s nothing to stop the linear model α + βxi from

falling below zero or exceeding one.

The link function f provides a solution to this common problem. A link function’s job is to map the linear space of a model like α

+ βxi onto the non-linear space of a parameter.

Solution:

In the context of a Generalized Linear Model (GLM), the function f() is called the link function. It plays a crucial role in connecting

the linear predictor with the mean of the response variable, which is a non-linear relationship. The link function transforms the

linear combination of predictors into a range that is suitable for modeling the response variable. There are various link functions

available in GLMs, and the choice of the link function depends on the nature of the data and the specific modeling requirements.

Two common examples of link functions and how they work are as follows:

Logit Link Function (f(π) = log(π / (1 - π))):

• This link function is often used when modeling binary or binomial response data, where π represents the probability of success (in a binary outcome) or the probability of an event occurring (in a binomial count).

• The logit link function maps the probability π from the (0, 1) interval onto the log-odds scale, which ranges from negative infinity to positive infinity. The linear predictor (α + βxi) lives on that unbounded scale, and the inverse logit maps it back to a valid probability.

• Example: Logistic regression models often use the logit link function to model the probability of a binary event, such as the probability of a customer making a purchase (success/failure) based on predictor variables like age, income, and gender.

Identity Link Function (f(π) = π):

• The identity link function leaves the parameter untransformed, so the linear predictor (α + βxi) is used directly as the conditional mean of the response variable. For a Binomial model this is risky, because nothing keeps α + βxi inside the (0, 1) interval.

• The identity link function essentially maintains the linear relationship between the predictors and the response without any transformation. It is the usual choice in simple linear regression, where the response variable is continuous and can take any real value.

• Example: In a linear regression model, the identity link function is used when modeling the relationship between a predictor variable (e.g., temperature) and a continuous response variable (e.g., sales volume) to predict the expected sales volume based on temperature.

The choice of the link function in a GLM is crucial for modeling the response variable effectively. Different link functions are

suitable for different types of data, and they ensure that the relationship between predictors and the response is appropriately

captured. The link function transforms the linear predictor into a form that aligns with the distribution and characteristics of the

response variable, allowing for meaningful modeling and interpretation.
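
As a short illustration of how the logit link and its inverse work, the sketch below maps a linear predictor onto the probability scale and back. The coefficient values are hypothetical.

```python
import math

def logit(p):
    """Link: probability in (0, 1) -> log-odds on the whole real line."""
    return math.log(p / (1 - p))

def inv_logit(eta):
    """Inverse link: any real-valued linear predictor -> probability in (0, 1)."""
    return 1 / (1 + math.exp(-eta))

alpha, beta = -1.0, 0.8  # hypothetical coefficients
probabilities = [inv_logit(alpha + beta * x) for x in (-10, 0, 10)]
```

However extreme the predictor value, the resulting probability stays strictly between zero and one, and applying `logit` to it recovers the linear predictor.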

Question 5.2:

(5p) Below you see a Generalized Linear Model

yi ∼ Binomial(n, p)

f(p) = α + βxi

What is f () called and why is it needed? (2p) What should f () be in this case? (1p)

Does f () somehow affect your priors? If yes, provide an example. (2p)

Paper Solution:

Generalized linear models need a link function, because rarely is there a “ µ”, a parameter describing the average outcome, and

rarely are parameters unbounded in both directions, like µ is. For example, the shape of the binomial distribution is determined,

like the Gaussian, by two parameters. But unlike the Gaussian, neither of these parameters is the mean. Instead, the mean

outcome is np, which is a function of both parameters. For Poisson we have λ which is both the mean and the variance.

The link function f provides a solution to this common problem. A link function’s job is to map the linear space of a model like α +

βxi onto the non-linear space of a parameter!

In the above case the link function is the logit(). If you set priors and use a link function, they often concentrate probability mass in unexpected ways.

Solution:

What is f() and Why is it Needed?

• f() in this context is the link function, and it's needed to transform the linear combination of predictors into a valid

probability value for the response variable. It links the linear predictor to the range of the response variable. The link

function is essential in Generalized Linear Models (GLMs) because it ensures that the predicted probabilities lie within

the (0, 1) range, which is necessary for modeling binary or count data.

What Should f() Be in this Case?

• In this case, since the response variable follows a Binomial distribution with parameters n (the number of trials) and p

(the probability of success in each trial), the appropriate link function should be the logit link function. The logit link

function transforms the linear predictor to the log-odds scale, ensuring that the predicted probabilities are within the (0,

1) range.

Does f() Affect Your Priors? Provide an Example.

• Yes, the choice of the link function can affect your choice of priors. The link function influences the interpretation of the

model coefficients and the prior distribution for the coefficients. For example, if you use the logit link function, which

models the log-odds of success, your choice of priors for α and β might be based on prior knowledge of log-odds or logistic

regression coefficients. The link function impacts the scale and meaning of the coefficients, which can guide your prior

specifications.

• Suppose you are modeling the probability of a customer making a purchase (purchasing vs. not purchasing) based on their age (xi) using a Binomial distribution. If you choose the logit link function, your priors for α and β might reflect prior information about the log-odds of making a purchase based on age. For instance, you might specify a Normal prior for β with a mean reflecting your prior belief about the effect of age on the log-odds of purchasing.

The choice of the link function should align with the characteristics of your data and the interpretability of the model, and it can

influence the specification of prior distributions for model parameters.
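
How a prior can concentrate probability mass in unexpected ways once it passes through the logit link can be sketched with a prior predictive simulation. The two prior standard deviations (10 and 1.5) and the 0.01/0.99 cut-offs are assumptions chosen for illustration.

```python
import math
import random

def share_of_extreme_probabilities(prior_sd, draws=10000, seed=7):
    """Share of prior draws of alpha whose implied probability is below 0.01 or above 0.99."""
    rng = random.Random(seed)
    extreme = 0
    for _ in range(draws):
        alpha = rng.gauss(0.0, prior_sd)   # prior on the log-odds scale
        p = 1 / (1 + math.exp(-alpha))     # implied prior on the probability scale
        if p < 0.01 or p > 0.99:
            extreme += 1
    return extreme / draws

wide = share_of_extreme_probabilities(prior_sd=10.0)
narrow = share_of_extreme_probabilities(prior_sd=1.5)
```

A seemingly "uninformative" wide Normal prior on α puts most of its mass on probabilities very close to zero or one, while the narrower prior spreads mass far more evenly over the (0, 1) interval.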

Question 6 :

(6p) What are the purpose and limitations of using Laboratory Experiments and Field Experiments as a research

strategy? Provide examples, i.e., methods for each of the two categories, and clarify if one uses mostly qualitative

or quantitative approaches (or both).

Paper Solution:

Laboratory Experiments: Real actors’ behavior

Non-natural settings (contrived)

The setting is more controlled

Obtrusiveness: High level of obtrusiveness

Goal:

Minimize confounding factors and extraneous conditions

Maximize the precision of the measurement of the behavior

Establish causality between variables

Limited number of subjects

Limits the generalizability

Unrealistic context

Examples of research methods: Randomized controlled experiments and quasi-experiments, Benchmark studies

Mostly quantitative data

Field Experiments: Natural settings.

Obtrusiveness: Researcher manipulates some variables/properties to observe an effect. Researcher does not

control the setting.

Goal: Investigate/Evaluate/Compare techniques in concrete and realistic settings.

Limitations: Low statistical generalizability (claim analytical generalizability!) Fairly low precision of the

measurement of the behavior (confounding factors).

Not the same as an experimental study (changes are made and results observed), but does not have control

over the natural setting.

Correlation observed but not causation.

Examples: Evaluative case study, Quasi-experiment, and Action research.

Often qualitative and quantitative.

Solution:

Laboratory Experiments:

Purpose: Laboratory experiments are controlled research studies conducted in a controlled environment (laboratory) to

investigate causal relationships between variables. The purpose is to establish cause-and-effect relationships by manipulating

independent variables and observing their impact on dependent variables under controlled conditions.

Limitations:

Artificial Environment: Laboratory experiments may suffer from artificiality, as the controlled environment may not fully

represent real-world settings.

Limited Generalizability: Findings from lab experiments may not easily generalize to real-world situations.

Ethical Concerns: Some experiments may pose ethical challenges when manipulating variables, especially in social and

psychological research.

Examples: Randomized controlled experiments, quasi-experiments, and benchmark studies.

Qualitative or Quantitative? Both approaches can be used in laboratory experiments, depending on the research question. For

instance, in a psychological study on the impact of stress on memory, quantitative measures such as reaction times and memory

scores could be collected, and qualitative interviews might explore participants' experiences.

Field Experiments:

Purpose: Field experiments are conducted in real-world settings to study the effects of manipulated variables on a target

population. The purpose is to increase the external validity of research by observing phenomena in natural contexts with less

control than laboratory experiments.

Limitations:

Less Control: Field experiments have less control over extraneous variables, making it challenging to establish causality.

Logistical Challenges: Conducting experiments in real-world settings can be logistically complex and expensive.

Ethical and Privacy Concerns: Researchers must consider ethical issues and privacy concerns when conducting field experiments,

especially in social and behavioral sciences.

Examples: Evaluative case studies, quasi-experiments, and action research.

Qualitative or Quantitative? Both approaches can be used in field experiments. For instance, a field experiment in public health

assessing the effectiveness of a smoking cessation program may use quantitative data (e.g., reduction in smoking rates) and

qualitative data (e.g., participant interviews) to gain a comprehensive understanding of the program's impact.

Question 7 :

(8p) Below follows an abstract from a research paper. Answer the questions,

• Which of the eight research strategies presented in the ABC framework does this paper likely fit? Justify

and argue!

• Can you argue the main validity threats of the paper, based on the research strategy you picked?

– It would be very good if you can list threats in the four common categories we usually work with in software

engineering, i.e., internal, external, construct, and conclusion validity threats.

Context: The term technical debt (TD) describes the aggregation of sub-optimal solutions that serve to

impede the evolution and maintenance of a system. Some claim that the broken windows theory (BWT),

a concept borrowed from criminology, also applies to software development projects. The theory states

that the presence of indications of previous crime (such as a broken window) will increase the likelihood

of further criminal activity; TD could be considered the broken windows of software systems.

Objective: To empirically investigate the causal relationship between the TD density of a system and

the propensity of developers to introduce new TD during the extension of that system.

Method: The study used a mixed-methods research strategy consisting of a controlled experiment

with an accompanying survey and follow-up interviews. The experiment had a total of 29 developers of

varying experience levels completing system extension tasks in already existing systems with high

or low TD density. The solutions were scanned for TD, both manually and automatically. Six of the

subjects participated in follow-up interviews, where the results were analyzed using thematic analysis.

Result: The analysis revealed significant effects of TD level on the subjects’ tendency to re-implement

(rather than reuse) functionality, choose non-descriptive variable names, and introduce other code smells

identified by the software tool SonarQube, all with at least 95% credible intervals. Additionally, the

developers appeared to be, at least partially, aware of when they had introduced TD.

Conclusion: Three separate significant results along with a validating qualitative result combine to

form substantial evidence of the BWT’s applicability to software engineering contexts. This study finds

that existing TD has a major impact on developers' propensity to introduce new TD of various types

during development. While mimicry seems to be part of the explanation, it cannot alone describe the

observed effects.

Paper Solution: This is a mixed method study, i.e., an experiment (but not an RCT!), but where we also see qualitative parts, like

from a field study.

Solution:

Research Strategy:

Based on the information provided in the abstract, the paper most likely fits the "Laboratory Experiment" strategy of the ABC framework, carried out as part of a mixed-methods design that adds qualitative parts.

This strategy is supported by the following evidence:

The study explicitly mentions using "a controlled experiment with an accompanying survey and follow-up interviews." This

indicates the integration of both quantitative (controlled experiment) and qualitative (survey and interviews) methods in the

research.

Justification:

The mixed-methods approach combines both quantitative and qualitative research techniques to provide a more comprehensive

understanding of the research problem. In this case, the paper incorporates controlled experiments to gather quantitative data

about technical debt (TD) and its effects on developers' behavior. It also employs surveys and follow-up interviews to gather

qualitative insights from the developers. This combination of methods helps validate the results and provides a richer context for

understanding the causal relationship between TD density and developers' actions.

Validity Threats:

The main validity threats in the paper can be categorized into four common categories used in software engineering research:

Internal Validity Threats:

• Selection Bias: The choice of 29 developers with varying experience levels could introduce selection bias. Developers

with different levels of experience may respond differently to TD, affecting the internal validity of the study.

• Experimenter Effects: Any influence or bias introduced by the experimenters during the controlled experiment or

interviews could impact the internal validity.

External Validity Threats:

• Population Generalizability: The results from a specific set of developers may not generalize to all software development

contexts. This threatens the external validity of the findings.

• Contextual Generalizability: The study's context (high or low TD density systems) may not represent the diversity of

software development projects, impacting the external validity.

Construct Validity Threats:

• Operationalization of TD: How TD is defined, measured, and categorized can be a construct validity threat. In this case,

the paper mentions scanning for TD both manually and automatically, which may introduce variations in the

measurement.

• Measurement of Developer Behavior: The paper discusses various behaviors of developers, such as re-implementing

functionality and choosing non-descriptive variable names. The construct validity depends on the accurate

measurement of these behaviors.

Conclusion Validity Threats:

• Multiple Comparisons: To ensure that the results are not spurious, the paper should address the issue of multiple

comparisons due to examining multiple outcomes (e.g., re-implementing functionality, choosing variable names,

introducing code smells).

• Extraneous Variables: The presence of uncontrolled variables that may confound the results, such as other external

factors affecting developers' decisions, could threaten the conclusion validity.

In summary, the mixed-methods research approach in the paper offers a holistic view of the research problem but introduces

various validity threats that researchers should consider when interpreting the results and generalizing them to broader software

engineering contexts.

Question 7.1:

(6p) Below follows an abstract from a research paper. Answer the questions,

• Which of the eight research strategies presented in the ABC framework does this paper likely fit? Justify and argue!

• Can you argue the main validity threats of the paper, based on the research strategy you picked?

– It would be very good if you can list threats in the four common categories we usually work with in software

engineering, i.e., internal, external, construct, and conclusion validity threats.

Software engineering is a human activity. Despite this, human aspects are under-represented in technical debt research, perhaps

because they are challenging to evaluate.

This study’s objective was to investigate the relationship between technical debt and affective states (feelings, emotions, and

moods) from software practitioners. Forty participants (N = 40) from twelve companies took part in a mixed-methods approach,

consisting of a repeated-measures (r = 5) experiment (n = 200), a survey, and semi-structured interviews.

The statistical analysis shows that different design smells (strong indicators of technical debt) negatively or positively impact

affective states. From the qualitative data, it is clear that technical debt activates a substantial portion of the emotional spectrum

and is psychologically taxing. Further, the practitioners’ reactions to technical debt appear to fall in different levels of maturity.

We argue that human aspects in technical debt are important factors to consider, as they may result in, e.g., procrastination,

apprehension, and burnout.

Solution:

Research Strategy:

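This research paper is a mixed-methods study. "Mixed methods" is not itself one of the eight ABC strategies, so the paper is best described as a combination: its core is the repeated-measures experiment, complemented by a survey (closest to a sample study) and interviews (a qualitative part, as in a field study). Here's the justification and

argument: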

• The paper mentions using a mixed-methods approach, which combines quantitative (experiment and survey) and

qualitative (semi-structured interviews) data collection methods.

• The objective is to investigate the relationship between technical debt and affective states, a complex phenomenon that

benefits from both quantitative and qualitative insights.

• The use of both experimental and interview data aligns with the mixed-methods strategy's focus on gaining a

comprehensive understanding of the research question.

Validity Threats:

Validity threats in this study can be categorized into four common categories in software engineering research:

Internal Validity Threats:

• Selection bias: The choice of participants from specific companies may not represent the entire software engineering

population.

• History effects: Changes in participants' affective states over time may confound the results, particularly in the repeated-measures experiment.

External Validity Threats:

• Generalizability: Findings from 12 companies may not generalize to the broader software engineering community.

• Interaction effects: The impact of technical debt on affective states may vary based on company-specific factors.

Construct Validity Threats:

• Measurement validity: Ensuring that the instruments used to measure affective states and technical debt are accurate

and reliable.

• Construct underrepresentation: The study acknowledges that human aspects in technical debt are challenging to

evaluate, indicating potential limitations in capturing the full scope of the construct.

Conclusion Validity Threats:

• Observer bias: Interviewers or data analysts' preconceived notions or biases may affect the interpretation of qualitative

data.

• Ecological validity: The study may face challenges in capturing the complexity of real-world software development

environments in a controlled experiment.

In summary, this study falls under the mixed-methods research strategy and demonstrates a focus on understanding the

relationship between technical debt and affective states among software practitioners. To enhance the study's validity,

researchers should address threats related to participant selection, generalizability, measurement validity, and potential biases in

interpreting data. The complexity of human aspects in technical debt research adds to the challenges in construct validity and

ecological validity.

Question 8 :

(6p)

In the paper Guidelines for conducting and reporting case study research in software engineering by Runeson &

Höst the authors present an overview of research methodology characteristics. They present their claims in three

dimensions: Primary objective, primary data, and design.

Primary objective indicates what the purpose is with the methodology (e.g., explanatory), primary data indicates

what type of data we mainly see when using the methodology (e.g., qualitative), while design indicates how flexible

the methodology is from a design perspective (e.g., flexible)

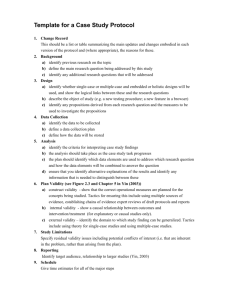

Please fill out the table below according to the authors’ presentation.

| Methodology | Primary objective | Primary data | Design |
|---|---|---|---|
| Survey | Descriptive | Quantitative | Fixed |
| Case study | Exploratory | Qualitative | Flexible |
| Experiment | Explanatory | Quantitative | Fixed |
| Action research | Improving | Qualitative | Flexible |

Question 9 :

(4p) See the DAG below. We want to estimate the total causal effect of Screen time on Obesity.

Design a model where Obesity is approximately distributed as a Gaussian likelihood, and then write down a linear

model for μ to clearly show which variable(s) we should condition on.

Also add, what you believe to be, suitable priors on all parameters. If needed to you can always state your

assumptions.

[Figure: DAG with the nodes Parental Education, Screen time, Physical Activity, Obesity, and Self-harm]

Paper Solution: 1 p for correct conditioning, 3p for appropriate model as further down below.

> library(dagitty)
> dag <- dagitty("dag {
PE -> O
S -> PA -> O
PE -> S -> SH
O -> SH
}")
> adjustmentSets(dag, exposure = "S", outcome = "O")
{ PE }

and the answer is { PE }, i.e., Parental education. In short, check what elementary confounds we have in

the DAG (DAGs can only contain four), follow the paths, decide what to condition on. If we condition on

Self-harm we’re toast. . .

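$O_i \sim \mathrm{Normal}(\mu_i, \sigma)$

$\mu_i = \alpha + \beta_{ST} ST_i + \beta_{PE} PE_i$

$\alpha \sim \mathrm{Normal}(0, 5)$

$\{\beta_{ST}, \beta_{PE}\} \sim \mathrm{Normal}(0, 1)$

$\sigma \sim \mathrm{Exponential}(1)$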
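To see why the adjustment set matters, here is a minimal Python sketch that simulates data from the DAG and fits two ordinary least-squares regressions. Only the graph structure comes from the question; the effect sizes, the sample size, and the helper `ols` are illustrative assumptions. Under these assumed coefficients the true total effect of Screen time on Obesity is (-0.6) × (-0.5) = 0.3.

```python
import random

def ols(columns, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    rows = [[1.0] + list(r) for r in zip(*columns)]
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        p = max(range(c, k), key=lambda i: abs(a[i][c]))
        a[c], a[p] = a[p], a[c]
        for i in range(k):
            if i != c:
                f = a[i][c] / a[c][c]
                a[i] = [u - f * v for u, v in zip(a[i], a[c])]
    return [a[i][k] / a[i][i] for i in range(k)]

random.seed(1)
n = 20000
# Assumed structural equations that follow the DAG (all noise is standard normal).
pe = [random.gauss(0, 1) for _ in range(n)]
s = [0.5 * p + random.gauss(0, 1) for p in pe]                 # PE -> S
pa = [-0.6 * x + random.gauss(0, 1) for x in s]                # S -> PA
o = [-0.5 * a - 0.3 * p + random.gauss(0, 1)                   # PA -> O, PE -> O
     for a, p in zip(pa, pe)]
sh = [0.5 * x + 0.5 * y + random.gauss(0, 1)                   # S -> SH, O -> SH
      for x, y in zip(s, o)]

b_good = ols([s, pe], o)[1]      # condition on PE only
b_bad = ols([s, pe, sh], o)[1]   # additionally condition on the collider SH

print(f"S coefficient, adjusting for PE:        {b_good:.3f}")
print(f"S coefficient, adjusting for PE and SH: {b_bad:.3f}")
```

With the adjustment set { PE } the estimate lands close to the assumed total effect, while adding Self-harm as a covariate pulls the estimate away from it.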

Solution:

To estimate the total causal effect of Screen time on Obesity, we can create a causal model and write down a linear model for μ

(the mean of Obesity) to show which variables we should condition on. We'll also specify suitable priors for the parameters. In

this causal model, it's essential to condition on confounders, but not on mediators or colliders. Based on the given causal

relationships, here's the linear model:

Causal Model:

Parental Education (PE) influences both Screen time and Obesity, so it is a confounder (a fork).

Screen time influences Physical Activity, which in turn influences Obesity, so Physical Activity is a mediator (a pipe).

Self-harm is influenced by both Screen time and Obesity, so it is a collider.

Obesity is the outcome variable, influenced directly by Parental Education and Physical Activity.

Linear Model for μ:

To estimate the total causal effect of Screen time on Obesity, we condition on Parental Education only and write the linear

model for μ as follows:

μ = α + β1 Parental Education + β2 Screen Time

In this model, μ represents the mean of Obesity. Physical Activity is left out because conditioning on a mediator would block part of the

effect we want to estimate, and Self-harm is left out because conditioning on a collider would open a non-causal path.

Priors:

For suitable priors, we assume that all variables have been standardized, so that weakly informative priors centered on zero are

reasonable. The specific choice of priors may depend on your data and prior knowledge. Here's a general form for the priors:

Priors for β coefficients: All β coefficients ∼ Normal(0, 1), which allows both positive and negative effects while ruling out

implausibly large ones.

Priors for α: The intercept α can also follow a normal distribution centered on 0, e.g., α ∼ Normal(0, 5).

Prior for σ: The Gaussian likelihood has a standard deviation σ, which must be positive, e.g., σ ∼ Exponential(1).

Keep in mind that the choice of priors can influence the results, so it's important to select priors that are appropriate for your

specific dataset and research context. Bayesian methods allow you to specify these priors based on your prior knowledge or

beliefs about the parameters.

Question 10 :

([−15, 15]p)

Below follows a number of multiple choice questions. There can be more than one correct answer! Mark the

correct answer by crossing the answer. In the case of DAGs, X is the treatment and Y is the outcome.

Correct answer gives 0.5 points, wrong or no answer deducts 0.5 points.

Q1 What is this construct called: X ← Z → Y ?

{Collider} {Pipe} {Fork} {Descendant}

Q2 What is this construct called: X → Z ← Y ?

{Collider} {Pipe} {Fork} {Descendant}

Q3 What is this construct called: X → Z → Y ?

{Collider} {Pipe} {Fork} {Descendant}

Q4 If we condition on Z we close the path X → Z ← Y .

{True} {False}

Q5 What should we condition on?

[Figure: DAG with the nodes Z, A, X, and Y]

{A} {Z} {Z and A} {Nothing}

Q6 What should we condition on?

[Figure: DAG with the nodes A, Z, X, and Y]

{A} {Z} {Z and A} {Nothing}

Q7 What should we condition on?

[Figure: DAG with the nodes A, X, Z, and Y]

{A} {Z} {Z and A} {Nothing}

Q8 How can overfitting be avoided?

{cross-validation} {unregularizing priors} {Use a desinformation criteria}

Q9 Berkson’s paradox is when two events are. . .

{correlated but should not be} {correlated but has no causal statement} {not correlated but should be}

Q10 An interaction is an influence of predictor. . .

{conditional on parameter} {on a parameter} {conditional on other predictor}

Q11 What does an interaction look like in a DAG?

{X ← Z → Y } {X → Z → Y } {X → Z ← Y}

Q12 To measure the same size of an effect in an interaction as in a main/population/β parameter requires, as a

rule of thumb, at least a sample size that is. . .

{4x larger} {16x larger} {16x smaller} {8x smaller}

Q13 We interpret interaction effects mainly through. . .

{tables} {posterior means and standard deviations} {plots}

Q14 In Hamiltonian Monte Carlo, what does a divergent transition usually indicate?

{A steep region in parameter space} {A flat region in parameter space} {Both}

Q15 Your high $\hat{R}$ values indicate that you have a non-stationary posterior. What do you do now?

{Visually check your chains} {Run chains for longer} {Check effective sample size} {Check E-BFMI values}

Q16 What distribution maximizes this?

$H(p) = -\sum_{i=1}^{n} p_i \log(p_i)$

{Flattest} {Most complex} {Most structured} {Distribution that can happen the most ways}

Q17 What distribution to pick if it’s a real value in an interval?

{Uniform} {Normal} {Multinomial}

Q18 What distribution to pick if it’s a real value with finite variance?

{Gaussian} {Normal} {Binomial}

Q19 Dichotomous variables, varying probability?

{Binomial} {Beta-Binomial} {Negative-Binomial/Gamma-Poisson}

Q20 Non-negative real value with a mean?

{Exponential} {Beta} {Half-Cauchy}

Q21 Natural value, positive, mean, and variance are equal?

{Gamma-Poisson} {Multinomial} {Poisson}

Q22 We want to model probabilities?

{Gamma} {Beta} {Delta}

Q23 Unordered (labeled) values?

{Categorical} {Cumulative} {Nominal}

Q24 Why do we use link functions in a GLM?

{Translate from likelihood to linear model} {Translate from linear model to likelihood} {To avoid absurd values}

Q25 On which effect scale are parameters?

{Absolute} {None} {Relative}

Q26 On which effect scale are predictions?

{Absolute} {None} {Relative}

Q27 We can use . . . to handle over-dispersion.

{Beta-Binomial} {Negative-Binomial} {Exponential-Poisson}

Q28 In zero-inflated (ZI) models we assume the data generation process consists of two disparate parts?

{Yes} {No}

Q29 Ordered categories have. . .

{a defined maximum and minimum} {continuous value} {an undefined order} {unknown ‘distances’ between

categories}

Q30 When modeling ordered categorical predictors we can express them in math like this: $\beta \sum_{j=0} \delta_j$. Cross the

correct statement:

{δ is the total effect of the predictor and β are proportions of total effect} {β is total effect of predictor and δ are

proportions of total effect}

Question 1 :

(3p) What is underfitting and overfitting and why does it lead to poor prediction?

Write a short example (perhaps as a model specification?) and discuss what would be a possible condition for underfitting and

overfitting.

Paper Solution: One straightforward way to discuss this is to show two simple models, one where we only have a general

intercept (complete pooling), and one where you estimate an intercept for each cluster in the data (varying intercept), but

where we don’t share information between the clusters (think of the pond example in the coursebook), i.e., no pooling. This

question can be answered in many different ways.

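$y_i \sim \mathrm{Binomial}(n, p_i)$

$\mathrm{logit}(p_i) = \alpha$

$\alpha \sim \mathrm{Normal}(0, 2.5)$

above complete pooling, below no pooling

$y_i \sim \mathrm{Binomial}(n, p_i)$

$\mathrm{logit}(p_i) = \alpha_{\mathrm{CLUSTER}[j]}$

$\alpha_j \sim \mathrm{Normal}(0, 2)$

for $j = 1, \ldots, n$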
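The contrast between the two models can be sketched in a few lines of Python. This is a simplified illustration, not the Bayesian models above: it uses plain proportions (no priors), and the number of clusters, the trials per cluster, and the spread of the true probabilities are assumptions made for the example.

```python
import math
import random

random.seed(7)
n_clusters, n_trials = 30, 10

# Hypothetical true success probability per cluster (logit-normal spread).
true_p = [1 / (1 + math.exp(-random.gauss(0, 1.5))) for _ in range(n_clusters)]

def draw_counts():
    """Simulate one binomial count per cluster."""
    return [sum(random.random() < p for _ in range(n_trials)) for p in true_p]

train, test = draw_counts(), draw_counts()

# Complete pooling: a single estimate shared by every cluster.
complete = [sum(train) / (n_clusters * n_trials)] * n_clusters
# No pooling: each cluster is estimated from its own data only.
no_pool = [y / n_trials for y in train]

def mse(estimates, counts):
    """Mean squared error between estimated and observed proportions."""
    return sum((e - y / n_trials) ** 2
               for e, y in zip(estimates, counts)) / n_clusters

for name, est in [("complete pooling", complete), ("no pooling", no_pool)]:
    print(f"{name:17s} train MSE = {mse(est, train):.4f}  "
          f"test MSE = {mse(est, test):.4f}")
```

The no-pooling estimates reproduce the training data exactly but do worse on a fresh sample from the same clusters, which is the signature of overfitting. The complete-pooling estimate ignores all differences between clusters, so it misses the training data and the new data alike, which is the signature of underfitting.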

Solution:

Underfitting and overfitting are two common issues in machine learning and statistical modeling that can lead to poor prediction

performance:

Underfitting: Underfitting occurs when a model is too simple to capture the underlying patterns in the data. It typically results in

a high bias, meaning the model's predictions are systematically off the mark, either by consistently overestimating or

underestimating the true values.

Example:

Model Specification: Linear regression with only one feature to predict house prices.

Condition for Underfitting: If the housing market depends on multiple features such as square footage, number of bedrooms,

and neighborhood, and you use a simple linear model with just one of these features, the model may underfit the data because

it doesn't account for the complexity of the relationship.

In this condition, the model is too simplistic to capture the nuances of the housing market, leading to underfitting. In the extreme

case of an intercept-only model, it would predict the same price for all houses, which would result in poor predictions.

Overfitting: Overfitting occurs when a model is too complex and fits the noise in the data rather than the true underlying

patterns. It typically results in a high variance, meaning the model performs well on the training data but poorly on unseen or

new data.

Example:

Model Specification: A decision tree with many branches that perfectly fits all training data points.

Condition for Overfitting: If you create a very complex decision tree with too many branches and leaves, it might fit the training

data points exactly, even the noise, by creating intricate rules for each data point.

In this condition, the model is overly complex, fitting the training data to the point of memorization, but it doesn't generalize

well to new data, leading to overfitting. As a result, the model might make poor predictions on unseen houses because it's too

tailored to the training data.

Both underfitting and overfitting can lead to poor prediction performance because they compromise the model's ability to

generalize to new or unseen data. In machine learning, the goal is to find the right balance between model complexity and

performance, ensuring that the model captures the underlying patterns without being overly simplistic or overly complex.

Techniques like cross-validation and regularization can help mitigate these issues.

Question 2 :

(3p) What is epistemological justification and how does it differ from ontological justification, when we design models and pick

likelihoods? Please provide an example where you argue epistemological and ontological reasons for selecting a likelihood.

Paper Solution: Epistemological, rooted in information theory and the concept of maximum entropy distributions. Ontological,

the way nature works, i.e., a physical assumption about the world.

One example could be the Normal() likelihood for real (continuous) numbers where we have additive noise in the process

(ontological), and where we know that in such cases Normal is the maximum entropy distribution.
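The maximum entropy claim can be checked numerically. The sketch below compares the differential entropy of three distributions that all have the same variance, using their standard closed-form entropies; the choice of the Uniform and Laplace distributions as comparison candidates is an assumption made for illustration.

```python
import math

def entropy_normal(sigma):
    # Differential entropy of Normal(mu, sigma).
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)

def entropy_uniform(sigma):
    # A Uniform distribution of width w has variance w^2 / 12.
    width = sigma * math.sqrt(12)
    return math.log(width)

def entropy_laplace(sigma):
    # A Laplace distribution with scale b has variance 2 * b^2.
    scale = sigma / math.sqrt(2)
    return 1 + math.log(2 * scale)

sigma = 1.0
entropies = {
    "normal": entropy_normal(sigma),
    "laplace": entropy_laplace(sigma),
    "uniform": entropy_uniform(sigma),
}
best = max(entropies, key=entropies.get)

for name, h in entropies.items():
    print(f"{name:8s} entropy = {h:.4f}")
print("largest entropy:", best)
```

Among these candidates the Normal has the largest entropy for a fixed variance, which is the epistemological argument: if all we are willing to assume is a finite variance, the Normal adds the least extra information.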

Solution:

Epistemological justification and ontological justification are two distinct aspects of model design in statistics and data analysis,

particularly when choosing likelihoods:

Epistemological Justification:

Epistemological justification is based on what we know (and do not know) about the data and the underlying processes we

are modeling. In model design this is usually formalized through information theory: we pick the maximum entropy distribution

that is consistent with the constraints we are willing to assume, so that the likelihood encodes our state of knowledge and nothing more.

Example - Epistemological Justification for Likelihood:

Imagine you are modeling the time it takes for a website user to complete a certain task. Based on prior research and domain

expertise, you know that user task completion times often follow a log-normal distribution. Your epistemological justification for

selecting a log-normal likelihood is based on the understanding that this distribution aligns with your prior knowledge and

observations of similar scenarios.

Ontological Justification:

Ontological justification, on the other hand, is based on the nature of reality, regardless of our knowledge or beliefs. It pertains to

the actual properties and characteristics of the system or phenomenon being modeled. Ontological reasons for selecting a

likelihood are driven by the belief that a particular distribution reflects the inherent properties of the data-generating process.

Example - Ontological Justification for Likelihood:

Consider modeling the number of defects in a manufacturing process. You have no prior knowledge or information, but you

believe that defects are inherently rare events in the process. In this case, you might choose a Poisson distribution as the

likelihood because it reflects the inherent property of rare events occurring independently over time, aligning with the nature of

defects.

In summary, the key difference lies in the source of justification:

• Epistemological Justification relies on what we know, our prior beliefs, and domain-specific knowledge to choose a likelihood.

• Ontological Justification is based on the belief that a particular likelihood reflects the inherent properties or characteristics of the data-generating process, regardless of prior knowledge or beliefs. It assumes that the chosen distribution is a fundamental aspect of the phenomenon being modeled.

Question 3 :

(2p) What are the two types of predictive checks? Why do we have to do these predictive checks?

Paper Solution:

Prior predictive checks: To check we have sane priors.

Posterior predictive checks: To see if we have correctly captured the regular features of the data.

Solution:

The two types of predictive checks are posterior predictive checks and prior predictive checks. These checks are conducted in the

context of Bayesian data analysis to assess the performance and validity of a Bayesian model.

Posterior Predictive Checks:

Posterior predictive checks involve generating new data points, often referred to as "replicates," using the posterior distribution

of the model parameters. These replicates are simulated based on the estimated model and parameter uncertainty.

The observed data are then compared to the replicates generated from the model. By assessing how well the observed data align

with the replicated data, you can evaluate the model's ability to capture the underlying data-generating process. Discrepancies

can reveal model misspecifications, bias, or other issues.

Prior Predictive Checks:

Prior predictive checks are similar to posterior predictive checks but are based on the prior distribution of the model parameters,

without incorporating information from the observed data.

Prior predictive checks help assess the appropriateness of the prior distribution, which captures your beliefs or expectations

about the parameters before seeing any data. By simulating data from the prior and comparing it to what domain knowledge says is

plausible (rather than to the observed data), you can identify priors that imply absurd outcomes before fitting the model.

Why We Do Predictive Checks:

Predictive checks serve several crucial purposes in Bayesian data analysis:

Model Assessment: Predictive checks allow us to assess the quality and validity of our Bayesian model. They help detect model

inadequacies, including underfitting and overfitting, and identify potential misspecifications.

Model Comparison: By comparing the observed data to the replicates or data generated under different models, we can choose

the model that best describes the observed data. This is particularly important when considering multiple competing models.

Quantifying Uncertainty: Predictive checks provide a way to quantify the uncertainty inherent in Bayesian analysis. They help us

understand how well the model accounts for the inherent randomness in the data.

Identifying Outliers: Predictive checks can help identify outliers or anomalies in the data. Unusual discrepancies between

observed data and replicates may indicate data points that need further investigation.

In summary, predictive checks are essential in Bayesian data analysis to assess model performance, make informed model

choices, evaluate prior assumptions, and ensure that the model aligns with the data and the underlying data-generating process.
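A prior predictive check can be as simple as simulating from the prior and looking at what it implies on the outcome scale. The sketch below does this for a logistic-regression intercept; the two prior widths, the cut-offs 0.01 and 0.99, and the helper names are assumptions chosen for illustration.

```python
import math
import random

def inv_logit(x):
    return 1 / (1 + math.exp(-x))

def extreme_fraction(prior_sd, draws=10000, seed=3):
    """Share of prior draws that imply a probability below 0.01 or above 0.99."""
    rng = random.Random(seed)
    probs = [inv_logit(rng.gauss(0, prior_sd)) for _ in range(draws)]
    return sum(p < 0.01 or p > 0.99 for p in probs) / draws

wide = extreme_fraction(prior_sd=10)     # alpha ~ Normal(0, 10)
narrow = extreme_fraction(prior_sd=1.5)  # alpha ~ Normal(0, 1.5)

print(f"Normal(0, 10):  {wide:.1%} of implied probabilities are extreme")
print(f"Normal(0, 1.5): {narrow:.1%} of implied probabilities are extreme")
```

A prior that looks vague on the logit scale turns out to claim that the event almost always or almost never happens, which is rarely what we believe before seeing data. The narrower prior spreads its mass far more evenly over the probability scale.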

Question 4 :

(6p) When diagnosing Markov chains, we often look at three diagnostics to form an opinion of how well things have gone. Which

three diagnostics do we commonly use? What do we look for (i.e, what thresholds or signs do we look for)? Finally, what do they

tell us?

Paper Solution:

Effective sample size: A measure of the efficiency of the chains. 10% of total sample size (after warmup) or at least 200 for each

parameter.

R-hat: A measure of the between and the within variance of our chains. Indicates if we have reached a stationary posterior

probability distribution. Should approach 1.0 and be below 1.01.

Traceplots: Check if the chains are mixing well. Are they converging around the same parameter space?

Solution:

When diagnosing the performance of Markov chains, three common diagnostics are often used to assess how well the chains

have converged and whether they have adequately sampled the target distribution. These diagnostics are:

Autocorrelation Function (ACF):

• What to Look For: In the ACF, we look for a rapid decay in autocorrelation as the lag increases. Ideally, we want to see

the autocorrelations quickly drop close to zero for lags beyond a certain point.

• What It Tells Us: A rapidly decaying ACF suggests that the chain is efficiently exploring the parameter space and is

producing independent samples from the target distribution. Slowly decaying autocorrelations indicate that the chain

might be stuck, leading to inefficient sampling and longer mixing times.

Gelman-Rubin Diagnostic (R-hat or $\hat{R}$):

• What to Look For: The Gelman-Rubin diagnostic compares the within-chain variability to between-chain variability for

multiple chains started from different initial values. $\hat{R}$ should be close to 1 for all model parameters; current guidance

recommends values below 1.01, while older guidance accepted values below 1.1.

• What It Tells Us: A $\hat{R}$ close to 1 indicates that different chains have converged to the same target distribution,

suggesting good convergence. Values clearly above the threshold indicate that the chains have not mixed well,

and further sampling is needed.

Effective Sample Size (ESS):

• What to Look For: ESS measures the number of effectively independent samples that the chain provides. A higher ESS

indicates a more efficient sampling process. Ideally, you want an ESS that is a substantial fraction of the total number of

samples.

• What It Tells Us: A high ESS implies that the chain is providing a sufficient number of effectively independent samples,

ensuring that you have enough information to estimate parameters and make valid inferences. A low ESS suggests that

the samples are highly correlated and might not be representative of the target distribution.

In summary, these diagnostics are used to evaluate the convergence and performance of Markov chains in Bayesian analysis.

A rapidly decaying ACF, a Gelman-Rubin diagnostic close to 1, and a high ESS are all indicative of well-behaved chains that

have adequately explored the parameter space and are providing reliable samples from the target distribution. These

diagnostics help ensure that the results obtained from Markov chains are valid and representative of the posterior

distribution of interest.
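The idea behind $\hat{R}$ can be sketched with the classic Gelman-Rubin formula. Note the assumption: this is the original between/within-variance version, not the rank-normalized split version that current software reports, and the simulated chains are stand-ins for real sampler output.

```python
import random
import statistics

def rhat(chains):
    """Classic Gelman-Rubin statistic for equally long chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    # W: average within-chain variance.
    w = statistics.fmean([statistics.variance(c) for c in chains])
    # B / n: variance of the chain means.
    b_over_n = statistics.variance(chain_means)
    var_plus = (n - 1) / n * w + b_over_n
    return (var_plus / w) ** 0.5

rng = random.Random(11)

# Four chains that all sample the same distribution.
mixed = [[rng.gauss(0, 1) for _ in range(1000)] for _ in range(4)]
# Four chains stuck in two different regions.
stuck = [[rng.gauss(center, 1) for _ in range(1000)] for center in (0, 0, 3, 3)]

rhat_mixed = rhat(mixed)
rhat_stuck = rhat(stuck)
print(f"well-mixed chains: R-hat = {rhat_mixed:.3f}")
print(f"stuck chains:      R-hat = {rhat_stuck:.3f}")
```

When the chains agree, the between-chain variance adds almost nothing and the ratio stays near 1. When chains sit in different regions, the between-chain variance inflates the numerator and the statistic rises well above the threshold.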

Question 5 :

(3p) Quadratic approximation and grid-approximation works very well in many cases, however in the end we fall back on

Hamiltonian Monte Carlo (HMC) at many times. Why do we use HMC, i.e., what are the limitations of the other approaches?

Additionally, how does HMC work conceptually, i.e., describe with your own words?

Paper Solution:

Well, quap and other techniques assume a Normal (Gaussian) posterior. Additionally, they don’t see levels in a model (they simply do some

hill climbing to find the maximum a posteriori estimate), i.e., when a prior is itself a function of parameters, there are two levels

of uncertainty. HMC simulates a physical system, i.e., the hockey puck, which it flips around the log-posterior (i.e., the valleys are

the interesting things, and not the hills as in the case of quap).

Solution:

Why Use Hamiltonian Monte Carlo (HMC):

Hamiltonian Monte Carlo (HMC) is a powerful and versatile sampling technique used in Bayesian statistics and data analysis. We

use HMC for several reasons, primarily because it overcomes some of the limitations associated with other approaches like

quadratic approximation and grid-approximation:

• Handling Non-Gaussian Posteriors:

o Quadratic approximation methods, such as the Laplace approximation, assume the posterior is approximately Gaussian around its mode. They work well for simple, unimodal, roughly symmetric posteriors but break down when the posterior is skewed or when parameters depend on other parameters, as in multilevel models. HMC makes no such assumption about the posterior's shape, although widely separated modes remain difficult for it as well.

• Reducing Computational Inefficiency:

o Grid-approximation techniques evaluate the posterior at every point of a grid, and the number of grid points grows exponentially with the number of parameters, which makes them infeasible for high-dimensional problems. HMC provides more efficient sampling by using gradient information to guide the sampling process.

• Handling High-Dimensional Spaces:

o In high-dimensional parameter spaces, quadratic approximation may not capture the curvature and dependencies accurately, leading to poor approximations. HMC leverages gradient information to navigate high-dimensional spaces more effectively.

How HMC Works Conceptually:

Hamiltonian Monte Carlo is a more advanced Markov chain Monte Carlo (MCMC) method that combines ideas from classical

physics with MCMC techniques. Conceptually, HMC works as follows:

• Mimicking Physical Systems: HMC treats the posterior distribution as a landscape or energy surface, where the height of the landscape is the negative log-posterior, so regions of high probability are valleys. It then simulates a frictionless particle traveling on this landscape.

• Introduction of Hamiltonian Dynamics: HMC borrows Hamiltonian dynamics from physics: the particle has kinetic energy (determined by its momentum) and potential energy (determined by its position, i.e., the negative log-posterior at the current parameter values).

• Leapfrog Integration: To simulate Hamiltonian dynamics, HMC uses a numerical integration scheme called "leapfrog" to calculate how the particle's position and momentum change over time. Each iteration starts by drawing a fresh random momentum, and the gradient of the log-posterior steers the trajectory.

• Metropolis-Hastings Step: The end point of the simulated trajectory is the proposed new state. A Metropolis-Hastings acceptance step then accepts or rejects the proposal based on the difference in total energy between start and end, which corrects for the numerical error of the integrator.

• Efficient Updates: Because total energy is nearly conserved along the trajectory, HMC can take large steps through the parameter space while still accepting most proposals. This results in more efficient exploration and less autocorrelated samples than random-walk methods.

In summary, HMC combines principles from physics to efficiently explore the posterior distribution by simulating a particle's

movement in an energy landscape. It leverages gradient information to guide the exploration and can handle complex, high-dimensional, and non-Gaussian posteriors that other methods may struggle with.
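The conceptual steps can be made concrete with a minimal one-parameter sketch in Python. It targets a standard normal "posterior" so the result is easy to check; the step size, the number of leapfrog steps, and the function names are assumptions for illustration, and real samplers tune these automatically.

```python
import math
import random

def potential(q):
    """Negative log-density of the target (standard normal, up to a constant)."""
    return 0.5 * q * q

def grad_potential(q):
    return q

def hmc_step(q, rng, eps=0.15, n_leapfrog=10):
    p = rng.gauss(0, 1)                        # fresh random momentum ("flick")
    q_new, p_new = q, p
    # Leapfrog integration of Hamiltonian dynamics.
    p_new -= 0.5 * eps * grad_potential(q_new)
    for i in range(n_leapfrog):
        q_new += eps * p_new
        if i < n_leapfrog - 1:
            p_new -= eps * grad_potential(q_new)
    p_new -= 0.5 * eps * grad_potential(q_new)
    # Metropolis acceptance based on the change in total energy.
    h_old = potential(q) + 0.5 * p * p
    h_new = potential(q_new) + 0.5 * p_new * p_new
    if rng.random() < math.exp(min(0.0, h_old - h_new)):
        return q_new
    return q

rng = random.Random(42)
q, samples = 0.0, []
for _ in range(5000):
    q = hmc_step(q, rng)
    samples.append(q)

mean = sum(samples) / len(samples)
var = sum((x - mean) ** 2 for x in samples) / (len(samples) - 1)
print(f"sample mean = {mean:.3f}, sample variance = {var:.3f}")
```

The sample mean and variance should land near 0 and 1, the moments of the target. The acceptance step is what keeps the sampler exact even though the leapfrog integrator only approximates the true dynamics.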

| Model | dWAIC | Weight | SE | dSE |
|---|---|---|---|---|
| m1 | 0.0 | 1 | 14.26 | NA |
| m2 | 40.9 | 0 | 11.28 | 10.48 |
| m3 | 44.0 | 0 | 11.66 | 12.23 |

Question 6 :

(4p) As a result of comparing three models, we get the above output. What does each column (WAIC, pWAIC, dWAIC, weight, SE,

and dSE) mean? Which model would you select based on the output?

Paper Solution:

WAIC: information criteria (the lower the better).

pWAIC: effective number of parameters in the model.

dWAIC: the difference in WAIC between the models.

weight: an approximate way to indicate which model WAIC prefers.

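SE: standard error of the WAIC estimate, i.e., how uncertain the estimate is.

dSE: the standard error of the difference in WAIC between each model and the best model (not the difference between the SEs).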

If you have approximately 4–6 times larger dWAIC than dSE you have fairly strong indications

there is a ‘significant’ difference. Here M1 is, relatively speaking, the best.
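The weight column and the comparison of dWAIC against dSE can be reproduced from the reported numbers. The sketch below assumes Akaike-style weights, i.e., each weight is proportional to exp(-0.5 × dWAIC); the dictionary names are hypothetical and the values are copied from the table.

```python
import math

# Values as reported in the comparison table.
d_waic = {"m1": 0.0, "m2": 40.9, "m3": 44.0}
d_se = {"m2": 10.48, "m3": 12.23}

# Akaike-style weights: exp(-0.5 * dWAIC), normalized to sum to one.
raw = {model: math.exp(-0.5 * d) for model, d in d_waic.items()}
total = sum(raw.values())
weights = {model: r / total for model, r in raw.items()}

# How many standard errors separate each model from the best one.
ratios = {model: d_waic[model] / d_se[model] for model in d_se}

for model in d_waic:
    print(f"{model}: weight = {weights[model]:.2f}")
for model, ratio in ratios.items():
    print(f"{model}: dWAIC / dSE = {ratio:.2f}")
```

Rounded to two decimals, the weights come out as 1, 0, and 0, matching the table, and the differences to m1 are roughly 3.9 and 3.6 standard errors for m2 and m3.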

Solution:

The output provided appears to be the result of model comparison using the Widely Applicable Information Criterion (WAIC) and

related statistics. Here's the interpretation of each column:

Model   WAIC    pWAIC
m1      361.9   3.8
m2      401.8   2.6
m3      405.9   1.1

1. WAIC (Widely Applicable Information Criterion): WAIC is an estimate of the out-of-sample predictive accuracy of a model, expressed on the deviance scale. Lower WAIC values indicate better expected predictive performance on new, unseen data.

2. pWAIC (effective number of parameters): pWAIC is the penalty term inside WAIC, not a separate version of it. It measures how flexible the model is, so larger values indicate a greater risk of overfitting. It is not used on its own to rank models.

3. dWAIC (Difference in WAIC): dWAIC is the difference in WAIC between each model and the model with the lowest WAIC. The best model has dWAIC = 0, and larger values indicate how much worse a model is expected to predict.

4. Weight: The Akaike weight rescales the WAIC differences to values that sum to 1 across the models compared. It is an approximate measure of relative support, often loosely read as the probability that a model will make the best predictions on new data among the models considered.

5. SE (Standard Error of WAIC): The standard error of WAIC quantifies the uncertainty in the WAIC estimate. Smaller SE values indicate a more precise estimate.

6. dSE (Standard Error of dWAIC): The standard error of the difference in WAIC between each model and the best model. It is NA for the best model itself. Comparing dWAIC with dSE indicates whether two models can be reliably distinguished.
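To make the relationship between WAIC, pWAIC, and SE concrete, here is a minimal sketch of how these quantities are commonly computed from a matrix of pointwise log-likelihoods. The function name `waic`, the helper names, and the toy numbers are assumptions for illustration only; the SE uses the common approximation sqrt(n × variance of the pointwise WAIC values).

```python
import math


def log_mean_exp(values):
    """Numerically stable log of the mean of exp(values)."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def sample_variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / (len(values) - 1)


def waic(log_lik):
    """log_lik[s][i] = log p(y_i | theta_s) for posterior draw s, observation i."""
    n_obs = len(log_lik[0])
    columns = [[row[i] for row in log_lik] for i in range(n_obs)]
    lppd_i = [log_mean_exp(col) for col in columns]   # log pointwise predictive density
    p_i = [sample_variance(col) for col in columns]   # pointwise penalty terms
    waic_i = [-2.0 * (l - p) for l, p in zip(lppd_i, p_i)]
    se = math.sqrt(n_obs * sample_variance(waic_i))
    return {"WAIC": sum(waic_i), "pWAIC": sum(p_i), "SE": se}


# Hypothetical log-likelihoods: 3 posterior draws (rows) x 4 observations (columns).
toy_log_lik = [
    [-1.2, -0.8, -2.1, -1.0],
    [-1.0, -0.9, -1.8, -1.3],
    [-1.4, -0.7, -2.4, -1.1],
]
print(waic(toy_log_lik))
```

The penalty pWAIC is the summed variance of each observation's log-likelihood across posterior draws, which is why it behaves like an effective number of parameters rather than a score to minimise on its own.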

Model Selection:

Based on the output, you would typically select the model with the lowest WAIC, because WAIC estimates out-of-sample

predictive accuracy. pWAIC is a complexity penalty and is not a selection criterion in itself. In this case, model "m1" has the lowest WAIC (361.9), making it the top choice for predictive

performance.

However, it's essential to consider the dWAIC values and their associated dSE when deciding between models. The models "m2"

and "m3" have considerably higher WAIC values, and their dWAIC values (40.9 and 44.0) are roughly 3.9 and 3.6 times their standard errors (dSE of 10.48 and 12.23).

This is close to the 4–6 ratio mentioned in the paper solution, so the output gives fairly strong evidence that model "m1" predicts better than the other two models.

In summary, you would select model "m1" based on the output because it has the lowest WAIC and is well-supported by the

relative weight. Model "m2" and "m3" do not perform as well in predicting new data, as indicated by their higher WAIC values

and dWAIC differences from the best model.
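The weights and the dWAIC-to-dSE comparison can be checked with a few lines of arithmetic. The sketch below uses the values from the comparison table in the question and the standard Akaike-weight formula, w_i = exp(-dWAIC_i / 2) / Σ_j exp(-dWAIC_j / 2); the variable names are illustrative.

```python
import math

# Values copied from the comparison table in the question.
d_waic = {"m1": 0.0, "m2": 40.9, "m3": 44.0}
d_se = {"m2": 10.48, "m3": 12.23}  # dSE is NA for the top-ranked model

# Akaike weights: rescale exp(-dWAIC / 2) so the weights sum to 1.
relative = {m: math.exp(-0.5 * d) for m, d in d_waic.items()}
total = sum(relative.values())
weights = {m: r / total for m, r in relative.items()}

# How many standard errors separate each model from the best one.
ratios = {m: d_waic[m] / se for m, se in d_se.items()}

for m in d_waic:
    print(m, f"weight={weights[m]:.3g}", f"dWAIC/dSE={ratios.get(m, float('nan')):.2f}")
```

The weights of "m2" and "m3" are vanishingly small, which is why the table reports them as 0 after rounding.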

Question 7 :

(6p) The DAG below contains an exposure of interest X, an outcome of interest Y , an unobserved

variable U, and three observed covariates (A, B, and C). Analyze the DAG.

a) Write down the paths connecting X to Y (not counting X -> Y )?

b) Which must be closed?

c) Which variable(s) should you condition on?

d) What are these constructs called in the DAG universe?

• U -> B <- C

• U <- A -> C

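[Figure: DAG over the nodes A, (U), B, C, X, and Y, where (U) is unobserved. Edges, as implied by the paths in the paper solution: A -> U, A -> C, U -> X, U -> B, C -> B, C -> Y, X -> Y.]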

Paper Solution: First is, X <- U <- A -> C -> Y , and second is, X <- U -> B <- C -> Y . These are both backdoor paths that could

confound inference. Now ask which of these paths is open. If

a backdoor path is open, then we must close it. If a backdoor path is closed already, then we must not

accidentally open it and create a confound.

Consider the first path, passing through A. This path is open, because there is no collider within it. There is

just a fork at the top and two pipes, one on each side. Information will flow through this path, confounding

X -> Y . It is a backdoor. To shut this backdoor, we need to condition on one of its variables. We can’t

condition on U, since it is unobserved. That leaves A or C. Either will shut the backdoor, but conditioning

on C is the better idea, from the perspective of efficiency, since it could also help with the precision of the

estimate of X -> Y (we’ll give you points for conditioning on A also).

Now consider the second path, passing through B. This path does contain a collider, U -> B <- C. It is

therefore already closed. If we do condition on B, it will open the path, creating a confound. Then our

inference about X -> Y will change, but without the DAG, we won’t know whether that change is helping us

or rather misleading us. The fact that including a variable changes the X -> Y coefficient does not always

mean that the coefficient is better now. You could have just conditioned on a collider!

First construct is a collider. If you condition on B you open up the path. The second construct is a confounder

(fork). If you condition on A you close the path.
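The reasoning in the paper solution can be reproduced mechanically. The sketch below assumes the edge list implied by the two paths above (A -> U, A -> C, U -> X, U -> B, C -> B, C -> Y, X -> Y); the function names are hypothetical helpers, not part of any DAG library. It enumerates the backdoor paths from X to Y and applies the usual blocking rules: a non-collider blocks a path when conditioned on, and a collider blocks it unless the collider or one of its descendants is conditioned on.

```python
# Assumed edge list (parent, child), reconstructed from the paths in the solution.
EDGES = [("A", "U"), ("A", "C"), ("U", "X"), ("U", "B"),
         ("C", "B"), ("C", "Y"), ("X", "Y")]


def neighbours(node):
    """All nodes adjacent to `node`, ignoring edge direction."""
    return [b if a == node else a for a, b in EDGES if node in (a, b)]


def descendants(node):
    """All nodes reachable from `node` by following arrows forward."""
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        for a, b in EDGES:
            if a == current and b not in found:
                found.add(b)
                stack.append(b)
    return found


def all_paths(start, goal, path=None):
    """Every simple path between start and goal in the undirected skeleton."""
    path = path or [start]
    if path[-1] == goal:
        yield path
        return
    for nxt in neighbours(path[-1]):
        if nxt not in path:
            yield from all_paths(start, goal, path + [nxt])


def backdoor_paths(exposure, outcome):
    """Paths that begin with an arrow pointing INTO the exposure."""
    return [p for p in all_paths(exposure, outcome) if (p[1], exposure) in EDGES]


def is_open(path, conditioned):
    """True if the path transmits association given the conditioning set."""
    for prev, mid, nxt in zip(path, path[1:], path[2:]):
        is_collider = (prev, mid) in EDGES and (nxt, mid) in EDGES
        if is_collider:
            if not ({mid} | descendants(mid)) & conditioned:
                return False   # unconditioned collider blocks the path
        elif mid in conditioned:
            return False       # conditioned fork or pipe blocks the path
    return True


for adjustment in [set(), {"A"}, {"C"}, {"B"}]:
    still_open = [" - ".join(p) for p in backdoor_paths("X", "Y")
                  if is_open(p, adjustment)]
    print(sorted(adjustment), "open backdoor paths:", still_open)
```

Running the loop shows which adjustment sets leave a backdoor path open, which is a convenient way to check an answer to parts b) and c) by hand.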

Solution:

In the provided Directed Acyclic Graph (DAG), let's analyze the connections and answer the questions:

a) Paths Connecting X to Y (excluding X -> Y):

X <- U <- A -> C -> Y

X <- U -> B <- C -> Y

Both are backdoor paths, since each begins with an arrow pointing into X.

b) Which Paths Must Be Closed:

To identify the causal effect of X on Y, only backdoor paths that are open need to be closed:

X <- U <- A -> C -> Y is open, because it contains no collider. It must be closed (at A or C, since U is unobserved).

X <- U -> B <- C -> Y is already closed by the collider at B. It must be left closed.

c) Variables to Condition On:

To close the open backdoor path, condition on either A or C; U cannot be used because it is unobserved. C is the more efficient choice,

since it is a direct cause of Y and can improve the precision of the estimate. Do not condition on B: it is a collider, and conditioning on it would open the second path.

d) Constructs in the DAG Universe:

U -> B <- C: This structure is a collider. B is a common effect of U and C, so the path through B is closed by default.

Conditioning on B opens it and induces a non-causal association between U and C.

U <- A -> C: This structure is a fork. A is a common cause of both U and C (a confounder), so the path through A is open by default.

Conditioning on A closes it.

In summary, to estimate the causal effect of X on Y while blocking confounding paths, you should condition on A or C (preferably C) and leave B alone.

The DAG analysis helps identify the paths of interest and the necessary variables to condition on to obtain a valid causal

inference.

Question 8 :

(8p) Conducting Bayesian data analysis requires us to do several things for us to trust the results. Can you describe the workflow

and what the purpose is with each step?

Paper Solution: Start with a null model, do prior checks, check diagnostics, do posterior checks, then conduct inferential

statistics if needed. Also, it would be good if you mention comparisons of models and that it is an iterative approach.

Solution:

Conducting Bayesian data analysis is a systematic process that involves several key steps to ensure the trustworthiness of the

results. Here's a comprehensive workflow with descriptions of the purpose of each step:

Define the Research Question and Objectives:

• Purpose: Clearly articulate the research question and what you aim to achieve through Bayesian analysis. This step sets the

foundation for the entire analysis.

Data Collection and Preprocessing:

• Purpose: Gather relevant data, clean, and preprocess it as needed. High-quality data is crucial for accurate modeling.

Null Model Specification:

• Purpose: Start with a null model (often the simplest model) to establish a baseline for comparison. This step helps

assess the improvement of more complex models.

Prior Specification:

• Purpose: Define prior distributions for model parameters based on prior knowledge or expert judgment. Priors encode

existing information and beliefs about the parameters.

Prior Checks:

• Purpose: Evaluate the appropriateness of the chosen priors. Prior predictive checks simulate data from the priors alone, which helps identify priors

that imply implausible outcomes, for example priors that are too vague or too informative.

Model Building and Selection:

• Purpose: Develop a Bayesian model that describes the data generation process. This includes specifying likelihood

functions and modeling relationships among variables. Model selection involves comparing different models to choose

the most suitable one.

Bayesian Inference:

• Purpose: Use Bayesian techniques, such as Markov chain Monte Carlo (MCMC) or variational inference, to estimate the

posterior distribution of model parameters. This step provides parameter estimates and their uncertainty.

Model Diagnostics:

• Purpose: Assess the performance and convergence of the MCMC algorithm. Diagnostics, like trace plots,

autocorrelation, and Gelman-Rubin statistics, help ensure that the MCMC samples are reliable.

Posterior Checks:

• Purpose: Evaluate the fit of the model to the observed data. Posterior predictive checks involve simulating new data

from the posterior distribution and comparing it to the observed data.

Comparison of Models:

• Purpose: Compare different models using techniques like the Widely Applicable Information Criterion (WAIC) to assess

expected out-of-sample predictive performance and determine which model is likely to predict new data best.

Iterative Approach:

• Purpose: Bayesian data analysis is often an iterative process. You may need to revisit earlier steps, adjust priors, or

refine models based on diagnostics and model comparisons.

Inferential Statistics and Reporting:

• Purpose: If the goal is hypothesis testing or parameter estimation, conduct inferential statistics to draw conclusions and

make inferences based on the Bayesian results. Summarize and report the findings in a clear and interpretable manner.

Interpretation and Decision-Making:

• Purpose: Interpret the results in the context of the research question and objectives. Use the Bayesian analysis to

inform decisions or draw scientific conclusions.

Communication:

• Purpose: Communicate the findings to relevant stakeholders, colleagues, or the broader scientific community through

reports, papers, or presentations.
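As an illustration of the Model Diagnostics step, the classic Gelman-Rubin statistic (R-hat) can be computed directly from several chains. This is a sketch of the original, non-split form of the statistic; modern tools typically use refined variants (for example split or rank-normalised R-hat), and the function name `gelman_rubin` and the simulated chains are assumptions for demonstration.

```python
import math
import random


def gelman_rubin(chains):
    """Classic (non-split) potential scale reduction factor
    for a list of equal-length chains of one parameter."""
    m = len(chains)
    n = len(chains[0])
    chain_means = [sum(c) / n for c in chains]
    grand_mean = sum(chain_means) / m
    # Between-chain variance B and average within-chain variance W.
    b = n / (m - 1) * sum((cm - grand_mean) ** 2 for cm in chain_means)
    w = sum(sum((x - cm) ** 2 for x in c) / (n - 1)
            for c, cm in zip(chains, chain_means)) / m
    var_hat = (n - 1) / n * w + b / n   # pooled estimate of the posterior variance
    return math.sqrt(var_hat / w)


rng = random.Random(0)
# Four simulated chains that all sample the same distribution.
mixed = [[rng.gauss(0.0, 1.0) for _ in range(1000)] for _ in range(4)]
# Four simulated chains stuck in two different regions.
stuck = [[rng.gauss(mu, 1.0) for _ in range(1000)] for mu in (0.0, 0.0, 3.0, 3.0)]

print(f"well-mixed chains: R-hat = {gelman_rubin(mixed):.3f}")
print(f"stuck chains:      R-hat = {gelman_rubin(stuck):.3f}")
```

Values close to 1 suggest the chains agree with each other; values clearly above 1 indicate that the chains have not converged to the same distribution.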

The purpose of this workflow is to ensure a systematic and rigorous approach to Bayesian data analysis, from defining the

research question to drawing meaningful conclusions. It emphasizes the importance of model specification, prior choices,

diagnostics, and model comparisons to build confidence in the results and facilitate robust decision-making.
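A compressed version of the workflow can be sketched with a model simple enough to solve exactly. The example below assumes hypothetical data (7 successes in 20 trials) and an illustrative Beta(2, 2) prior; because the Beta-Binomial model is conjugate, the inference step needs no MCMC, so the sampler-diagnostics step is skipped here. All names and numbers are assumptions for illustration.

```python
import random

rng = random.Random(42)

# Hypothetical data: 7 successes in 20 trials.
n_trials, successes = 20, 7

# Illustrative prior on the success probability: Beta(2, 2).
prior_a, prior_b = 2.0, 2.0


def simulate_successes(theta, n):
    """Draw the number of successes in n Bernoulli(theta) trials."""
    return sum(rng.random() < theta for _ in range(n))


# 1. Prior predictive check: what data do the priors alone imply?
prior_pred = [simulate_successes(rng.betavariate(prior_a, prior_b), n_trials)
              for _ in range(2000)]

# 2. Inference: conjugate update of the Beta prior with Binomial data.
post_a = prior_a + successes
post_b = prior_b + (n_trials - successes)
post_mean = post_a / (post_a + post_b)

# 3. Posterior predictive check: does the fitted model reproduce the data?
post_pred = [simulate_successes(rng.betavariate(post_a, post_b), n_trials)
             for _ in range(2000)]
frac_at_least_observed = sum(y >= successes for y in post_pred) / len(post_pred)

print(f"prior predictive range: {min(prior_pred)}..{max(prior_pred)}")
print(f"posterior mean of theta: {post_mean:.3f}")
print(f"share of replicated datasets >= observed: {frac_at_least_observed:.2f}")
```

If the observed count sat in the extreme tail of the replicated datasets, that would be a signal to revisit the model or the priors, which is the iterative part of the workflow.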

Question 9 :

(5p) To set correct insurance premiums, a car insurance firm is analyzing past data from several drivers. An example dataset is

shown below:

Driver   Age   Experience   Number of Incidents
1        45    High         1
2        29    Low          3
3        26    Medium       1
4        50    Medium       0
…        …     …            …

With this data, the firm wants to use Bayesian data analysis to predict the number of

accidents that are likely to happen given the age of the driver and their experience level