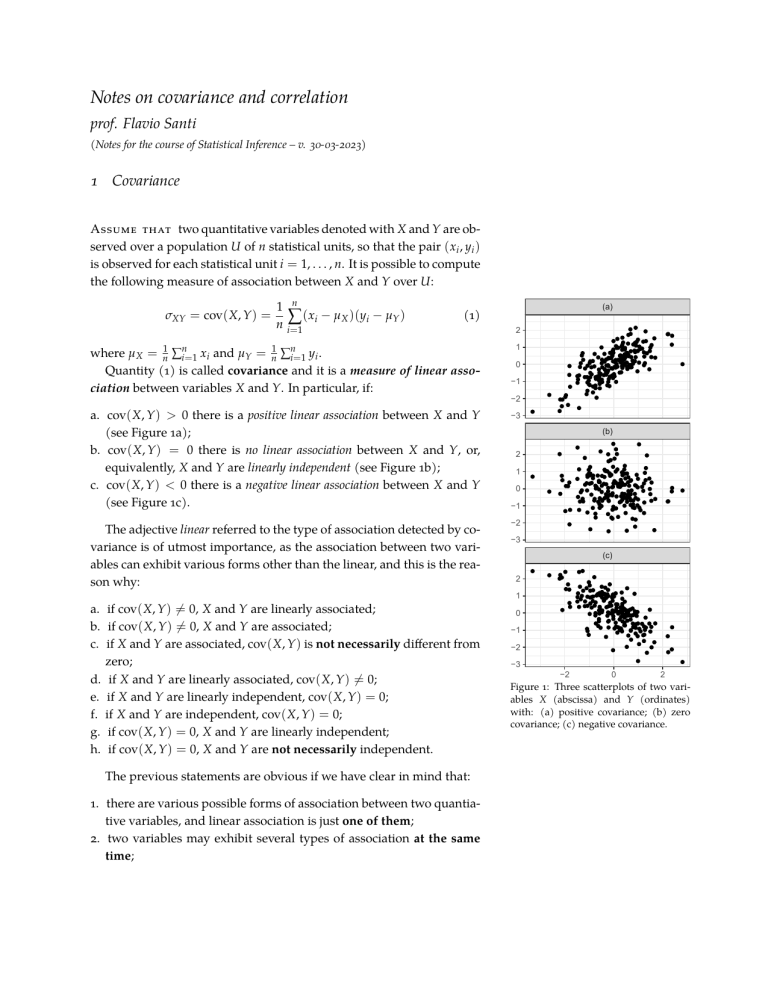

Notes on covariance and correlation

prof. Flavio Santi

(Notes for the course of Statistical Inference – v. 30-03-2023)

1 Covariance

Assume that two quantitative variables, denoted by $X$ and $Y$, are observed over a population $U$ of $n$ statistical units, so that the pair $(x_i, y_i)$ is observed for each statistical unit $i = 1, \dots, n$. The following measure of association between $X$ and $Y$ over $U$ can be computed:

$$
\sigma_{XY} = \operatorname{cov}(X, Y) = \frac{1}{n} \sum_{i=1}^{n} (x_i - \mu_X)(y_i - \mu_Y), \tag{1}
$$

where $\mu_X = \frac{1}{n} \sum_{i=1}^{n} x_i$ and $\mu_Y = \frac{1}{n} \sum_{i=1}^{n} y_i$.

Quantity (1) is called covariance, and it is a measure of linear association between the variables $X$ and $Y$. In particular:

a. if $\operatorname{cov}(X, Y) > 0$, there is a positive linear association between $X$ and $Y$ (see Figure 1a);
b. if $\operatorname{cov}(X, Y) = 0$, there is no linear association between $X$ and $Y$ or, equivalently, $X$ and $Y$ are linearly independent (see Figure 1b);
c. if $\operatorname{cov}(X, Y) < 0$, there is a negative linear association between $X$ and $Y$ (see Figure 1c).

Figure 1: Three scatterplots of two variables X (abscissa) and Y (ordinates) with: (a) positive covariance; (b) zero covariance; (c) negative covariance.

The adjective "linear" referring to the type of association detected by covariance is of the utmost importance, as the association between two variables can take various forms other than the linear one. This is why:

a. if $\operatorname{cov}(X, Y) \neq 0$, $X$ and $Y$ are linearly associated;
b. if $\operatorname{cov}(X, Y) \neq 0$, $X$ and $Y$ are associated;
c. if $X$ and $Y$ are associated, $\operatorname{cov}(X, Y)$ is not necessarily different from zero;
d. if $X$ and $Y$ are linearly associated, $\operatorname{cov}(X, Y) \neq 0$;
e. if $X$ and $Y$ are linearly independent, $\operatorname{cov}(X, Y) = 0$;
f. if $X$ and $Y$ are independent, $\operatorname{cov}(X, Y) = 0$;
g. if $\operatorname{cov}(X, Y) = 0$, $X$ and $Y$ are linearly independent;
h. if $\operatorname{cov}(X, Y) = 0$, $X$ and $Y$ are not necessarily independent.

The previous statements are obvious once we keep in mind that:

1. there are various possible forms of association between two quantitative variables, and linear association is just one of them;
2. two variables may exhibit several types of association at the same time;
3. independence between two quantitative variables means that there is no form of association at all between them.

Figure 2 provides four examples of linear independence between two quantitative variables.

Figure 2: Scatterplots between X (abscissa) and Y (ordinates) where: (a) there is independence; (b) there is quadratic association; (c) there is sinusoidal association; (d) there is cubic association. Covariance between X and Y in all four cases is exactly zero.

When information about two quantitative variables is structured in a contingency table:

| | $y_1$ | $y_2$ | $\cdots$ | $y_c$ | Total |
|---|---|---|---|---|---|
| $x_1$ | $n_{11}$ | $n_{12}$ | $\cdots$ | $n_{1c}$ | $n_{1\cdot}$ |
| $x_2$ | $n_{21}$ | $n_{22}$ | $\cdots$ | $n_{2c}$ | $n_{2\cdot}$ |
| $\vdots$ | $\vdots$ | $\vdots$ | $\ddots$ | $\vdots$ | $\vdots$ |
| $x_r$ | $n_{r1}$ | $n_{r2}$ | $\cdots$ | $n_{rc}$ | $n_{r\cdot}$ |
| Total | $n_{\cdot 1}$ | $n_{\cdot 2}$ | $\cdots$ | $n_{\cdot c}$ | $n$ |

Equation (1) can be adapted as follows:

$$
\operatorname{cov}(X, Y) = \frac{1}{n} \sum_{i=1}^{r} \sum_{j=1}^{c} (x_i - \mu_X)(y_j - \mu_Y)\, n_{ij}, \tag{2}
$$

where $\mu_X = \frac{1}{n} \sum_{i=1}^{r} x_i n_{i\cdot}$, $\mu_Y = \frac{1}{n} \sum_{j=1}^{c} y_j n_{\cdot j}$, and $n_{ij}$ is the absolute frequency of the pair $(x_i, y_j)$. Clearly, Equation (2) can also be restated by using the relative frequencies $f_{ij} = n_{ij}/n$:¹

$$
\operatorname{cov}(X, Y) = \sum_{i=1}^{r} \sum_{j=1}^{c} (x_i - \mu_X)(y_j - \mu_Y)\, f_{ij}. \tag{3}
$$

¹ See "Notes on contingency tables" for details on definitions and notation.

Covariance is actually a bounded measure of linear dependence, as it can only take real values such that:

$$
-\sigma_X \sigma_Y \le \sigma_{XY} \le \sigma_X \sigma_Y, \tag{4}
$$

where $\sigma_X$ and $\sigma_Y$ are the standard deviations of $X$ and $Y$ respectively.

Note: Inequality (4) is often referred to as the Cauchy-Schwarz inequality, named after Augustin-Louis Cauchy (1789–1857) and Karl Schwarz (1843–1921), although it is actually a special case of the Cauchy-Schwarz inequality, which is a far more general result.
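As a minimal sketch in R (the language used in Section 8 of these notes), Equations (1), (2) and (3) can be coded directly. The helper names `pop_cov`, `pop_sd` and `table_cov` are hypothetical, introduced only for illustration; unlike R's built-in `cov`, they divide by $n$, as (1) and (2) do. The sketch reuses the vectors of Section 8, turns the observed pairs into a contingency table, and checks that the three formulas agree and that inequality (4) holds.

```r
# Hypothetical helper: population covariance, Equation (1) (denominator n)
pop_cov <- function(x, y) {
  mean((x - mean(x)) * (y - mean(y)))
}

# Hypothetical helper: population standard deviation (denominator n)
pop_sd <- function(v) {
  sqrt(mean((v - mean(v))^2))
}

# Hypothetical helper: covariance from a contingency table, Equation (2).
# xv: distinct values of X (rows); yv: distinct values of Y (columns);
# freq: r x c matrix of absolute frequencies n_ij
table_cov <- function(xv, yv, freq) {
  n <- sum(freq)
  mu_x <- sum(xv * rowSums(freq)) / n   # weights are the row totals n_i.
  mu_y <- sum(yv * colSums(freq)) / n   # weights are the column totals n_.j
  sum(outer(xv - mu_x, yv - mu_y) * freq) / n
}

x <- c(2, 3, 0, 5, 6, 8)
y <- c(0, 9, -1, 2, 3, 2)

# Contingency table of the observed pairs (here every pair occurs once);
# unclass() turns the table object into a plain frequency matrix
tab <- unclass(table(x, y))
xv <- as.numeric(rownames(tab))
yv <- as.numeric(colnames(tab))

pop_cov(x, y)              # Equation (1): 11/6, about 1.833
table_cov(xv, yv, tab)     # Equation (2): same value
# Equation (3), with relative frequencies f_ij = n_ij / n
sum(outer(xv - mean(x), yv - mean(y)) * tab / sum(tab))

# Inequality (4): |sigma_XY| <= sigma_X * sigma_Y
abs(pop_cov(x, y)) <= pop_sd(x) * pop_sd(y)   # TRUE
```

Since every pair occurs once in this small example, the table contains only zeros and ones; with grouped data, `freq` would hold the actual counts $n_{ij}$.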
Covariance between two quantitative variables reaches either of its bounds only when the strength of the linear association is maximum. In those cases, linear association is also the only form of association (see Figures 3a and 3e), and we say that there is perfect linear dependence or, less often, perfect linear association.² Figure 3 shows five scatterplots where the covariance between the variables ranges from its lower bound to its upper bound.

² In this lesson we use those terms interchangeably.

Figure 3: Scatterplots between X (abscissa) and Y (ordinates) where: (a) $\sigma_{XY} = -\sigma_X \sigma_Y$; (b) $\sigma_{XY} = -0.75\, \sigma_X \sigma_Y$; (c) $\sigma_{XY} = 0$; (d) $\sigma_{XY} = 0.75\, \sigma_X \sigma_Y$; (e) $\sigma_{XY} = \sigma_X \sigma_Y$.

2 Correlation

The fact that the lower and upper bounds of covariance depend on the product of the standard deviations (see Equation (4)) makes interpreting the covariance between two variables a tricky task: the value of the covariance must always be compared with the nearest bound before any conclusion about the strength of the linear association between the two variables can be drawn. This is the reason why the correlation coefficient has been proposed.

Let $X$ and $Y$ be two quantitative variables observed on a population $U$ of $n$ statistical units. The correlation between $X$ and $Y$ is defined as follows:³

$$
r_{XY} = \operatorname{cor}(X, Y) = \frac{\operatorname{cov}(X, Y)}{\operatorname{SD}(X) \cdot \operatorname{SD}(Y)} = \frac{\sigma_{XY}}{\sigma_X \sigma_Y}. \tag{5}
$$

The correlation coefficient (5) is usually referred to as Pearson's correlation, although it was first derived by Auguste Bravais (1811–1863); for this reason, it is sometimes also referred to as Bravais' correlation.

Equation (5) defines the correlation coefficient $r_{XY}$ as a monotonically increasing transformation of the covariance $\sigma_{XY}$ (note that $\sigma_X, \sigma_Y > 0$), such that $r_{XY} = 0$ if and only if $\sigma_{XY} = 0$. This makes correlation a measure of linear association equivalent to covariance.
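The interpretation problem described above can be seen numerically by changing the unit of measurement of one variable. The following is a minimal sketch in R, assuming hypothetical helpers `pop_cov`, `pop_sd` and `pop_cor` (all with denominator $n$, as in (1) and (5)) and reusing the vectors of Section 8: multiplying `x` by 100 multiplies the covariance by 100, while the correlation does not change.

```r
# Hypothetical helpers with denominator n, as in Equations (1) and (5)
pop_cov <- function(x, y) mean((x - mean(x)) * (y - mean(y)))
pop_sd  <- function(v) sqrt(mean((v - mean(v))^2))

# Correlation, Equation (5): covariance over the product of the SDs
pop_cor <- function(x, y) pop_cov(x, y) / (pop_sd(x) * pop_sd(y))

x <- c(2, 3, 0, 5, 6, 8)
y <- c(0, 9, -1, 2, 3, 2)
x_100 <- 100 * x   # the same variable expressed in a unit 100 times smaller

pop_cov(x, y)      # about 1.833
pop_cov(x_100, y)  # 100 times larger, yet the association is unchanged
pop_cor(x, y)      # about 0.2164
pop_cor(x_100, y)  # unchanged
```

The last two values also coincide with R's `cor(x, y)`, because the denominators cancel in the ratio (5).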
Nonetheless, the correlation coefficient has a further property that makes it a useful measure of linear association. If each term of inequality (4) is divided by the product of the standard deviations, it follows that:

$$
-1 \le r_{XY} \le 1. \tag{6}
$$

Bounds (6) make the interpretation of the correlation coefficient immediate because, unlike the bounds of covariance, they do not depend on other characteristics of the variables. For example, $r_{XY} = 0.85$ denotes a fairly strong positive linear association between $X$ and $Y$, whereas if $\sigma_{ZW} = 18$, nothing can be concluded about the strength of the linear association between $Z$ and $W$ unless the product of the standard deviations $\sigma_Z \sigma_W$ is known.

³ Usually, the population correlation is denoted by $\rho_{XY}$, whereas the sample correlation is denoted by $r_{XY}$. We will omit this distinction in these pages. Pearson's correlation coefficient is not the only correlation coefficient in the statistical literature: two other important ones are Spearman's correlation and Kendall's correlation.

3 Covariance of random variables

Covariance is also defined for random variables. For two discrete random variables $X$ and $Y$, covariance is defined as follows:

$$
\operatorname{cov}(X, Y) = E\big[(X - E(X))(Y - E(Y))\big] = \sum_i \sum_j (x_i - E(X))(y_j - E(Y))\, p_{ij},
$$

where $p_{ij}$ is the joint probability mass function of the bivariate random variable $(X, Y)$, that is, $p_{ij} = P([X = x_i], [Y = y_j])$. If instead $X$ and $Y$ are continuous random variables, covariance is defined as follows:

$$
\operatorname{cov}(X, Y) = E\big[(X - E(X))(Y - E(Y))\big] = \int\!\!\int (x - E(X))(y - E(Y))\, f(x, y)\, dx\, dy,
$$

where $f(x, y)$ is the joint probability density function of the bivariate random variable $(X, Y)$.
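The double sum defining the covariance of two discrete random variables can be evaluated directly from a joint probability table. The following minimal sketch in R uses a made-up joint pmf $p_{ij}$ (the support values and probabilities are hypothetical, chosen only for illustration); it also computes the correlation, which must fall within bounds (6).

```r
# Hypothetical support of X (rows) and Y (columns)
xs <- c(0, 1, 2)
ys <- c(0, 1)

# Hypothetical joint pmf p_ij = P(X = x_i, Y = y_j); entries sum to 1
p <- matrix(c(0.20, 0.10,
              0.15, 0.25,
              0.05, 0.25),
            nrow = 3, byrow = TRUE)
stopifnot(isTRUE(all.equal(sum(p), 1)))

# Expectations from the marginal pmfs (row and column sums of p)
EX <- sum(xs * rowSums(p))
EY <- sum(ys * colSums(p))

# Covariance: double sum of (x_i - E(X))(y_j - E(Y)) p_ij
cov_XY <- sum(outer(xs - EX, ys - EY) * p)
cov_XY   # 0.15 for these made-up numbers

# Variances and correlation; the latter must lie in [-1, 1]
VX <- sum((xs - EX)^2 * rowSums(p))
VY <- sum((ys - EY)^2 * colSums(p))
cor_XY <- cov_XY / sqrt(VX * VY)
cor_XY   # about 0.395
```

The same value is obtained from the shortcut $E(XY) - E(X)E(Y)$, with $E(XY)$ computed as `sum(outer(xs, ys) * p)`.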
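4 Covariance and correlation estimators

When a random sample of size $n$ is observed, the population covariance of two variables $X$ and $Y$ can be estimated as follows:

$$
\hat\sigma_{XY} = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y), \tag{7}
$$

where $\bar x$ and $\bar y$ are the sample means of $X$ and $Y$ respectively. Estimator (7) is referred to as the sample covariance, and it is an unbiased and consistent estimator of the population covariance $\sigma_{XY}$. The following estimator:

$$
\hat r_{XY} = \frac{\hat\sigma_{XY}}{s_X \cdot s_Y}, \tag{8}
$$

where $s_X$ and $s_Y$ are the sample standard deviations of $X$ and $Y$ respectively, is referred to as the sample correlation, and it is a consistent estimator of the population correlation $r_{XY}$.

Note that Agresti et al. [2018, p. 135] define the correlation coefficient as follows:

$$
\hat r_{XY} = \frac{1}{n-1} \sum_{i=1}^{n} \frac{x_i - \bar x}{s_X} \cdot \frac{y_i - \bar y}{s_Y}. \tag{9}
$$

Equation (9) is equivalent to (8), since:

$$
\begin{aligned}
\hat r_{XY} = \frac{\hat\sigma_{XY}}{s_X \cdot s_Y}
&= \frac{1}{s_X \cdot s_Y} \cdot \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y) \\
&= \frac{1}{n-1} \sum_{i=1}^{n} \frac{x_i - \bar x}{s_X} \cdot \frac{y_i - \bar y}{s_Y}.
\end{aligned}
$$

Note that, unlike the sample covariance and the covariance, the sample correlation and the correlation are actually identical when computed over a sample, because the factor $\frac{1}{n}$ (or $\frac{1}{n-1}$) in the numerator cancels with the same factor under the square root:

$$
\begin{aligned}
\frac{\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)}{\sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_i - \bar x)^2 \cdot \frac{1}{n} \sum_{i=1}^{n} (y_i - \bar y)^2}}
&= \frac{\sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)}{\sqrt{\sum_{i=1}^{n} (x_i - \bar x)^2 \cdot \sum_{i=1}^{n} (y_i - \bar y)^2}} \\
&= \frac{\frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)}{\sqrt{\frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar x)^2 \cdot \frac{1}{n-1} \sum_{i=1}^{n} (y_i - \bar y)^2}}
= \frac{\hat\sigma_{XY}}{s_X \cdot s_Y} = \hat r_{XY}.
\end{aligned}
$$

5 Properties of covariance

Besides inequality (4), the following properties of covariance can be proved:⁴

a. $\operatorname{cov}(X, Y) = \mu_{XY} - \mu_X \mu_Y$, where $\mu_{XY} = \frac{1}{n} \sum_{i=1}^{n} x_i y_i$ is the mean of the products, whereas $\operatorname{cov}(X, Y) = E(XY) - E(X)E(Y)$ if $X$ and $Y$ are random variables;
b. $\operatorname{cov}(X, X) = \operatorname{Var}(X)$;
c. $\operatorname{cov}(X, a) = 0$ for any constant $a \in \mathbb{R}$;
d. $\operatorname{cov}(X, aX + b) = a \operatorname{Var}(X)$ for any constants $a, b \in \mathbb{R}$;
e. $\operatorname{cov}(X, aY + b) = a \operatorname{cov}(X, Y)$ for any constants $a, b \in \mathbb{R}$;
f. $\operatorname{cov}(X, Y + Z) = \operatorname{cov}(X, Y) + \operatorname{cov}(X, Z)$.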
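Estimators (7), (8) and (9), and several properties of Section 5, can be checked numerically against R's built-in `cov`, `cor`, `var` and `sd`. The following is a minimal sketch reusing the vectors of Section 8; the third vector `z` and the constants `a` and `b` are hypothetical values chosen only for illustration. Because (7) divides by $n - 1$, the identity of property a carries over to the sample covariance only after multiplying its right-hand side by $n/(n-1)$.

```r
x <- c(2, 3, 0, 5, 6, 8)
y <- c(0, 9, -1, 2, 3, 2)
n <- length(x)

# Sample covariance, Equation (7): denominator n - 1
cov_hat <- sum((x - mean(x)) * (y - mean(y))) / (n - 1)

# Sample correlation, Equation (8)
r_hat_8 <- cov_hat / (sd(x) * sd(y))

# Agresti et al.'s form, Equation (9): average product of standardised values
r_hat_9 <- sum(((x - mean(x)) / sd(x)) * ((y - mean(y)) / sd(y))) / (n - 1)

# The same ratio with denominator n throughout: the factors cancel
r_pop <- mean((x - mean(x)) * (y - mean(y))) /
  sqrt(mean((x - mean(x))^2) * mean((y - mean(y))^2))

c(cov_hat, cov(x, y))                  # both 2.2
c(r_hat_8, r_hat_9, r_pop, cor(x, y))  # all about 0.2164

# Properties of Section 5, checked on the sample covariance
z <- c(1, 4, 2, 2, 7, 5)   # hypothetical third variable
a <- 3
b <- -2
all.equal(cov(x, y), n / (n - 1) * (mean(x * y) - mean(x) * mean(y)))  # a, rescaled
all.equal(cov(x, x), var(x))                                           # b
cov(x, rep(a, n))                                                      # c: 0
all.equal(cov(x, a * x + b), a * var(x))                               # d
all.equal(cov(x, a * y + b), a * cov(x, y))                            # e
all.equal(cov(x, y + z), cov(x, y) + cov(x, z))                        # f
```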
Note that all these properties hold for the population covariance, the sample covariance, and the covariance of random variables (both discrete and continuous), with one caveat: for the sample covariance (7), the right-hand side of property a must be multiplied by $n/(n-1)$, because of the $n - 1$ denominator.

⁴ Proofs of the properties above, as well as of their counterparts for correlation, are part of the exam syllabus. Every property has been proved during lectures; try to prove all of them as an exercise. (Property a for random variables has not been proved, as this is beyond the scope of the course.)

6 Properties of correlation

Definition (5) and the properties of the standard deviation⁵ make it easy to prove the following properties of the correlation coefficient:

a. $\operatorname{cor}(X, X) = 1$;
b. $\operatorname{cor}(X, a) = 0$ for any constant $a \in \mathbb{R}$ (by convention: since $\operatorname{SD}(a) = 0$, ratio (5) is actually the indeterminate form $0/0$);
c. $\operatorname{cor}(X, aX + b) = \frac{a}{|a|}$ for any constants $a, b \in \mathbb{R}$ with $a \neq 0$;
d. $\operatorname{cor}(X, aY + b) = \frac{a}{|a|} \operatorname{cor}(X, Y)$ for any constants $a, b \in \mathbb{R}$ with $a \neq 0$.

⁵ Recall that $\operatorname{SD}(aX + b) = |a| \operatorname{SD}(X)$ for any constants $a, b \in \mathbb{R}$.

Note that these properties also hold for the population correlation, the sample correlation, and the correlation of random variables (both discrete and continuous).

7 Variance of the sum of random variables

Let $X_1, X_2, \dots, X_n$ be $n$ random variables, and let $S$ be their sum:

$$
S = \sum_{i=1}^{n} X_i.
$$

It can be proved that:

$$
E(S) = \sum_{i=1}^{n} E(X_i), \tag{10a}
$$

$$
\operatorname{Var}(S) = \sum_{i=1}^{n} \operatorname{Var}(X_i) + 2 \sum_{i=1}^{n} \sum_{j > i} \operatorname{cov}(X_i, X_j). \tag{10b}
$$

It follows that, for example:

$$
\begin{aligned}
E(X_1 + X_2) &= E(X_1) + E(X_2), \\
\operatorname{Var}(X_1 + X_2) &= \operatorname{Var}(X_1) + \operatorname{Var}(X_2) + 2 \operatorname{cov}(X_1, X_2),
\end{aligned}
$$

and

$$
\begin{aligned}
E(X_1 + X_2 + X_3) &= E(X_1) + E(X_2) + E(X_3), \\
\operatorname{Var}(X_1 + X_2 + X_3) &= \operatorname{Var}(X_1) + \operatorname{Var}(X_2) + \operatorname{Var}(X_3) \\
&\quad + 2 \operatorname{cov}(X_1, X_2) + 2 \operatorname{cov}(X_1, X_3) + 2 \operatorname{cov}(X_2, X_3).
\end{aligned}
$$

If the random variables $X_1, X_2, \dots, X_n$ are linearly independent (hence all their covariances and correlations are zero), Equations (10) simplify to:⁶

$$
E(S) = \sum_{i=1}^{n} E(X_i), \tag{11a}
$$

$$
\operatorname{Var}(S) = \sum_{i=1}^{n} \operatorname{Var}(X_i). \tag{11b}
$$

⁶ Obviously, this result also holds if the random variables are independent.
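The correlation properties of Section 6 and Equations (10b) and (11b) can be checked in R. In this minimal sketch, the vector `z`, the simulated vectors `u1` and `u2`, the seed and the sample size are hypothetical choices for illustration. Equation (10b) is checked with sample variances and covariances: the identity is algebraic, so it also holds exactly for sample moments. Equation (11b) is only illustrated approximately, by simulating two independent normal variables with variances 1 and 4.

```r
x <- c(2, 3, 0, 5, 6, 8)
y <- c(0, 9, -1, 2, 3, 2)
z <- c(1, 4, 2, 2, 7, 5)   # hypothetical third variable

# Properties of correlation (Section 6), with a != 0
cor(x, x)              # property a: 1
cor(x, -2 * x + 1)     # property c with a < 0: -1
cor(x, 3 * y - 2)      # property d with a > 0: equals cor(x, y)
cor(x, -3 * y + 2)     # property d with a < 0: equals -cor(x, y)

# Equation (10b) for three variables, with sample variances and covariances
lhs <- var(x + y + z)
rhs <- var(x) + var(y) + var(z) +
  2 * (cov(x, y) + cov(x, z) + cov(y, z))
all.equal(lhs, rhs)    # TRUE

# Equation (11b), approximately: independent draws with variances 1 and 4
set.seed(123)
m <- 100000
u1 <- rnorm(m, sd = 1)
u2 <- rnorm(m, sd = 2)
var(u1 + u2)           # close to 1 + 4 = 5
```

The simulated value is not exactly 5, because the sample covariance between `u1` and `u2` is close to, but not exactly, zero.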
Equations (11) allow one to prove the properties of the sample mean estimator:

$$
\bar X_n = \frac{1}{n} \sum_{i=1}^{n} X_i
$$

when $X_1, X_2, \dots, X_n$ are $n$ independent and identically distributed (iid) random variables such that $E(X_i) = \mu$ and $\operatorname{Var}(X_i) = \sigma^2$ for any $i = 1, 2, \dots, n$. First of all, note that:

$$
\begin{aligned}
E(\bar X_n) &= E\left(\frac{1}{n} \sum_{i=1}^{n} X_i\right) = \frac{1}{n} E\left(\sum_{i=1}^{n} X_i\right) = \frac{1}{n} \sum_{i=1}^{n} E(X_i) \\
&= \frac{1}{n} \sum_{i=1}^{n} \mu = \frac{1}{n} n \mu = \mu.
\end{aligned}
$$

Secondly, the variance of the sample mean can be derived as follows:

$$
\begin{aligned}
\operatorname{Var}(\bar X_n) &= \operatorname{Var}\left(\frac{1}{n} \sum_{i=1}^{n} X_i\right) = \frac{1}{n^2} \operatorname{Var}\left(\sum_{i=1}^{n} X_i\right) = \frac{1}{n^2} \sum_{i=1}^{n} \operatorname{Var}(X_i) \\
&= \frac{1}{n^2} \sum_{i=1}^{n} \sigma^2 = \frac{1}{n^2} n \sigma^2 = \frac{\sigma^2}{n}.
\end{aligned}
\tag{12}
$$

The mutual independence of $X_1, X_2, \dots, X_n$ allows the variance of the sum $\operatorname{Var}\left(\sum_{i=1}^{n} X_i\right)$ in (12) to be computed as the sum of the variances $\sum_{i=1}^{n} \operatorname{Var}(X_i)$, according to (11), since all the covariances in (10) are zero.

8 Covariance and correlation with R

Seven functions of R are particularly useful when quantitative variables have to be analysed:

1. length: computes the number of elements of a vector;
2. sum: computes the sum of the elements of a vector;
3. mean: computes the sample mean of a vector;
4. var: computes the sample variance of a vector;
5. sd: computes the sample standard deviation of a vector;
6. cov: computes the sample covariance between two vectors;
7. cor: computes the correlation between two vectors.

Note that var, sd and cov use $n - 1$ instead of $n$ as the denominator (cor is not affected, as shown in Section 4). It follows that if the following vector is defined:

```r
x <- c(2, 3, 0, 5, 6, 8)
x
## [1] 2 3 0 5 6 8
```

and the number of elements is computed as follows:

```r
n <- length(x)
n
## [1] 6
```

its variance can be computed either as follows:

```r
mean((x - mean(x))^2)
## [1] 7
```

as follows:

```r
sum((x - mean(x))^2) / n
## [1] 7
```

or as follows:

```r
(n - 1) / n * var(x)
## [1] 7
```

since var returns the sample variance:

```r
var(x)
## [1] 8.4
```

If the vector y is defined as follows:

```r
y <- c(0, 9, -1, 2, 3, 2)
```

the sample covariance between x and y is computed as:

```r
cov(x, y)
## [1] 2.2
```

whereas the correlation is computed as:

```r
cor(x, y)
## [1] 0.2164365
```

References

A. Agresti, C. Franklin, and B. Klingenberg. Statistics. Pearson, 4th edition, 2018. ISBN 978-1-292-16477-9.