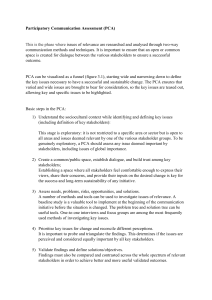

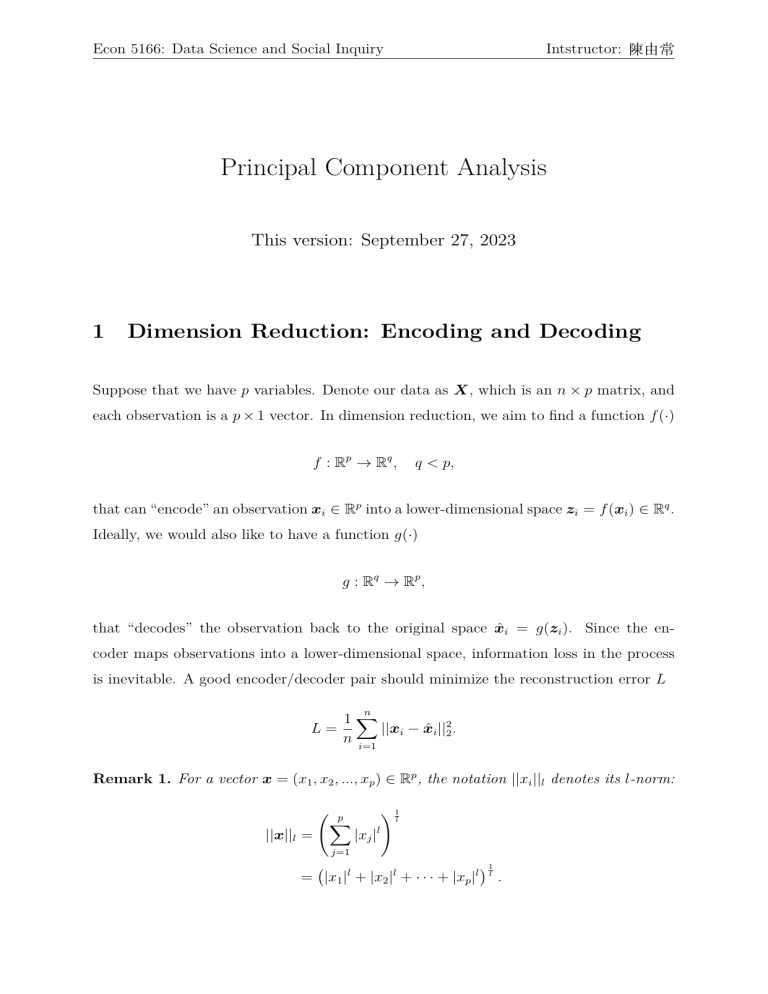

Econ 5166: Data Science and Social Inquiry
Instructor: 陳由常

Principal Component Analysis

This version: September 27, 2023

1 Dimension Reduction: Encoding and Decoding

Suppose that we have $p$ variables. Denote our data as $X$, an $n \times p$ matrix in which each observation $x_i$ is a $p \times 1$ vector. In dimension reduction, we aim to find a function

$$f : \mathbb{R}^p \to \mathbb{R}^q, \quad q < p,$$

that can “encode” an observation $x_i \in \mathbb{R}^p$ into a lower-dimensional space, $z_i = f(x_i) \in \mathbb{R}^q$. Ideally, we would also like to have a function

$$g : \mathbb{R}^q \to \mathbb{R}^p$$

that “decodes” the observation back to the original space, $\hat{x}_i = g(z_i)$. Since the encoder maps observations into a lower-dimensional space, information loss in the process is inevitable. A good encoder/decoder pair should minimize the reconstruction error

$$L = \frac{1}{n} \sum_{i=1}^{n} \lVert x_i - \hat{x}_i \rVert_2^2 .$$

Remark 1. For a vector $x = (x_1, x_2, \ldots, x_p) \in \mathbb{R}^p$, the notation $\lVert x \rVert_l$ denotes its $l$-norm:

$$\lVert x \rVert_l = \left( \sum_{j=1}^{p} |x_j|^l \right)^{1/l} = \left( |x_1|^l + |x_2|^l + \cdots + |x_p|^l \right)^{1/l} .$$

For example, when $l = 2$, it is known as the L2 norm, which is the Euclidean norm that we have been using since high school. Later in this course, we will also use the L1 norm, denoted as $\lVert x \rVert_1$. Unless otherwise specified, however, we use the L2 norm.

How do we find an encoder/decoder pair? Consider the following scatter plot of our data with $p = 2$. Is our data two-dimensional or one-dimensional?

[Figure: scatter plot of the data, with $x_1$ on the horizontal axis and $x_2$ on the vertical axis.]

From the graph, we can see that most of our observations are close to the 45-degree line. So why not project our data onto that line, which is a one-dimensional space? Consider the following example.

Example 1 (“Eyeball” PCA). Suppose $p = 2$. From the scatter plot above, it appears that we can reduce the dimension of our data from $p = 2$ to $q = 1$ with minimal information loss by projecting the data onto the 45-degree line.
Specifically, let

$$A = \begin{pmatrix} \frac{1}{\sqrt{2}} \\ \frac{1}{\sqrt{2}} \end{pmatrix}$$

and consider the function $f(x) = A^T x$ that maps from $\mathbb{R}^2$ to $\mathbb{R}^1$, and $g(z) = Az$ that maps from $\mathbb{R}^1$ to $\mathbb{R}^2$. You can verify that the encoder $f(\cdot)$ returns the coordinate of an observation along the 45-degree line, and that the compressed observation, i.e., the projection of $x$ onto that line, is given by $\hat{x} = g(z) = g(f(x))$, with the reconstruction error given by $\lVert x - \hat{x} \rVert$. See the figures and table below for an illustration.

Quiz 1. Can you find $z \in \mathbb{R}$, $\hat{x} \in \mathbb{R}^2$, and the reconstruction error $\lVert x - \hat{x} \rVert$ for $x = (3, 4)^T$?

[Figure: Graph of projection. The points $(3, 4)$, $(7, 3)$, and $(-5, 3)$ are projected onto the line $x = y$.]

| $x$ | $z = A^T x$ | $\hat{x} = Az$ | $\lVert x - \hat{x} \rVert$ |
|---|---|---|---|
| $(3, 4)$ | $7/\sqrt{2}$ | $(7/2, 7/2)$ | $1/\sqrt{2}$ |
| $(7, 3)$ | $10/\sqrt{2}$ | $(5, 5)$ | $2\sqrt{2}$ |
| $(-5, 3)$ | $-2/\sqrt{2}$ | $(-1, -1)$ | $4\sqrt{2}$ |

[Figure: an observation $x$, its projection $\hat{x} = Az$, and the origin $(0, 0)$.]

Example 1 essentially demonstrates how principal component analysis (PCA) operates in practice. PCA seeks to exploit the correlation between variables to capture the predominant variation in the data. In the extreme case, when the correlation coefficient is close to 1 in absolute value, two variables are nearly linearly dependent (i.e., $y \approx ax + b$ for some $a, b \in \mathbb{R}$), and the observations lie along a line, as illustrated in Example 1.

For $p = 2$ or $p = 3$, we have the luxury of visualizing the data to detect whether there exists a lower-dimensional space (a line or a plane) that adequately represents our data. However, this visual inspection, or “eyeballing,” becomes infeasible for dimensions beyond $p = 3$. What is our alternative? To address cases where $p > 3$, we must generalize our approach by abstracting the ideas we applied in lower dimensions.

2 Beyond p = 3: Deriving PCA from Scratch

As illustrated in Example 1, the core concept of PCA is to identify a lower-dimensional space onto which our observations can be projected. How can we formalize this concept for $p > 3$? The key to generalization is abstraction.
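Before abstracting, the numbers in Example 1 can be reproduced with a few lines of code. The sketch below is only an illustration: the function names `encode`, `decode`, and `reconstruction_error` are not part of the notes; they simply implement $f(x) = A^T x$, $g(z) = Az$, and $\lVert x - g(f(x)) \rVert$ for the 45-degree direction.

```python
import math

# Direction of the 45-degree line from Example 1: A = (1/sqrt(2), 1/sqrt(2))^T.
A = (1 / math.sqrt(2), 1 / math.sqrt(2))


def encode(x):
    """f(x) = A^T x: the coordinate of x along the 45-degree line."""
    return A[0] * x[0] + A[1] * x[1]


def decode(z):
    """g(z) = A z: map the one-dimensional coordinate back into R^2."""
    return (A[0] * z, A[1] * z)


def reconstruction_error(x):
    """Euclidean distance between x and its reconstruction g(f(x))."""
    x_hat = decode(encode(x))
    return math.hypot(x[0] - x_hat[0], x[1] - x_hat[1])


# The three observations used in the table of Example 1.
for x in [(3, 4), (7, 3), (-5, 3)]:
    z = encode(x)
    x_hat = decode(z)
    print(x, round(z, 4), tuple(round(v, 4) for v in x_hat),
          round(reconstruction_error(x), 4))
```

Each printed row lists $x$, $z$, $\hat{x}$, and the reconstruction error, so it can be compared with the corresponding row of the table.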
For $q = 1$, we may characterize PCA as “finding (1) a line to (2) project onto, with the aim of minimizing the (3) reconstruction error.” How might this statement be generalized for $q > 1$? Essentially, three questions need to be addressed:

1. What is the entity onto which we are projecting when $q > 1$?
2. How is projection defined for $q > 1$?
3. How is reconstruction defined for $q > 1$?

What is the entity onto which we are projecting when $q > 1$? For $q = 1$, we are looking for a line; for $q = 2$, we seek a plane, and so forth. Since one vector is required to span a line, and two vectors to span a plane, in general we need $q$ vectors $a_1, a_2, \ldots, a_q$ to construct a $q$-dimensional space. In PCA, our goal is to identify these vectors, termed the “principal components”, which span the lower-dimensional space. Here, $a_1$ is the first principal component (PC), $a_2$ is the second PC, and so on.

| Project to | $q = 1$ | $q = 2$ | $q = 3$ |
|---|---|---|---|
| Find | a line | a plane | a 3D space |
| Need vectors (“principal components”) | $a_1 \in \mathbb{R}^p$ | $a_1, a_2 \in \mathbb{R}^p$ | $a_1, a_2, a_3 \in \mathbb{R}^p$ |

Remark 2. Note that if the original data is $p$-dimensional, we have at most $p$ PCs.

Given that the vectors $a_1, a_2, \ldots, a_q \in \mathbb{R}^p$ define a lower-dimensional subspace (of dimension $q$) in $\mathbb{R}^p$, we impose the conditions:

$$\lVert a_j \rVert_2 = \sqrt{a_j^T a_j} = 1 \quad \text{(unit length)},$$

$$a_j^T a_k = 0 \quad \text{(orthogonality, for } j \neq k\text{)}.$$

The principal components $a_1, a_2, \ldots, a_q$ have unit length because they represent directions. Requiring them to be orthogonal, as we will see below, simplifies the definition of the projection onto the space they span.

Quiz 2. Which of the following vectors can possibly be the first two PCs for data with three variables ($p = 3$)?

1 3 1. a1 = , a2 = 2 2 3. a1 = 0.5 , a2 = 0.5 1 0 √ 2. a1 = 2 , a2 = 0 0 1 −1 1 1 0 4. a1 = 0 , a2 = 0 0 1

Now, how do we define projection for $q > 1$?¹ It turns out that if we define

$$A_{p \times q} = \begin{bmatrix} a_1 & a_2 & \cdots & a_q \end{bmatrix},$$

then for an observation $x_i = (x_{i1}, x_{i2}, \ldots, x_{ip})^T \in \mathbb{R}^p$:

$$A^T x_i = \underbrace{\begin{bmatrix} a_1^T \\ a_2^T \\ \vdots \\ a_q^T \end{bmatrix}}_{q \times p} \cdot \underbrace{x_i}_{p \times 1} = \begin{bmatrix} a_1^T x_i \\ a_2^T x_i \\ \vdots \\ a_q^T x_i \end{bmatrix} \in \mathbb{R}^q .$$

¹ Note that, in math, an understanding of the case for $q = 2$ would automatically generalize to any finite-dimensional case. So it is fine to assume $q = 2$ for the discussion.

So, the $j$-th component of $A^T x_i$ is simply the inner product of $a_j$ and $x_i$. Since $a_j$ has unit length, the projection of $x_i$ on $a_j$ is simply $(a_j^T x_i) \, a_j$. The matrix multiplication $A^T x_i$ therefore encodes $x_i$ in terms of its coordinates on $a_1, a_2, \ldots, a_q$. In matrix notation, the projection of $x_i$ onto the space spanned by $a_1, a_2, \ldots, a_q$ is then given by

$$\hat{x}_i = (a_1^T x_i) \, a_1 + (a_2^T x_i) \, a_2 + \cdots + (a_q^T x_i) \, a_q = \begin{bmatrix} a_1 & a_2 & \cdots & a_q \end{bmatrix} \begin{bmatrix} a_1^T x_i \\ a_2^T x_i \\ \vdots \\ a_q^T x_i \end{bmatrix} = A A^T x_i .$$

Summary: to reduce the dimension from $p$ to $q$, PCA aims to find $q$ vectors $a_1, a_2, \ldots, a_q \in \mathbb{R}^p$ that span a $q$-dimensional space onto which we project our data. Our goal is to find the optimal vectors (the principal components), namely those for which the reconstruction error is smallest. That is, we want to solve the minimization problem:

$$\min_{a_1, a_2, \ldots, a_q \in \mathbb{R}^p} \; \frac{1}{n} \sum_{i=1}^{n} \lVert x_i - A A^T x_i \rVert_2^2$$

$$\text{s.t.} \quad a_j^T a_j = 1, \; j = 1, 2, \ldots, q, \qquad a_j^T a_k = 0, \; j \neq k,$$

where

$$A_{p \times q} = \begin{bmatrix} a_1 & a_2 & \cdots & a_q \end{bmatrix},$$

and $A A^T x_i \in \mathbb{R}^p$ is the projected (compressed) version of $x_i \in \mathbb{R}^p$; it lies in the $q$-dimensional subspace spanned by $a_1, a_2, \ldots, a_q$, and its coordinates in that subspace are $A^T x_i \in \mathbb{R}^q$.

Quiz 3. Suppose that $p = 3$. What is the matrix $A$ for the projection onto the plane $x = y$?

3 Solving PCA

So far, we have spent a lot of time defining PCA. But how can we solve it, i.e., how do we find the PCs $a_1, a_2, \ldots, a_q$?

PCA attempts to summarize the variation in the random vector $X$ with a few principal components (PCs). Below, we define the principal components and explain how to derive them.
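As a concrete check of the projection $\hat{x}_i = A A^T x_i$ from Section 2, here is a minimal sketch for $p = 3$ and $q = 2$. The vectors `a1`, `a2` and the observation `x` are made-up values chosen only for illustration, and the helper names `dot` and `project` are not from the notes.

```python
import math


def dot(u, v):
    """Inner product of two vectors given as lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def project(x, components):
    """Return (z, x_hat) with z = A^T x and x_hat = A A^T x.

    `components` holds the columns a_1, ..., a_q of A and is assumed
    to be orthonormal (unit length, mutually orthogonal).
    """
    z = [dot(a, x) for a in components]            # encode: coordinates on a_1..a_q
    x_hat = [sum(zj * a[k] for zj, a in zip(z, components))
             for k in range(len(x))]               # decode: sum_j z_j * a_j
    return z, x_hat


# Hypothetical orthonormal pair in R^3; they span the plane x2 = x3.
a1 = [1.0, 0.0, 0.0]
a2 = [0.0, 1 / math.sqrt(2), 1 / math.sqrt(2)]

# The two constraints imposed on principal components.
unit_length = abs(dot(a1, a1) - 1) < 1e-12 and abs(dot(a2, a2) - 1) < 1e-12
orthogonal = abs(dot(a1, a2)) < 1e-12

x = [2.0, 3.0, 1.0]                                # hypothetical observation
z, x_hat = project(x, [a1, a2])
residual = [xi - hi for xi, hi in zip(x, x_hat)]
error = math.sqrt(dot(residual, residual))

print(unit_length, orthogonal)
print(z, x_hat, residual, error)
```

Up to floating-point rounding, the reconstruction is $(2, 2, 2)$ and the residual $x - \hat{x} = (0, 1, -1)$ is orthogonal to both `a1` and `a2`, which is what makes $\hat{x}$ the closest point to $x$ in the plane.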
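In fact, we can solve PCA iteratively: we first find the first PC, $a_1$, then $a_2$, and so on up to $a_q$. To find the first PC, we solve

$$\min_{a_1 \in \mathbb{R}^p} \; \frac{1}{n} \sum_{i=1}^{n} \lVert x_i - a_1 a_1^T x_i \rVert_2^2 \quad \text{s.t.} \quad a_1^T a_1 = 1 .$$

Given $a_1$, we can then solve for $a_2$ by minimizing the error in reconstructing the remaining part $x_i - a_1 a_1^T x_i$:

$$\min_{a_2 \in \mathbb{R}^p} \; \frac{1}{n} \sum_{i=1}^{n} \lVert (x_i - a_1 a_1^T x_i) - a_2 a_2^T x_i \rVert_2^2 \quad \text{s.t.} \quad a_2^T a_2 = 1, \; a_2^T a_1 = 0 .$$

While we motivated PCA by minimizing reconstruction errors, an alternative definition of PCA is by “maximizing variances”. For example, the first PC can be found by solving the following problem:²

$$\max_{a_1 \in \mathbb{R}^p} \; Var(a_1^T X) \quad \text{s.t.} \quad a_1^T a_1 = 1 .$$

² For a rigorous argument, see our textbook (Murphy 2022).

Remark 3. The restriction $a_i^T a_i = 1$ is necessary; otherwise $Var(a_i^T X)$ can be made arbitrarily large by multiplying the coefficient vector by a constant.

The rest of the principal components can be defined iteratively. The second PC, $PC_2 = a_2^T X$, is defined by the optimization problem:

$$\max_{a_2 \in \mathbb{R}^p} \; Var(a_2^T X) \quad \text{s.t.} \quad a_2^T a_2 = 1, \; a_1^T a_2 = 0 .$$

Notice that we require the direction of the second PC to be orthogonal to that of the first. Because the solution $a_1$ turns out to be an eigenvector of the covariance matrix of $X$, this orthogonality also makes $a_2^T X$ uncorrelated with $a_1^T X$. We can think of the second PC as explaining the variation that is not explained by the first PC.

Similarly, the $j$-th PC, $a_j^T X$, is the solution to

$$\max_{a_j \in \mathbb{R}^p} \; Var(a_j^T X) \quad \text{s.t.} \quad a_j^T a_j = 1, \; a_j^T a_{j'} = 0 \text{ for } j' = 1, 2, \ldots, j - 1 .$$

Remark 4. Recall that

$$a_j^T x = \langle a_j, x \rangle = \begin{pmatrix} a_{j1} & a_{j2} & \cdots & a_{jp} \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_p \end{pmatrix} = a_{j1} x_1 + a_{j2} x_2 + \cdots + a_{jp} x_p ,$$

so $a_j^T x$ is a scalar.

3.1 Solve PCA when Σ is Diagonal

Remark 5. Let $\Sigma$ denote the covariance matrix of $X$. We can verify that $Var(a^T X) = a^T \Sigma a$. See the following example.

Example 2. Let $\Sigma = \begin{pmatrix} 1 & 0 \\ 0 & 2 \end{pmatrix}$ and $a = \begin{pmatrix} 1 \\ 2 \end{pmatrix}$. Since the off-diagonal entries of $\Sigma$ are zero, $X_1$ and $X_2$ are uncorrelated, and

$$\begin{aligned} Var(a^T X) &= Var\left( \begin{pmatrix} 1 & 2 \end{pmatrix} \begin{pmatrix} X_1 \\ X_2 \end{pmatrix} \right) \\ &= Var(X_1 + 2 X_2) \\ &= Var(X_1) + 4 \, Var(X_2) \\ &= 1 + 4 \cdot 2 = 9 . \end{aligned}$$

Alternatively, we can calculate $Var(a^T X)$ by

$$a^T \Sigma a = \begin{pmatrix} 1 & 2 \end{pmatrix} \begin{pmatrix} 1 & 0 \\ 0 & 2 \end{pmatrix} \begin{pmatrix} 1 \\ 2 \end{pmatrix} = \begin{pmatrix} 1 & 2 \end{pmatrix} \begin{pmatrix} 1 \\ 4 \end{pmatrix} = 9 .$$

Indeed, we end up with the same result, as Remark 5 implies.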
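The identity $Var(a^T X) = a^T \Sigma a$ also holds for sample variances and covariances, which gives an easy numerical check. The sketch below uses made-up data and the $1/n$ convention for the sample covariance (an assumption; the identity holds with $1/(n-1)$ as well). The helper names `cov` and `quad_form` are illustrative only.

```python
def mean(values):
    return sum(values) / len(values)


def cov(u, v):
    """Sample covariance of two equally long lists, using the 1/n convention."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / len(u)


def quad_form(a, M):
    """Quadratic form a^T M a for a vector a and a square matrix M."""
    n = len(a)
    return sum(a[j] * M[j][k] * a[k] for j in range(n) for k in range(n))


# Population check with the numbers of Example 2.
example_2 = quad_form([1.0, 2.0], [[1.0, 0.0], [0.0, 2.0]])

# Sample check on hypothetical data: five observations of (X1, X2).
x1 = [1.0, 2.0, 4.0, 3.0, 5.0]
x2 = [2.0, 1.0, 3.0, 5.0, 4.0]
a = [1.0, 2.0]

# Left-hand side: variance of the scalar scores a^T x_i.
scores = [a[0] * u + a[1] * v for u, v in zip(x1, x2)]
lhs = cov(scores, scores)

# Right-hand side: a^T S a, with S the 2 x 2 sample covariance matrix.
S = [[cov(x1, x1), cov(x1, x2)],
     [cov(x2, x1), cov(x2, x2)]]
rhs = quad_form(a, S)

print(example_2, lhs, rhs)
```

Unlike Example 2, the sample covariance matrix here is not diagonal, so the agreement of `lhs` and `rhs` illustrates that the identity does not rely on uncorrelated variables.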
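By Remark 5, the optimization problem can be written as

$$\max_{a_1 \in \mathbb{R}^p} \; a_1^T \Sigma a_1 \quad \text{s.t.} \quad a_1^T a_1 = 1 .$$

In his book “How to Solve It”, mathematician and probabilist George Pólya famously said “If you can’t solve a problem, then there is an easier problem you can solve: find it.” Let’s follow his suggestion by assuming the covariance matrix

$$\Sigma = \begin{pmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_p \end{pmatrix}_{p \times p},$$

where $\lambda_1 > \lambda_2 > \cdots > \lambda_p > 0$. That is, we assume $\Sigma$ is a diagonal matrix with positive, decreasing diagonal elements. Now we are ready to solve the optimization problem for $PC_1$:

$$\max_{a_1 \in \mathbb{R}^p} \; a_1^T \Sigma a_1 \quad \text{s.t.} \quad a_1^T a_1 = 1 .$$

Notice the objective function is now

$$a_1^T \Sigma a_1 = \begin{pmatrix} a_{11} & \cdots & a_{1p} \end{pmatrix} \begin{pmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_p \end{pmatrix} \begin{pmatrix} a_{11} \\ \vdots \\ a_{1p} \end{pmatrix} = \lambda_1 a_{11}^2 + \lambda_2 a_{12}^2 + \cdots + \lambda_p a_{1p}^2 .$$

By redefining $b_{1i} = a_{1i}^2 \geq 0$, the maximization problem becomes

$$\max_{b} \; \lambda_1 b_{11} + \cdots + \lambda_p b_{1p} \quad \text{s.t.} \quad b_{11} + \cdots + b_{1p} = 1, \; b_{1i} \geq 0 .$$

The objective is a weighted average of the $\lambda_i$, so it cannot exceed the largest one, $\lambda_1$. It is then plain to see that $b_{11} = 1$, $b_{12} = \cdots = b_{1p} = 0$ attains the maximum while satisfying the constraint. Hence, $a_{11} = \pm 1$, $a_{12} = \cdots = a_{1p} = 0$ is the solution to the $PC_1$ problem.³ The first PC is hence

$$a_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix},$$

which points in the direction with the largest variance.

³ PCs are only uniquely defined up to reflection through the origin. It is not hard to see that if $a^*$ is a solution to the PCA problem, then $-a^*$ is also a solution.

Next we solve the $PC_2$ problem

$$\max_{a_2 \in \mathbb{R}^p} \; Var(a_2^T X) \quad \text{s.t.} \quad a_2^T a_2 = 1, \; a_1^T a_2 = 0 .$$

The constraint $a_1^T a_2 = 0$ implies $a_{21} = 0$. So $a_2 = (0, a_{22}, \ldots, a_{2p})^T$, and the objective reduces to

$$a_2^T \Sigma a_2 = \begin{pmatrix} 0 & a_{22} & \cdots & a_{2p} \end{pmatrix} \begin{pmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_p \end{pmatrix} \begin{pmatrix} 0 \\ a_{22} \\ \vdots \\ a_{2p} \end{pmatrix} = \begin{pmatrix} a_{22} & \cdots & a_{2p} \end{pmatrix} \begin{pmatrix} \lambda_2 & & 0 \\ & \ddots & \\ 0 & & \lambda_p \end{pmatrix} \begin{pmatrix} a_{22} \\ \vdots \\ a_{2p} \end{pmatrix} .$$

The problem is now the same as finding $PC_1$, with one less variable. Hence the solution for the second component is $a_{22} = \pm 1$, $a_{21} = a_{23} = \cdots = a_{2p} = 0$, and the second PC is $a_2 = (0, 1, 0, \cdots, 0)^T$. Similarly, we can see that the $j$-th PC is just the unit vector in $\mathbb{R}^p$ whose $j$-th component is 1 and whose other components are zero.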
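The diagonal-case argument can also be checked by brute force for $p = 2$. The sketch below scans unit vectors $a = (\cos\theta, \sin\theta)$ on a grid of angles and picks the one with the largest $a^T \Sigma a$. The values $\lambda_1 = 3$, $\lambda_2 = 1$ and the grid resolution are arbitrary choices made for illustration, and a grid search is of course not how PCA is solved in practice.

```python
import math

# Hypothetical diagonal of Sigma, with lambda_1 > lambda_2 > 0.
lam = [3.0, 1.0]


def objective(theta):
    """a^T Sigma a for the unit vector a = (cos(theta), sin(theta))."""
    a = (math.cos(theta), math.sin(theta))
    return lam[0] * a[0] ** 2 + lam[1] * a[1] ** 2


# Angles in [0, pi) suffice, because a and -a give the same objective value.
grid = [k * math.pi / 1800 for k in range(1800)]
best_theta = max(grid, key=objective)
best_a = (math.cos(best_theta), math.sin(best_theta))

print(best_theta, best_a, objective(best_theta))
```

The maximizer found on the grid is the first coordinate direction, and the maximized variance equals $\lambda_1$, in line with the derivation above.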
Conclusion: When the $X_j$’s are uncorrelated, PCA basically keeps the $X_j$’s with the largest variances.

3.2 Solve PCA when Σ is not Diagonal

What if $\Sigma$ is not diagonal? Luckily, we have the following theorem:

Theorem 1 (Real Spectral Theorem). If $\Sigma$ is symmetric, then there exists a $p \times p$ matrix $P$ such that $\Sigma = P D P^{-1}$, where $D$ is a diagonal matrix, and $P^T P = I_{p \times p}$, i.e., $P^{-1} = P^T$.

Example 3.

$$\begin{pmatrix} 34 & 12 \\ 12 & 41 \end{pmatrix} = \begin{pmatrix} \frac{3}{5} & -\frac{4}{5} \\ \frac{4}{5} & \frac{3}{5} \end{pmatrix} \begin{pmatrix} 50 & 0 \\ 0 & 25 \end{pmatrix} \begin{pmatrix} \frac{3}{5} & \frac{4}{5} \\ -\frac{4}{5} & \frac{3}{5} \end{pmatrix} .$$

Since $\Sigma$ is symmetric, it can now be written as $\Sigma = P D P^T$, where the notation follows the Real Spectral Theorem. Let $b_i = P^T a_i$. Then

$$a_i^T \Sigma a_i = a_i^T P D P^T a_i = (P^T a_i)^T D (P^T a_i) = b_i^T D b_i ,$$

$$a_i^T a_i = a_i^T (P P^{-1}) a_i = a_i^T (P P^T) a_i = (P^T a_i)^T (P^T a_i) = b_i^T b_i .$$

Restating the maximization problem in terms of $b_i$ gives

$$\max_{b_i \in \mathbb{R}^p} \; b_i^T D b_i \quad \text{s.t.} \quad b_i^T b_i = 1 ,$$

and we know how to solve it since $D$ is diagonal. If the columns of $P$ are ordered so that the diagonal entries of $D$ are decreasing, the solution for the first PC is $b_1 = (1, 0, \ldots, 0)^T$, so $a_1 = P b_1$ is the first column of $P$, i.e., the eigenvector of $\Sigma$ associated with its largest eigenvalue.

4 Applications of PCA

Here we list examples of applications of PCA.

1. Data compression
2. Summarizing data and exploratory analysis
3. Using PCs in regression
4. Using PCs in clustering
5. Factor analysis

5 Choosing q

One might ask how much variance is summarized by the first $q$ PCs, and how we should choose $q$. A scree plot might be helpful to answer the question.

[Figure: Scree plot. Eigenvalue (y-axis) against Component Number (x-axis).]

The y-axis of a scree plot shows the eigenvalues of the covariance matrix, sorted in decreasing order, and the x-axis shows the corresponding rank of each eigenvalue. As a rule of thumb, $q$ is chosen at the elbow of the scree plot, so one might choose $q = 2$ for the example above.

Suppose that the covariance matrix

$$\Sigma = \begin{pmatrix} \lambda_1 & & & \\ & \lambda_2 & & \\ & & \ddots & \\ & & & \lambda_p \end{pmatrix}_{p \times p}$$

is a diagonal matrix. Then we have

$$\begin{aligned} Var(PC_1) &= Var(X_1) = \lambda_1 \\ Var(PC_2) &= Var(X_2) = \lambda_2 \\ &\;\;\vdots \\ Var(PC_p) &= Var(X_p) = \lambda_p \end{aligned}$$
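The decomposition in Example 3 and the statement $Var(PC_j) = \lambda_j$ can be verified together with a short sketch. The matrices are the ones from Example 3; the helpers `matmul` and `transpose` are illustrative only. The last line computes $\lambda_1 / (\lambda_1 + \lambda_2)$, a commonly used "share of variance explained" that is an addition here rather than something defined in these notes.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(M):
    return [list(row) for row in zip(*M)]


# Matrices from Example 3.
Sigma = [[34.0, 12.0], [12.0, 41.0]]
P = [[0.6, -0.8], [0.8, 0.6]]
D = [[50.0, 0.0], [0.0, 25.0]]

# Check the two claims of the spectral theorem: P^T P = I and Sigma = P D P^T.
PtP = matmul(transpose(P), P)
reconstructed = matmul(matmul(P, D), transpose(P))

# First PC: a_1 = P b_1 with b_1 = (1, 0)^T, i.e. the first column of P.
a1 = [row[0] for row in P]
Sigma_a1 = [sum(Sigma[i][k] * a1[k] for k in range(2)) for i in range(2)]
var_pc1 = sum(a1[i] * Sigma_a1[i] for i in range(2))   # a_1^T Sigma a_1

# Assumed convention: share of total variance captured by the first PC.
share_pc1 = D[0][0] / (D[0][0] + D[1][1])

print(reconstructed, a1, Sigma_a1, var_pc1, share_pc1)
```

Up to rounding, $\Sigma a_1 = 50 \, a_1$, so $a_1 = (3/5, 4/5)^T$ is an eigenvector with eigenvalue 50 and $Var(a_1^T X) = 50$. For larger problems one would normally call a numerical eigendecomposition routine rather than verify a decomposition by hand.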