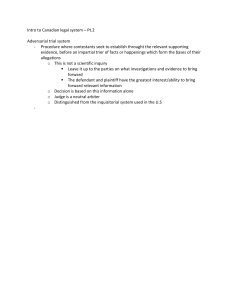

Robotic Control in Adversarial and Sparse Reward Environments: A Robust Goal-Conditioned Reinforcement Learning Approach

Xiangkun He¹ and Chen Lv¹

Abstract— This paper presents a novel robust goal-conditioned reinforcement learning (RGCRL) approach for robotic control in adversarial and sparse reward environments. The proposed method is evaluated on three tasks with adversarial attacks and sparse reward settings. The results show that our scheme can ensure robotic control performance and policy robustness on these adversarial and sparse reward tasks.

I. INTRODUCTION

With deep neural networks (DNNs) as function approximators, reinforcement learning (RL) algorithms have demonstrated their worth in a series of challenging tasks, from games to robotic control. Although existing RL-based robot control methods have achieved many compelling results, most of them assume that observations are free of uncertainty. This assumption rarely holds in real-world scenarios: a robot's observations may contain unexpected perturbations that arise from inevitable stochastic noise or sensing errors. Control policies that are not robust to such observation uncertainty can not only degrade robot performance but also cause catastrophic failures. In addition, a common challenge, especially in robotics, is sample-efficient learning from sparse rewards, where an agent has to find a long sequence of “correct” actions to achieve a desired outcome. Unpredictable perturbations on observations may make this problem even harder. Hence, we propose a novel RGCRL method for robotic control in adversarial and sparse reward environments.

¹X. He and C. Lv are with the School of Mechanical and Aerospace Engineering, Nanyang Technological University, Singapore 639798 (e-mail: xiangkun.he@ntu.edu.sg; lyuchen@ntu.edu.sg).

II. METHODOLOGY

The main components of the proposed approach can be summarized as follows:
(1) A robust goal-conditioned Markov decision process (RGCMDP) is proposed to model agent behaviors in uncertain and sparse reward environments.
(2) A paradigm of mixed adversarial attacks is developed to generate diverse adversarial examples by combining white-box and black-box attacks.
(3) A robust goal-conditioned actor-critic algorithm is developed to optimize an agent's control policies while keeping the variations of the policies under the optimal adversarial attacks within bounds.

III. EVALUATION AND CONCLUSIONS

Three baselines are implemented to benchmark our approach by combining hindsight experience replay (HER) [1] with the twin delayed deep deterministic policy gradient (TD3), soft actor-critic (SAC), and robust deep deterministic policy gradient (RDDPG) [2] algorithms; they are denoted TD3-HER, SAC-HER, and RDDPG-HER, respectively. The results in Fig. 1 demonstrate that the proposed RGCRL approach is effective and outperforms the three competitive baselines in terms of success rate and policy robustness on the three adversarial and sparse reward tasks.

Fig. 1. Evaluation results for the different agents on the three adversarial and sparse reward tasks. (a): Reach; (b): Push; (c): Pick-and-Place.

REFERENCES

[1] M. Andrychowicz, F. Wolski, A. Ray, J. Schneider, R. Fong, P. Welinder, B. McGrew, J. Tobin, P. Abbeel, and W. Zaremba, “Hindsight experience replay,” in Advances in Neural Information Processing Systems, vol. 30, 2017.
[2] A. Pattanaik, Z. Tang, S. Liu, G. Bommannan, and G. Chowdhary, “Robust deep reinforcement learning with adversarial attacks,” in Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, 2018, pp. 2040–2042.
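The sparse reward setting and the HER component shared by the TD3-HER, SAC-HER and RDDPG-HER baselines can be illustrated with a small sketch. The Python below is a minimal, hypothetical illustration, not the authors' implementation. It assumes a binary reward of 0 on success and -1 otherwise with a Euclidean success threshold `eps` of 0.05 (mirroring the convention of the Gym Fetch robotics tasks), transitions stored as `(obs, action, next_achieved_goal, desired_goal, reward)` tuples, and the "future" relabeling strategy of HER [1] with `k` substitute goals per transition. The names `sparse_reward`, `her_relabel` and `Transition` are illustrative only.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

# Hypothetical transition layout (an assumption, not from the paper):
# (observation, action, next_achieved_goal, desired_goal, reward)
Transition = Tuple[List[float], List[float], List[float], List[float], float]


def sparse_reward(achieved_goal: Sequence[float],
                  desired_goal: Sequence[float],
                  eps: float = 0.05) -> float:
    """Assumed binary reward: 0 if within eps of the goal, -1 otherwise."""
    return 0.0 if math.dist(achieved_goal, desired_goal) <= eps else -1.0


def her_relabel(episode: List[Transition], k: int = 4, eps: float = 0.05,
                rng: Optional[random.Random] = None) -> List[Transition]:
    """Return each original transition followed by k 'future'-relabeled copies.

    For step t, the substitute goal is the achieved goal reached at a randomly
    chosen step t' >= t of the same episode; the reward is then recomputed.
    """
    rng = rng or random.Random()
    horizon = len(episode)
    relabeled: List[Transition] = []
    for t, (obs, act, next_ag, goal, rew) in enumerate(episode):
        relabeled.append((obs, act, next_ag, goal, rew))  # keep the real goal
        for _ in range(k):
            future = rng.randint(t, horizon - 1)  # inclusive on both ends
            new_goal = episode[future][2]
            new_rew = sparse_reward(next_ag, new_goal, eps)
            relabeled.append((obs, act, next_ag, new_goal, new_rew))
    return relabeled


if __name__ == "__main__":
    # Toy 1-D episode: the object moves right but never reaches the goal at 1.0.
    goal = [1.0]
    episode: List[Transition] = []
    for t in range(3):
        next_ag = [0.1 * (t + 1)]
        episode.append(([0.1 * t], [0.1], next_ag, goal,
                        sparse_reward(next_ag, goal)))
    buffer = her_relabel(episode, k=2, rng=random.Random(0))
    successes = sum(1 for tr in buffer if tr[4] == 0.0)
    print(f"{len(buffer)} transitions, {successes} with reward 0")
```

Because the last step of an episode can only be relabeled with its own achieved goal, every episode contributes some zero-reward (successful) transitions even when the real goal is never reached, which is what makes learning from sparse rewards tractable in this scheme.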
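The mixed white-box/black-box attack paradigm and the bounded policy-variation objective can also be sketched, under strong simplifying assumptions. In the hypothetical Python below, the white-box branch is an FGSM-style sign-gradient step that lowers a critic's value using a caller-supplied gradient `grad_q_wrt_obs`, the black-box branch adds uniform noise inside an L∞ ball of radius `eps`, a mixing probability `p_white` chooses between them, and a hinge penalty `lam * max(0, ||π(s_adv) − π(s)||² − bound)` is added to the usual actor loss `−Q(s, π(s))`. These attack choices, the toy linear critic and policy, and all function names are illustrative assumptions rather than the RGCRL formulation itself.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]
Policy = Callable[[Sequence[float]], Vector]
Critic = Callable[[Sequence[float], Sequence[float]], float]
Gradient = Callable[[Sequence[float]], Sequence[float]]


def _sign(x: float) -> float:
    """Sign function with sign(0) = 0, as used by FGSM."""
    return 0.0 if x == 0 else math.copysign(1.0, x)


def white_box_attack(obs: Sequence[float], grad_q_wrt_obs: Gradient,
                     eps: float) -> Vector:
    """FGSM-style step: move each observation entry by eps against dQ/ds."""
    return [o - eps * _sign(g) for o, g in zip(obs, grad_q_wrt_obs(obs))]


def black_box_attack(obs: Sequence[float], eps: float,
                     rng: random.Random) -> Vector:
    """Gradient-free attack: uniform noise inside the L-infinity ball of radius eps."""
    return [o + rng.uniform(-eps, eps) for o in obs]


def mixed_attack(obs: Sequence[float], grad_q_wrt_obs: Gradient, eps: float,
                 p_white: float, rng: random.Random) -> Vector:
    """Use the white-box attack with probability p_white, else the black-box one."""
    if rng.random() < p_white:
        return white_box_attack(obs, grad_q_wrt_obs, eps)
    return black_box_attack(obs, eps, rng)


def robust_actor_loss(policy: Policy, critic: Critic, obs: Sequence[float],
                      adv_obs: Sequence[float], bound: float, lam: float) -> float:
    """-Q(s, pi(s)) plus a hinge penalty when ||pi(s_adv) - pi(s)||^2 exceeds bound."""
    action = policy(obs)
    shift = sum((a - b) ** 2 for a, b in zip(action, policy(adv_obs)))
    return -critic(obs, action) + lam * max(0.0, shift - bound)


if __name__ == "__main__":
    weights = [1.0, -2.0, 0.0]  # hypothetical linear critic weights

    def critic(s: Sequence[float], a: Sequence[float]) -> float:
        # Toy critic Q(s, a) = w . s + sum(a); its gradient w.r.t. s is w.
        return sum(w * x for w, x in zip(weights, s)) + sum(a)

    def policy(s: Sequence[float]) -> Vector:
        # Toy deterministic 1-D policy.
        return [0.5 * s[0]]

    rng = random.Random(0)
    s = [0.5, 0.5, 0.5]
    s_adv = mixed_attack(s, lambda _: weights, eps=0.25, p_white=0.5, rng=rng)
    print(s_adv, robust_actor_loss(policy, critic, s, s_adv, bound=0.01, lam=10.0))
```

Setting `p_white` strictly between 0 and 1 exposes the agent to both gradient-guided and gradient-free perturbations during training. The hinge term is zero whenever the squared action change stays below `bound`, so it only penalizes the policy when an attack pushes it outside the tolerated region.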