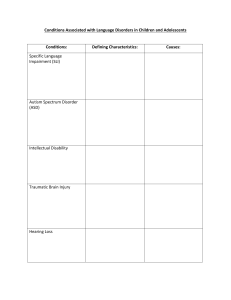

COGNITION AND EMOTION, 2014
Vol. 28, No. 6, 1110–1118, http://dx.doi.org/10.1080/02699931.2013.867832

BRIEF REPORT

Emotion recognition of static and dynamic faces in autism spectrum disorder

Peter G. Enticott¹,², Hayley A. Kennedy¹, Patrick J. Johnston³, Nicole J. Rinehart², Bruce J. Tonge², John R. Taffe², and Paul B. Fitzgerald¹

¹ Monash Alfred Psychiatry Research Centre, The Alfred and Central Clinical School, Monash University, Melbourne, VIC, Australia
² Centre for Developmental Psychiatry and Psychology, School of Psychology and Psychiatry, Monash University, Clayton, VIC, Australia
³ Department of Psychology, University of York, York, UK

There is substantial evidence for facial emotion recognition (FER) deficits in autism spectrum disorder (ASD). The extent of this impairment, however, remains unclear, and there is some suggestion that clinical groups might benefit from the use of dynamic rather than static images. High-functioning individuals with ASD (n = 36) and typically developing controls (n = 36) completed a computerised FER task involving static and dynamic expressions of the six basic emotions. The ASD group showed poorer overall performance in identifying anger and disgust and were disadvantaged by dynamic (relative to static) stimuli when presented with sad expressions. Among both groups, however, dynamic stimuli appeared to improve recognition of anger. This research provides further evidence of specific impairment in the recognition of negative emotions in ASD, but argues against any broad advantages associated with the use of dynamic displays.

Keywords: Autism; Asperger’s disorder; Facial emotion recognition; Dynamic faces.

Correspondence should be addressed to: Peter G. Enticott, School of Psychology, Deakin University, 221 Burwood Hwy, Burwood, VIC 3125, Australia. E-mail: peter.enticott@deakin.edu.au

Current address: Nicole J. Rinehart, School of Psychology, Deakin University, Burwood, VIC, Australia

The authors wish to thank all those who took part in the study and those who assisted with participant recruitment, including Dr Tony Attwood, Ms. Tracel Devereux (Alpha Autism), Dr Richard Eisenmajer, Mr. Dennis Freeman (Wesley College Melbourne), Ms. Pam Langford, Dr Kerryn Saunders, Ms. Linke Smedts-Kreskas (S.P.O.C.A.A.S.), Autism Victoria, A4, Autism Spectrum Australia (ASPECT) and the Asperger Syndrome Support Network. The authors also thank Ms. Sara Arnold for assistance with manuscript preparation.

© 2013 Taylor & Francis

A fundamental component of social cognition involves the ability to recognise emotional states in others from their facial expression. It is generally accepted that individuals with some form of autism spectrum disorder (ASD) display at least some impairment in the recognition of emotion from facial expressions (Harms, Martin, & Wallace, 2010), although there are a host of inconsistent results. From a clinical perspective, impaired social interaction and understanding are generally considered the hallmark of ASD, and an inability to determine emotions from facial expressions is thought to contribute to these social deficits. The Diagnostic and Statistical Manual of Mental Disorders, 4th edition, text revision (DSM-IV-TR) also notes impairments in the use of non-verbal behaviour to regulate social interaction, including facial expression.
With respect to behavioural studies of facial emotion recognition (FER) among “high-functioning” individuals with ASD (the focus of the current study¹), results are quite varied but have indicated specific deficits in the recognition of negative emotions such as disgust (e.g., Ashwin, Baron-Cohen, Wheelwright, O’Riordan, & Bullmore, 2007; Law Smith, Montagne, Perrett, Gill, & Gallagher, 2010), sadness (e.g., Philip et al., 2010), anger (e.g., Ashwin et al., 2007; Law Smith et al., 2010; Philip et al., 2010) and fear (e.g., Philip et al., 2010). Recently, Law Smith et al. (2010) found that deficits for the recognition of surprise and anger were only evident for lower intensity presentations of these facial expressions, suggesting that perhaps it is the subtlety of emotional facial expressions that causes difficulties for individuals with ASD. Beyond this, it has been suggested that variation in methodological factors (e.g., use of samples with different diagnostic techniques, differing stimulus sets), and the use of alternative strategies for emotion recognition in some individuals with ASD, underlie the inconsistent results in this field (Harms et al., 2010). The sheer amount of variability in the presentation of ASD, and its heterogeneous nature, is also likely to contribute to the variety of results regarding emotion recognition impairment.

A relatively recent development in the assessment of FER relates to the increasing adoption of “dynamic” stimulus materials; that is, moving images of faces as opposed to static depictions of emotion.
Where moving faces have been used, there are indications that they may facilitate facial affect processing among individuals with intellectual disability (Harwood, Hall, & Shinkfield, 1999), while there is also some suggestion that, among typically developing (TD) individuals, dynamic displays are better recognised and rated as more intense and realistic (although this latter study involved animated avatar faces and might not generalise to “real” facial expressions; Weyers, Muhlberger, Hefele, & Pauli, 2006). There is also evidence that dynamic displays facilitate recognition of subtle facial emotion displays (Ambadar, Schooler, & Cohn, 2005). In the neuroimaging literature, a number of studies have demonstrated differential patterns of brain activation in response to dynamic versus static facial emotion stimuli (e.g., Kilts, Egan, Gideon, Ely, & Hoffman, 2003), with the former involving enhanced activity in visual and temporal cortices. Since naturally occurring facial expressions are intrinsically dynamic, such findings have led to the suggestion that dynamic depictions of faces may have greater ecological validity than static depictions, and may evoke richer neuronal, autonomic and behavioural consequences than their static counterparts (Johnston et al., 2010; Kilts et al., 2003). With the exception of Katsyri, Saalasti, Tiippana, von Wendt, and Sams (2008) (who examined the impact of spatial filtering on FER among high-functioning individuals), static and dynamic images have not been directly compared in ASD. There have been relatively few FER studies utilising dynamic images in ASD, but there is some suggestion that children with ASD, at least those considered “low-functioning”, might benefit from slow dynamic displays of facial emotion (Gepner, Deruelle, & Grynfeltt, 2001). 
Conversely, recognition of rapid displays in ASD (akin to that encountered in “real-life” settings) might be particularly problematic not only because of core social cognitive deficits but also impaired visual-motion integration (Gepner & Feron, 2009). Eye-tracking studies suggest a qualitative difference in gaze direction for dynamic (but not static) presentations in children with high-functioning ASD (Speer, Cook, McMahon, & Clark, 2007), with decreased fixation of eye regions during the viewing of scenes (film and photograph) from the film Who’s Afraid of Virginia Woolf? Neuroimaging evidence suggests that adults with high-functioning ASD show widespread reductions in neural activity (e.g., amygdala, fusiform gyrus) when viewing dynamic (relative to static) facial images (Pelphrey, Morris, McCarthy, & LaBar, 2007). By contrast, however, Katsyri et al. (2008) found no FER deficits in adults with Asperger’s disorder for either static or dynamic images (although impairments were evident when images were filtered to encourage “global” processing of facial images).

¹ A comprehensive review of the entire literature, including neuroimaging and electrophysiology, is beyond the scope of this study, but see Harms et al. (2010) for an excellent recent review.

The current study examined FER of matched static and dynamic images among adolescents and adults with ASD and TD individuals. If a core deficit of ASD involves impairments in social processing (with FER as one aspect of this impairment), dynamic stimuli could be considered a better representation of an aspect of this process (and therefore a more precise means of investigating this process).
There is relatively little data from which to derive a hypothesis; however, based on the literature to date, particularly imaging research suggesting greater reductions in neural activity for dynamic depictions of facial expressions among adults with high-functioning ASD, it was expected that individuals with ASD would show impairment for both static and dynamic displays of facial emotion.

METHODS

Participants

Participants were 36 individuals (27 males; mean age = 25.00 years, SD = 8.83) diagnosed with an ASD [autism (n = 10) or Asperger’s disorder (n = 26)], all of whom were within or above the average range of cognitive function [as determined by the Kaufman Brief Intelligence Test Second Edition (KBIT-2)], and 36 TD individuals (23 males; mean age = 25.78 years, SD = 6.65) with no self-reported history of psychiatric or neurological illness. There were no between-group differences in either gender, χ²(1) = 1.05, p = .306, or age, t(70) = 0.42, p = .674. ASD participants were recruited via the Monash Alfred Psychiatry Research Centre participant database, which comprises clinically diagnosed individuals who have previously taken part in research and have agreed to be contacted in relation to future projects, and via advertisements placed in newsletters, websites and offices of ASD-related organisations (e.g., advocacy groups, support groups, vocational services) and clinicians (e.g., psychologists, psychiatrists, paediatricians). Participants were diagnosed by a psychiatrist, clinical psychologist or paediatrician. A diagnosis of DSM-IV autistic disorder or Asperger’s disorder was confirmed via diagnostic report or, where a diagnostic report was not available, by telephone with the diagnosing clinician (psychologist, psychiatrist or paediatrician). TD participants were recruited via advertisements placed within The Alfred hospital, Monash University, and the newsletter of a local secondary school (Wesley College).
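The group-matching statistics reported above can be recomputed from the summary figures alone. The Python sketch below is illustrative rather than the authors' procedure: it assumes an uncorrected Pearson chi-square for the 2 × 2 gender table and a pooled-variance t-test for age (the article does not state which variants were used), and the function names `chi_square_2x2` and `pooled_t` are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    observed = [a, b, c, d]
    expected = [row1 * col1 / n, row1 * col2 / n,
                row2 * col1 / n, row2 * col2 / n]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom the chi-square tail probability
    # reduces to erfc(sqrt(x / 2)), so no external library is needed.
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic with pooled variance, from summary data."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Gender (males, females): ASD 27:9, TD 23:13.
chi2, p = chi_square_2x2(27, 9, 23, 13)
# Age in years (mean, SD, n): TD 25.78 (6.65), ASD 25.00 (8.83).
t, df = pooled_t(25.78, 6.65, 36, 25.00, 8.83, 36)

print(f"gender: chi2(1) = {chi2:.2f}, p = {p:.3f}")
print(f"age: t({df}) = {t:.2f}")
```

Under these assumptions the recomputed values agree, to rounding, with the reported χ²(1) = 1.05, p = .306 and t(70) = 0.42. The sign of t depends only on which group is entered first.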
This project was approved by the human research ethics committees of both Monash University and The Alfred hospital (Bayside Health). All adult participants (aged 18 and over) provided signed informed consent, while a parent provided signed informed consent for child participants (aged 14–17). Children provided signed assent to participate. Participants were reimbursed (AU$30) for travel expenses.

Participant information is presented in Table 1. One TD participant did not complete the KBIT-2. There was no difference in age, t(70) = −0.42, p = .674, or gender, χ²(1) = 1.05, p = .306, between the ASD and TD groups. The TD group had a greater verbal intelligence quotient (VIQ) than the ASD group, t(64) = −2.07, p = .042, but there was no significant difference in non-verbal IQ, t(69) = −1.05, p = .296, or composite IQ, t(61) = −1.74, p = .087. Adult participants completed the Ritvo Autism-Aspergers Diagnostic Scale (RAADS) (Ritvo et al., 2008). This is a self-report measure (comprising 78 items) that probes various aspects of ASD, both in relation to childhood and adulthood. An increased score is indicative of greater ASD severity.

Table 1. Participant demographics

| Measure | ASD | TD | Group difference |
| --- | --- | --- | --- |
| N | 36 | 36 | |
| Age in years (SD) | 25.00 (8.83) | 25.78 (6.65) | |
| Gender (m:f) | 27:9 | 23:13 | |
| KBIT-2 verbal IQ (SD) | 99.17 (17.62) | 106.80 (12.95) | p < .05 |
| KBIT-2 non-verbal IQ (SD) | 107.19 (19.12) | 111.29 (12.93) | |
| KBIT-2 composite IQ (SD) | 103.89 (19.51) | 110.71 (13.05) | |
| AQ (SD) | 30.21 (9.33) | 12.11 (5.93) | p < .001 |
| EQ (SD) | 25.82 (13.39) | 49.57 (12.86) | p < .001 |
| RAADSᵃ social relating (SD) | 40.89 (15.05) | 14.58 (10.22) | p < .001 |
| RAADSᵃ language/communication (SD) | 33.12 (13.25) | 8.24 (5.51) | p < .001 |
| RAADSᵃ sensorimotor (SD) | 30.64 (13.25) | 8.15 (6.13) | p < .001 |
| RAADSᵃ total (SD) | 104.64 (39.68) | 30.97 (18.60) | p < .001 |
| DBC autism screening algorithm (SD)ᵇ | 18.12 (9.45) | 1.00 (0.00) | p < .05 |

ᵃ Completed by adult participants only. ᵇ Completed by parents of child participants only.
All participants (child and adult) completed the empathy quotient (EQ) (Baron-Cohen & Wheelwright, 2004), while all participants aged 16 and older completed the Autism Spectrum Quotient (AQ) (Baron-Cohen, Wheelwright, Skinner, Martin, & Clubley, 2001). The latter yields the following subscales: social skill, communication, imagination, attention to detail and attention switching. Parents of child participants completed the developmental behaviour checklist (DBC) (Einfeld & Tonge, 2002), a parent-completed checklist of behavioural and emotional problems that includes an autism screening algorithm, while parents of participants aged 14 or 15 also completed the AQ – Adolescent Version (Baron-Cohen, Hoekstra, Knickmeyer, & Wheelwright, 2006). For analysis purposes (Table 1), child and adult AQ scores were combined; there were five ASD and two TD aged 14 or 15 for whom the child AQ was completed. These measures were not used to diagnose participants, which was done by clinicians according to DSM-IV criteria, but rather to characterise the sample. Independent samples t-test revealed a significant difference between the ASD and TD groups on all of these measures (see Table 1). Procedure The FER task was presented on a notebook computer using E-prime software (v2.0; Psychology Software Tools Inc., Pittsburgh, PA, USA). Images for the task were taken from the NimStim set of facial expressions, a stimulus set that displays strong reliability and validity (Tottenham et al., 2009). There were eight actors in total (four males and four females), each of which displayed the six basic emotions (anger, disgust, fear, happy, sad and surprise). These were selected to represent a broad range of ethnicities. The images were presented centrally over a black background, and occupied the entire height of the screen. There was a static and dynamic form of each expression, making a total of 96 trials (which were presented in a random order). 
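The trial structure described above (8 actors × 6 emotions × 2 presentation forms, in random order) can be sketched in Python. This is an illustration only: the task itself was run in E-Prime, and the actor labels, the `build_trials` function and the seed are hypothetical names introduced here.

```python
import itertools
import random
from collections import Counter

EMOTIONS = ["anger", "disgust", "fear", "happy", "sad", "surprise"]
FORMS = ["static", "dynamic"]
# Hypothetical placeholder labels; the real NimStim actor identifiers are not given.
ACTORS = [f"actor_{i:02d}" for i in range(1, 9)]


def build_trials(seed=None):
    """Return every actor x emotion x form combination in shuffled order."""
    trials = [
        {"actor": actor, "emotion": emotion, "form": form}
        for actor, emotion, form in itertools.product(ACTORS, EMOTIONS, FORMS)
    ]
    # A dedicated Random instance keeps the shuffle reproducible for a given seed.
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials(seed=1)
per_emotion = Counter(trial["emotion"] for trial in trials)

print(len(trials))           # total number of trials
print(per_emotion["happy"])  # trials per emotion (static plus dynamic)
```

Each emotion therefore appears in 16 trials (8 static and 8 dynamic), which is the denominator of the per-emotion accuracy scores.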
The dynamic images were achieved by creating a “morphed” display from a neutral facial expression to the emotional facial expression (duration = 1000 ms) (see Johnston et al., 2010, for a description of dynamic stimulus production identical to that used in the current study). The task took approximately 8–10 minutes to complete, depending on the participant’s response time. The computer keyboard was covered so that only six keys were displayed (a, d, g, j, l and “), and one of the six basic emotions was written over each key (from left to right: anger, disgust, fear, happy, sad and surprise). Participants were instructed to press the key that best indicated the emotion that the person was feeling based on that person’s facial expression. Participants were asked to respond as quickly as possible, but it was emphasised that accuracy, not speed, was the main aim of the task. The trials were not time limited. There was a two-second gap (black screen) between a response and the onset of a new trial.

Data analysis

Data were analysed via separate random-effects generalised least squares (GLS) regression models (with participant as the random effect) for each emotion (using STATA 11.1, StataCorp, College Station, TX). This involved the predictive factors group (ASD, TD), motion (dynamic, static), verbal IQ, age and gender. A group × motion interaction was also investigated within this model. Accuracy (i.e., number correct) served as the dependent variable. VIQ was included due to the language component of the task (i.e., identification of emotion words for identifying facial expressions). Age and gender were included due to the relatively broad age range and use of both male and female participants (which could have an impact on performance), and we wanted to ensure that any possible influence of these factors was accounted for. We also examined, for each of the six emotions, which emotion was most commonly selected for those responses that were incorrect (i.e., index of confusion), and analysed this via chi-square.

RESULTS

Mean group scores for each emotion, both static and dynamic, are presented in Figure 1. Regression was not conducted for the recognition of happy faces, as there were substantial ceiling effects for both groups. Among ASD participants, 27 participants scored 16/16, 6 participants scored 15/16 and 1 participant each scored 14/16, 13/16 and 12/16. Among controls, 34 participants scored 16/16, while the remaining 2 scored 15/16.

Figure 1. Mean FER performance (total correct, ±SE) by emotion for each group.

Results of regression analyses are presented in Table 2. There was no significant overall effect of the regression for fear (p = .07) or surprise (p = .31). For anger, there was a significant effect of motion, with dynamic images associated with increased accuracy, and an effect of group, with TD outperforming ASD. There was also a significant effect of VIQ, with increased VIQ associated with marginally improved performance. For disgust, there was a significant effect of group, with TD again outperforming ASD. For sad, there was a significant Group × Motion interaction effect, with accuracy for dynamic stimuli poorer than static stimuli among the ASD group.

Table 2. Results of regression analyses for each emotion (excluding happy; significant results in bold typeface)

| Predictor | Anger β [SE] (p) | Disgust β [SE] (p) | Fear β [SE] (p) | Sad β [SE] (p) | Surprise β [SE] (p) |
| --- | --- | --- | --- | --- | --- |
| R² (overall) | 0.34 | 0.14 | 0.12 | 0.10 | 0.07 |
| Motion | **0.38 [0.18] (.026)** | 0.08 [0.25] (.741) | 0.06 [0.25] (.826) | 0.08 [0.18] (.651) | 0.03 [0.24] (.908) |
| Group | **−1.52 [0.38] (.000)** | **−1.05 [0.46] (.021)** | −0.54 [0.42] (.192) | −0.22 [0.32] (.489) | 0.06 [0.39] (.868) |
| Motion × group | 0.00 [0.25] (1.00) | 0.08 [0.36] (.815) | −0.25 [0.36] (.485) | **−0.58 [0.26] (.025)** | −0.31 [0.34] (.370) |
| Verbal IQ | **0.03 [0.01] (.003)** | 0.02 [0.01] (.072) | **0.03 [0.01] (.026)** | 0.01 [0.01] (.385) | 0.02 [0.01] (.055) |
| Age | 0.03 [0.02] (.165) | 0.01 [0.03] (.644) | −0.01 [0.02] (.725) | 0.01 [0.02] (.839) | −0.02 [0.02] (.384) |
| Gender | −0.22 [0.39] (.572) | 0.30 [0.45] (.504) | 0.12 [0.40] (.771) | 0.41 [0.32] (.190) | 0.26 [0.37] (.487) |

There were no group differences with respect to the index of confusion (i.e., most commonly selected incorrect response for each emotion). For both groups, fear was most commonly mistaken for surprise (ASD: 56% of participants; TD: 64%; χ²(8) = 8.09, p = .425), while surprise was most confused with fear (ASD: 76%; TD: 86%; χ²(7) = 8.31, p = .306). Similarly, for both groups anger was most frequently confused with disgust (ASD: 56%; TD: 64%; χ²(9) = 11.17, p = .265), and disgust was most commonly mistaken for anger (ASD: 77%; TD: 89%; χ²(4) = 7.56, p = .109). Lastly, for both groups sad was most commonly mistaken for fear (ASD: 48%; TD: 69%; χ²(10) = 9.77, p = .461).

DISCUSSION

Individuals with ASD were impaired in the recognition of facial expressions showing anger and disgust. Although there were no overall group differences for the recognition of sad facial expressions, people with ASD were disadvantaged by the presentation of dynamic (compared with static) images of sad facial expressions. Lastly, although there were group differences for recognition of expressions of anger (with the ASD group performing worse), here there was an overall advantage for both groups when recognising dynamic images showing facial expressions of anger.

Impaired recognition of anger and disgust in ASD is somewhat consistent with studies involving similar patient groups (i.e., high-functioning ASD), where deficits have been found in the recognition of basic negative emotions such as fear, disgust, anger and sadness (Harms et al., 2010).
These data further highlight that FER impairments in ASD do not extend to all emotions, but rather seem to be limited to negative emotions. From a clinical perspective, a failure to accurately recognise negative emotions in others may be substantially more disadvantageous than a failure to recognise positive emotions, and lead to greater interpersonal difficulties across a range of settings. For instance, a failure to recognise anger or disgust in another person, and a consequent failure to adjust behaviour accordingly, might exacerbate interpersonal difficulties or produce conflict across a range of settings. By contrast, if there was a failure to accurately recognise, for example, happiness, this would be unlikely to produce a similarly negative outcome. Interestingly, and despite the observed differences, there did not appear to be any differences in the pattern of errors for specific emotional expressions between the groups. There were no group differences for happy or surprise; while happy expressions were associated with a ceiling effect, that we did not detect a difference for surprise seems consistent with recent research. For instance, Law Smith et al. (2010) reported deficits in recognising surprise in ASD but only at low intensities. The surprise stimuli used in the present study may have lacked the necessary subtlety to detect impairment in ASD. While we were primarily interested in the influence of dynamic images, it appears that the use of dynamic facial expressions had relatively little effect. It is often suggested that dynamic stimuli have greater ecological validity and should be more easily recognised than static images, and this was the case, for all participants, for anger. 
Somewhat paradoxically, however, the presentation of dynamic images for the sad facial expression resulted in a reduction in accuracy (relative to static) among individuals with ASD (although overall group differences were not significant), suggesting that in some instances individuals with ASD might actually be disadvantaged by dynamic (relative to static) facial expressions. It is not immediately clear why individuals with ASD would be disadvantaged by a dynamic presentation for just one emotion. Neuroimaging research suggests that brain networks that process static and dynamic faces do differ somewhat (Kilts et al., 2003), and these different networks (or functions underlying these networks) could be differentially affected in ASD (at least in relation to the recognition of sadness). For example, structures thought to comprise the mirror neuron system, which is seemingly responsive to goal-directed and dynamic expressions of behaviour, have been shown as underactive in ASD (Dapretto et al., 2006). Recent research also implicates poor neural connectivity in ASD, particularly to and within prefrontal and parietal regions (Just, Cherkassky, Keller, Kana, & Minshew, 2007; Wicker et al., 2008). In this respect, functional magnetic resonance imaging (fMRI) studies of people with ASD using the current stimuli will be of great interest, as will similar studies with alternate stimulus sets. Lastly, it is possible that the duration of the dynamic images was too brief to benefit individuals with ASD (Gepner & Feron, 2009), although the extent to which this would apply to “high-functioning” individuals is not clear.

This study is limited by a relatively broad age range, which may introduce developmental factors related to visual processing. Groups, however, were matched according to age, and regression analyses revealed no significant effects of age.
These (and other) dynamic facial stimuli could be criticised because they finish with a final frame that remains displayed until a response is given. Thus, a response is given while viewing a static image, albeit one preceded by a dynamic display. This, however, was considered necessary in order to (1) maintain consistency with the static condition (in which the face is presented until a response is given) and (2) avoid the perceptual oddness of either cutting off or looping the dynamic display. Additional limitations include the use of morphed stimuli, which might be argued to have less ecological validity than a video of an individual performing a facial expression, and stimuli demonstrating only strong intensity emotional displays. It would also have been useful to have better diagnostic information on our participants (e.g., autism diagnostic interview [ADI]/autism diagnostic observation schedule [ADOS]), while concurrent eye tracking in future studies will allow a determination of possible visual and attentional influences. For example, children with autism have been found to favour the mouth region and/or attend less to the eye regions when attending to faces (e.g., Joseph & Tanaka, 2003; Rutherford, Clements, & Sekuler, 2007), and this could place them at a significant disadvantage when attempting to determine a facial emotional display, be it static or dynamic.

In summary, these findings add to the considerable literature concerning the recognition of emotion from facial expressions in ASD, and again highlight specific impairments that seem associated with negative emotions (in this instance, anger and disgust). Dynamic facial expressions are often considered an improvement over traditional static images, but here were associated with relatively little change that was linked to ASD.
Manuscript received 15 January 2013
Revised manuscript received 29 August 2013
Manuscript accepted 16 November 2013
First published online 13 December 2013

REFERENCES

Ambadar, Z., Schooler, J. W., & Cohn, J. F. (2005). Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science, 16, 403–410. doi:10.1111/j.0956-7976.2005.01548.x

Ashwin, C., Baron-Cohen, S., Wheelwright, S., O’Riordan, M., & Bullmore, E. T. (2007). Differential activation of the amygdala and the ‘social brain’ during fearful face-processing in Asperger syndrome. Neuropsychologia, 45, 2–14. doi:10.1016/j.neuropsychologia.2006.04.014

Baron-Cohen, S., Hoekstra, R. A., Knickmeyer, R., & Wheelwright, S. (2006). The autism-spectrum quotient (AQ)-adolescent version. Journal of Autism and Developmental Disorders, 36, 343–350. doi:10.1007/s10803-006-0073-6

Baron-Cohen, S., & Wheelwright, S. (2004). The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders, 34, 163–175. doi:10.1023/B:JADD.0000022607.19833.00

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism spectrum quotient (AQ): Evidence from Asperger syndrome/high functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31, 5–17. doi:10.1023/A:1005653411471

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., & Iacoboni, M. (2006). Understanding emotions in others: Mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9, 28–30. doi:10.1038/nn1611

Einfeld, S. L., & Tonge, B. J. (2002). Manual for the Developmental Behaviour Checklist (Second Edition) – Primary Carer Version (DBC-P) and Teacher Version (DBC-T). Melbourne and Sydney: Monash University Centre for Developmental Psychiatry & Psychology and School of Psychiatry, University of New South Wales.

Gepner, B., Deruelle, C., & Grynfeltt, S. (2001). Motion and emotion: A novel approach to the study of face processing by young autistic children. Journal of Autism and Developmental Disorders, 31, 37–45. doi:10.1023/A:1005609629218

Gepner, B., & Feron, F. (2009). Autism: A world changing too fast for a mis-wired brain? Neuroscience and Biobehavioral Reviews, 33, 1227–1242. doi:10.1016/j.neubiorev.2009.06.006

Harms, M. B., Martin, A., & Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychology Review, 20, 290–322. doi:10.1007/s11065-010-9138-6

Harwood, N. K., Hall, L. J., & Shinkfield, A. J. (1999). Recognition of facial emotional expressions from moving and static displays by individuals with mental retardation. American Journal of Mental Retardation, 104, 270–278. doi:10.1352/0895-8017(1999)104%3C0270:ROFEEF%3E2.0.CO;2

Johnston, P. J., Enticott, P. G., Mayes, A. K., Hoy, K. E., Herring, S. E., & Fitzgerald, P. B. (2010). Symptom correlates of static and dynamic facial affect processing in schizophrenia: Evidence of a double dissociation? Schizophrenia Bulletin, 36, 680–687. doi:10.1093/schbul/sbn136

Joseph, R. M., & Tanaka, J. (2003). Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry and Allied Disciplines, 44, 529–542. doi:10.1111/1469-7610.00142

Just, M. A., Cherkassky, V. L., Keller, T. A., Kana, R. K., & Minshew, N. J. (2007). Functional and anatomical cortical underconnectivity in autism: Evidence from an fMRI study of an executive function task and corpus callosum morphometry. Cerebral Cortex, 17, 951–961. doi:10.1093/cercor/bhl006

Katsyri, J., Saalasti, S., Tiippana, K., von Wendt, L., & Sams, M. (2008). Impaired recognition of facial emotions from low-spatial frequencies in Asperger syndrome. Neuropsychologia, 46, 1888–1897. doi:10.1016/j.neuropsychologia.2008.01.005

Kilts, C. D., Egan, G., Gideon, D. A., Ely, T. D., & Hoffman, J. M. (2003). Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. NeuroImage, 18, 156–168. doi:10.1006/nimg.2002.1323

Law Smith, M. J., Montagne, B., Perrett, D. I., Gill, M., & Gallagher, L. (2010). Detecting subtle facial emotion recognition deficits in high-functioning Autism using dynamic stimuli of varying intensities. Neuropsychologia, 48, 2777–2781. doi:10.1016/j.neuropsychologia.2010.03.008

Pelphrey, K. A., Morris, J. P., McCarthy, G., & LaBar, K. S. (2007). Perception of dynamic changes in facial affect and identity in autism. Social Cognitive and Affective Neuroscience, 2, 140–149. doi:10.1093/scan/nsm010

Philip, R. C., Whalley, H. C., Stanfield, A. C., Sprengelmeyer, R., Santos, I. M., Young, A. W., … Hall, J. (2010). Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychological Medicine, 40, 1919–1929. doi:10.1017/S0033291709992364

Ritvo, R. A., Ritvo, E. R., Guthrie, D., Yuwiler, A., Ritvo, M. J., & Weisbender, L. (2008). A scale to assist with the diagnosis of autism and Asperger’s disorder (RAADS): A pilot study. Journal of Autism and Developmental Disorders, 38, 213–223. doi:10.1007/s10803-007-0380-6

Rutherford, M. D., Clements, K. A., & Sekuler, A. B. (2007). Differences in discrimination of eye and mouth displacement in autism spectrum disorders. Vision Research, 47, 2099–2110. doi:10.1016/j.visres.2007.01.029

Speer, L. L., Cook, A. E., McMahon, W. M., & Clark, E. (2007). Face processing in children with autism: Effects of stimulus contents and type. Autism, 11, 265–277. doi:10.1177/1362361307076925

Tottenham, N., Tanaka, J. W., Leon, A., McCarry, T., Nurse, M., Hare, T. A., … Nelson, C. A. (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168, 242–249. doi:10.1016/j.psychres.2008.05.006

Weyers, P., Muhlberger, A., Hefele, C., & Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology, 43, 450–453. doi:10.1111/j.1469-8986.2006.00451.x

Wicker, B., Fonlupt, P., Hubert, B., Tardif, C., Gepner, B., & Deruelle, C. (2008). Abnormal cerebral effective connectivity during explicit emotional processing in adults with autism spectrum disorder. Social Cognitive and Affective Neuroscience, 3, 135–143. doi:10.1093/scan/nsn007